@huggingface/tasks 0.0.5 → 0.0.7


- package/README.md +16 -2

- package/dist/index.d.ts +364 -3

- package/dist/index.js +1942 -72

- package/dist/index.mjs +1934 -71

- package/package.json +1 -1

- package/src/default-widget-inputs.ts +718 -0

- package/src/index.ts +39 -4

- package/src/library-to-tasks.ts +47 -0

- package/src/library-ui-elements.ts +765 -0

- package/src/model-data.ts +239 -0

- package/src/{modelLibraries.ts → model-libraries.ts} +4 -0

- package/src/pipelines.ts +22 -0

- package/src/snippets/curl.ts +63 -0

- package/src/snippets/index.ts +6 -0

- package/src/snippets/inputs.ts +129 -0

- package/src/snippets/js.ts +150 -0

- package/src/snippets/python.ts +114 -0

- package/src/tags.ts +15 -0

- package/src/{audio-classification → tasks/audio-classification}/about.md +2 -1

- package/src/{audio-classification → tasks/audio-classification}/data.ts +3 -3

- package/src/{audio-to-audio → tasks/audio-to-audio}/data.ts +1 -1

- package/src/{automatic-speech-recognition → tasks/automatic-speech-recognition}/about.md +3 -2

- package/src/{automatic-speech-recognition → tasks/automatic-speech-recognition}/data.ts +6 -6

- package/src/{conversational → tasks/conversational}/data.ts +1 -1

- package/src/{depth-estimation → tasks/depth-estimation}/data.ts +1 -1

- package/src/{document-question-answering → tasks/document-question-answering}/data.ts +1 -1

- package/src/{feature-extraction → tasks/feature-extraction}/data.ts +2 -7

- package/src/{fill-mask → tasks/fill-mask}/data.ts +1 -1

- package/src/{image-classification → tasks/image-classification}/data.ts +1 -1

- package/src/{image-segmentation → tasks/image-segmentation}/data.ts +1 -1

- package/src/{image-to-image → tasks/image-to-image}/about.md +8 -7

- package/src/{image-to-image → tasks/image-to-image}/data.ts +1 -1

- package/src/{image-to-text → tasks/image-to-text}/data.ts +1 -1

- package/src/{tasksData.ts → tasks/index.ts} +140 -15

- package/src/{object-detection → tasks/object-detection}/data.ts +1 -1

- package/src/{placeholder → tasks/placeholder}/data.ts +1 -1

- package/src/{question-answering → tasks/question-answering}/data.ts +1 -1

- package/src/{reinforcement-learning → tasks/reinforcement-learning}/data.ts +1 -1

- package/src/{sentence-similarity → tasks/sentence-similarity}/data.ts +1 -1

- package/src/{summarization → tasks/summarization}/data.ts +1 -1

- package/src/{table-question-answering → tasks/table-question-answering}/data.ts +1 -1

- package/src/{tabular-classification → tasks/tabular-classification}/data.ts +1 -1

- package/src/{tabular-regression → tasks/tabular-regression}/data.ts +1 -1

- package/src/{text-classification → tasks/text-classification}/data.ts +1 -1

- package/src/{text-generation → tasks/text-generation}/about.md +3 -3

- package/src/{text-generation → tasks/text-generation}/data.ts +2 -2

- package/src/{text-to-image → tasks/text-to-image}/data.ts +1 -1

- package/src/{text-to-speech → tasks/text-to-speech}/about.md +2 -1

- package/src/{text-to-speech → tasks/text-to-speech}/data.ts +4 -4

- package/src/{text-to-video → tasks/text-to-video}/data.ts +1 -1

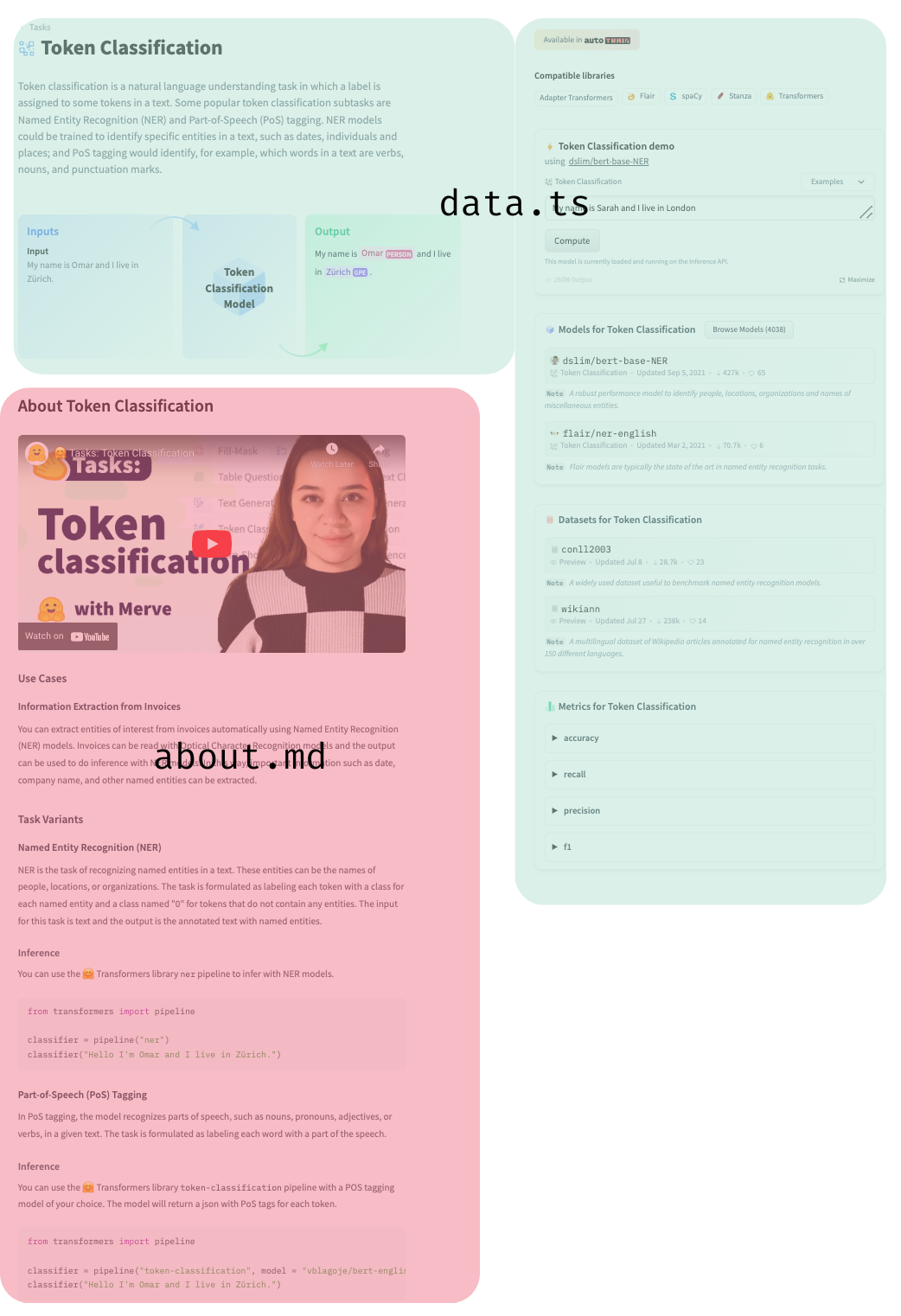

- package/src/{token-classification → tasks/token-classification}/data.ts +1 -1

- package/src/{translation → tasks/translation}/data.ts +1 -1

- package/src/{unconditional-image-generation → tasks/unconditional-image-generation}/data.ts +1 -1

- package/src/{video-classification → tasks/video-classification}/about.md +8 -28

- package/src/{video-classification → tasks/video-classification}/data.ts +1 -1

- package/src/{visual-question-answering → tasks/visual-question-answering}/data.ts +1 -1

- package/src/{zero-shot-classification → tasks/zero-shot-classification}/data.ts +1 -1

- package/src/{zero-shot-image-classification → tasks/zero-shot-image-classification}/data.ts +1 -1

- package/src/Types.ts +0 -64

- package/src/const.ts +0 -59

- /package/src/{audio-to-audio → tasks/audio-to-audio}/about.md +0 -0

- /package/src/{conversational → tasks/conversational}/about.md +0 -0

- /package/src/{depth-estimation → tasks/depth-estimation}/about.md +0 -0

- /package/src/{document-question-answering → tasks/document-question-answering}/about.md +0 -0

- /package/src/{feature-extraction → tasks/feature-extraction}/about.md +0 -0

- /package/src/{fill-mask → tasks/fill-mask}/about.md +0 -0

- /package/src/{image-classification → tasks/image-classification}/about.md +0 -0

- /package/src/{image-segmentation → tasks/image-segmentation}/about.md +0 -0

- /package/src/{image-to-text → tasks/image-to-text}/about.md +0 -0

- /package/src/{object-detection → tasks/object-detection}/about.md +0 -0

- /package/src/{placeholder → tasks/placeholder}/about.md +0 -0

- /package/src/{question-answering → tasks/question-answering}/about.md +0 -0

- /package/src/{reinforcement-learning → tasks/reinforcement-learning}/about.md +0 -0

- /package/src/{sentence-similarity → tasks/sentence-similarity}/about.md +0 -0

- /package/src/{summarization → tasks/summarization}/about.md +0 -0

- /package/src/{table-question-answering → tasks/table-question-answering}/about.md +0 -0

- /package/src/{tabular-classification → tasks/tabular-classification}/about.md +0 -0

- /package/src/{tabular-regression → tasks/tabular-regression}/about.md +0 -0

- /package/src/{text-classification → tasks/text-classification}/about.md +0 -0

- /package/src/{text-to-image → tasks/text-to-image}/about.md +0 -0

- /package/src/{text-to-video → tasks/text-to-video}/about.md +0 -0

- /package/src/{token-classification → tasks/token-classification}/about.md +0 -0

- /package/src/{translation → tasks/translation}/about.md +0 -0

- /package/src/{unconditional-image-generation → tasks/unconditional-image-generation}/about.md +0 -0

- /package/src/{visual-question-answering → tasks/visual-question-answering}/about.md +0 -0

- /package/src/{zero-shot-classification → tasks/zero-shot-classification}/about.md +0 -0

- /package/src/{zero-shot-image-classification → tasks/zero-shot-image-classification}/about.md +0 -0

package/README.md

CHANGED

|

@@ -9,7 +9,7 @@ The Task pages are made to lower the barrier of entry to understand a task that

|

|

|

9

9

|

The task pages avoid jargon to let everyone understand the documentation, and if specific terminology is needed, it is explained on the most basic level possible. This is important to understand before contributing to Tasks: at the end of every task page, the user is expected to be able to find and pull a model from the Hub and use it on their data and see if it works for their use case to come up with a proof of concept.

|

|

10

10

|

|

|

11

11

|

## How to Contribute

|

|

12

|

-

You can open a pull request to contribute a new documentation about a new task. Under `src` we have a folder for every task that contains two files, `about.md` and `data.ts`. `about.md` contains the markdown part of the page, use cases, resources and minimal code block to infer a model that belongs to the task. `data.ts` contains redirections to canonical models and datasets, metrics, the schema of the task and the information the inference widget needs.

|

|

12

|

+

You can open a pull request to contribute a new documentation about a new task. Under `src/tasks` we have a folder for every task that contains two files, `about.md` and `data.ts`. `about.md` contains the markdown part of the page, use cases, resources and minimal code block to infer a model that belongs to the task. `data.ts` contains redirections to canonical models and datasets, metrics, the schema of the task and the information the inference widget needs.

|

|

13

13

|

|

|

14

14

|

|

|

15

15

|

|

|

@@ -17,4 +17,18 @@ We have a [`dataset`](https://huggingface.co/datasets/huggingfacejs/tasks) that

|

|

|

17

17

|

|

|

18

18

|

|

|

19

19

|

|

|

20

|

-

This might seem overwhelming, but you don't necessarily need to add all of these in one pull request or on your own, you can simply contribute one section. Feel free to ask for help whenever you need.

|

|

20

|

+

This might seem overwhelming, but you don't necessarily need to add all of these in one pull request or on your own, you can simply contribute one section. Feel free to ask for help whenever you need.

|

|

21

|

+

|

|

22

|

+

## Other data

|

|

23

|

+

|

|

24

|

+

This package contains the definition files (written in Typescript) for the huggingface.co hub's:

|

|

25

|

+

|

|

26

|

+

- **pipeline types** a.k.a. **task types** (used to determine which widget to display on the model page, and which inference API to run)

|

|

27

|

+

- **default widget inputs** (when they aren't provided in the model card)

|

|

28

|

+

- definitions and UI elements for **third party libraries**.

|

|

29

|

+

|

|

30

|

+

Please add to any of those definitions by opening a PR. Thanks 🔥

|

|

31

|

+

|

|

32

|

+

⚠️ The hub's definitive doc is at https://huggingface.co/docs/hub.

|

|

33

|

+

|

|

34

|

+

## Feedback (feature requests, bugs, etc.) is super welcome 💙💚💛💜♥️🧡

|

package/dist/index.d.ts

CHANGED

|

@@ -40,6 +40,7 @@ declare enum ModelLibrary {

|

|

|

40

40

|

"mindspore" = "MindSpore"

|

|

41

41

|

}

|

|

42

42

|

type ModelLibraryKey = keyof typeof ModelLibrary;

|

|

43

|

+

declare const ALL_DISPLAY_MODEL_LIBRARY_KEYS: string[];

|

|

43

44

|

|

|

44

45

|

declare const MODALITIES: readonly ["cv", "nlp", "audio", "tabular", "multimodal", "rl", "other"];

|

|

45

46

|

type Modality = (typeof MODALITIES)[number];

|
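The declarations in this hunk derive their key and value types from runtime values (`keyof typeof ModelLibrary`, `(typeof MODALITIES)[number]`). The sketch below shows how that pattern behaves. The enum is a reduced stand-in: only `mindspore` appears in this diff, `example-lib` is a hypothetical member, and the helpers `isModality` and `libraryLabel` are not part of the package.

```typescript
// Reduced stand-in for the real enum; "example-lib" is hypothetical.
enum ModelLibrary {
  "mindspore" = "MindSpore",
  "example-lib" = "Example Lib",
}

// Union of the enum's member names: "mindspore" | "example-lib".
type ModelLibraryKey = keyof typeof ModelLibrary;

// A readonly tuple, so each element keeps its literal type.
const MODALITIES = ["cv", "nlp", "audio", "tabular", "multimodal", "rl", "other"] as const;

// Union of the tuple's element types: "cv" | "nlp" | ... | "other".
type Modality = (typeof MODALITIES)[number];

// Hypothetical helper: narrows an arbitrary string to Modality.
function isModality(value: string): value is Modality {
  return (MODALITIES as readonly string[]).indexOf(value) !== -1;
}

// Hypothetical helper: maps a key to the enum's display value.
function libraryLabel(key: ModelLibraryKey): string {
  return ModelLibrary[key];
}
```

Because both types are computed from the values, adding an enum member or a tuple entry widens the corresponding union without a second edit.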

|

@@ -278,6 +279,11 @@ declare const PIPELINE_DATA: {

|

|

|

278

279

|

modality: "cv";

|

|

279

280

|

color: "indigo";

|

|

280

281

|

};

|

|

282

|

+

"image-to-video": {

|

|

283

|

+

name: string;

|

|

284

|

+

modality: "multimodal";

|

|

285

|

+

color: "indigo";

|

|

286

|

+

};

|

|

281

287

|

"unconditional-image-generation": {

|

|

282

288

|

name: string;

|

|

283

289

|

modality: "cv";

|

|

@@ -400,6 +406,16 @@ declare const PIPELINE_DATA: {

|

|

|

400

406

|

modality: "multimodal";

|

|

401

407

|

color: "green";

|

|

402

408

|

};

|

|

409

|

+

"mask-generation": {

|

|

410

|

+

name: string;

|

|

411

|

+

modality: "cv";

|

|

412

|

+

color: "indigo";

|

|

413

|

+

};

|

|

414

|

+

"zero-shot-object-detection": {

|

|

415

|

+

name: string;

|

|

416

|

+

modality: "cv";

|

|

417

|

+

color: "yellow";

|

|

418

|

+

};

|

|

403

419

|

other: {

|

|

404

420

|

name: string;

|

|

405

421

|

modality: "other";

|

|

@@ -409,8 +425,251 @@ declare const PIPELINE_DATA: {

|

|

|

409

425

|

};

|

|

410

426

|

};

|

|

411

427

|

type PipelineType = keyof typeof PIPELINE_DATA;

|

|

412

|

-

declare const PIPELINE_TYPES: ("other" | "text-classification" | "token-classification" | "table-question-answering" | "question-answering" | "zero-shot-classification" | "translation" | "summarization" | "conversational" | "feature-extraction" | "text-generation" | "text2text-generation" | "fill-mask" | "sentence-similarity" | "text-to-speech" | "text-to-audio" | "automatic-speech-recognition" | "audio-to-audio" | "audio-classification" | "voice-activity-detection" | "depth-estimation" | "image-classification" | "object-detection" | "image-segmentation" | "text-to-image" | "image-to-text" | "image-to-image" | "unconditional-image-generation" | "video-classification" | "reinforcement-learning" | "robotics" | "tabular-classification" | "tabular-regression" | "tabular-to-text" | "table-to-text" | "multiple-choice" | "text-retrieval" | "time-series-forecasting" | "text-to-video" | "visual-question-answering" | "document-question-answering" | "zero-shot-image-classification" | "graph-ml")[];

|

|

428

|

+

declare const PIPELINE_TYPES: ("other" | "text-classification" | "token-classification" | "table-question-answering" | "question-answering" | "zero-shot-classification" | "translation" | "summarization" | "conversational" | "feature-extraction" | "text-generation" | "text2text-generation" | "fill-mask" | "sentence-similarity" | "text-to-speech" | "text-to-audio" | "automatic-speech-recognition" | "audio-to-audio" | "audio-classification" | "voice-activity-detection" | "depth-estimation" | "image-classification" | "object-detection" | "image-segmentation" | "text-to-image" | "image-to-text" | "image-to-image" | "image-to-video" | "unconditional-image-generation" | "video-classification" | "reinforcement-learning" | "robotics" | "tabular-classification" | "tabular-regression" | "tabular-to-text" | "table-to-text" | "multiple-choice" | "text-retrieval" | "time-series-forecasting" | "text-to-video" | "visual-question-answering" | "document-question-answering" | "zero-shot-image-classification" | "graph-ml" | "mask-generation" | "zero-shot-object-detection")[];

|

|

429

|

+

declare const SUBTASK_TYPES: string[];

|

|

430

|

+

declare const PIPELINE_TYPES_SET: Set<"other" | "text-classification" | "token-classification" | "table-question-answering" | "question-answering" | "zero-shot-classification" | "translation" | "summarization" | "conversational" | "feature-extraction" | "text-generation" | "text2text-generation" | "fill-mask" | "sentence-similarity" | "text-to-speech" | "text-to-audio" | "automatic-speech-recognition" | "audio-to-audio" | "audio-classification" | "voice-activity-detection" | "depth-estimation" | "image-classification" | "object-detection" | "image-segmentation" | "text-to-image" | "image-to-text" | "image-to-image" | "image-to-video" | "unconditional-image-generation" | "video-classification" | "reinforcement-learning" | "robotics" | "tabular-classification" | "tabular-regression" | "tabular-to-text" | "table-to-text" | "multiple-choice" | "text-retrieval" | "time-series-forecasting" | "text-to-video" | "visual-question-answering" | "document-question-answering" | "zero-shot-image-classification" | "graph-ml" | "mask-generation" | "zero-shot-object-detection">;

|

|

431

|

+

|
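`PIPELINE_TYPES` and `PIPELINE_TYPES_SET` carry the same union of keys as `PIPELINE_DATA`. A minimal sketch of how such values can be derived and used as a type guard follows. It assumes the array and set are built from the object's keys, which the declarations alone do not confirm. The three entries are the ones added in this diff, with placeholder display names, and `isPipelineType` is a hypothetical helper.

```typescript
// Reduced stand-in: only the entries added in this diff; names are placeholders.
const PIPELINE_DATA = {
  "image-to-video": { name: "Image to Video", modality: "multimodal", color: "indigo" },
  "mask-generation": { name: "Mask Generation", modality: "cv", color: "indigo" },
  "zero-shot-object-detection": { name: "Zero-Shot Object Detection", modality: "cv", color: "yellow" },
} as const;

type PipelineType = keyof typeof PIPELINE_DATA;

// Assumption: the list and the set are derived from the object's keys.
const PIPELINE_TYPES = Object.keys(PIPELINE_DATA) as PipelineType[];
const PIPELINE_TYPES_SET = new Set<PipelineType>(PIPELINE_TYPES);

// Hypothetical type guard for untrusted input such as a model card tag.
function isPipelineType(tag: string): tag is PipelineType {
  return PIPELINE_TYPES_SET.has(tag as PipelineType);
}
```

A set gives constant-time membership checks, which is convenient when validating many tags against a list of this size.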

|

432

|

+

/**

|

|

433

|

+

* Mapping from library name (excluding Transformers) to its supported tasks.

|

|

434

|

+

* Inference API should be disabled for all other (library, task) pairs beyond this mapping.

|

|

435

|

+

* As an exception, we assume Transformers supports all inference tasks.

|

|

436

|

+

* This mapping is generated automatically by "python-api-export-tasks" action in huggingface/api-inference-community repo upon merge.

|

|

437

|

+

* Ref: https://github.com/huggingface/api-inference-community/pull/158

|

|

438

|

+

*/

|

|

439

|

+

declare const LIBRARY_TASK_MAPPING_EXCLUDING_TRANSFORMERS: Partial<Record<ModelLibraryKey, PipelineType[]>>;

|

|

413

440

|

|
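The doc comment on `LIBRARY_TASK_MAPPING_EXCLUDING_TRANSFORMERS` states two rules: Transformers is assumed to support every task, and any other (library, task) pair is supported only if it is listed. A sketch of a check that follows those rules is shown below. The key names, the task list for `sklearn`, and the function `isInferenceSupported` are illustrative assumptions, not the package's data or API.

```typescript
// Reduced, illustrative stand-ins for the real unions.
type ModelLibraryKey = "transformers" | "sklearn" | "mindspore";
type PipelineType =
  | "tabular-classification"
  | "tabular-regression"
  | "text-classification"
  | "text-generation";

// Illustrative contents; the real mapping is generated automatically.
const LIBRARY_TASK_MAPPING_EXCLUDING_TRANSFORMERS: Partial<Record<ModelLibraryKey, PipelineType[]>> = {
  sklearn: ["tabular-classification", "tabular-regression", "text-classification"],
};

// Hypothetical helper implementing the rule from the doc comment.
function isInferenceSupported(library: ModelLibraryKey, task: PipelineType): boolean {
  if (library === "transformers") {
    return true; // stated exception: Transformers supports all inference tasks
  }
  const tasks = LIBRARY_TASK_MAPPING_EXCLUDING_TRANSFORMERS[library];
  return tasks !== undefined && tasks.indexOf(task) !== -1;
}
```

The `Partial<Record<...>>` type forces the `undefined` check: a library with no entry has no supported tasks.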

|

441

|

+

type TableData = Record<string, (string | number)[]>;

|

|

442

|

+

type WidgetExampleOutputLabels = Array<{

|

|

443

|

+

label: string;

|

|

444

|

+

score: number;

|

|

445

|

+

}>;

|

|

446

|

+

interface WidgetExampleOutputAnswerScore {

|

|

447

|

+

answer: string;

|

|

448

|

+

score: number;

|

|

449

|

+

}

|

|

450

|

+

interface WidgetExampleOutputText {

|

|

451

|

+

text: string;

|

|

452

|

+

}

|

|

453

|

+

interface WidgetExampleOutputUrl {

|

|

454

|

+

url: string;

|

|

455

|

+

}

|

|

456

|

+

type WidgetExampleOutput = WidgetExampleOutputLabels | WidgetExampleOutputAnswerScore | WidgetExampleOutputText | WidgetExampleOutputUrl;

|

|

457

|

+

interface WidgetExampleBase<TOutput> {

|

|

458

|

+

example_title?: string;

|

|

459

|

+

group?: string;

|

|

460

|

+

/**

|

|

461

|

+

* Potential overrides to API parameters for this specific example

|

|

462

|

+

* (takes precedences over the model card metadata's inference.parameters)

|

|

463

|

+

*/

|

|

464

|

+

parameters?: {

|

|

465

|

+

aggregation_strategy?: string;

|

|

466

|

+

top_k?: number;

|

|

467

|

+

top_p?: number;

|

|

468

|

+

temperature?: number;

|

|

469

|

+

max_new_tokens?: number;

|

|

470

|

+

do_sample?: boolean;

|

|

471

|

+

negative_prompt?: string;

|

|

472

|

+

guidance_scale?: number;

|

|

473

|

+

num_inference_steps?: number;

|

|

474

|

+

};

|

|

475

|

+

/**

|

|

476

|

+

* Optional output

|

|

477

|

+

*/

|

|

478

|

+

output?: TOutput;

|

|

479

|

+

}

|

|

480

|

+

interface WidgetExampleTextInput<TOutput = WidgetExampleOutput> extends WidgetExampleBase<TOutput> {

|

|

481

|

+

text: string;

|

|

482

|

+

}

|

|

483

|

+

interface WidgetExampleTextAndContextInput<TOutput = WidgetExampleOutput> extends WidgetExampleTextInput<TOutput> {

|

|

484

|

+

context: string;

|

|

485

|

+

}

|

|

486

|

+

interface WidgetExampleTextAndTableInput<TOutput = WidgetExampleOutput> extends WidgetExampleTextInput<TOutput> {

|

|

487

|

+

table: TableData;

|

|

488

|

+

}

|

|

489

|

+

interface WidgetExampleAssetInput<TOutput = WidgetExampleOutput> extends WidgetExampleBase<TOutput> {

|

|

490

|

+

src: string;

|

|

491

|

+

}

|

|

492

|

+

interface WidgetExampleAssetAndPromptInput<TOutput = WidgetExampleOutput> extends WidgetExampleAssetInput<TOutput> {

|

|

493

|

+

prompt: string;

|

|

494

|

+

}

|

|

495

|

+

type WidgetExampleAssetAndTextInput<TOutput = WidgetExampleOutput> = WidgetExampleAssetInput<TOutput> & WidgetExampleTextInput<TOutput>;

|

|

496

|

+

type WidgetExampleAssetAndZeroShotInput<TOutput = WidgetExampleOutput> = WidgetExampleAssetInput<TOutput> & WidgetExampleZeroShotTextInput<TOutput>;

|

|

497

|

+

interface WidgetExampleStructuredDataInput<TOutput = WidgetExampleOutput> extends WidgetExampleBase<TOutput> {

|

|

498

|

+

structured_data: TableData;

|

|

499

|

+

}

|

|

500

|

+

interface WidgetExampleTableDataInput<TOutput = WidgetExampleOutput> extends WidgetExampleBase<TOutput> {

|

|

501

|

+

table: TableData;

|

|

502

|

+

}

|

|

503

|

+

interface WidgetExampleZeroShotTextInput<TOutput = WidgetExampleOutput> extends WidgetExampleTextInput<TOutput> {

|

|

504

|

+

text: string;

|

|

505

|

+

candidate_labels: string;

|

|

506

|

+

multi_class: boolean;

|

|

507

|

+

}

|

|

508

|

+

interface WidgetExampleSentenceSimilarityInput<TOutput = WidgetExampleOutput> extends WidgetExampleBase<TOutput> {

|

|

509

|

+

source_sentence: string;

|

|

510

|

+

sentences: string[];

|

|

511

|

+

}

|

|

512

|

+

type WidgetExample<TOutput = WidgetExampleOutput> = WidgetExampleTextInput<TOutput> | WidgetExampleTextAndContextInput<TOutput> | WidgetExampleTextAndTableInput<TOutput> | WidgetExampleAssetInput<TOutput> | WidgetExampleAssetAndPromptInput<TOutput> | WidgetExampleAssetAndTextInput<TOutput> | WidgetExampleAssetAndZeroShotInput<TOutput> | WidgetExampleStructuredDataInput<TOutput> | WidgetExampleTableDataInput<TOutput> | WidgetExampleZeroShotTextInput<TOutput> | WidgetExampleSentenceSimilarityInput<TOutput>;

|

|

513

|

+

type KeysOfUnion<T> = T extends unknown ? keyof T : never;

|

|

514

|

+

type WidgetExampleAttribute = KeysOfUnion<WidgetExample>;

|
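`KeysOfUnion` exists because `keyof` applied to a union yields only the keys shared by every member. The sketch below contrasts the two on a reduced union and shows narrowing with the `in` operator. The three interfaces are simplified stand-ins for the widget input types, and `summarize` is a hypothetical helper.

```typescript
// Simplified stand-ins for three of the widget input shapes.
interface TextInput { text: string }
interface AssetInput { src: string }
interface SentenceSimilarityInput { source_sentence: string; sentences: string[] }
type Example = TextInput | AssetInput | SentenceSimilarityInput;

// Distributes over the union, so every member's keys are collected.
type KeysOfUnion<T> = T extends unknown ? keyof T : never;

type SharedKeys = keyof Example;        // never: the members share no key
type AllKeys = KeysOfUnion<Example>;    // "text" | "src" | "source_sentence" | "sentences"

// Type-checks only because AllKeys includes every member's keys.
const attributes: AllKeys[] = ["text", "src", "source_sentence", "sentences"];

// Hypothetical helper: the `in` operator narrows the union member by member.
function summarize(example: Example): string {
  if ("source_sentence" in example) {
    return `compare against ${example.sentences.length} sentences`;
  }
  if ("src" in example) {
    return `asset at ${example.src}`;
  }
  return `text: ${example.text}`;
}
```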

|

515

|

+

declare enum InferenceDisplayability {

|

|

516

|

+

/**

|

|

517

|

+

* Yes

|

|

518

|

+

*/

|

|

519

|

+

Yes = "Yes",

|

|

520

|

+

/**

|

|

521

|

+

* And then, all the possible reasons why it's no:

|

|

522

|

+

*/

|

|

523

|

+

ExplicitOptOut = "ExplicitOptOut",

|

|

524

|

+

CustomCode = "CustomCode",

|

|

525

|

+

LibraryNotDetected = "LibraryNotDetected",

|

|

526

|

+

PipelineNotDetected = "PipelineNotDetected",

|

|

527

|

+

PipelineLibraryPairNotSupported = "PipelineLibraryPairNotSupported"

|

|

528

|

+

}

|

|

529

|

+

/**

|

|

530

|

+

* Public interface for model metadata

|

|

531

|

+

*/

|

|

532

|

+

interface ModelData {

|

|

533

|

+

/**

|

|

534

|

+

* id of model (e.g. 'user/repo_name')

|

|

535

|

+

*/

|

|

536

|

+

id: string;

|

|

537

|

+

/**

|

|

538

|

+

* Kept for backward compatibility

|

|

539

|

+

*/

|

|

540

|

+

modelId?: string;

|

|

541

|

+

/**

|

|

542

|

+

* Whether or not to enable inference widget for this model

|

|

543

|

+

*/

|

|

544

|

+

inference: InferenceDisplayability;

|

|

545

|

+

/**

|

|

546

|

+

* is this model private?

|

|

547

|

+

*/

|

|

548

|

+

private?: boolean;

|

|

549

|

+

/**

|

|

550

|

+

* this dictionary has useful information about the model configuration

|

|

551

|

+

*/

|

|

552

|

+

config?: Record<string, unknown> & {

|

|

553

|

+

adapter_transformers?: {

|

|

554

|

+

model_class?: string;

|

|

555

|

+

model_name?: string;

|

|

556

|

+

};

|

|

557

|

+

architectures?: string[];

|

|

558

|

+

sklearn?: {

|

|

559

|

+

filename?: string;

|

|

560

|

+

model_format?: string;

|

|

561

|

+

};

|

|

562

|

+

speechbrain?: {

|

|

563

|

+

interface?: string;

|

|

564

|

+

};

|

|

565

|

+

peft?: {

|

|

566

|

+

base_model_name?: string;

|

|

567

|

+

task_type?: string;

|

|

568

|

+

};

|

|

569

|

+

};

|

|

570

|

+

/**

|

|

571

|

+

* all the model tags

|

|

572

|

+

*/

|

|

573

|

+

tags?: string[];

|

|

574

|

+

/**

|

|

575

|

+

* transformers-specific info to display in the code sample.

|

|

576

|

+

*/

|

|

577

|

+

transformersInfo?: TransformersInfo;

|

|

578

|

+

/**

|

|

579

|

+

* Pipeline type

|

|

580

|

+

*/

|

|

581

|

+

pipeline_tag?: PipelineType | undefined;

|

|

582

|

+

/**

|

|

583

|

+

* for relevant models, get mask token

|

|

584

|

+

*/

|

|

585

|

+

mask_token?: string | undefined;

|

|

586

|

+

/**

|

|

587

|

+

* Example data that will be fed into the widget.

|

|

588

|

+

*

|

|

589

|

+

* can be set in the model card metadata (under `widget`),

|

|

590

|

+

* or by default in `DefaultWidget.ts`

|

|

591

|

+

*/

|

|

592

|

+

widgetData?: WidgetExample[] | undefined;

|

|

593

|

+

/**

|

|

594

|

+

* Parameters that will be used by the widget when calling Inference API

|

|

595

|

+

* https://huggingface.co/docs/api-inference/detailed_parameters

|

|

596

|

+

*

|

|

597

|

+

* can be set in the model card metadata (under `inference/parameters`)

|

|

598

|

+

* Example:

|

|

599

|

+

* inference:

|

|

600

|

+

* parameters:

|

|

601

|

+

* key: val

|

|

602

|

+

*/

|

|

603

|

+

cardData?: {

|

|

604

|

+

inference?: boolean | {

|

|

605

|

+

parameters?: Record<string, unknown>;

|

|

606

|

+

};

|

|

607

|

+

base_model?: string;

|

|

608

|

+

};

|

|

609

|

+

/**

|

|

610

|

+

* Library name

|

|

611

|

+

* Example: transformers, SpeechBrain, Stanza, etc.

|

|

612

|

+

*/

|

|

613

|

+

library_name?: string;

|

|

614

|

+

}

|

|

615

|

+

/**

|

|

616

|

+

* transformers-specific info to display in the code sample.

|

|

617

|

+

*/

|

|

618

|

+

interface TransformersInfo {

|

|

619

|

+

/**

|

|

620

|

+

* e.g. AutoModelForSequenceClassification

|

|

621

|

+

*/

|

|

622

|

+

auto_model: string;

|

|

623

|

+

/**

|

|

624

|

+

* if set in config.json's auto_map

|

|

625

|

+

*/

|

|

626

|

+

custom_class?: string;

|

|

627

|

+

/**

|

|

628

|

+

* e.g. text-classification

|

|

629

|

+

*/

|

|

630

|

+

pipeline_tag?: PipelineType;

|

|

631

|

+

/**

|

|

632

|

+

* e.g. "AutoTokenizer" | "AutoFeatureExtractor" | "AutoProcessor"

|

|

633

|

+

*/

|

|

634

|

+

processor?: string;

|

|

635

|

+

}

|

|

636

|

+

|
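In `ModelData`, `cardData.inference` is typed as `boolean | { parameters?: ... }`, so a reader has to narrow it before touching `parameters`. The following sketch shows one way to do that. `CardData` is a local copy of the inline type, and `getInferenceParameters` and its empty-object fallback are assumptions made for illustration.

```typescript
// Local copy of the inline cardData type from ModelData.
interface CardData {
  inference?: boolean | { parameters?: Record<string, unknown> };
  base_model?: string;
}

// Hypothetical helper: returns the declared parameters, or an empty object
// when inference is a boolean, unset, or has no parameters.
function getInferenceParameters(cardData?: CardData): Record<string, unknown> {
  const inference = cardData?.inference;
  if (typeof inference === "object" && inference !== null) {
    return inference.parameters ?? {};
  }
  return {};
}
```

For example, `getInferenceParameters({ inference: { parameters: { top_k: 5 } } })` returns the `parameters` object, while `{ inference: false }` yields `{}`.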

|

637

|

+

/**

|

|

638

|

+

* Elements configurable by a model library.

|

|

639

|

+

*/

|

|

640

|

+

interface LibraryUiElement {

|

|

641

|

+

/**

|

|

642

|

+

* Name displayed on the main

|

|

643

|

+

* call-to-action button on the model page.

|

|

644

|

+

*/

|

|

645

|

+

btnLabel: string;

|

|

646

|

+

/**

|

|

647

|

+

* Repo name

|

|

648

|

+

*/

|

|

649

|

+

repoName: string;

|

|

650

|

+

/**

|

|

651

|

+

* URL to library's repo

|

|

652

|

+

*/

|

|

653

|

+

repoUrl: string;

|

|

654

|

+

/**

|

|

655

|

+

* URL to library's docs

|

|

656

|

+

*/

|

|

657

|

+

docsUrl?: string;

|

|

658

|

+

/**

|

|

659

|

+

* Code snippet displayed on model page

|

|

660

|

+

*/

|

|

661

|

+

snippets: (model: ModelData) => string[];

|

|

662

|

+

}

|

|

663

|

+

declare const MODEL_LIBRARIES_UI_ELEMENTS: Partial<Record<ModelLibraryKey, LibraryUiElement>>;

|

|

664

|

+

|
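A `LibraryUiElement` pairs display metadata with a `snippets` function that receives the model and returns the code samples to show. The sketch below fills in one entry for a made-up library. `example-lib`, its URL, and the generated Python text are all hypothetical, and `ModelData` is reduced to the single field the snippet needs.

```typescript
// Reduced ModelData: only the field used by the snippet.
interface ModelData { id: string }

interface LibraryUiElement {
  btnLabel: string;
  repoName: string;
  repoUrl: string;
  docsUrl?: string;
  snippets: (model: ModelData) => string[];
}

// Hypothetical library entry, for illustration only.
const exampleLibrary: LibraryUiElement = {
  btnLabel: "Example Lib",
  repoName: "example-lib",
  repoUrl: "https://example.com/example-lib",
  // Each array element is one code sample; the model id is interpolated.
  snippets: (model) => [
    `from example_lib import load\n\nmodel = load("${model.id}")`,
  ],
};

// Keyed like MODEL_LIBRARIES_UI_ELEMENTS; libraries may be absent.
const UI_ELEMENTS: Partial<Record<"example-lib" | "other-lib", LibraryUiElement>> = {
  "example-lib": exampleLibrary,
};
```

Keeping `snippets` a function rather than a static string lets each sample embed the model id and other fields from `ModelData`.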

|

665

|

+

type PerLanguageMapping = Map<PipelineType, string[] | WidgetExample[]>;

|

|

666

|

+

declare const MAPPING_DEFAULT_WIDGET: Map<string, PerLanguageMapping>;

|

|

667

|

+

|
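`MAPPING_DEFAULT_WIDGET` is a map of maps: the outer key is a string and each value maps a pipeline type to either plain strings or `WidgetExample` objects. A minimal lookup sketch follows. It assumes the outer key is a language code and that a bare string stands for `{ text: ... }`, neither of which the declarations confirm. The example texts and the helper `getDefaultExamples` are made up.

```typescript
// Reduced stand-ins for the real types.
type PipelineType = "fill-mask" | "text-classification";
interface WidgetExample { text: string }
type PerLanguageMapping = Map<PipelineType, string[] | WidgetExample[]>;

// Illustrative defaults for one language.
const MAPPING_EN: PerLanguageMapping = new Map<PipelineType, string[] | WidgetExample[]>([
  ["text-classification", ["I like you. I love you"]],
  ["fill-mask", [{ text: "Paris is the <mask> of France." }]],
]);

const MAPPING_DEFAULT_WIDGET = new Map<string, PerLanguageMapping>([["en", MAPPING_EN]]);

// Hypothetical helper: looks up both levels and normalizes strings to objects.
function getDefaultExamples(language: string, task: PipelineType): WidgetExample[] {
  const examples: (string | WidgetExample)[] = MAPPING_DEFAULT_WIDGET.get(language)?.get(task) ?? [];
  return examples.map((example) => (typeof example === "string" ? { text: example } : example));
}
```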

|

668

|

+

/**

|

|

669

|

+

* Model libraries compatible with each ML task

|

|

670

|

+

*/

|

|

671

|

+

declare const TASKS_MODEL_LIBRARIES: Record<PipelineType, ModelLibraryKey[]>;

|

|

672

|

+

declare const TASKS_DATA: Record<PipelineType, TaskData | undefined>;

|

|

414

673

|

interface ExampleRepo {

|

|

415

674

|

description: string;

|

|

416

675

|

id: string;

|

|

@@ -461,7 +720,109 @@ interface TaskData {

|

|

|

461

720

|

widgetModels: string[];

|

|

462

721

|

youtubeId?: string;

|

|

463

722

|

}

|

|

723

|

+

type TaskDataCustom = Omit<TaskData, "id" | "label" | "libraries">;

|

|

464

724

|

|

|

465

|

-

declare const

|

|

725

|

+

declare const TAG_NFAA_CONTENT = "not-for-all-audiences";

|

|

726

|

+

declare const OTHER_TAGS_SUGGESTIONS: string[];

|

|

727

|

+

declare const TAG_TEXT_GENERATION_INFERENCE = "text-generation-inference";

|

|

728

|

+

declare const TAG_CUSTOM_CODE = "custom_code";

|

|

729

|

+

|

|

730

|

+

declare function getModelInputSnippet(model: ModelData, noWrap?: boolean, noQuotes?: boolean): string;

|

|

731

|

+

|

|

732

|

+

declare const inputs_getModelInputSnippet: typeof getModelInputSnippet;

|

|

733

|

+

declare namespace inputs {

|

|

734

|

+

export {

|

|

735

|

+

inputs_getModelInputSnippet as getModelInputSnippet,

|

|

736

|

+

};

|

|

737

|

+

}

|

|

738

|

+

|

|

739

|

+

declare const snippetBasic$2: (model: ModelData, accessToken: string) => string;

|

|

740

|

+

declare const snippetZeroShotClassification$2: (model: ModelData, accessToken: string) => string;

|

|

741

|

+

declare const snippetFile$2: (model: ModelData, accessToken: string) => string;

|

|

742

|

+

declare const curlSnippets: Partial<Record<PipelineType, (model: ModelData, accessToken: string) => string>>;

|

|

743

|

+

declare function getCurlInferenceSnippet(model: ModelData, accessToken: string): string;

|

|

744

|

+

declare function hasCurlInferenceSnippet(model: ModelData): boolean;

|

|

745

|

+

|
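The curl declarations above follow a dispatch-table shape: a `Partial<Record<PipelineType, ...>>` of snippet builders plus a `has...` and a `get...` function that take the model. The sketch below is one way such a table could be wired up, not the package's implementation. The placeholder URL, the generated command text, and the empty-string fallback are assumptions.

```typescript
// Reduced stand-ins for the real types.
type PipelineType = "text-classification" | "zero-shot-classification" | "image-classification";
interface ModelData { id: string; pipeline_tag?: PipelineType }
type SnippetBuilder = (model: ModelData, accessToken: string) => string;

// Placeholder endpoint and payload; the real snippet text is not shown in this diff.
const snippetBasic: SnippetBuilder = (model, accessToken) =>
  `curl https://inference.example/models/${model.id} \\
  -X POST \\
  -H "Authorization: Bearer ${accessToken}" \\
  -d '{"inputs": "..."}'`;

// Tasks without an entry have no curl snippet.
const curlSnippets: Partial<Record<PipelineType, SnippetBuilder>> = {
  "text-classification": snippetBasic,
};

function hasCurlInferenceSnippet(model: ModelData): boolean {
  return model.pipeline_tag !== undefined && model.pipeline_tag in curlSnippets;
}

// Assumption: an empty string is returned when no builder is registered.
function getCurlInferenceSnippet(model: ModelData, accessToken: string): string {
  const builder = model.pipeline_tag ? curlSnippets[model.pipeline_tag] : undefined;
  return builder ? builder(model, accessToken) : "";
}
```

The Python and JavaScript declarations later in this hunk have the same table-plus-accessors shape, so the same wiring would apply there.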

|

746

|

+

declare const curl_curlSnippets: typeof curlSnippets;

|

|

747

|

+

declare const curl_getCurlInferenceSnippet: typeof getCurlInferenceSnippet;

|

|

748

|

+

declare const curl_hasCurlInferenceSnippet: typeof hasCurlInferenceSnippet;

|

|

749

|

+

declare namespace curl {

|

|

750

|

+

export {

|

|

751

|

+

curl_curlSnippets as curlSnippets,

|

|

752

|

+

curl_getCurlInferenceSnippet as getCurlInferenceSnippet,

|

|

753

|

+

curl_hasCurlInferenceSnippet as hasCurlInferenceSnippet,

|

|

754

|

+

snippetBasic$2 as snippetBasic,

|

|

755

|

+

snippetFile$2 as snippetFile,

|

|

756

|

+

snippetZeroShotClassification$2 as snippetZeroShotClassification,

|

|

757

|

+

};

|

|

758

|

+

}

|

|

759

|

+

|

|

760

|

+

declare const snippetZeroShotClassification$1: (model: ModelData) => string;

|

|

761

|

+

declare const snippetBasic$1: (model: ModelData) => string;

|

|

762

|

+

declare const snippetFile$1: (model: ModelData) => string;

|

|

763

|

+

declare const snippetTextToImage$1: (model: ModelData) => string;

|

|

764

|

+

declare const snippetTextToAudio$1: (model: ModelData) => string;

|

|

765

|

+

declare const pythonSnippets: Partial<Record<PipelineType, (model: ModelData) => string>>;

|

|

766

|

+

declare function getPythonInferenceSnippet(model: ModelData, accessToken: string): string;

|

|

767

|

+

declare function hasPythonInferenceSnippet(model: ModelData): boolean;

|

|

768

|

+

|

|

769

|

+

declare const python_getPythonInferenceSnippet: typeof getPythonInferenceSnippet;

|

|

770

|

+

declare const python_hasPythonInferenceSnippet: typeof hasPythonInferenceSnippet;

|

|

771

|

+

declare const python_pythonSnippets: typeof pythonSnippets;

|

|

772

|

+

declare namespace python {

|

|

773

|

+

export {

|

|

774

|

+

python_getPythonInferenceSnippet as getPythonInferenceSnippet,

|

|

775

|

+

python_hasPythonInferenceSnippet as hasPythonInferenceSnippet,

|

|

776

|

+

python_pythonSnippets as pythonSnippets,

|

|

777

|

+

snippetBasic$1 as snippetBasic,

|

|

778

|

+

snippetFile$1 as snippetFile,

|

|

779

|

+

snippetTextToAudio$1 as snippetTextToAudio,

|

|

780

|

+

snippetTextToImage$1 as snippetTextToImage,

|

|

781

|

+

snippetZeroShotClassification$1 as snippetZeroShotClassification,

|

|

782

|

+

};

|

|

783

|

+

}

|

|

784

|

+

|

|

785

|

+

declare const snippetBasic: (model: ModelData, accessToken: string) => string;

|

|

786

|

+

declare const snippetZeroShotClassification: (model: ModelData, accessToken: string) => string;

|

|

787

|

+

declare const snippetTextToImage: (model: ModelData, accessToken: string) => string;

|

|

788

|

+

declare const snippetTextToAudio: (model: ModelData, accessToken: string) => string;

|

|

789

|

+

declare const snippetFile: (model: ModelData, accessToken: string) => string;

|

|

790

|

+

declare const jsSnippets: Partial<Record<PipelineType, (model: ModelData, accessToken: string) => string>>;

|

|

791

|

+

declare function getJsInferenceSnippet(model: ModelData, accessToken: string): string;

|

|

792

|

+

declare function hasJsInferenceSnippet(model: ModelData): boolean;

|

|

793

|

+

|

|

794

|

+

declare const js_getJsInferenceSnippet: typeof getJsInferenceSnippet;

|

|

795

|

+

declare const js_hasJsInferenceSnippet: typeof hasJsInferenceSnippet;

|

|

796

|

+

declare const js_jsSnippets: typeof jsSnippets;

|

|

797

|

+

declare const js_snippetBasic: typeof snippetBasic;

|

|

798

|

+

declare const js_snippetFile: typeof snippetFile;

|

|

799

|

+

declare const js_snippetTextToAudio: typeof snippetTextToAudio;

|

|

800

|

+

declare const js_snippetTextToImage: typeof snippetTextToImage;

|

|

801

|

+

declare const js_snippetZeroShotClassification: typeof snippetZeroShotClassification;

|

|

802

|

+

declare namespace js {

|

|

803

|

+

export {

|

|

804

|

+

js_getJsInferenceSnippet as getJsInferenceSnippet,

|

|

805

|

+

js_hasJsInferenceSnippet as hasJsInferenceSnippet,

|

|

806

|

+

js_jsSnippets as jsSnippets,

|

|

807

|

+

js_snippetBasic as snippetBasic,

|

|

808

|

+

js_snippetFile as snippetFile,

|

|

809

|

+

js_snippetTextToAudio as snippetTextToAudio,

|

|

810

|

+

js_snippetTextToImage as snippetTextToImage,

|

|

811

|

+

js_snippetZeroShotClassification as snippetZeroShotClassification,

|

|

812

|

+

};

|

|

813

|

+

}

|

|

814

|

+

|

|

815

|

+

declare const index_curl: typeof curl;

|

|

816

|

+

declare const index_inputs: typeof inputs;

|

|

817

|

+

declare const index_js: typeof js;

|

|

818

|

+

declare const index_python: typeof python;

|

|

819

|

+

declare namespace index {

|

|

820

|

+

export {

|

|

821

|

+

index_curl as curl,

|

|

822

|

+

index_inputs as inputs,

|

|

823

|

+

index_js as js,

|

|

824

|

+

index_python as python,

|

|

825

|

+

};

|

|

826

|

+

}

|

|

466

827

|

|

|

467

|

-

export { ExampleRepo, MODALITIES, MODALITY_LABELS, Modality, ModelLibrary, ModelLibraryKey, PIPELINE_DATA, PIPELINE_TYPES, PipelineData, PipelineType, TASKS_DATA, TaskData, TaskDemo, TaskDemoEntry };

|

|

828

|

+

export { ALL_DISPLAY_MODEL_LIBRARY_KEYS, ExampleRepo, InferenceDisplayability, LIBRARY_TASK_MAPPING_EXCLUDING_TRANSFORMERS, LibraryUiElement, MAPPING_DEFAULT_WIDGET, MODALITIES, MODALITY_LABELS, MODEL_LIBRARIES_UI_ELEMENTS, Modality, ModelData, ModelLibrary, ModelLibraryKey, OTHER_TAGS_SUGGESTIONS, PIPELINE_DATA, PIPELINE_TYPES, PIPELINE_TYPES_SET, PipelineData, PipelineType, SUBTASK_TYPES, TAG_CUSTOM_CODE, TAG_NFAA_CONTENT, TAG_TEXT_GENERATION_INFERENCE, TASKS_DATA, TASKS_MODEL_LIBRARIES, TaskData, TaskDataCustom, TaskDemo, TaskDemoEntry, TransformersInfo, WidgetExample, WidgetExampleAssetAndPromptInput, WidgetExampleAssetAndTextInput, WidgetExampleAssetAndZeroShotInput, WidgetExampleAssetInput, WidgetExampleAttribute, WidgetExampleOutput, WidgetExampleOutputAnswerScore, WidgetExampleOutputLabels, WidgetExampleOutputText, WidgetExampleOutputUrl, WidgetExampleSentenceSimilarityInput, WidgetExampleStructuredDataInput, WidgetExampleTableDataInput, WidgetExampleTextAndContextInput, WidgetExampleTextAndTableInput, WidgetExampleTextInput, WidgetExampleZeroShotTextInput, index as snippets };

|