cobweb-launcher 1.0.5__py3-none-any.whl → 3.2.18__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- cobweb/__init__.py +5 -1

- cobweb/base/__init__.py +3 -3

- cobweb/base/common_queue.py +37 -16

- cobweb/base/item.py +40 -14

- cobweb/base/{log.py → logger.py} +3 -3

- cobweb/base/request.py +744 -47

- cobweb/base/response.py +381 -13

- cobweb/base/seed.py +98 -50

- cobweb/base/task_queue.py +180 -0

- cobweb/base/test.py +257 -0

- cobweb/constant.py +39 -2

- cobweb/crawlers/__init__.py +1 -2

- cobweb/crawlers/crawler.py +27 -0

- cobweb/db/__init__.py +1 -0

- cobweb/db/api_db.py +83 -0

- cobweb/db/redis_db.py +118 -27

- cobweb/launchers/__init__.py +3 -1

- cobweb/launchers/distributor.py +141 -0

- cobweb/launchers/launcher.py +103 -130

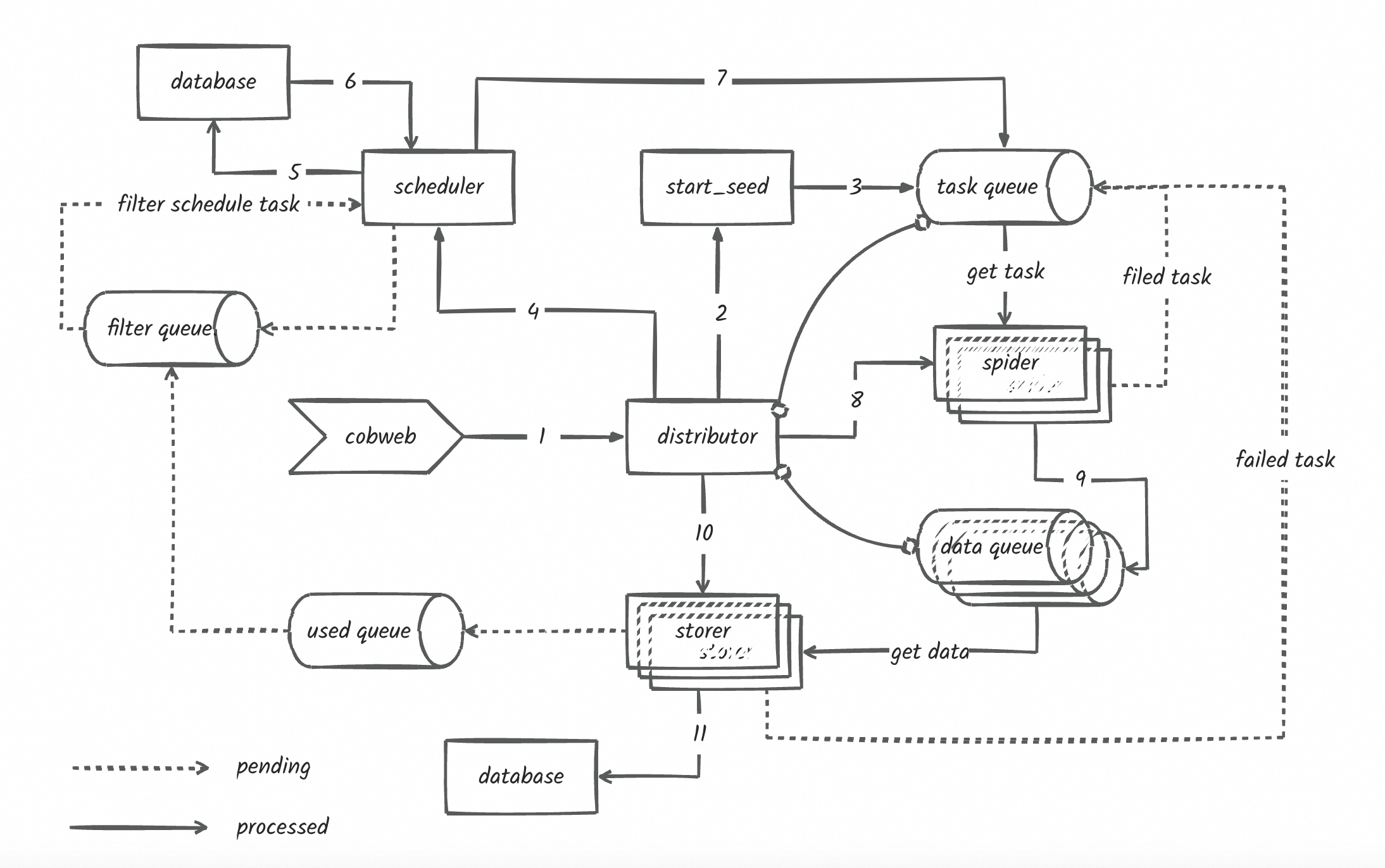

- cobweb/launchers/uploader.py +68 -0

- cobweb/log_dots/__init__.py +2 -0

- cobweb/log_dots/dot.py +258 -0

- cobweb/log_dots/loghub_dot.py +53 -0

- cobweb/pipelines/__init__.py +3 -2

- cobweb/pipelines/pipeline.py +19 -0

- cobweb/pipelines/pipeline_csv.py +25 -0

- cobweb/pipelines/pipeline_loghub.py +54 -0

- cobweb/schedulers/__init__.py +1 -0

- cobweb/schedulers/scheduler.py +66 -0

- cobweb/schedulers/scheduler_with_redis.py +189 -0

- cobweb/setting.py +37 -38

- cobweb/utils/__init__.py +5 -2

- cobweb/utils/bloom.py +58 -0

- cobweb/{base → utils}/decorators.py +14 -12

- cobweb/utils/dotting.py +300 -0

- cobweb/utils/oss.py +113 -86

- cobweb/utils/tools.py +3 -15

- cobweb_launcher-3.2.18.dist-info/METADATA +193 -0

- cobweb_launcher-3.2.18.dist-info/RECORD +44 -0

- {cobweb_launcher-1.0.5.dist-info → cobweb_launcher-3.2.18.dist-info}/WHEEL +1 -1

- cobweb/crawlers/base_crawler.py +0 -121

- cobweb/crawlers/file_crawler.py +0 -181

- cobweb/launchers/launcher_pro.py +0 -174

- cobweb/pipelines/base_pipeline.py +0 -54

- cobweb/pipelines/loghub_pipeline.py +0 -34

- cobweb_launcher-1.0.5.dist-info/METADATA +0 -48

- cobweb_launcher-1.0.5.dist-info/RECORD +0 -32

- {cobweb_launcher-1.0.5.dist-info → cobweb_launcher-3.2.18.dist-info}/LICENSE +0 -0

- {cobweb_launcher-1.0.5.dist-info → cobweb_launcher-3.2.18.dist-info}/top_level.txt +0 -0

cobweb/utils/oss.py

CHANGED

The previous 86-line file is removed in full. Only fragments of old lines 2 to 5 are legible in this copy of the diff, and the text of the other removed lines is not shown. The new 113-line file consists entirely of a commented-out `OssUtil` class.

```diff
@@ -1,86 +1,113 @@
-from
-from
-from
-from cobweb.
+#
+# from cobweb import setting
+# from requests import Response
+# from oss2 import Auth, Bucket, models, PartIterator
+# from cobweb.exceptions import oss_db_exception
+# from cobweb.utils.decorators import decorator_oss_db
+#
+#
+# class OssUtil:
+#
+#     def __init__(
+#             self,
+#             bucket=None,
+#             endpoint=None,
+#             access_key=None,
+#             secret_key=None,
+#             chunk_size=None,
+#             min_upload_size=None,
+#             **kwargs
+#     ):
+#         self.bucket = bucket or setting.OSS_BUCKET
+#         self.endpoint = endpoint or setting.OSS_ENDPOINT
+#         self.chunk_size = int(chunk_size or setting.OSS_CHUNK_SIZE)
+#         self.min_upload_size = int(min_upload_size or setting.OSS_MIN_UPLOAD_SIZE)
+#
+#         self.failed_count = 0
+#         self._kw = kwargs
+#
+#         self._auth = Auth(
+#             access_key_id=access_key or setting.OSS_ACCESS_KEY,
+#             access_key_secret=secret_key or setting.OSS_SECRET_KEY
+#         )
+#         self._client = Bucket(
+#             auth=self._auth,
+#             endpoint=self.endpoint,
+#             bucket_name=self.bucket,
+#             **self._kw
+#         )
+#
+#     def failed(self):
+#         self.failed_count += 1
+#         if self.failed_count >= 5:
+#             self._client = Bucket(
+#                 auth=self._auth,
+#                 endpoint=self.endpoint,
+#                 bucket_name=self.bucket,
+#                 **self._kw
+#             )
+#
+#     def exists(self, key: str) -> bool:
+#         try:
+#             result = self._client.object_exists(key)
+#             self.failed_count = 0
+#             return result
+#         except Exception as e:
+#             self.failed()
+#             raise e
+#
+#     def head(self, key: str) -> models.HeadObjectResult:
+#         return self._client.head_object(key)
+#
+#     @decorator_oss_db(exception=oss_db_exception.OssDBInitPartError)
+#     def init_part(self, key) -> models.InitMultipartUploadResult:
+#         """Initialize a multipart upload"""
+#         return self._client.init_multipart_upload(key)
+#
+#     @decorator_oss_db(exception=oss_db_exception.OssDBPutObjError)
+#     def put(self, key, data) -> models.PutObjectResult:
+#         """Upload a file"""
+#         return self._client.put_object(key, data)
+#
+#     @decorator_oss_db(exception=oss_db_exception.OssDBPutPartError)
+#     def put_part(self, key, upload_id, position, data) -> models.PutObjectResult:
+#         """Upload a part"""
+#         return self._client.upload_part(key, upload_id, position, data)
+#
+#     def list_part(self, key, upload_id): # -> List[models.ListPartsResult]:
+#         """List uploaded parts"""
+#         return [part_info for part_info in PartIterator(self._client, key, upload_id)]
+#
+#     @decorator_oss_db(exception=oss_db_exception.OssDBMergeError)
+#     def merge(self, key, upload_id, parts=None) -> models.PutObjectResult:
+#         """Merge the parts"""
+#         headers = None if parts else {"x-oss-complete-all": "yes"}
+#         return self._client.complete_multipart_upload(key, upload_id, parts, headers=headers)
+#
+#     @decorator_oss_db(exception=oss_db_exception.OssDBAppendObjError)
+#     def append(self, key, position, data) -> models.AppendObjectResult:
+#         """Append upload"""
+#         return self._client.append_object(key, position, data)
+#
+#     def iter_data(self, data, chunk_size=None):
+#         chunk_size = chunk_size or self.chunk_size
+#         if isinstance(data, Response):
+#             for part_data in data.iter_content(chunk_size):
+#                 yield part_data
+#         if isinstance(data, bytes):
+#             for i in range(0, len(data), chunk_size):
+#                 yield data[i:i + chunk_size]
+#
+#     def assemble(self, ready_data, data, chunk_size=None):
+#         upload_data = b""
+#         ready_data = ready_data + data
+#         chunk_size = chunk_size or self.chunk_size
+#         if len(ready_data) >= chunk_size:
+#             upload_data = ready_data[:chunk_size]
+#             ready_data = ready_data[chunk_size:]
+#         return ready_data, upload_data
+#
+#     def content_length(self, key: str) -> int:
+#         head = self.head(key)
+#         return head.content_length
+#
```
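The commented-out `iter_data` and `assemble` methods split a payload into chunks and re-buffer them so that each uploaded part is `chunk_size` bytes, carrying the remainder into the next call. A minimal standalone sketch of that buffering idea, using only the standard library, is shown here; the function name `rechunk` is hypothetical and not part of cobweb.

```python
from typing import Iterable, Iterator


def rechunk(pieces: Iterable[bytes], part_size: int) -> Iterator[bytes]:
    """Re-buffer arbitrarily sized byte pieces into parts of exactly
    `part_size` bytes; only the final part may be shorter.

    Hypothetical helper illustrating the idea behind the commented-out
    OssUtil.assemble(): append new data to a pending buffer and cut a
    full part off the front once enough bytes have accumulated.
    """
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    buffer = b""
    for piece in pieces:
        buffer += piece
        # assemble() cuts at most one part per call; here every complete
        # part currently in the buffer is emitted before reading more.
        while len(buffer) >= part_size:
            yield buffer[:part_size]
            buffer = buffer[part_size:]
    if buffer:
        # Leftover bytes become the shorter last part.
        yield buffer
```

For example, `list(rechunk([b"abc", b"defgh", b"ij"], 4))` gives `[b"abcd", b"efgh", b"ij"]`. Draining in a `while` loop matters when a single incoming piece is larger than the part size, a case in which one `assemble()` call would leave more than a full part in `ready_data`.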

cobweb/utils/tools.py

CHANGED

```diff
@@ -1,5 +1,6 @@
 import re
 import hashlib
+import inspect
 from typing import Union
 from importlib import import_module
 
@@ -10,18 +11,6 @@ def md5(text: Union[str, bytes]) -> str:
     return hashlib.md5(text).hexdigest()
 
 
-def build_path(site, url, file_type):
-    return f"{site}/{md5(url)}.{file_type}"
-
-
-def format_size(content_length: int) -> str:
-    units = ["KB", "MB", "GB", "TB"]
-    for i in range(4):
-        num = content_length / (1024 ** (i + 1))
-        if num < 1024:
-            return f"{round(num, 2)} {units[i]}"
-
-
 def dynamic_load_class(model_info):
     if isinstance(model_info, str):
         if "import" in model_info:
@@ -35,8 +24,7 @@ def dynamic_load_class(model_info):
             model = import_module(model_path)
             class_object = getattr(model, class_name)
         return class_object
+    elif inspect.isclass(model_info):
+        return model_info
     raise TypeError()
 
-
-def download_log_info(item:dict) -> str:
-    return "\n".join([" " * 12 + f"{k.ljust(14)}: {v}" for k, v in item.items()])
```
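The new `inspect.isclass` branch lets `dynamic_load_class` accept an already-imported class in addition to an import string. The middle of the function lies outside the diff, so the following is an illustration rather than the package's code: it handles only the `"from <module> import <Name>"` string form suggested by the visible `if "import" in model_info:` check, plus the new class pass-through. The name `load_class` is hypothetical.

```python
import inspect
import re
from importlib import import_module


def load_class(model_info):
    """Return a class from an import string, or pass a class through.

    Hypothetical sketch: only "from <module> import <Name>" strings are
    handled; cobweb's own string parsing is not visible in the diff.
    """
    if isinstance(model_info, str):
        match = re.fullmatch(r"\s*from\s+([\w.]+)\s+import\s+(\w+)\s*", model_info)
        if match is None:
            raise ValueError(f"unsupported import string: {model_info!r}")
        module_path, class_name = match.groups()
        module = import_module(module_path)
        return getattr(module, class_name)
    elif inspect.isclass(model_info):
        # Mirrors the branch added in 3.2.18: classes are returned unchanged.
        return model_info
    raise TypeError(f"expected str or class, got {type(model_info).__name__}")
```

Accepting a class directly means callers can pass `SomePipeline` instead of `"from my_pkg import SomePipeline"`, which avoids a string that only fails at runtime.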

cobweb_launcher-3.2.18.dist-info/METADATA

ADDED

```diff
@@ -0,0 +1,193 @@
+Metadata-Version: 2.1
+Name: cobweb-launcher
+Version: 3.2.18
+Summary: spider_hole
+Home-page: https://github.com/Juannie-PP/cobweb
+Author: Juannie-PP
+Author-email: 2604868278@qq.com
+License: MIT
+Keywords: cobweb-launcher,cobweb,spider
+Platform: UNKNOWN
+Classifier: Programming Language :: Python :: 3
+Classifier: License :: OSI Approved :: MIT License
+Classifier: Operating System :: OS Independent
+Requires-Python: >=3.7
+Description-Content-Type: text/markdown
+Requires-Dist: requests>=2.19.1
+Requires-Dist: redis>=4.4.4
+Requires-Dist: aliyun-log-python-sdk
+
+# cobweb
+cobweb is a Python-based distributed crawler scheduling framework. It currently supports distributed crawling and single-machine crawling, as well as custom databases, custom data storage, custom data processing, and more.
+
+cobweb consists of three modules and one configuration file: the Launcher, the Crawler, the Pipeline (storage), and the setting configuration file.
+1. Launcher: starts crawl tasks and controls their execution flow, as well as data storage and data processing.
+The framework provides two launcher modes, LauncherAir and LauncherPro, for single-machine crawling and distributed scheduling respectively.
+2. Crawler: controls the crawl flow, data downloading, and data processing.
+The framework provides a basic crawler that controls the crawl flow, data downloading, and data processing; when creating a task, users can also define custom request, download, and parse methods (see the usage guide).
+3. Pipeline: stores the collected data and supports custom data storage and processing. The framework provides two storage options, Console and Loghub; users can also subclass the abstract Pipeline class to define their own.
+4. setting file: configures parameters such as the crawler, storage, queue length, and number of crawler threads. The framework provides a default configuration, which users can customize.
```
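The README's module overview describes a chain in which a request hook produces requests, a download hook turns them into responses, a parse hook turns responses into storable items, and a pipeline stores those items; the later sections add that a hook may yield an item to skip the remaining steps. The sketch below models that routing with plain generators. Every class in it is a hypothetical stand-in, not cobweb's `Seed`, `Request`, `Response`, `BaseItem`, or `Pipeline`.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Iterator, List


# Hypothetical stand-ins for cobweb's Seed, Request, Response and item classes.
@dataclass
class Seed:
    url: str


@dataclass
class Request:
    seed: Seed


@dataclass
class Response:
    seed: Seed
    body: str


@dataclass
class Item:
    seed: Seed
    data: dict


@dataclass
class MiniCrawler:
    request: Callable[[Seed], Iterator[Any]]
    download: Callable[[Request], Iterator[Any]]
    parse: Callable[[Response], Iterator[Any]]
    stored: List[Item] = field(default_factory=list)  # plays the Pipeline's role

    def run_seed(self, seed: Seed) -> None:
        for req in self.request(seed):
            if isinstance(req, Item):
                # A request hook may yield an item to skip download and parsing.
                self.stored.append(req)
                continue
            for resp in self.download(req):
                if isinstance(resp, Item):
                    # A download hook may yield an item to skip parsing.
                    self.stored.append(resp)
                    continue
                for item in self.parse(resp):
                    self.stored.append(item)


if __name__ == "__main__":
    def request(seed):
        yield Request(seed)

    def download(req):
        yield Response(req.seed, "<html>hi</html>")

    def parse(resp):
        yield Item(resp.seed, {"length": len(resp.body)})

    crawler = MiniCrawler(request, download, parse)
    crawler.run_seed(Seed("https://example.com"))
    print(crawler.stored[0].data)  # {'length': 15}
```

Routing on the yielded object's type is what lets each hook either continue the chain or short-circuit straight to storage.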

```diff
+## Installation
+```
+pip3 install --upgrade cobweb-launcher
+```
+## Usage
+### 1. Creating a task
+- Creating a Launcher task
+```python
+from cobweb import Launcher
+
+# Create the launcher
+app = Launcher(task="test", project="test")
+
+# Set the crawl seeds
+app.SEEDS = [{
+    "url": "https://www.baidu.com"
+}]
+...
+# Start the task
+app.start()
+```
+### 2. Customizing configuration parameters
+- Using a custom setting file, passed as an import string
+> Default configuration file: import cobweb.setting
+> Not recommended!!! Currently buggy, use at your own risk...
+For example, with a custom setting.py file created in the same directory:
+```python
+from cobweb import Launcher
+
+app = Launcher(
+    task="test",
+    project="test",
+    setting="import setting"
+)
+
+...
+
+app.start()
+```
+- Overriding individual values from setting
+```python
+from cobweb import Launcher
+
+# Create the launcher
+app = Launcher(
+    task="test",
+    project="test",
+    REDIS_CONFIG = {
+        "host": ...,
+        "password":...,
+        "port": ...,
+        "db": ...
+    }
+)
+...
+# Start the task
+app.start()
+```
+### 3. Custom request
+`@app.request` wraps a custom request method as a decorator. It runs before the request is sent, returns a Request object or an object inheriting from BaseItem, and is used to control the request parameters.
+```python
+from typing import Union
+from cobweb import Launcher
+from cobweb.base import Seed, Request, BaseItem
+
+app = Launcher(
+    task="test",
+    project="test"
+)
+
+...
+
+@app.request
+def request(seed: Seed) -> Union[Request, BaseItem]:
+    # Customize headers, proxies, request parameters, etc.
+    proxies = {"http": ..., "https": ...}
+    yield Request(seed.url, seed, ..., proxies=proxies, timeout=15)
+    # yield xxxItem(seed, ...) # skip the request and parsing and go straight to data storage
+
+...
+
+app.start()
+```
+> Default request method
+> def request(seed: Seed) -> Union[Request, BaseItem]:
+>     yield Request(seed.url, seed, timeout=5)
```
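Section 3 registers a custom request hook with the `@app.request` decorator and falls back to a default hook otherwise. One common way to implement such a decorator is to store the function on the application object and return it unchanged. `HookRegistry`, `default_request`, and `run_request` below are hypothetical names illustrating that pattern, not cobweb's `Launcher` implementation; the generator check mirrors the `isgenerator` checks in the deleted `base_crawler.py`.

```python
import inspect
from typing import Callable, Dict, Iterator, List


def default_request(seed: str) -> Iterator[str]:
    # Hypothetical default hook; per the README, cobweb's real default
    # yields Request(seed.url, seed, timeout=5).
    yield f"GET {seed}"


class HookRegistry:
    """Hypothetical sketch of decorator-based hook registration."""

    def __init__(self) -> None:
        self._hooks: Dict[str, Callable] = {"request": default_request}

    def request(self, func: Callable) -> Callable:
        # Used as @app.request: store the custom hook, then return it
        # unchanged so it stays callable as an ordinary function.
        self._hooks["request"] = func
        return func

    def run_request(self, seed: str) -> List[str]:
        result = self._hooks["request"](seed)
        # Hooks are expected to be generator functions, as in the README.
        if not inspect.isgenerator(result):
            raise TypeError("request hook must be a generator function")
        return list(result)


if __name__ == "__main__":
    app = HookRegistry()
    print(app.run_request("https://example.com"))  # ['GET https://example.com']

    @app.request
    def my_request(seed):
        yield f"GET {seed} via proxy"

    print(app.run_request("https://example.com"))  # ['GET https://example.com via proxy']
```

Returning `func` from the decorator keeps the decorated name bound to the original function, so it can still be unit-tested on its own.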

```diff
+### 4. Custom download
+`@app.download` wraps a custom download method as a decorator. It runs when the request is made, returns a Response object or an object inheriting from BaseItem, and is used to control the request parameters.
+```python
+from typing import Union
+from cobweb import Launcher
+from cobweb.base import Request, Response, BaseItem
+
+app = Launcher(
+    task="test",
+    project="test"
+)
+
+...
+
+@app.download
+def download(item: Request) -> Union[BaseItem, Response]:
+    ...
+    response = ...
+    ...
+    yield Response(item.seed, response, ...) # return a Response object for parsing
+    # yield xxxItem(seed, ...) # skip the request and parsing and go straight to data storage
+
+...
+
+app.start()
+```
+> Default download method
+> def download(item: Request) -> Union[Seed, BaseItem, Response, str]:
+>     response = item.download()
+>     yield Response(item.seed, response, **item.to_dict)
+### 5. Custom parsing
+Custom parsing consists of a storage data class and a parse method. The storage data class inherits from BaseItem and specifies the storage table name and fields;
+the parse method yields objects inheriting from BaseItem, which drive the data storage flow.
+```python
+from typing import Union
+from cobweb import Launcher
+from cobweb.base import Seed, Response, BaseItem
+
+class TestItem(BaseItem):
+    __TABLE__ = "test_data" # table name
+    __FIELDS__ = "field1, field2, field3" # field names
+
+app = Launcher(
+    task="test",
+    project="test"
+)
+
+...
+
+@app.parse
+def parse(item: Response) -> Union[Seed, BaseItem]:
+    ...
+    yield TestItem(item.seed, field1=..., field2=..., field3=...)
+    # yield Seed(...) # build a new seed and push it to the consumer queue
+
+...
+
+app.start()
+```
+> Default parse method
+> def parse(item: Request) -> Union[Seed, BaseItem]:
+>     upload_item = item.to_dict
+>     upload_item["text"] = item.response.text
+>     yield ConsoleItem(item.seed, data=json.dumps(upload_item, ensure_ascii=False))
```
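In section 5 a storage class names its table with `__TABLE__` and lists its columns as a comma-separated `__FIELDS__` string, and the default parse method serializes data with `json.dumps(..., ensure_ascii=False)`. The sketch below shows one way such a declaration can be turned into a validated, JSON-serializable row. `SketchItem`, `ExampleItem`, `fields`, and `to_json` are hypothetical and do not reproduce `cobweb.base.BaseItem`.

```python
import json
from typing import Any, Dict, Tuple


class SketchItem:
    """Hypothetical item base class driven by __TABLE__ and __FIELDS__."""

    __TABLE__ = ""
    __FIELDS__ = ""

    def __init__(self, seed: Any, **data: Any) -> None:
        self.seed = seed
        fields = self.fields()
        unknown = set(data) - set(fields)
        if unknown:
            raise KeyError(f"unknown fields for {self.__TABLE__}: {sorted(unknown)}")
        # Missing fields default to None so every row has the same columns.
        self.data: Dict[str, Any] = {name: data.get(name) for name in fields}

    @classmethod
    def fields(cls) -> Tuple[str, ...]:
        # "field1, field2, field3" -> ("field1", "field2", "field3")
        return tuple(n.strip() for n in cls.__FIELDS__.split(",") if n.strip())

    def to_json(self) -> str:
        # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) human-readable.
        row = {"table": self.__TABLE__, "data": self.data}
        return json.dumps(row, ensure_ascii=False)


class ExampleItem(SketchItem):
    __TABLE__ = "test_data"
    __FIELDS__ = "field1, field2, field3"
```

Names such as `__TABLE__` that both start and end with double underscores are not name-mangled, so `self.__TABLE__` resolves to the subclass's value as expected.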

```diff
+## todo
+- [ ] Improve the queues: use the queue wait() mechanism to synchronize module execution?
+- [x] Improve logging: in single-machine mode, write scheduling and saved data to files, and output structured per-task logs
+- [ ] Deduplication filtering (Bloom filter, etc.)
+- [ ] Request validation
+- [ ] Exception callbacks
+- [ ] Failure callbacks
+
+> Flowchart not updated!!!
+
+
+
+
+
```

cobweb_launcher-3.2.18.dist-info/RECORD

ADDED

```diff
@@ -0,0 +1,44 @@
+cobweb/__init__.py,sha256=1V2fOFvCncbsPlyzAOo0o6FB9mJfJfaomIVWgNb4hMk,155
+cobweb/constant.py,sha256=u44MFrduzcuITPto9MwPuAGavfDjzi0FKh2looF-9FY,2988
+cobweb/setting.py,sha256=Mte2hQPo1HQUTtOePIfP69GUR7TjeTA2AedaFLndueI,1679
+cobweb/base/__init__.py,sha256=NanSxJr0WsqjqCNOQAlxlkt-vQEsERHYBzacFC057oI,222
+cobweb/base/common_queue.py,sha256=hYdaM70KrWjvACuLKaGhkI2VqFCnd87NVvWzmnfIg8Q,1423
+cobweb/base/item.py,sha256=1bS4U_3vzI2jzSSeoEbLoLT_5CfgLPopWiEYtaahbvw,1674
+cobweb/base/logger.py,sha256=Vsg1bD4LXW91VgY-ANsmaUu-mD88hU_WS83f7jX3qF8,2011
+cobweb/base/request.py,sha256=8CrnQQ9q4R6pX_DQmeaytboVxXquuQsYH-kfS8ECjIw,28845
+cobweb/base/response.py,sha256=L3sX2PskV744uz3BJ8xMuAoAfGCeh20w8h0Cnd9vLo0,11377
+cobweb/base/seed.py,sha256=ddaWCq_KaWwpmPl1CToJlfCxEEnoJ16kjo6azJs9uls,5000
+cobweb/base/task_queue.py,sha256=2MqGpHGNmK5B-kqv7z420RWyihzB9zgDHJUiLsmtzOI,6402
+cobweb/base/test.py,sha256=N8MDGb94KQeI4pC5rCc2QdohE9_5AgcOyGqKjbMsOEs,9588
+cobweb/crawlers/__init__.py,sha256=msvkB9mTpsgyj8JfNMsmwAcpy5kWk_2NrO1Adw2Hkw0,29
+cobweb/crawlers/crawler.py,sha256=ZZVZJ17RWuvzUFGLjqdvyVZpmuq-ynslJwXQzdm_UdQ,709
+cobweb/db/__init__.py,sha256=uZwSkd105EAwYo95oZQXAfofUKHVIAZZIPpNMy-hm2Q,56
+cobweb/db/api_db.py,sha256=qIhEGB-reKPVFtWPIJYFVK16Us32GBgYjgFjcF-V0GM,3036
+cobweb/db/redis_db.py,sha256=X7dUpW50QcmRPjYlYg7b-fXF_fcjuRRk3DBx2ggetXk,7687
+cobweb/exceptions/__init__.py,sha256=E9SHnJBbhD7fOgPFMswqyOf8SKRDrI_i25L0bSpohvk,32
+cobweb/exceptions/oss_db_exception.py,sha256=iP_AImjNHT3-Iv49zCFQ3rdLnlvuHa3h2BXApgrOYpA,636
+cobweb/launchers/__init__.py,sha256=6_v2jd2sgj6YnOB1nPKiYBskuXVb5xpQnq2YaDGJgQ8,100
+cobweb/launchers/distributor.py,sha256=Ay44iPDNOlrZIW1wgNa4aijRNZsuLLfBz-QlFYXEKDs,5667
+cobweb/launchers/launcher.py,sha256=Shb6o6MAM38d32ybW2gY6qpGmhuiV7jo9TDh0f7rud8,5694
+cobweb/launchers/uploader.py,sha256=QwJOmG7jq2T5sRzrT386zJ0YYNz-hAv0i6GOpoEaRdU,2075
+cobweb/log_dots/__init__.py,sha256=F-3sNXac5uI80j-tNFGPv0lygy00uEI9XfFvK178RC4,55
+cobweb/log_dots/dot.py,sha256=ng6PAtQwR56F-421jJwablAmvMM_n8CR0gBJBJTBMhM,9927
+cobweb/log_dots/loghub_dot.py,sha256=HGbvvVuJqFCOa17-bDeZokhetOmo8J6YpnhL8prRxn4,1741
+cobweb/pipelines/__init__.py,sha256=rtkaaCZ4u1XcxpkDLHztETQjEcLZ_6DXTHjdfcJlyxQ,97
+cobweb/pipelines/pipeline.py,sha256=OgSEZ2DdqofpZcer1Wj1tuBqn8OHVjrYQ5poqt75czQ,357
+cobweb/pipelines/pipeline_csv.py,sha256=TFqxqgVUqkBF6Jott4zd6fvCSxzG67lpafRQtXPw1eg,807
+cobweb/pipelines/pipeline_loghub.py,sha256=zwIa_pcWBB2UNGd32Cu-i1jKGNruTbo2STdxl1WGwZ0,1829
+cobweb/schedulers/__init__.py,sha256=LEya11fdAv0X28YzbQTeC1LQZ156Fj4cyEMGqQHUWW0,49
+cobweb/schedulers/scheduler.py,sha256=Of-BjbBh679R6glc12Kc8iugeERCSusP7jolpCc1UMI,1740
+cobweb/schedulers/scheduler_with_redis.py,sha256=iaImkTldr203Xm5kbBWPJ2fLUUe2mgcmUoDUQYfn5iM,7370
+cobweb/utils/__init__.py,sha256=HV0rDW6PVpIVmN_bKquKufli15zuk50tw1ZZejyZ4Pc,173
+cobweb/utils/bloom.py,sha256=A8xqtHXp7jgRoBuUlpovmq8lhU5y7IEF0FOCjfQDb6s,1855
+cobweb/utils/decorators.py,sha256=ZwVQlz-lYHgXgKf9KRCp15EWPzTDdhoikYUNUCIqNeM,1140
+cobweb/utils/dotting.py,sha256=e0pLEWB8sly1xvXOmJ_uHGQ6Bbw3O9tLcmUBfyNKRmQ,10633
+cobweb/utils/oss.py,sha256=wmToIIVNO8nCQVRmreVaZejk01aCWS35e1NV6cr0yGI,4192
+cobweb/utils/tools.py,sha256=14TCedqt07m4z6bCnFAsITOFixeGr8V3aOKk--L7Cr0,879
+cobweb_launcher-3.2.18.dist-info/LICENSE,sha256=z1rxSIGOyzcSb3orZxFPxzx-0C1vTocmswqBNxpKfEk,1063
+cobweb_launcher-3.2.18.dist-info/METADATA,sha256=bdZ30kO73t3cqozkOyfhr0iEmrDDxPxiqVR9J3oITbE,6115
+cobweb_launcher-3.2.18.dist-info/WHEEL,sha256=tZoeGjtWxWRfdplE7E3d45VPlLNQnvbKiYnx7gwAy8A,92
+cobweb_launcher-3.2.18.dist-info/top_level.txt,sha256=4GETBGNsKqiCUezmT-mJn7tjhcDlu7nLIV5gGgHBW4I,7
+cobweb_launcher-3.2.18.dist-info/RECORD,,
```
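Each RECORD line has the form `path,sha256=<digest>,<size>`. Under the wheel binary distribution format, the digest is the URL-safe base64 encoding of the file's SHA-256 hash with the trailing `=` padding removed, the size is in bytes, and RECORD lists itself with empty hash and size fields (as in the last line). A short sketch for producing or checking such an entry follows; the helper name `record_entry` is ours, not part of any packaging tool.

```python
import base64
import hashlib


def record_entry(path: str, content: bytes) -> str:
    """Build a wheel RECORD line: path,sha256=<urlsafe b64, no padding>,<size>."""
    digest = hashlib.sha256(content).digest()
    # A 32-byte digest encodes to 44 base64 chars; stripping "=" leaves 43.
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(content)}"
```

To verify an installed file, read its bytes, call `record_entry` with the recorded path, and compare against the RECORD line. RECORD is a CSV file, so a real checker should split lines with the `csv` module rather than `str.split(",")`, since paths may contain commas.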

cobweb/crawlers/base_crawler.py

DELETED

```diff
@@ -1,121 +0,0 @@
-import threading
-
-from inspect import isgenerator
-from typing import Union, Callable, Mapping
-
-from cobweb.base import Queue, Seed, BaseItem, Request, Response, logger
-from cobweb.constant import DealModel, LogTemplate
-from cobweb.utils import download_log_info
-from cobweb import setting
-
-
-class Crawler(threading.Thread):
-
-    def __init__(
-            self,
-            upload_queue: Queue,
-            custom_func: Union[Mapping[str, Callable]],
-            launcher_queue: Union[Mapping[str, Queue]],
-    ):
-        super().__init__()
-
-        self.upload_queue = upload_queue
-        for func_name, _callable in custom_func.items():
-            if isinstance(_callable, Callable):
-                self.__setattr__(func_name, _callable)
-
-        self.launcher_queue = launcher_queue
-
-        self.spider_thread_num = setting.SPIDER_THREAD_NUM
-        self.max_retries = setting.SPIDER_MAX_RETRIES
-
-    @staticmethod
-    def request(seed: Seed) -> Union[Request, BaseItem]:
-        stream = True if setting.DOWNLOAD_MODEL else False
-        return Request(seed.url, seed, stream=stream, timeout=5)
-
-    @staticmethod
-    def download(item: Request) -> Union[Seed, BaseItem, Response, str]:
-        response = item.download()
-        yield Response(item.seed, response)
-
-    @staticmethod
-    def parse(item: Response) -> BaseItem:
-        pass
-
-    def get(self) -> Seed:
-        return self.launcher_queue['todo'].pop()
-
-    def spider(self):
-        while True:
-            seed = self.get()
-
-            if not seed:
-                continue
-
-            elif seed.params.retry >= self.max_retries:
-                self.launcher_queue['done'].push(seed)
-                continue
-
-            item = self.request(seed)
-
-            if isinstance(item, Request):
-
-                download_iterators = self.download(item)
-
-                if not isgenerator(download_iterators):
-                    raise TypeError("download function isn't a generator")
-
-                seed_detail_log_info = download_log_info(seed.to_dict)
-
-                try:
-                    for it in download_iterators:
-                        if isinstance(it, Response):
-                            response_detail_log_info = download_log_info(it.to_dict())
-                            logger.info(LogTemplate.download_info.format(
-                                detail=seed_detail_log_info, retry=item.seed.params.retry,
-                                priority=item.seed.params.priority,
-                                seed_version=item.seed.params.seed_version,
-                                identifier=item.seed.params.identifier,
-                                status=it.response, response=response_detail_log_info
-                            ))
-                            parse_iterators = self.parse(it)
-                            if not isgenerator(parse_iterators):
-                                raise TypeError("parse function isn't a generator")
-                            for upload_item in parse_iterators:
-                                if not isinstance(upload_item, BaseItem):
-                                    raise TypeError("upload_item isn't BaseItem subclass")
-                                self.upload_queue.push(upload_item)
-                        elif isinstance(it, BaseItem):
-                            self.upload_queue.push(it)
-                        elif isinstance(it, Seed):
-                            self.launcher_queue['new'].push(it)
-                        elif isinstance(it, str) and it == DealModel.poll:
-                            self.launcher_queue['todo'].push(item)
-                            break
-                        elif isinstance(it, str) and it == DealModel.done:
-                            self.launcher_queue['done'].push(seed)
-                            break
-                        elif isinstance(it, str) and it == DealModel.fail:
-                            seed.params.identifier = DealModel.fail
-                            self.launcher_queue['done'].push(seed)
-                            break
-                        else:
-                            raise TypeError("yield value type error!")
-
-                except Exception as e:
-                    logger.info(LogTemplate.download_exception.format(
-                        detail=seed_detail_log_info, retry=seed.params.retry,
-                        priority=seed.params.priority, seed_version=seed.params.seed_version,
-                        identifier=seed.params.identifier, exception=e
-                    ))
-                    seed.params.retry += 1
-                    self.launcher_queue['todo'].push(seed)
-
-            elif isinstance(item, BaseItem):
-                self.upload_queue.push(item)
-
-    def run(self):
-        for index in range(self.spider_thread_num):
-            threading.Thread(name=f"spider_{index}", target=self.spider).start()
-
```
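The deleted `spider` loop pops a seed from the `todo` queue, moves it to `done` once `seed.params.retry` reaches `SPIDER_MAX_RETRIES`, and on any exception increments the retry counter and pushes the seed back onto `todo`. The single-threaded sketch below isolates that retry policy with `collections.deque`. `FakeSeed` (with a flat `retry` field instead of `params.retry`) and `drain` are hypothetical stand-ins. As a simplification, a seed that is processed without raising is treated as finished here, whereas the old loop moved seeds to `done` only on retry exhaustion or on `DealModel.done` / `DealModel.fail` signals yielded by the download hook.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class FakeSeed:
    url: str
    retry: int = 0


def drain(todo: Deque[FakeSeed], process: Callable[[FakeSeed], None],
          max_retries: int) -> List[FakeSeed]:
    """Process seeds until `todo` is empty; return them in the order they finished.

    Failed seeds are re-queued with retry += 1. A seed whose retry count has
    reached max_retries is marked done without another attempt, matching the
    check the old loop made right after popping a seed.
    """
    done: List[FakeSeed] = []
    while todo:
        seed = todo.popleft()
        if seed.retry >= max_retries:
            done.append(seed)  # retry budget exhausted
            continue
        try:
            process(seed)
        except Exception:
            seed.retry += 1
            todo.append(seed)  # back to the end of the queue
        else:
            done.append(seed)
    return done
```

Re-queueing at the back, as the old code did with `push`, lets other seeds make progress while a failing one waits for its next attempt.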