@rubeneschauzier/solidbench 2.1.0

- package/LICENSE.txt +21 -0

- package/README.md +211 -0

- package/bin/solidbench +2 -0

- package/bin/solidbench.d.ts +2 -0

- package/bin/solidbench.js +6 -0

- package/bin/solidbench.js.map +1 -0

- package/lib/CliRunner.d.ts +1 -0

- package/lib/CliRunner.js +20 -0

- package/lib/CliRunner.js.map +1 -0

- package/lib/Generator.d.ts +76 -0

- package/lib/Generator.js +231 -0

- package/lib/Generator.js.map +1 -0

- package/lib/Server.d.ts +19 -0

- package/lib/Server.js +34 -0

- package/lib/Server.js.map +1 -0

- package/lib/Templates.d.ts +14 -0

- package/lib/Templates.js +19 -0

- package/lib/Templates.js.map +1 -0

- package/lib/commands/CommandGenerate.d.ts +5 -0

- package/lib/commands/CommandGenerate.js +77 -0

- package/lib/commands/CommandGenerate.js.map +1 -0

- package/lib/commands/CommandServe.d.ts +5 -0

- package/lib/commands/CommandServe.js +50 -0

- package/lib/commands/CommandServe.js.map +1 -0

- package/lib/index.d.ts +4 -0

- package/lib/index.js +21 -0

- package/lib/index.js.map +1 -0

- package/package.json +68 -0

- package/templates/enhancer-config-pod.json +29 -0

- package/templates/enhancer-similarities-config-pod.json +39 -0

- package/templates/fragmenter-config-pod.json +525 -0

- package/templates/fragmenter-config-subject.json +63 -0

- package/templates/params.ini +5 -0

- package/templates/queries/README.md +43 -0

- package/templates/queries/interactive-complex-1.sparql +138 -0

- package/templates/queries/interactive-complex-10.sparql +89 -0

- package/templates/queries/interactive-complex-11.sparql +43 -0

- package/templates/queries/interactive-complex-12.sparql +36 -0

- package/templates/queries/interactive-complex-2.sparql +48 -0

- package/templates/queries/interactive-complex-3-duration-as-function.sparql +85 -0

- package/templates/queries/interactive-complex-3.sparql +85 -0

- package/templates/queries/interactive-complex-4-duration-as-function.sparql +36 -0

- package/templates/queries/interactive-complex-4.sparql +36 -0

- package/templates/queries/interactive-complex-5.sparql +55 -0

- package/templates/queries/interactive-complex-6.sparql +41 -0

- package/templates/queries/interactive-complex-7.sparql +84 -0

- package/templates/queries/interactive-complex-8.sparql +36 -0

- package/templates/queries/interactive-complex-9.sparql +45 -0

- package/templates/queries/interactive-discover-1.sparql +23 -0

- package/templates/queries/interactive-discover-2.sparql +23 -0

- package/templates/queries/interactive-discover-3.sparql +22 -0

- package/templates/queries/interactive-discover-4.sparql +23 -0

- package/templates/queries/interactive-discover-5.sparql +19 -0

- package/templates/queries/interactive-discover-6.sparql +22 -0

- package/templates/queries/interactive-discover-7.sparql +23 -0

- package/templates/queries/interactive-discover-8.sparql +22 -0

- package/templates/queries/interactive-short-1-nocity.sparql +33 -0

- package/templates/queries/interactive-short-1.sparql +34 -0

- package/templates/queries/interactive-short-2.sparql +39 -0

- package/templates/queries/interactive-short-3-unidir.sparql +27 -0

- package/templates/queries/interactive-short-3.sparql +32 -0

- package/templates/queries/interactive-short-4-creator.sparql +21 -0

- package/templates/queries/interactive-short-4.sparql +20 -0

- package/templates/queries/interactive-short-5.sparql +23 -0

- package/templates/queries/interactive-short-6.sparql +32 -0

- package/templates/queries/interactive-short-7.sparql +39 -0

- package/templates/query-config-single-pod.json +46 -0

- package/templates/query-config.json +778 -0

- package/templates/query-sequence-config.json +183 -0

- package/templates/refinements/interactive-discover-1.json +144 -0

- package/templates/refinements/interactive-discover-2.json +200 -0

- package/templates/refinements/interactive-discover-3.json +254 -0

- package/templates/refinements/interactive-discover-4.json +156 -0

- package/templates/refinements/interactive-discover-5.json +10 -0

- package/templates/refinements/interactive-discover-6.json +10 -0

- package/templates/refinements/interactive-discover-7.json +10 -0

- package/templates/refinements/interactive-discover-8.json +10 -0

- package/templates/refinements/interactive-short-1-bak.json +180 -0

- package/templates/refinements/interactive-short-1.json +65 -0

- package/templates/refinements/interactive-short-2.json +3 -0

- package/templates/server-config.json +72 -0

- package/templates/validation-config.json +644 -0

package/LICENSE.txt

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

1

|

+

The MIT License (MIT)

|

|

2

|

+

|

|

3

|

+

Copyright © 2021 - now Ruben Taelman

|

|

4

|

+

|

|

5

|

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

|

6

|

+

of this software and associated documentation files (the "Software"), to deal

|

|

7

|

+

in the Software without restriction, including without limitation the rights

|

|

8

|

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

|

9

|

+

copies of the Software, and to permit persons to whom the Software is

|

|

10

|

+

furnished to do so, subject to the following conditions:

|

|

11

|

+

|

|

12

|

+

The above copyright notice and this permission notice shall be included in

|

|

13

|

+

all copies or substantial portions of the Software.

|

|

14

|

+

|

|

15

|

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

|

16

|

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

|

17

|

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

|

18

|

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

|

19

|

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

|

20

|

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

|

21

|

+

THE SOFTWARE.

|

package/README.md

ADDED

|

@@ -0,0 +1,211 @@

|

|

|

1

|

+

# SolidBench.js

|

|

2

|

+

|

|

3

|

+

[](https://github.com/SolidBench/SolidBench.js/actions?query=workflow%3ACI)

|

|

4

|

+

[](https://coveralls.io/github/SolidBench/SolidBench.js?branch=master)

|

|

5

|

+

[](https://www.npmjs.com/package/solidbench)

|

|

6

|

+

|

|

7

|

+

### A benchmark for [Solid](https://solidproject.org/) to simulate vaults with social network data.

|

|

8

|

+

|

|

9

|

+

This benchmark allows you to generate a large number of Solid data vaults with **simulated social network data**,

|

|

10

|

+

and serve them over HTTP using a built-in [Solid Community Server](https://github.com/CommunitySolidServer/CommunitySolidServer) instance.

|

|

11

|

+

Furthermore, different SPARQL queries will be generated to simulate workloads of social network apps for Solid.

|

|

12

|

+

|

|

13

|

+

This benchmark is based on the [LDBC SNB](https://github.com/ldbc/ldbc_snb_datagen_hadoop) **social network** dataset.

|

|

14

|

+

|

|

15

|

+

## Requirements

|

|

16

|

+

|

|

17

|

+

* [Node.js](https://nodejs.org/en/) _(16 or higher)_

|

|

18

|

+

* [Docker](https://www.docker.com/) _(required for invoking [LDBC SNB generator](https://github.com/ldbc/ldbc_snb_datagen_hadoop))_

|

|

19

|

+

|

|

20

|

+

## Installation

|

|

21

|

+

|

|

22

|

+

```bash

|

|

23

|

+

$ npm install -g solidbench

|

|

24

|

+

```

|

|

25

|

+

or

|

|

26

|

+

```bash

|

|

27

|

+

$ yarn global add solidbench --ignore-engines

|

|

28

|

+

```

|

|

29

|

+

|

|

30

|

+

## Quick start

|

|

31

|

+

|

|

32

|

+

1. `$ solidbench generate`: Generate Solid data vaults with social network data.

|

|

33

|

+

2. `$ solidbench serve`: Serve datasets over HTTP.

|

|

34

|

+

3. Initiate HTTP requests over `http://localhost:3000/`, such as `$ curl http://localhost:3000/pods/00000000000000000933/profile/card`

|

|

35

|

+

|

|

36

|

+

## Usage

|

|

37

|

+

|

|

38

|

+

### 1. Generate

|

|

39

|

+

|

|

40

|

+

The social network data can be generated using the default options:

|

|

41

|

+

|

|

42

|

+

```bash

|

|

43

|

+

$ solidbench generate

|

|

44

|

+

```

|

|

45

|

+

|

|

46

|

+

**Full usage options:**

|

|

47

|

+

|

|

48

|

+

```bash

|

|

49

|

+

solidbench generate

|

|

50

|

+

|

|

51

|

+

Generate social network data

|

|

52

|

+

|

|

53

|

+

Options:

|

|

54

|

+

--version Show version number [boolean]

|

|

55

|

+

--cwd The current working directory

|

|

56

|

+

[string] [default: .]

|

|

57

|

+

--verbose If more output should be printed [boolean]

|

|

58

|

+

--help Show help [boolean]

|

|

59

|

+

-o, --overwrite If existing files should be overwritten

|

|

60

|

+

[string] [default: false]

|

|

61

|

+

-s, --scale The SNB scale factor [number] [default: 0.1]

|

|

62

|

+

-e, --enhancementConfig Path to enhancement config

|

|

63

|

+

[string] [default: enhancer-config-pod.json]

|

|

64

|

+

-f, --fragmentConfig Path to fragmentation config

|

|

65

|

+

[string] [default: fragmenter-config-pod.json]

|

|

66

|

+

-q, --queryConfig Path to query instantiation config

|

|

67

|

+

[string] [default: query-config.json]

|

|

68

|

+

--validationParams URL of the validation parameters zip file

|

|

69

|

+

[string] [default: https://.../validation_params.zip]

|

|

70

|

+

-v, --validationConfig Path to validation generator config

|

|

71

|

+

[string] [default: validation-config.json]

|

|

72

|

+

--hadoopMemory Memory limit for Hadoop

|

|

73

|

+

[string] [default: "4G"]

|

|

74

|

+

```

|

|

75

|

+

|

|

76

|

+

**Memory usage**

|

|

77

|

+

|

|

78

|

+

For certain scale factors, you may have to increase your default Node memory limit.

|

|

79

|

+

You can do this as follows (set RAM limit to 8GB):

|

|

80

|

+

|

|

81

|

+

```bash

|

|

82

|

+

NODE_OPTIONS=--max-old-space-size=8192 solidbench generate

|

|

83

|

+

```

|
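To check whether the `NODE_OPTIONS` setting shown above took effect, one option is to read the heap limit that V8 reports through Node's built-in `v8.getHeapStatistics()`. The following is a minimal sketch; the helper name `heapLimitMiB` is an assumption made for illustration and is not part of SolidBench, and the reported value may differ slightly from the configured old-space size.

```javascript
const v8 = require('node:v8');

// Hypothetical helper (not part of SolidBench): report V8's heap limit in MiB.
function heapLimitMiB() {
  const { heap_size_limit: limitBytes } = v8.getHeapStatistics();
  return Math.round(limitBytes / (1024 * 1024));
}

console.log(`V8 heap limit: ${heapLimitMiB()} MiB`);
```

Running this script with and without `NODE_OPTIONS=--max-old-space-size=8192` should show whether the larger limit is being picked up.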

|

84

|

+

|

|

85

|

+

**What does this do?**

|

|

86

|

+

|

|

87

|

+

This generate command will first use the (interactive) [LDBC SNB generator](https://github.com/ldbc/ldbc_snb_datagen_hadoop)

|

|

88

|

+

to **create one large Turtle file** with a given scale factor (defaults to `0.1`, allowed values: `[0.1, 0.3, 1, 3, 10, 30, 100, 300, 1000]`).

|

|

89

|

+

The default scale factor of `0.1` produces around 5M triples, and requires around 15 minutes for full generation on an average machine.

|
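Since only a fixed set of scale factors is listed as allowed, a wrapper script may want to reject other values before starting a long generation run. The following is a minimal sketch under that assumption; `ALLOWED_SCALES` and `normalizeScale` are hypothetical names, not part of SolidBench, and the string return type mirrors the `scale: string` field of `IGeneratorOptions`.

```javascript
// Allowed SNB scale factors, as listed in the README text above.
const ALLOWED_SCALES = [0.1, 0.3, 1, 3, 10, 30, 100, 300, 1000];

// Hypothetical helper (not part of SolidBench): validate a scale factor and
// return it in string form.
function normalizeScale(input) {
  const value = Number(input);
  if (!ALLOWED_SCALES.includes(value)) {
    throw new RangeError(
      `Unsupported scale factor "${input}"; expected one of ${ALLOWED_SCALES.join(', ')}`,
    );
  }
  return String(value);
}

console.log(normalizeScale('0.1'));
```

Throwing early keeps an invalid value from reaching the Docker-based generator, which is the slowest step of the pipeline.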

|

90

|

+

|

|

91

|

+

Then, **auxiliary data** will be generated using [`ldbc-snb-enhancer.js`](https://github.com/SolidBench/ldbc-snb-enhancer.js/)

|

|

92

|

+

based on the given enhancement config (defaults to an empty config).

|

|

93

|

+

|

|

94

|

+

Next, this Turtle file will be **fragmented** using [`rdf-dataset-fragmenter.js`](https://github.com/SolidBench/rdf-dataset-fragmenter.js)

|

|

95

|

+

and the given fragmentation strategy config (defaults to a Solid vault-based fragmentation).

|

|

96

|

+

|

|

97

|

+

Then, **query** templates will be instantiated based on the generated data.

|

|

98

|

+

This is done using [`sparql-query-parameter-instantiator.js`](https://github.com/SolidBench/sparql-query-parameter-instantiator.js)

|

|

99

|

+

with the given query instantiation config (defaults to a config instantiating [all LDBC SNB interactive queries](https://github.com/SolidBench/SolidBench.js/tree/master/templates/queries)).

|

|

100

|

+

|

|

101

|

+

Finally, **validation queries and results** will be generated.

|

|

102

|

+

This is done using [`ldbc-snb-validation-generator.js`](https://github.com/SolidBench/ldbc-snb-validation-generator.js/) with the given validation config.

|

|

103

|

+

This defaults to a config instantiating all queries and results from the `validation_params-sf1-without-updates.csv` file from LDBC SNB.

|

|

104

|

+

This default config will produce queries and expected results in the `out-validate/` directory,

|

|

105

|

+

which are expected to be executed on a scale factor of `1`.

|
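Each of the phases described above writes to its own output directory, and `Generator.runPhase` in this package skips a phase when that directory already exists unless `--overwrite` is set. The following sketch models only that decision. The phase names and directories are taken from `Generator.generate()`, while `PHASES` and `planPhases` are hypothetical names introduced for illustration. It ignores the extra condition that the two validation phases run only when both validation options are set.

```javascript
// Phase names and output directories, in the order used by Generator.generate().
const PHASES = [
  { name: 'SNB dataset generator', directory: 'out-snb' },
  { name: 'SNB dataset enhancer', directory: 'out-enhanced' },
  { name: 'SNB dataset fragmenter', directory: 'out-fragments' },
  { name: 'SPARQL query instantiator', directory: 'out-queries' },
  { name: 'SNB validation downloader', directory: 'out-validate-params' },
  { name: 'SNB validation generator', directory: 'out-validate' },
];

// Hypothetical helper: list the phases that would run. A phase is skipped when
// its output directory already exists, unless overwrite is enabled.
function planPhases(existingDirectories, overwrite = false) {
  const existing = new Set(existingDirectories);
  return PHASES
    .filter(({ directory }) => overwrite || !existing.has(directory))
    .map(({ name }) => name);
}

console.log(planPhases(['out-snb', 'out-enhanced']));
```

In practice this means that removing a single output directory, such as `out-queries`, is enough to rerun just that phase on the next `solidbench generate`.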

|

106

|

+

|

|

107

|

+

### 2. Serve

|

|

108

|

+

|

|

109

|

+

The social network data can be served over HTTP as actual Solid vaults:

|

|

110

|

+

|

|

111

|

+

```bash

|

|

112

|

+

$ solidbench serve

|

|

113

|

+

```

|

|

114

|

+

|

|

115

|

+

**Full usage options:**

|

|

116

|

+

|

|

117

|

+

```bash

|

|

118

|

+

solidbench serve

|

|

119

|

+

|

|

120

|

+

Serves the fragmented dataset via an HTTP server

|

|

121

|

+

|

|

122

|

+

Options:

|

|

123

|

+

--version Show version number [boolean]

|

|

124

|

+

--cwd The current working directory [string] [default: .]

|

|

125

|

+

--verbose If more output should be printed [boolean]

|

|

126

|

+

--help Show help [boolean]

|

|

127

|

+

-p, --port The HTTP port to run on [number] [default: 3000]

|

|

128

|

+

-b, --baseUrl The base URL of the server [string]

|

|

129

|

+

-r, --rootFilePath Path to the root of the files to serve

|

|

130

|

+

[string] [default: "out-fragments/http/localhost_3000/"]

|

|

131

|

+

-c, --config Path to server config

|

|

132

|

+

[string] [default: server-config.json]

|

|

133

|

+

-l, --logLevel Logging level (error, warn, info, verbose, debug, silly)

|

|

134

|

+

[string] [default: "info"]

|

|

135

|

+

```

|

|

136

|

+

|

|

137

|

+

**What does this do?**

|

|

138

|

+

|

|

139

|

+

The fragmented dataset from the preparation phase is loaded into the [Solid Community Server](https://github.com/CommunitySolidServer/CommunitySolidServer)

|

|

140

|

+

so that it can be served over HTTP.

|

|

141

|

+

|

|

142

|

+

The provided server config uses a simple file-based mapping, so that for example the file in `out-fragments/http/localhost_3000/pods/00000000000000000933/profile/card` is served on `http://localhost:3000/pods/00000000000000000933/profile/card`.

|

|

143

|

+

Once the server is live, you can perform requests such as:

|

|

144

|

+

|

|

145

|

+

```bash

|

|

146

|

+

$ curl http://localhost:3000/pods/00000000000000000933/profile/card

|

|

147

|

+

```

|
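The file-based mapping described above can be sketched as a small function that turns a path under the served root into the URL it would be reachable at. This is an illustration only: `fileToUrl` is a hypothetical helper, not part of SolidBench or the Solid Community Server, and the root used in the example is the default `--rootFilePath` value from the options listing.

```javascript
// Hypothetical helper: map a file below the served root directory to its URL.
function fileToUrl(filePath, rootFilePath, baseUrl) {
  // Normalize the root so that it always ends with a slash.
  const root = rootFilePath.endsWith('/') ? rootFilePath : `${rootFilePath}/`;
  if (!filePath.startsWith(root)) {
    throw new Error(`${filePath} is not located inside ${root}`);
  }
  // Resolve the remaining relative path against the server's base URL.
  return new URL(filePath.slice(root.length), baseUrl).href;
}

console.log(fileToUrl(
  'out-fragments/http/localhost_3000/pods/00000000000000000933/profile/card',
  'out-fragments/http/localhost_3000/',
  'http://localhost:3000/',
));
```

Keeping the base URL as a parameter reflects the `--baseUrl` and `--port` options: the same files can be served from a different origin without regenerating them, although URLs stored inside the generated data would still point at the original host.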

|

148

|

+

|

|

149

|

+

## Data model

|

|

150

|

+

|

|

151

|

+

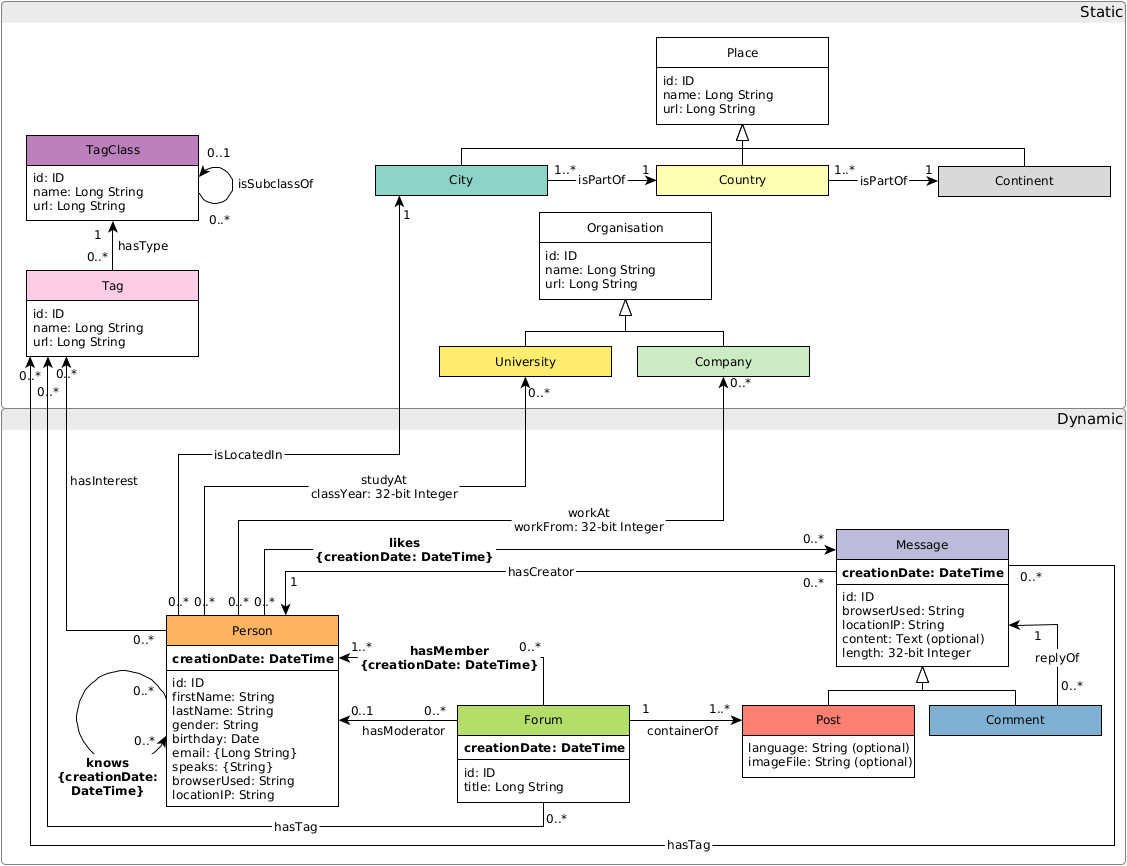

By default, the following data model is used where all triples are placed in the document identified by their subject URL.

|

|

152

|

+

|

|

153

|

+

|

|

154

|

+

|

|

155

|

+

Query templates can be found in [`templates/queries/`](https://github.com/rubensworks/solidbench.js/tree/master/templates/queries).

|

|

156

|

+

|

|

157

|

+

## Pod-based fragmentation

|

|

158

|

+

|

|

159

|

+

By default, data will be fragmented into files resembling [Solid data pods](https://solidproject.org/).

|

|

160

|

+

|

|

161

|

+

For example, a generated pod can contain the following files:

|

|

162

|

+

|

|

163

|

+

```text

|

|

164

|

+

pods/00000000000000000290/

|

|

165

|

+

comments/

|

|

166

|

+

2010-12-02

|

|

167

|

+

2012-07-14

|

|

168

|

+

noise/

|

|

169

|

+

NOISE-1411

|

|

170

|

+

NOISE-83603

|

|

171

|

+

posts/

|

|

172

|

+

2010-02-14

|

|

173

|

+

2012-09-09

|

|

174

|

+

profile/

|

|

175

|

+

card

|

|

176

|

+

settings/

|

|

177

|

+

publicTypeIndex

|

|

178

|

+

```

|

|

179

|

+

|

|

180

|

+

All files are serialized using the [N-Quads serialization](https://www.w3.org/TR/n-quads/).

|

|

181

|

+

|

|

182

|

+

The `noise/` directory contains dummy triples with the main purpose of increasing the size of a pod.

|

|

183

|

+

The amount of noise that is produced can be configured using the enhancement config file.

|

|

184

|

+

|

|

185

|

+

Below is a minimal example of the contents of a profile:

|

|

186

|

+

```turtle

|

|

187

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://www.w3.org/ns/solid/terms#publicTypeIndex> <http://localhost:3000/pods/00000000000000000290/settings/publicTypeIndex> .

|

|

188

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/Person> .

|

|

189

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://www.w3.org/ns/pim/space#storage> <http://localhost:3000/pods/00000000000000000290/> .

|

|

190

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/id> "290"^^<http://www.w3.org/2001/XMLSchema#long> .

|

|

191

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/firstName> "Ayesha" .

|

|

192

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/lastName> "Baloch" .

|

|

193

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/gender> "male" .

|

|

194

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/hasInterest> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/tag/John_the_Baptist> .

|

|

195

|

+

<http://localhost:3000/pods/00000000000000000290/profile/card#me> <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/knows> _:b4_knows00000000000000124063 .

|

|

196

|

+

_:b4_knows00000000000000124063 <http://localhost:3000/www.ldbc.eu/ldbc_socialnet/1.0/vocabulary/hasPerson> <http://localhost:3000/pods/00000000000000001753/profile/card#me> .

|

|

197

|

+

```

|
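The example triples above suggest a regular URL layout: the pod directory appears to be the LDBC person id padded with zeros to 20 digits, with the WebID and public type index at fixed paths below it. The following sketch derives those URLs under that assumption. `podUrls` is a hypothetical helper, not part of SolidBench, and the padding width is inferred from the examples rather than taken from the fragmenter config.

```javascript
// Hypothetical helper: derive pod-related URLs from an LDBC person id,
// assuming 20-digit zero-padded pod directory names as seen in the examples.
function podUrls(personId, baseUrl = 'http://localhost:3000/') {
  const podName = String(personId).padStart(20, '0');
  const storage = new URL(`pods/${podName}/`, baseUrl).href;
  return {
    storage,
    webId: `${storage}profile/card#me`,
    publicTypeIndex: `${storage}settings/publicTypeIndex`,
  };
}

console.log(podUrls(290));
```

A benchmark client could use such a helper to pick seed WebIDs for queries without first listing the generated directories.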

|

198

|

+

|

|

199

|

+

## Limitations

|

|

200

|

+

|

|

201

|

+

At this stage, this benchmark has the following limitations:

|

|

202

|

+

|

|

203

|

+

- Vaults don't make use of authentication, and all data is readable by everyone without authentication.

|

|

204

|

+

- SPARQL update queries for modifying data within vaults are not being generated yet: https://github.com/SolidBench/SolidBench.js/issues/3

|

|

205

|

+

- All vaults make use of the same vocabulary: https://github.com/SolidBench/SolidBench.js/issues/4

|

|

206

|

+

|

|

207

|

+

## License

|

|

208

|

+

|

|

209

|

+

This software is written by [Ruben Taelman](https://rubensworks.net/).

|

|

210

|

+

|

|

211

|

+

This code is released under the [MIT license](http://opensource.org/licenses/MIT).

|

package/bin/solidbench.js.map

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

{"version":3,"file":"solidbench.js","sourceRoot":"","sources":["solidbench.ts"],"names":[],"mappings":";;;AACA,gDAA0C;AAE1C,IAAA,kBAAM,EAAC,OAAO,CAAC,GAAG,EAAE,EAAE,OAAO,CAAC,IAAI,CAAC,CAAC","sourcesContent":["#!/usr/bin/env node\nimport { runCli } from '../lib/CliRunner';\n\nrunCli(process.cwd(), process.argv);\n"]}

|

package/lib/CliRunner.d.ts

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

export declare function runCli(cwd: string, argv: string[]): void;

|

package/lib/CliRunner.js

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

1

|

+

"use strict";

|

|

2

|

+

var __importDefault = (this && this.__importDefault) || function (mod) {

|

|

3

|

+

return (mod && mod.__esModule) ? mod : { "default": mod };

|

|

4

|

+

};

|

|

5

|

+

Object.defineProperty(exports, "__esModule", { value: true });

|

|

6

|

+

exports.runCli = runCli;

|

|

7

|

+

const yargs_1 = __importDefault(require("yargs"));

|

|

8

|

+

const helpers_1 = require("yargs/helpers");

|

|

9

|

+

function runCli(cwd, argv) {

|

|

10

|

+

const _ = (0, yargs_1.default)((0, helpers_1.hideBin)(argv))

|

|

11

|

+

.options({

|

|

12

|

+

cwd: { type: 'string', default: cwd, describe: 'The current working directory', defaultDescription: '.' },

|

|

13

|

+

verbose: { type: 'boolean', describe: 'If more output should be printed' },

|

|

14

|

+

})

|

|

15

|

+

.commandDir('commands')

|

|

16

|

+

.demandCommand()

|

|

17

|

+

.help()

|

|

18

|

+

.parse();

|

|

19

|

+

}

|

|

20

|

+

//# sourceMappingURL=CliRunner.js.map

|

package/lib/CliRunner.js.map

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

{"version":3,"file":"CliRunner.js","sourceRoot":"","sources":["CliRunner.ts"],"names":[],"mappings":";;;;;AAGA,wBAUC;AAbD,kDAA0B;AAC1B,2CAAwC;AAExC,SAAgB,MAAM,CAAC,GAAW,EAAE,IAAc;IAChD,MAAM,CAAC,GAAG,IAAA,eAAK,EAAC,IAAA,iBAAO,EAAC,IAAI,CAAC,CAAC;SAC3B,OAAO,CAAC;QACP,GAAG,EAAE,EAAE,IAAI,EAAE,QAAQ,EAAE,OAAO,EAAE,GAAG,EAAE,QAAQ,EAAE,+BAA+B,EAAE,kBAAkB,EAAE,GAAG,EAAE;QACzG,OAAO,EAAE,EAAE,IAAI,EAAE,SAAS,EAAE,QAAQ,EAAE,kCAAkC,EAAE;KAC3E,CAAC;SACD,UAAU,CAAC,UAAU,CAAC;SACtB,aAAa,EAAE;SACf,IAAI,EAAE;SACN,KAAK,EAAE,CAAC;AACb,CAAC","sourcesContent":["import yargs from 'yargs';\nimport { hideBin } from 'yargs/helpers';\n\nexport function runCli(cwd: string, argv: string[]): void {\n const _ = yargs(hideBin(argv))\n .options({\n cwd: { type: 'string', default: cwd, describe: 'The current working directory', defaultDescription: '.' },\n verbose: { type: 'boolean', describe: 'If more output should be printed' },\n })\n .commandDir('commands')\n .demandCommand()\n .help()\n .parse();\n}\n"]}

|

package/lib/Generator.d.ts

ADDED

|

@@ -0,0 +1,76 @@

|

|

|

1

|

+

/**

|

|

2

|

+

* Generates decentralized social network data in different phases.

|

|

3

|

+

*/

|

|

4

|

+

export declare class Generator {

|

|

5

|

+

static readonly COLOR_RESET: string;

|

|

6

|

+

static readonly COLOR_RED: string;

|

|

7

|

+

static readonly COLOR_GREEN: string;

|

|

8

|

+

static readonly COLOR_YELLOW: string;

|

|

9

|

+

static readonly COLOR_BLUE: string;

|

|

10

|

+

static readonly COLOR_MAGENTA: string;

|

|

11

|

+

static readonly COLOR_CYAN: string;

|

|

12

|

+

static readonly COLOR_GRAY: string;

|

|

13

|

+

static readonly LDBC_SNB_DATAGEN_DOCKER_IMAGE: string;

|

|

14

|

+

private readonly cwd;

|

|

15

|

+

private readonly verbose;

|

|

16

|

+

private readonly overwrite;

|

|

17

|

+

private readonly scale;

|

|

18

|

+

private readonly enhancementConfig;

|

|

19

|

+

private readonly fragmentConfig;

|

|

20

|

+

private readonly queryConfig;

|

|

21

|

+

private readonly validationParams;

|

|

22

|

+

private readonly validationConfig;

|

|

23

|

+

private readonly hadoopMemory;

|

|

24

|

+

private readonly mainModulePath;

|

|

25

|

+

constructor(opts: IGeneratorOptions);

|

|

26

|

+

protected targetExists(path: string): Promise<boolean>;

|

|

27

|

+

protected log(phase: string, status: string): void;

|

|

28

|

+

protected runPhase(name: string, directory: string, runner: () => Promise<void>): Promise<void>;

|

|

29

|

+

/**

|

|

30

|

+

* Run all generator phases.

|

|

31

|

+

*/

|

|

32

|

+

generate(): Promise<void>;

|

|

33

|

+

/**

|

|

34

|

+

* Invoke the LDBC SNB generator.

|

|

35

|

+

*/

|

|

36

|

+

generateSnbDataset(): Promise<void>;

|

|

37

|

+

/**

|

|

38

|

+

* Enhance the generated LDBC SNB dataset.

|

|

39

|

+

*/

|

|

40

|

+

enhanceSnbDataset(): Promise<void>;

|

|

41

|

+

/**

|

|

42

|

+

* Fragment the generated and enhanced LDBC SNB datasets.

|

|

43

|

+

*/

|

|

44

|

+

fragmentSnbDataset(): Promise<void>;

|

|

45

|

+

/**

|

|

46

|

+

* Instantiate queries based on the LDBC SNB datasets.

|

|

47

|

+

*/

|

|

48

|

+

instantiateQueries(): Promise<void>;

|

|

49

|

+

/**

|

|

50

|

+

* Download validation parameters

|

|

51

|

+

*/

|

|

52

|

+

downloadValidationParams(): Promise<void>;

|

|

53

|

+

/**

|

|

54

|

+

* Generate validation queries and results.

|

|

55

|

+

*/

|

|

56

|

+

generateValidation(): Promise<void>;

|

|

57

|

+

protected generateVariables(): Promise<Record<string, string>>;

|

|

58

|

+

/**

|

|

59

|

+

* Return a string in a given color

|

|

60

|

+

* @param str The string that should be printed in

|

|

61

|

+

* @param color A given color

|

|

62

|

+

*/

|

|

63

|

+

static withColor(str: any, color: string): string;

|

|

64

|

+

}

|

|

65

|

+

export interface IGeneratorOptions {

|

|

66

|

+

cwd: string;

|

|

67

|

+

verbose: boolean;

|

|

68

|

+

overwrite: boolean;

|

|

69

|

+

scale: string;

|

|

70

|

+

enhancementConfig: string;

|

|

71

|

+

fragmentConfig: string;

|

|

72

|

+

queryConfig: string;

|

|

73

|

+

validationParams?: string;

|

|

74

|

+

validationConfig?: string;

|

|

75

|

+

hadoopMemory: string;

|

|

76

|

+

}

|

package/lib/Generator.js

ADDED

|

@@ -0,0 +1,231 @@

|

|

|

1

|

+

"use strict";

|

|

2

|

+

var __importDefault = (this && this.__importDefault) || function (mod) {

|

|

3

|

+

return (mod && mod.__esModule) ? mod : { "default": mod };

|

|

4

|

+

};

|

|

5

|

+

Object.defineProperty(exports, "__esModule", { value: true });

|

|

6

|

+

exports.Generator = void 0;

|

|

7

|

+

const promises_1 = require("node:fs/promises");

|

|

8

|

+

const node_https_1 = require("node:https");

|

|

9

|

+

const node_path_1 = require("node:path");

|

|

10

|

+

const dockerode_1 = __importDefault(require("dockerode"));

|

|

11

|

+

const ldbc_snb_enhancer_1 = require("ldbc-snb-enhancer");

|

|

12

|

+

const ldbc_snb_validation_generator_1 = require("ldbc-snb-validation-generator");

|

|

13

|

+

const rdf_dataset_fragmenter_1 = require("rdf-dataset-fragmenter");

|

|

14

|

+

const sparql_query_parameter_instantiator_1 = require("sparql-query-parameter-instantiator");

|

|

15

|

+

const unzipper_1 = require("unzipper");

|

|

16

|

+

/**

|

|

17

|

+

* Generates decentralized social network data in different phases.

|

|

18

|

+

*/

|

|

19

|

+

class Generator {

|

|

20

|

+

constructor(opts) {

|

|

21

|

+

this.cwd = opts.cwd;

|

|

22

|

+

this.verbose = opts.verbose;

|

|

23

|

+

this.overwrite = opts.overwrite;

|

|

24

|

+

this.scale = opts.scale;

|

|

25

|

+

this.enhancementConfig = opts.enhancementConfig;

|

|

26

|

+

this.fragmentConfig = opts.fragmentConfig;

|

|

27

|

+

this.queryConfig = opts.queryConfig;

|

|

28

|

+

this.validationParams = opts.validationParams;

|

|

29

|

+

this.validationConfig = opts.validationConfig;

|

|

30

|

+

this.hadoopMemory = opts.hadoopMemory;

|

|

31

|

+

this.mainModulePath = (0, node_path_1.join)(__dirname, '..');

|

|

32

|

+

}

|

|

33

|

+

async targetExists(path) {

|

|

34

|

+

try {

|

|

35

|

+

await (0, promises_1.stat)(path);

|

|

36

|

+

return true;

|

|

37

|

+

}

|

|

38

|

+

catch {

|

|

39

|

+

return false;

|

|

40

|

+

}

|

|

41

|

+

}

|

|

42

|

+

log(phase, status) {

|

|

43

|

+

process.stdout.write(`${Generator.withColor(`[${phase}]`, Generator.COLOR_CYAN)} ${status}\n`);

|

|

44

|

+

}

|

|

45

|

+

async runPhase(name, directory, runner) {

|

|

46

|

+

if (this.overwrite || !await this.targetExists((0, node_path_1.join)(this.cwd, directory))) {

|

|

47

|

+

this.log(name, 'Started');

|

|

48

|

+

const timeStart = process.hrtime();

|

|

49

|

+

await runner();

|

|

50

|

+

const timeEnd = process.hrtime(timeStart);

|

|

51

|

+

this.log(name, `Done in ${timeEnd[0] + (timeEnd[1] / 1_000_000_000)} seconds`);

|

|

52

|

+

}

|

|

53

|

+

else {

|

|

54

|

+

this.log(name, `Skipped (/${directory} already exists, remove to regenerate)`);

|

|

55

|

+

}

|

|

56

|

+

}

|

|

57

|

+

/**

|

|

58

|

+

* Run all generator phases.

|

|

59

|

+

*/

|

|

60

|

+

async generate() {

|

|

61

|

+

const timeStart = process.hrtime();

|

|

62

|

+

await this.runPhase('SNB dataset generator', 'out-snb', () => this.generateSnbDataset());

|

|

63

|

+

await this.runPhase('SNB dataset enhancer', 'out-enhanced', () => this.enhanceSnbDataset());

|

|

64

|

+

await this.runPhase('SNB dataset fragmenter', 'out-fragments', () => this.fragmentSnbDataset());

|

|

65

|

+

await this.runPhase('SPARQL query instantiator', 'out-queries', () => this.instantiateQueries());

|

|

66

|

+

if (this.validationParams && this.validationConfig) {

|

|

67

|

+

await this.runPhase('SNB validation downloader', 'out-validate-params', () => this.downloadValidationParams());

|

|

68

|

+

await this.runPhase('SNB validation generator', 'out-validate', () => this.generateValidation());

|

|

69

|

+

}

|

|

70

|

+

const timeEnd = process.hrtime(timeStart);

|

|

71

|

+

this.log('All', `Done in ${timeEnd[0] + (timeEnd[1] / 1_000_000_000)} seconds`);

|

|

72

|

+

}

|

|

73

|

+

/**

|

|

74

|

+

* Invoke the LDBC SNB generator.

|

|

75

|

+

*/

|

|

76

|

+

async generateSnbDataset() {

|

|

77

|

+

// Create params.ini file

|

|

78

|

+

const paramsTemplate = await (0, promises_1.readFile)((0, node_path_1.join)(__dirname, '../templates/params.ini'), 'utf8');

|

|

79

|

+

const paramsPath = (0, node_path_1.join)(this.cwd, 'params.ini');

|

|

80

|

+

await (0, promises_1.writeFile)(paramsPath, paramsTemplate.replaceAll('SCALE', this.scale), 'utf8');

|

|

81

|

+

// Pull the base Docker image

+        const dockerode = new dockerode_1.default();
+        const buildStream = await dockerode.pull(Generator.LDBC_SNB_DATAGEN_DOCKER_IMAGE);
+        await new Promise((resolve, reject) => {
+            dockerode.modem.followProgress(buildStream, (err, res) => err ? reject(err) : resolve(res));
+        });
+        // Start Docker container
+        const container = await dockerode.createContainer({
+            Image: Generator.LDBC_SNB_DATAGEN_DOCKER_IMAGE,
+            Tty: true,
+            AttachStdout: true,
+            AttachStderr: true,
+            Env: [`HADOOP_CLIENT_OPTS=-Xmx${this.hadoopMemory}`],
+            HostConfig: {
+                Binds: [
+                    `${this.cwd}/out-snb/:/opt/ldbc_snb_datagen/out`,
+                    `${paramsPath}:/opt/ldbc_snb_datagen/params.ini`,
+                ],
+            },
+        });
+        await container.start();
+        // Stop process on force-exit
+        let containerEnded = false;
+        // eslint-disable-next-line ts/no-misused-promises
+        process.on('SIGINT', async () => {
+            if (!containerEnded) {
+                await container.kill();
+                await cleanup();
+            }
+        });
+        async function cleanup() {
+            await container.remove();
+            await (0, promises_1.unlink)(paramsPath);
+        }
+        // Attach output to stdout
+        const out = await container.attach({
+            stream: true,
+            stdout: true,
+            stderr: true,
+        });
+        if (this.verbose) {
+            out.pipe(process.stdout);
+        }
+        else {
+            out.resume();
+        }
+        // Wait until generation ends
+        await new Promise((resolve, reject) => {
+            out.on('end', resolve);
+            out.on('error', reject);
+        });
+        containerEnded = true;
+        // Cleanup
+        await cleanup();
+    }

+    /**
+     * Enhance the generated LDBC SNB dataset.
+     */
+    async enhanceSnbDataset() {
+        // Create target directory
+        await (0, promises_1.mkdir)((0, node_path_1.join)(this.cwd, 'out-enhanced'));
+        // Run enhancer
+        const oldCwd = process.cwd();
+        process.chdir(this.cwd);
+        await (0, ldbc_snb_enhancer_1.runConfig)(this.enhancementConfig, { mainModulePath: this.mainModulePath });
+        process.chdir(oldCwd);
+    }

+    /**
+     * Fragment the generated and enhanced LDBC SNB datasets.
+     */
+    async fragmentSnbDataset() {
+        const oldCwd = process.cwd();
+        process.chdir(this.cwd);
+        // Initial fragmentation
+        await (0, rdf_dataset_fragmenter_1.runConfig)(this.fragmentConfig, { mainModulePath: this.mainModulePath });
+        process.chdir(oldCwd);
+    }

+    /**
+     * Instantiate queries based on the LDBC SNB datasets.
+     */
+    async instantiateQueries() {
+        // Create target directory
+        await (0, promises_1.mkdir)((0, node_path_1.join)(this.cwd, 'out-queries'));
+        // Run instantiator
+        const oldCwd = process.cwd();
+        process.chdir(this.cwd);
+        await (0, sparql_query_parameter_instantiator_1.runConfig)(this.queryConfig, { mainModulePath: this.mainModulePath }, {
+            variables: await this.generateVariables(),
+        });
+        process.chdir(oldCwd);
+    }

+    /**
+     * Download validation parameters
+     */
+    async downloadValidationParams() {
+        // Create target directory
+        const target = (0, node_path_1.join)(this.cwd, 'out-validate-params');
+        await (0, promises_1.mkdir)(target);
+        // Download and extract zip file
+        return new Promise((resolve, reject) => {
+            (0, node_https_1.request)(this.validationParams, (res) => {
+                res
+                    .on('error', reject)
+                    .pipe((0, unzipper_1.Extract)({ path: target }))
+                    .on('error', reject)
+                    .on('close', resolve);
+            }).end();
+        });
+    }

+    /**
+     * Generate validation queries and results.
+     */
+    async generateValidation() {
+        // Create target directory
+        await (0, promises_1.mkdir)((0, node_path_1.join)(this.cwd, 'out-validate'));
+        // Run generator
+        const oldCwd = process.cwd();
+        process.chdir(this.cwd);
+        await (0, ldbc_snb_validation_generator_1.runConfig)(this.validationConfig, { mainModulePath: this.mainModulePath }, {
+            variables: await this.generateVariables(),
+        });
+        process.chdir(oldCwd);
+    }

+    async generateVariables() {
+        const templateMappings = (await (0, promises_1.readdir)((0, node_path_1.join)(__dirname, '../templates/queries/')))
+            .map(name => [`urn:variables:query-templates:${name}`, (0, node_path_1.join)(__dirname, `../templates/queries/${name}`)]);
+        const refinementMappings = (await (0, promises_1.readdir)((0, node_path_1.join)(__dirname, '../templates/refinements/')))
+            .map(name => [`urn:variables:query-refinements:${name}`, (0, node_path_1.join)(__dirname, `../templates/refinements/${name}`)]);
+        const result = Object.fromEntries([...templateMappings, ...refinementMappings]);
+        return result;
+    }
+    /**
+     * Return a string in a given color
+     * @param str The string that should be printed in
+     * @param color A given color
+     */
+    static withColor(str, color) {
+        return `${color}${str}${Generator.COLOR_RESET}`;
+    }
+}

+exports.Generator = Generator;
+Generator.COLOR_RESET = '\u001B[0m';
+Generator.COLOR_RED = '\u001B[31m';
+Generator.COLOR_GREEN = '\u001B[32m';
+Generator.COLOR_YELLOW = '\u001B[33m';
+Generator.COLOR_BLUE = '\u001B[34m';
+Generator.COLOR_MAGENTA = '\u001B[35m';
+Generator.COLOR_CYAN = '\u001B[36m';
+Generator.COLOR_GRAY = '\u001B[90m';
+Generator.LDBC_SNB_DATAGEN_DOCKER_IMAGE = 'rubensworks/ldbc_snb_datagen:latest';
+//# sourceMappingURL=Generator.js.map
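
Note on the working-directory handling in `enhanceSnbDataset`, `fragmentSnbDataset`, `instantiateQueries` and `generateValidation` above: each method calls `process.chdir(this.cwd)`, awaits `runConfig`, and then restores `oldCwd`. If `runConfig` rejects, the restoring line is never reached and the process stays in `this.cwd`. The following is a minimal sketch of one way to make the restore unconditional. The helper name `withCwd` is hypothetical and is not part of the package.

```javascript
/**
 * Hypothetical helper (not part of solidbench): run `task` with the process
 * working directory set to `dir`, then restore the previous directory,
 * whether `task` resolves, rejects, or throws synchronously.
 */
async function withCwd(dir, task) {
  const oldCwd = process.cwd();
  process.chdir(dir);
  try {
    // Await inside the try block so the finally clause runs after completion.
    return await task();
  } finally {
    process.chdir(oldCwd);
  }
}
```

Under this assumption, a phase body could be written as `await withCwd(this.cwd, () => runConfig(...))`. Whether the package should adopt such a helper is a design choice for its maintainers; the sketch only illustrates the pattern.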

package/lib/Generator.js.map

ADDED

@@ -0,0 +1 @@

{"version":3,"file":"Generator.js","sourceRoot":"","sources":["Generator.ts"],"names":[],"mappings":";;;;;;AAAA,+CAAqF;AACrF,2CAAqC;AACrC,yCAAiC;AACjC,0DAAkC;AAClC,yDAA6D;AAC7D,iFAAoF;AACpF,mEAAoE;AACpE,6FAAwF;AACxF,uCAAmC;AAEnC;;GAEG;AACH,MAAa,SAAS;IAuBpB,YAAmB,IAAuB;QACxC,IAAI,CAAC,GAAG,GAAG,IAAI,CAAC,GAAG,CAAC;QACpB,IAAI,CAAC,OAAO,GAAG,IAAI,CAAC,OAAO,CAAC;QAC5B,IAAI,CAAC,SAAS,GAAG,IAAI,CAAC,SAAS,CAAC;QAChC,IAAI,CAAC,KAAK,GAAG,IAAI,CAAC,KAAK,CAAC;QACxB,IAAI,CAAC,iBAAiB,GAAG,IAAI,CAAC,iBAAiB,CAAC;QAChD,IAAI,CAAC,cAAc,GAAG,IAAI,CAAC,cAAc,CAAC;QAC1C,IAAI,CAAC,WAAW,GAAG,IAAI,CAAC,WAAW,CAAC;QACpC,IAAI,CAAC,gBAAgB,GAAG,IAAI,CAAC,gBAAgB,CAAC;QAC9C,IAAI,CAAC,gBAAgB,GAAG,IAAI,CAAC,gBAAgB,CAAC;QAC9C,IAAI,CAAC,YAAY,GAAG,IAAI,CAAC,YAAY,CAAC;QACtC,IAAI,CAAC,cAAc,GAAG,IAAA,gBAAI,EAAC,SAAS,EAAE,IAAI,CAAC,CAAC;IAC9C,CAAC;IAES,KAAK,CAAC,YAAY,CAAC,IAAY;QACvC,IAAI,CAAC;YACH,MAAM,IAAA,eAAI,EAAC,IAAI,CAAC,CAAC;YACjB,OAAO,IAAI,CAAC;QACd,CAAC;QAAC,MAAM,CAAC;YACP,OAAO,KAAK,CAAC;QACf,CAAC;IACH,CAAC;IAES,GAAG,CAAC,KAAa,EAAE,MAAc;QACzC,OAAO,CAAC,MAAM,CAAC,KAAK,CAAC,GAAG,SAAS,CAAC,SAAS,CAAC,IAAI,KAAK,GAAG,EAAE,SAAS,CAAC,UAAU,CAAC,IAAI,MAAM,IAAI,CAAC,CAAC;IACjG,CAAC;IAES,KAAK,CAAC,QAAQ,CAAC,IAAY,EAAE,SAAiB,EAAE,MAA2B;QACnF,IAAI,IAAI,CAAC,SAAS,IAAI,CAAC,MAAM,IAAI,CAAC,YAAY,CAAC,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,SAAS,CAAC,CAAC,EAAE,CAAC;YAC1E,IAAI,CAAC,GAAG,CAAC,IAAI,EAAE,SAAS,CAAC,CAAC;YAC1B,MAAM,SAAS,GAAG,OAAO,CAAC,MAAM,EAAE,CAAC;YACnC,MAAM,MAAM,EAAE,CAAC;YACf,MAAM,OAAO,GAAG,OAAO,CAAC,MAAM,CAAC,SAAS,CAAC,CAAC;YAC1C,IAAI,CAAC,GAAG,CAAC,IAAI,EAAE,WAAW,OAAO,CAAC,CAAC,CAAC,GAAG,CAAC,OAAO,CAAC,CAAC,CAAC,GAAG,aAAa,CAAC,UAAU,CAAC,CAAC;QACjF,CAAC;aAAM,CAAC;YACN,IAAI,CAAC,GAAG,CAAC,IAAI,EAAE,aAAa,SAAS,wCAAwC,CAAC,CAAC;QACjF,CAAC;IACH,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,QAAQ;QACnB,MAAM,SAAS,GAAG,OAAO,CAAC,MAAM,EAAE,CAAC;QACnC,MAAM,IAAI,CAAC,QAAQ,CAAC,uBAAuB,EAAE,SAAS,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,kBAAkB,EAAE,CAAC,CAAC;QACzF,MAAM,IAAI,CAAC,QAAQ,CAAC,sBAAsB,EAAE,cAAc,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,iBAAiB,EAA
E,CAAC,CAAC;QAC5F,MAAM,IAAI,CAAC,QAAQ,CAAC,wBAAwB,EAAE,eAAe,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,kBAAkB,EAAE,CAAC,CAAC;QAChG,MAAM,IAAI,CAAC,QAAQ,CAAC,2BAA2B,EAAE,aAAa,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,kBAAkB,EAAE,CAAC,CAAC;QACjG,IAAI,IAAI,CAAC,gBAAgB,IAAI,IAAI,CAAC,gBAAgB,EAAE,CAAC;YACnD,MAAM,IAAI,CAAC,QAAQ,CAAC,2BAA2B,EAAE,qBAAqB,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,wBAAwB,EAAE,CAAC,CAAC;YAC/G,MAAM,IAAI,CAAC,QAAQ,CAAC,0BAA0B,EAAE,cAAc,EAAE,GAAG,EAAE,CAAC,IAAI,CAAC,kBAAkB,EAAE,CAAC,CAAC;QACnG,CAAC;QACD,MAAM,OAAO,GAAG,OAAO,CAAC,MAAM,CAAC,SAAS,CAAC,CAAC;QAC1C,IAAI,CAAC,GAAG,CAAC,KAAK,EAAE,WAAW,OAAO,CAAC,CAAC,CAAC,GAAG,CAAC,OAAO,CAAC,CAAC,CAAC,GAAG,aAAa,CAAC,UAAU,CAAC,CAAC;IAClF,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,kBAAkB;QAC7B,yBAAyB;QACzB,MAAM,cAAc,GAAG,MAAM,IAAA,mBAAQ,EAAC,IAAA,gBAAI,EAAC,SAAS,EAAE,yBAAyB,CAAC,EAAE,MAAM,CAAC,CAAC;QAC1F,MAAM,UAAU,GAAG,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,YAAY,CAAC,CAAC;QAChD,MAAM,IAAA,oBAAS,EAAC,UAAU,EAAE,cAAc,CAAC,UAAU,CAAC,OAAO,EAAE,IAAI,CAAC,KAAK,CAAC,EAAE,MAAM,CAAC,CAAC;QAEpF,6BAA6B;QAC7B,MAAM,SAAS,GAAG,IAAI,mBAAS,EAAE,CAAC;QAClC,MAAM,WAAW,GAAG,MAAM,SAAS,CAAC,IAAI,CAAC,SAAS,CAAC,6BAA6B,CAAC,CAAC;QAClF,MAAM,IAAI,OAAO,CAAC,CAAC,OAAO,EAAE,MAAM,EAAE,EAAE;YACpC,SAAS,CAAC,KAAK,CAAC,cAAc,CAAC,WAAW,EAAE,CAAC,GAAiB,EAAE,GAAU,EAAE,EAAE,CAAC,GAAG,CAAC,CAAC,CAAC,MAAM,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC,OAAO,CAAC,GAAG,CAAC,CAAC,CAAC;QACnH,CAAC,CAAC,CAAC;QAEH,yBAAyB;QACzB,MAAM,SAAS,GAAG,MAAM,SAAS,CAAC,eAAe,CAAC;YAChD,KAAK,EAAE,SAAS,CAAC,6BAA6B;YAC9C,GAAG,EAAE,IAAI;YACT,YAAY,EAAE,IAAI;YAClB,YAAY,EAAE,IAAI;YAClB,GAAG,EAAE,CAAE,0BAA0B,IAAI,CAAC,YAAY,EAAE,CAAE;YACtD,UAAU,EAAE;gBACV,KAAK,EAAE;oBACL,GAAG,IAAI,CAAC,GAAG,qCAAqC;oBAChD,GAAG,UAAU,mCAAmC;iBACjD;aACF;SACF,CAAC,CAAC;QACH,MAAM,SAAS,CAAC,KAAK,EAAE,CAAC;QAExB,6BAA6B;QAC7B,IAAI,cAAc,GAAG,KAAK,CAAC;QAC3B,kDAAkD;QAClD,OAAO,CAAC,EAAE,CAAC,QAAQ,EAAE,KAAK,IAAG,EAAE;YAC7B,IAAI,CAAC,cAAc,EAAE,CAAC;gBACpB,MAAM,SAAS,CAAC,IAAI,EAAE,CAAC;gBACvB,MAAM,OAAO,EAAE,CAAC;YAClB,CAAC;QACH,CAAC,CAAC,CAAC;QACH,KAAK,UAAU,OAAO;YACpB,MAAM,SAAS,CAAC
,MAAM,EAAE,CAAC;YACzB,MAAM,IAAA,iBAAM,EAAC,UAAU,CAAC,CAAC;QAC3B,CAAC;QAED,0BAA0B;QAC1B,MAAM,GAAG,GAAG,MAAM,SAAS,CAAC,MAAM,CAAC;YACjC,MAAM,EAAE,IAAI;YACZ,MAAM,EAAE,IAAI;YACZ,MAAM,EAAE,IAAI;SACb,CAAC,CAAC;QACH,IAAI,IAAI,CAAC,OAAO,EAAE,CAAC;YACjB,GAAG,CAAC,IAAI,CAAC,OAAO,CAAC,MAAM,CAAC,CAAC;QAC3B,CAAC;aAAM,CAAC;YACN,GAAG,CAAC,MAAM,EAAE,CAAC;QACf,CAAC;QAED,6BAA6B;QAC7B,MAAM,IAAI,OAAO,CAAC,CAAC,OAAO,EAAE,MAAM,EAAE,EAAE;YACpC,GAAG,CAAC,EAAE,CAAC,KAAK,EAAE,OAAO,CAAC,CAAC;YACvB,GAAG,CAAC,EAAE,CAAC,OAAO,EAAE,MAAM,CAAC,CAAC;QAC1B,CAAC,CAAC,CAAC;QACH,cAAc,GAAG,IAAI,CAAC;QAEtB,UAAU;QACV,MAAM,OAAO,EAAE,CAAC;IAClB,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,iBAAiB;QAC5B,0BAA0B;QAC1B,MAAM,IAAA,gBAAK,EAAC,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,cAAc,CAAC,CAAC,CAAC;QAE5C,eAAe;QACf,MAAM,MAAM,GAAG,OAAO,CAAC,GAAG,EAAE,CAAC;QAC7B,OAAO,CAAC,KAAK,CAAC,IAAI,CAAC,GAAG,CAAC,CAAC;QACxB,MAAM,IAAA,6BAAW,EAAC,IAAI,CAAC,iBAAiB,EAAE,EAAE,cAAc,EAAE,IAAI,CAAC,cAAc,EAAE,CAAC,CAAC;QACnF,OAAO,CAAC,KAAK,CAAC,MAAM,CAAC,CAAC;IACxB,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,kBAAkB;QAC7B,MAAM,MAAM,GAAG,OAAO,CAAC,GAAG,EAAE,CAAC;QAC7B,OAAO,CAAC,KAAK,CAAC,IAAI,CAAC,GAAG,CAAC,CAAC;QAExB,wBAAwB;QACxB,MAAM,IAAA,kCAAa,EAAC,IAAI,CAAC,cAAc,EAAE,EAAE,cAAc,EAAE,IAAI,CAAC,cAAc,EAAE,CAAC,CAAC;QAElF,OAAO,CAAC,KAAK,CAAC,MAAM,CAAC,CAAC;IACxB,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,kBAAkB;QAC7B,0BAA0B;QAC1B,MAAM,IAAA,gBAAK,EAAC,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,aAAa,CAAC,CAAC,CAAC;QAE3C,mBAAmB;QACnB,MAAM,MAAM,GAAG,OAAO,CAAC,GAAG,EAAE,CAAC;QAC7B,OAAO,CAAC,KAAK,CAAC,IAAI,CAAC,GAAG,CAAC,CAAC;QACxB,MAAM,IAAA,+CAAoB,EAAC,IAAI,CAAC,WAAW,EAAE,EAAE,cAAc,EAAE,IAAI,CAAC,cAAc,EAAE,EAAE;YACpF,SAAS,EAAE,MAAM,IAAI,CAAC,iBAAiB,EAAE;SAC1C,CAAC,CAAC;QACH,OAAO,CAAC,KAAK,CAAC,MAAM,CAAC,CAAC;IACxB,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,wBAAwB;QACnC,0BAA0B;QAC1B,MAAM,MAAM,GAAG,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,qBAAqB,CAAC,CAAC;QACrD,MAAM,IAAA,gBAAK,EAAC,MAAM,CAAC,CAAC;QAEpB,gCAAgC;QAChC,OAAO,IAAI,OAAO,CAAC,CAAC,OAAO,EAAE,MAAM,EAAE,EAAE;YACrC,IAAA,oBAAO,EAAC,IAAI,CAAC,gBAAiB,EAAE,CAAC,G
AAG,EAAE,EAAE;gBACtC,GAAG;qBACA,EAAE,CAAC,OAAO,EAAE,MAAM,CAAC;qBACnB,IAAI,CAAC,IAAA,kBAAO,EAAC,EAAE,IAAI,EAAE,MAAM,EAAE,CAAC,CAAC;qBAC/B,EAAE,CAAC,OAAO,EAAE,MAAM,CAAC;qBACnB,EAAE,CAAC,OAAO,EAAE,OAAO,CAAC,CAAC;YAC1B,CAAC,CAAC,CAAC,GAAG,EAAE,CAAC;QACX,CAAC,CAAC,CAAC;IACL,CAAC;IAED;;OAEG;IACI,KAAK,CAAC,kBAAkB;QAC7B,0BAA0B;QAC1B,MAAM,IAAA,gBAAK,EAAC,IAAA,gBAAI,EAAC,IAAI,CAAC,GAAG,EAAE,cAAc,CAAC,CAAC,CAAC;QAE5C,gBAAgB;QAChB,MAAM,MAAM,GAAG,OAAO,CAAC,GAAG,EAAE,CAAC;QAC7B,OAAO,CAAC,KAAK,CAAC,IAAI,CAAC,GAAG,CAAC,CAAC;QACxB,MAAM,IAAA,yCAAsB,EAAC,IAAI,CAAC,gBAAiB,EAAE,EAAE,cAAc,EAAE,IAAI,CAAC,cAAc,EAAE,EAAE;YAC5F,SAAS,EAAE,MAAM,IAAI,CAAC,iBAAiB,EAAE;SAC1C,CAAC,CAAC;QACH,OAAO,CAAC,KAAK,CAAC,MAAM,CAAC,CAAC;IACxB,CAAC;IAES,KAAK,CAAC,iBAAiB;QAC/B,MAAM,gBAAgB,GAAe,CAAC,MAAM,IAAA,kBAAO,EAAC,IAAA,gBAAI,EAAC,SAAS,EAAE,uBAAuB,CAAC,CAAC,CAAC;aAC3F,GAAG,CAAC,IAAI,CAAC,EAAE,CAAC,CAAE,iCAAiC,IAAI,EAAE,EAAE,IAAA,gBAAI,EAAC,SAAS,EAAE,wBAAwB,IAAI,EAAE,CAAC,CAAE,CAAC,CAAC;QAC7G,MAAM,kBAAkB,GAAe,CAAC,MAAM,IAAA,kBAAO,EAAC,IAAA,gBAAI,EAAC,SAAS,EAAE,2BAA2B,CAAC,CAAC,CAAC;aACjG,GAAG,CAAC,IAAI,CAAC,EAAE,CAAC,CAAE,mCAAmC,IAAI,EAAE,EAAE,IAAA,gBAAI,EAAC,SAAS,EAAE,4BAA4B,IAAI,EAAE,CAAC,CAAE,CAAC,CAAC;QACnH,MAAM,MAAM,GAA4B,MAAM,CAAC,WAAW,CAAC,CAAE,GAAG,gBAAgB,EAAE,GAAG,kBAAkB,CAAE,CAAC,CAAC;QAC3G,OAAO,MAAM,CAAC;IAChB,CAAC;IAED;;;;OAIG;IACI,MAAM,CAAC,SAAS,CAAC,GAAQ,EAAE,KAAa;QAC7C,OAAO,GAAG,KAAK,GAAG,GAAG,GAAG,SAAS,CAAC,WAAW,EAAE,CAAC;IAClD,CAAC;;AAnPH,8BAoPC;AAnPwB,qBAAW,GAAW,WAAW,CAAC;AAClC,mBAAS,GAAW,YAAY,CAAC;AACjC,qBAAW,GAAW,YAAY,CAAC;AACnC,sBAAY,GAAW,YAAY,CAAC;AACpC,oBAAU,GAAW,YAAY,CAAC;AAClC,uBAAa,GAAW,YAAY,CAAC;AACrC,oBAAU,GAAW,YAAY,CAAC;AAClC,oBAAU,GAAW,YAAY,CAAC;AAClC,uCAA6B,GAAW,qCAAqC,CAAC","sourcesContent":["import { stat, unlink, mkdir, readdir, readFile, writeFile } from 'node:fs/promises';\nimport { request } from 'node:https';\nimport { join } from 'node:path';\nimport Dockerode from 'dockerode';\nimport { runConfig as runEnhancer } from 'ldbc-snb-enhancer';\nimport { runConfig as 
runValidationGenerator } from 'ldbc-snb-validation-generator';\nimport { runConfig as runFragmenter } from 'rdf-dataset-fragmenter';\nimport { runConfig as runQueryInstantiator } from 'sparql-query-parameter-instantiator';\nimport { Extract } from 'unzipper';\n\n/**\n * Generates decentralized social network data in different phases.\n */\nexport class Generator {\n public static readonly COLOR_RESET: string = '\\u001B[0m';\n public static readonly COLOR_RED: string = '\\u001B[31m';\n public static readonly COLOR_GREEN: string = '\\u001B[32m';\n public static readonly COLOR_YELLOW: string = '\\u001B[33m';\n public static readonly COLOR_BLUE: string = '\\u001B[34m';\n public static readonly COLOR_MAGENTA: string = '\\u001B[35m';\n public static readonly COLOR_CYAN: string = '\\u001B[36m';\n public static readonly COLOR_GRAY: string = '\\u001B[90m';\n public static readonly LDBC_SNB_DATAGEN_DOCKER_IMAGE: string = 'rubensworks/ldbc_snb_datagen:latest';\n\n private readonly cwd: string;\n private readonly verbose: boolean;\n private readonly overwrite: boolean;\n private readonly scale: string;\n private readonly enhancementConfig: string;\n private readonly fragmentConfig: string;\n private readonly queryConfig: string;\n private readonly validationParams: string | undefined;\n private readonly validationConfig: string | undefined;\n private readonly hadoopMemory: string;\n private readonly mainModulePath: string;\n\n public constructor(opts: IGeneratorOptions) {\n this.cwd = opts.cwd;\n this.verbose = opts.verbose;\n this.overwrite = opts.overwrite;\n this.scale = opts.scale;\n this.enhancementConfig = opts.enhancementConfig;\n this.fragmentConfig = opts.fragmentConfig;\n this.queryConfig = opts.queryConfig;\n this.validationParams = opts.validationParams;\n this.validationConfig = opts.validationConfig;\n this.hadoopMemory = opts.hadoopMemory;\n this.mainModulePath = join(__dirname, '..');\n }\n\n protected async targetExists(path: string): Promise<boolean> {\n try 
{\n await stat(path);\n return true;\n } catch {\n return false;\n }\n }\n\n protected log(phase: string, status: string): void {\n process.stdout.write(`${Generator.withColor(`[${phase}]`, Generator.COLOR_CYAN)} ${status}\\n`);\n }\n\n protected async runPhase(name: string, directory: string, runner: () => Promise<void>): Promise<void> {\n if (this.overwrite || !await this.targetExists(join(this.cwd, directory))) {\n this.log(name, 'Started');\n const timeStart = process.hrtime();\n await runner();\n const timeEnd = process.hrtime(timeStart);\n this.log(name, `Done in ${timeEnd[0] + (timeEnd[1] / 1_000_000_000)} seconds`);\n } else {\n this.log(name, `Skipped (/${directory} already exists, remove to regenerate)`);\n }\n }\n\n /**\n * Run all generator phases.\n */\n public async generate(): Promise<void> {\n const timeStart = process.hrtime();\n await this.runPhase('SNB dataset generator', 'out-snb', () => this.generateSnbDataset());\n await this.runPhase('SNB dataset enhancer', 'out-enhanced', () => this.enhanceSnbDataset());\n await this.runPhase('SNB dataset fragmenter', 'out-fragments', () => this.fragmentSnbDataset());\n await this.runPhase('SPARQL query instantiator', 'out-queries', () => this.instantiateQueries());\n if (this.validationParams && this.validationConfig) {\n await this.runPhase('SNB validation downloader', 'out-validate-params', () => this.downloadValidationParams());\n await this.runPhase('SNB validation generator', 'out-validate', () => this.generateValidation());\n }\n const timeEnd = process.hrtime(timeStart);\n this.log('All', `Done in ${timeEnd[0] + (timeEnd[1] / 1_000_000_000)} seconds`);\n }\n\n /**\n * Invoke the LDBC SNB generator.\n */\n public async generateSnbDataset(): Promise<void> {\n // Create params.ini file\n const paramsTemplate = await readFile(join(__dirname, '../templates/params.ini'), 'utf8');\n const paramsPath = join(this.cwd, 'params.ini');\n await writeFile(paramsPath, paramsTemplate.replaceAll('SCALE', this.scale), 
'utf8');\n\n // Pull the base Docker image\n const dockerode = new Dockerode();\n const buildStream = await dockerode.pull(Generator.LDBC_SNB_DATAGEN_DOCKER_IMAGE);\n await new Promise((resolve, reject) => {\n dockerode.modem.followProgress(buildStream, (err: Error | null, res: any[]) => err ? reject(err) : resolve(res));\n });\n\n // Start Docker container\n const container = await dockerode.createContainer({\n Image: Generator.LDBC_SNB_DATAGEN_DOCKER_IMAGE,\n Tty: true,\n AttachStdout: true,\n AttachStderr: true,\n Env: [ `HADOOP_CLIENT_OPTS=-Xmx${this.hadoopMemory}` ],\n HostConfig: {\n Binds: [\n `${this.cwd}/out-snb/:/opt/ldbc_snb_datagen/out`,\n `${paramsPath}:/opt/ldbc_snb_datagen/params.ini`,\n ],\n },\n });\n await container.start();\n\n // Stop process on force-exit\n let containerEnded = false;\n // eslint-disable-next-line ts/no-misused-promises\n process.on('SIGINT', async() => {\n if (!containerEnded) {\n await container.kill();\n await cleanup();\n }\n });\n async function cleanup(): Promise<void> {\n await container.remove();\n await unlink(paramsPath);\n }\n\n // Attach output to stdout\n const out = await container.attach({\n stream: true,\n stdout: true,\n stderr: true,\n });\n if (this.verbose) {\n out.pipe(process.stdout);\n } else {\n out.resume();\n }\n\n // Wait until generation ends\n await new Promise((resolve, reject) => {\n out.on('end', resolve);\n out.on('error', reject);\n });\n containerEnded = true;\n\n // Cleanup\n await cleanup();\n }\n\n /**\n * Enhance the generated LDBC SNB dataset.\n */\n public async enhanceSnbDataset(): Promise<void> {\n // Create target directory\n await mkdir(join(this.cwd, 'out-enhanced'));\n\n // Run enhancer\n const oldCwd = process.cwd();\n process.chdir(this.cwd);\n await runEnhancer(this.enhancementConfig, { mainModulePath: this.mainModulePath });\n process.chdir(oldCwd);\n }\n\n /**\n * Fragment the generated and enhanced LDBC SNB datasets.\n */\n public async fragmentSnbDataset(): Promise<void> {\n 
const oldCwd = process.cwd();\n process.chdir(this.cwd);\n\n // Initial fragmentation\n await runFragmenter(this.fragmentConfig, { mainModulePath: this.mainModulePath });\n\n process.chdir(oldCwd);\n }\n\n /**\n * Instantiate queries based on the LDBC SNB datasets.\n */\n public async instantiateQueries(): Promise<void> {\n // Create target directory\n await mkdir(join(this.cwd, 'out-queries'));\n\n // Run instantiator\n const oldCwd = process.cwd();\n process.chdir(this.cwd);\n await runQueryInstantiator(this.queryConfig, { mainModulePath: this.mainModulePath }, {\n variables: await this.generateVariables(),\n });\n process.chdir(oldCwd);\n }\n\n /**\n * Download validation parameters\n */\n public async downloadValidationParams(): Promise<void> {\n // Create target directory\n const target = join(this.cwd, 'out-validate-params');\n await mkdir(target);\n\n // Download and extract zip file\n return new Promise((resolve, reject) => {\n request(this.validationParams!, (res) => {\n res\n .on('error', reject)\n .pipe(Extract({ path: target }))\n .on('error', reject)\n .on('close', resolve);\n }).end();\n });\n }\n\n /**\n * Generate validation queries and results.\n */\n public async generateValidation(): Promise<void> {\n // Create target directory\n await mkdir(join(this.cwd, 'out-validate'));\n\n // Run generator\n const oldCwd = process.cwd();\n process.chdir(this.cwd);\n await runValidationGenerator(this.validationConfig!, { mainModulePath: this.mainModulePath }, {\n variables: await this.generateVariables(),\n });\n process.chdir(oldCwd);\n }\n\n protected async generateVariables(): Promise<Record<string, string>> {\n const templateMappings: string[][] = (await readdir(join(__dirname, '../templates/queries/')))\n .map(name => [ `urn:variables:query-templates:${name}`, join(__dirname, `../templates/queries/${name}`) ]);\n const refinementMappings: string[][] = (await readdir(join(__dirname, '../templates/refinements/')))\n .map(name => [ 
`urn:variables:query-refinements:${name}`, join(__dirname, `../templates/refinements/${name}`) ]);\n const result = <Record<string, string>> Object.fromEntries([ ...templateMappings, ...refinementMappings ]);\n return result;\n }\n\n /**\n * Return a string in a given color\n * @param str The string that should be printed in\n * @param color A given color\n */\n public static withColor(str: any, color: string): string {\n return `${color}${str}${Generator.COLOR_RESET}`;\n }\n}\n\nexport interface IGeneratorOptions {\n cwd: string;\n verbose: boolean;\n overwrite: boolean;\n scale: string;\n enhancementConfig: string;\n fragmentConfig: string;\n queryConfig: string;\n validationParams?: string;\n validationConfig?: string;\n hadoopMemory: string;\n}\n"]}
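
The `generateVariables` method embedded in `sourcesContent` above turns every file name in `templates/queries/` and `templates/refinements/` into a `urn:variables:...` key that points at the file's path, and merges both lists with `Object.fromEntries`. The sketch below reproduces that mapping for a single directory so its shape is easier to see. The function name `mapDirectory` and its two parameters are illustrative assumptions, not part of the package.

```javascript
const { readdir } = require('node:fs/promises');
const { join } = require('node:path');

/**
 * Hypothetical helper: map every entry of `directory` to
 * `<prefix><file name>` -> `<directory>/<file name>`.
 */
async function mapDirectory(prefix, directory) {
  const names = await readdir(directory);
  // Each [key, value] pair becomes one property of the resulting object.
  return Object.fromEntries(names.map(name => [`${prefix}${name}`, join(directory, name)]));
}
```

Calling it once per directory and spreading both results into one object would give the same kind of flat record that `generateVariables` returns.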

package/lib/Server.d.ts

ADDED

@@ -0,0 +1,19 @@
+/**
+ * Serves generated fragments over HTTP.
+ */
+export declare class Server {
+    private readonly configPath;
+    private readonly port;
+    private readonly baseUrl;
+    private readonly rootFilePath;
+    private readonly logLevel;
+    constructor(options: IServerOptions);
+    serve(): Promise<void>;
+}
+export interface IServerOptions {
+    configPath: string;
+    port: number;
+    baseUrl: string | undefined;
+    rootFilePath: string;
+    logLevel: string;
+}
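
The declaration above only fixes the shape of `IServerOptions`; how the CLI fills these fields is not visible in this file. As a hedged illustration, the following sketch checks a plain object against the declared field types at runtime. The function `isServerOptions` is hypothetical, and the sample values are made up for the example.

```javascript
/**
 * Hypothetical runtime check mirroring the declared IServerOptions fields.
 * It is not part of the package.
 */
function isServerOptions(value) {
  return typeof value === 'object' && value !== null &&
    typeof value.configPath === 'string' &&
    typeof value.port === 'number' &&
    // baseUrl is declared as `string | undefined`.
    (value.baseUrl === undefined || typeof value.baseUrl === 'string') &&
    typeof value.rootFilePath === 'string' &&
    typeof value.logLevel === 'string';
}

// Made-up sample values, for illustration only.
const sampleOptions = {
  configPath: 'config.json',
  port: 3000,
  baseUrl: undefined,
  rootFilePath: 'out-fragments',
  logLevel: 'info',
};
console.log(isServerOptions(sampleOptions));
```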

|