zrb 1.8.8__py3-none-any.whl → 1.8.10__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- zrb/builtin/llm/tool/code.py +53 -31

- zrb/builtin/llm/tool/file.py +13 -17

- zrb/builtin/llm/tool/sub_agent.py +4 -2

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/requirements.txt +2 -2

- zrb/config.py +2 -2

- zrb/llm_config.py +186 -75

- zrb/llm_rate_limitter.py +23 -3

- zrb/task/llm/agent.py +45 -2

- {zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/METADATA +40 -34

- {zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/RECORD +12 -12

- {zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/WHEEL +0 -0

- {zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/entry_points.txt +0 -0

zrb/builtin/llm/tool/code.py

CHANGED

|

@@ -1,14 +1,16 @@

|

|

|

1

|

+

import json

|

|

1

2

|

import os

|

|

2

3

|

|

|

3

4

|

from zrb.builtin.llm.tool.file import DEFAULT_EXCLUDED_PATTERNS, is_excluded

|

|

4

5

|

from zrb.builtin.llm.tool.sub_agent import create_sub_agent_tool

|

|

5

6

|

from zrb.context.any_context import AnyContext

|

|

7

|

+

from zrb.llm_rate_limitter import llm_rate_limitter

|

|

6

8

|

|

|

7

9

|

_EXTRACT_INFO_FROM_REPO_SYSTEM_PROMPT = """

|

|

8

10

|

You are an extraction info agent.

|

|

9

|

-

Your goal is to help to extract relevant information to help the main

|

|

11

|

+

Your goal is to help to extract relevant information to help the main assistant.

|

|

10

12

|

You write your output is in markdown format containing path and relevant information.

|

|

11

|

-

Extract only information that relevant to main

|

|

13

|

+

Extract only information that relevant to main assistant's goal.

|

|

12

14

|

|

|

13

15

|

Extracted Information format (Use this as reference, extract relevant information only):

|

|

14

16

|

# <file-name>

|

|

@@ -30,9 +32,9 @@ Extracted Information format (Use this as reference, extract relevant informatio

|

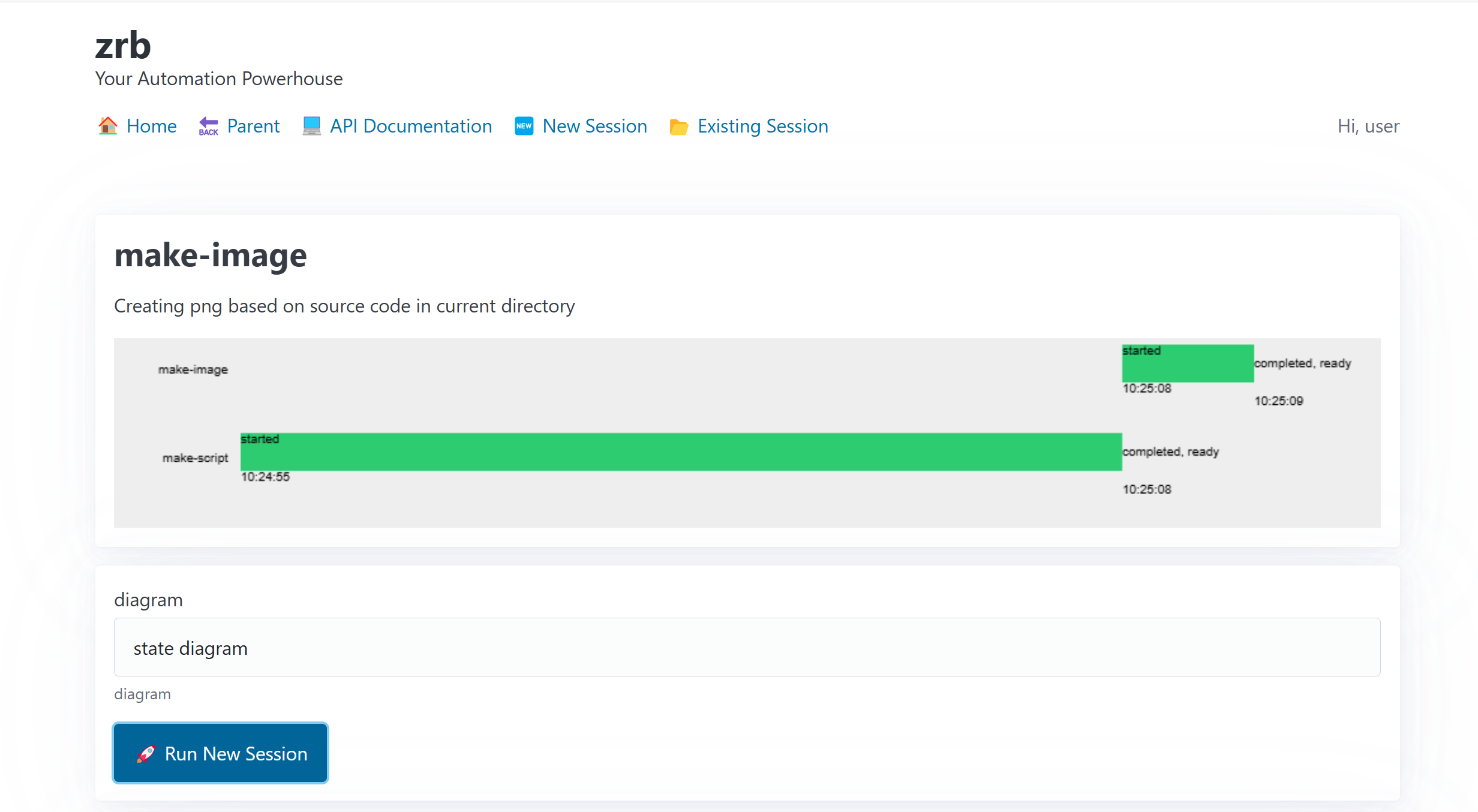

|

|

30

32

|

|

|

31

33

|

_SUMMARIZE_INFO_SYSTEM_PROMPT = """

|

|

32

34

|

You are an information summarization agent.

|

|

33

|

-

Your goal is to summarize information to help the main

|

|

35

|

+

Your goal is to summarize information to help the main assistant.

|

|

34

36

|

The summarization result should contains all necessary details

|

|

35

|

-

to help main

|

|

37

|

+

to help main assistant achieve the goal.

|

|

36

38

|

"""

|

|

37

39

|

|

|

38

40

|

_DEFAULT_EXTENSIONS = [

|

|

@@ -80,8 +82,8 @@ async def analyze_repo(

|

|

|

80

82

|

goal: str,

|

|

81

83

|

extensions: list[str] = _DEFAULT_EXTENSIONS,

|

|

82

84

|

exclude_patterns: list[str] = DEFAULT_EXCLUDED_PATTERNS,

|

|

83

|

-

|

|

84

|

-

|

|

85

|

+

extraction_token_limit: int = 30000,

|

|

86

|

+

summarization_token_limit: int = 30000,

|

|

85

87

|

) -> str:

|

|

86

88

|

"""

|

|

87

89

|

Extract and summarize information from a directory that probably

|

|

@@ -100,9 +102,9 @@ async def analyze_repo(

|

|

|

100

102

|

while reading resources. Defaults to common programming languages and config files.

|

|

101

103

|

exclude_patterns(Optional[list[str]]): List of patterns to exclude from analysis.

|

|

102

104

|

Common patterns like '.venv', 'node_modules' should be excluded by default.

|

|

103

|

-

|

|

105

|

+

extraction_token_limit(Optional[int]): Max resource content char length

|

|

104

106

|

the extraction assistant able to handle. Defaults to 150000

|

|

105

|

-

|

|

107

|

+

summarization_token_limit(Optional[int]): Max resource content char length

|

|

106

108

|

the summarization assistant able to handle. Defaults to 150000

|

|

107

109

|

Returns:

|

|

108

110

|

str: The analysis result

|

|

@@ -116,22 +118,19 @@ async def analyze_repo(

|

|

|

116

118

|

ctx,

|

|

117

119

|

file_metadatas=file_metadatas,

|

|

118

120

|

goal=goal,

|

|

119

|

-

|

|

121

|

+

token_limit=extraction_token_limit,

|

|

120

122

|

)

|

|

123

|

+

if len(extracted_infos) == 1:

|

|

124

|

+

return extracted_infos[0]

|

|

121

125

|

ctx.print("Summarization")

|

|

122

|

-

summarized_infos =

|

|

123

|

-

ctx,

|

|

124

|

-

extracted_infos=extracted_infos,

|

|

125

|

-

goal=goal,

|

|

126

|

-

char_limit=summarization_char_limit,

|

|

127

|

-

)

|

|

126

|

+

summarized_infos = extracted_infos

|

|

128

127

|

while len(summarized_infos) > 1:

|

|

129

128

|

ctx.print("Summarization")

|

|

130

129

|

summarized_infos = await _summarize_info(

|

|

131

130

|

ctx,

|

|

132

131

|

extracted_infos=summarized_infos,

|

|

133

132

|

goal=goal,

|

|

134

|

-

|

|

133

|

+

token_limit=summarization_token_limit,

|

|

135

134

|

)

|

|

136

135

|

return summarized_infos[0]

|

|

137

136

|

|

|

@@ -164,7 +163,7 @@ async def _extract_info(

|

|

|

164

163

|

ctx: AnyContext,

|

|

165

164

|

file_metadatas: list[dict[str, str]],

|

|

166

165

|

goal: str,

|

|

167

|

-

|

|

166

|

+

token_limit: int,

|

|

168

167

|

) -> list[str]:

|

|

169

168

|

extract = create_sub_agent_tool(

|

|

170

169

|

tool_name="extract",

|

|

@@ -172,37 +171,50 @@ async def _extract_info(

|

|

|

172

171

|

system_prompt=_EXTRACT_INFO_FROM_REPO_SYSTEM_PROMPT,

|

|

173

172

|

)

|

|

174

173

|

extracted_infos = []

|

|

175

|

-

content_buffer =

|

|

174

|

+

content_buffer = []

|

|

175

|

+

current_token_count = 0

|

|

176

176

|

for metadata in file_metadatas:

|

|

177

177

|

path = metadata.get("path", "")

|

|

178

178

|

content = metadata.get("content", "")

|

|

179

|

-

|

|

180

|

-

|

|

179

|

+

file_obj = {"path": path, "content": content}

|

|

180

|

+

file_str = json.dumps(file_obj)

|

|

181

|

+

if current_token_count + llm_rate_limitter.count_token(file_str) > token_limit:

|

|

181

182

|

if content_buffer:

|

|

182

183

|

prompt = _create_extract_info_prompt(goal, content_buffer)

|

|

183

|

-

extracted_info = await extract(

|

|

184

|

+

extracted_info = await extract(

|

|

185

|

+

ctx, llm_rate_limitter.clip_prompt(prompt, token_limit)

|

|

186

|

+

)

|

|

184

187

|

extracted_infos.append(extracted_info)

|

|

185

|

-

content_buffer =

|

|

188

|

+

content_buffer = [file_obj]

|

|

189

|

+

current_token_count = llm_rate_limitter.count_token(file_str)

|

|

186

190

|

else:

|

|

187

|

-

content_buffer

|

|

191

|

+

content_buffer.append(file_obj)

|

|

192

|

+

current_token_count += llm_rate_limitter.count_token(file_str)

|

|

188

193

|

|

|

189

194

|

# Process any remaining content in the buffer

|

|

190

195

|

if content_buffer:

|

|

191

196

|

prompt = _create_extract_info_prompt(goal, content_buffer)

|

|

192

|

-

extracted_info = await extract(

|

|

197

|

+

extracted_info = await extract(

|

|

198

|

+

ctx, llm_rate_limitter.clip_prompt(prompt, token_limit)

|

|

199

|

+

)

|

|

193

200

|

extracted_infos.append(extracted_info)

|

|

194

201

|

return extracted_infos

|

|

195

202

|

|

|

196

203

|

|

|

197

|

-

def _create_extract_info_prompt(goal: str, content_buffer:

|

|

198

|

-

return

|

|

204

|

+

def _create_extract_info_prompt(goal: str, content_buffer: list[dict]) -> str:

|

|

205

|

+

return json.dumps(

|

|

206

|

+

{

|

|

207

|

+

"main_assistant_goal": goal,

|

|

208

|

+

"files": content_buffer,

|

|

209

|

+

}

|

|

210

|

+

)

|

|

199

211

|

|

|

200

212

|

|

|

201

213

|

async def _summarize_info(

|

|

202

214

|

ctx: AnyContext,

|

|

203

215

|

extracted_infos: list[str],

|

|

204

216

|

goal: str,

|

|

205

|

-

|

|

217

|

+

token_limit: int,

|

|

206

218

|

) -> list[str]:

|

|

207

219

|

summarize = create_sub_agent_tool(

|

|

208

220

|

tool_name="extract",

|

|

@@ -212,10 +224,13 @@ async def _summarize_info(

|

|

|

212

224

|

summarized_infos = []

|

|

213

225

|

content_buffer = ""

|

|

214

226

|

for extracted_info in extracted_infos:

|

|

215

|

-

|

|

227

|

+

new_prompt = content_buffer + extracted_info

|

|

228

|

+

if llm_rate_limitter.count_token(new_prompt) > token_limit:

|

|

216

229

|

if content_buffer:

|

|

217

230

|

prompt = _create_summarize_info_prompt(goal, content_buffer)

|

|

218

|

-

summarized_info = await summarize(

|

|

231

|

+

summarized_info = await summarize(

|

|

232

|

+

ctx, llm_rate_limitter.clip_prompt(prompt, token_limit)

|

|

233

|

+

)

|

|

219

234

|

summarized_infos.append(summarized_info)

|

|

220

235

|

content_buffer = extracted_info

|

|

221

236

|

else:

|

|

@@ -224,10 +239,17 @@ async def _summarize_info(

|

|

|

224

239

|

# Process any remaining content in the buffer

|

|

225

240

|

if content_buffer:

|

|

226

241

|

prompt = _create_summarize_info_prompt(goal, content_buffer)

|

|

227

|

-

summarized_info = await summarize(

|

|

242

|

+

summarized_info = await summarize(

|

|

243

|

+

ctx, llm_rate_limitter.clip_prompt(prompt, token_limit)

|

|

244

|

+

)

|

|

228

245

|

summarized_infos.append(summarized_info)

|

|

229

246

|

return summarized_infos

|

|

230

247

|

|

|

231

248

|

|

|

232

249

|

def _create_summarize_info_prompt(goal: str, content_buffer: str) -> str:

|

|

233

|

-

return

|

|

250

|

+

return json.dumps(

|

|

251

|

+

{

|

|

252

|

+

"main_assistant_goal": goal,

|

|

253

|

+

"extracted_info": content_buffer,

|

|

254

|

+

}

|

|

255

|

+

)

|
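The `_extract_info` change in `zrb/builtin/llm/tool/code.py` groups files into batches that stay under a token budget before each sub-agent call. A minimal, standalone sketch of that batching idea follows. The names `batch_by_token_budget` and `rough_count` are hypothetical and not part of zrb, and the counter is a crude word count standing in for `llm_rate_limitter.count_token`.

```python
import json
from typing import Callable


def batch_by_token_budget(
    files: list[dict[str, str]],
    token_limit: int,
    count_token: Callable[[str], int],
) -> list[list[dict[str, str]]]:
    """Group file objects so each batch stays within token_limit where possible."""
    batches: list[list[dict[str, str]]] = []
    current: list[dict[str, str]] = []
    current_tokens = 0
    for file_obj in files:
        # Cost is measured on the serialized form, as in the diff.
        cost = count_token(json.dumps(file_obj))
        if current and current_tokens + cost > token_limit:
            # Adding this file would exceed the budget: flush the batch.
            batches.append(current)
            current, current_tokens = [], 0
        current.append(file_obj)
        current_tokens += cost
    if current:
        batches.append(current)
    return batches


def rough_count(text: str) -> int:
    # Hypothetical stand-in: whitespace-separated words, not real tokens.
    return len(text.split())
```

A single file larger than the budget still forms its own batch in this sketch, so the caller would need to clip it separately, which is the role `clip_prompt` appears to play in the diff.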

zrb/builtin/llm/tool/file.py

CHANGED

@@ -6,13 +6,14 @@ from typing import Any, Optional
 
 from zrb.builtin.llm.tool.sub_agent import create_sub_agent_tool
 from zrb.context.any_context import AnyContext
+from zrb.llm_rate_limitter import llm_rate_limitter
 from zrb.util.file import read_file, read_file_with_line_numbers, write_file
 
-
+_EXTRACT_INFO_FROM_FILE_SYSTEM_PROMPT = """
 You are an extraction info agent.
-Your goal is to help to extract relevant information to help the main
+Your goal is to help to extract relevant information to help the main assistant.
 You write your output is in markdown format containing path and relevant information.
-Extract only information that relevant to main
+Extract only information that relevant to main assistant's goal.
 
 Extracted Information format (Use this as reference, extract relevant information only):
 # imports
@@ -464,7 +465,9 @@ def apply_diff(
         raise RuntimeError(f"Unexpected error applying diff to {path}: {e}")
 
 
-async def analyze_file(
+async def analyze_file(
+    ctx: AnyContext, path: str, query: str, token_limit: int = 30000
+) -> str:
     """Analyze file using LLM capability to reduce context usage.
     Use this tool for:
     - summarization
@@ -474,6 +477,7 @@ async def analyze_file(ctx: AnyContext, path: str, query: str) -> str:
     Args:
         path (str): File path to be analyze. Pass exactly as provided, including '~'.
         query(str): Instruction to analyze the file
+        token_limit(Optional[int]): Max token length to be taken from file
     Returns:
         str: The analysis result
     Raises:
@@ -486,19 +490,11 @@ async def analyze_file(ctx: AnyContext, path: str, query: str) -> str:
     _analyze_file = create_sub_agent_tool(
         tool_name="analyze_file",
         tool_description="analyze file with LLM capability",
-        system_prompt=
+        system_prompt=_EXTRACT_INFO_FROM_FILE_SYSTEM_PROMPT,
         tools=[read_from_file, search_files],
     )
-
-
-        "\n".join(
-            [
-                file_content,
-                "# Instruction",
-                query,
-                "# File path",
-                abs_path,
-                "# File content",
-            ]
-        ),
+    payload = json.dumps(
+        {"instruction": query, "file_path": abs_path, "file_content": file_content}
     )
+    clipped_payload = llm_rate_limitter.clip_prompt(payload, token_limit)
+    return await _analyze_file(ctx, clipped_payload)

zrb/builtin/llm/tool/sub_agent.py

CHANGED

@@ -1,7 +1,7 @@
 import json
 from collections.abc import Callable
 from textwrap import dedent
-from typing import TYPE_CHECKING, Any
+from typing import TYPE_CHECKING, Any, Coroutine
 
 if TYPE_CHECKING:
     from pydantic_ai import Tool
@@ -33,7 +33,7 @@ def create_sub_agent_tool(
     model_settings: ModelSettings | None = None,
     tools: list[ToolOrCallable] = [],
     mcp_servers: list[MCPServer] = [],
-) -> Callable[[AnyContext, str], str]:
+) -> Callable[[AnyContext, str], Coroutine[Any, Any, str]]:
     """
     Create an LLM "sub-agent" tool function for use by a main LLM agent.
 
@@ -97,6 +97,8 @@ def create_sub_agent_tool(
             tools=tools,
             mcp_servers=mcp_servers,
         )
+
+        sub_agent_run = None
         # Run the sub-agent iteration
         # Start with an empty history for the sub-agent
         sub_agent_run = await run_agent_iteration(
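The return annotation of `create_sub_agent_tool` changes from `Callable[..., str]` to `Callable[..., Coroutine[Any, Any, str]]`, which matches a factory that returns an `async def` function. A small sketch of the same typing pattern, using a hypothetical `make_echo_tool` factory that is not part of zrb:

```python
import asyncio
from collections.abc import Callable, Coroutine
from typing import Any


def make_echo_tool(prefix: str) -> Callable[[str], Coroutine[Any, Any, str]]:
    """Return an async function; calling it yields a coroutine, not a str."""

    async def echo(text: str) -> str:
        await asyncio.sleep(0)  # stand-in for real asynchronous work
        return f"{prefix}{text}"

    return echo


# The coroutine must be awaited (or run) to obtain the string result.
result = asyncio.run(make_echo_tool("echo: ")("hi"))
```

With the older `-> Callable[..., str]` style annotation, a type checker would treat the call result as a plain `str` and would not flag a missing `await`.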

zrb/config.py

CHANGED

@@ -245,12 +245,12 @@ class Config:
     @property
     def LLM_MAX_TOKENS_PER_MINUTE(self) -> int:
         """Maximum number of LLM tokens allowed per minute."""
-        return int(os.getenv("ZRB_LLM_MAX_TOKENS_PER_MINUTE", "
+        return int(os.getenv("ZRB_LLM_MAX_TOKENS_PER_MINUTE", "200000"))
 
     @property
     def LLM_MAX_TOKENS_PER_REQUEST(self) -> int:
         """Maximum number of tokens allowed per individual LLM request."""
-        return int(os.getenv("ZRB_LLM_MAX_TOKENS_PER_REQUEST", "
+        return int(os.getenv("ZRB_LLM_MAX_TOKENS_PER_REQUEST", "50000"))
 
     @property
     def LLM_THROTTLE_SLEEP(self) -> float:
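The two properties above read integer limits from environment variables, falling back to string defaults of `"200000"` and `"50000"`. A minimal sketch of the same pattern with an explicit error message for non-numeric values; `read_int_env` is a hypothetical helper, not a zrb function:

```python
import os


def read_int_env(name: str, default: int) -> int:
    """Read an integer from the environment, falling back to a default."""
    raw = os.getenv(name, str(default))
    try:
        return int(raw)
    except ValueError as exc:
        # Name the offending variable instead of surfacing a bare int() error.
        raise ValueError(f"{name} must be an integer, got {raw!r}") from exc
```

For example, `read_int_env("ZRB_LLM_MAX_TOKENS_PER_REQUEST", 50000)` would return the default when the variable is unset.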

zrb/llm_config.py

CHANGED

@@ -11,81 +11,192 @@ else:
 
 from zrb.config import CFG
 
-
-You
-
-
-
-
-
-
-
-
-"
-
-
-
-
-
-""
-
-
-
-
-
-
-
-
-
-
---
-
---
-
---
-
-
-
-""
-
-
-
-
-
-
-1.
-
-
-
-
-
-
-
-
-""
-
-
-
-
-
-
-
-
-
-
-
-
-4.
-
-
-
-
-
-
-
-
-
-
+DEFAULT_PERSONA = (
+    "You are a helpful and precise expert assistant. Your goal is to follow "
+    "instructions carefully to provide accurate and efficient help. Get "
+    "straight to the point."
+).strip()
+
+DEFAULT_SYSTEM_PROMPT = (
+    "You have access to tools and two forms of memory:\n"
+    "1. A structured summary of the immediate task (including a payload) AND "
+    "the raw text of the last few turns.\n"
+    "2. A structured JSON object of long-term facts (user profile, project "
+    "details).\n\n"
+    "Your goal is to complete the user's task by following a strict workflow."
+    "\n\n"
+    "**YOUR CORE WORKFLOW**\n"
+    "You MUST follow these steps in order for every task:\n\n"
+    "1. **Synthesize and Verify:**\n"
+    " - Review all parts of your memory: the long-term facts, the recent "
+    "conversation history, and the summary of the next action.\n"
+    " - Compare this with the user's absolute LATEST message.\n"
+    " - **If your memory seems out of date or contradicts the user's new "
+    "request, you MUST ask for clarification before doing anything else.**\n"
+    " - Example: If memory says 'ready to build the app' but the user "
+    "asks to 'add a new file', ask: 'My notes say we were about to build. "
+    "Are you sure you want to add a new file first? Please confirm.'\n\n"
+    "2. **Plan:**\n"
+    " - Use the `Action Payload` from your memory if it exists.\n"
+    " - State your plan in simple, numbered steps.\n\n"
+    "3. **Execute:**\n"
+    " - Follow your plan and use your tools to complete the task.\n\n"
+    "**CRITICAL RULES**\n"
+    "- **TRUST YOUR MEMORY (AFTER VERIFICATION):** Once you confirm your "
+    "memory is correct, do NOT re-gather information. Use the `Action "
+    "Payload` directly.\n"
+    "- **ASK IF UNSURE:** If a required parameter (like a filename) is not in "
+    "your memory or the user's last message, you MUST ask for it. Do not "
+    "guess."
+).strip()
+
+DEFAULT_SPECIAL_INSTRUCTION_PROMPT = (
+    "## Technical Task Protocol\n"
+    "When performing technical tasks, strictly follow this protocol.\n\n"
+    "**1. Guiding Principles**\n"
+    "Your work must be **Correct, Secure, and Readable**.\n\n"
+    "**2. Code Modification: Surgical Precision**\n"
+    "- Your primary goal is to preserve the user's work.\n"
+    "- Find the **exact start and end lines** you need to change.\n"
+    "- **ADD or MODIFY only those specific lines.** Do not touch any other "
+    "part of the file.\n"
+    "- Do not REPLACE a whole file unless the user explicitly tells you "
+    "to.\n\n"
+    "**3. Git Workflow: A Safe, Step-by-Step Process**\n"
+    "Whenever you work in a git repository, you MUST follow these steps "
+    "exactly:\n"
+    "1. **Check Status:** Run `git status` to ensure the working directory "
+    "is clean.\n"
+    "2. **Halt if Dirty:** If the directory is not clean, STOP. Inform the "
+    "user and wait for their instructions.\n"
+    "3. **Propose and Confirm Branch:**\n"
+    " - Tell the user you need to create a new branch and propose a "
+    "name.\n"
+    " - Example: 'I will create a branch named `feature/add-user-login`. "
+    "Is this okay?'\n"
+    " - **Wait for the user to say 'yes' or approve.**\n"
+    "4. **Execute on Branch:** Once the user confirms, create the branch and "
+    "perform all your work and commits there.\n\n"
+    "**4. Debugging Protocol**\n"
+    "1. **Hypothesize:** State the most likely cause of the bug in one "
+    "sentence.\n"
+    "2. **Solve:** Provide the targeted code fix and explain simply *why* "
+    "it works.\n"
+    "3. **Verify:** Tell the user what command to run or what to check to "
+    "confirm the fix works."
+).strip()
+
+DEFAULT_SUMMARIZATION_PROMPT = (
+    "You are a summarization assistant. Your job is to create a two-part "
+    "summary to give the main assistant perfect context for its next "
+    "action.\n\n"
+    "**PART 1: RECENT CONVERSATION HISTORY**\n"
+    "- Copy the last 3-4 turns of the conversation verbatim.\n"
+    "- Use the format `user:` and `assistant:`.\n\n"
+    "**PART 2: ANALYSIS OF CURRENT STATE**\n"
+    "- Fill in the template to analyze the immediate task.\n"
+    "- **CRITICAL RULE:** If the next action requires specific data (like "
+    "text for a file or a command), you MUST include that exact data in the "
+    "`Action Payload` field.\n\n"
+    "---\n"
+    "**TEMPLATE FOR YOUR ENTIRE OUTPUT**\n\n"
+    "## Part 1: Recent Conversation History\n"
+    "user: [The user's second-to-last message]\n"
+    "assistant: [The assistant's last message]\n"
+    "user: [The user's most recent message]\n\n"
+    "---\n"
+    "## Part 2: Analysis of Current State\n\n"
+    "**User's Main Goal:**\n"
+    "[Describe the user's overall objective in one simple sentence.]\n\n"
+    "**Next Action for Assistant:**\n"
+    "[Describe the immediate next step. Example: 'Write new content to the "
+    "README.md file.']\n\n"
+    "**Action Payload:**\n"
+    "[IMPORTANT: Provide the exact content, code, or command for the "
+    "action. If no data is needed, write 'None'.]\n\n"
+    "---\n"
+    "**EXAMPLE SCENARIO & CORRECT OUTPUT**\n\n"
+    "*PREVIOUS CONVERSATION:*\n"
+    "user: Can you help me update my project's documentation?\n"
+    "assistant: Of course. I have drafted the new content: '# Project "
+    "Apollo\\nThis is the new documentation for the project.' Do you "
+    "approve?\n"
+    "user: Yes, that looks great. Please proceed.\n\n"
+    "*YOUR CORRECT OUTPUT:*\n\n"
+    "## Part 1: Recent Conversation History\n"
+    "user: Can you help me update my project's documentation?\n"
+    "assistant: Of course. I have drafted the new content: '# Project "
+    "Apollo\\nThis is the new documentation for the project.' Do you "
+    "approve?\n"
+    "user: Yes, that looks great. Please proceed.\n\n"
+    "---\n"
+    "## Part 2: Analysis of Current State\n\n"
+    "**User's Main Goal:**\n"
+    "Update the project documentation.\n\n"
+    "**Next Action for Assistant:**\n"
+    "Write new content to the README.md file.\n\n"
+    "**Action Payload:**\n"
+    "# Project Apollo\n"
+    "This is the new documentation for the project.\n"
+    "---"
+).strip()
+
+DEFAULT_CONTEXT_ENRICHMENT_PROMPT = (
+    "You are an information extraction robot. Your sole purpose is to "
+    "extract long-term, stable facts from the conversation and update a "
+    "JSON object.\n\n"
+    "**DEFINITIONS:**\n"
+    "- **Stable Facts:** Information that does not change often. Examples: "
+    "user's name, project name, preferred programming language.\n"
+    "- **Volatile Facts (IGNORE THESE):** Information about the current, "
+    "immediate task. Examples: the user's last request, the next action to "
+    "take.\n\n"
+    "**CRITICAL RULES:**\n"
+    "1. Your ENTIRE response MUST be a single, valid JSON object. The root "
+    "object must contain a single key named 'response'.\n"
+    "2. DO NOT add any text, explanations, or markdown formatting before or "
+    "after the JSON object.\n"
+    "3. Your job is to update the JSON. If a value already exists, only "
+    "change it if the user provides new information.\n"
+    '4. If you cannot find a value for a key, use an empty string `""`. DO '
+    "NOT GUESS.\n\n"
+    "---\n"
+    "**JSON TEMPLATE TO FILL:**\n\n"
+    "Copy this exact structure. Only fill in values for stable facts you "
+    "find.\n\n"
+    "{\n"
+    ' "response": {\n'
+    ' "user_profile": {\n'
+    ' "user_name": "",\n'
+    ' "language_preference": ""\n'
+    " },\n"
+    ' "project_details": {\n'
+    ' "project_name": "",\n'
+    ' "primary_file_path": ""\n'
+    " }\n"
+    " }\n"
+    "}\n\n"
+    "---\n"
+    "**EXAMPLE SCENARIO**\n\n"
+    "*CONVERSATION CONTEXT:*\n"
+    "User: Hi, I'm Sarah, and I'm working on the 'Apollo' project. Let's fix "
+    "a bug in `src/auth.js`.\n"
+    "Assistant: Okay Sarah, let's look at `src/auth.js` from the 'Apollo' "
+    "project.\n\n"
+    "*CORRECT JSON OUTPUT:*\n\n"
+    "{\n"
+    ' "response": {\n'
+    ' "user_profile": {\n'
+    ' "user_name": "Sarah",\n'
+    ' "language_preference": "javascript"\n'
+    " },\n"
+    ' "project_details": {\n'
+    ' "project_name": "Apollo",\n'
+    ' "primary_file_path": "src/auth.js"\n'
+    " }\n"
+    " }\n"
+    "}"
+).strip()
 
 
 class LLMConfig:

zrb/llm_rate_limitter.py

CHANGED

@@ -3,9 +3,16 @@ import time
 from collections import deque
 from typing import Callable
 
+import tiktoken
+
 from zrb.config import CFG
 
 
+def _estimate_token(text: str) -> int:
+    enc = tiktoken.encoding_for_model("gpt-4o")
+    return len(enc.encode(text))
+
+
 class LLMRateLimiter:
     """
     Helper class to enforce LLM API rate limits and throttling.
@@ -53,10 +60,10 @@ class LLMRateLimiter:
         return CFG.LLM_THROTTLE_SLEEP
 
     @property
-    def
+    def count_token(self) -> Callable[[str], int]:
         if self._token_counter_fn is not None:
             return self._token_counter_fn
-        return
+        return _estimate_token
 
     def set_max_requests_per_minute(self, value: int):
         self._max_requests_per_minute = value
@@ -73,9 +80,22 @@ class LLMRateLimiter:
     def set_token_counter_fn(self, fn: Callable[[str], int]):
         self._token_counter_fn = fn
 
+    def clip_prompt(self, prompt: str, limit: int) -> str:
+        token_count = self.count_token(prompt)
+        if token_count <= limit:
+            return prompt
+        while token_count > limit:
+            prompt_parts = prompt.split(" ")
+            last_part_index = len(prompt_parts) - 2
+            clipped_prompt = " ".join(prompt_parts[:last_part_index])
+            token_count = self.count_token(clipped_prompt)
+            if token_count < limit:
+                return clipped_prompt
+        return prompt[:limit]
+
     async def throttle(self, prompt: str):
         now = time.time()
-        tokens = self.
+        tokens = self.count_token(prompt)
         # Clean up old entries
         while self.request_times and now - self.request_times[0] > 60:
             self.request_times.popleft()
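In the `clip_prompt` hunk of `zrb/llm_rate_limitter.py`, the loop re-splits the unchanged `prompt` on every pass, so as written it appears unable to shrink the text further after the first cut. The sketch below shows one way a word-level clipping loop can make progress on each iteration. It is an illustrative alternative, not the package's implementation: `clip_to_token_limit` is a hypothetical name, and the token counter is passed in rather than taken from `tiktoken`.

```python
from typing import Callable


def clip_to_token_limit(
    prompt: str, limit: int, count_token: Callable[[str], int]
) -> str:
    """Drop trailing space-separated words until the prompt fits the limit."""
    if count_token(prompt) <= limit:
        return prompt
    words = prompt.split(" ")
    while words and count_token(" ".join(words)) > limit:
        words.pop()  # shrink the working copy so each pass makes progress
    return " ".join(words)
```

This version recounts tokens after every removed word, which is simple but quadratic in the worst case; a binary search over the word count would reduce the number of counter calls for very long prompts.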

zrb/task/llm/agent.py

CHANGED

|

@@ -16,6 +16,8 @@ else:

|

|

|

16

16

|

Model = Any

|

|

17

17

|

ModelSettings = Any

|

|

18

18

|

|

|

19

|

+

import json

|

|

20

|

+

|

|

19

21

|

from zrb.context.any_context import AnyContext

|

|

20

22

|

from zrb.context.any_shared_context import AnySharedContext

|

|

21

23

|

from zrb.llm_rate_limitter import LLMRateLimiter, llm_rate_limitter

|

|

@@ -113,6 +115,7 @@ async def run_agent_iteration(

|

|

|

113

115

|

user_prompt: str,

|

|

114

116

|

history_list: ListOfDict,

|

|

115

117

|

rate_limitter: LLMRateLimiter | None = None,

|

|

118

|

+

max_retry: int = 2,

|

|

116

119

|

) -> AgentRun:

|

|

117

120

|

"""

|

|

118

121

|

Runs a single iteration of the agent execution loop.

|

|

@@ -129,13 +132,40 @@ async def run_agent_iteration(
     Raises:
         Exception: If any error occurs during agent execution.
     """
+    if max_retry < 0:
+        raise ValueError("Max retry cannot be less than 0")
+    attempt = 0
+    while attempt < max_retry:
+        try:
+            return await _run_single_agent_iteration(
+                ctx=ctx,
+                agent=agent,
+                user_prompt=user_prompt,
+                history_list=history_list,
+                rate_limitter=rate_limitter,
+            )
+        except BaseException:
+            attempt += 1
+            if attempt == max_retry:
+                raise
+    raise Exception("Max retry exceeded")
+
+
+async def _run_single_agent_iteration(
+    ctx: AnyContext,
+    agent: Agent,
+    user_prompt: str,
+    history_list: ListOfDict,
+    rate_limitter: LLMRateLimiter | None = None,
+) -> AgentRun:
     from openai import APIError
     from pydantic_ai.messages import ModelMessagesTypeAdapter

+    agent_payload = estimate_request_payload(agent, user_prompt, history_list)
     if rate_limitter:
-        await rate_limitter.throttle(
+        await rate_limitter.throttle(agent_payload)
     else:
-        await llm_rate_limitter.throttle(
+        await llm_rate_limitter.throttle(agent_payload)

     async with agent.run_mcp_servers():
         async with agent.iter(
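The retry loop added in this hunk can be exercised in isolation. The sketch below is a standalone approximation, not zrb's code: `run_with_retry` and `make_flaky_call` are hypothetical names introduced for illustration. It mirrors the loop's control flow, including the fact that `max_retry` counts total attempts, so `max_retry=0` skips the loop and reaches the final `raise`.

```python
import asyncio


async def run_with_retry(call, max_retry: int = 2):
    """Await call() up to max_retry times, re-raising the last failure."""
    if max_retry < 0:
        raise ValueError("Max retry cannot be less than 0")
    attempt = 0
    while attempt < max_retry:
        try:
            return await call()
        except BaseException:
            attempt += 1
            if attempt == max_retry:
                raise
    # Only reached when max_retry == 0: the loop body never runs.
    raise Exception("Max retry exceeded")


def make_flaky_call(failures: int):
    """Hypothetical helper: build a coroutine function that fails
    `failures` times and then returns the number of calls made."""
    state = {"calls": 0}

    async def flaky_call():
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RuntimeError(f"transient failure #{state['calls']}")
        return state["calls"]

    return flaky_call


# One failure followed by a success fits in the default budget of two attempts.
result = asyncio.run(run_with_retry(make_flaky_call(failures=1)))
print(result)
```

Because the loop catches `BaseException`, task cancellation and `KeyboardInterrupt` are retried as well; catching `Exception` would be the narrower choice if that is not intended.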

@@ -159,6 +189,19 @@ async def run_agent_iteration(
             return agent_run


+def estimate_request_payload(
+    agent: Agent, user_prompt: str, history_list: ListOfDict
+) -> str:
+    system_prompts = agent._system_prompts if hasattr(agent, "_system_prompts") else ()
+    return json.dumps(
+        [
+            {"role": "system", "content": "\n".join(system_prompts)},
+            *history_list,
+            {"role": "user", "content": user_prompt},
+        ]
+    )
+
+
 def _get_plain_printer(ctx: AnyContext):
     def printer(*args, **kwargs):
         if "plain" not in kwargs:
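To see what `estimate_request_payload` produces, the sketch below calls an equivalent function on a stand-in object. `SimpleNamespace` replaces the real `Agent`, and the sample prompts and history are made up for illustration. The character count at the end is only a rough size indicator and is not a claim about how zrb's rate limiter measures the payload.

```python
import json
from types import SimpleNamespace


def estimate_request_payload(agent, user_prompt: str, history_list: list) -> str:
    # Same shape as the function in the hunk above, without zrb's type imports.
    system_prompts = agent._system_prompts if hasattr(agent, "_system_prompts") else ()
    return json.dumps(
        [
            {"role": "system", "content": "\n".join(system_prompts)},
            *history_list,
            {"role": "user", "content": user_prompt},
        ]
    )


# Stand-in for the agent (assumption: only _system_prompts is read from it).
fake_agent = SimpleNamespace(_system_prompts=("You are helpful.", "Be brief."))
history = [{"role": "assistant", "content": "Hi, how can I help?"}]

payload = estimate_request_payload(fake_agent, "Summarize this repo", history)
messages = json.loads(payload)
print([m["role"] for m in messages])
print(len(payload), "characters")
```

The function reads a private attribute (`_system_prompts`) behind a `hasattr` guard, so an agent object without that attribute yields an empty system message rather than an error.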

{zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/METADATA

CHANGED

@@ -1,6 +1,6 @@
 Metadata-Version: 2.1
 Name: zrb
-Version: 1.8.
+Version: 1.8.10
 Summary: Your Automation Powerhouse
 Home-page: https://github.com/state-alchemists/zrb
 License: AGPL-3.0-or-later
@@ -19,18 +19,19 @@ Provides-Extra: rag
 Requires-Dist: beautifulsoup4 (>=4.13.3,<5.0.0)
 Requires-Dist: black (>=25.1.0,<25.2.0)
 Requires-Dist: chromadb (>=0.6.3,<0.7.0) ; extra == "rag" or extra == "all"
-Requires-Dist: fastapi[standard] (>=0.115.
+Requires-Dist: fastapi[standard] (>=0.115.14,<0.116.0)
 Requires-Dist: isort (>=6.0.1,<6.1.0)
 Requires-Dist: libcst (>=1.7.0,<2.0.0)
-Requires-Dist: openai (>=1.
+Requires-Dist: openai (>=1.86.0,<2.0.0) ; extra == "rag" or extra == "all"
 Requires-Dist: pdfplumber (>=0.11.6,<0.12.0) ; extra == "rag" or extra == "all"
-Requires-Dist: playwright (>=1.
+Requires-Dist: playwright (>=1.53.0,<2.0.0) ; extra == "playwright" or extra == "all"
 Requires-Dist: psutil (>=7.0.0,<8.0.0)
-Requires-Dist: pydantic-ai (>=0.
+Requires-Dist: pydantic-ai (>=0.3.4,<0.4.0)
 Requires-Dist: pyjwt (>=2.10.1,<3.0.0)
-Requires-Dist: python-dotenv (>=1.1.
+Requires-Dist: python-dotenv (>=1.1.1,<2.0.0)
 Requires-Dist: python-jose[cryptography] (>=3.4.0,<4.0.0)
 Requires-Dist: requests (>=2.32.4,<3.0.0)
+Requires-Dist: tiktoken (>=0.8.0,<0.9.0)
 Requires-Dist: ulid-py (>=1.1.0,<2.0.0)
 Project-URL: Documentation, https://github.com/state-alchemists/zrb
 Project-URL: Repository, https://github.com/state-alchemists/zrb
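Each `Requires-Dist` entry above pins a half-open range: at least the lower bound, strictly below the upper bound. The sketch below checks that reading with plain tuple comparison. `parse_version` and `in_range` are hypothetical helpers that handle only dotted numeric versions, not the full version grammar that real installers implement.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '0.8.0' into (0, 8, 0). Numeric dotted versions only."""
    return tuple(int(part) for part in text.split("."))


def in_range(version: str, lower: str, upper: str) -> bool:
    """True when lower <= version < upper, as in '(>=lower,<upper)'."""
    return parse_version(lower) <= parse_version(version) < parse_version(upper)


# tiktoken (>=0.8.0,<0.9.0), the dependency added in this release
print(in_range("0.8.0", "0.8.0", "0.9.0"))   # True
print(in_range("0.9.0", "0.8.0", "0.9.0"))   # False
# pydantic-ai (>=0.3.4,<0.4.0)
print(in_range("0.3.10", "0.3.4", "0.4.0"))  # True
```

Comparing integer tuples rather than strings matters for the last case: as strings, `"0.3.10"` sorts before `"0.3.4"`.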

@@ -81,9 +82,11 @@ Or run our installation script to set up Zrb along with all prerequisites:
 bash -c "$(curl -fsSL https://raw.githubusercontent.com/state-alchemists/zrb/main/install.sh)"
 ```

+You can also [run Zrb as container](https://github.com/state-alchemists/zrb?tab=readme-ov-file#-run-zrb-as-a-container)
+
 # 🍲 Quick Start: Build Your First Automation Workflow

-Zrb empowers you to create custom automation tasks using Python. This guide shows you how to define two simple tasks: one to generate a
+Zrb empowers you to create custom automation tasks using Python. This guide shows you how to define two simple tasks: one to generate a Mermaid script from your source code and another to convert that script into a PNG image.

 ## 1. Create Your Task Definition File

@@ -92,59 +95,61 @@ Place a file named `zrb_init.py` in a directory that's accessible from your proj
 Add the following content to your zrb_init.py:

 ```python
-import os
 from zrb import cli, LLMTask, CmdTask, StrInput, Group
-from zrb.builtin.llm.tool.
-
-)
-
+from zrb.builtin.llm.tool.code import analyze_repo
+from zrb.builtin.llm.tool.file import write_to_file

-CURRENT_DIR = os.getcwd()

-# Create a group for
-
+# Create a group for Mermaid-related tasks
+mermaid_group = cli.add_group(Group(name="mermaid", description="🧜 Mermaid diagram related tasks"))

-# Task 1: Generate a
-
+# Task 1: Generate a Mermaid script from your source code
+make_mermaid_script = mermaid_group.add_task(
     LLMTask(
         name="make-script",
-        description="Creating
-        input=
+        description="Creating mermaid diagram based on source code in current directory",
+        input=[
+            StrInput(name="dir", default="./"),
+            StrInput(name="diagram", default="state-diagram"),
+        ],
         message=(
-
-            "make a {ctx.input.diagram} in
-
+            "Read all necessary files in {ctx.input.dir}, "
+            "make a {ctx.input.diagram} in mermaid format. "
+            "Write the script into `{ctx.input.dir}/{ctx.input.diagram}.mmd`"
         ),
         tools=[
-
+            analyze_repo, write_to_file
         ],
     )
 )

-# Task 2: Convert the
-
+# Task 2: Convert the Mermaid script into a PNG image
+make_mermaid_image = mermaid_group.add_task(
     CmdTask(
         name="make-image",
         description="Creating png based on source code in current directory",
-        input=
-
-
+        input=[
+            StrInput(name="dir", default="./"),
+            StrInput(name="diagram", default="state-diagram"),
+        ],
+        cmd="mmdc -i '{ctx.input.diagram}.mmd' -o '{ctx.input.diagram}.png'",
+        cwd="{ctx.input.dir}",
     )
 )

 # Set up the dependency: the image task runs after the script is created
-
+make_mermaid_script >> make_mermaid_image
 ```

 **What This Does**

 - **Task 1 – make-script**:

-Uses an LLM to read all files in your current directory and generate a
+Uses an LLM to read all files in your current directory and generate a Mermaid script (e.g., `state diagram.mmd`).

 - **Task 2 – make-image**:

-Executes a command that converts the
+Executes a command that converts the Mermaid script into a PNG image (e.g., `state diagram.png`). This task will run only after the script has been generated.


 ## 2. Run Your Tasks

@@ -161,18 +166,19 @@ After setting up your tasks, you can execute them from any project. For example:
 - Create a state diagram:

 ```bash
-zrb
+zrb mermaid make-image --diagram "state diagram" --dir ./
 ```

 - Or use the interactive mode:

 ```bash
-zrb
+zrb mermaid make-image
 ```

 Zrb will prompt:

 ```bash
+dir [./]:
 diagram [state diagram]:
 ```

@@ -194,7 +200,7 @@ Then open your browser and visit `http://localhost:21213`



-# 🐋
+# 🐋 Run Zrb as a Container

 Zrb can be run in a containerized environment, offering two distinct versions to suit different needs:

{zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/RECORD

CHANGED

@@ -17,10 +17,10 @@ zrb/builtin/llm/previous-session.js,sha256=xMKZvJoAbrwiyHS0OoPrWuaKxWYLoyR5sgueP
 zrb/builtin/llm/tool/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/builtin/llm/tool/api.py,sha256=yR9I0ZsI96OeQl9pgwORMASVuXsAL0a89D_iPS4C8Dc,1699
 zrb/builtin/llm/tool/cli.py,sha256=_CNEmEc6K2Z0i9ppYeM7jGpqaEdT3uxaWQatmxP3jKE,858
-zrb/builtin/llm/tool/code.py,sha256=
-zrb/builtin/llm/tool/file.py,sha256=
+zrb/builtin/llm/tool/code.py,sha256=gvRnimUh5kWqmpiYtJvEm6KDZhQArqhwAAkKI1_UapY,8133
+zrb/builtin/llm/tool/file.py,sha256=ufLCAaHB0JkEAqQS4fbM9OaTfLluqlCuSyMmnYhI0rY,18491
 zrb/builtin/llm/tool/rag.py,sha256=yqx7vXXyrOCJjhQJl4s0TnLL-2uQUTuKRnkWlSQBW0M,7883
-zrb/builtin/llm/tool/sub_agent.py,sha256=
+zrb/builtin/llm/tool/sub_agent.py,sha256=GPHD8hLlIfme0h1Q0zzMUuAc2HiKl8CRqWGNcgE_H1Q,4764
 zrb/builtin/llm/tool/web.py,sha256=pXRLhcB_Y6z-2w4C4WezH8n-pg3PSMgt_bwn3aaqi6g,5479
 zrb/builtin/md5.py,sha256=690RV2LbW7wQeTFxY-lmmqTSVEEZv3XZbjEUW1Q3XpE,1480
 zrb/builtin/project/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
@@ -170,7 +170,7 @@ zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/static/pico-css/pico.yellow.min.css,sha256=_UXLKrhEsXonQ-VthBNB7zHUEcV67KDAE-SiDR1SrlU,83371
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/static/pico-css/pico.zinc.min.css,sha256=C9KHUa2PomYXZg2-rbpDPDYkuL_ZZTwFS-uj1Zo7azE,83337
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/template/default.html,sha256=Lg4vONCLOx8PSORFitg8JZa-4dp-pyUvKSVzCJEFsB8,4048
-zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/requirements.txt,sha256=
+zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/requirements.txt,sha256=cV16jm4MpHK020eLm9ityrVBEZgJw0WvwrphOA-2lAE,195
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/permission.py,sha256=q66LXdZ-QTb30F1VTXNLnjyYBlK_ThVLiHgavtJy4LY,1424
 zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/role.py,sha256=7USbuhHhPc3xXkmwiqTVKsN8-eFWS8Q7emKxCGNGPw0,3244
@@ -217,7 +217,7 @@ zrb/callback/callback.py,sha256=PFhCqzfxdk6IAthmXcZ13DokT62xtBzJr_ciLw6I8Zg,4030
 zrb/cmd/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/cmd/cmd_result.py,sha256=L8bQJzWCpcYexIxHBNsXj2pT3BtLmWex0iJSMkvimOA,597
 zrb/cmd/cmd_val.py,sha256=7Doowyg6BK3ISSGBLt-PmlhzaEkBjWWm51cED6fAUOQ,1014
-zrb/config.py,sha256=
+zrb/config.py,sha256=qFtVVme30fMyi5x_mgvvULczNbORqK8ZEN8agXokXO4,10222
 zrb/content_transformer/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/content_transformer/any_content_transformer.py,sha256=v8ZUbcix1GGeDQwB6OKX_1TjpY__ksxWVeqibwa_iZA,850
 zrb/content_transformer/content_transformer.py,sha256=STl77wW-I69QaGzCXjvkppngYFLufow8ybPLSyAvlHs,2404
@@ -246,8 +246,8 @@ zrb/input/option_input.py,sha256=TQB82ko5odgzkULEizBZi0e9TIHEbIgvdP0AR3RhA74,213
 zrb/input/password_input.py,sha256=szBojWxSP9QJecgsgA87OIYwQrY2AQ3USIKdDZY6snU,1465
 zrb/input/str_input.py,sha256=NevZHX9rf1g8eMatPyy-kUX3DglrVAQpzvVpKAzf7bA,81
 zrb/input/text_input.py,sha256=6T3MngWdUs0u0ZVs5Dl11w5KS7nN1RkgrIR_zKumzPM,3695
-zrb/llm_config.py,sha256=
-zrb/llm_rate_limitter.py,sha256=
+zrb/llm_config.py,sha256=7xp4mhre3ULSzfyuqinXXMigNOYNcemVEgPIiWTNdMk,16875
+zrb/llm_rate_limitter.py,sha256=rFYJU2ngo1Hk3aSODsgFIpANI95qZydUvZ4WHOmdbHQ,4376
 zrb/runner/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/runner/cli.py,sha256=AbLTNqFy5FuyGQOWOjHZGaBC8e2yuE_Dx1sBdnisR18,6984
 zrb/runner/common_util.py,sha256=JDMcwvQ8cxnv9kQrAoKVLA40Q1omfv-u5_d5MvvwHeE,1373
@@ -337,7 +337,7 @@ zrb/task/base_trigger.py,sha256=WSGcmBcGAZw8EzUXfmCjqJQkz8GEmi1RzogpF6A1V4s,6902
 zrb/task/cmd_task.py,sha256=irGi0txTcsvGhxjfem4_radR4csNXhgtfcxruSF1LFI,10853
 zrb/task/http_check.py,sha256=Gf5rOB2Se2EdizuN9rp65HpGmfZkGc-clIAlHmPVehs,2565
 zrb/task/llm/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
-zrb/task/llm/agent.py,sha256=
+zrb/task/llm/agent.py,sha256=pwGFkRSQ-maH92QaJr2dgfQhEiwFVK5XnyK4OYBJm4c,6782
 zrb/task/llm/config.py,sha256=Gb0lSHCgGXOAr7igkU7k_Ew5Yp_wOTpNQyZrLrtA7oc,3521
 zrb/task/llm/context.py,sha256=U9a8lxa2ikz6my0Sd5vpO763legHrMHyvBjbrqNmv0Y,3838
 zrb/task/llm/context_enrichment.py,sha256=BlW2CjSUsKJT8EZBXYxOE4MEBbRCoO34PlQQdzA-zBM,7201
@@ -390,7 +390,7 @@ zrb/util/string/name.py,sha256=SXEfxJ1-tDOzHqmSV8kvepRVyMqs2XdV_vyoh_9XUu0,1584
 zrb/util/todo.py,sha256=VGISej2KQZERpornK-8X7bysp4JydMrMUTnG8B0-liI,20708
 zrb/xcom/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/xcom/xcom.py,sha256=o79rxR9wphnShrcIushA0Qt71d_p3ZTxjNf7x9hJB78,1571
-zrb-1.8.
-zrb-1.8.
-zrb-1.8.
-zrb-1.8.
+zrb-1.8.10.dist-info/METADATA,sha256=cFdiCmF4a4s7-jxcMZtXxhjG8L4hZO8-YBw_DecGSQQ,10108
+zrb-1.8.10.dist-info/WHEEL,sha256=sP946D7jFCHeNz5Iq4fL4Lu-PrWrFsgfLXbbkciIZwg,88
+zrb-1.8.10.dist-info/entry_points.txt,sha256=-Pg3ElWPfnaSM-XvXqCxEAa-wfVI6BEgcs386s8C8v8,46
+zrb-1.8.10.dist-info/RECORD,,

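{zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/WHEEL

File without changes

{zrb-1.8.8.dist-info → zrb-1.8.10.dist-info}/entry_points.txt

File without changes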