zrb 1.4.1__py3-none-any.whl → 1.4.3__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of zrb might be problematic.

- zrb/builtin/llm/llm_chat.py +7 -6

- zrb/builtin/llm/tool/api.py +1 -1

- zrb/builtin/llm/tool/file.py +4 -2

- zrb/builtin/llm/tool/rag.py +28 -10

- zrb/builtin/llm/tool/web.py +55 -15

- zrb/config.py +4 -13

- zrb/llm_config.py +64 -12

- zrb/task/llm_task.py +36 -19

- {zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/METADATA +66 -46

- {zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/RECORD +12 -12

- {zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/WHEEL +0 -0

- {zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/entry_points.txt +0 -0

zrb/builtin/llm/llm_chat.py

CHANGED

```diff
@@ -22,7 +22,6 @@ from zrb.config import (
 LLM_ALLOW_ACCESS_LOCAL_FILE,
 LLM_ALLOW_ACCESS_SHELL,
 LLM_HISTORY_DIR,
-LLM_SYSTEM_PROMPT,
 SERP_API_KEY,
 )
 from zrb.context.any_shared_context import AnySharedContext
@@ -119,7 +118,7 @@ llm_chat: LLMTask = llm_group.add_task(
 "system-prompt",
 description="System prompt",
 prompt="System prompt",
-default=
+default="",
 allow_positional_parsing=False,
 always_prompt=False,
 ),
@@ -141,17 +140,19 @@ llm_chat: LLMTask = llm_group.add_task(
 always_prompt=False,
 ),
 ],
-model=lambda ctx: None if ctx.input.model == "" else ctx.input.model,
+model=lambda ctx: None if ctx.input.model.strip() == "" else ctx.input.model,
 model_base_url=lambda ctx: (
-None if ctx.input.base_url == "" else ctx.input.base_url
+None if ctx.input.base_url.strip() == "" else ctx.input.base_url
 ),
 model_api_key=lambda ctx: (
-None if ctx.input.api_key == "" else ctx.input.api_key
+None if ctx.input.api_key.strip() == "" else ctx.input.api_key
 ),
 conversation_history_reader=_read_chat_conversation,
 conversation_history_writer=_write_chat_conversation,
 description="Chat with LLM",
-system_prompt=
+system_prompt=lambda ctx: (
+None if ctx.input.system_prompt.strip() == "" else ctx.input.system_prompt
+),
 message="{ctx.input.message}",
 retries=0,
 ),
```
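In 1.4.3, `llm_chat.py` treats whitespace-only input the same as empty input. A blank `model`, `base_url`, `api_key`, or `system_prompt` therefore becomes `None` and falls through to the configured default. The sketch below shows that pattern as a standalone helper. `blank_to_none` is a hypothetical name, not part of zrb:

```python
from typing import Optional


def blank_to_none(value: str) -> Optional[str]:
    """Map empty or whitespace-only input to None; return anything else unchanged.

    Hypothetical helper mirroring the 1.4.3 lambdas in llm_chat.py, e.g.
    `None if ctx.input.model.strip() == "" else ctx.input.model`.
    """
    # Only the emptiness test looks at the stripped text; a non-blank value is
    # returned exactly as given, surrounding whitespace included.
    return None if value.strip() == "" else value


print(blank_to_none(""))          # None
print(blank_to_none("   \t"))     # None
print(blank_to_none("my-model"))  # my-model
```

The 1.4.1 check (`== ""`) did not treat an input such as `"   "` as empty, so it was forwarded as the model name, base URL, or API key.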

zrb/builtin/llm/tool/api.py

CHANGED

```diff
@@ -5,7 +5,7 @@ from typing import Annotated, Literal
 def get_current_location() -> (
 Annotated[str, "JSON string representing latitude and longitude"]
 ): # noqa
-"""Get the user's current location."""
+"""Get the user's current location. This function take no argument."""
 import requests

 return json.dumps(requests.get("http://ip-api.com/json?fields=lat,lon").json())
```

zrb/builtin/llm/tool/file.py

CHANGED

```diff
@@ -93,6 +93,7 @@ def list_files(
 """List all files in a directory that match any of the included glob patterns
 and do not reside in any directory matching an excluded pattern.
 Patterns are evaluated using glob-style matching.
+included_patterns and excluded_patterns already has sane default values.
 """
 all_files: list[str] = []
 for root, dirs, files in os.walk(directory):
@@ -127,12 +128,12 @@ def _should_exclude(full_path: str, excluded_patterns: list[str]) -> bool:


 def read_text_file(file: str) -> str:
-"""Read a text file"""
+"""Read a text file and return a string containing the file content."""
 return read_file(os.path.abspath(file))


 def write_text_file(file: str, content: str):
-"""Write a text file"""
+"""Write content to a text file"""
 return write_file(os.path.abspath(file), content)


@@ -144,6 +145,7 @@ def read_all_files(
 """Read all files in a directory that match any of the included glob patterns
 and do not match any of the excluded glob patterns.
 Patterns are evaluated using glob-style matching.
+included_patterns and excluded_patterns already has sane default values.
 """
 files = list_files(directory, included_patterns, excluded_patterns)
 for index, file in enumerate(files):
```
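The `list_files` docstring describes the filtering rule: a file is kept when it matches an included glob pattern and does not sit inside a directory that matches an excluded pattern. The stdlib-only sketch below implements that rule. It assumes patterns are matched with `fnmatch` against the file name and against each parent-directory name. zrb's own `_should_exclude` body is not shown in this diff, and `is_included` and `list_matching_files` are hypothetical names:

```python
import fnmatch
import os
from typing import List, Sequence


def is_included(rel_path: str, included: Sequence[str], excluded: Sequence[str]) -> bool:
    """Keep a file if its name matches an included pattern and none of its
    parent directories matches an excluded pattern (hypothetical helper)."""
    parts = rel_path.replace(os.sep, "/").split("/")
    parent_dirs, filename = parts[:-1], parts[-1]
    # Reject files that live under an excluded directory.
    if any(fnmatch.fnmatch(d, pat) for d in parent_dirs for pat in excluded):
        return False
    # Accept the file only if its name matches at least one included pattern.
    return any(fnmatch.fnmatch(filename, pat) for pat in included)


def list_matching_files(
    directory: str, included: Sequence[str], excluded: Sequence[str]
) -> List[str]:
    """Walk `directory` and return matching paths relative to it, sorted."""
    matches: List[str] = []
    for root, dirs, files in os.walk(directory):
        # Prune excluded directories in place so os.walk never descends into them.
        dirs[:] = [d for d in dirs if not any(fnmatch.fnmatch(d, p) for p in excluded)]
        for name in files:
            rel = os.path.relpath(os.path.join(root, name), directory)
            if is_included(rel, included, excluded):
                matches.append(rel.replace(os.sep, "/"))
    return sorted(matches)
```

Pruning `dirs` in place is the usual `os.walk` idiom for skipping whole subtrees, such as a large `node_modules` directory, rather than visiting every file and filtering afterwards.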

zrb/builtin/llm/tool/rag.py

CHANGED

```diff
@@ -9,6 +9,8 @@ import ulid

 from zrb.config import (
 RAG_CHUNK_SIZE,
+RAG_EMBEDDING_API_KEY,
+RAG_EMBEDDING_BASE_URL,
 RAG_EMBEDDING_MODEL,
 RAG_MAX_RESULT_COUNT,
 RAG_OVERLAP,
@@ -35,24 +37,34 @@ def create_rag_from_directory(
 tool_name: str,
 tool_description: str,
 document_dir_path: str = "./documents",
-model: str = RAG_EMBEDDING_MODEL,
 vector_db_path: str = "./chroma",
 vector_db_collection: str = "documents",
 chunk_size: int = RAG_CHUNK_SIZE,
 overlap: int = RAG_OVERLAP,
 max_result_count: int = RAG_MAX_RESULT_COUNT,
 file_reader: list[RAGFileReader] = [],
+openai_api_key: str = RAG_EMBEDDING_API_KEY,
+openai_base_url: str = RAG_EMBEDDING_BASE_URL,
+openai_embedding_model: str = RAG_EMBEDDING_MODEL,
 ):
 async def retrieve(query: str) -> str:
 from chromadb import PersistentClient
 from chromadb.config import Settings
-from
-
-
-
+from openai import OpenAI
+
+# Initialize OpenAI client with custom URL if provided
+client_args = {}
+if openai_api_key:
+client_args["api_key"] = openai_api_key
+if openai_base_url:
+client_args["base_url"] = openai_base_url
+# Initialize OpenAI client for embeddings
+openai_client = OpenAI(**client_args)
+# Initialize ChromaDB client
+chroma_client = PersistentClient(
 path=vector_db_path, settings=Settings(allow_reset=True)
 )
-collection =
+collection = chroma_client.get_or_create_collection(vector_db_collection)
 # Track file changes using a hash-based approach
 hash_file_path = os.path.join(vector_db_path, "file_hashes.json")
 previous_hashes = _load_hashes(hash_file_path)
@@ -89,8 +101,11 @@ def create_rag_from_directory(
 ),
 file=sys.stderr,
 )
-
-
+# Get embeddings using OpenAI
+embedding_response = openai_client.embeddings.create(
+input=chunk, model=openai_embedding_model
+)
+vector = embedding_response.data[0].embedding
 collection.upsert(
 ids=[chunk_id],
 embeddings=[vector],
@@ -113,8 +128,11 @@ def create_rag_from_directory(
 )
 # Vectorize query and get related document chunks
 print(stylize_faint("Vectorizing query"), file=sys.stderr)
-
-
+# Get embeddings using OpenAI
+embedding_response = openai_client.embeddings.create(
+input=query, model=openai_embedding_model
+)
+query_vector = embedding_response.data[0].embedding
 print(stylize_faint("Searching documents"), file=sys.stderr)
 results = collection.query(
 query_embeddings=query_vector,
```
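In 1.4.3, the RAG tool builds its embedding client from optional settings (`RAG_EMBEDDING_API_KEY`, `RAG_EMBEDDING_BASE_URL`) and forwards only the ones that are set. That argument-building step can be sketched without installing the `openai` package. `build_openai_client_args` is a hypothetical helper that reproduces the `client_args` logic from the diff, and the localhost URL is an invented example value:

```python
from typing import Dict, Optional


def build_openai_client_args(
    api_key: Optional[str], base_url: Optional[str]
) -> Dict[str, str]:
    """Collect only the client options that are actually set (hypothetical helper).

    Mirrors the `client_args` logic added to rag.py: a value is forwarded only
    when it is truthy, so both None and "" are left out.
    """
    client_args: Dict[str, str] = {}
    if api_key:
        client_args["api_key"] = api_key
    if base_url:
        client_args["base_url"] = base_url
    return client_args


# Hypothetical values: an OpenAI-compatible server on localhost, no key configured.
print(build_openai_client_args(None, "http://localhost:8080/v1"))
# {'base_url': 'http://localhost:8080/v1'}
```

In `rag.py` the dict is passed as `OpenAI(**client_args)`. Each chunk, and later the query, is embedded with `openai_client.embeddings.create(input=..., model=openai_embedding_model)`, and the vector is read from `.data[0].embedding`. Any option left out falls back to the `openai` client's own defaults, which include the `OPENAI_API_KEY` and `OPENAI_BASE_URL` environment variables.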

zrb/builtin/llm/tool/web.py

CHANGED

```diff
@@ -3,21 +3,61 @@ from collections.abc import Callable
 from typing import Annotated


-def open_web_page(url: str) -> str:
-"""Get content from a web page."""
-
-
-
-
-
-
-
-
-
-
-
-
-
+async def open_web_page(url: str) -> str:
+"""Get content from a web page using a headless browser."""
+
+async def get_page_content(page_url: str):
+try:
+from playwright.async_api import async_playwright
+
+async with async_playwright() as p:
+browser = await p.chromium.launch(headless=True)
+page = await browser.new_page()
+# Set user agent to mimic a regular browser
+user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
+user_agent += "AppleWebKit/537.36 (KHTML, like Gecko) "
+user_agent += "Chrome/91.0.4472.124 Safari/537.36"
+await page.set_extra_http_headers({"User-Agent": user_agent})
+try:
+# Navigate to the URL with a timeout of 30 seconds
+await page.goto(page_url, wait_until="networkidle", timeout=30000)
+# Wait for the content to load
+await page.wait_for_load_state("domcontentloaded")
+# Get the page content
+content = await page.content()
+# Extract all links from the page
+links = await page.eval_on_selector_all(
+"a[href]",
+"""
+(elements) => elements.map(el => {
+const href = el.getAttribute('href');
+if (href && !href.startsWith('#') && !href.startsWith('/')) {
+return href;
+}
+return null;
+}).filter(href => href !== null)
+""",
+)
+return {"content": content, "links_on_page": links}
+finally:
+await browser.close()
+except ImportError:
+import requests
+
+response = requests.get(
+url,
+headers={
+"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36" # noqa
+},
+)
+if response.status_code != 200:
+msg = f"Unable to retrieve search results. Status code: {response.status_code}"
+raise Exception(msg)
+return {"content": response.text, "links_on_page": []}
+
+result = await get_page_content(url)
+# Parse the HTML content
+return json.dumps(parse_html_text(result["content"]))


 def create_search_internet_tool(serp_api_key: str) -> Callable[[str, int], str]:
```
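The JavaScript passed to `eval_on_selector_all` decides which links end up in `links_on_page`. Restating the same rule in Python makes its edge cases easier to see. `filter_links` is a hypothetical name, and the function illustrates the rule rather than being code zrb runs:

```python
from typing import Iterable, List, Optional


def filter_links(hrefs: Iterable[Optional[str]]) -> List[str]:
    """Python rendering of the link filter in web.py's eval_on_selector_all script.

    Keeps an href only if it is non-empty and starts with neither '#' nor '/'.
    """
    return [
        href
        for href in hrefs
        # Drops missing/empty values, in-page anchors ("#top") and anything
        # starting with "/", which includes root-relative ("/about") and
        # protocol-relative ("//cdn.example.com/x.js") URLs.
        if href and not href.startswith("#") and not href.startswith("/")
    ]


print(filter_links([
    "https://example.com/docs", "#top", "/about", "", None,
    "page.html", "//cdn.example.com/x.js", "mailto:hi@example.com",
]))
# ['https://example.com/docs', 'page.html', 'mailto:hi@example.com']
```

Document-relative links such as `page.html` pass the filter without being resolved against the page URL. Also, as of this diff `open_web_page` returns only `json.dumps(parse_html_text(result["content"]))`, so `links_on_page` is collected but never included in the tool's output. The `requests` fallback, used when `playwright` is not installed, always reports an empty link list.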

zrb/config.py

CHANGED

```diff
@@ -76,15 +76,6 @@ WEB_AUTH_REFRESH_TOKEN_EXPIRE_MINUTES = int(
 os.getenv("ZRB_WEB_REFRESH_TOKEN_EXPIRE_MINUTES", "60")
 )

-_DEFAULT_PROMPT = (
-"You are a helpful AI assistant capable of using various tools to answer user queries. When solving a problem:\n"
-"1. Carefully analyze the user's request and identify what information is needed to provide a complete answer.\n"
-"2. Determine which available tools can help you gather the necessary information.\n"
-"3. Call tools strategically and in a logical sequence to collect required data.\n"
-"4. If a tool provides incomplete information, intelligently decide which additional tool or approach to use.\n"
-"5. Always aim to provide the most accurate and helpful response possible."
-)
-LLM_SYSTEM_PROMPT = os.getenv("ZRB_LLM_SYSTEM_PROMPT", _DEFAULT_PROMPT)
 LLM_HISTORY_DIR = os.getenv(
 "ZRB_LLM_HISTORY_DIR", os.path.expanduser(os.path.join("~", ".zrb-llm-history"))
 )
@@ -94,10 +85,10 @@ LLM_HISTORY_FILE = os.getenv(
 LLM_ALLOW_ACCESS_LOCAL_FILE = to_boolean(os.getenv("ZRB_LLM_ACCESS_LOCAL_FILE", "1"))
 LLM_ALLOW_ACCESS_SHELL = to_boolean(os.getenv("ZRB_LLM_ACCESS_SHELL", "1"))
 LLM_ALLOW_ACCESS_INTERNET = to_boolean(os.getenv("ZRB_LLM_ACCESS_INTERNET", "1"))
-#
-
-
-)
+# RAG Configuration
+RAG_EMBEDDING_API_KEY = os.getenv("ZRB_RAG_EMBEDDING_API_KEY", None)
+RAG_EMBEDDING_BASE_URL = os.getenv("ZRB_RAG_EMBEDDING_BASE_URL", None)
+RAG_EMBEDDING_MODEL = os.getenv("ZRB_RAG_EMBEDDING_MODEL", "text-embedding-ada-002")
 RAG_CHUNK_SIZE = int(os.getenv("ZRB_RAG_CHUNK_SIZE", "1024"))
 RAG_OVERLAP = int(os.getenv("ZRB_RAG_OVERLAP", "128"))
 RAG_MAX_RESULT_COUNT = int(os.getenv("ZRB_RAG_MAX_RESULT_COUNT", "5"))
```

zrb/llm_config.py

CHANGED

```diff
@@ -2,35 +2,72 @@ import os

 from pydantic_ai.models import Model
 from pydantic_ai.models.openai import OpenAIModel
+from pydantic_ai.providers import Provider
 from pydantic_ai.providers.openai import OpenAIProvider

+DEFAULT_SYSTEM_PROMPT = """
+You have access to tools.
+Your goal is to answer user queries accurately.
+Follow these instructions precisely:
+1. ALWAYS use available tools to gather information BEFORE asking the user questions
+2. For tools that require arguments: provide arguments in valid JSON format
+3. For tools with no args: call the tool without args. Do NOT pass "" or {}.
+4. NEVER pass arguments to tools that don't accept parameters
+5. NEVER ask users for information obtainable through tools
+6. Use tools in a logical sequence until you have sufficient information
+7. If a tool call fails, check if you're passing arguments in the correct format
+8. Only after exhausting relevant tools should you request clarification
+""".strip()
+

 class LLMConfig:

 def __init__(
 self,
-
-
-
+default_model_name: str | None = None,
+default_base_url: str | None = None,
+default_api_key: str | None = None,
+default_system_prompt: str | None = None,
 ):
 self._model_name = (
-
+default_model_name
+if default_model_name is not None
+else os.getenv("ZRB_LLM_MODEL", None)
+)
+self._model_base_url = (
+default_base_url
+if default_base_url is not None
+else os.getenv("ZRB_LLM_BASE_URL", None)
 )
-self.
-
+self._model_api_key = (
+default_api_key
+if default_api_key is not None
+else os.getenv("ZRB_LLM_API_KEY", None)
 )
-self.
-
+self._system_prompt = (
+default_system_prompt
+if default_system_prompt is not None
+else os.getenv("ZRB_LLM_SYSTEM_PROMPT", None)
 )
+self._default_provider = None
 self._default_model = None

 def _get_model_name(self) -> str | None:
 return self._model_name if self._model_name is not None else None

-def
-if self.
+def get_default_model_provider(self) -> Provider | str:
+if self._default_provider is not None:
+return self._default_provider
+if self._model_base_url is None and self._model_api_key is None:
 return "openai"
-return OpenAIProvider(
+return OpenAIProvider(
+base_url=self._model_base_url, api_key=self._model_api_key
+)
+
+def get_default_system_prompt(self) -> str:
+if self._system_prompt is not None:
+return self._system_prompt
+return DEFAULT_SYSTEM_PROMPT

 def get_default_model(self) -> Model | str | None:
 if self._default_model is not None:
@@ -40,9 +77,24 @@ class LLMConfig:
 return None
 return OpenAIModel(
 model_name=model_name,
-provider=self.
+provider=self.get_default_model_provider(),
 )

+def set_default_system_prompt(self, system_prompt: str):
+self._system_prompt = system_prompt
+
+def set_default_model_name(self, model_name: str):
+self._model_name = model_name
+
+def set_default_model_api_key(self, model_api_key: str):
+self._model_api_key = model_api_key
+
+def set_default_model_base_url(self, model_base_url: str):
+self._model_base_url = model_base_url
+
+def set_default_provider(self, provider: Provider | str):
+self._default_provider = provider
+
 def set_default_model(self, model: Model | str | None):
 self._default_model = model

```
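In 1.4.3, each `LLMConfig` setting comes from the constructor argument when one is given, and otherwise from its environment variable (`ZRB_LLM_MODEL`, `ZRB_LLM_BASE_URL`, `ZRB_LLM_API_KEY`, `ZRB_LLM_SYSTEM_PROMPT`). `get_default_system_prompt()` falls back to `DEFAULT_SYSTEM_PROMPT` only when the resulting value is still `None`. Provider selection follows a similar priority order. The runnable sketch below shows both rules. It uses a hypothetical `ProviderStub` in place of `OpenAIProvider` so it does not need pydantic-ai, and `resolve` and `choose_provider` are illustrative names:

```python
import os
from typing import Optional, Union


class ProviderStub:
    """Hypothetical stand-in for pydantic_ai's OpenAIProvider."""

    def __init__(self, base_url: Optional[str], api_key: Optional[str]):
        self.base_url = base_url
        self.api_key = api_key


def resolve(explicit: Optional[str], env_name: str) -> Optional[str]:
    """An explicit argument wins (even ""); otherwise read the environment."""
    return explicit if explicit is not None else os.getenv(env_name, None)


def choose_provider(
    base_url: Optional[str],
    api_key: Optional[str],
    override: Optional[Union[ProviderStub, str]] = None,
) -> Union[ProviderStub, str]:
    # 1. A provider registered with set_default_provider() takes priority.
    if override is not None:
        return override
    # 2. Nothing configured: fall back to the "openai" shorthand string.
    if base_url is None and api_key is None:
        return "openai"
    # 3. Otherwise build a provider from whatever was configured.
    return ProviderStub(base_url=base_url, api_key=api_key)


os.environ["ZRB_LLM_BASE_URL"] = "http://localhost:8080/v1"  # hypothetical value
base_url = resolve(None, "ZRB_LLM_BASE_URL")
provider = choose_provider(base_url, resolve(None, "ZRB_LLM_API_KEY"))
print(type(provider).__name__, provider.base_url)  # ProviderStub http://localhost:8080/v1
```

Because the checks are `is not None` rather than truthiness tests, an explicitly passed empty string overrides the environment variable instead of falling back to it.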

zrb/task/llm_task.py

CHANGED

```diff
@@ -1,5 +1,8 @@
+import functools
+import inspect
 import json
 import os
+import traceback
 from collections.abc import Callable
 from typing import Any

@@ -18,7 +21,6 @@ from pydantic_ai.models import Model
 from pydantic_ai.settings import ModelSettings

 from zrb.attr.type import StrAttr, fstring
-from zrb.config import LLM_SYSTEM_PROMPT
 from zrb.context.any_context import AnyContext
 from zrb.context.any_shared_context import AnySharedContext
 from zrb.env.any_env import AnyEnv
@@ -58,7 +60,7 @@ class LLMTask(BaseTask):
 ModelSettings | Callable[[AnySharedContext], ModelSettings] | None
 ) = None,
 agent: Agent | Callable[[AnySharedContext], Agent] | None = None,
-system_prompt: StrAttr | None =
+system_prompt: StrAttr | None = None,
 render_system_prompt: bool = True,
 message: StrAttr | None = None,
 tools: (
@@ -202,6 +204,9 @@ class LLMTask(BaseTask):
 async with node.stream(agent_run.ctx) as handle_stream:
 async for event in handle_stream:
 if isinstance(event, FunctionToolCallEvent):
+# Fixing anthrophic claude when call function with empty parameter
+if event.part.args == "":
+event.part.args = {}
 ctx.print(
 stylize_faint(
 f"[Tools] The LLM calls tool={event.part.tool_name!r} with args={event.part.args} (tool_call_id={event.part.tool_call_id!r})" # noqa
@@ -241,7 +246,7 @@ class LLMTask(BaseTask):
 )
 tools_or_callables.extend(self._additional_tools)
 tools = [
-tool if isinstance(tool, Tool) else Tool(tool, takes_ctx=False)
+tool if isinstance(tool, Tool) else Tool(_wrap_tool(tool), takes_ctx=False)
 for tool in tools_or_callables
 ]
 return Agent(
@@ -257,21 +262,17 @@ class LLMTask(BaseTask):
 if model is None:
 return default_llm_config.get_default_model()
 if isinstance(model, str):
+model_base_url = self._get_model_base_url(ctx)
+model_api_key = self._get_model_api_key(ctx)
 llm_config = LLMConfig(
-
-
-
-self._get_model_base_url(ctx),
-None,
-auto_render=self._render_model_base_url,
-),
-api_key=get_attr(
-ctx,
-self._get_model_api_key(ctx),
-None,
-auto_render=self._render_model_api_key,
-),
+default_model_name=model,
+default_base_url=model_base_url,
+default_api_key=model_api_key,
 )
+if model_base_url is None and model_api_key is None:
+default_model_provider = default_llm_config.get_default_model_provider()
+if default_model_provider is not None:
+llm_config.set_default_provider(default_model_provider)
 return llm_config.get_default_model()
 raise ValueError(f"Invalid model: {model}")

@@ -289,15 +290,18 @@ class LLMTask(BaseTask):
 )
 if isinstance(api_key, str) or api_key is None:
 return api_key
-raise ValueError(f"Invalid model
+raise ValueError(f"Invalid model API key: {api_key}")

 def _get_system_prompt(self, ctx: AnyContext) -> str:
-
+system_prompt = get_attr(
 ctx,
 self._system_prompt,
-
+None,
 auto_render=self._render_system_prompt,
 )
+if system_prompt is not None:
+return system_prompt
+return default_llm_config.get_default_system_prompt()

 def _get_message(self, ctx: AnyContext) -> str:
 return get_str_attr(ctx, self._message, "How are you?", auto_render=True)
@@ -323,3 +327,16 @@ class LLMTask(BaseTask):
 "",
 auto_render=self._render_history_file,
 )
+
+
+def _wrap_tool(func):
+@functools.wraps(func)
+async def wrapper(*args, **kwargs):
+try:
+return await run_async(func(*args, **kwargs))
+except Exception as e:
+# Optionally, you can include more details from traceback if needed.
+error_details = traceback.format_exc()
+return f"Error: {e}\nDetails: {error_details}"
+
+return wrapper
```
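In 1.4.3, every plain callable passed as a tool is wrapped with `_wrap_tool`. An exception inside a tool is therefore turned into an `Error: ...` string the model can read, instead of aborting the run. zrb's version delegates to its own `run_async` helper, which is not shown in this diff. The sketch below substitutes an `inspect.isawaitable` check so it runs on its own; `wrap_tool` and `divide` are illustrative names:

```python
import asyncio
import functools
import inspect
import traceback


def wrap_tool(func):
    """Wrap a sync or async tool so that a failure is returned as text, not raised."""

    @functools.wraps(func)  # keeps __name__/__doc__ and sets __wrapped__
    async def wrapper(*args, **kwargs):
        try:
            result = func(*args, **kwargs)
            # Await only when the tool returned an awaitable (an async tool).
            if inspect.isawaitable(result):
                result = await result
            return result
        except Exception as e:
            # The error text, including the traceback, becomes the tool result.
            return f"Error: {e}\nDetails: {traceback.format_exc()}"

    return wrapper


def divide(a: float, b: float) -> float:
    """Divide a by b."""
    return a / b


print(asyncio.run(wrap_tool(divide)(6, 3)))                  # 2.0
print(asyncio.run(wrap_tool(divide)(1, 0)).splitlines()[0])  # Error: division by zero
```

`functools.wraps` sets `__wrapped__`, so `inspect.signature(wrap_tool(divide))` still reports `(a: float, b: float) -> float`. This matters for any code that builds a tool's parameter schema from the function signature.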

@@ -1,6 +1,6 @@

|

|

|

1

1

|

Metadata-Version: 2.1

|

|

2

2

|

Name: zrb

|

|

3

|

-

Version: 1.4.

|

|

3

|

+

Version: 1.4.3

|

|

4

4

|

Summary: Your Automation Powerhouse

|

|

5

5

|

Home-page: https://github.com/state-alchemists/zrb

|

|

6

6

|

License: AGPL-3.0-or-later

|

|

@@ -13,16 +13,20 @@ Classifier: Programming Language :: Python :: 3

|

|

|

13

13

|

Classifier: Programming Language :: Python :: 3.10

|

|

14

14

|

Classifier: Programming Language :: Python :: 3.11

|

|

15

15

|

Classifier: Programming Language :: Python :: 3.12

|

|

16

|

+

Provides-Extra: all

|

|

17

|

+

Provides-Extra: playwright

|

|

16

18

|

Provides-Extra: rag

|

|

17

19

|

Requires-Dist: autopep8 (>=2.0.4,<3.0.0)

|

|

18

20

|

Requires-Dist: beautifulsoup4 (>=4.12.3,<5.0.0)

|

|

19

21

|

Requires-Dist: black (>=24.10.0,<24.11.0)

|

|

20

|

-

Requires-Dist: chromadb (>=0.5.20,<0.6.0) ; extra == "rag"

|

|

22

|

+

Requires-Dist: chromadb (>=0.5.20,<0.6.0) ; extra == "rag" or extra == "all"

|

|

21

23

|

Requires-Dist: fastapi[standard] (>=0.115.6,<0.116.0)

|

|

22

24

|

Requires-Dist: fastembed (>=0.5.1,<0.6.0)

|

|

23

25

|

Requires-Dist: isort (>=5.13.2,<5.14.0)

|

|

24

26

|

Requires-Dist: libcst (>=1.5.0,<2.0.0)

|

|

25

|

-

Requires-Dist:

|

|

27

|

+

Requires-Dist: openai (>=1.10.0,<2.0.0) ; extra == "rag" or extra == "all"

|

|

28

|

+

Requires-Dist: pdfplumber (>=0.11.4,<0.12.0) ; extra == "rag" or extra == "all"

|

|

29

|

+

Requires-Dist: playwright (>=1.43.0,<2.0.0) ; extra == "playwright" or extra == "all"

|

|

26

30

|

Requires-Dist: psutil (>=6.1.1,<7.0.0)

|

|

27

31

|

Requires-Dist: pydantic-ai (>=0.0.42,<0.0.43)

|

|

28

32

|

Requires-Dist: python-dotenv (>=1.0.1,<2.0.0)

|

|

@@ -44,7 +48,6 @@ Description-Content-Type: text/markdown

|

|

|

44

48

|

|

|

45

49

|

Zrb streamlines repetitive tasks, integrates with powerful LLMs, and lets you create custom automation workflows effortlessly. Whether you’re building CI/CD pipelines, code generators, or unique automation scripts, Zrb is designed to simplify and supercharge your workflow.

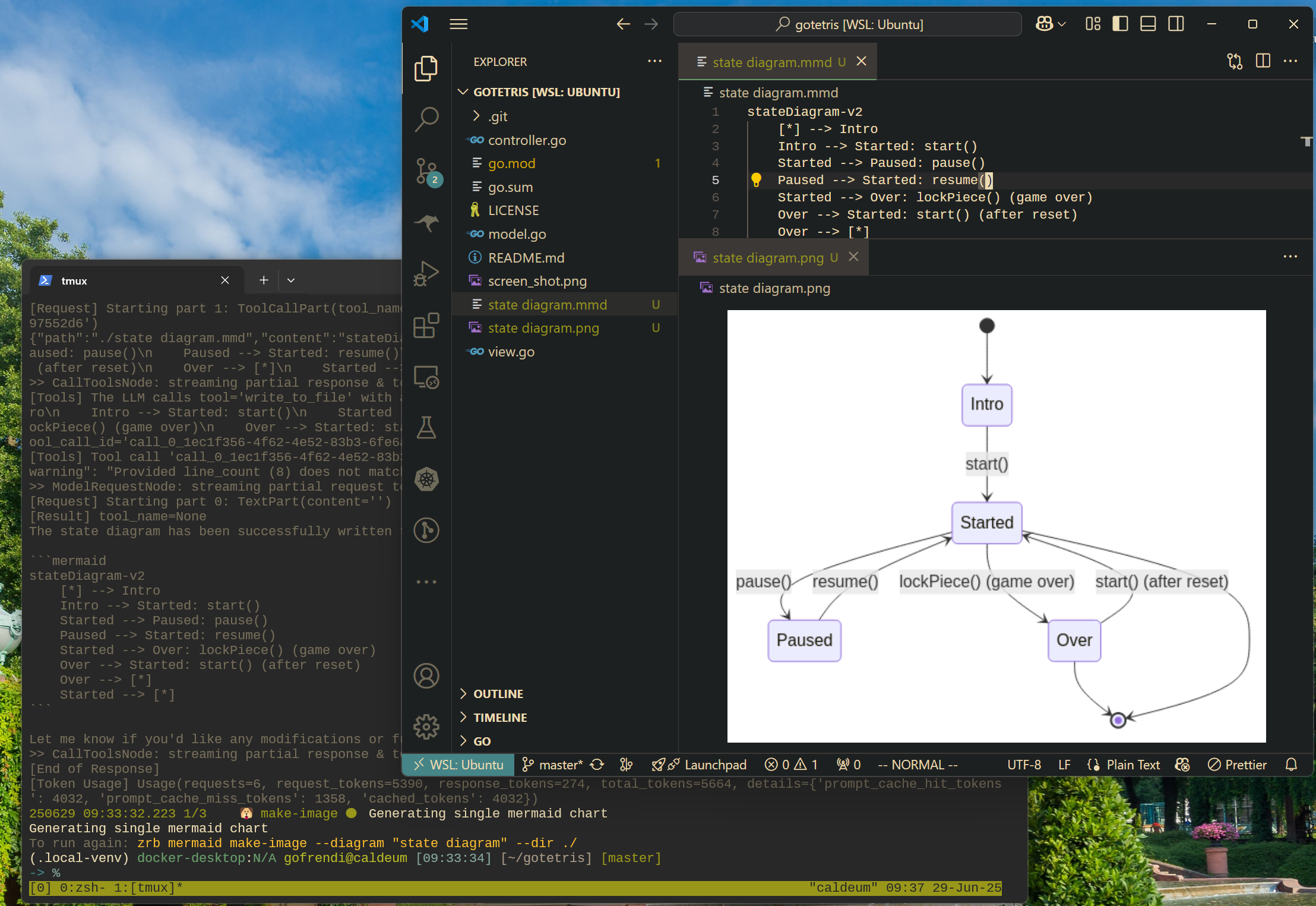

|

|

46

50

|

|

|

47

|

-

---

|

|

48

51

|

|

|

49

52

|

## 🚀 Why Zrb?

|

|

50

53

|

|

|

@@ -54,7 +57,6 @@ Zrb streamlines repetitive tasks, integrates with powerful LLMs, and lets you cr

|

|

|

54

57

|

- **Developer-Friendly:** Quick to install and get started, with clear documentation and examples.

|

|

55

58

|

- **Web Interface:** Run Zrb as a server to make tasks accessible even to non-technical team members.

|

|

56

59

|

|

|

57

|

-

---

|

|

58

60

|

|

|

59

61

|

## 🔥 Key Features

|

|

60

62

|

|

|

@@ -64,11 +66,8 @@ Zrb streamlines repetitive tasks, integrates with powerful LLMs, and lets you cr

|

|

|

64

66

|

- **Flexible Input Handling:** Defaults, prompts, and command-line parameters to suit any workflow.

|

|

65

67

|

- **Extensible & Open Source:** Contribute, customize, or extend Zrb to fit your unique needs.

|

|

66

68

|

|

|

67

|

-

---

|

|

68

69

|

|

|

69

|

-

|

|

70

|

-

|

|

71

|

-

### Quick Installation

|

|

70

|

+

# 🛠️ Installation

|

|

72

71

|

|

|

73

72

|

Install Zrb via pip:

|

|

74

73

|

|

|

@@ -84,40 +83,48 @@ bash -c "$(curl -fsSL https://raw.githubusercontent.com/state-alchemists/zrb/mai

|

|

|

84

83

|

|

|

85

84

|

```

|

|

86

85

|

|

|

87

|

-

|

|

86

|

+

# 🍲 Quick Start: Build Your First Automation Workflow

|

|

87

|

+

|

|

88

|

+

Zrb empowers you to create custom automation tasks using Python. This guide shows you how to define two simple tasks: one to generate a PlantUML script from your source code and another to convert that script into a PNG image.

|

|

89

|

+

|

|

90

|

+

## 1. Create Your Task Definition File

|

|

88

91

|

|

|

89

|

-

|

|

92

|

+

Place a file named `zrb_init.py` in a directory that's accessible from your projects. Zrb will automatically search for this file by starting in your current directory and then moving upward (i.e., checking parent directories) until it finds one. This means if you place your `zrb_init.py` in your home directory (e.g., `/home/<your-user-name>/zrb_init.py`), the tasks defined there will be available for any project.

|

|

90

93

|

|

|

94

|

+

Add the following content to your zrb_init.py:

|

|

91

95

|

|

|

92

96

|

```python

|

|

93

97

|

import os

|

|

94

|

-

from zrb import cli,

|

|

95

|

-

from zrb.builtin.llm.tool.file import

|

|

98

|

+

from zrb import cli, LLMTask, CmdTask, StrInput, Group

|

|

99

|

+

from zrb.builtin.llm.tool.file import (

|

|

100

|

+

read_text_file, list_files, write_text_file

|

|

101

|

+

)

|

|

96

102

|

|

|

97

103

|

CURRENT_DIR = os.getcwd()

|

|

98

104

|

|

|

99

|

-

#

|

|

105

|

+

# Create a group for UML-related tasks

|

|

100

106

|

uml_group = cli.add_group(Group(name="uml", description="UML related tasks"))

|

|

101

107

|

|

|

102

|

-

# Generate

|

|

108

|

+

# Task 1: Generate a PlantUML script from your source code

|

|

103

109

|

make_uml_script = uml_group.add_task(

|

|

104

110

|

LLMTask(

|

|

105

111

|

name="make-script",

|

|

106

112

|

description="Creating plantuml diagram based on source code in current directory",

|

|

107

113

|

input=StrInput(name="diagram", default="state diagram"),

|

|

108

114

|

message=(

|

|

109

|

-

f"Read

|

|

115

|

+

f"Read all necessary files in {CURRENT_DIR}, "

|

|

110

116

|

"make a {ctx.input.diagram} in plantuml format. "

|

|

111

117

|

f"Write the script into {CURRENT_DIR}/{{ctx.input.diagram}}.uml"

|

|

112

118

|

),

|

|

113

119

|

tools=[

|

|

114

|

-

|

|

120

|

+

list_files,

|

|

121

|

+

read_text_file,

|

|

115

122

|

write_text_file,

|

|

116

123

|

],

|

|

117

124

|

)

|

|

118

125

|

)

|

|

119

126

|

|

|

120

|

-

#

|

|

127

|

+

# Task 2: Convert the PlantUML script into a PNG image

|

|

121

128

|

make_uml_image = uml_group.add_task(

|

|

122

129

|

CmdTask(

|

|

123

130

|

name="make-image",

|

|

@@ -128,62 +135,75 @@ make_uml_image = uml_group.add_task(

|

|

|

128

135

|

)

|

|

129

136

|

)

|

|

130

137

|

|

|

131

|

-

#

|

|

138

|

+

# Set up the dependency: the image task runs after the script is created

|

|

132

139

|

make_uml_script >> make_uml_image

|

|

133

140

|

```

|

|

134

141

|

|

|

135

|

-

|

|

142

|

+

**What This Does**

|

|

136

143

|

|

|

137

|

-

|

|

144

|

+

- **Task 1 – make-script**:

|

|

138

145

|

|

|

139

|

-

|

|

146

|

+

Uses an LLM to read all files in your current directory and generate a PlantUML script (e.g., `state diagram.uml`).

|

|

140

147

|

|

|

141

|

-

|

|

148

|

+

- **Task 2 – make-image**:

|

|

142

149

|

|

|

143

|

-

|

|

150

|

+

Executes a command that converts the PlantUML script into a PNG image (e.g., `state diagram.png`). This task will run only after the script has been generated.

|

|

144

151

|

|

|

145

|

-

Now, go to your project and create a state diagram:

|

|

146

152

|

|

|

147

|

-

|

|

148

|

-

git clone git@github.com:jjinux/gotetris.git

|

|

149

|

-

cd gotetris

|

|

150

|

-

zrb uml make-image --diagram "state diagram"

|

|

151

|

-

```

|

|

153

|

+

## 2. Run Your Tasks

|

|

152

154

|

|

|

153

|

-

|

|

155

|

+

After setting up your tasks, you can execute them from any project. For example:

|

|

154

156

|

|

|

155

|

-

|

|

156

|

-

zrb uml make-image

|

|

157

|

-

```

|

|

157

|

+

- Clone/Create a Project:

|

|

158

158

|

|

|

159

|

-

|

|

159

|

+

```bash

|

|

160

|

+

git clone git@github.com:jjinux/gotetris.git

|

|

161

|

+

cd gotetris

|

|

162

|

+

```

|

|

160

163

|

|

|

161

|

-

|

|

162

|

-

|

|

163

|

-

```

|

|

164

|

+

- Create a state diagram:

|

|

165

|

+

|

|

166

|

+

```bash

|

|

167

|

+

zrb uml make-image --diagram "state diagram"

|

|

168

|

+

```

|

|

169

|

+

|

|

170

|

+

- Or use the interactive mode:

|

|

164

171

|

|

|

165

|

-

|

|

172

|

+

```bash

|

|

173

|

+

zrb uml make-image

|

|

174

|

+

```

|

|

166

175

|

|

|

167

|

-

|

|

176

|

+

Zrb will prompt:

|

|

177

|

+

|

|

178

|

+

```bash

|

|

179

|

+

diagram [state diagram]:

|

|

180

|

+

```

|

|

181

|

+

|

|

182

|

+

Press **Enter** to use the default value

|

|

183

|

+

|

|

184

|

+

|

|

185

|

+

|

|

186

|

+

|

|

187

|

+

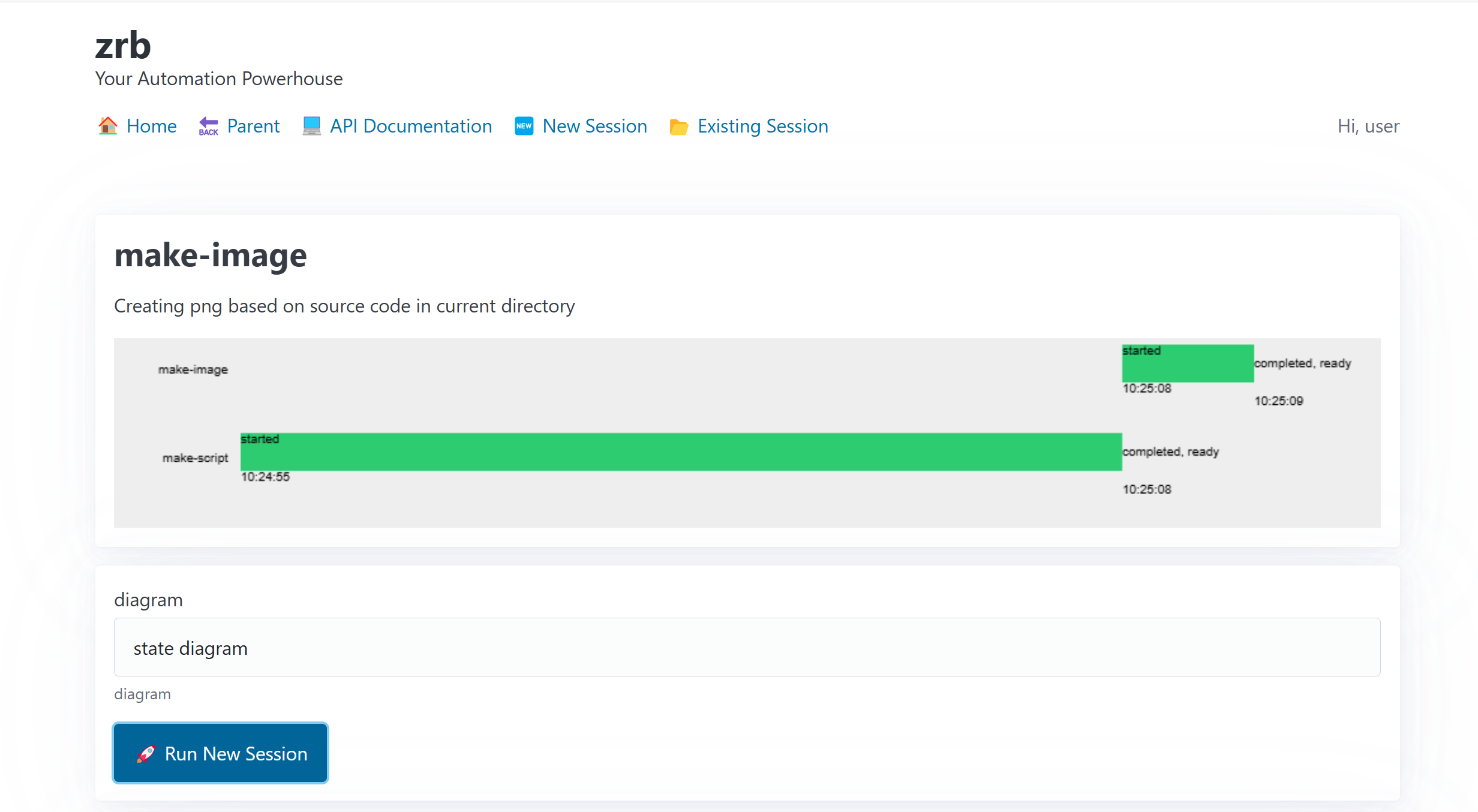

## 3. Try Out the Web UI

|

|

188

|

+

|

|

189

|

+

You can also serve your tasks through a user-friendly web interface:

|

|

168

190

|

|

|

169

191

|

```bash

|

|

170

192

|

zrb server start

|

|

171

193

|

```

|

|

172

194

|

|

|

173

|

-

|

|

195

|

+

Then open your browser and visit `http://localhost:21213`

|

|

174

196

|

|

|

175

197

|

|

|

176

198

|

|

|

177

|

-

Now, let's see how things work in detail. First, Zrb generates a `state diagram.uml` in your current directory, it then transform the UML script into a PNG image `state diagram.png`.

|

|

178

|

-

|

|

179

|

-

|

|

180

|

-

|

|

181

199

|

|

|

182

200

|

# 🎥 Demo & Documentation

|

|

183

201

|

|

|

184

202

|

- **Step by step guide:** [Getting started with Zrb](https://github.com/state-alchemists/zrb/blob/main/docs/recipes/getting-started/README.md).

|

|

185

203

|

- **Full documentation:** [Zrb Documentation](https://github.com/state-alchemists/zrb/blob/main/docs/README.md)

|

|

186

|

-

- **Video demo:**

|

|

204

|

+

- **Video demo:**

|

|

205

|

+

|

|

206

|

+

[](https://www.youtube.com/watch?v=W7dgk96l__o)

|

|

187

207

|

|

|

188

208

|

|

|

189

209

|

# 🤝 Join the Community
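In the README diff, the line `make_uml_script >> make_uml_image` declares that `make-image` runs only after `make-script` has finished. The toy sketch below shows one way such an operator can be wired up in Python:

- Overloading `__rshift__` lets `a >> b` record `a` as an upstream of `b`.
- A depth-first walk then resolves the run order.

`ToyTask` and `run_order` are hypothetical illustrations. zrb's real task classes and scheduler are not part of this diff and may work differently.

```python
from __future__ import annotations


class ToyTask:
    """Hypothetical stand-in for a task object; not zrb's real Task class."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.upstreams: list[ToyTask] = []

    def __rshift__(self, other: ToyTask) -> ToyTask:
        # `a >> b` records `a` as an upstream of `b` and returns `b`,
        # so chains like `a >> b >> c` read left to right.
        other.upstreams.append(self)
        return other


def run_order(target: ToyTask) -> list[str]:
    """Depth-first walk: every upstream is visited before the task itself."""
    order: list[str] = []
    seen: set[int] = set()

    def visit(task: ToyTask) -> None:
        if id(task) in seen:
            return
        seen.add(id(task))
        for upstream in task.upstreams:
            visit(upstream)
        order.append(task.name)

    visit(target)
    return order


make_script = ToyTask("make-script")
make_image = ToyTask("make-image")
make_script >> make_image
print(run_order(make_image))
```

Returning the right-hand task from `__rshift__` is what lets a longer chain such as `a >> b >> c` build a linear pipeline in a single expression.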

{zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/RECORD

CHANGED

@@ -7,13 +7,13 @@ zrb/builtin/base64.py,sha256=1YnSwASp7OEAvQcsnHZGpJEvYoI1Z2zTIJ1bCDHfcPQ,921
 zrb/builtin/git.py,sha256=8_qVE_2lVQEVXQ9vhiw8Tn4Prj1VZB78ZjEJJS5Ab3M,5461
 zrb/builtin/git_subtree.py,sha256=7BKwOkVTWDrR0DXXQ4iJyHqeR6sV5VYRt8y_rEB0EHg,3505
 zrb/builtin/group.py,sha256=-phJfVpTX3_gUwS1u8-RbZUHe-X41kxDBSmrVh4rq8E,1682
-zrb/builtin/llm/llm_chat.py,sha256=
+zrb/builtin/llm/llm_chat.py,sha256=OwbeXNaskyufYIhbhLmj9JRYB9bw5D8JfntAzOhmrP8,6140
 zrb/builtin/llm/previous-session.js,sha256=xMKZvJoAbrwiyHS0OoPrWuaKxWYLoyR5sguePIoCjTY,816
-zrb/builtin/llm/tool/api.py,sha256=
+zrb/builtin/llm/tool/api.py,sha256=U0_PhVuoDLpq4Jak5S45IHhCF1jKmfS0JC8XAnfnOhA,858
 zrb/builtin/llm/tool/cli.py,sha256=to_IjkfrMGs6eLfG0cpVN9oyADWYsJQCtyluUhUdBww,253
-zrb/builtin/llm/tool/file.py,sha256=
-zrb/builtin/llm/tool/rag.py,sha256=
-zrb/builtin/llm/tool/web.py,sha256=
+zrb/builtin/llm/tool/file.py,sha256=v3gaAM442lVZju4LVXqABiIk_H4k5XPiw9JvXYAZbow,4946
+zrb/builtin/llm/tool/rag.py,sha256=pX8N_bYv4axsjhULLvvZtQYW2klZOkeQZ2Tn16083vM,6860
+zrb/builtin/llm/tool/web.py,sha256=LtaKU8BkV5HvKvSwOU59k99_kbbfeBQYS8fuP7l6fJ8,5248
 zrb/builtin/md5.py,sha256=0pNlrfZA0wlZlHvFHLgyqN0JZJWGKQIF5oXxO44_OJk,949
 zrb/builtin/project/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/builtin/project/add/fastapp/fastapp_input.py,sha256=MKlWR_LxWhM_DcULCtLfL_IjTxpDnDBkn9KIqNmajFs,310
@@ -208,7 +208,7 @@ zrb/callback/callback.py,sha256=hKefB_Jd1XGjPSLQdMKDsGLHPzEGO2dqrIArLl_EmD0,848
 zrb/cmd/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/cmd/cmd_result.py,sha256=L8bQJzWCpcYexIxHBNsXj2pT3BtLmWex0iJSMkvimOA,597
 zrb/cmd/cmd_val.py,sha256=7Doowyg6BK3ISSGBLt-PmlhzaEkBjWWm51cED6fAUOQ,1014
-zrb/config.py,sha256=
+zrb/config.py,sha256=bYLagRHcReZBrfaQM3y5FaNCflDC9l8Kb6Sw49eD3-o,4023
 zrb/content_transformer/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/content_transformer/any_content_transformer.py,sha256=v8ZUbcix1GGeDQwB6OKX_1TjpY__ksxWVeqibwa_iZA,850
 zrb/content_transformer/content_transformer.py,sha256=STl77wW-I69QaGzCXjvkppngYFLufow8ybPLSyAvlHs,2404
@@ -237,7 +237,7 @@ zrb/input/option_input.py,sha256=TQB82ko5odgzkULEizBZi0e9TIHEbIgvdP0AR3RhA74,213
 zrb/input/password_input.py,sha256=szBojWxSP9QJecgsgA87OIYwQrY2AQ3USIKdDZY6snU,1465
 zrb/input/str_input.py,sha256=NevZHX9rf1g8eMatPyy-kUX3DglrVAQpzvVpKAzf7bA,81
 zrb/input/text_input.py,sha256=shvVbc2U8Is36h23M5lcW8IEwKc9FR-4uEPZZroj3rU,3377
-zrb/llm_config.py,sha256=
+zrb/llm_config.py,sha256=w_gO_rYUdj8u-lrX3JCZleB7X5PHAC_35ymn-7dYAQo,3601
 zrb/runner/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/runner/cli.py,sha256=0mT0oO_yEhc8N4nYCJNujhgLjVykZ0B-kAOFXyAvAqM,6672
 zrb/runner/common_util.py,sha256=0zhZn1Jdmr194_nsL5_L-Kn9-_NDpMTI2z6_LXUQJ-U,1369
@@ -300,7 +300,7 @@ zrb/task/base_task.py,sha256=SQRf37bylS586KwyW0eYDe9JZ5Hl18FP8kScHae6y3A,21251
 zrb/task/base_trigger.py,sha256=jC722rDvodaBLeNaFghkTyv1u0QXrK6BLZUUqcmBJ7Q,4581
 zrb/task/cmd_task.py,sha256=pUKRSR4DZKjbmluB6vi7cxqyhxOLfJ2czSpYeQbiDvo,10705
 zrb/task/http_check.py,sha256=Gf5rOB2Se2EdizuN9rp65HpGmfZkGc-clIAlHmPVehs,2565
-zrb/task/llm_task.py,sha256=
+zrb/task/llm_task.py,sha256=oPLruaSCqEF2fX5xtXfXflVYMknT2U5nBqC0CObT-ug,14282
 zrb/task/make_task.py,sha256=PD3b_aYazthS8LHeJsLAhwKDEgdurQZpymJDKeN60u0,2265
 zrb/task/rsync_task.py,sha256=GSL9144bmp6F0EckT6m-2a1xG25AzrrWYzH4k3SVUKM,6370
 zrb/task/scaffolder.py,sha256=rME18w1HJUHXgi9eTYXx_T2G4JdqDYzBoNOkdOOo5-o,6806
@@ -341,7 +341,7 @@ zrb/util/string/name.py,sha256=8picJfUBXNpdh64GNaHv3om23QHhUZux7DguFLrXHp8,1163
 zrb/util/todo.py,sha256=1nDdwPc22oFoK_1ZTXyf3638Bg6sqE2yp_U4_-frHoc,16015
 zrb/xcom/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
 zrb/xcom/xcom.py,sha256=o79rxR9wphnShrcIushA0Qt71d_p3ZTxjNf7x9hJB78,1571
-zrb-1.4.
-zrb-1.4.
-zrb-1.4.
-zrb-1.4.
+zrb-1.4.3.dist-info/METADATA,sha256=Ttrj5DxuqudtPbcEULSHZKbU0ithhP1JbMk1FhvCiiE,8557
+zrb-1.4.3.dist-info/WHEEL,sha256=sP946D7jFCHeNz5Iq4fL4Lu-PrWrFsgfLXbbkciIZwg,88
+zrb-1.4.3.dist-info/entry_points.txt,sha256=-Pg3ElWPfnaSM-XvXqCxEAa-wfVI6BEgcs386s8C8v8,46
+zrb-1.4.3.dist-info/RECORD,,

{zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/WHEEL

File without changes

{zrb-1.4.1.dist-info → zrb-1.4.3.dist-info}/entry_points.txt

File without changes
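Each row in the RECORD diff has the form `path,sha256=<digest>,<size in bytes>`. The binary distribution format (wheel) specification defines both fields:

- The digest is the urlsafe base64 encoding of the SHA-256 hash, with the trailing `=` padding removed.
- RECORD lists itself with empty hash and size fields, as in the `zrb-1.4.3.dist-info/RECORD,,` row.

The sketch below recomputes a row with the standard library. `record_hash` and `record_line` are hypothetical helper names, not part of any packaging tool's API.

```python
import base64
import hashlib


def record_hash(data: bytes) -> str:
    """Format a digest the way wheel RECORD files do: sha256=<urlsafe b64, no padding>."""
    digest = hashlib.sha256(data).digest()
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"sha256={encoded}"


def record_line(path: str, data: bytes) -> str:
    """Build one RECORD row: path, hash, size in bytes."""
    return f"{path},{record_hash(data)},{len(data)}"


# Empty __init__.py files all share the same digest, which is why several
# rows in the RECORD diff end in sha256=47DEQpj8...,0.
print(record_line("zrb/cmd/__init__.py", b""))
```

Only rows whose file contents changed between 1.4.1 and 1.4.3 show up as `-`/`+` pairs. Every unchanged file keeps an identical digest and size.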