zrb 1.2.1__py3-none-any.whl → 1.3.0__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of zrb might be problematic.

- zrb/builtin/llm/llm_chat.py +68 -9

- zrb/builtin/llm/tool/api.py +4 -2

- zrb/builtin/llm/tool/file.py +39 -0

- zrb/builtin/llm/tool/rag.py +37 -22

- zrb/builtin/llm/tool/web.py +46 -20

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/_zrb/column/add_column_util.py +28 -6

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/_zrb/entity/template/app_template/module/gateway/view/content/my-module/my-entity.html +206 -178

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/_zrb/entity/template/app_template/schema/my_entity.py +3 -1

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/role/repository/role_db_repository.py +18 -1

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/role/repository/role_repository.py +4 -0

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/role/role_service.py +20 -11

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/user/repository/user_db_repository.py +17 -2

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/user/repository/user_repository.py +4 -0

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/auth/service/user/user_service.py +19 -11

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/content/auth/permission.html +209 -180

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/content/auth/role.html +362 -0

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/content/auth/user.html +377 -0

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/static/common/util.js +68 -13

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/module/gateway/view/static/crud/util.js +50 -29

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/permission.py +3 -1

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/role.py +6 -5

- zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/user.py +9 -3

- zrb/config.py +3 -1

- zrb/content_transformer/content_transformer.py +7 -1

- zrb/context/context.py +8 -2

- zrb/input/any_input.py +5 -0

- zrb/input/base_input.py +6 -0

- zrb/input/bool_input.py +2 -0

- zrb/input/float_input.py +2 -0

- zrb/input/int_input.py +2 -0

- zrb/input/option_input.py +2 -0

- zrb/input/password_input.py +2 -0

- zrb/input/text_input.py +11 -5

- zrb/runner/cli.py +1 -1

- zrb/runner/common_util.py +3 -3

- zrb/runner/web_route/task_input_api_route.py +1 -1

- zrb/task/llm_task.py +103 -16

- {zrb-1.2.1.dist-info → zrb-1.3.0.dist-info}/METADATA +85 -18

- {zrb-1.2.1.dist-info → zrb-1.3.0.dist-info}/RECORD +41 -40

- {zrb-1.2.1.dist-info → zrb-1.3.0.dist-info}/WHEEL +0 -0

- {zrb-1.2.1.dist-info → zrb-1.3.0.dist-info}/entry_points.txt +0 -0

zrb/builtin/project/add/fastapp/fastapp_template/my_app_name/schema/user.py

CHANGED

@@ -51,7 +51,9 @@ class UserUpdate(SQLModel):
     active: bool | None = None
 
     def with_audit(self, updated_by: str) -> "UserUpdateWithAudit":
-        return UserUpdateWithAudit(
+        return UserUpdateWithAudit(
+            **self.model_dump(exclude_none=True), updated_by=updated_by
+        )
 
 
 class UserUpdateWithAudit(UserUpdate):
@@ -62,7 +64,9 @@ class UserUpdateWithRoles(UserUpdate):
     role_names: list[str] | None = None
 
     def with_audit(self, updated_by: str) -> "UserUpdateWithRolesAndAudit":
-        return UserUpdateWithRolesAndAudit(
+        return UserUpdateWithRolesAndAudit(
+            **self.model_dump(exclude_none=True), updated_by=updated_by
+        )
 
 
 class UserUpdateWithRolesAndAudit(UserUpdateWithRoles):
@@ -70,7 +74,9 @@ class UserUpdateWithRolesAndAudit(UserUpdateWithRoles):
 
     def get_user_update_with_audit(self) -> UserUpdateWithAudit:
         data = {
-            key: val
+            key: val
+            for key, val in self.model_dump(exclude_none=True).items()
+            if key != "role_names"
         }
         return UserUpdateWithAudit(**data)
 

zrb/config.py

CHANGED

@@ -92,7 +92,8 @@ LLM_HISTORY_DIR = os.getenv(
 LLM_HISTORY_FILE = os.getenv(
     "ZRB_LLM_HISTORY_FILE", os.path.join(LLM_HISTORY_DIR, "history.json")
 )
-
+LLM_ALLOW_ACCESS_LOCAL_FILE = to_boolean(os.getenv("ZRB_LLM_ACCESS_LOCAL_FILE", "1"))
+LLM_ALLOW_ACCESS_SHELL = to_boolean(os.getenv("ZRB_LLM_ACCESS_SHELL", "1"))
 LLM_ALLOW_ACCESS_INTERNET = to_boolean(os.getenv("ZRB_LLM_ACCESS_INTERNET", "1"))
 # noqa See: https://qdrant.github.io/fastembed/examples/Supported_Models/#supported-text-embedding-models
 RAG_EMBEDDING_MODEL = os.getenv(
@@ -101,6 +102,7 @@ RAG_EMBEDDING_MODEL = os.getenv(
 RAG_CHUNK_SIZE = int(os.getenv("ZRB_RAG_CHUNK_SIZE", "1024"))
 RAG_OVERLAP = int(os.getenv("ZRB_RAG_OVERLAP", "128"))
 RAG_MAX_RESULT_COUNT = int(os.getenv("ZRB_RAG_MAX_RESULT_COUNT", "5"))
+SERP_API_KEY = os.getenv("SERP_API_KEY", "")
 
 
 BANNER = f"""
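The new `LLM_ALLOW_ACCESS_LOCAL_FILE` and `LLM_ALLOW_ACCESS_SHELL` settings reuse the pattern of `LLM_ALLOW_ACCESS_INTERNET`. Each reads an environment variable that defaults to `"1"` (enabled) and converts it with `to_boolean`. Exporting, say, `ZRB_LLM_ACCESS_SHELL=0` is therefore presumably how a user opts out.

Below is a minimal sketch of that lookup. `parse_bool` and `read_access_flags` are hypothetical helpers. The accepted spellings are an assumption and may differ from zrb's `to_boolean`.

```python
TRUE_STRINGS = {"1", "true", "yes", "y", "on"}  # assumed spellings
FALSE_STRINGS = {"0", "false", "no", "n", "off"}  # assumed spellings


def parse_bool(value: str) -> bool:
    # Hypothetical stand-in for zrb's to_boolean helper.
    normalized = value.strip().lower()
    if normalized in TRUE_STRINGS:
        return True
    if normalized in FALSE_STRINGS:
        return False
    raise ValueError(f"Cannot interpret {value!r} as a boolean")


def read_access_flags(env: dict[str, str]) -> dict[str, bool]:
    # Mirrors the three LLM_ALLOW_ACCESS_* settings; each defaults to "1" (enabled).
    return {
        "local_file": parse_bool(env.get("ZRB_LLM_ACCESS_LOCAL_FILE", "1")),
        "shell": parse_bool(env.get("ZRB_LLM_ACCESS_SHELL", "1")),
        "internet": parse_bool(env.get("ZRB_LLM_ACCESS_INTERNET", "1")),
    }


# In real use you would pass dict(os.environ); a literal dict keeps this deterministic.
print(read_access_flags({"ZRB_LLM_ACCESS_SHELL": "0"}))
# {'local_file': True, 'shell': False, 'internet': True}
```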

zrb/content_transformer/content_transformer.py

CHANGED

@@ -1,4 +1,5 @@
 import fnmatch
+import os
 import re
 from collections.abc import Callable
 
@@ -40,7 +41,12 @@ class ContentTransformer(AnyContentTransformer):
                     return True
             except re.error:
                 pass
-
+            if os.sep not in pattern and (
+                os.altsep is None or os.altsep not in pattern
+            ):
+                # Pattern like "*.txt" – match only the basename.
+                return fnmatch.fnmatch(file_path, os.path.basename(file_path))
+            return fnmatch.fnmatch(file_path, file_path)
 
     def transform_file(self, ctx: AnyContext, file_path: str):
         if callable(self._transform_file):
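The comment in the new `ContentTransformer` branch describes the intended rule. A pattern without a path separator, such as `"*.txt"`, is compared only with the file's basename. `fnmatch.fnmatch(name, pat)` takes the pattern as its second argument, so a sketch of that rule passes `pattern` there. As written, the added lines instead pass `file_path` and its own basename.

`matches` is a hypothetical standalone function, not zrb's method.

```python
import fnmatch
import os


def matches(pattern: str, file_path: str) -> bool:
    # A pattern is "path-like" if it contains the OS separator, or the
    # alternative separator on platforms that have one (e.g. "/" on Windows).
    has_separator = os.sep in pattern or (
        os.altsep is not None and os.altsep in pattern
    )
    if not has_separator:
        # Pattern like "*.txt": compare against the basename only.
        return fnmatch.fnmatch(os.path.basename(file_path), pattern)
    # Pattern like "docs/*.txt": compare against the whole path.
    return fnmatch.fnmatch(file_path, pattern)


print(matches("*.txt", "docs/notes/readme.txt"))  # True
print(matches("docs/*.txt", "src/readme.txt"))  # False
```

`fnmatch`'s `*` also matches path separators. As a result, `"docs/*.txt"` accepts nested files such as `docs/a/b.txt` as well.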

zrb/context/context.py

CHANGED

@@ -88,7 +88,7 @@ class Context(AnyContext):
             return template
         return int(self.render(template))
 
-    def render_float(self, template: str) -> float:
+    def render_float(self, template: str | float) -> float:
         if isinstance(template, float):
             return template
         return float(self.render(template))
@@ -102,9 +102,10 @@ class Context(AnyContext):
         flush: bool = True,
         plain: bool = False,
     ):
+        sep = " " if sep is None else sep
         message = sep.join([f"{value}" for value in values])
         if plain:
-            self.append_to_shared_log(remove_style(message))
+            # self.append_to_shared_log(remove_style(message))
             print(message, sep=sep, end=end, file=file, flush=flush)
             return
         color = self._color
@@ -132,6 +133,7 @@ class Context(AnyContext):
         flush: bool = True,
     ):
         if self._shared_ctx.get_logging_level() <= logging.DEBUG:
+            sep = " " if sep is None else sep
             message = sep.join([f"{value}" for value in values])
             stylized_message = stylize_log(f"[DEBUG] {message}")
             self.print(stylized_message, sep=sep, end=end, file=file, flush=flush)
@@ -145,6 +147,7 @@ class Context(AnyContext):
         flush: bool = True,
     ):
         if self._shared_ctx.get_logging_level() <= logging.INFO:
+            sep = " " if sep is None else sep
             message = sep.join([f"{value}" for value in values])
             stylized_message = stylize_log(f"[INFO] {message}")
             self.print(stylized_message, sep=sep, end=end, file=file, flush=flush)
@@ -158,6 +161,7 @@ class Context(AnyContext):
         flush: bool = True,
     ):
         if self._shared_ctx.get_logging_level() <= logging.INFO:
+            sep = " " if sep is None else sep
             message = sep.join([f"{value}" for value in values])
             stylized_message = stylize_warning(f"[WARNING] {message}")
             self.print(stylized_message, sep=sep, end=end, file=file, flush=flush)
@@ -171,6 +175,7 @@ class Context(AnyContext):
         flush: bool = True,
     ):
         if self._shared_ctx.get_logging_level() <= logging.ERROR:
+            sep = " " if sep is None else sep
             message = sep.join([f"{value}" for value in values])
             stylized_message = stylize_error(f"[ERROR] {message}")
             self.print(stylized_message, sep=sep, end=end, file=file, flush=flush)
@@ -184,6 +189,7 @@ class Context(AnyContext):
         flush: bool = True,
     ):
         if self._shared_ctx.get_logging_level() <= logging.CRITICAL:
+            sep = " " if sep is None else sep
             message = sep.join([f"{value}" for value in values])
             stylized_message = stylize_error(f"[CRITICAL] {message}")
             self.print(stylized_message, sep=sep, end=end, file=file, flush=flush)

zrb/input/any_input.py

CHANGED

zrb/input/base_input.py

CHANGED

@@ -17,6 +17,7 @@ class BaseInput(AnyInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         self._name = name
         self._description = description
@@ -25,6 +26,7 @@ class BaseInput(AnyInput):
         self._auto_render = auto_render
         self._allow_empty = allow_empty
         self._allow_positional_parsing = allow_positional_parsing
+        self._always_prompt = always_prompt
 
     def __repr__(self):
         return f"<{self.__class__.__name__} name={self._name}>"
@@ -37,6 +39,10 @@ class BaseInput(AnyInput):
     def description(self) -> str:
         return self._description if self._description is not None else self.name
 
+    @property
+    def always_prompt(self) -> bool:
+        return self._always_prompt
+
     @property
     def prompt_message(self) -> str:
         return self._prompt if self._prompt is not None else self.name

zrb/input/bool_input.py

CHANGED

@@ -15,6 +15,7 @@ class BoolInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         super().__init__(
             name=name,
@@ -24,6 +25,7 @@ class BoolInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
 
     def to_html(self, shared_ctx: AnySharedContext) -> str:

zrb/input/float_input.py

CHANGED

@@ -14,6 +14,7 @@ class FloatInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         super().__init__(
             name=name,
@@ -23,6 +24,7 @@ class FloatInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
 
     def to_html(self, shared_ctx: AnySharedContext) -> str:

zrb/input/int_input.py

CHANGED

@@ -14,6 +14,7 @@ class IntInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         super().__init__(
             name=name,
@@ -23,6 +24,7 @@ class IntInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
 
     def to_html(self, shared_ctx: AnySharedContext) -> str:

zrb/input/option_input.py

CHANGED

@@ -15,6 +15,7 @@ class OptionInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         super().__init__(
             name=name,
@@ -24,6 +25,7 @@ class OptionInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
         self._options = options
 

zrb/input/password_input.py

CHANGED

@@ -15,6 +15,7 @@ class PasswordInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
     ):
         super().__init__(
             name=name,
@@ -24,6 +25,7 @@ class PasswordInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
         self._is_secret = True
 

zrb/input/text_input.py

CHANGED

@@ -19,6 +19,7 @@ class TextInput(BaseInput):
         auto_render: bool = True,
         allow_empty: bool = False,
         allow_positional_parsing: bool = True,
+        always_prompt: bool = True,
         editor: str = DEFAULT_EDITOR,
         extension: str = ".txt",
         comment_start: str | None = None,
@@ -32,6 +33,7 @@ class TextInput(BaseInput):
             auto_render=auto_render,
             allow_empty=allow_empty,
             allow_positional_parsing=allow_positional_parsing,
+            always_prompt=always_prompt,
         )
         self._editor = editor
         self._extension = extension
@@ -69,16 +71,17 @@ class TextInput(BaseInput):
         )
 
     def _prompt_cli_str(self, shared_ctx: AnySharedContext) -> str:
-        prompt_message = (
-
+        prompt_message = super().prompt_message
+        comment_prompt_message = (
+            f"{self.comment_start}{prompt_message}{self.comment_end}"
         )
-
+        comment_prompt_message_eol = f"{comment_prompt_message}\n"
         default_value = self.get_default_str(shared_ctx)
         with tempfile.NamedTemporaryFile(
             delete=False, suffix=self._extension
         ) as temp_file:
             temp_file_name = temp_file.name
-            temp_file.write(
+            temp_file.write(comment_prompt_message_eol.encode())
             # Pre-fill with default content
             if default_value:
                 temp_file.write(default_value.encode())
@@ -87,7 +90,10 @@ class TextInput(BaseInput):
         subprocess.call([self._editor, temp_file_name])
         # Read the edited content
         edited_content = read_file(temp_file_name)
-        parts = [
+        parts = [
+            text.strip() for text in edited_content.split(comment_prompt_message, 1)
+        ]
         edited_content = "\n".join(parts).lstrip()
         os.remove(temp_file_name)
+        print(f"{prompt_message}: {edited_content}")
         return edited_content
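The reworked `_prompt_cli_str` writes the prompt as a comment header on the first line of the temporary file. The header is `comment_start`, then the prompt message, then `comment_end`. After editing, the header is removed by splitting the text once on it.

That parsing step can be exercised on its own. `strip_prompt_header` is a hypothetical extraction of those lines, and the `# ` comment marker below is only an example value.

```python
def strip_prompt_header(edited_content: str, comment_prompt_message: str) -> str:
    # Split once on the header, trim each piece, then drop leading blank space.
    parts = [
        text.strip() for text in edited_content.split(comment_prompt_message, 1)
    ]
    return "\n".join(parts).lstrip()


header = "# Describe the change"
print(strip_prompt_header(f"{header}\nAdd a login page\n", header))
# Add a login page
print(strip_prompt_header("Header deleted by the user", header))
# Header deleted by the user
```

Text typed above the header is kept. For example, `"intro\n# Describe the change\nbody"` becomes `"intro\nbody"`.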

zrb/runner/cli.py

CHANGED

@@ -33,7 +33,7 @@ class Cli(Group):
         if "h" in kwargs or "help" in kwargs:
             self._show_task_info(node)
             return
-        run_kwargs = get_run_kwargs(task=node, args=args, kwargs=kwargs,
+        run_kwargs = get_run_kwargs(task=node, args=args, kwargs=kwargs, cli_mode=True)
         try:
             result = self._run_task(node, args, run_kwargs)
             if result is not None:

zrb/runner/common_util.py

CHANGED

@@ -5,10 +5,10 @@ from zrb.task.any_task import AnyTask
 
 
 def get_run_kwargs(
-    task: AnyTask, args: list[str], kwargs: dict[str, str],
+    task: AnyTask, args: list[str], kwargs: dict[str, str], cli_mode: bool
 ) -> tuple[Any]:
     arg_index = 0
-    str_kwargs = {key: val for key, val in kwargs.items()}
+    str_kwargs = {key: f"{val}" for key, val in kwargs.items()}
     run_kwargs = {**str_kwargs}
     shared_ctx = SharedContext(args=args)
     for task_input in task.inputs:
@@ -21,7 +21,7 @@ def get_run_kwargs(
             task_input.update_shared_context(shared_ctx, run_kwargs[task_input.name])
             arg_index += 1
         else:
-            if
+            if cli_mode and task_input.always_prompt:
                 str_value = task_input.prompt_cli_str(shared_ctx)
             else:
                 str_value = task_input.get_default_str(shared_ctx)

zrb/runner/web_route/task_input_api_route.py

CHANGED

@@ -41,7 +41,7 @@ def serve_task_input_api(
                 return JSONResponse(content={"detail": "Forbidden"}, status_code=403)
             query_dict = json.loads(query)
             run_kwargs = get_run_kwargs(
-                task=task, args=[], kwargs=query_dict,
+                task=task, args=[], kwargs=query_dict, cli_mode=False
             )
             return run_kwargs
         return JSONResponse(content={"detail": "Not found"}, status_code=404)
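Every input type now takes an `always_prompt` flag, which defaults to `True`. With it, `get_run_kwargs` prompts interactively only when called from the CLI (`cli_mode=True`) and the input allows it. The web API route passes `cli_mode=False` and falls back to defaults. Keyword values are also stringified with `f"{val}"`, which matters for that route because `json.loads` can yield booleans or numbers.

The sketch below is a simplified model of that resolution order, under stated assumptions. `FakeInput`, `resolve_inputs`, and the `prompt` callback are hypothetical. The keyword-first `continue` stands in for lines that fall outside the hunks.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class FakeInput:
    # Hypothetical stand-in for a zrb input; only the fields the sketch needs.
    name: str
    default: str
    always_prompt: bool = True
    allow_positional_parsing: bool = True


def resolve_inputs(
    inputs: list[FakeInput],
    args: list[str],
    kwargs: dict[str, object],
    cli_mode: bool,
    prompt: Callable[[FakeInput], str],
) -> dict[str, str]:
    # Stringify every keyword value, e.g. JSON true -> "True".
    str_kwargs = {key: f"{val}" for key, val in kwargs.items()}
    resolved = dict(str_kwargs)
    arg_index = 0
    for task_input in inputs:
        if task_input.name in str_kwargs:
            # Assumption: explicitly supplied keyword values are kept as-is.
            continue
        if arg_index < len(args) and task_input.allow_positional_parsing:
            resolved[task_input.name] = args[arg_index]
            arg_index += 1
        elif cli_mode and task_input.always_prompt:
            # Only the CLI asks the user, and only for inputs that allow it.
            resolved[task_input.name] = prompt(task_input)
        else:
            resolved[task_input.name] = task_input.default
    return resolved


inputs = [
    FakeInput("diagram", "state diagram"),
    FakeInput("verbose", "no", always_prompt=False),
]
asked: list[str] = []


def fake_prompt(task_input: FakeInput) -> str:
    # Records which inputs were prompted and returns a canned answer.
    asked.append(task_input.name)
    return "sequence diagram"


print(resolve_inputs(inputs, [], {}, cli_mode=True, prompt=fake_prompt))
# {'diagram': 'sequence diagram', 'verbose': 'no'}
print(resolve_inputs(inputs, [], {"verbose": True}, cli_mode=False, prompt=fake_prompt))
# {'verbose': 'True', 'diagram': 'state diagram'}
```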

zrb/task/llm_task.py

CHANGED

@@ -4,10 +4,20 @@ from collections.abc import Callable
 from typing import Any
 
 from pydantic_ai import Agent, Tool
-from pydantic_ai.messages import
+from pydantic_ai.messages import (
+    FinalResultEvent,
+    FunctionToolCallEvent,
+    FunctionToolResultEvent,
+    ModelMessagesTypeAdapter,
+    PartDeltaEvent,
+    PartStartEvent,
+    TextPartDelta,
+    ToolCallPartDelta,
+)
+from pydantic_ai.models import Model
 from pydantic_ai.settings import ModelSettings
 
-from zrb.attr.type import StrAttr
+from zrb.attr.type import StrAttr, fstring
 from zrb.config import LLM_MODEL, LLM_SYSTEM_PROMPT
 from zrb.context.any_context import AnyContext
 from zrb.context.any_shared_context import AnySharedContext
@@ -15,7 +25,7 @@ from zrb.env.any_env import AnyEnv
 from zrb.input.any_input import AnyInput
 from zrb.task.any_task import AnyTask
 from zrb.task.base_task import BaseTask
-from zrb.util.attr import get_str_attr
+from zrb.util.attr import get_attr, get_str_attr
 from zrb.util.cli.style import stylize_faint
 from zrb.util.file import read_file, write_file
 from zrb.util.run import run_async
@@ -34,7 +44,9 @@ class LLMTask(BaseTask):
         cli_only: bool = False,
         input: list[AnyInput | None] | AnyInput | None = None,
         env: list[AnyEnv | None] | AnyEnv | None = None,
-        model:
+        model: (
+            Callable[[AnySharedContext], Model | str | fstring] | Model | None
+        ) = LLM_MODEL,
         model_settings: (
             ModelSettings | Callable[[AnySharedContext], ModelSettings] | None
         ) = None,
@@ -93,7 +105,7 @@ class LLMTask(BaseTask):
             successor=successor,
         )
         self._model = model
-        self._model_settings =
+        self._model_settings = model_settings
         self._agent = agent
         self._render_model = render_model
         self._system_prompt = system_prompt
@@ -108,6 +120,9 @@ class LLMTask(BaseTask):
         self._render_history_file = render_history_file
         self._max_call_iteration = max_call_iteration
 
+    def set_model(self, model: Model | str):
+        self._model = model
+
     def add_tool(self, tool: ToolOrCallable):
         self._additional_tools.append(tool)
 
@@ -115,15 +130,85 @@ class LLMTask(BaseTask):
         history = await self._read_conversation_history(ctx)
         user_prompt = self._get_message(ctx)
         agent = self._get_agent(ctx)
-
+        async with agent.iter(
             user_prompt=user_prompt,
             message_history=ModelMessagesTypeAdapter.validate_python(history),
-        )
-
-
-
+        ) as agent_run:
+            async for node in agent_run:
+                # Each node represents a step in the agent's execution
+                await self._print_node(ctx, agent_run, node)
+        new_history = json.loads(agent_run.result.all_messages_json())
         await self._write_conversation_history(ctx, new_history)
-        return result.data
+        return agent_run.result.data
+
+    async def _print_node(self, ctx: AnyContext, agent_run: Any, node: Any):
+        if Agent.is_user_prompt_node(node):
+            # A user prompt node => The user has provided input
+            ctx.print(stylize_faint(f">> UserPromptNode: {node.user_prompt}"))
+        elif Agent.is_model_request_node(node):
+            # A model request node => We can stream tokens from the model"s request
+            ctx.print(
+                stylize_faint(">> ModelRequestNode: streaming partial request tokens")
+            )
+            async with node.stream(agent_run.ctx) as request_stream:
+                is_streaming = False
+                async for event in request_stream:
+                    if isinstance(event, PartStartEvent):
+                        if is_streaming:
+                            ctx.print("", plain=True)
+                        ctx.print(
+                            stylize_faint(
+                                f"[Request] Starting part {event.index}: {event.part!r}"
+                            ),
+                        )
+                        is_streaming = False
+                    elif isinstance(event, PartDeltaEvent):
+                        if isinstance(event.delta, TextPartDelta):
+                            ctx.print(
+                                stylize_faint(f"{event.delta.content_delta}"),
+                                end="",
+                                plain=is_streaming,
+                            )
+                        elif isinstance(event.delta, ToolCallPartDelta):
+                            ctx.print(
+                                stylize_faint(f"{event.delta.args_delta}"),
+                                end="",
+                                plain=is_streaming,
+                            )
+                        is_streaming = True
+                    elif isinstance(event, FinalResultEvent):
+                        if is_streaming:
+                            ctx.print("", plain=True)
+                        ctx.print(
+                            stylize_faint(f"[Result] tool_name={event.tool_name}"),
+                        )
+                        is_streaming = False
+                if is_streaming:
+                    ctx.print("", plain=True)
+        elif Agent.is_call_tools_node(node):
+            # A handle-response node => The model returned some data, potentially calls a tool
+            ctx.print(
+                stylize_faint(
+                    ">> CallToolsNode: streaming partial response & tool usage"
+                )
+            )
+            async with node.stream(agent_run.ctx) as handle_stream:
+                async for event in handle_stream:
+                    if isinstance(event, FunctionToolCallEvent):
+                        ctx.print(
+                            stylize_faint(
+                                f"[Tools] The LLM calls tool={event.part.tool_name!r} with args={event.part.args} (tool_call_id={event.part.tool_call_id!r})" # noqa
+                            )
+                        )
+                    elif isinstance(event, FunctionToolResultEvent):
+                        ctx.print(
+                            stylize_faint(
+                                f"[Tools] Tool call {event.tool_call_id!r} returned => {event.result.content}" # noqa
+                            )
+                        )
+        elif Agent.is_end_node(node):
+            # Once an End node is reached, the agent run is complete
+            ctx.print(stylize_faint(f"{agent_run.result.data}"))
 
     async def _write_conversation_history(
         self, ctx: AnyContext, conversations: list[Any]
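The new `_print_node` streams text and tool-argument deltas with `end=""`. It uses `is_streaming` to remember whether the cursor is sitting mid-line, and issues an empty plain `ctx.print` to end that line before the next labelled message.

The sketch below replays that bookkeeping over a fake async event stream. `PartStart`, `TextDelta`, `fake_stream`, and `render` are hypothetical stand-ins for `pydantic_ai`'s event classes and `node.stream(...)`. The output is collected in a string instead of printed, so it can be checked.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class PartStart:
    # Hypothetical stand-in for pydantic_ai's PartStartEvent.
    index: int


@dataclass
class TextDelta:
    # Hypothetical stand-in for a PartDeltaEvent whose delta is a TextPartDelta.
    content_delta: str


async def fake_stream():
    # Replaces node.stream(agent_run.ctx) with a fixed sequence of events.
    for event in [PartStart(0), TextDelta("Hel"), TextDelta("lo"), PartStart(1)]:
        yield event


async def render(stream) -> str:
    out: list[str] = []
    is_streaming = False  # True while the cursor sits after partial tokens
    async for event in stream:
        if isinstance(event, PartStart):
            if is_streaming:
                out.append("\n")  # finish the line of streamed tokens first
            out.append(f"[Request] Starting part {event.index}\n")
            is_streaming = False
        elif isinstance(event, TextDelta):
            out.append(event.content_delta)  # like ctx.print(..., end="")
            is_streaming = True
    if is_streaming:
        out.append("\n")  # close a trailing partial line
    return "".join(out)


result = asyncio.run(render(fake_stream()))
print(result, end="")
```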

@@ -135,11 +220,9 @@ class LLMTask(BaseTask):
         write_file(history_file, json.dumps(conversations, indent=2))
 
     def _get_model_settings(self, ctx: AnyContext) -> ModelSettings | None:
-        if isinstance(self._model_settings, ModelSettings):
-            return self._model_settings
         if callable(self._model_settings):
             return self._model_settings(ctx)
-        return
+        return self._model_settings
 
     def _get_agent(self, ctx: AnyContext) -> Agent:
         if isinstance(self._agent, Agent):
@@ -158,12 +241,16 @@ class LLMTask(BaseTask):
             self._get_model(ctx),
             system_prompt=self._get_system_prompt(ctx),
             tools=tools,
+            model_settings=self._get_model_settings(ctx),
         )
 
-    def _get_model(self, ctx: AnyContext) -> str:
-
+    def _get_model(self, ctx: AnyContext) -> str | Model | None:
+        model = get_attr(
             ctx, self._model, "ollama_chat/llama3.1", auto_render=self._render_model
         )
+        if isinstance(model, (Model, str)) or model is None:
+            return model
+        raise ValueError("Invalid model")
 
     def _get_system_prompt(self, ctx: AnyContext) -> str:
         return get_str_attr(

{zrb-1.2.1.dist-info → zrb-1.3.0.dist-info}/METADATA

CHANGED

@@ -1,6 +1,6 @@
 Metadata-Version: 2.1
 Name: zrb
-Version: 1.
+Version: 1.3.0
 Summary: Your Automation Powerhouse
 Home-page: https://github.com/state-alchemists/zrb
 License: AGPL-3.0-or-later
@@ -24,7 +24,7 @@ Requires-Dist: isort (>=5.13.2,<5.14.0)
 Requires-Dist: libcst (>=1.5.0,<2.0.0)
 Requires-Dist: pdfplumber (>=0.11.4,<0.12.0) ; extra == "rag"
 Requires-Dist: psutil (>=6.1.1,<7.0.0)
-Requires-Dist: pydantic-ai (>=0.0.
+Requires-Dist: pydantic-ai (>=0.0.31,<0.0.32)
 Requires-Dist: python-dotenv (>=1.0.1,<2.0.0)
 Requires-Dist: python-jose[cryptography] (>=3.4.0,<4.0.0)
 Requires-Dist: requests (>=2.32.3,<3.0.0)

@@ -39,29 +39,96 @@ Description-Content-Type: text/markdown

|

|

|

39

39

|

|

|

40

40

|

# 🤖 Zrb: Your Automation Powerhouse

|

|

41

41

|

|

|

42

|

-

Zrb allows you to write your automation tasks in Python

|

|

42

|

+

Zrb allows you to write your automation tasks in Python. For example, you can define the following script in your home directory (`/home/<your-user-name>/zrb_init.py`).

|

|

43

43

|

|

|

44

44

|

|

|

45

45

|

```python

|

|

46

|

-

|

|

47

|

-

from zrb import cli,

|

|

48

|

-

|

|

49

|

-

|

|

50

|

-

|

|

51

|

-

|

|

52

|

-

|

|

53

|

-

|

|

54

|

-

|

|

55

|

-

|

|

56

|

-

|

|

57

|

-

)

|

|

46

|

+

import os

|

|

47

|

+

from zrb import cli, LLMTask, CmdTask, StrInput

|

|

48

|

+

from zrb.builtin.llm.tool.file import read_source_code, write_text_file

|

|

49

|

+

from pydantic_ai.models.openai import OpenAIModel

|

|

50

|

+

|

|

51

|

+

|

|

52

|

+

CURRENT_DIR = os.getcwd()

|

|

53

|

+

OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"

|

|

54

|

+

OPENROUTER_API_KEY = os.getenv("OPENROUTER_API_KEY", "")

|

|

55

|

+

OPENROUTER_MODEL_NAME = os.getenv(

|

|

56

|

+

"AGENT_MODEL_NAME", "anthropic/claude-3.7-sonnet"

|

|

57

|

+

)

|

|

58

|

+

|

|

59

|

+

|

|

60

|

+

# Defining a LLM Task to create a Plantuml script based on source code in current directory.

|

|

61

|

+

# User can choose the diagram type. By default it is "state diagram"

|

|

62

|

+

make_uml = cli.add_task(

|

|

63

|

+

LLMTask(

|

|

64

|

+

name="make-uml",

|

|

65

|

+

description="Creating plantuml diagram based on source code in current directory",

|

|

66

|

+

input=StrInput(name="diagram", default="state diagram"),

|

|

67

|

+

model=OpenAIModel(

|

|

68

|

+

OPENROUTER_MODEL_NAME,

|

|

69

|

+

base_url=OPENROUTER_BASE_URL,

|

|

70

|

+

api_key=OPENROUTER_API_KEY,

|

|

71

|

+

),

|

|

72

|

+

message=(

|

|

73

|

+

f"Read source code in {CURRENT_DIR}, "

|

|

74

|

+

"make a {ctx.input.diagram} in plantuml format. "

|

|

75

|

+

f"Write the script into {CURRENT_DIR}/{{ctx.input.diagram}}.uml"

|

|

76

|

+

),

|

|

77

|

+

tools=[

|

|

78

|

+

read_source_code,

|

|

79

|

+

write_text_file,

|

|

80

|

+

],

|

|

81

|

+

)

|

|

82

|

+

)

|

|

83

|

+

|

|

84

|

+

# Defining a Cmd Task to transform Plantuml script into a png image.

|

|

85

|

+

make_png = cli.add_task(

|

|

86

|

+

CmdTask(

|

|

87

|

+

name="make-png",

|

|

88

|

+

description="Creating png based on source code in current directory",

|

|

89

|

+

input=StrInput(name="diagram", default="state diagram"),

|

|

90

|

+

cmd="plantuml -tpng '{ctx.input.diagram}.uml'",

|

|

91

|

+

cwd=CURRENT_DIR,

|

|

92

|

+

)

|

|

93

|

+

)

|

|

94

|

+

|

|

95

|

+

# Making sure that make_png has make_uml as its dependency.

|

|

96

|

+

make_uml >> make_png

|

|

58

97

|

```

|

|

59

98

|

|

|

60

|

-

Once defined,

|

|

99

|

+

Once defined, your automation tasks are immediately accessible from the CLI. You can then invoke the tasks by invoking.

|

|

61

100

|

|

|

62

|

-

|

|

101

|

+

```bash

|

|

102

|

+

zrb make-png --diagram "state diagram"

|

|

103

|

+

```

|

|

104

|

+

|

|

105

|

+

Or you can invoke the tasks without parameter.

|

|

106

|

+

|

|

107

|

+

```bash

|

|

108

|

+

zrb make-png

|

|

109

|

+

```

|

|

110

|

+

|

|

111

|

+

At this point, Zrb will politely ask you to provide the diagram type.

|

|

112

|

+

|

|

113

|

+

```

|

|

114

|

+

diagram [state diagram]:

|

|

115

|

+

```

|

|

116

|

+

|

|

117

|

+

You can just press enter if you want to use the default value.

|

|

118

|

+

|

|

119

|

+

Finally, you can run Zrb as a server and make your tasks available for non technical users by invoking the following command.

|

|

120

|

+

|

|

121

|

+

```bash

|

|

122

|

+

zrb server start

|

|

123

|

+

```

|

|

124

|

+

|

|

125

|

+

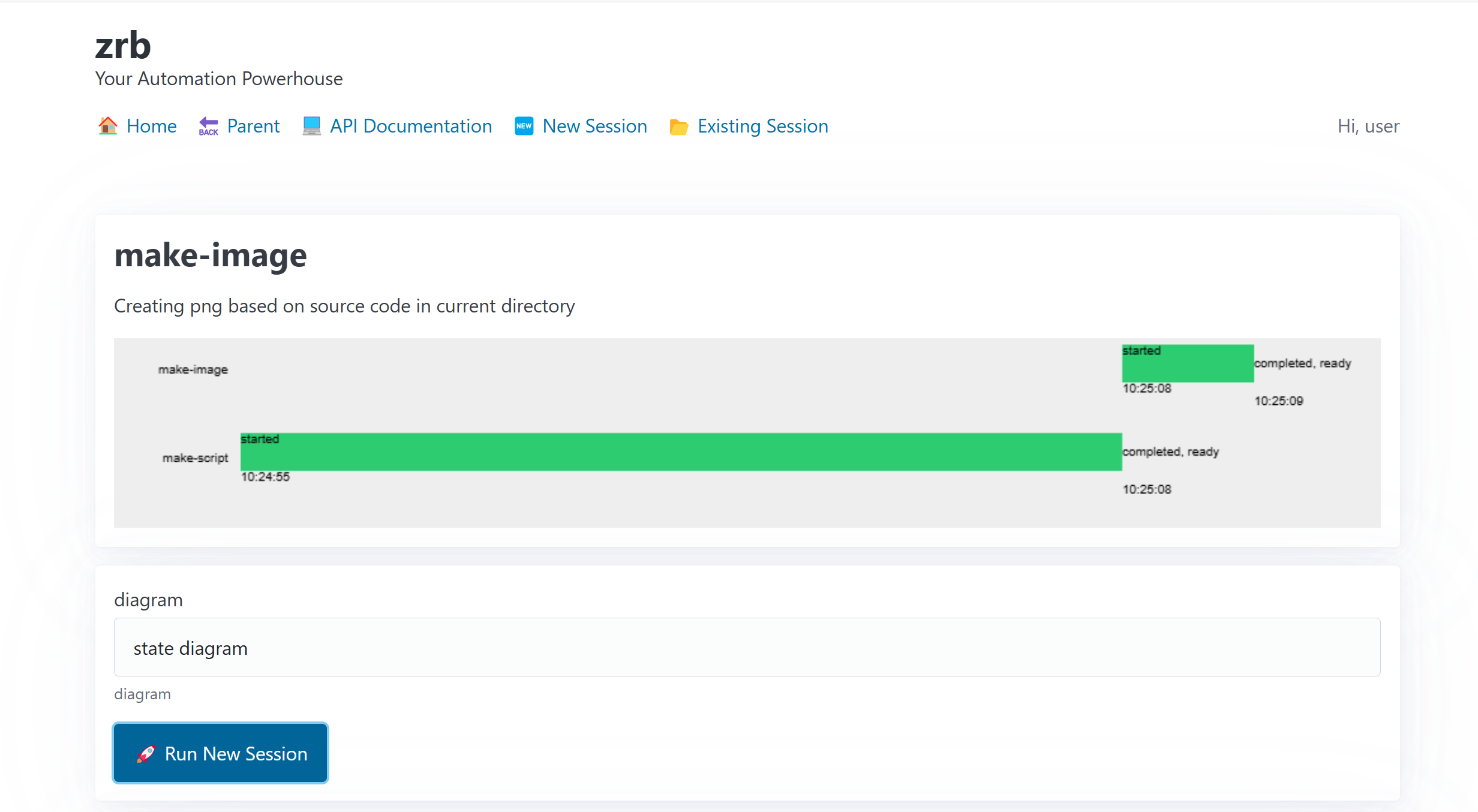

You will have a nice web interface running on `http://localhost:12123`

|

|

126

|

+

|

|

127

|

+

|

|

128

|

+

|

|

129

|

+

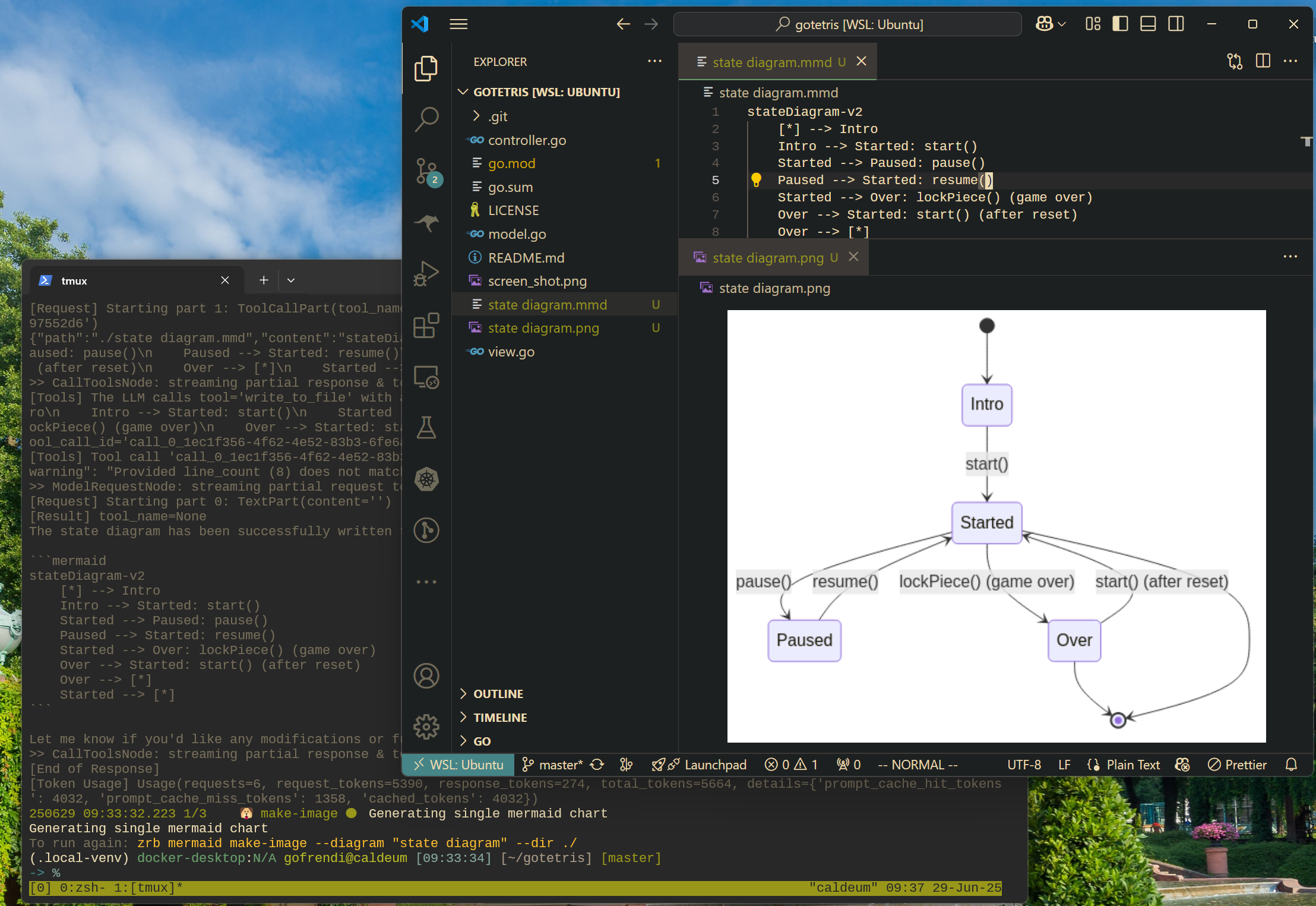

Now, let's see how Zrb generate the state diagram. Based on the source code in your current directory, Zrb will generate a `state diagram.uml` and transform it into `state diagram.png`.

|

|

63

130

|

|

|

64

|

-

|

|

131

|

+

|

|

65

132

|

|

|

66

133

|

See the [getting started guide](https://github.com/state-alchemists/zrb/blob/main/docs/recipes/getting-started/README.md) for more information. Or just watch the demo:

|

|

67

134

|

|