runnable 0.12.2__py3-none-any.whl → 0.12.3__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of runnable might be problematic.

- runnable/sdk.py +150 -101

- runnable-0.12.3.dist-info/METADATA +270 -0

- {runnable-0.12.2.dist-info → runnable-0.12.3.dist-info}/RECORD +6 -6

- runnable-0.12.2.dist-info/METADATA +0 -453

- {runnable-0.12.2.dist-info → runnable-0.12.3.dist-info}/LICENSE +0 -0

- {runnable-0.12.2.dist-info → runnable-0.12.3.dist-info}/WHEEL +0 -0

- {runnable-0.12.2.dist-info → runnable-0.12.3.dist-info}/entry_points.txt +0 -0

runnable/sdk.py

CHANGED

|

@@ -61,11 +61,9 @@ class Catalog(BaseModel):

|

|

|

61

61

|

put (List[str]): List of glob patterns to put into central catalog from the compute data folder.

|

|

62

62

|

|

|

63

63

|

Examples:

|

|

64

|

-

>>> from runnable import Catalog

|

|

64

|

+

>>> from runnable import Catalog

|

|

65

65

|

>>> catalog = Catalog(compute_data_folder="/path/to/data", get=["*.csv"], put=["*.csv"])

|

|

66

66

|

|

|

67

|

-

>>> task = Task(name="task", catalog=catalog, command="echo 'hello'")

|

|

68

|

-

|

|

69

67

|

"""

|

|

70

68

|

|

|

71

69

|

model_config = ConfigDict(extra="forbid") # Need to be for command, would be validated later

|

|
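The `get` and `put` attributes of `Catalog` are described above as lists of glob patterns. As a rough illustration of what glob matching over file names means, here is a minimal sketch in plain Python. `select_for_catalog` and the file names are hypothetical and exist only for this example; the sketch does not show how runnable itself resolves patterns.

```python
from fnmatch import fnmatch
from typing import List


def select_for_catalog(file_names: List[str], patterns: List[str]) -> List[str]:
    """Hypothetical helper: keep the file names matching at least one glob pattern."""
    return [
        name
        for name in file_names
        if any(fnmatch(name, pattern) for pattern in patterns)
    ]


# Assumed contents of a compute data folder, for illustration only.
files_in_data_folder = ["train.csv", "test.csv", "notes.txt", "plot.png"]
to_put = select_for_catalog(files_in_data_folder, ["*.csv"])
```

With the pattern `"*.csv"`, only the two CSV files are selected and the other files are left out.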

@@ -143,50 +141,7 @@ class BaseTraversal(ABC, BaseModel):

|

|

|

143

141

|

|

|

144

142

|

class BaseTask(BaseTraversal):

|

|

145

143

|

"""

|

|

146

|

-

|

|

147

|

-

Please refer to [concepts](concepts/task.md) for more information.

|

|

148

|

-

|

|

149

|

-

Attributes:

|

|

150

|

-

name (str): The name of the node.

|

|

151

|

-

command (str): The command to execute.

|

|

152

|

-

|

|

153

|

-

- For python functions, [dotted path](concepts/task.md/#python_functions) to the function.

|

|

154

|

-

- For shell commands: command to execute in the shell.

|

|

155

|

-

- For notebooks: path to the notebook.

|

|

156

|

-

command_type (str): The type of command to execute.

|

|

157

|

-

Can be one of "shell", "python", or "notebook".

|

|

158

|

-

catalog (Optional[Catalog]): The catalog to sync data from/to.

|

|

159

|

-

Please see Catalog about the structure of the catalog.

|

|

160

|

-

overrides (Dict[str, Any]): Any overrides to the command.

|

|

161

|

-

Individual tasks can override the global configuration config by referring to the

|

|

162

|

-

specific override.

|

|

163

|

-

|

|

164

|

-

For example,

|

|

165

|

-

### Global configuration

|

|

166

|

-

```yaml

|

|

167

|

-

executor:

|

|

168

|

-

type: local-container

|

|

169

|

-

config:

|

|

170

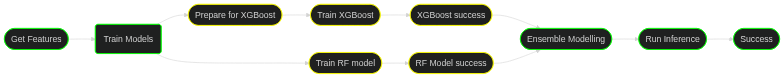

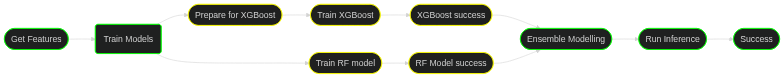

|

-

docker_image: "runnable/runnable:latest"

|

|

171

|

-

overrides:

|

|

172

|

-

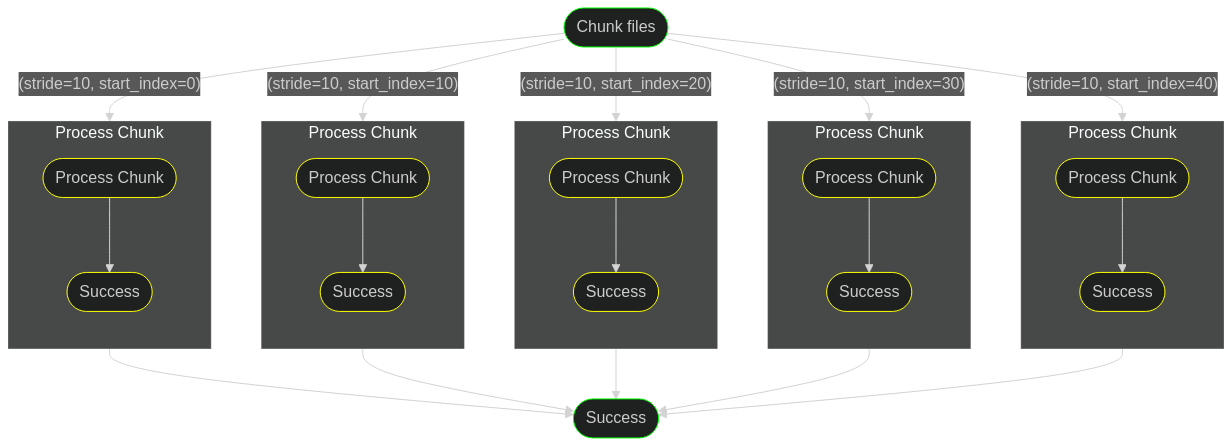

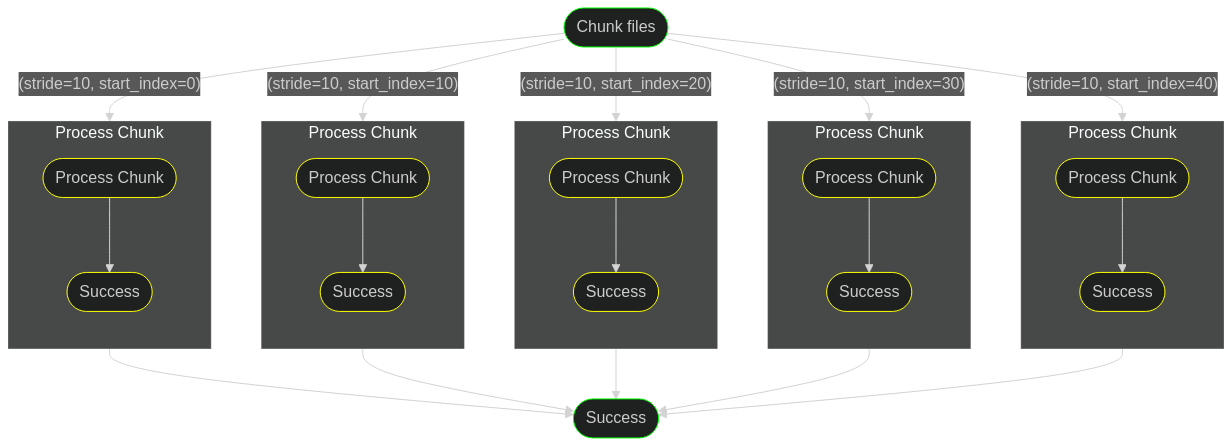

custom_docker_image:

|

|

173

|

-

docker_image: "runnable/runnable:custom"

|

|

174

|

-

```

|

|

175

|

-

### Task specific configuration

|

|

176

|

-

```python

|

|

177

|

-

task = Task(name="task", command="echo 'hello'", command_type="shell",

|

|

178

|

-

overrides={'local-container': custom_docker_image})

|

|

179

|

-

```

|

|

180

|

-

notebook_output_path (Optional[str]): The path to save the notebook output.

|

|

181

|

-

Only used when command_type is 'notebook', defaults to command+_out.ipynb

|

|

182

|

-

optional_ploomber_args (Optional[Dict[str, Any]]): Any optional ploomber args.

|

|

183

|

-

Only used when command_type is 'notebook', defaults to {}

|

|

184

|

-

output_cell_tag (Optional[str]): The tag of the output cell.

|

|

185

|

-

Only used when command_type is 'notebook', defaults to "runnable_output"

|

|

186

|

-

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

187

|

-

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

188

|

-

on_failure (str): The name of the node to execute if the step fails.

|

|

189

|

-

|

|

144

|

+

Base task type which has catalog, overrides, returns and secrets.

|

|

190

145

|

"""

|

|

191

146

|

|

|

192

147

|

catalog: Optional[Catalog] = Field(default=None, alias="catalog")

|

|

@@ -220,12 +175,50 @@ class BaseTask(BaseTraversal):

|

|

|

220

175

|

class PythonTask(BaseTask):

|

|

221

176

|

"""

|

|

222

177

|

An execution node of the pipeline of python functions.

|

|

178

|

+

Please refer to [concepts](concepts/task.md/#python_functions) for more information.

|

|

223

179

|

|

|

224

180

|

Attributes:

|

|

225

181

|

name (str): The name of the node.

|

|

226

182

|

function (callable): The function to execute.

|

|

227

|

-

|

|

228

|

-

|

|

183

|

+

|

|

184

|

+

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

185

|

+

Defaults to False.

|

|

186

|

+

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

187

|

+

Defaults to False.

|

|

188

|

+

|

|

189

|

+

on_failure (str): The name of the node to execute if the step fails.

|

|

190

|

+

|

|

191

|

+

returns List[Union[str, TaskReturns]] : A list of the names of variables to return from the task.

|

|

192

|

+

The names should match the order of the variables returned by the function.

|

|

193

|

+

|

|

194

|

+

```TaskReturns```: can be JSON friendly variables, objects or metrics.

|

|

195

|

+

|

|

196

|

+

By default, all variables are assumed to be JSON friendly and will be serialized to JSON.

|

|

197

|

+

Pydantic models are readily supported and will be serialized to JSON.

|

|

198

|

+

|

|

199

|

+

To return a python object, please use ```pickled(<name>)```.

|

|

200

|

+

It is advised to use ```pickled(<name>)``` for big JSON friendly variables.

|

|

201

|

+

|

|

202

|

+

For example,

|

|

203

|

+

```python

|

|

204

|

+

from runnable import pickled

|

|

205

|

+

|

|

206

|

+

def f():

|

|

207

|

+

...

|

|

208

|

+

x = 1

|

|

209

|

+

return x, df # A simple JSON friendly variable and a python object.

|

|

210

|

+

|

|

211

|

+

task = PythonTask(name="task", function=f, returns=["x", pickled(df)]))

|

|

212

|

+

```

|

|

213

|

+

|

|

214

|

+

To mark any JSON friendly variable as a ```metric```, please use ```metric(x)```.

|

|

215

|

+

Metric variables should be JSON friendly and can be treated just like any other parameter.

|

|

216

|

+

|

|

217

|

+

catalog Optional[Catalog]: The files sync data from/to, refer to Catalog.

|

|

218

|

+

|

|

219

|

+

secrets List[str]: List of secrets to pass to the task. They are exposed as environment variables

|

|

220

|

+

and removed after execution.

|

|

221

|

+

|

|

229

222

|

overrides (Dict[str, Any]): Any overrides to the command.

|

|

230

223

|

Individual tasks can override the global configuration config by referring to the

|

|

231

224

|

specific override.

|

|
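The `returns` entry in the docstring above says the declared names should follow the order of the values the function returns. The idea of pairing names with values by position can be sketched in plain Python as below. `bind_returns` is a hypothetical helper written for this example; it is not part of runnable and makes no claim about how runnable performs the mapping internally.

```python
from typing import Any, Callable, Dict, List


def bind_returns(function: Callable[[], Any], names: List[str]) -> Dict[str, Any]:
    """Hypothetical helper: pair declared names with returned values by position."""
    result = function()
    # A single declared name means the whole return value is bound to it.
    values = (result,) if len(names) == 1 else tuple(result)
    if len(values) != len(names):
        raise ValueError(f"expected {len(names)} return values, got {len(values)}")
    return dict(zip(names, values))


def f():
    x = 1
    df = {"column": [1, 2, 3]}  # stand-in for a richer python object
    return x, df


bound = bind_returns(f, ["x", "df"])
```

Swapping the names to `["df", "x"]` would silently bind the wrong values, which is presumably why the docstring stresses the ordering.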

@@ -246,11 +239,6 @@ class PythonTask(BaseTask):

|

|

|

246

239

|

task = PythonTask(name="task", function="function'",

|

|

247

240

|

overrides={'local-container': custom_docker_image})

|

|

248

241

|

```

|

|

249

|

-

|

|

250

|

-

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

251

|

-

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

252

|

-

on_failure (str): The name of the node to execute if the step fails.

|

|

253

|

-

|

|

254

242

|

"""

|

|

255

243

|

|

|

256

244

|

function: Callable = Field(exclude=True)

|

|

@@ -269,15 +257,52 @@ class PythonTask(BaseTask):

|

|

|

269

257

|

|

|

270

258

|

class NotebookTask(BaseTask):

|

|

271

259

|

"""

|

|

272

|

-

An execution node of the pipeline of

|

|

273

|

-

Please refer to [concepts](concepts/task.md) for more information.

|

|

260

|

+

An execution node of the pipeline of notebook.

|

|

261

|

+

Please refer to [concepts](concepts/task.md/#notebooks) for more information.

|

|

262

|

+

|

|

263

|

+

We internally use [Ploomber engine](https://github.com/ploomber/ploomber-engine) to execute the notebook.

|

|

274

264

|

|

|

275

265

|

Attributes:

|

|

276

266

|

name (str): The name of the node.

|

|

277

|

-

notebook: The path to the notebook

|

|

278

|

-

|

|

279

|

-

|

|

280

|

-

|

|

267

|

+

notebook (str): The path to the notebook relative the project root.

|

|

268

|

+

optional_ploomber_args (Dict[str, Any]): Any optional ploomber args, please refer to

|

|

269

|

+

[Ploomber engine](https://github.com/ploomber/ploomber-engine) for more information.

|

|

270

|

+

|

|

271

|

+

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

272

|

+

Defaults to False.

|

|

273

|

+

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

274

|

+

Defaults to False.

|

|

275

|

+

|

|

276

|

+

on_failure (str): The name of the node to execute if the step fails.

|

|

277

|

+

|

|

278

|

+

returns List[Union[str, TaskReturns]] : A list of the names of variables to return from the task.

|

|

279

|

+

The names should match the order of the variables returned by the function.

|

|

280

|

+

|

|

281

|

+

```TaskReturns```: can be JSON friendly variables, objects or metrics.

|

|

282

|

+

|

|

283

|

+

By default, all variables are assumed to be JSON friendly and will be serialized to JSON.

|

|

284

|

+

Pydantic models are readily supported and will be serialized to JSON.

|

|

285

|

+

|

|

286

|

+

To return a python object, please use ```pickled(<name>)```.

|

|

287

|

+

It is advised to use ```pickled(<name>)``` for big JSON friendly variables.

|

|

288

|

+

|

|

289

|

+

For example,

|

|

290

|

+

```python

|

|

291

|

+

from runnable import pickled

|

|

292

|

+

|

|

293

|

+

# assume, example.ipynb is the notebook with df and x as variables in some cells.

|

|

294

|

+

|

|

295

|

+

task = Notebook(name="task", notebook="example.ipynb", returns=["x", pickled(df)]))

|

|

296

|

+

```

|

|

297

|

+

|

|

298

|

+

To mark any JSON friendly variable as a ```metric```, please use ```metric(x)```.

|

|

299

|

+

Metric variables should be JSON friendly and can be treated just like any other parameter.

|

|

300

|

+

|

|

301

|

+

catalog Optional[Catalog]: The files sync data from/to, refer to Catalog.

|

|

302

|

+

|

|

303

|

+

secrets List[str]: List of secrets to pass to the task. They are exposed as environment variables

|

|

304

|

+

and removed after execution.

|

|

305

|

+

|

|

281

306

|

overrides (Dict[str, Any]): Any overrides to the command.

|

|

282

307

|

Individual tasks can override the global configuration config by referring to the

|

|

283

308

|

specific override.

|

|

@@ -295,18 +320,9 @@ class NotebookTask(BaseTask):

|

|

|

295

320

|

```

|

|

296

321

|

### Task specific configuration

|

|

297

322

|

```python

|

|

298

|

-

task = NotebookTask(name="task", notebook="

|

|

323

|

+

task = NotebookTask(name="task", notebook="example.ipynb",

|

|

299

324

|

overrides={'local-container': custom_docker_image})

|

|

300

325

|

```

|

|

301

|

-

notebook_output_path (Optional[str]): The path to save the notebook output.

|

|

302

|

-

Only used when command_type is 'notebook', defaults to command+_out.ipynb

|

|

303

|

-

optional_ploomber_args (Optional[Dict[str, Any]]): Any optional ploomber args.

|

|

304

|

-

Only used when command_type is 'notebook', defaults to {}

|

|

305

|

-

|

|

306

|

-

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

307

|

-

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

308

|

-

on_failure (str): The name of the node to execute if the step fails.

|

|

309

|

-

|

|

310

326

|

"""

|

|

311

327

|

|

|

312

328

|

notebook: str = Field(serialization_alias="command")

|

|

@@ -319,15 +335,33 @@ class NotebookTask(BaseTask):

|

|

|

319

335

|

|

|

320

336

|

class ShellTask(BaseTask):

|

|

321

337

|

"""

|

|

322

|

-

An execution node of the pipeline of

|

|

323

|

-

Please refer to [concepts](concepts/task.md) for more information.

|

|

338

|

+

An execution node of the pipeline of shell script.

|

|

339

|

+

Please refer to [concepts](concepts/task.md/#shell) for more information.

|

|

340

|

+

|

|

324

341

|

|

|

325

342

|

Attributes:

|

|

326

343

|

name (str): The name of the node.

|

|

327

|

-

command: The

|

|

328

|

-

|

|

329

|

-

|

|

330

|

-

|

|

344

|

+

command (str): The path to the notebook relative the project root.

|

|

345

|

+

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

346

|

+

Defaults to False.

|

|

347

|

+

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

348

|

+

Defaults to False.

|

|

349

|

+

|

|

350

|

+

on_failure (str): The name of the node to execute if the step fails.

|

|

351

|

+

|

|

352

|

+

returns List[str] : A list of the names of environment variables to collect from the task.

|

|

353

|

+

|

|

354

|

+

The names should match the order of the variables returned by the function.

|

|

355

|

+

Shell based tasks can only return JSON friendly variables.

|

|

356

|

+

|

|

357

|

+

To mark any JSON friendly variable as a ```metric```, please use ```metric(x)```.

|

|

358

|

+

Metric variables should be JSON friendly and can be treated just like any other parameter.

|

|

359

|

+

|

|

360

|

+

catalog Optional[Catalog]: The files sync data from/to, refer to Catalog.

|

|

361

|

+

|

|

362

|

+

secrets List[str]: List of secrets to pass to the task. They are exposed as environment variables

|

|

363

|

+

and removed after execution.

|

|

364

|

+

|

|

331

365

|

overrides (Dict[str, Any]): Any overrides to the command.

|

|

332

366

|

Individual tasks can override the global configuration config by referring to the

|

|

333

367

|

specific override.

|

|
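The new docstrings say that secrets are exposed as environment variables and removed after execution. One way to picture that lifecycle is a context manager, sketched below with the standard library only. `exposed_secrets` and the variable name `RUNNABLE_SKETCH_SECRET` are assumptions made for this example, not runnable APIs, and restoring a previously set value is a choice of this sketch rather than documented behavior.

```python
import os
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def exposed_secrets(secrets: Dict[str, str]) -> Iterator[None]:
    """Hypothetical helper: expose secrets as environment variables, then remove them."""
    previous = {name: os.environ.get(name) for name in secrets}
    os.environ.update(secrets)
    try:
        yield
    finally:
        for name, old_value in previous.items():
            if old_value is None:
                os.environ.pop(name, None)  # the variable did not exist before
            else:
                os.environ[name] = old_value  # restore a pre-existing value


with exposed_secrets({"RUNNABLE_SKETCH_SECRET": "not-a-real-key"}):
    seen_inside = os.environ.get("RUNNABLE_SKETCH_SECRET")

seen_after = os.environ.get("RUNNABLE_SKETCH_SECRET")
```

The `finally` clause means the cleanup also runs when the body raises, so a failing task would not leave the secret behind in this sketch.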

@@ -345,14 +379,10 @@ class ShellTask(BaseTask):

|

|

|

345

379

|

```

|

|

346

380

|

### Task specific configuration

|

|

347

381

|

```python

|

|

348

|

-

task = ShellTask(name="task", command="

|

|

382

|

+

task = ShellTask(name="task", command="export x=1",

|

|

349

383

|

overrides={'local-container': custom_docker_image})

|

|

350

384

|

```

|

|

351

385

|

|

|

352

|

-

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

353

|

-

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

354

|

-

on_failure (str): The name of the node to execute if the step fails.

|

|

355

|

-

|

|

356

386

|

"""

|

|

357

387

|

|

|

358

388

|

command: str = Field(alias="command")

|

|

@@ -364,16 +394,20 @@ class ShellTask(BaseTask):

|

|

|

364

394

|

|

|

365

395

|

class Stub(BaseTraversal):

|

|

366

396

|

"""

|

|

367

|

-

A node that

|

|

397

|

+

A node that passes through the pipeline with no action. Just like ```pass``` in Python.

|

|

398

|

+

Please refer to [concepts](concepts/task.md/#stub) for more information.

|

|

368

399

|

|

|

369

400

|

A stub node can tak arbitrary number of arguments.

|

|

370

|

-

Please refer to [concepts](concepts/stub.md) for more information.

|

|

371

401

|

|

|

372

402

|

Attributes:

|

|

373

403

|

name (str): The name of the node.

|

|

374

|

-

|

|

404

|

+

command (str): The path to the notebook relative the project root.

|

|

375

405

|

terminate_with_success (bool): Whether to terminate the pipeline with a success after this node.

|

|

406

|

+

Defaults to False.

|

|

407

|

+

terminate_with_failure (bool): Whether to terminate the pipeline with a failure after this node.

|

|

408

|

+

Defaults to False.

|

|

376

409

|

|

|

410

|

+

on_failure (str): The name of the node to execute if the step fails.

|

|

377

411

|

"""

|

|

378

412

|

|

|

379

413

|

model_config = ConfigDict(extra="ignore")

|

|

@@ -422,12 +456,13 @@ class Map(BaseTraversal):

|

|

|

422

456

|

Please refer to [concepts](concepts/map.md) for more information.

|

|

423

457

|

|

|

424

458

|

Attributes:

|

|

425

|

-

branch: The pipeline to execute for each item.

|

|

459

|

+

branch (Pipeline): The pipeline to execute for each item.

|

|

426

460

|

|

|

427

|

-

iterate_on: The name of the parameter to iterate over.

|

|

461

|

+

iterate_on (str): The name of the parameter to iterate over.

|

|

428

462

|

The parameter should be defined either by previous steps or statically at the start of execution.

|

|

429

463

|

|

|

430

|

-

iterate_as: The name of the iterable to be passed to functions.

|

|

464

|

+

iterate_as (str): The name of the iterable to be passed to functions.

|

|

465

|

+

reducer (Callable): The function to reduce the results of the branches.

|

|

431

466

|

|

|

432

467

|

|

|

433

468

|

overrides (Dict[str, Any]): Any overrides to the command.

|

|

@@ -510,29 +545,44 @@ class Fail(BaseModel):

|

|

|

510

545

|

|

|

511

546

|

class Pipeline(BaseModel):

|

|

512

547

|

"""

|

|

513

|

-

A Pipeline is a

|

|

548

|

+

A Pipeline is a sequence of Steps.

|

|

514

549

|

|

|

515

550

|

Attributes:

|

|

516

|

-

steps (List[Stub | PythonTask | NotebookTask | ShellTask | Parallel | Map

|

|

551

|

+

steps (List[Stub | PythonTask | NotebookTask | ShellTask | Parallel | Map]]):

|

|

517

552

|

A list of Steps that make up the Pipeline.

|

|

518

|

-

|

|

553

|

+

|

|

554

|

+

The order of steps is important as it determines the order of execution.

|

|

555

|

+

Any on failure behavior should the first step in ```on_failure``` pipelines.

|

|

556

|

+

|

|

557

|

+

|

|

558

|

+

|

|

559

|

+

on_failure (List[List[Pipeline], optional): A list of Pipelines to execute in case of failure.

|

|

560

|

+

|

|

561

|

+

For example, for the below pipeline:

|

|

562

|

+

step1 >> step2

|

|

563

|

+

and step1 to reach step3 in case of failure.

|

|

564

|

+

|

|

565

|

+

failure_pipeline = Pipeline(steps=[step1, step3])

|

|

566

|

+

|

|

567

|

+

pipeline = Pipeline(steps=[step1, step2, on_failure=[failure_pipeline])

|

|

568

|

+

|

|

519

569

|

name (str, optional): The name of the Pipeline. Defaults to "".

|

|

520

570

|

description (str, optional): A description of the Pipeline. Defaults to "".

|

|

521

|

-

add_terminal_nodes (bool, optional): Whether to add terminal nodes to the Pipeline. Defaults to True.

|

|

522

571

|

|

|

523

|

-

The

|

|

524

|

-

To add custom success and fail nodes, set add_terminal_nodes=False and create success

|

|

525

|

-

and fail nodes manually.

|

|

572

|

+

The pipeline implicitly add success and fail nodes.

|

|

526

573

|

|

|

527

574

|

"""

|

|

528

575

|

|

|

529

|

-

steps: List[Union[StepType, List[

|

|

576

|

+

steps: List[Union[StepType, List["Pipeline"]]]

|

|

530

577

|

name: str = ""

|

|

531

578

|

description: str = ""

|

|

532

|

-

add_terminal_nodes: bool = True # Adds "success" and "fail" nodes

|

|

533

579

|

|

|

534

580

|

internal_branch_name: str = ""

|

|

535

581

|

|

|

582

|

+

@property

|

|

583

|

+

def add_terminal_nodes(self) -> bool:

|

|

584

|

+

return True

|

|

585

|

+

|

|

536

586

|

_dag: graph.Graph = PrivateAttr()

|

|

537

587

|

model_config = ConfigDict(extra="forbid")

|

|

538

588

|

|

|
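In the hunk above, `add_terminal_nodes` changes from a field with a default of `True` to a read-only property that always returns `True`. The effect of that pattern can be sketched with a plain Python class. `PipelineSketch` is a hypothetical stand-in; the real `Pipeline` is a pydantic model and may react differently to assignment or to an unexpected constructor argument.

```python
from typing import List


class PipelineSketch:
    """Plain-Python stand-in for the pattern; the real class is a pydantic model."""

    def __init__(self, steps: List[str], name: str = "", description: str = ""):
        self.steps = steps
        self.name = name
        self.description = description

    @property
    def add_terminal_nodes(self) -> bool:
        # Mirrors the 0.12.3 diff: always True, no longer configurable.
        return True


pipeline = PipelineSketch(steps=["step1", "step2"])

try:
    pipeline.add_terminal_nodes = False  # no setter is defined
    assignment_rejected = False
except AttributeError:
    assignment_rejected = True
```

Callers that previously relied on `add_terminal_nodes=False` to supply their own success and fail nodes would likely need to revisit that code, since the updated docstring states that the pipeline adds these nodes implicitly.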

@@ -590,6 +640,7 @@ class Pipeline(BaseModel):

|

|

|

590

640

|

Any definition of pipeline should have one node that terminates with success.

|

|

591

641

|

"""

|

|

592

642

|

# TODO: Bug with repeat names

|

|

643

|

+

# TODO: https://github.com/AstraZeneca/runnable/issues/156

|

|

593

644

|

|

|

594

645

|

success_path: List[StepType] = []

|

|

595

646

|

on_failure_paths: List[List[StepType]] = []

|

|

@@ -598,7 +649,7 @@ class Pipeline(BaseModel):

|

|

|

598

649

|

if isinstance(step, (Stub, PythonTask, NotebookTask, ShellTask, Parallel, Map)):

|

|

599

650

|

success_path.append(step)

|

|

600

651

|

continue

|

|

601

|

-

on_failure_paths.append(step)

|

|

652

|

+

# on_failure_paths.append(step)

|

|

602

653

|

|

|

603

654

|

if not success_path:

|

|

604

655

|

raise Exception("There should be some success path")

|

|
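The hunk above comments out `on_failure_paths.append(step)`, so entries in `steps` that are not one of the recognized step types no longer end up in `on_failure_paths` within this loop. A simplified sketch of the resulting control flow follows. `StepSketch` and `collect_success_path` are hypothetical names for this example, and the sketch covers only the loop shown in the diff, not the rest of the method.

```python
from typing import Any, List


class StepSketch:
    """Hypothetical stand-in for Stub, PythonTask, NotebookTask, ShellTask, Parallel, Map."""

    def __init__(self, name: str):
        self.name = name


def collect_success_path(steps: List[Any]) -> List[StepSketch]:
    """Collect recognized steps in order, as the loop in the diff does."""
    success_path: List[StepSketch] = []
    for step in steps:
        if isinstance(step, StepSketch):
            success_path.append(step)
            continue
        # 0.12.2 appended other entries to on_failure_paths here;
        # in 0.12.3 that line is commented out, so they are skipped.
    if not success_path:
        raise Exception("There should be some success path")
    return success_path


mixed_steps = [StepSketch("step1"), ["not", "a", "step"], StepSketch("step2")]
success_names = [step.name for step in collect_success_path(mixed_steps)]
```

The order of the recognized steps is preserved, matching the docstring's note that step order determines execution order.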

@@ -654,21 +705,19 @@ class Pipeline(BaseModel):

|

|

|

654

705

|

|

|

655

706

|

Traverse and execute all the steps of the pipeline, eg. [local execution](configurations/executors/local.md).

|

|

656

707

|

|

|

657

|

-

Or create the

|

|

708

|

+

Or create the representation of the pipeline for other executors.

|

|

658

709

|

|

|

659

710

|

Please refer to [concepts](concepts/executor.md) for more information.

|

|

660

711

|

|

|

661

712

|

Args:

|

|

662

713

|

configuration_file (str, optional): The path to the configuration file. Defaults to "".

|

|

663

|

-

The configuration file can be overridden by the environment variable

|

|

714

|

+

The configuration file can be overridden by the environment variable RUNNABLE_CONFIGURATION_FILE.

|

|

664

715

|

|

|

665

716

|

run_id (str, optional): The ID of the run. Defaults to "".

|

|

666

717

|

tag (str, optional): The tag of the run. Defaults to "".

|

|

667

718

|

Use to group multiple runs.

|

|

668

719

|

|

|

669

720

|

parameters_file (str, optional): The path to the parameters file. Defaults to "".

|

|

670

|

-

use_cached (str, optional): Whether to use cached results. Defaults to "".

|

|

671

|

-

Provide the run_id of the older execution to recover.

|

|

672

721

|

|

|

673

722

|

log_level (str, optional): The log level. Defaults to defaults.LOG_LEVEL.

|

|

674

723

|

"""

|

|

@@ -0,0 +1,270 @@

|

|

|

1

|

+

Metadata-Version: 2.1

|

|

2

|

+

Name: runnable

|

|

3

|

+

Version: 0.12.3

|

|

4

|

+

Summary: A Compute agnostic pipelining software

|

|

5

|

+

Home-page: https://github.com/vijayvammi/runnable

|

|

6

|

+

License: Apache-2.0

|

|

7

|

+

Author: Vijay Vammi

|

|

8

|

+

Author-email: mesanthu@gmail.com

|

|

9

|

+

Requires-Python: >=3.9,<3.13

|

|

10

|

+

Classifier: License :: OSI Approved :: Apache Software License

|

|

11

|

+

Classifier: Programming Language :: Python :: 3

|

|

12

|

+

Classifier: Programming Language :: Python :: 3.9

|

|

13

|

+

Classifier: Programming Language :: Python :: 3.10

|

|

14

|

+

Classifier: Programming Language :: Python :: 3.11

|

|

15

|

+

Classifier: Programming Language :: Python :: 3.12

|

|

16

|

+

Provides-Extra: database

|

|

17

|

+

Provides-Extra: docker

|

|

18

|

+

Provides-Extra: notebook

|

|

19

|

+

Requires-Dist: click

|

|

20

|

+

Requires-Dist: click-plugins (>=1.1.1,<2.0.0)

|

|

21

|

+

Requires-Dist: dill (>=0.3.8,<0.4.0)

|

|

22

|

+

Requires-Dist: docker ; extra == "docker"

|

|

23

|

+

Requires-Dist: mlflow-skinny

|

|

24

|

+

Requires-Dist: ploomber-engine (>=0.0.31,<0.0.32) ; extra == "notebook"

|

|

25

|

+

Requires-Dist: pydantic (>=2.5,<3.0)

|

|

26

|

+

Requires-Dist: rich (>=13.5.2,<14.0.0)

|

|

27

|

+

Requires-Dist: ruamel.yaml

|

|

28

|

+

Requires-Dist: ruamel.yaml.clib

|

|

29

|

+

Requires-Dist: sqlalchemy ; extra == "database"

|

|

30

|

+

Requires-Dist: stevedore (>=3.5.0,<4.0.0)

|

|

31

|

+

Requires-Dist: typing-extensions ; python_version < "3.8"

|

|

32

|

+

Project-URL: Documentation, https://github.com/vijayvammi/runnable

|

|

33

|

+

Project-URL: Repository, https://github.com/vijayvammi/runnable

|

|

34

|

+

Description-Content-Type: text/markdown

|

|

35

|

+

|

|

36

|

+

|

|

37

|

+

|

|

38

|

+

|

|

39

|

+

|

|

40

|

+

|

|

41

|

+

</p>

|

|

42

|

+

<hr style="border:2px dotted orange">

|

|

43

|

+

|

|

44

|

+

<p align="center">

|

|

45

|

+

<a href="https://pypi.org/project/runnable/"><img alt="python:" src="https://img.shields.io/badge/python-3.8%20%7C%203.9%20%7C%203.10-blue.svg"></a>

|

|

46

|

+

<a href="https://pypi.org/project/runnable/"><img alt="Pypi" src="https://badge.fury.io/py/runnable.svg"></a>

|

|

47

|

+

<a href="https://github.com/vijayvammi/runnable/blob/main/LICENSE"><img alt"License" src="https://img.shields.io/badge/license-Apache%202.0-blue.svg"></a>

|

|

48

|

+

<a href="https://github.com/psf/black"><img alt="Code style: black" src="https://img.shields.io/badge/code%20style-black-000000.svg"></a>

|

|

49

|

+

<a href="https://github.com/python/mypy"><img alt="MyPy Checked" src="https://www.mypy-lang.org/static/mypy_badge.svg"></a>

|

|

50

|

+

<a href="https://github.com/vijayvammi/runnable/actions/workflows/release.yaml"><img alt="Tests:" src="https://github.com/vijayvammi/runnable/actions/workflows/release.yaml/badge.svg">

|

|

51

|

+

</p>

|

|

52

|

+

<hr style="border:2px dotted orange">

|

|

53

|

+

|

|

54

|

+

|

|

55

|

+

[Please check here for complete documentation](https://astrazeneca.github.io/runnable/)

|

|

56

|

+

|

|

57

|

+

## Example

|

|

58

|

+

|

|

59

|

+

The below data science flavored code is a well-known

|

|

60

|

+

[iris example from scikit-learn](https://scikit-learn.org/stable/auto_examples/linear_model/plot_iris_logistic.html).

|

|

61

|

+

|

|

62

|

+

|

|

63

|

+

```python

|

|

64

|

+

"""

|

|

65

|

+

Example of Logistic regression using scikit-learn

|

|

66

|

+

https://scikit-learn.org/stable/auto_examples/linear_model/plot_iris_logistic.html

|

|

67

|

+

"""

|

|

68

|

+

|

|

69

|

+

import matplotlib.pyplot as plt

|

|

70

|

+

import numpy as np

|

|

71

|

+

from sklearn import datasets

|

|

72

|

+

from sklearn.inspection import DecisionBoundaryDisplay

|

|

73

|

+

from sklearn.linear_model import LogisticRegression

|

|

74

|

+

|

|

75

|

+

|

|

76

|

+

def load_data():

|

|

77

|

+

# import some data to play with

|

|

78

|

+

iris = datasets.load_iris()

|

|

79

|

+

X = iris.data[:, :2] # we only take the first two features.

|

|

80

|

+

Y = iris.target

|

|

81

|

+

|

|

82

|

+

return X, Y

|

|

83

|

+

|

|

84

|

+

|

|

85

|

+

def model_fit(X: np.ndarray, Y: np.ndarray, C: float = 1e5):

|

|

86

|

+

logreg = LogisticRegression(C=C)

|

|

87

|

+

logreg.fit(X, Y)

|

|

88

|

+

|

|

89

|

+

return logreg

|

|

90

|

+

|

|

91

|

+

|

|

92

|

+

def generate_plots(X: np.ndarray, Y: np.ndarray, logreg: LogisticRegression):

|

|

93

|

+

_, ax = plt.subplots(figsize=(4, 3))

|

|

94

|

+

DecisionBoundaryDisplay.from_estimator(

|

|

95

|

+

logreg,

|

|

96

|

+

X,

|

|

97

|

+

cmap=plt.cm.Paired,

|

|

98

|

+

ax=ax,

|

|

99

|

+

response_method="predict",

|

|

100

|

+

plot_method="pcolormesh",

|

|

101

|

+

shading="auto",

|

|

102

|

+

xlabel="Sepal length",

|

|

103

|

+

ylabel="Sepal width",

|

|

104

|

+

eps=0.5,

|

|

105

|

+

)

|

|

106

|

+

|

|

107

|

+

# Plot also the training points

|

|

108

|

+

plt.scatter(X[:, 0], X[:, 1], c=Y, edgecolors="k", cmap=plt.cm.Paired)

|

|

109

|

+

|

|

110

|

+

plt.xticks(())

|

|

111

|

+

plt.yticks(())

|

|

112

|

+

|

|

113

|

+

plt.savefig("iris_logistic.png")

|

|

114

|

+

|

|

115

|

+

# TODO: What is the right value?

|

|

116

|

+

return 0.6

|

|

117

|

+

|

|

118

|

+

|

|

119

|

+

## Without any orchestration

|

|

120

|

+

def main():

|

|

121

|

+

X, Y = load_data()

|

|

122

|

+

logreg = model_fit(X, Y, C=1.0)

|

|

123

|

+

generate_plots(X, Y, logreg)

|

|

124

|

+

|

|

125

|

+

|

|

126

|

+

## With runnable orchestration

|

|

127

|

+

def runnable_pipeline():

|

|

128

|

+

# The below code can be anywhere

|

|

129

|

+

from runnable import Catalog, Pipeline, PythonTask, metric, pickled

|

|

130

|

+

|

|

131

|

+

# X, Y = load_data()

|

|

132

|

+

load_data_task = PythonTask(

|

|

133

|

+

function=load_data,

|

|

134

|

+

name="load_data",

|

|

135

|

+

returns=[pickled("X"), pickled("Y")], # (1)

|

|

136

|

+

)

|

|

137

|

+

|

|

138

|

+

# logreg = model_fit(X, Y, C=1.0)

|

|

139

|

+

model_fit_task = PythonTask(

|

|

140

|

+

function=model_fit,

|

|

141

|

+

name="model_fit",

|

|

142

|

+

returns=[pickled("logreg")],

|

|

143

|

+

)

|

|

144

|

+

|

|

145

|

+

# generate_plots(X, Y, logreg)

|

|

146

|

+

generate_plots_task = PythonTask(

|

|

147

|

+

function=generate_plots,

|

|

148

|

+

name="generate_plots",

|

|

149

|

+

terminate_with_success=True,

|

|

150

|

+

catalog=Catalog(put=["iris_logistic.png"]), # (2)

|

|

151

|

+

returns=[metric("score")],

|

|

152

|

+

)

|

|

153

|

+

|

|

154

|

+

pipeline = Pipeline(

|

|

155

|

+

steps=[load_data_task, model_fit_task, generate_plots_task],

|

|

156

|

+

) # (4)

|

|

157

|

+

|

|

158

|

+

pipeline.execute()

|

|

159

|

+

|

|

160

|

+

return pipeline

|

|

161

|

+

|

|

162

|

+

|

|

163

|

+

if __name__ == "__main__":

|

|

164

|

+

# main()

|

|

165

|

+

runnable_pipeline()

|

|

166

|

+

|

|

167

|

+

```

|

|

168

|

+

|

|

169

|

+

|

|

170

|

+

1. Return two serialized objects X and Y.

|

|

171

|

+

2. Store the file `iris_logistic.png` for future reference.

|

|

172

|

+

3. Define the sequence of tasks.

|

|

173

|

+

4. Define a pipeline with the tasks

|

|

174

|

+

|

|

175

|

+

The difference between native driver and runnable orchestration:

|

|

176

|

+

|

|

177

|

+

!!! tip inline end "Notebooks and Shell scripts"

|

|

178

|

+

|

|

179

|

+

You can execute notebooks and shell scripts too!!

|

|

180

|

+

|

|

181

|

+

They can be written just as you would want them, *plain old notebooks and scripts*.

|

|

182

|

+

|

|

183

|

+

|

|

184

|

+

|

|

185

|

+

|

|

186

|

+

<div class="annotate" markdown>

|

|

187

|

+

|

|

188

|

+

```diff

|

|

189

|

+

|

|

190

|

+

- X, Y = load_data()

|

|

191

|

+

+load_data_task = PythonTask(

|

|

192

|

+

+ function=load_data,

|

|

193

|

+

+ name="load_data",

|

|

194

|

+

+ returns=[pickled("X"), pickled("Y")], (1)

|

|

195

|

+

+ )

|

|

196

|

+

|

|

197

|

+

-logreg = model_fit(X, Y, C=1.0)

|

|

198

|

+

+model_fit_task = PythonTask(

|

|

199

|

+

+ function=model_fit,

|

|

200

|

+

+ name="model_fit",

|

|

201

|

+

+ returns=[pickled("logreg")],

|

|

202

|

+

+ )

|

|

203

|

+

|

|

204

|

+

-generate_plots(X, Y, logreg)

|

|

205

|

+

+generate_plots_task = PythonTask(

|

|

206

|

+

+ function=generate_plots,

|

|

207

|

+

+ name="generate_plots",

|

|

208

|

+

+ terminate_with_success=True,

|

|

209

|

+

+ catalog=Catalog(put=["iris_logistic.png"]), (2)

|

|

210

|

+

+ )

|

|

211

|

+

|

|

212

|

+

|

|

213

|

+

+pipeline = Pipeline(

|

|

214

|

+

+ steps=[load_data_task, model_fit_task, generate_plots_task], (3)

|

|

215

|

+

|

|

216

|

+

```

|

|

217

|

+

</div>
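The diff above replaces direct calls with task declarations whose `returns` are named, so that later steps can receive them as arguments. A minimal, standard-library-only sketch of that idea follows. It is a toy illustration and not runnable's implementation: `run_linear` is a hypothetical helper, and `load_data`, `model_fit`, and `generate_plots` are stand-ins that do simple arithmetic instead of fitting a real model.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple


def run_linear(steps: List[Tuple[Callable[..., Any], List[str]]]) -> Dict[str, Any]:
    """Run steps in order, passing named returns to later steps by argument name."""
    context: Dict[str, Any] = {}
    for function, returns in steps:
        # Supply only the arguments the function declares and the context holds;
        # anything else (such as C below) falls back to its default value.
        wanted = inspect.signature(function).parameters
        kwargs = {name: context[name] for name in wanted if name in context}
        result = function(**kwargs)
        if not returns:
            continue
        values = (result,) if len(returns) == 1 else tuple(result)
        # Store each returned value under its declared name.
        context.update(zip(returns, values))
    return context


# Hypothetical stand-ins for the domain functions named in the diff.
def load_data():
    return [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]


def model_fit(X, Y, C=1.0):
    # Least-squares slope through the origin, standing in for a real model.
    return sum(x * y for x, y in zip(X, Y)) / sum(x * x for x in X)


def generate_plots(X, Y, logreg):
    # Mean absolute error, standing in for the "score" metric.
    return sum(abs(y - logreg * x) for x, y in zip(X, Y)) / len(X)


context = run_linear(
    [
        (load_data, ["X", "Y"]),
        (model_fit, ["logreg"]),
        (generate_plots, ["score"]),
    ]
)
print(context["logreg"], context["score"])
```

The domain functions stay unaware of the driver, which is the point the checklist below makes about `Domain` code and `driver` code.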

|

|

218

|

+

|

|

219

|

+

|

|

220

|

+

---

|

|

221

|

+

|

|

222

|

+

- [x] ```Domain``` code remains completely independent of ```driver``` code.

|

|

223

|

+

- [x] The ```driver``` function has an equivalent and intuitive runnable expression.

|

|

224

|

+

- [x] Reproducible by default, runnable stores metadata about code/data/config for every execution.

|

|

225

|

+

- [x] The pipeline is `runnable` in any environment.

|

|

226

|

+

|

|

227

|

+

|

|

228

|

+

## Documentation

|

|

229

|

+

|

|

230

|

+

[More details about the project and how to use it are available here](https://astrazeneca.github.io/runnable/).

|

|

231

|

+

|

|

232

|

+

<hr style="border:2px dotted orange">

|

|

233

|

+

|

|

234

|

+

## Installation

|

|

235

|

+

|

|

236

|

+

The minimum Python version that runnable supports is 3.8.

|

|

237

|

+

|

|

238

|

+

```shell

|

|

239

|

+

pip install runnable

|

|

240

|

+

```

|

|

241

|

+

|

|

242

|

+

Please look at the [installation guide](https://astrazeneca.github.io/runnable-core/usage)

|

|

243

|

+

for more information.

|

|

244

|

+

|

|

245

|

+

|

|

246

|

+

## Pipelines can be:

|

|

247

|

+

|

|

248

|

+

### Linear

|

|

249

|

+

|

|

250

|

+

A simple linear pipeline with tasks that are either

|

|

251

|

+

[python functions](https://astrazeneca.github.io/runnable-core/concepts/task/#python_functions),

|

|

252

|

+

[notebooks](https://astrazeneca.github.io/runnable-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/runnable-core/concepts/task/#shell)

|

|

253

|

+

|

|

254

|

+

[](https://mermaid.live/edit#pako:eNpl0bFuwyAQBuBXQVdZTqTESpxMDJ0ytkszhgwnOCcoNo4OaFVZfvcSx20tGSQ4fn0wHB3o1hBIyLJOWGeDFJ3Iq7r90lfkkA9HHfmTUpnX1hFyLvrHzDLl_qB4-1BOOZGGD3TfSikvTDSNFqdj2sT2vBTr9euQlXNWjqycsN2c7UZWFMUE7udwP0L3y6JenNKiyfvz8t8_b-gavT9QJYY0PcDtjeTLptrAChriBq1JzeoeWkG4UkMKZCoN8k2Bcn1yGEN7_HYaZOBIK4h3g4EOFi-MDcgKa59SMja0_P7s_vAJ_Q_YOH6o)

|

|

255

|

+

|

|

256

|

+

### [Parallel branches](https://astrazeneca.github.io/runnable-core/concepts/parallel)

|

|

257

|

+

|

|

258

|

+

Execute branches in parallel.

|

|

259

|

+

|

|

260

|

+

[](https://mermaid.live/edit#pako:eNp9k01rwzAMhv-K8S4ZtJCzDzuMLmWwwkh2KMQ7eImShiZ2sB1KKf3vs52PpsWNT7LySHqlyBeciRwwwUUtTtmBSY2-YsopR8MpQUfAdCdBBekWNBpvv6-EkFICzGAtWcUTDW3wYy20M7lr5QGBK2j-anBAkH4M1z6grnjpy17xAiTwDII07jj6HK8-VnVZBspITnpjztyoVkLLJOy3Qfrdm6gQEu2370Io7WLORo84PbRoA_oOl9BBg4UHbHR58UkMWq_fxjrOnhLRx1nH0SgkjlBjh7ekxNKGc0NelDLknhePI8qf7MVNr_31nm1wwNTeM2Ao6pmf-3y3Mp7WlqA7twOnXfKs17zt-6azmim1gQL1A0NKS3EE8hKZE4Yezm3chIVFiFe4AdmwKjdv7mIjKNYHaIBiYsycySPFlF8NxzotkjPPMNGygxXu2pxp2FSslKzBpGC1Ml7IKy3krn_E7i1f_wEayTcn)

|

|

261

|

+

|

|

262

|

+

### [loops or map](https://astrazeneca.github.io/runnable-core/concepts/map)

|

|

263

|

+

|

|

264

|

+

Execute a pipeline over an iterable parameter.

|

|

265

|

+

|

|

266

|

+

[](https://mermaid.live/edit#pako:eNqVlF1rwjAUhv9KyG4qKNR-3AS2m8nuBgN3Z0Sy5tQG20SSdE7E_76kVVEr2CY3Ied9Tx6Sk3PAmeKACc5LtcsKpi36nlGZFbXciHwfLN79CuWiBLMcEULWGkBSaeosA2OCxbxdXMd89Get2bZASsLiSyuvQE2mJZXIjW27t2rOmQZ3Gp9rD6UjatWnwy7q6zPPukd50WTydmemEiS_QbQ79RwxGoQY9UaMuojRA8TCXexzyHgQZNwbMu5Cxl3IXNX6OWMyiDHpzZh0GZMHjOK3xz2mgxjT3oxplzG9MPp5_nVOhwJjteDwOg3HyFj3L1dCcvh7DUc-iftX18n6Waet1xX8cG908vpKHO6OW7cvkeHm5GR2b3drdvaSGTODHLW37mxabYC8fLgRhlfxpjNdwmEets-Dx7gCXTHBXQc8-D2KbQEVUEzckjO9oZjKo9Ox2qr5XmaYWF3DGNdbzizMBHOVVWGSs9K4XeDCKv3ZttSmsx7_AYa341E)

|

|

267

|

+

|

|

268

|

+

### [Arbitrary nesting](https://astrazeneca.github.io/runnable-core/concepts/nesting/)

|

|

269

|

+

Any nesting of parallel within map and so on.

|

|

270

|

+

|

|
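The pipeline shapes listed above (linear, parallel branches, map over an iterable, and nesting) can be illustrated with plain Python. The sketch below is an analogy only and does not use or describe runnable's API: `run_branch`, `run_parallel`, and `run_map` are hypothetical helpers, and the steps are simple functions of one value.

```python
from concurrent.futures import ThreadPoolExecutor


def run_branch(steps, value):
    """Linear: feed the value through each step in order."""
    for step in steps:
        value = step(value)
    return value


def run_parallel(branches, value):
    """Parallel: run every named branch on the same input concurrently."""
    with ThreadPoolExecutor() as pool:
        futures = {
            name: pool.submit(run_branch, steps, value)
            for name, steps in branches.items()
        }
        return {name: future.result() for name, future in futures.items()}


def run_map(steps, iterable):
    """Map: run the same branch once per item of an iterable parameter."""
    return {item: run_branch(steps, item) for item in iterable}


def double(value):
    return value * 2


def increment(value):
    return value + 1


def fan_out(value):
    # Nesting: a parallel block used as a single step inside a map.
    return run_parallel({"a": [double], "b": [increment]}, value)


mapped = run_map([double, increment], [1, 2, 3])
parallel = run_parallel({"a": [double], "b": [increment]}, 10)
nested = run_map([fan_out], [1, 2])
print(mapped, parallel, nested)
```

Because each shape takes steps and returns a value, a parallel block can serve as a step inside a map, which is what arbitrary nesting amounts to in this toy model.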

@@ -53,12 +53,12 @@ runnable/names.py,sha256=vn92Kv9ANROYSZX6Z4z1v_WA3WiEdIYmG6KEStBFZug,8134

|

|

|

53

53

|

runnable/nodes.py,sha256=UqR-bJx0Hi7uLSUw_saB7VsNdFh3POKtdgsEPsasHfE,16576

|

|

54

54

|

runnable/parameters.py,sha256=yZkMDnwnkdYXIwQ8LflBzn50Y0xRGxEvLlxwno6ovvs,5163

|

|

55

55

|

runnable/pickler.py,sha256=5SDNf0miMUJ3ZauhQdzwk8_t-9jeOqaTjP5bvRnu9sU,2685

|

|

56

|

-

runnable/sdk.py,sha256=

|

|

56

|

+

runnable/sdk.py,sha256=sZWiyGVIqaAmmJuc4p1OhuqLBSqS7svN1VuSOqeZ_xA,29254

|

|

57

57

|

runnable/secrets.py,sha256=dakb7WRloWVo-KpQp6Vy4rwFdGi58BTlT4OifQY106I,2324

|

|

58

58

|

runnable/tasks.py,sha256=7sAtFMu6ELD3PJoisNbm47rY2wPKVzz5_h4s_QMep0k,22043

|

|

59

59

|

runnable/utils.py,sha256=fXOLoFZKYqh3wQgzA2V-VZOu-dSgLPGqCZIbMmsNzOw,20016

|

|

60

|

-

runnable-0.12.

|

|

61

|

-

runnable-0.12.

|

|

62

|

-

runnable-0.12.

|

|

63

|

-

runnable-0.12.

|

|

64

|

-

runnable-0.12.

|

|

60

|

+

runnable-0.12.3.dist-info/LICENSE,sha256=xx0jnfkXJvxRnG63LTGOxlggYnIysveWIZ6H3PNdCrQ,11357

|

|

61

|

+

runnable-0.12.3.dist-info/METADATA,sha256=spjKwm0NQ2n_MEfz1c0kqe2NkxpFq6nFQwyJ91xfAso,10436

|

|

62

|

+

runnable-0.12.3.dist-info/WHEEL,sha256=sP946D7jFCHeNz5Iq4fL4Lu-PrWrFsgfLXbbkciIZwg,88

|

|

63

|

+

runnable-0.12.3.dist-info/entry_points.txt,sha256=-csEf-FCAqtOboXaBSzpgaTffz1HwlylYPxnppndpFE,1494

|

|

64

|

+

runnable-0.12.3.dist-info/RECORD,,

|

|
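Each complete RECORD line above has the form `path,sha256=<digest>,<size>`, where the digest is the URL-safe base64 encoding of the file's SHA-256 hash with the trailing `=` padding removed. A minimal sketch of how such an entry could be recomputed for comparison follows; `record_entry` and `matches_record` are hypothetical helper names, and the path and bytes in the example are made up.

```python
import base64
import hashlib


def record_entry(path: str, data: bytes) -> str:
    """Build a RECORD-style line for a file's path and contents."""
    digest = hashlib.sha256(data).digest()
    # URL-safe base64 with the '=' padding stripped, as in the entries above.
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(data)}"


def matches_record(line: str, data: bytes) -> bool:
    """Check whether file contents agree with an existing RECORD line."""
    path, _, _ = line.partition(",")
    return record_entry(path, data) == line


# Made-up file contents, for illustration only.
example = record_entry("pkg/module.py", b"print('hello')\n")
print(example)
print(matches_record(example, b"print('hello')\n"))
```

A changed digest or size for the same path, as with `runnable/sdk.py` in this hunk, means the file contents differ between the two wheels.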

@@ -1,453 +0,0 @@

|

|

|

1

|

-

Metadata-Version: 2.1

|

|

2

|

-

Name: runnable

|

|

3

|

-

Version: 0.12.2

|

|

4

|

-

Summary: A Compute agnostic pipelining software

|

|

5

|

-

Home-page: https://github.com/vijayvammi/runnable

|

|

6

|

-

License: Apache-2.0

|

|

7

|

-

Author: Vijay Vammi

|

|

8

|

-

Author-email: mesanthu@gmail.com

|

|

9

|

-

Requires-Python: >=3.9,<3.13

|

|

10

|

-

Classifier: License :: OSI Approved :: Apache Software License

|

|

11

|

-

Classifier: Programming Language :: Python :: 3

|

|

12

|

-

Classifier: Programming Language :: Python :: 3.9

|

|

13

|

-

Classifier: Programming Language :: Python :: 3.10

|

|

14

|

-

Classifier: Programming Language :: Python :: 3.11

|

|

15

|

-

Classifier: Programming Language :: Python :: 3.12

|

|

16

|

-

Provides-Extra: database

|

|

17

|

-

Provides-Extra: docker

|

|

18

|

-

Provides-Extra: notebook

|

|

19

|

-

Requires-Dist: click

|

|

20

|

-

Requires-Dist: click-plugins (>=1.1.1,<2.0.0)

|

|

21

|

-

Requires-Dist: dill (>=0.3.8,<0.4.0)

|

|

22

|

-

Requires-Dist: docker ; extra == "docker"

|

|

23

|

-

Requires-Dist: mlflow-skinny

|

|

24

|

-

Requires-Dist: ploomber-engine (>=0.0.31,<0.0.32) ; extra == "notebook"

|

|

25

|

-

Requires-Dist: pydantic (>=2.5,<3.0)

|

|

26

|

-

Requires-Dist: rich (>=13.5.2,<14.0.0)

|

|

27

|

-

Requires-Dist: ruamel.yaml

|

|

28

|

-

Requires-Dist: ruamel.yaml.clib

|

|

29

|

-

Requires-Dist: sqlalchemy ; extra == "database"

|

|

30

|

-

Requires-Dist: stevedore (>=3.5.0,<4.0.0)

|

|

31

|

-

Requires-Dist: typing-extensions ; python_version < "3.8"

|

|

32

|

-

Project-URL: Documentation, https://github.com/vijayvammi/runnable

|

|

33

|

-

Project-URL: Repository, https://github.com/vijayvammi/runnable

|

|

34

|

-

Description-Content-Type: text/markdown

|

|

35

|

-

|

|

36

|

-

|

|

37

|

-

|

|

38

|

-

|

|

39

|

-

|

|

40

|

-

<p align="center">

|

|

41

|

-

|

|

42

|

-

,////,

|

|

43

|

-

/// 6|

|

|

44

|

-

// _|

|

|

45

|

-

_/_,-'

|

|

46

|

-

_.-/'/ \ ,/;,

|

|

47

|

-

,-' /' \_ \ / _/

|

|

48

|

-

`\ / _/\ ` /

|

|

49

|

-

| /, `\_/

|

|

50

|

-

| \'

|

|

51

|

-

/\_ /` /\

|

|

52

|

-

/' /_``--.__/\ `,. / \

|

|

53

|

-

|_/` `-._ `\/ `\ `.

|

|

54

|

-

`-.__/' `\ |

|

|

55

|

-

`\ \

|

|

56

|

-

`\ \

|

|

57

|

-

\_\__

|

|

58

|

-

\___)

|

|

59

|

-

|

|

60

|

-

</p>

|

|

61

|

-

<hr style="border:2px dotted orange">

|

|

62

|

-

|

|

63

|

-

<p align="center">

|

|

64

|

-

<a href="https://pypi.org/project/runnable/"><img alt="python:" src="https://img.shields.io/badge/python-3.8%20%7C%203.9%20%7C%203.10-blue.svg"></a>

|

|

65

|

-

<a href="https://pypi.org/project/runnable/"><img alt="Pypi" src="https://badge.fury.io/py/runnable.svg"></a>

|

|

66

|

-

<a href="https://github.com/vijayvammi/runnable/blob/main/LICENSE"><img alt="License" src="https://img.shields.io/badge/license-Apache%202.0-blue.svg"></a>

|

|

67

|

-

<a href="https://github.com/psf/black"><img alt="Code style: black" src="https://img.shields.io/badge/code%20style-black-000000.svg"></a>

|

|

68

|

-

<a href="https://github.com/python/mypy"><img alt="MyPy Checked" src="https://www.mypy-lang.org/static/mypy_badge.svg"></a>

|

|

69

|

-

<a href="https://github.com/vijayvammi/runnable/actions/workflows/release.yaml"><img alt="Tests:" src="https://github.com/vijayvammi/runnable/actions/workflows/release.yaml/badge.svg"></a>

|

|

70

|

-

<a href="https://github.com/vijayvammi/runnable/actions/workflows/docs.yaml"><img alt="Docs:" src="https://github.com/vijayvammi/runnable/actions/workflows/docs.yaml/badge.svg"></a>

|

|

71

|

-

</p>

|

|

72

|

-

<hr style="border:2px dotted orange">

|

|

73

|

-

|

|

74

|

-

runnable is a simplified workflow definition language that helps in:

|

|

75

|

-

|

|

76

|

-

- **Streamlined Design Process:** runnable enables users to efficiently plan their pipelines with

|

|

77

|

-

[stubbed nodes](https://astrazeneca.github.io/runnable-core/concepts/stub), along with offering support for various structures such as

|

|

78

|

-

[tasks](https://astrazeneca.github.io/runnable-core/concepts/task), [parallel branches](https://astrazeneca.github.io/runnable-core/concepts/parallel), and [loops or map branches](https://astrazeneca.github.io/runnable-core/concepts/map)

|

|

79

|

-

in both [yaml](https://astrazeneca.github.io/runnable-core/concepts/pipeline) or a [python SDK](https://astrazeneca.github.io/runnable-core/sdk) for maximum flexibility.

|

|

80

|

-

|

|

81

|

-

- **Incremental Development:** Build your pipeline piece by piece with runnable, which allows for the

|

|

82

|

-

implementation of tasks as [python functions](https://astrazeneca.github.io/runnable-core/concepts/task/#python_functions),

|

|

83

|

-

[notebooks](https://astrazeneca.github.io/runnable-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/runnable-core/concepts/task/#shell),

|

|

84

|

-

adapting to the developer's preferred tools and methods.

|

|

85

|

-

|

|

86

|

-

- **Robust Testing:** Ensure your pipeline performs as expected with the ability to test using sampled data. runnable

|

|

87

|

-

also provides the capability to [mock and patch tasks](https://astrazeneca.github.io/runnable-core/configurations/executors/mocked)

|

|

88

|

-

for thorough evaluation before full-scale deployment.

|

|

89

|

-

|

|

90

|

-

- **Seamless Deployment:** Transition from the development stage to production with ease.

|

|

91

|

-

runnable simplifies the process by requiring [only configuration changes](https://astrazeneca.github.io/runnable-core/configurations/overview)

|

|

92

|

-

to adapt to different environments, including support for [argo workflows](https://astrazeneca.github.io/runnable-core/configurations/executors/argo).

|

|

93

|

-

|

|

94

|

-

- **Efficient Debugging:** Quickly identify and resolve issues in pipeline execution with runnable's local

|

|

95

|

-

debugging features. Retrieve data from failed tasks and [retry failures](https://astrazeneca.github.io/runnable-core/concepts/run-log/#retrying_failures)

|

|

96

|

-

using your chosen debugging tools to maintain a smooth development experience.

|

|

97

|

-

|

|

98

|

-

Along with the developer friendly features, runnable also acts as an interface to production grade concepts

|

|

99

|

-

such as [data catalog](https://astrazeneca.github.io/runnable-core/concepts/catalog), [reproducibility](https://astrazeneca.github.io/runnable-core/concepts/run-log),

|

|

100

|

-

[experiment tracking](https://astrazeneca.github.io/runnable-core/concepts/experiment-tracking)

|

|

101

|

-

and secure [access to secrets](https://astrazeneca.github.io/runnable-core/concepts/secrets).

|

|

102

|

-

|

|

103

|

-

<hr style="border:2px dotted orange">

|

|

104

|

-

|

|

105

|

-

## What does it do?

|

|

106

|

-

|

|

107

|

-

|

|

108

|

-

|

|

109

|

-

|

|

110

|

-

<hr style="border:2px dotted orange">

|

|

111

|

-

|

|

112

|

-

## Documentation

|

|

113

|

-

|

|

114

|

-

[More details about the project and how to use it are available here](https://astrazeneca.github.io/runnable-core/).

|

|

115

|

-

|

|

116

|

-

<hr style="border:2px dotted orange">

|

|

117

|

-

|

|

118

|

-

## Installation

|

|

119

|

-

|

|

120

|

-

The minimum Python version that runnable supports is 3.8.

|

|

121

|

-

|

|

122

|

-

```shell

|

|

123

|

-

pip install runnable

|

|

124

|

-

```

|

|

125

|

-

|

|

126

|

-

Please look at the [installation guide](https://astrazeneca.github.io/runnable-core/usage)

|

|

127

|

-

for more information.

|

|

128

|

-

|

|

129

|

-

<hr style="border:2px dotted orange">

|

|

130

|

-

|

|

131

|

-

## Example

|

|

132

|

-

|

|

133

|

-

Your application code. Use pydantic models as DTO.

|

|

134

|

-

|

|

135

|

-

Assumed to be present at ```functions.py```

|

|

136

|

-

```python

|

|

137

|

-

from pydantic import BaseModel

|

|

138

|

-

|

|

139

|

-

class InnerModel(BaseModel):

|

|

140

|

-

"""

|

|

141

|

-

A pydantic model representing a group of related parameters.

|

|

142

|

-

"""

|

|

143

|

-

|

|

144

|

-

foo: int

|

|

145

|

-

bar: str

|

|

146

|

-

|

|

147

|

-

|

|

148

|

-

class Parameter(BaseModel):

|

|

149

|

-

"""

|

|

150

|

-

A pydantic model representing the parameters of the whole pipeline.

|

|

151

|

-

"""

|

|

152

|

-

|

|

153

|

-

x: int

|

|

154

|

-

y: InnerModel

|

|

155

|

-

|

|

156

|

-

|

|

157

|

-

def return_parameter() -> Parameter:

|

|

158

|

-

"""

|

|

159

|

-

The annotation of the return type of the function is not mandatory

|

|

160

|

-

but it is a good practice.

|

|

161

|

-

|

|

162

|

-

Returns:

|

|

163

|

-

Parameter: The parameters that should be used in downstream steps.

|

|

164

|

-

"""

|

|

165

|

-

# Return type of a function should be a pydantic model

|

|

166

|

-

return Parameter(x=1, y=InnerModel(foo=10, bar="hello world"))

|

|

167

|

-

|

|

168

|

-

|

|

169

|

-

def display_parameter(x: int, y: InnerModel):

|

|

170

|

-

"""

|

|

171

|

-

Annotating the arguments of the function is important for

|

|

172

|

-

runnable to understand the type of parameters you want.

|

|

173

|

-

|

|

174

|

-

Input args can be a pydantic model or the individual attributes.

|

|

175

|

-

"""

|

|

176

|

-

print(x)

|

|

177

|

-

# >>> prints 1

|

|

178

|

-

print(y)

|

|

179

|

-

# >>> prints InnerModel(foo=10, bar="hello world")

|

|

180

|

-

```

|

|

181

|

-

|

|

182

|

-

### Application code using driver functions.

|

|

183

|

-

|

|

184

|

-

The code is runnable without any orchestration framework.

|

|

185

|

-

|

|

186

|

-

```python

|

|

187

|

-

from functions import return_parameter, display_parameter

|

|

188

|

-

|

|

189

|

-

my_param = return_parameter()

|

|

190

|

-

display_parameter(my_param.x, my_param.y)

|

|

191

|

-

```

|

|

192

|

-

|

|

193

|

-

### Orchestration using runnable

|

|

194

|

-

|

|

195

|

-

<table>

|

|

196

|

-

<tr>

|

|

197

|

-

<th>python SDK</th>

|

|

198

|

-

<th>yaml</th>

|

|

199

|

-

</tr>

|

|

200

|

-

<tr>

|

|

201

|

-

<td valign="top"><p>

|

|

202

|

-

|

|

203

|

-

Example present at: ```examples/python-tasks.py```

|

|

204

|

-

|

|

205

|

-

Run it as: ```python examples/python-tasks.py```

|

|

206

|

-

|

|

207

|

-

```python

|

|

208

|

-

from runnable import Pipeline, Task

|

|

209

|

-

|

|

210

|

-

def main():

|

|

211

|

-

step1 = Task(

|

|

212

|

-

name="step1",

|

|

213

|

-

command="examples.functions.return_parameter",

|

|

214

|

-

)

|

|

215

|

-

step2 = Task(

|

|

216

|

-

name="step2",

|

|

217

|

-

command="examples.functions.display_parameter",

|

|

218

|

-

terminate_with_success=True,

|

|

219

|

-

)

|

|

220

|

-

|

|

221

|

-

step1 >> step2

|

|

222

|

-

|

|

223

|

-

pipeline = Pipeline(

|

|

224

|

-

start_at=step1,

|

|

225

|

-

steps=[step1, step2],

|

|

226

|

-

add_terminal_nodes=True,

|

|

227

|

-

)

|

|

228

|

-

|

|

229

|

-

pipeline.execute()

|

|

230

|

-

|

|

231

|

-

|

|

232

|

-