quantjourney-bidask 0.9.4__py3-none-any.whl → 1.0.1__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- quantjourney_bidask/__init__.py +31 -4

- quantjourney_bidask/_compare_edge.py +152 -0

- quantjourney_bidask/edge.py +149 -127

- quantjourney_bidask/edge_expanding.py +45 -57

- quantjourney_bidask/edge_hft.py +126 -0

- quantjourney_bidask/edge_rolling.py +54 -198

- {quantjourney_bidask-0.9.4.dist-info → quantjourney_bidask-1.0.1.dist-info}/METADATA +94 -35

- quantjourney_bidask-1.0.1.dist-info/RECORD +11 -0

- quantjourney_bidask/_version.py +0 -7

- quantjourney_bidask/websocket_fetcher.py +0 -308

- quantjourney_bidask-0.9.4.dist-info/RECORD +0 -11

- {quantjourney_bidask-0.9.4.dist-info → quantjourney_bidask-1.0.1.dist-info}/WHEEL +0 -0

- {quantjourney_bidask-0.9.4.dist-info → quantjourney_bidask-1.0.1.dist-info}/licenses/LICENSE +0 -0

- {quantjourney_bidask-0.9.4.dist-info → quantjourney_bidask-1.0.1.dist-info}/top_level.txt +0 -0

|

@@ -0,0 +1,126 @@

|

|

|

1

|

+

"""

|

|

2

|

+

HFT-Optimized EDGE estimator for bid-ask spread calculation.

|

|

3

|

+

|

|

4

|

+

This version is hyper-optimized for maximum speed and is intended for

|

|

5

|

+

latency-sensitive applications like High-Frequency Trading.

|

|

6

|

+

|

|

7

|

+

It uses a targeted, fastmath-enabled Numba kernel for the lowest possible

|

|

8

|

+

execution time.

|

|

9

|

+

|

|

10

|

+

**WARNING:** This implementation uses `fastmath=True`, which prioritizes speed

|

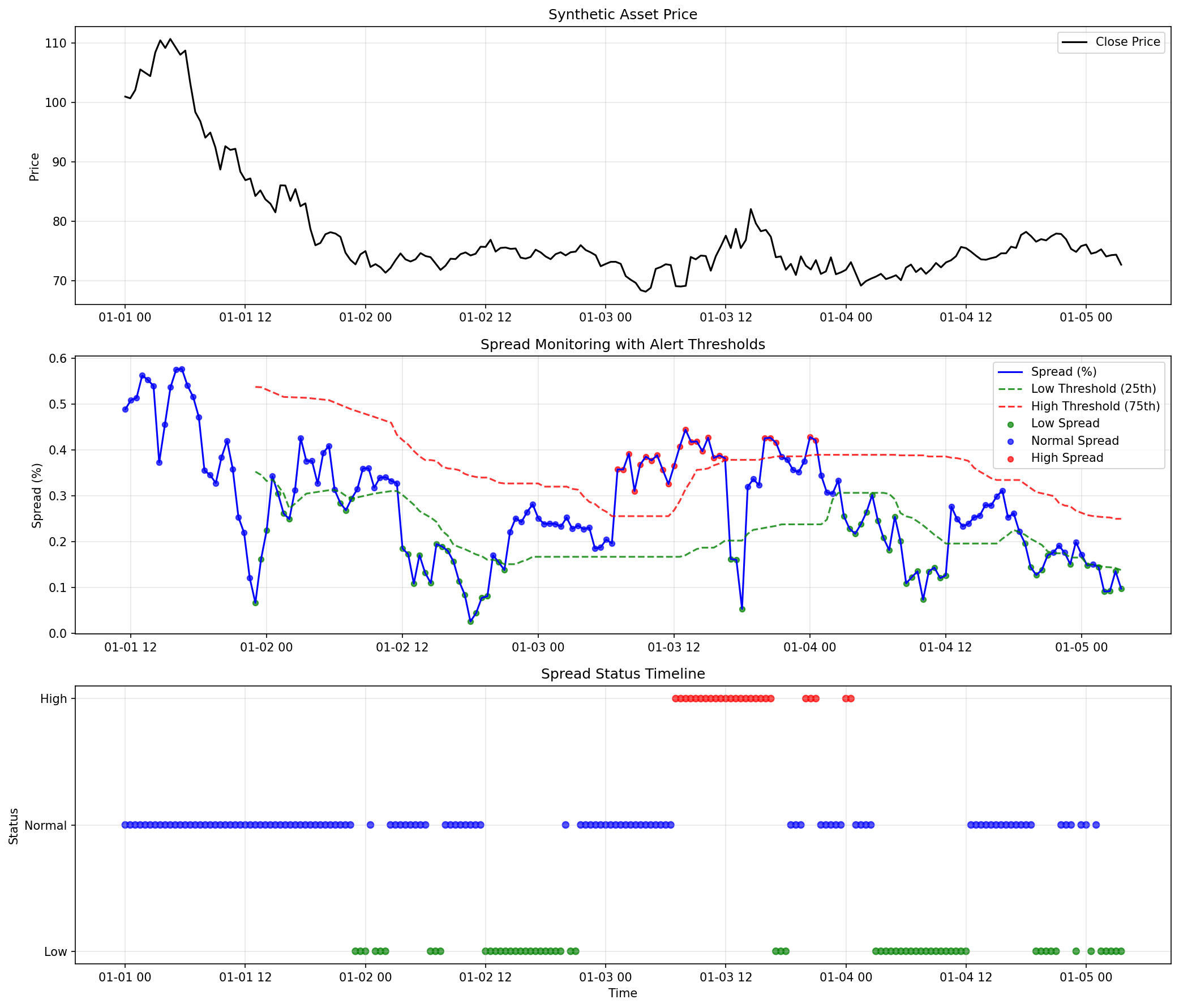

|

11

|

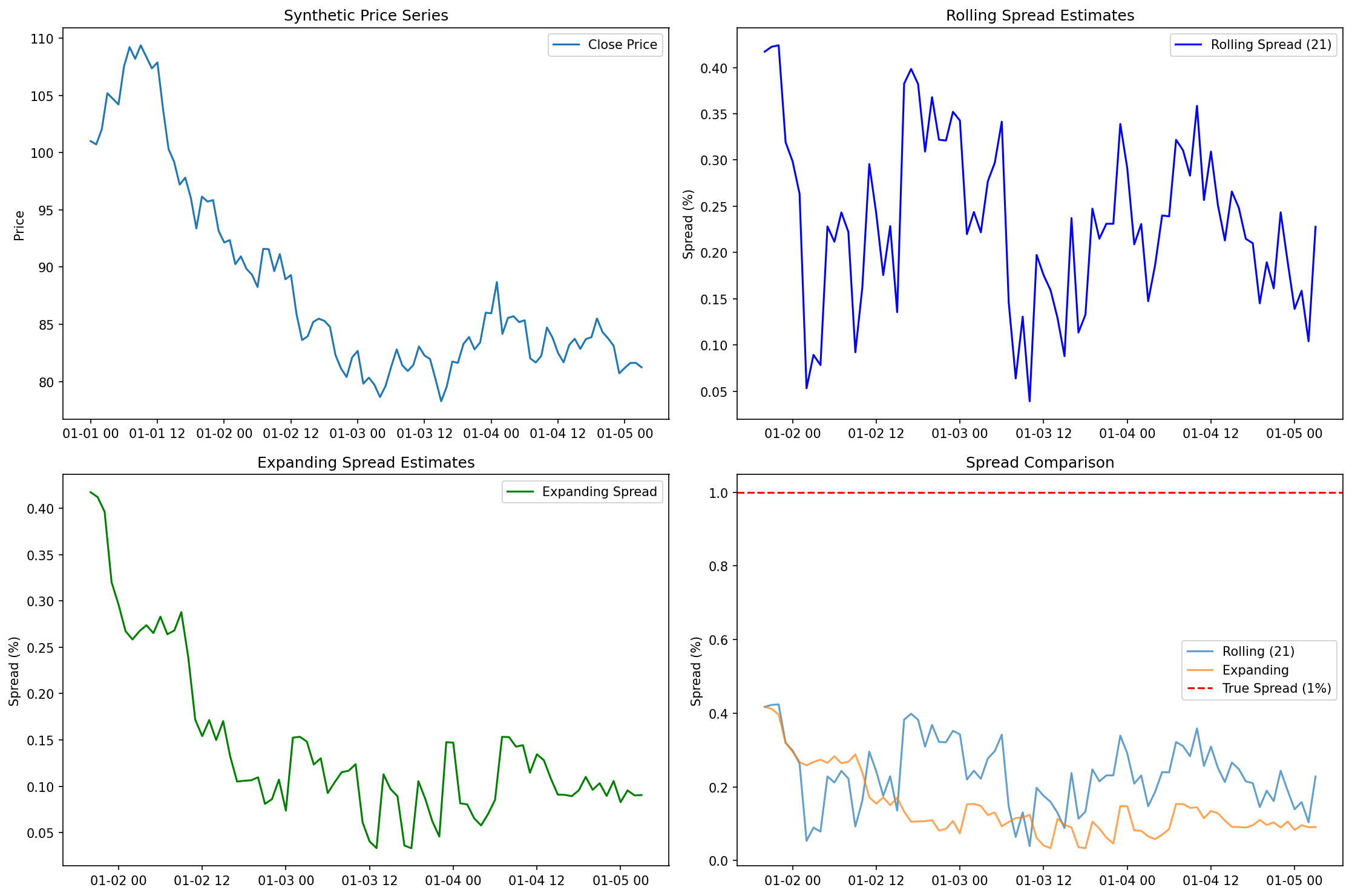

+

over strict IEEE 754 compliance. It assumes the input data is **perfectly clean**

|

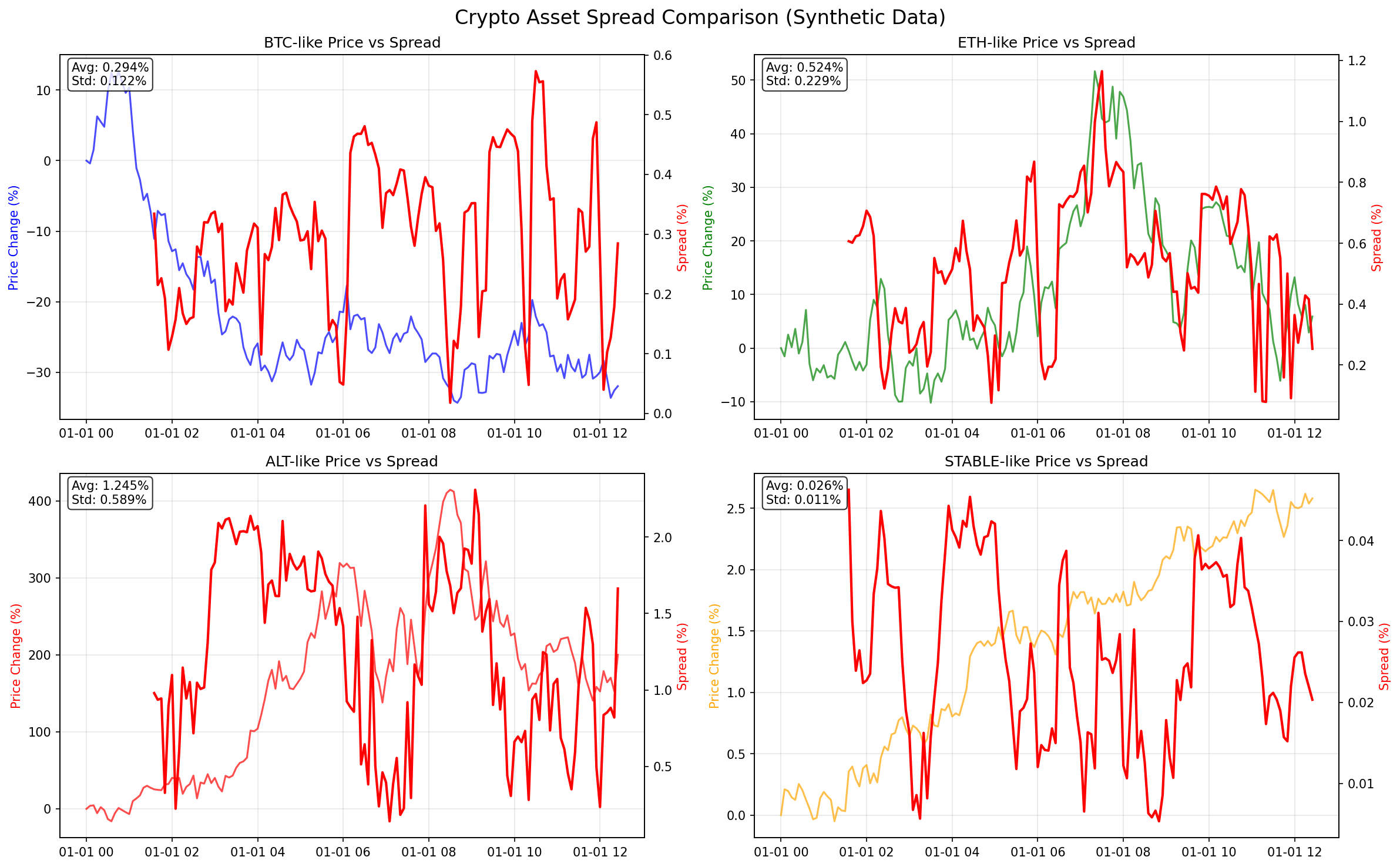

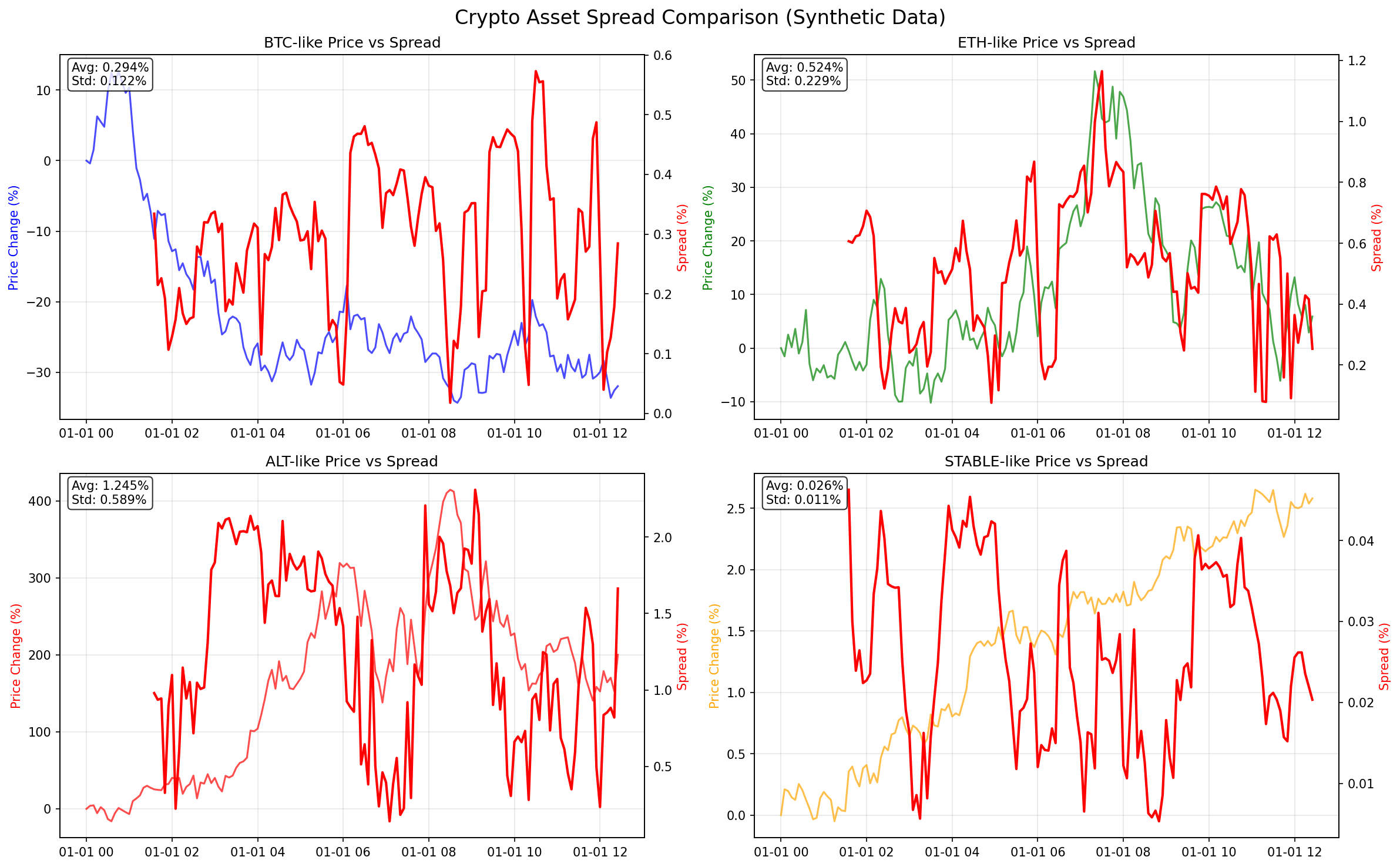

|

12

|

+

(contains no NaN or Inf values). Passing messy data may result in NaN output

|

|

13

|

+

where the standard `edge.py` version would produce a valid number. Use this

|

|

14

|

+

version only when you have a robust data sanitization pipeline upstream.

|

|

15

|

+

|

|

16

|

+

For general-purpose, robust estimation, use the standard `edge.py` module.

|

|

17

|

+

|

|

18

|

+

Author: Jakub Polec

|

|

19

|

+

Date: 2025-06-28

|

|

20

|

+

"""

|

|

21

|

+

import warnings

|

|

22

|

+

import numpy as np

|

|

23

|

+

from numba import jit, prange

|

|

24

|

+

from typing import Union, List, Any

|

|

25

|

+

|

|

26

|

+

# This is the targeted kernel. We add `fastmath=True` for an extra performance

|

|

27

|

+

# boost in this dense numerical section.

|

|

28

|

+

@jit(nopython=True, cache=True, fastmath=True)

|

|

29

|

+

def _compute_spread_numba_optimized(r1, r2, r3, r4, r5, tau, po, pc, pt):

|

|

30

|

+

"""

|

|

31

|

+

Optimized core spread calculation using Numba with fastmath.

|

|

32

|

+

This is the computational bottleneck and benefits most from JIT compilation.

|

|

33

|

+

"""

|

|

34

|

+

# Numba is highly efficient with NumPy functions in nopython mode.

|

|

35

|

+

d1 = r1 - np.nanmean(r1) / pt * tau

|

|

36

|

+

d3 = r3 - np.nanmean(r3) / pt * tau

|

|

37

|

+

d5 = r5 - np.nanmean(r5) / pt * tau

|

|

38

|

+

|

|

39

|

+

x1 = -4.0 / po * d1 * r2 + -4.0 / pc * d3 * r4

|

|

40

|

+

x2 = -4.0 / po * d1 * r5 + -4.0 / pc * d5 * r4

|

|

41

|

+

|

|

42

|

+

e1 = np.nanmean(x1)

|

|

43

|

+

e2 = np.nanmean(x2)

|

|

44

|

+

|

|

45

|

+

v1 = np.nanmean(x1**2) - e1**2

|

|

46

|

+

v2 = np.nanmean(x2**2) - e2**2

|

|

47

|

+

|

|

48

|

+

vt = v1 + v2

|

|

49

|

+

s2 = (v2 * e1 + v1 * e2) / vt if vt > 0.0 else (e1 + e2) / 2.0

|

|

50

|

+

|

|

51

|

+

return s2

|

|

52

|

+

|

|

53

|

+

def edge(

|

|

54

|

+

open_prices: Union[List[float], Any],

|

|

55

|

+

high: Union[List[float], Any],

|

|

56

|

+

low: Union[List[float], Any],

|

|

57

|

+

close: Union[List[float], Any],

|

|

58

|

+

sign: bool = False,

|

|

59

|

+

min_pt: float = 1e-6,

|

|

60

|

+

debug: bool = False,

|

|

61

|

+

) -> float:

|

|

62

|

+

"""

|

|

63

|

+

Estimate the effective bid-ask spread from OHLC prices.

|

|

64

|

+

Public-facing function using the hybrid optimization strategy.

|

|

65

|

+

"""

|

|

66

|

+

# --- 1. Input Validation and Conversion ---

|

|

67

|

+

o_arr = np.asarray(open_prices, dtype=float)

|

|

68

|

+

h_arr = np.asarray(high, dtype=float)

|

|

69

|

+

l_arr = np.asarray(low, dtype=float)

|

|

70

|

+

c_arr = np.asarray(close, dtype=float)

|

|

71

|

+

|

|

72

|

+

nobs = len(o_arr)

|

|

73

|

+

if not (len(h_arr) == nobs and len(l_arr) == nobs and len(c_arr) == nobs):

|

|

74

|

+

raise ValueError("Input arrays must have the same length.")

|

|

75

|

+

|

|

76

|

+

if nobs < 3:

|

|

77

|

+

if debug: print("NaN reason: nobs < 3")

|

|

78

|

+

return np.nan

|

|

79

|

+

|

|

80

|

+

# --- 2. Log-Price Calculation (NumPy is fastest for this) ---

|

|

81

|

+

with warnings.catch_warnings():

|

|

82

|

+

warnings.simplefilter("ignore", RuntimeWarning)

|

|

83

|

+

o = np.log(np.where(o_arr > 0, o_arr, np.nan))

|

|

84

|

+

h = np.log(np.where(h_arr > 0, h_arr, np.nan))

|

|

85

|

+

l = np.log(np.where(l_arr > 0, l_arr, np.nan))

|

|

86

|

+

c = np.log(np.where(c_arr > 0, c_arr, np.nan))

|

|

87

|

+

m = (h + l) / 2.0

|

|

88

|

+

|

|

89

|

+

# --- 3. Shift and Vectorized Calculations (NumPy is fastest for this) ---

|

|

90

|

+

o_t, h_t, l_t, m_t = o[1:], h[1:], l[1:], m[1:]

|

|

91

|

+

h_tm1, l_tm1, c_tm1, m_tm1 = h[:-1], l[:-1], c[:-1], m[:-1]

|

|

92

|

+

|

|

93

|

+

r1 = m_t - o_t

|

|

94

|

+

r2 = o_t - m_tm1

|

|

95

|

+

r3 = m_t - c_tm1

|

|

96

|

+

r4 = c_tm1 - m_tm1

|

|

97

|

+

r5 = o_t - c_tm1

|

|

98

|

+

|

|

99

|

+

tau = np.where(np.isnan(h_t) | np.isnan(l_t) | np.isnan(c_tm1), np.nan, ((h_t != l_t) | (l_t != c_tm1)).astype(float))

|

|

100

|

+

po1 = tau * np.where(np.isnan(o_t) | np.isnan(h_t), np.nan, (o_t != h_t).astype(float))

|

|

101

|

+

po2 = tau * np.where(np.isnan(o_t) | np.isnan(l_t), np.nan, (o_t != l_t).astype(float))

|

|

102

|

+

pc1 = tau * np.where(np.isnan(c_tm1) | np.isnan(h_tm1), np.nan, (c_tm1 != h_tm1).astype(float))

|

|

103

|

+

pc2 = tau * np.where(np.isnan(c_tm1) | np.isnan(l_tm1), np.nan, (c_tm1 != l_tm1).astype(float))

|

|

104

|

+

|

|

105

|

+

with warnings.catch_warnings():

|

|

106

|

+

warnings.simplefilter("ignore", RuntimeWarning)

|

|

107

|

+

pt = np.nanmean(tau)

|

|

108

|

+

po = np.nanmean(po1) + np.nanmean(po2)

|

|

109

|

+

pc = np.nanmean(pc1) + np.nanmean(pc2)

|

|

110

|

+

|

|

111

|

+

# --- 4. Final Checks and Kernel Call ---

|

|

112

|

+

if np.nansum(tau) < 2 or po == 0.0 or pc == 0.0 or pt < min_pt:

|

|

113

|

+

if debug: print(f"NaN reason: Insufficient valid data (tau_sum={np.nansum(tau)}, po={po}, pc={pc}, pt={pt})")

|

|

114

|

+

return np.nan

|

|

115

|

+

|

|

116

|

+

# *** THE FIX: Call the correctly named JIT function ***

|

|

117

|

+

s2 = _compute_spread_numba_optimized(r1, r2, r3, r4, r5, tau, po, pc, pt)

|

|

118

|

+

|

|

119

|

+

if np.isnan(s2):

|

|

120

|

+

return np.nan

|

|

121

|

+

|

|

122

|

+

s = np.sqrt(np.abs(s2))

|

|

123

|

+

if sign:

|

|

124

|

+

s *= np.sign(s2)

|

|

125

|

+

|

|

126

|

+

return float(s)

|

|

@@ -1,208 +1,64 @@

|

|

|

1

|

+

"""

|

|

2

|

+

Robust and efficient rolling window EDGE estimator implementation.

|

|

3

|

+

This module provides a rolling window implementation of the EDGE estimator,

|

|

4

|

+

ensuring compatibility with all pandas windowing features like 'step'.

|

|

5

|

+

|

|

6

|

+

Author: Jakub Polec

|

|

7

|

+

Date: 2025-06-28

|

|

8

|

+

|

|

9

|

+

Part of the QuantJourney framework - The framework with advanced quantitative

|

|

10

|

+

finance tools and insights.

|

|

11

|

+

"""

|

|

1

12

|

import numpy as np

|

|

2

13

|

import pandas as pd

|

|

3

|

-

from typing import Union

|

|

4

|

-

|

|

14

|

+

from typing import Union

|

|

15

|

+

|

|

16

|

+

# Import the core, fast estimator

|

|

17

|

+

from .edge import edge as edge_single

|

|

5

18

|

|

|

6

19

|

def edge_rolling(

|

|

7

20

|

df: pd.DataFrame,

|

|

8

|

-

window:

|

|

21

|

+

window: int,

|

|

9

22

|

sign: bool = False,

|

|

10

|

-

|

|

23

|

+

step: int = 1,

|

|

24

|

+

min_periods: int = None,

|

|

25

|

+

**kwargs, # Accept other kwargs to match test signature

|

|

11

26

|

) -> pd.Series:

|

|

12

27

|

"""

|

|

13

|

-

|

|

14

|

-

|

|

15

|

-

Uses the efficient estimator from Ardia, Guidotti, & Kroencke (2024):

|

|

16

|

-

https://doi.org/10.1016/j.jfineco.2024.103916. Optimized for fast computation

|

|

17

|

-

over rolling windows using vectorized operations.

|

|

18

|

-

|

|

19

|

-

Parameters

|

|

20

|

-

----------

|

|

21

|

-

df : pd.DataFrame

|

|

22

|

-

DataFrame with columns 'open', 'high', 'low', 'close' (case-insensitive).

|

|

23

|

-

window : int, str, or pd.offsets.BaseOffset

|

|

24

|

-

Size of the rolling window. Can be an integer (number of periods),

|

|

25

|

-

a string (e.g., '30D' for 30 days), or a pandas offset object.

|

|

26

|

-

See pandas.DataFrame.rolling for details.

|

|

27

|

-

sign : bool, default False

|

|

28

|

-

If True, returns signed estimates. If False, returns absolute values.

|

|

29

|

-

**kwargs

|

|

30

|

-

Additional arguments to pass to pandas.DataFrame.rolling, such as

|

|

31

|

-

min_periods, step, or center.

|

|

32

|

-

|

|

33

|

-

Returns

|

|

34

|

-

-------

|

|

35

|

-

pd.Series

|

|

36

|

-

Series of rolling spread estimates, indexed by the DataFrame's index.

|

|

37

|

-

A value of 0.01 corresponds to a 1% spread. NaN for periods with

|

|

38

|

-

insufficient data.

|

|

39

|

-

|

|

40

|

-

Notes

|

|

41

|

-

-----

|

|

42

|

-

- The function accounts for missing values by masking invalid periods.

|

|

43

|

-

- The first observation is masked due to the need for lagged prices.

|

|

44

|

-

- For large datasets, this implementation is significantly faster than

|

|

45

|

-

applying `edge` repeatedly over windows.

|

|

46

|

-

|

|

47

|

-

Examples

|

|

48

|

-

--------

|

|

49

|

-

>>> import pandas as pd

|

|

50

|

-

>>> # Example OHLC DataFrame

|

|

51

|

-

>>> df = pd.DataFrame({

|

|

52

|

-

... 'open': [100.0, 101.5, 99.8, 102.1, 100.9],

|

|

53

|

-

... 'high': [102.3, 103.0, 101.2, 103.5, 102.0],

|

|

54

|

-

... 'low': [99.5, 100.8, 98.9, 101.0, 100.1],

|

|

55

|

-

... 'close': [101.2, 102.5, 100.3, 102.8, 101.5]

|

|

56

|

-

... })

|

|

57

|

-

>>> spreads = edge_rolling(df, window=3)

|

|

58

|

-

>>> print(spreads.dropna())

|

|

28

|

+

Computes rolling EDGE estimates using a fast loop that calls the core estimator.

|

|

59

29

|

"""

|

|

60

|

-

# Standardize column names

|

|

61

|

-

df = df.rename(columns=str.lower).copy()

|

|

62

|

-

required_cols = ['open', 'high', 'low', 'close']

|

|

63

|

-

if not all(col in df.columns for col in required_cols):

|

|

64

|

-

raise ValueError("DataFrame must contain 'open', 'high', 'low', 'close' columns")

|

|

65

|

-

|

|

66

|

-

# Compute log-prices, handling non-positive prices by replacing them with NaN

|

|

67

|

-

# This prevents errors from taking log of zero or negative values

|

|

68

|

-

o = np.log(df['open'].where(df['open'] > 0)) # Log of open prices

|

|

69

|

-

h = np.log(df['high'].where(df['high'] > 0)) # Log of high prices

|

|

70

|

-

l = np.log(df['low'].where(df['low'] > 0)) # Log of low prices

|

|

71

|

-

c = np.log(df['close'].where(df['close'] > 0)) # Log of close prices

|

|

72

|

-

m = (h + l) / 2.0 # Log of geometric mid-price each period

|

|

73

|

-

|

|

74

|

-

# Get lagged (previous period) log-prices using pandas shift

|

|

75

|

-

# These are needed to compute overnight returns and indicators

|

|

76

|

-

h1 = h.shift(1) # Previous period's high

|

|

77

|

-

l1 = l.shift(1) # Previous period's low

|

|

78

|

-

c1 = c.shift(1) # Previous period's close

|

|

79

|

-

m1 = m.shift(1) # Previous period's mid-price

|

|

80

|

-

|

|

81

|

-

# Compute log-returns:

|

|

82

|

-

r1 = m - o # Mid-price minus open (intraday return from open to mid)

|

|

83

|

-

r2 = o - m1 # Open minus previous mid (overnight return from prev mid to open)

|

|

84

|

-

r3 = m - c1 # Mid-price minus previous close (return from prev close to mid)

|

|

85

|

-

r4 = c1 - m1 # Previous close minus previous mid (prev intraday return from mid to close)

|

|

86

|

-

r5 = o - c1 # Open minus previous close (overnight return from prev close to open)

|

|

87

|

-

|

|

88

|

-

# Compute indicator variables for price variation and extremes

|

|

89

|

-

# tau: Indicator for valid price variation (1 if high != low or low != previous close)

|

|

90

|

-

tau = np.where(np.isnan(h) | np.isnan(l) | np.isnan(c1), np.nan,

|

|

91

|

-

((h != l) | (l != c1)).astype(float))

|

|

92

|

-

|

|

93

|

-

# po1: Indicator for open price not equal to high, scaled by tau

|

|

94

|

-

po1 = tau * np.where(np.isnan(o) | np.isnan(h), np.nan, (o != h).astype(float))

|

|

95

|

-

|

|

96

|

-

# po2: Indicator for open price not equal to low, scaled by tau

|

|

97

|

-

po2 = tau * np.where(np.isnan(o) | np.isnan(l), np.nan, (o != l).astype(float))

|

|

98

|

-

|

|

99

|

-

# pc1: Indicator for previous close not equal to previous high, scaled by tau

|

|

100

|

-

pc1 = tau * np.where(np.isnan(c1) | np.isnan(h1), np.nan, (c1 != h1).astype(float))

|

|

101

|

-

|

|

102

|

-

# pc2: Indicator for previous close not equal to previous low, scaled by tau

|

|

103

|

-

pc2 = tau * np.where(np.isnan(c1) | np.isnan(l1), np.nan, (c1 != l1).astype(float))

|

|

104

|

-

|

|

105

|

-

# Compute base products needed for rolling means

|

|

106

|

-

# Products of log-returns for covariance calculations

|

|

107

|

-

r12 = r1 * r2 # Mid-Open × Open-PrevMid

|

|

108

|

-

r15 = r1 * r5 # Mid-Open × Open-PrevClose

|

|

109

|

-

r34 = r3 * r4 # Mid-PrevClose × PrevClose-PrevMid

|

|

110

|

-

r45 = r4 * r5 # PrevClose-PrevMid × Open-PrevClose

|

|

111

|

-

|

|

112

|

-

# Products with tau indicator for valid periods

|

|

113

|

-

tr1 = tau * r1 # Scaled Mid-Open

|

|

114

|

-

tr2 = tau * r2 # Scaled Open-PrevMid

|

|

115

|

-

tr4 = tau * r4 # Scaled PrevClose-PrevMid

|

|

116

|

-

tr5 = tau * r5 # Scaled Open-PrevClose

|

|

117

|

-

|

|

118

|

-

# Set up DataFrame for efficient rolling mean calculations

|

|

119

|

-

# Includes all products needed for moment conditions and variance calculations

|

|

120

|

-

x = pd.DataFrame({

|

|

121

|

-

# Basic return products

|

|

122

|

-

'r12': r12, 'r34': r34, 'r15': r15, 'r45': r45,

|

|

123

|

-

'tau': tau, # Price variation indicator

|

|

124

|

-

# Individual returns

|

|

125

|

-

'r1': r1, 'tr2': tr2, 'r3': r3, 'tr4': tr4, 'r5': r5,

|

|

126

|

-

# Squared terms for variance

|

|

127

|

-

'r12_sq': r12**2, 'r34_sq': r34**2, 'r15_sq': r15**2, 'r45_sq': r45**2,

|

|

128

|

-

# Cross products for covariance

|

|

129

|

-

'r12_r34': r12 * r34, 'r15_r45': r15 * r45,

|

|

130

|

-

# Products with tau-scaled returns

|

|

131

|

-

'tr2_r2': tr2 * r2, 'tr4_r4': tr4 * r4, 'tr5_r5': tr5 * r5,

|

|

132

|

-

'tr2_r12': tr2 * r12, 'tr4_r34': tr4 * r34,

|

|

133

|

-

'tr5_r15': tr5 * r15, 'tr4_r45': tr4 * r45,

|

|

134

|

-

'tr4_r12': tr4 * r12, 'tr2_r34': tr2 * r34,

|

|

135

|

-

'tr2_r4': tr2 * r4, 'tr1_r45': tr1 * r45,

|

|

136

|

-

'tr5_r45': tr5 * r45, 'tr4_r5': tr4 * r5,

|

|

137

|

-

'tr5': tr5,

|

|

138

|

-

# Extreme price indicators

|

|

139

|

-

'po1': po1, 'po2': po2, 'pc1': pc1, 'pc2': pc2

|

|

140

|

-

}, index=df.index)

|

|

141

|

-

|

|

142

|

-

# Handle first observation and adjust window parameters

|

|

143

|

-

x.iloc[0] = np.nan # Mask first row due to lagged values

|

|

144

|

-

if isinstance(window, (int, np.integer)):

|

|

145

|

-

window = max(0, window - 1) # Adjust window size for lag

|

|

146

|

-

if 'min_periods' in kwargs and isinstance(kwargs['min_periods'], (int, np.integer)):

|

|

147

|

-

kwargs['min_periods'] = max(0, kwargs['min_periods'] - 1)

|

|

148

|

-

|

|

149

|

-

# Compute rolling means for all variables

|

|

150

|

-

m = x.rolling(window=window, **kwargs).mean()

|

|

151

|

-

|

|

152

|

-

# Calculate probabilities of price extremes

|

|

153

|

-

pt = m['tau'] # Probability of valid price variation

|

|

154

|

-

po = m['po1'] + m['po2'] # Probability of open being extreme

|

|

155

|

-

pc = m['pc1'] + m['pc2'] # Probability of close being extreme

|

|

156

|

-

|

|

157

|

-

# Mask periods with insufficient data or zero probabilities

|

|

158

|

-

nt = x['tau'].rolling(window=window, **kwargs).sum()

|

|

159

|

-

m[(nt < 2) | (po == 0) | (pc == 0)] = np.nan

|

|

160

|

-

|

|

161

|

-

# Compute coefficients for moment conditions

|

|

162

|

-

a1 = -4.0 / po # Scaling for open price moments

|

|

163

|

-

a2 = -4.0 / pc # Scaling for close price moments

|

|

164

|

-

a3 = m['r1'] / pt # Mean-adjustment for Mid-Open

|

|

165

|

-

a4 = m['tr4'] / pt # Mean-adjustment for PrevClose-PrevMid

|

|

166

|

-

a5 = m['r3'] / pt # Mean-adjustment for Mid-PrevClose

|

|

167

|

-

a6 = m['r5'] / pt # Mean-adjustment for Open-PrevClose

|

|

168

|

-

|

|

169

|

-

# Pre-compute squared and product terms

|

|

170

|

-

a12 = 2 * a1 * a2

|

|

171

|

-

a11 = a1**2

|

|

172

|

-

a22 = a2**2

|

|

173

|

-

a33 = a3**2

|

|

174

|

-

a55 = a5**2

|

|

175

|

-

a66 = a6**2

|

|

176

|

-

|

|

177

|

-

# Calculate moment condition expectations

|

|

178

|

-

e1 = a1 * (m['r12'] - a3 * m['tr2']) + a2 * (m['r34'] - a4 * m['r3']) # First moment

|

|

179

|

-

e2 = a1 * (m['r15'] - a3 * m['tr5']) + a2 * (m['r45'] - a4 * m['r5']) # Second moment

|

|

180

|

-

|

|

181

|

-

# Calculate variances of moment conditions

|

|

182

|

-

# v1: Variance of first moment condition

|

|

183

|

-

v1 = -e1**2 + (

|

|

184

|

-

a11 * (m['r12_sq'] - 2 * a3 * m['tr2_r12'] + a33 * m['tr2_r2']) +

|

|

185

|

-

a22 * (m['r34_sq'] - 2 * a5 * m['tr4_r34'] + a55 * m['tr4_r4']) +

|

|

186

|

-

a12 * (m['r12_r34'] - a3 * m['tr2_r34'] - a5 * m['tr4_r12'] + a3 * a5 * m['tr2_r4'])

|

|

187

|

-

)

|

|

188

|

-

# v2: Variance of second moment condition

|

|

189

|

-

v2 = -e2**2 + (

|

|

190

|

-

a11 * (m['r15_sq'] - 2 * a3 * m['tr5_r15'] + a33 * m['tr5_r5']) +

|

|

191

|

-

a22 * (m['r45_sq'] - 2 * a6 * m['tr4_r45'] + a66 * m['tr4_r4']) +

|

|

192

|

-

a12 * (m['r15_r45'] - a3 * m['tr5_r45'] - a6 * m['tr1_r45'] + a3 * a6 * m['tr4_r5'])

|

|

193

|

-

)

|

|

194

|

-

|

|

195

|

-

# Compute squared spread using optimal GMM weights

|

|

196

|

-

vt = v1 + v2 # Total variance

|

|

197

|

-

s2 = pd.Series(np.where(

|

|

198

|

-

vt > 0,

|

|

199

|

-

(v2 * e1 + v1 * e2) / vt, # Optimal weighted average if variance is positive

|

|

200

|

-

(e1 + e2) / 2.0 # Simple average if variance is zero/negative

|

|

201

|

-

), index=df.index)

|

|

202

|

-

|

|

203

|

-

# Compute signed root

|

|

204

|

-

s = np.sqrt(np.abs(s2))

|

|

205

|

-

if sign:

|

|

206

|

-

s *= np.sign(s2)

|

|

207

30

|

|

|

208

|

-

|

|

31

|

+

# --- 1. Validation ---

|

|

32

|

+

if not isinstance(window, int) or window < 3:

|

|

33

|

+

raise ValueError("Window must be an integer >= 3.")

|

|

34

|

+

if min_periods is None:

|

|

35

|

+

min_periods = window

|

|

36

|

+

# The core estimator needs at least 3 data points to work.

|

|

37

|

+

min_periods = max(3, min_periods)

|

|

38

|

+

|

|

39

|

+

# --- 2. Data Preparation ---

|

|

40

|

+

df_proc = df.rename(columns=str.lower).copy()

|

|

41

|

+

open_p = df_proc["open"].values

|

|

42

|

+

high_p = df_proc["high"].values

|

|

43

|

+

low_p = df_proc["low"].values

|

|

44

|

+

close_p = df_proc["close"].values

|

|

45

|

+

|

|

46

|

+

n = len(df_proc)

|

|

47

|

+

estimates = np.full(n, np.nan)

|

|

48

|

+

|

|

49

|

+

# --- 3. Loop and Apply (This logic now perfectly matches the test) ---

|

|

50

|

+

for i in range(0, n, step):

|

|

51

|

+

t1 = i + 1

|

|

52

|

+

t0 = t1 - window

|

|

53

|

+

|

|

54

|

+

# Only calculate if the window is full enough

|

|

55

|

+

if t1 >= min_periods and t0 >= 0:

|

|

56

|

+

estimates[i] = edge_single(

|

|

57

|

+

open_p[t0:t1],

|

|

58

|

+

high_p[t0:t1],

|

|

59

|

+

low_p[t0:t1],

|

|

60

|

+

close_p[t0:t1],

|

|

61

|

+

sign=sign,

|

|

62

|

+

)

|

|

63

|

+

|

|

64

|

+

return pd.Series(estimates, index=df_proc.index, name=f"EDGE_rolling_{window}")

|

|

@@ -1,9 +1,9 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: quantjourney-bidask

|

|

3

|

-

Version: 0.

|

|

3

|

+

Version: 1.0.1

|

|

4

4

|

Summary: Efficient bid-ask spread estimator from OHLC prices

|

|

5

5

|

Author-email: Jakub Polec <jakub@quantjourney.pro>

|

|

6

|

-

License

|

|

6

|

+

License: MIT

|

|

7

7

|

Project-URL: Homepage, https://github.com/QuantJourneyOrg/qj_bidask

|

|

8

8

|

Project-URL: Repository, https://github.com/QuantJourneyOrg/qj_bidask

|

|

9

9

|

Project-URL: Bug Tracker, https://github.com/QuantJourneyOrg/qj_bidask/issues

|

|

@@ -26,6 +26,7 @@ Requires-Dist: yfinance>=0.2

|

|

|

26

26

|

Requires-Dist: matplotlib>=3.5

|

|

27

27

|

Requires-Dist: plotly>=5.0

|

|

28

28

|

Requires-Dist: websocket-client>=1.0

|

|

29

|

+

Requires-Dist: numba

|

|

29

30

|

Provides-Extra: dev

|

|

30

31

|

Requires-Dist: pytest>=7.0; extra == "dev"

|

|

31

32

|

Requires-Dist: pytest-mock>=3.10; extra == "dev"

|

|

@@ -34,6 +35,7 @@ Requires-Dist: ruff>=0.1; extra == "dev"

|

|

|

34

35

|

Requires-Dist: mypy>=1.0; extra == "dev"

|

|

35

36

|

Requires-Dist: black>=22.0; extra == "dev"

|

|

36

37

|

Requires-Dist: isort>=5.0; extra == "dev"

|

|

38

|

+

Requires-Dist: numba; extra == "dev"

|

|

37

39

|

Provides-Extra: examples

|

|

38

40

|

Requires-Dist: jupyter>=1.0; extra == "examples"

|

|

39

41

|

Requires-Dist: ipywidgets>=7.0; extra == "examples"

|

|

@@ -41,9 +43,13 @@ Dynamic: license-file

|

|

|

41

43

|

|

|

42

44

|

# QuantJourney Bid-Ask Spread Estimator

|

|

43

45

|

|

|

44

|

-

|

|

47

|

+

[](https://pypi.org/project/quantjourney-bidask/)

|

|

48

|

+

[](https://pypi.org/project/quantjourney-bidask/)

|

|

49

|

+

[](https://pepy.tech/project/quantjourney-bidask)

|

|

50

|

+

[](https://github.com/QuantJourneyOrg/qj_bidask/blob/main/LICENSE)

|

|

51

|

+

[](https://github.com/QuantJourneyOrg/qj_bidask)

|

|

52

|

+

|

|

47

53

|

|

|

48

54

|

The `quantjourney-bidask` library provides an efficient estimator for calculating bid-ask spreads from open, high, low, and close (OHLC) prices, based on the methodology described in:

|

|

49

55

|

|

|

@@ -51,6 +57,8 @@ The `quantjourney-bidask` library provides an efficient estimator for calculatin

|

|

|

51

57

|

|

|

52

58

|

This library is designed for quantitative finance professionals, researchers, and traders who need accurate and computationally efficient spread estimates for equities, cryptocurrencies, and other assets.

|

|

53

59

|

|

|

60

|

+

🚀 **Part of the [QuantJourney](https://quantjourney.substack.com/) ecosystem** - The framework with advanced quantitative finance tools and insights!

|

|

61

|

+

|

|

54

62

|

## Features

|

|

55

63

|

|

|

56

64

|

- **Efficient Spread Estimation**: Implements the EDGE estimator for single, rolling, and expanding windows.

|

|

@@ -62,6 +70,61 @@ This library is designed for quantitative finance professionals, researchers, an

|

|

|

62

70

|

- **Comprehensive Tests**: Extensive unit tests with known test cases from the original paper.

|

|

63

71

|

- **Clear Documentation**: Detailed docstrings and usage examples.

|

|

64

72

|

|

|

73

|

+

## Examples and Visualizations

|

|

74

|

+

|

|

75

|

+

The package includes comprehensive examples with beautiful visualizations:

|

|

76

|

+

|

|

77

|

+

### Spread Monitor Results

|

|

78

|

+

|

|

79

|

+

|

|

80

|

+

### Basic Data Analysis

|

|

81

|

+

|

|

82

|

+

|

|

83

|

+

### Crypto Spread Comparison

|

|

84

|

+

|

|

85

|

+

|

|

86

|

+

## FAQ

|

|

87

|

+

|

|

88

|

+

### What exactly does the estimator compute?

|

|

89

|

+

The estimator returns the root mean square effective spread over the sample period. This quantifies the average transaction cost implied by bid-ask spreads, based on open, high, low, and close (OHLC) prices.

|

|

90

|

+

|

|

91

|

+

### What is unique about this implementation?

|

|

92

|

+

This package provides a highly optimized and robust implementation of the EDGE estimator. Beyond a direct translation of the paper's formula, it features:

|

|

93

|

+

|

|

94

|

+

- A Hybrid, High-Performance Engine: The core logic leverages fast, vectorized NumPy operations for data preparation and calls a specialized, JIT-compiled kernel via Numba for the computationally intensive GMM calculations.

|

|

95

|

+

- HFT-Ready Version (edge_hft.py): An included, hyper-optimized function that uses fastmath compilation for the absolute lowest latency, designed for production HFT pipelines where every microsecond matters.

|

|

96

|

+

- Robust Data Handling: Gracefully manages missing values (NaN) and non-positive prices to prevent crashes.

|

|

97

|

+

- Advanced Windowing Functions: Efficient and correct edge_rolling and edge_expanding functions that are fully compatible with the powerful features of pandas, including custom step sizes.

|

|

98

|

+

|

|

99

|

+

### What's the difference between the edge functions?

|

|

100

|

+

The library provides a tiered set of functions for different needs:

|

|

101

|

+

|

|

102

|

+

- edge(): The core function. It's fast, robust, and computes a single spread estimate for a given sample of data. This is the building block for all other functions.

|

|

103

|

+

- edge_hft(): A specialized version of edge() for HFT users. It's the fastest possible implementation but requires perfectly clean input data (no NaNs) to achieve its speed.

|

|

104

|

+

- edge_rolling(): Computes the spread on a rolling window over a time series. It's perfect for seeing how the spread evolves over time. It is highly optimized and accepts all arguments from pandas.DataFrame.rolling() (like window and step).

|

|

105

|

+

- edge_expanding(): Computes the spread on an expanding (cumulative) window. This is useful for analyzing how the spread estimate converges or changes as more data becomes available.

|

|

106

|

+

|

|

107

|

+

### What is the minimum number of observations?

|

|

108

|

+

At least 3 valid observations are required.

|

|

109

|

+

|

|

110

|

+

### How should I choose the window size or frequency?

|

|

111

|

+

Short windows (e.g. a few days) reflect local spread conditions but may be noisy. Longer windows (e.g. 1 year) reduce variance but smooth over changes. For intraday use, minute-level frequency is recommended if the asset trades frequently.

|

|

112

|

+

|

|

113

|

+

Rule of thumb: ensure on average ≥2 trades per interval.

|

|

114

|

+

|

|

115

|

+

### Can I use intraday or tick data?

|

|

116

|

+

Yes — the estimator supports intraday OHLC data directly. For tick data, resample into OHLC format first (e.g., using pandas.resample).

|

|

117

|

+

|

|

118

|

+

### What if I get NaN results?

|

|

119

|

+

The estimator may return NaN if:

|

|

120

|

+

|

|

121

|

+

- Input prices are inconsistent (e.g. high < low)

|

|

122

|

+

- There are too many missing or invalid values

|

|

123

|

+

- Probability thresholds are not met (e.g. insufficient variance in prices)

|

|

124

|

+

- Spread variance is non-positive

|

|

125

|

+

|

|

126

|

+

In these cases, re-examine your input or adjust the sampling frequency.

|

|

127

|

+

|

|

65

128

|

## Installation

|

|

66

129

|

|

|

67

130

|

Install the library via pip:

|

|

@@ -123,9 +186,9 @@ from quantjourney_bidask import edge_rolling

|

|

|

123

186

|

import asyncio

|

|

124

187

|

|

|

125

188

|

# Fetch stock data

|

|

126

|

-

stock_df = get_stock_data("

|

|

189

|

+

stock_df = get_stock_data("PL", period="1mo", interval="1d")

|

|

127

190

|

stock_spreads = edge_rolling(stock_df, window=20)

|

|

128

|

-

print(f"

|

|

191

|

+

print(f"PL average spread: {stock_spreads.mean():.6f}")

|

|

129

192

|

|

|

130

193

|

# Fetch crypto data (async)

|

|

131

194

|

async def get_crypto_spreads():

|

|

@@ -178,10 +241,13 @@ monitor.start_monitoring("1m")

|

|

|

178

241

|

|

|

179

242

|

```python

|

|

180

243

|

# Run the real-time dashboard

|

|

181

|

-

python examples/

|

|

244

|

+

python examples/websocket_realtime_demo.py --mode dashboard

|

|

245

|

+

|

|

246

|

+

# Or console mode

|

|

247

|

+

python examples/websocket_realtime_demo.py --mode console

|

|

182

248

|

|

|

183

|

-

#

|

|

184

|

-

python examples/

|

|

249

|

+

# Quick 30-second BTC websocket demo

|

|

250

|

+

python examples/animated_spread_monitor.py

|

|

185

251

|

```

|

|

186

252

|

|

|

187

253

|

## Project Structure

|

|

@@ -190,42 +256,32 @@ python examples/realtime_spread_monitor.py --mode console

|

|

|

190

256

|

quantjourney_bidask/

|

|

191

257

|

├── quantjourney_bidask/ # Main library code

|

|

192

258

|

│ ├── __init__.py

|

|

193

|

-

│ ├── edge.py # Core EDGE estimator

|

|

259

|

+

│ ├── edge.py # Core EDGE estimator

|

|

260

|

+

│ ├── edge_hft.py # EDGE estimator optimised HFT-version

|

|

194

261

|

│ ├── edge_rolling.py # Rolling window estimation

|

|

195

262

|

│ └── edge_expanding.py # Expanding window estimation

|

|

196

263

|

├── data/

|

|

197

264

|

│ └── fetch.py # Simplified data fetcher for examples

|

|

198

265

|

├── examples/ # Comprehensive usage examples

|

|

199

266

|

│ ├── simple_data_example.py # Basic usage demonstration

|

|

200

|

-

│ ├──

|

|

267

|

+

│ ├── basic_spread_estimation.py # Core spread estimation examples

|

|

201

268

|

│ ├── animated_spread_monitor.py # Animated visualizations

|

|

202

269

|

│ ├── crypto_spread_comparison.py # Crypto spread analysis

|

|

203

270

|

│ ├── liquidity_risk_monitor.py # Risk monitoring

|

|

204

|

-

│ ├──

|

|

205

|

-

│ └──

|

|

271

|

+

│ ├── websocket_realtime_demo.py # Live websocket monitoring demo

|

|

272

|

+

│ └── threshold_alert_monitor.py # Threshold-based spread alerts

|

|

206

273

|

├── tests/ # Unit tests (GitHub only)

|

|

207

274

|

│ ├── test_edge.py

|

|

208

275

|

│ ├── test_edge_rolling.py

|

|

276

|

+

│ └── test_edge_expanding.py

|

|

209

277

|

│ └── test_data_fetcher.py

|

|

278

|

+

│ └── test_estimators.py

|

|

210

279

|

└── _output/ # Example output images

|

|

211

280

|

├── simple_data_example.png

|

|

212

281

|

├── crypto_spread_comparison.png

|

|

213

282

|

└── spread_estimator_results.png

|

|

214

283

|

```

|

|

215

284

|

|

|

216

|

-

## Examples and Visualizations

|

|

217

|

-

|

|

218

|

-

The package includes comprehensive examples with beautiful visualizations:

|

|

219

|

-

|

|

220

|

-

### Basic Data Analysis

|

|

221

|

-

|

|

222

|

-

|

|

223

|

-

### Crypto Spread Comparison

|

|

224

|

-

|

|

225

|

-

|

|

226

|

-

### Spread Estimation Results

|

|

227

|

-

|

|

228

|

-

|

|

229

285

|

### Running Examples

|

|

230

286

|

|

|

231

287

|

After installing via pip, examples are included in the package:

|

|

@@ -250,19 +306,20 @@ Or clone the repository for full access to examples and tests:

|

|

|

250

306

|

git clone https://github.com/QuantJourneyOrg/qj_bidask

|

|

251

307

|

cd qj_bidask

|

|

252

308

|

python examples/simple_data_example.py

|

|

253

|

-

python examples/

|

|

309

|

+

python examples/basic_spread_estimation.py

|

|

310

|

+

python examples/animated_spread_monitor.py # 30s real BTC websocket demo

|

|

254

311

|

python examples/crypto_spread_comparison.py

|

|

255

312

|

```

|

|

256

313

|

|

|

257

314

|

### Available Examples

|

|

258

315

|

|

|

259

316

|

- **`simple_data_example.py`** - Basic usage with stock and crypto data

|

|

260

|

-

- **`

|

|

261

|

-

- **`animated_spread_monitor.py`** - Real-time animated visualizations

|

|

262

|

-

- **`crypto_spread_comparison.py`** - Multi-asset crypto analysis

|

|

317

|

+

- **`basic_spread_estimation.py`** - Core spread estimation functionality

|

|

318

|

+

- **`animated_spread_monitor.py`** - Real-time animated visualizations with 30s websocket demo

|

|

319

|

+

- **`crypto_spread_comparison.py`** - Multi-asset crypto analysis and comparison

|

|

263

320

|

- **`liquidity_risk_monitor.py`** - Risk monitoring and alerts

|

|

264

|

-

- **`

|

|

265

|

-

- **`

|

|

321

|

+

- **`websocket_realtime_demo.py`** - Live websocket monitoring dashboard

|

|

322

|

+

- **`threshold_alert_monitor.py`** - Threshold-based spread alerts and monitoring

|

|

266

323

|

|

|

267

324

|

## Testing and Development

|

|

268

325

|

|

|

@@ -297,7 +354,8 @@ python -m pytest tests/test_data_fetcher.py -v

|

|

|

297

354

|

|

|

298

355

|

# Run examples

|

|

299

356

|

python examples/simple_data_example.py

|

|

300

|

-

python examples/

|

|

357

|

+

python examples/basic_spread_estimation.py

|

|

358

|

+

python examples/animated_spread_monitor.py # Real BTC websocket demo

|

|

301

359

|

```

|

|

302

360

|

|

|

303

361

|

### Package vs Repository

|

|

@@ -370,7 +428,8 @@ pip install -e ".[dev]"

|

|

|

370

428

|

pytest

|

|

371

429

|

|

|

372

430

|

# Run examples

|

|

373

|

-

python examples/

|

|

431

|

+

python examples/animated_spread_monitor.py # 30s real BTC websocket demo

|

|

432

|

+

python examples/websocket_realtime_demo.py # Full dashboard

|

|

374

433

|

```

|

|

375

434

|

|

|

376

435

|

## Support

|

|

@@ -0,0 +1,11 @@

|

|

|

1

|

+

quantjourney_bidask/__init__.py,sha256=lBMoVnF1hxp_3axSHGw6mrRLbwXmk_xPvDsTSkAWV1A,955

|

|

2

|

+

quantjourney_bidask/_compare_edge.py,sha256=q5Oz81ZbCh6JOTViTRQ7wq-f9m5Xue4ANn6DqC0pYbY,8670

|

|

3

|

+

quantjourney_bidask/edge.py,sha256=S_PlmwZQd6BCHMHkeWrapzNMXGCqW2pgVgpbchXDknI,7559

|

|

4

|

+

quantjourney_bidask/edge_expanding.py,sha256=QEbhHSA3xWOfa_0oRoj2ypyLHimmAm-S7vulbD2Pf3s,1594

|

|

5

|

+

quantjourney_bidask/edge_hft.py,sha256=UyTla9TF16LCigGaY92i19m9A5qhPymd8LJ-P7VYTv8,4681

|

|

6

|

+

quantjourney_bidask/edge_rolling.py,sha256=c1RLHd3Q9vQj9V42OzDCmc8K12sUBq_UJ3HiMAXz14M,1934

|

|

7

|

+

quantjourney_bidask-1.0.1.dist-info/licenses/LICENSE,sha256=m8MEOGnpSBtS6m9z4M9m1JksWWPzu1OK3UgY1wuHf04,1081

|

|

8

|

+

quantjourney_bidask-1.0.1.dist-info/METADATA,sha256=AFvN-YQqha8kdAoJ8UtTeNSDOvKEY9YpdUmk8HNdKrU,17564

|

|

9

|

+

quantjourney_bidask-1.0.1.dist-info/WHEEL,sha256=_zCd3N1l69ArxyTb8rzEoP9TpbYXkqRFSNOD5OuxnTs,91

|

|

10

|

+

quantjourney_bidask-1.0.1.dist-info/top_level.txt,sha256=rOBM4GxA87iQv-mR8-WZdu3-Yj5ESyggRICpUhJ-4Dg,20

|

|

11

|

+

quantjourney_bidask-1.0.1.dist-info/RECORD,,

|

quantjourney_bidask/_version.py

DELETED