pygpt-net 2.5.19__py3-none-any.whl → 2.5.21__py3-none-any.whl


- pygpt_net/CHANGELOG.txt +13 -0

- pygpt_net/__init__.py +3 -3

- pygpt_net/app.py +8 -4

- pygpt_net/container.py +3 -3

- pygpt_net/controller/chat/command.py +4 -4

- pygpt_net/controller/chat/input.py +2 -2

- pygpt_net/controller/chat/stream.py +6 -2

- pygpt_net/controller/config/placeholder.py +28 -14

- pygpt_net/controller/lang/custom.py +2 -2

- pygpt_net/controller/mode/__init__.py +22 -1

- pygpt_net/controller/model/__init__.py +2 -2

- pygpt_net/controller/model/editor.py +6 -63

- pygpt_net/controller/model/importer.py +9 -7

- pygpt_net/controller/presets/editor.py +8 -8

- pygpt_net/core/agents/legacy.py +2 -2

- pygpt_net/core/bridge/__init__.py +5 -4

- pygpt_net/core/bridge/worker.py +5 -2

- pygpt_net/core/command/__init__.py +10 -8

- pygpt_net/core/debug/presets.py +2 -2

- pygpt_net/core/experts/__init__.py +2 -2

- pygpt_net/core/idx/chat.py +7 -20

- pygpt_net/core/idx/llm.py +27 -28

- pygpt_net/core/llm/__init__.py +25 -3

- pygpt_net/core/models/__init__.py +83 -9

- pygpt_net/core/modes/__init__.py +2 -2

- pygpt_net/core/presets/__init__.py +3 -3

- pygpt_net/core/prompt/__init__.py +5 -5

- pygpt_net/core/render/web/renderer.py +16 -16

- pygpt_net/core/tokens/__init__.py +3 -3

- pygpt_net/core/updater/__init__.py +5 -3

- pygpt_net/data/config/config.json +5 -3

- pygpt_net/data/config/models.json +1302 -3088

- pygpt_net/data/config/modes.json +1 -7

- pygpt_net/data/config/settings.json +60 -0

- pygpt_net/data/css/web-chatgpt.css +2 -2

- pygpt_net/data/locale/locale.de.ini +2 -2

- pygpt_net/data/locale/locale.en.ini +12 -4

- pygpt_net/data/locale/locale.es.ini +2 -2

- pygpt_net/data/locale/locale.fr.ini +2 -2

- pygpt_net/data/locale/locale.it.ini +2 -2

- pygpt_net/data/locale/locale.pl.ini +2 -2

- pygpt_net/data/locale/locale.uk.ini +2 -2

- pygpt_net/data/locale/locale.zh.ini +2 -2

- pygpt_net/item/model.py +49 -34

- pygpt_net/plugin/base/plugin.py +6 -5

- pygpt_net/provider/core/config/patch.py +18 -1

- pygpt_net/provider/core/model/json_file.py +7 -7

- pygpt_net/provider/core/model/patch.py +56 -7

- pygpt_net/provider/core/preset/json_file.py +4 -4

- pygpt_net/provider/gpt/__init__.py +9 -17

- pygpt_net/provider/gpt/chat.py +90 -20

- pygpt_net/provider/gpt/responses.py +58 -21

- pygpt_net/provider/llms/anthropic.py +2 -1

- pygpt_net/provider/llms/azure_openai.py +11 -7

- pygpt_net/provider/llms/base.py +3 -2

- pygpt_net/provider/llms/deepseek_api.py +3 -1

- pygpt_net/provider/llms/google.py +2 -1

- pygpt_net/provider/llms/hugging_face.py +8 -5

- pygpt_net/provider/llms/hugging_face_api.py +3 -1

- pygpt_net/provider/llms/local.py +2 -1

- pygpt_net/provider/llms/ollama.py +8 -6

- pygpt_net/provider/llms/openai.py +11 -7

- pygpt_net/provider/llms/perplexity.py +109 -0

- pygpt_net/provider/llms/x_ai.py +108 -0

- pygpt_net/ui/dialog/about.py +5 -5

- pygpt_net/ui/dialog/preset.py +5 -5

- {pygpt_net-2.5.19.dist-info → pygpt_net-2.5.21.dist-info}/METADATA +173 -285

- {pygpt_net-2.5.19.dist-info → pygpt_net-2.5.21.dist-info}/RECORD +71 -69

- {pygpt_net-2.5.19.dist-info → pygpt_net-2.5.21.dist-info}/LICENSE +0 -0

- {pygpt_net-2.5.19.dist-info → pygpt_net-2.5.21.dist-info}/WHEEL +0 -0

- {pygpt_net-2.5.19.dist-info → pygpt_net-2.5.21.dist-info}/entry_points.txt +0 -0

|

@@ -1,9 +1,9 @@

|

|

|

1

1

|

Metadata-Version: 2.3

|

|

2

2

|

Name: pygpt-net

|

|

3

|

-

Version: 2.5.

|

|

4

|

-

Summary: Desktop AI Assistant powered by models: OpenAI o1,

|

|

3

|

+

Version: 2.5.21

|

|

4

|

+

Summary: Desktop AI Assistant powered by models: OpenAI o1, o3, GPT-4o, GPT-4 Vision, DALL-E 3, Llama 3, Mistral, Gemini, Claude, DeepSeek, Bielik, and other models supported by Llama Index, and Ollama. Features include chatbot, text completion, image generation, vision analysis, speech-to-text, internet access, file handling, command execution and more.

|

|

5

5

|

License: MIT

|

|

6

|

-

Keywords: py_gpt,py-gpt,pygpt,desktop,app,o1,gpt,gpt4,gpt-4o,gpt-4v,gpt3.5,gpt-4,gpt-4-vision,gpt-3.5,llama3,mistral,gemini,deepseek,bielik,claude,tts,whisper,vision,chatgpt,dall-e,chat,chatbot,assistant,text completion,image generation,ai,api,openai,api key,langchain,llama-index,ollama,presets,ui,qt,pyside

|

|

6

|

+

Keywords: py_gpt,py-gpt,pygpt,desktop,app,o1,gpt,gpt4,gpt-4o,gpt-4v,gpt3.5,gpt-4,gpt-4-vision,gpt-3.5,llama3,mistral,gemini,grok,deepseek,bielik,claude,tts,whisper,vision,chatgpt,dall-e,chat,chatbot,assistant,text completion,image generation,ai,api,openai,api key,langchain,llama-index,ollama,presets,ui,qt,pyside

|

|

7

7

|

Author: Marcin Szczyglinski

|

|

8

8

|

Author-email: info@pygpt.net

|

|

9

9

|

Requires-Python: >=3.10,<3.13

|

|

@@ -35,13 +35,9 @@ Requires-Dist: httpx (>=0.27.2,<0.28.0)

|

|

|

35

35

|

Requires-Dist: httpx-socks (>=0.9.2,<0.10.0)

|

|

36

36

|

Requires-Dist: ipykernel (>=6.29.5,<7.0.0)

|

|

37

37

|

Requires-Dist: jupyter_client (>=8.6.3,<9.0.0)

|

|

38

|

-

Requires-Dist:

|

|

39

|

-

Requires-Dist: langchain-community (>=0.2.19,<0.3.0)

|

|

40

|

-

Requires-Dist: langchain-experimental (>=0.0.64,<0.0.65)

|

|

41

|

-

Requires-Dist: langchain-openai (>=0.1.25,<0.2.0)

|

|

42

|

-

Requires-Dist: llama-index (>=0.12.22,<0.13.0)

|

|

38

|

+

Requires-Dist: llama-index (>=0.12.44,<0.13.0)

|

|

43

39

|

Requires-Dist: llama-index-agent-openai (>=0.4.8,<0.5.0)

|

|

44

|

-

Requires-Dist: llama-index-core (==0.12.

|

|

40

|

+

Requires-Dist: llama-index-core (==0.12.44)

|

|

45

41

|

Requires-Dist: llama-index-embeddings-azure-openai (>=0.3.8,<0.4.0)

|

|

46

42

|

Requires-Dist: llama-index-embeddings-gemini (>=0.3.2,<0.4.0)

|

|

47

43

|

Requires-Dist: llama-index-embeddings-huggingface-api (>=0.3.1,<0.4.0)

|

|

@@ -50,12 +46,12 @@ Requires-Dist: llama-index-embeddings-openai (>=0.3.1,<0.4.0)

|

|

|

50

46

|

Requires-Dist: llama-index-llms-anthropic (>=0.6.12,<0.7.0)

|

|

51

47

|

Requires-Dist: llama-index-llms-azure-openai (>=0.3.2,<0.4.0)

|

|

52

48

|

Requires-Dist: llama-index-llms-deepseek (>=0.1.1,<0.2.0)

|

|

53

|

-

Requires-Dist: llama-index-llms-gemini (>=0.

|

|

49

|

+

Requires-Dist: llama-index-llms-gemini (>=0.5.0,<0.6.0)

|

|

54

50

|

Requires-Dist: llama-index-llms-huggingface-api (>=0.3.1,<0.4.0)

|

|

55

|

-

Requires-Dist: llama-index-llms-ollama (>=0.

|

|

56

|

-

Requires-Dist: llama-index-llms-openai (>=0.

|

|

57

|

-

Requires-Dist: llama-index-llms-openai-like (>=0.

|

|

58

|

-

Requires-Dist: llama-index-multi-modal-llms-openai (>=0.

|

|

51

|

+

Requires-Dist: llama-index-llms-ollama (>=0.6.2,<0.7.0)

|

|

52

|

+

Requires-Dist: llama-index-llms-openai (>=0.4.7,<0.5.0)

|

|

53

|

+

Requires-Dist: llama-index-llms-openai-like (>=0.4.0,<0.5.0)

|

|

54

|

+

Requires-Dist: llama-index-multi-modal-llms-openai (>=0.5.1,<0.6.0)

|

|

59

55

|

Requires-Dist: llama-index-readers-chatgpt-plugin (>=0.3.0,<0.4.0)

|

|

60

56

|

Requires-Dist: llama-index-readers-database (>=0.3.0,<0.4.0)

|

|

61

57

|

Requires-Dist: llama-index-readers-file (>=0.4.9,<0.5.0)

|

|
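The `Requires-Dist` changes in the hunks above tighten several version ranges (for example `llama-index (>=0.12.44,<0.13.0)`) and pin `llama-index-core` to `==0.12.44`. A minimal sketch of how such a comma-separated range can be read is shown below. It is an illustration only: the helper names `parse_version` and `satisfies` are hypothetical, only plain numeric versions and the operators `>=`, `<=`, `==`, `>`, `<` are handled, and real installers follow the full version-specifier rules (pre-releases, `~=`, `!=`, and so on), typically through the `packaging` library.

```python
import operator
import re

# Comparison operators supported by this simplified sketch.
OPS = {
    ">=": operator.ge,
    "<=": operator.le,
    "==": operator.eq,
    ">": operator.gt,
    "<": operator.lt,
}


def parse_version(text):
    """Turn a plain numeric version such as '0.12.44' into (0, 12, 44)."""
    return tuple(int(part) for part in text.split("."))


def _pad(a, b):
    """Pad the shorter tuple with zeros so '0.13' and '0.13.0' compare equal."""
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)), b + (0,) * (width - len(b))


def satisfies(version, specifier):
    """Return True if `version` meets every clause of a range like '>=0.12.44,<0.13.0'."""
    candidate = parse_version(version)
    for clause in specifier.split(","):
        match = re.fullmatch(r"\s*(>=|<=|==|>|<)\s*([0-9.]+)\s*", clause)
        if match is None:
            raise ValueError(f"unsupported clause: {clause!r}")
        op, bound = match.groups()
        left, right = _pad(candidate, parse_version(bound))
        if not OPS[op](left, right):
            return False
    return True


# The old lower bound (0.12.22) no longer satisfies the new llama-index range.
print(satisfies("0.12.22", ">=0.12.44,<0.13.0"))  # False
print(satisfies("0.12.44", ">=0.12.44,<0.13.0"))  # True
```

Read this way, an environment that still has `llama-index` 0.12.22 installed would need an upgrade before it meets the 2.5.21 metadata.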

@@ -100,7 +96,7 @@ Description-Content-Type: text/markdown

|

|

|

100

96

|

|

|

101

97

|

[](https://snapcraft.io/pygpt)

|

|

102

98

|

|

|

103

|

-

Release: **2.5.

|

|

99

|

+

Release: **2.5.21** | build: **2025-06-28** | Python: **>=3.10, <3.13**

|

|

104

100

|

|

|

105

101

|

> Official website: https://pygpt.net | Documentation: https://pygpt.readthedocs.io

|

|

106

102

|

>

|

|

@@ -112,7 +108,7 @@ Release: **2.5.19** | build: **2025-06-27** | Python: **>=3.10, <3.13**

|

|

|

112

108

|

|

|

113

109

|

## Overview

|

|

114

110

|

|

|

115

|

-

**PyGPT** is **all-in-one** Desktop AI Assistant that provides direct interaction with OpenAI language models, including `o1`, `gpt-4o`, `gpt-4`, `gpt-4 Vision`, and `gpt-3.5`, through the `OpenAI API`. By utilizing `

|

|

111

|

+

**PyGPT** is **all-in-one** Desktop AI Assistant that provides direct interaction with OpenAI language models, including `o1`, `o3`, `gpt-4o`, `gpt-4`, `gpt-4 Vision`, and `gpt-3.5`, through the `OpenAI API`. By utilizing `LlamaIndex`, the application also supports alternative LLMs, like those available on `HuggingFace`, locally available models via `Ollama` (like `Llama 3`,`Mistral`, `DeepSeek V3/R1` or `Bielik`), `Google Gemini`, `Anthropic Claude`, and `xAI Grok`.

|

|

116

112

|

|

|

117

113

|

This assistant offers multiple modes of operation such as chat, assistants, completions, and image-related tasks using `DALL-E 3` for generation and `gpt-4 Vision` for image analysis. **PyGPT** has filesystem capabilities for file I/O, can generate and run Python code, execute system commands, execute custom commands and manage file transfers. It also allows models to perform web searches with the `Google` and `Microsoft Bing`.

|

|

118

114

|

|

|

@@ -120,7 +116,7 @@ For audio interactions, **PyGPT** includes speech synthesis using the `Microsoft

|

|

|

120

116

|

|

|

121

117

|

**PyGPT**'s functionality extends through plugin support, allowing for custom enhancements. Its multi-modal capabilities make it an adaptable tool for a range of AI-assisted operations, such as text-based interactions, system automation, daily assisting, vision applications, natural language processing, code generation and image creation.

|

|

122

118

|

|

|

123

|

-

Multiple operation modes are included, such as chat, text completion, assistant, vision,

|

|

119

|

+

Multiple operation modes are included, such as chat, text completion, assistant, vision, Chat with Files (via `LlamaIndex`), commands execution, external API calls and image generation, making **PyGPT** a multi-tool for many AI-driven tasks.

|

|

124

120

|

|

|

125

121

|

**Video** (mp4, version `2.4.35`, build `2024-11-28`):

|

|

126

122

|

|

|

@@ -136,8 +132,8 @@ You can download compiled 64-bit versions for Windows and Linux here: https://py

|

|

|

136

132

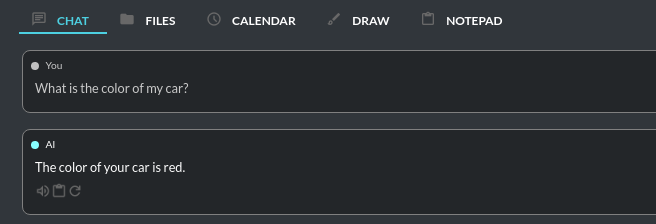

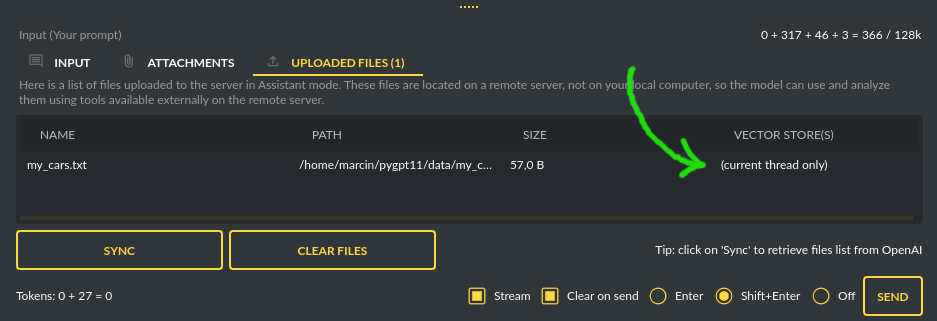

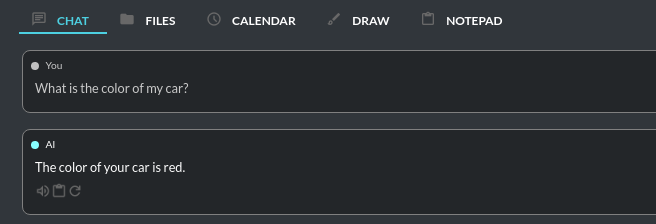

|

|

|

137

133

|

- Desktop AI Assistant for `Linux`, `Windows` and `Mac`, written in Python.

|

|

138

134

|

- Works similarly to `ChatGPT`, but locally (on a desktop computer).

|

|

139

|

-

-

|

|

140

|

-

- Supports multiple models: `o1`, `GPT-4o`, `GPT-4`, `GPT-3.5`, and any model accessible through `

|

|

135

|

+

- 11 modes of operation: Chat, Chat with Files, Chat with Audio, Research (Perplexity), Completion, Image generation, Vision, Assistants, Experts, Agents and Autonomous Mode.

|

|

136

|

+

- Supports multiple models: `o1`, `o1`, `GPT-4o`, `GPT-4`, `GPT-3.5`, and any model accessible through `LlamaIndex` and `Ollama` such as `Llama 3`, `Mistral`, `Google Gemini`, `Anthropic Claude`, `xAI Grok`, `DeepSeek V3/R1`, `Bielik`, etc.

|

|

141

137

|

- Chat with your own Files: integrated `LlamaIndex` support: chat with data such as: `txt`, `pdf`, `csv`, `html`, `md`, `docx`, `json`, `epub`, `xlsx`, `xml`, webpages, `Google`, `GitHub`, video/audio, images and other data types, or use conversation history as additional context provided to the model.

|

|

142

138

|

- Built-in vector databases support and automated files and data embedding.

|

|

143

139

|

- Included support features for individuals with disabilities: customizable keyboard shortcuts, voice control, and translation of on-screen actions into audio via speech synthesis.

|

|

@@ -147,7 +143,6 @@ You can download compiled 64-bit versions for Windows and Linux here: https://py

|

|

|

147

143

|

- Speech recognition via `OpenAI Whisper`, `Google` and `Microsoft Speech Recognition`.

|

|

148

144

|

- Real-time video camera capture in Vision mode.

|

|

149

145

|

- Image analysis via `GPT-4 Vision` and `GPT-4o`.

|

|

150

|

-

- Integrated `LangChain` support (you can connect to any LLM, e.g., on `HuggingFace`).

|

|

151

146

|

- Integrated calendar, day notes and search in contexts by selected date.

|

|

152

147

|

- Tools and commands execution (via plugins: access to the local filesystem, Python Code Interpreter, system commands execution, and more).

|

|

153

148

|

- Custom commands creation and execution.

|

|

@@ -177,7 +172,7 @@ Full Python source code is available on `GitHub`.

|

|

|

177

172

|

**PyGPT uses the user's API key - to use the GPT models,

|

|

178

173

|

you must have a registered OpenAI account and your own API key. Local models do not require any API keys.**

|

|

179

174

|

|

|

180

|

-

You can also use built-it

|

|

175

|

+

You can also use built-it LlamaIndex support to connect to other Large Language Models (LLMs),

|

|

181

176

|

such as those on HuggingFace. Additional API keys may be required.

|

|

182

177

|

|

|

183

178

|

# Installation

|

|

@@ -315,6 +310,13 @@ poetry env use python3.10

|

|

|

315

310

|

poetry shell

|

|

316

311

|

```

|

|

317

312

|

|

|

313

|

+

or (Poetry >= 2.0):

|

|

314

|

+

|

|

315

|

+

```commandline

|

|

316

|

+

poetry env use python3.10

|

|

317

|

+

poetry env activate

|

|

318

|

+

```

|

|

319

|

+

|

|

318

320

|

4. Install requirements:

|

|

319

321

|

|

|

320

322

|

```commandline

|

|

@@ -485,6 +487,114 @@ Plugin allows you to generate images in Chat mode:

|

|

|

485

487

|

|

|

486

488

|

|

|

487

489

|

|

|

490

|

+

## Chat with Files (LlamaIndex)

|

|

491

|

+

|

|

492

|

+

This mode enables chat interaction with your documents and entire context history through conversation.

|

|

493

|

+

It seamlessly incorporates `LlamaIndex` into the chat interface, allowing for immediate querying of your indexed documents.

|

|

494

|

+

|

|

495

|

+

**Querying single files**

|

|

496

|

+

|

|

497

|

+

You can also query individual files "on the fly" using the `query_file` command from the `Files I/O` plugin. This allows you to query any file by simply asking a question about that file. A temporary index will be created in memory for the file being queried, and an answer will be returned from it. From version `2.1.9` similar command is available for querying web and external content: `Directly query web content with LlamaIndex`.

|

|

498

|

+

|

|

499

|

+

**For example:**

|

|

500

|

+

|

|

501

|

+

If you have a file: `data/my_cars.txt` with content `My car is red.`

|

|

502

|

+

|

|

503

|

+

You can ask for: `Query the file my_cars.txt about what color my car is.`

|

|

504

|

+

|

|

505

|

+

And you will receive the response: `Red`.

|

|

506

|

+

|

|

507

|

+

Note: this command indexes the file only for the current query and does not persist it in the database. To store queried files also in the standard index you must enable the option `Auto-index readed files` in plugin settings. Remember to enable `+ Tools` checkbox to allow usage of tools and commands from plugins.

|

|

508

|

+

|

|

509

|

+

**Using Chat with Files mode**

|

|

510

|

+

|

|

511

|

+

In this mode, you are querying the whole index, stored in a vector store database.

|

|

512

|

+

To start, you need to index (embed) the files you want to use as additional context.

|

|

513

|

+

Embedding transforms your text data into vectors. If you're unfamiliar with embeddings and how they work, check out this article:

|

|

514

|

+

|

|

515

|

+

https://stackoverflow.blog/2023/11/09/an-intuitive-introduction-to-text-embeddings/

|

|

516

|

+

|

|

517

|

+

For a visualization from OpenAI's page, see this picture:

|

|

518

|

+

|

|

519

|

+

|

|

520

|

+

|

|

521

|

+

Source: https://cdn.openai.com/new-and-improved-embedding-model/draft-20221214a/vectors-3.svg

|

|

522

|

+

|

|

523

|

+

To index your files, simply copy or upload them into the `data` directory and initiate indexing (embedding) by clicking the `Index all` button, or right-click on a file and select `Index...`. Additionally, you have the option to utilize data from indexed files in any Chat mode by activating the `Chat with Files (LlamaIndex, inline)` plugin.

|

|

524

|

+

|

|

525

|

+

|

|

526

|

+

|

|

527

|

+

After the file(s) are indexed (embedded in vector store), you can use context from them in chat mode:

|

|

528

|

+

|

|

529

|

+

|

|

530

|

+

|

|

531

|

+

Built-in file loaders:

|

|

532

|

+

|

|

533

|

+

**Files:**

|

|

534

|

+

|

|

535

|

+

- CSV files (csv)

|

|

536

|

+

- Epub files (epub)

|

|

537

|

+

- Excel .xlsx spreadsheets (xlsx)

|

|

538

|

+

- HTML files (html, htm)

|

|

539

|

+

- IPYNB Notebook files (ipynb)

|

|

540

|

+

- Image (vision) (jpg, jpeg, png, gif, bmp, tiff, webp)

|

|

541

|

+

- JSON files (json)

|

|

542

|

+

- Markdown files (md)

|

|

543

|

+

- PDF documents (pdf)

|

|

544

|

+

- Txt/raw files (txt)

|

|

545

|

+

- Video/audio (mp4, avi, mov, mkv, webm, mp3, mpeg, mpga, m4a, wav)

|

|

546

|

+

- Word .docx documents (docx)

|

|

547

|

+

- XML files (xml)

|

|

548

|

+

|

|

549

|

+

**Web/external content:**

|

|

550

|

+

|

|

551

|

+

- Bitbucket

|

|

552

|

+

- ChatGPT Retrieval Plugin

|

|

553

|

+

- GitHub Issues

|

|

554

|

+

- GitHub Repository

|

|

555

|

+

- Google Calendar

|

|

556

|

+

- Google Docs

|

|

557

|

+

- Google Drive

|

|

558

|

+

- Google Gmail

|

|

559

|

+

- Google Keep

|

|

560

|

+

- Google Sheets

|

|

561

|

+

- Microsoft OneDrive

|

|

562

|

+

- RSS

|

|

563

|

+

- SQL Database

|

|

564

|

+

- Sitemap (XML)

|

|

565

|

+

- Twitter/X posts

|

|

566

|

+

- Webpages (crawling any webpage content)

|

|

567

|

+

- YouTube (transcriptions)

|

|

568

|

+

|

|

569

|

+

You can configure data loaders in `Settings / Indexes (LlamaIndex) / Data Loaders` by providing list of keyword arguments for specified loaders.

|

|

570

|

+

You can also develop and provide your own custom loader and register it within the application.

|

|

571

|

+

|

|

572

|

+

LlamaIndex is also integrated with context database - you can use data from database (your context history) as additional context in discussion.

|

|

573

|

+

Options for indexing existing context history or enabling real-time indexing new ones (from database) are available in `Settings / Indexes (LlamaIndex)` section.

|

|

574

|

+

|

|

575

|

+

**WARNING:** remember that when indexing content, API calls to the embedding model are used. Each indexing consumes additional tokens. Always control the number of tokens used on the OpenAI page.

|

|

576

|

+

|

|

577

|

+

**Tip:** Using the Chat with Files mode, you have default access to files manually indexed from the /data directory. However, you can use additional context by attaching a file - such additional context from the attachment does not land in the main index, but only in a temporary one, available only for the given conversation.

|

|

578

|

+

|

|

579

|

+

**Token limit:** When you use `Chat with Files` in non-query mode, LlamaIndex adds extra context to the system prompt. If you use a plugins (which also adds more instructions to system prompt), you might go over the maximum number of tokens allowed. If you get a warning that says you've used too many tokens, turn off plugins you're not using or turn off the "+ Tools" option to reduce the number of tokens used by the system prompt.

|

|

580

|

+

|

|

581

|

+

**Available vector stores** (provided by `LlamaIndex`):

|

|

582

|

+

|

|

583

|

+

```

|

|

584

|

+

- ChromaVectorStore

|

|

585

|

+

- ElasticsearchStore

|

|

586

|

+

- PinecodeVectorStore

|

|

587

|

+

- RedisVectorStore

|

|

588

|

+

- SimpleVectorStore

|

|

589

|

+

```

|

|

590

|

+

|

|

591

|

+

You can configure selected vector store by providing config options like `api_key`, etc. in `Settings -> LlamaIndex` window. See the section: `Configuration / Vector stores` for configuration reference.

|

|

592

|

+

|

|

593

|

+

|

|

594

|

+

**Configuring data loaders**

|

|

595

|

+

|

|

596

|

+

In the `Settings -> LlamaIndex -> Data loaders` section you can define the additional keyword arguments to pass into data loader instance. See the section: `Configuration / Data Loaders` for configuration reference.

|

|

597

|

+

|

|

488

598

|

|

|

489

599

|

## Chat with Audio

|

|

490

600

|

|

|
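The "Querying single files" passage in the hunk above describes building a temporary in-memory index for one file and answering from it. The sketch below illustrates that idea with the `my_cars.txt` example, under loud assumptions: it is not PyGPT's or LlamaIndex's implementation, the `TemporaryIndex` class and the `embed` and `cosine` helpers are hypothetical, and a bag-of-words count stands in for a real embedding model. It also stops at retrieval; in the real flow the retrieved text would be passed to the model to phrase the answer.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class TemporaryIndex:
    """Holds sentence-level chunks of one file in memory; nothing is persisted."""

    def __init__(self, text):
        chunks = [c.strip() for c in re.split(r"(?<=[.!?])\s+", text) if c.strip()]
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def retrieve(self, question, top_k=1):
        """Return the top_k chunks most similar to the question."""
        query = embed(question)
        ranked = sorted(
            self.entries, key=lambda entry: cosine(query, entry[1]), reverse=True
        )
        return [chunk for chunk, _ in ranked[:top_k]]


# Hypothetical file content, extending the my_cars.txt example with a second sentence.
index = TemporaryIndex("My car is red. My bike is blue.")
print(index.retrieve("What color is my car?"))  # ['My car is red.']
```

Because the index lives only in the `index` object, it disappears once the query is done, which mirrors the note that queried files are not stored in the main index unless auto-indexing is enabled.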

@@ -622,136 +732,6 @@ The vector database in use will be displayed in the list of uploaded files, on t

|

|

|

622

732

|

|

|

623

733

|

|

|

624

734

|

|

|

625

|

-

## LangChain

|

|

626

|

-

|

|

627

|

-

This mode enables you to work with models that are supported by `LangChain`. The LangChain support is integrated

|

|

628

|

-

into the application, allowing you to interact with any LLM by simply supplying a configuration

|

|

629

|

-

file for the specific model. You can add as many models as you like; just list them in the configuration

|

|

630

|

-

file named `models.json`.

|

|

631

|

-

|

|

632

|

-

Available LLMs providers supported by **PyGPT**, in `LangChain` and `Chat with Files (LlamaIndex)` modes:

|

|

633

|

-

|

|

634

|

-

```

|

|

635

|

-

- OpenAI

|

|

636

|

-

- Azure OpenAI

|

|

637

|

-

- Google (Gemini, etc.)

|

|

638

|

-

- HuggingFace

|

|

639

|

-

- Anthropic

|

|

640

|

-

- Ollama (Llama3, Mistral, etc.)

|

|

641

|

-

```

|

|

642

|

-

|

|

643

|

-

You have the ability to add custom model wrappers for models that are not available by default in **PyGPT**.

|

|

644

|

-

To integrate a new model, you can create your own wrapper and register it with the application.

|

|

645

|

-

Detailed instructions for this process are provided in the section titled `Managing models / Adding models via LangChain`.

|

|

646

|

-

|

|

647

|

-

## Chat with Files (LlamaIndex)

|

|

648

|

-

|

|

649

|

-

This mode enables chat interaction with your documents and entire context history through conversation.

|

|

650

|

-

It seamlessly incorporates `LlamaIndex` into the chat interface, allowing for immediate querying of your indexed documents.

|

|

651

|

-

|

|

652

|

-

**Querying single files**

|

|

653

|

-

|

|

654

|

-

You can also query individual files "on the fly" using the `query_file` command from the `Files I/O` plugin. This allows you to query any file by simply asking a question about that file. A temporary index will be created in memory for the file being queried, and an answer will be returned from it. From version `2.1.9` similar command is available for querying web and external content: `Directly query web content with LlamaIndex`.

|

|

655

|

-

|

|

656

|

-

**For example:**

|

|

657

|

-

|

|

658

|

-

If you have a file: `data/my_cars.txt` with content `My car is red.`

|

|

659

|

-

|

|

660

|

-

You can ask for: `Query the file my_cars.txt about what color my car is.`

|

|

661

|

-

|

|

662

|

-

And you will receive the response: `Red`.

|

|

663

|

-

|

|

664

|

-

Note: this command indexes the file only for the current query and does not persist it in the database. To store queried files also in the standard index you must enable the option `Auto-index readed files` in plugin settings. Remember to enable `+ Tools` checkbox to allow usage of tools and commands from plugins.

|

|

665

|

-

|

|

666

|

-

**Using Chat with Files mode**

|

|

667

|

-

|

|

668

|

-

In this mode, you are querying the whole index, stored in a vector store database.

|

|

669

|

-

To start, you need to index (embed) the files you want to use as additional context.

|

|

670

|

-

Embedding transforms your text data into vectors. If you're unfamiliar with embeddings and how they work, check out this article:

|

|

671

|

-

|

|

672

|

-

https://stackoverflow.blog/2023/11/09/an-intuitive-introduction-to-text-embeddings/

|

|

673

|

-

|

|

674

|

-

For a visualization from OpenAI's page, see this picture:

|

|

675

|

-

|

|

676

|

-

|

|

677

|

-

|

|

678

|

-

Source: https://cdn.openai.com/new-and-improved-embedding-model/draft-20221214a/vectors-3.svg

|

|

679

|

-

|

|

680

|

-

To index your files, simply copy or upload them into the `data` directory and initiate indexing (embedding) by clicking the `Index all` button, or right-click on a file and select `Index...`. Additionally, you have the option to utilize data from indexed files in any Chat mode by activating the `Chat with Files (LlamaIndex, inline)` plugin.

|

|

681

|

-

|

|

682

|

-

|

|

683

|

-

|

|

684

|

-

After the file(s) are indexed (embedded in vector store), you can use context from them in chat mode:

|

|

685

|

-

|

|

686

|

-

|

|

687

|

-

|

|

688

|

-

Built-in file loaders:

|

|

689

|

-

|

|

690

|

-

**Files:**

|

|

691

|

-

|

|

692

|

-

- CSV files (csv)

|

|

693

|

-

- Epub files (epub)

|

|

694

|

-

- Excel .xlsx spreadsheets (xlsx)

|

|

695

|

-

- HTML files (html, htm)

|

|

696

|

-

- IPYNB Notebook files (ipynb)

|

|

697

|

-

- Image (vision) (jpg, jpeg, png, gif, bmp, tiff, webp)

|

|

698

|

-

- JSON files (json)

|

|

699

|

-

- Markdown files (md)

|

|

700

|

-

- PDF documents (pdf)

|

|

701

|

-

- Txt/raw files (txt)

|

|

702

|

-

- Video/audio (mp4, avi, mov, mkv, webm, mp3, mpeg, mpga, m4a, wav)

|

|

703

|

-

- Word .docx documents (docx)

|

|

704

|

-

- XML files (xml)

|

|

705

|

-

|

|

706

|

-

**Web/external content:**

|

|

707

|

-

|

|

708

|

-

- Bitbucket

|

|

709

|

-

- ChatGPT Retrieval Plugin

|

|

710

|

-

- GitHub Issues

|

|

711

|

-

- GitHub Repository

|

|

712

|

-

- Google Calendar

|

|

713

|

-

- Google Docs

|

|

714

|

-

- Google Drive

|

|

715

|

-

- Google Gmail

|

|

716

|

-

- Google Keep

|

|

717

|

-

- Google Sheets

|

|

718

|

-

- Microsoft OneDrive

|

|

719

|

-

- RSS

|

|

720

|

-

- SQL Database

|

|

721

|

-

- Sitemap (XML)

|

|

722

|

-

- Twitter/X posts

|

|

723

|

-

- Webpages (crawling any webpage content)

|

|

724

|

-

- YouTube (transcriptions)

|

|

725

|

-

|

|

726

|

-

You can configure data loaders in `Settings / Indexes (LlamaIndex) / Data Loaders` by providing list of keyword arguments for specified loaders.

|

|

727

|

-

You can also develop and provide your own custom loader and register it within the application.

|

|

728

|

-

|

|

729

|

-

LlamaIndex is also integrated with context database - you can use data from database (your context history) as additional context in discussion.

|

|

730

|

-

Options for indexing existing context history or enabling real-time indexing new ones (from database) are available in `Settings / Indexes (LlamaIndex)` section.

|

|

731

|

-

|

|

732

|

-

**WARNING:** remember that when indexing content, API calls to the embedding model are used. Each indexing consumes additional tokens. Always control the number of tokens used on the OpenAI page.

|

|

733

|

-

|

|

734

|

-

**Tip:** Using the Chat with Files mode, you have default access to files manually indexed from the /data directory. However, you can use additional context by attaching a file - such additional context from the attachment does not land in the main index, but only in a temporary one, available only for the given conversation.

|

|

735

|

-

|

|

736

|

-

**Token limit:** When you use `Chat with Files` in non-query mode, LlamaIndex adds extra context to the system prompt. If you use a plugins (which also adds more instructions to system prompt), you might go over the maximum number of tokens allowed. If you get a warning that says you've used too many tokens, turn off plugins you're not using or turn off the "+ Tools" option to reduce the number of tokens used by the system prompt.

|

|

737

|

-

|

|

738

|

-

**Available vector stores** (provided by `LlamaIndex`):

|

|

739

|

-

|

|

740

|

-

```

|

|

741

|

-

- ChromaVectorStore

|

|

742

|

-

- ElasticsearchStore

|

|

743

|

-

- PinecodeVectorStore

|

|

744

|

-

- RedisVectorStore

|

|

745

|

-

- SimpleVectorStore

|

|

746

|

-

```

|

|

747

|

-

|

|

748

|

-

You can configure selected vector store by providing config options like `api_key`, etc. in `Settings -> LlamaIndex` window. See the section: `Configuration / Vector stores` for configuration reference.

|

|

749

|

-

|

|

750

|

-

|

|

751

|

-

**Configuring data loaders**

|

|

752

|

-

|

|

753

|

-

In the `Settings -> LlamaIndex -> Data loaders` section you can define the additional keyword arguments to pass into data loader instance. See the section: `Configuration / Data Loaders` for configuration reference.

|

|

754

|

-

|

|

755

735

|

|

|

756

736

|

## Agent (LlamaIndex)

|

|

757

737

|

|

|

@@ -973,7 +953,7 @@ To allow the model to manage files or python code execution, the `+ Tools` optio

|

|

|

973

953

|

|

|

974

954

|

## What is preset?

|

|

975

955

|

|

|

976

|

-

Presets in **PyGPT** are essentially templates used to store and quickly apply different configurations. Each preset includes settings for the mode you want to use (such as chat, completion, or image generation), an initial system prompt, an assigned name for the AI, a username for the session, and the desired "temperature" for the conversation. A warmer "temperature" setting allows the AI to provide more creative responses, while a cooler setting encourages more predictable replies. These presets can be used across various modes and with models accessed via the `OpenAI API` or `

|

|

956

|

+

Presets in **PyGPT** are essentially templates used to store and quickly apply different configurations. Each preset includes settings for the mode you want to use (such as chat, completion, or image generation), an initial system prompt, an assigned name for the AI, a username for the session, and the desired "temperature" for the conversation. A warmer "temperature" setting allows the AI to provide more creative responses, while a cooler setting encourages more predictable replies. These presets can be used across various modes and with models accessed via the `OpenAI API` or `LlamaIndex`.

|

|

977

957

|

|

|

978

958

|

The application lets you create as many presets as needed and easily switch among them. Additionally, you can clone an existing preset, which is useful for creating variations based on previously set configurations and experimentation.

|

|

979

959

|

|

|
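The preset description in the hunk above says a warmer "temperature" gives more creative replies and a cooler one gives more predictable replies. The sketch below shows the usual mechanism behind that claim, a softmax over scores divided by the temperature. It is a generic illustration, not code from PyGPT or any provider: the function name `softmax_with_temperature` and the example scores are assumptions, and rejecting non-positive temperatures is a choice made for this sketch.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Assumption for this sketch: non-positive temperatures are rejected.
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.0]
for temperature in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At the cooler setting most of the probability sits on the top-scoring token, so sampling is more predictable; at the warmer setting the distribution flattens and lower-ranked tokens are chosen more often.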

@@ -1079,7 +1059,7 @@ PyGPT has built-in support for models (as of 2025-06-27):

|

|

|

1079

1059

|

|

|

1080

1060

|

All models are specified in the configuration file `models.json`, which you can customize.

|

|

1081

1061

|

This file is located in your working directory. You can add new models provided directly by `OpenAI API`

|

|

1082

|

-

and those supported by `LlamaIndex`

|

|

1062

|

+

and those supported by `LlamaIndex` to this file. Configuration for LlamaIndex is placed in `llama_index` key.

|

|

1083

1063

|

|

|

1084

1064

|

## Adding a custom model

|

|

1085

1065

|

|

|

@@ -1087,13 +1067,16 @@ You can add your own models. See the section `Extending PyGPT / Adding a new mod

|

|

|

1087

1067

|

|

|

1088

1068

|

There is built-in support for those LLM providers:

|

|

1089

1069

|

|

|

1090

|

-

-

|

|

1070

|

+

- Anthropic (anthropic)

|

|

1091

1071

|

- Azure OpenAI (azure_openai)

|

|

1072

|

+

- Deepseek API (deepseek_api)

|

|

1092

1073

|

- Google (google)

|

|

1093

1074

|

- HuggingFace (huggingface)

|

|

1094

|

-

-

|

|

1075

|

+

- Local models (OpenAI API compatible)

|

|

1095

1076

|

- Ollama (ollama)

|

|

1096

|

-

-

|

|

1077

|

+

- OpenAI (openai)

|

|

1078

|

+

- Perplexity (perplexity)

|

|

1079

|

+

- xAI (x_ai)

|

|

1097

1080

|

|

|

1098

1081

|

## How to use local or non-GPT models

|

|

1099

1082

|

|

|

@@ -1531,7 +1514,7 @@ If enabled, plugin will stop after goal is reached." *Default:* `True`

|

|

|

1531

1514

|

|

|

1532

1515

|

- `Reverse roles between iterations` *reverse_roles*

|

|

1533

1516

|

|

|

1534

|

-

Only for Completion

|

|

1517

|

+

Only for Completion modes.

|

|

1535

1518

|

If enabled, this option reverses the roles (AI <> user) with each iteration. For example,

|

|

1536

1519

|

if in the previous iteration the response was generated for "Batman," the next iteration will use that

|

|

1537

1520

|

response to generate an input for "Joker." *Default:* `True`

|

|
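The `reverse_roles` behaviour described in the hunk above can be pictured with a minimal sketch. This is not the plugin's actual code: `run_iterations` and `fake_reply` are hypothetical names, and the model call is replaced with a stub so the example runs on its own.

```python
def fake_reply(speaker, message):
    # Stand-in for a real model call (assumption: any callable with this shape works).
    return f"{speaker} replies to: {message}"


def run_iterations(first_message, ai_name, user_name, steps, reply, reverse_roles=True):
    """Feed each response back as the next input, optionally swapping roles each time."""
    transcript = []
    message = first_message
    for _ in range(steps):
        response = reply(ai_name, message)
        transcript.append((ai_name, response))
        message = response  # the response becomes the next iteration's input
        if reverse_roles:
            # Swap AI <> user, so the next response is generated for the other name.
            ai_name, user_name = user_name, ai_name
    return transcript


transcript = run_iterations("Hello", "Batman", "Joker", steps=3, reply=fake_reply)
speakers = [speaker for speaker, _ in transcript]
print(speakers)
```

With `reverse_roles=False` the same name would generate every response; with it enabled, the two names alternate.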

@@ -3013,6 +2996,10 @@ Config -> Settings...

|

|

|

3013

2996

|

|

|

3014

2997

|

- `Model used for auto-summary`: Model used for context auto-summary (default: *gpt-3.5-turbo-1106*).

|

|

3015

2998

|

|

|

2999

|

+

**Remote tools**

|

|

3000

|

+

|

|

3001

|

+

Enable/disable remote tools, like Web Search or Image generation to use in OpenAI Responses API (OpenAI models and Chat mode only).

|

|

3002

|

+

|

|

3016

3003

|

**Models**

|

|

3017

3004

|

|

|

3018

3005

|

- `Max Output Tokens`: Sets the maximum number of tokens the model can generate for a single response.

|

|

@@ -3609,7 +3596,7 @@ PyGPT can be extended with:

|

|

|

3609

3596

|

|

|

3610

3597

|

**Examples (tutorial files)**

|

|

3611

3598

|

|

|

3612

|

-

See the `examples` directory in this repository with examples of custom launcher, plugin, vector store, LLM (

|

|

3599

|

+

See the `examples` directory in this repository with examples of custom launcher, plugin, vector store, LLM (LlamaIndex) provider and data loader:

|

|

3613

3600

|

|

|

3614

3601

|

- `examples/custom_launcher.py`

|

|

3615

3602

|

|

|

@@ -3665,7 +3652,7 @@ To register custom web providers:

|

|

|

3665

3652

|

|

|

3666

3653

|

## Adding a custom model

|

|

3667

3654

|

|

|

3668

|

-

To add a new model using the OpenAI API

|

|

3655

|

+

To add a new model using the OpenAI API or LlamaIndex wrapper, use the editor in `Config -> Models` or manually edit the `models.json` file by inserting the model's configuration details. If you are adding a model via LlamaIndex, be sure to include the model's name, its supported modes (either `chat`, `completion`, or both), the LLM provider (such as `OpenAI` or `HuggingFace`), and, if you are using an external API-based model, an optional `API KEY` along with any other necessary environment settings.

|

|

3669

3656

|

|

|

3670

3657

|

Example of models configuration - `%WORKDIR%/models.json`:

|

|

3671

3658

|

|

|

@@ -3679,30 +3666,8 @@ Example of models configuration - `%WORKDIR%/models.json`:

|

|

|

3679

3666

|

"langchain",

|

|

3680

3667

|

"llama_index"

|

|

3681

3668

|

],

|

|

3682

|

-

"

|

|

3683

|

-

"provider": "openai",

|

|

3684

|

-

"mode": [

|

|

3685

|

-

"chat"

|

|

3686

|

-

],

|

|

3687

|

-

"args": [

|

|

3688

|

-

{

|

|

3689

|

-

"name": "model_name",

|

|

3690

|

-

"value": "gpt-3.5-turbo",

|

|

3691

|

-

"type": "str"

|

|

3692

|

-

}

|

|

3693

|

-

],

|

|

3694

|

-

"env": [

|

|

3695

|

-

{

|

|

3696

|

-

"name": "OPENAI_API_KEY",

|

|

3697

|

-

"value": "{api_key}"

|

|

3698

|

-

}

|

|

3699

|

-

]

|

|

3700

|

-

},

|

|

3669

|

+

"provider": "openai"

|

|

3701

3670

|

"llama_index": {

|

|

3702

|

-

"provider": "openai",

|

|

3703

|

-

"mode": [

|

|

3704

|

-

"chat"

|

|

3705

|

-

],

|

|

3706

3671

|

"args": [

|

|

3707

3672

|

{

|

|

3708

3673

|

"name": "model",

|

|

@@ -3725,14 +3690,16 @@ Example of models configuration - `%WORKDIR%/models.json`:

|

|

|

3725

3690

|

|

|

3726

3691

|

There is built-in support for those LLM providers:

|

|

3727

3692

|

|

|

3728

|

-

|

|

3729

|

-

-

|

|

3730

|

-

-

|

|

3731

|

-

-

|

|

3732

|

-

-

|

|

3733

|

-

-

|

|

3734

|

-

-

|

|

3735

|

-

|

|

3693

|

+

- Anthropic (anthropic)

|

|

3694

|

+

- Azure OpenAI (azure_openai)

|

|

3695

|

+

- Deepseek API (deepseek_api)

|

|

3696

|

+

- Google (google)

|

|

3697

|

+

- HuggingFace (huggingface)

|

|

3698

|

+

- Local models (OpenAI API compatible)

|

|

3699

|

+

- Ollama (ollama)

|

|

3700

|

+

- OpenAI (openai)

|

|

3701

|

+

- Perplexity (perplexity)

|

|

3702

|

+

- xAI (x_ai)

|

|

3736

3703

|

|

|

3737

3704

|

**Tip**: `{api_key}` in `models.json` is a placeholder for the main OpenAI API KEY from the settings. It will be replaced by the configured key value.

|

|

3738

3705

|

|

|
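The `{api_key}` tip in the hunk above amounts to a simple string substitution. The sketch below only illustrates the idea: `resolve_env` is a hypothetical helper, not a PyGPT function, and the `env` entry shape (`name`/`value`) is taken from the `models.json` example quoted in this diff.

```python
def resolve_env(env_entries, api_key):
    """Return a copy of the env entries with the {api_key} placeholder filled in."""
    resolved = []
    for entry in env_entries:
        value = entry.get("value", "")
        if isinstance(value, str):
            value = value.replace("{api_key}", api_key)
        # Build a new dict so the original configuration is left untouched.
        resolved.append({**entry, "value": value})
    return resolved


env = [{"name": "OPENAI_API_KEY", "value": "{api_key}"}]
resolved = resolve_env(env, "sk-example")  # "sk-example" is a dummy key
print(resolved)
```

Returning a copy keeps the placeholder in the stored configuration, so a key changed later in the settings would still be picked up on the next substitution.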

@@ -3911,7 +3878,7 @@ Events flow can be debugged by enabling the option `Config -> Settings -> Develo

|

|

|

3911

3878

|

|

|

3912

3879

|

## Adding a custom LLM provider

|

|

3913

3880

|

|

|

3914

|

-

Handling LLMs with

|

|

3881

|

+

Handling LLMs with LlamaIndex is implemented through separate wrappers. This allows support to be added for any provider and model available via LlamaIndex. All built-in wrappers for the models and their providers are placed in `pygpt_net.provider.llms`.

|

|

3915

3882

|

|

|

3916

3883

|

These wrappers are loaded into the application during startup using `launcher.add_llm()` method:

|

|

3917

3884

|

|

|

@@ -3985,7 +3952,7 @@ run(

|

|

|

3985

3952

|

|

|

3986

3953

|

**Examples (tutorial files)**

|

|

3987

3954

|

|

|

3988

|

-

See the `examples` directory in this repository with examples of custom launcher, plugin, vector store, LLM

|

|

3955

|

+

See the `examples` directory in this repository with examples of custom launcher, plugin, vector store, LLM provider and data loader:

|

|

3989

3956

|

|

|

3990

3957

|

- `examples/custom_launcher.py`

|

|

3991

3958

|

|

|

@@ -4007,7 +3974,7 @@ These example files can be used as a starting point for creating your own extens

|

|

|

4007

3974

|

|

|

4008

3975

|

To integrate your own model or provider into **PyGPT**, you can also reference the classes located in `pygpt_net.provider.llms`. These samples can act as a more complex example for your custom class. Ensure that your custom wrapper class includes two essential methods: `chat` and `completion`. These methods should return the respective objects required for the model to operate in `chat` and `completion` modes.

|

|

4009

3976

|

|

|

4010

|

-

Every single LLM provider (wrapper) inherits from `BaseLLM` class and can provide

|

|

3977

|

+

Every single LLM provider (wrapper) inherits from `BaseLLM` class and can provide 2 components: provider for LlamaIndex, and provider for Embeddings.

|

|

4011

3978

|

|

|

4012

3979

|

|

|

4013

3980

|

## Adding a custom vector store provider

|

|

@@ -4130,6 +4097,19 @@ may consume additional tokens that are not displayed in the main window.

|

|

|

4130

4097

|

|

|

4131

4098

|

## Recent changes:

|

|

4132

4099

|

|

|

4100

|

+

**2.5.21 (2025-06-28)**

|

|

4101

|

+

|

|

4102

|

+

- Fixed JS errors in logger.

|

|

4103

|

+

- Updated CSS.

|

|

4104

|

+

|

|

4105

|

+

**2.5.20 (2025-06-28)**

|

|

4106

|

+

|

|

4107

|

+

- LlamaIndex upgraded to 0.12.44.

|

|

4108

|

+

- Langchain removed from the list of modes and dependencies.

|

|

4109

|

+

- Improved tools execution.

|

|

4110

|

+

- Simplified model configuration.

|

|

4111

|

+

- Added endpoint configuration for non-OpenAI APIs.

|

|

4112

|

+

|

|

4133

4113

|

**2.5.19 (2025-06-27)**

|

|

4134

4114

|

|

|

4135

4115

|

- Added option to enable/disable `Responses API` in `Config -> Settings -> API Keys -> OpenAI`.

|

|

@@ -4159,98 +4139,6 @@ may consume additional tokens that are not displayed in the main window.

|

|

|

4159

4139

|

- Fixed Ollama provider in the newest LlamaIndex.

|

|

4160

4140

|

- Added the ability to set a custom base URL for Ollama -> ENV: OLLAMA_API_BASE.

|

|

4161

4141

|

|

|

4162

|

-

**2.5.14 (2025-06-23)**

|

|

4163

|

-

|

|

4164

|

-

- Fix: crash if empty shortcuts in config.

|

|

4165

|

-

- Fix: UUID serialization.

|

|

4166

|

-

|

|

4167

|

-

**2.5.13 (2025-06-22)**

|

|

4168

|

-

|

|

4169

|

-

- Disabled auto-switch to vision mode in Painter.

|

|

4170

|

-

- UI fixes.

|

|

4171

|

-

|

|

4172

|

-

**2.5.12 (2025-06-22)**

|

|

4173

|

-

|

|

4174

|

-

- Fixed send-mode radio buttons switch.

|

|

4175

|

-

- Added a new models: qwen2.5-coder, OpenAI gpt-4.1-mini.

|

|

4176

|

-

|

|

4177

|

-

**2.5.11 (2025-06-21)**

|

|

4178

|

-

|

|

4179

|

-

- Added a new models: OpenAI o1-pro and o3-pro, Anthropic Claude 4.0 Opus and Claude 4.0 Sonnet, Alibaba Qwen and Qwen2.

|

|

4180

|

-

- Bielik model upgraded to v2.3 / merged PR #101.

|

|

4181

|

-

- Fixed HTML output formatting.

|

|

4182

|

-

- Fixed empty index in chat mode.

|

|

4183

|

-

|

|

4184

|

-

**2.5.10 (2025-03-06)**

|

|

4185

|

-

|

|

4186

|

-

- Added a new model: Claude 3.7 Sonnet.

|

|

4187

|

-

- Fixed the context switch issue when the column changed and the tab is not a chat tab.

|

|

4188

|

-

- LlamaIndex upgraded to 0.12.22.

|

|

4189

|

-

- LlamaIndex LLMs upgraded to recent versions.

|

|

4190

|

-

|

|

4191

|

-

**2.5.9 (2025-03-05)**

|

|

4192

|

-

|

|

4193

|

-

- Improved formatting of HTML code in the output.

|

|

4194

|

-

- Disabled automatic indentation parsing as code blocks.

|

|

4195

|

-

- Disabled automatic scrolling of the notepad when opening a tab.

|

|

4196

|

-

|

|

4197

|

-

**2.5.8 (2025-03-02)**

|

|

4198

|

-

|

|

4199

|

-

- Added a new mode: Research (Perplexity) powered by: https://perplexity.ai - beta.

|

|

4200

|

-

- Added Perplexity models: sonar, sonar-pro, sonar-deep-research, sonar-reasoning, sonar-reasoning-pro, r1-1776.

|

|

4201

|

-

- Added a new OpenAI model: gpt-4.5-preview.

|

|

4202

|

-

|

|

4203

|

-

**2.5.7 (2025-02-26)**

|

|

4204

|

-

|

|

4205

|

-

- Stream mode has been enabled in o1 models.

|

|

4206

|

-

- CSS styling for <think> tags (reasoning models) has been added.

|

|

4207

|

-

- The search input has been moved to the top.

|

|

4208

|

-

- The ChatGPT-based style is now set as default.

|

|

4209

|

-

- Fix: Display of max tokens in models with a context window greater than 128k.

|

|

4210

|

-

|

|

4211

|

-

**2.5.6 (2025-02-03)**

|

|

4212

|

-

|

|

4213

|

-

- Fix: disabled index initialization if embedding provider is OpenAI and no API KEY is provided.

|

|

4214

|

-

- Fix: embedding provider initialization on empty index.

|

|

4215

|

-

|

|

4216

|

-

**2.5.5 (2025-02-02)**

|

|

4217

|

-

|

|

4218

|

-

- Fix: system prompt apply.

|

|

4219

|

-

- Added calendar live update on tab change.

|

|

4220

|

-

- Added API Key monit at launch displayed only once.

|

|

4221

|

-

|

|

4222

|

-

**2.5.4 (2025-02-02)**

|

|

4223

|

-

|

|

4224

|

-

- Added new models: `o3-mini` and `gpt-4o-mini-audio-preview`.

|

|

4225

|

-

- Enabled tool calls in Chat with Audio mode.

|

|

4226

|

-

- Added a check to verify if Ollama is running and if the model is available.

|

|

4227

|

-

|

|

4228

|

-

**2.5.3 (2025-02-01)**

|

|

4229

|

-

|

|

4230

|

-

- Fix: Snap permission denied bug.

|

|

4231

|

-

- Fix: column focus on tab change.

|

|

4232

|

-

- Datetime separators in groups moved to right side.

|

|

4233

|

-

|

|

4234

|

-

**2.5.2 (2025-02-01)**

|

|

4235

|

-

|

|

4236

|

-

- Fix: spinner update after inline image generation.

|

|

4237

|

-

- Added Ollama suffix to Ollama-models in models list.

|

|

4238

|

-

|

|

4239

|

-

**2.5.1 (2025-02-01)**

|

|

4240

|

-

|

|

4241

|

-

- PySide6 upgraded to 6.6.2.

|

|

4242

|

-

- Disabled Transformers startup warnings.

|

|

4243

|

-

|

|

4244

|

-

**2.5.0 (2025-01-31)**

|

|

4245

|

-

|

|

4246

|

-

- Added provider for DeepSeek (in Chat with Files mode, beta).

|

|

4247

|

-

- Added new models: OpenAI o1, Llama 3.3, DeepSeek V3 and R1 (API + local, with Ollama).

|

|

4248

|

-

- Added tool calls for OpenAI o1.

|

|

4249

|

-

- Added native vision for OpenAI o1.

|

|

4250

|

-

- Fix: tool calls in Ollama provider.

|

|

4251

|

-

- Fix: error handling in stream mode.

|

|

4252

|

-

- Fix: added check for active plugin tools before tool call.

|

|

4253

|

-

|

|

4254

4142

|

# Credits and links

|

|

4255

4143

|

|

|

4256

4144

|

**Official website:** <https://pygpt.net>

|