oikan 0.0.2.5__py3-none-any.whl → 0.0.3.1__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- oikan/__init__.py +14 -0

- oikan/exceptions.py +5 -13

- oikan/model.py +303 -443

- oikan/neural.py +43 -0

- oikan/symbolic.py +55 -0

- oikan/utils.py +59 -49

- oikan-0.0.3.1.dist-info/METADATA +233 -0

- oikan-0.0.3.1.dist-info/RECORD +11 -0

- {oikan-0.0.2.5.dist-info → oikan-0.0.3.1.dist-info}/WHEEL +1 -1

- oikan-0.0.2.5.dist-info/METADATA +0 -195

- oikan-0.0.2.5.dist-info/RECORD +0 -9

- {oikan-0.0.2.5.dist-info → oikan-0.0.3.1.dist-info}/licenses/LICENSE +0 -0

- {oikan-0.0.2.5.dist-info → oikan-0.0.3.1.dist-info}/top_level.txt +0 -0

oikan/neural.py

ADDED

|

@@ -0,0 +1,43 @@

|

|

|

1

|

+

import torch.nn as nn

|

|

2

|

+

|

|

3

|

+

class TabularNet(nn.Module):

|

|

4

|

+

"""

|

|

5

|

+

Feedforward neural network for tabular data.

|

|

6

|

+

|

|

7

|

+

Parameters:

|

|

8

|

+

-----------

|

|

9

|

+

input_size : int

|

|

10

|

+

Number of input features.

|

|

11

|

+

hidden_sizes : list

|

|

12

|

+

List of hidden layer sizes.

|

|

13

|

+

output_size : int

|

|

14

|

+

Number of output units.

|

|

15

|

+

activation : str, optional (default='relu')

|

|

16

|

+

Activation function ('relu', 'tanh', 'leaky_relu', 'elu', 'swish', 'gelu').

|

|

17

|

+

"""

|

|

18

|

+

def __init__(self, input_size, hidden_sizes, output_size, activation='relu'):

|

|

19

|

+

super(TabularNet, self).__init__()

|

|

20

|

+

layers = []

|

|

21

|

+

in_size = input_size

|

|

22

|

+

for hidden_size in hidden_sizes:

|

|

23

|

+

layers.append(nn.Linear(in_size, hidden_size))

|

|

24

|

+

if activation == 'relu':

|

|

25

|

+

layers.append(nn.ReLU())

|

|

26

|

+

elif activation == 'tanh':

|

|

27

|

+

layers.append(nn.Tanh())

|

|

28

|

+

elif activation == 'leaky_relu':

|

|

29

|

+

layers.append(nn.LeakyReLU(negative_slope=0.01))

|

|

30

|

+

elif activation == 'elu':

|

|

31

|

+

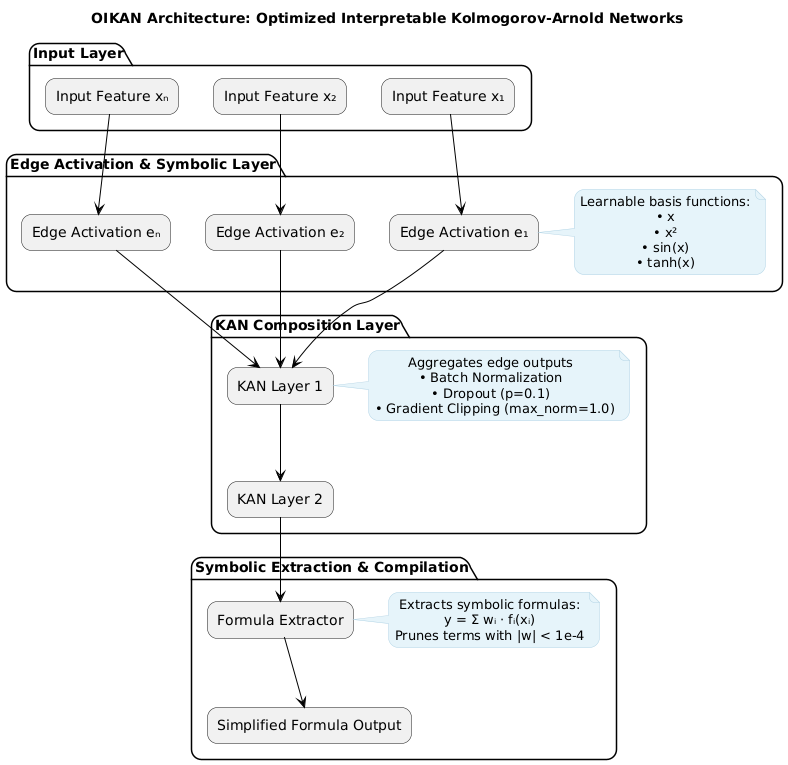

layers.append(nn.ELU(alpha=1.0))

|

|

32

|

+

elif activation == 'swish':

|

|

33

|

+

layers.append(nn.SiLU())

|

|

34

|

+

elif activation == 'gelu':

|

|

35

|

+

layers.append(nn.GELU())

|

|

36

|

+

else:

|

|

37

|

+

raise ValueError("Unsupported activation function.")

|

|

38

|

+

in_size = hidden_size

|

|

39

|

+

layers.append(nn.Linear(in_size, output_size))

|

|

40

|

+

self.net = nn.Sequential(*layers)

|

|

41

|

+

|

|

42

|

+

def forward(self, x):

|

|

43

|

+

return self.net(x)

|
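The layer-construction loop in `TabularNet.__init__` alternates a `Linear` layer with the chosen activation for each hidden size and ends with a bare `Linear` projection. As a dependency-free illustration, the sketch below mirrors that loop but records layer descriptions as strings instead of building `torch.nn` modules; `describe_tabular_net` and `ACTIVATION_LAYERS` are hypothetical names used only here.

```python
# Hypothetical mirror of TabularNet.__init__: strings stand in for torch.nn modules.
ACTIVATION_LAYERS = {
    "relu": "ReLU()",
    "tanh": "Tanh()",
    "leaky_relu": "LeakyReLU(negative_slope=0.01)",
    "elu": "ELU(alpha=1.0)",
    "swish": "SiLU()",
    "gelu": "GELU()",
}


def describe_tabular_net(input_size, hidden_sizes, output_size, activation="relu"):
    """Return the layer sequence that TabularNet would pass to nn.Sequential."""
    layers = []
    in_size = input_size
    for hidden_size in hidden_sizes:
        layers.append(f"Linear({in_size}, {hidden_size})")
        # As in TabularNet, the activation name is only checked inside the loop,
        # so an unknown name goes unnoticed when hidden_sizes is empty.
        if activation not in ACTIVATION_LAYERS:
            raise ValueError("Unsupported activation function.")
        layers.append(ACTIVATION_LAYERS[activation])
        in_size = hidden_size
    # No activation follows the output projection.
    layers.append(f"Linear({in_size}, {output_size})")
    return layers


print(describe_tabular_net(8, [32, 32], 1))
```

Because the positional order is `(input_size, hidden_sizes, output_size, activation)`, a call written as `TabularNet(input_size, hidden_sizes, activation)`, as in the illustrative `OIKANRegressor` class of the 0.0.3.1 README, would bind the activation string to `output_size`; passing `output_size` and `activation=` explicitly avoids that.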

oikan/symbolic.py

ADDED

```diff
@@ -0,0 +1,55 @@
+import numpy as np
+from sklearn.preprocessing import PolynomialFeatures
+from sklearn.linear_model import Lasso
+
+def symbolic_regression(X, y, degree=2, alpha=0.1):
+    """
+    Performs symbolic regression on the input data.
+
+    Parameters:
+    -----------
+    X : array-like of shape (n_samples, n_features)
+        Input data.
+    y : array-like of shape (n_samples,) or (n_samples, n_targets)
+        Target values.
+    degree : int, optional (default=2)
+        Maximum polynomial degree.
+    alpha : float, optional (default=0.1)
+        L1 regularization strength.
+
+    Returns:
+    --------
+    dict : Contains 'basis_functions', 'coefficients' (or 'coefficients_list'), 'n_features', 'degree'
+    """
+    poly = PolynomialFeatures(degree=degree, include_bias=True)
+    X_poly = poly.fit_transform(X)
+    model = Lasso(alpha=alpha, fit_intercept=False)
+    model.fit(X_poly, y)
+    if len(y.shape) == 1 or y.shape[1] == 1:
+        coef = model.coef_.flatten()
+        selected_indices = np.where(np.abs(coef) > 1e-6)[0]
+        return {
+            'n_features': X.shape[1],
+            'degree': degree,
+            'basis_functions': poly.get_feature_names_out()[selected_indices].tolist(),
+            'coefficients': coef[selected_indices].tolist()
+        }
+    else:
+        coefficients_list = []
+        selected_indices = set()
+        for c in range(y.shape[1]):
+            coef = model.coef_[c]
+            indices = np.where(np.abs(coef) > 1e-6)[0]
+            selected_indices.update(indices)
+        selected_indices = list(selected_indices)
+        basis_functions = poly.get_feature_names_out()[selected_indices].tolist()
+        for c in range(y.shape[1]):
+            coef = model.coef_[c]
+            coef_selected = coef[selected_indices].tolist()
+            coefficients_list.append(coef_selected)
+        return {
+            'n_features': X.shape[1],
+            'degree': degree,
+            'basis_functions': basis_functions,
+            'coefficients_list': coefficients_list
+        }
```
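When `y` has several target columns, `symbolic_regression` keeps a basis function if its Lasso coefficient exceeds `1e-6` in absolute value for any one target, then reports every target's coefficients over that shared basis. The pure-Python sketch below reproduces that selection step on plain lists, assuming rows shaped like Lasso's multi-target `coef_` (`n_targets × n_basis`) and scikit-learn's default degree-2 names for two features; `select_shared_basis` is a hypothetical name. One deliberate difference: the source converts the index `set` to a `list` without sorting, whereas this sketch sorts it so the column order is explicit.

```python
# Hypothetical sketch of the pruning/union step in symbolic_regression.
THRESHOLD = 1e-6  # same cut-off as the source


def select_shared_basis(names, coef_rows, threshold=THRESHOLD):
    """Keep basis functions that are non-negligible for at least one target."""
    selected = set()
    for row in coef_rows:
        selected.update(i for i, c in enumerate(row) if abs(c) > threshold)
    selected = sorted(selected)  # deterministic column order (not done in the source)
    basis_functions = [names[i] for i in selected]
    # Every target reports a coefficient for every shared basis function,
    # even where its own coefficient was pruned to zero.
    coefficients_list = [[row[i] for i in selected] for row in coef_rows]
    return basis_functions, coefficients_list


names = ["1", "x0", "x1", "x0^2", "x0 x1", "x1^2"]
coef_rows = [
    [0.5, 2.0, 0.0, 0.0, 0.0, 0.0],   # target 0 uses '1' and 'x0'
    [0.0, 0.0, 0.0, 0.0, -1.5, 0.0],  # target 1 uses 'x0 x1'
]
basis, coefs = select_shared_basis(names, coef_rows)
print(basis)  # ['1', 'x0', 'x0 x1']
print(coefs)  # [[0.5, 2.0, 0.0], [0.0, 0.0, -1.5]]
```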

oikan/utils.py

CHANGED

```diff
@@ -1,53 +1,63 @@
-from .exceptions import *
-import torch
-import torch.nn as nn
 import numpy as np
 
-def
-"""
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
+def evaluate_basis_functions(X, basis_functions, n_features):
+    """
+    Evaluates basis functions on the input data.
+
+    Parameters:
+    -----------
+    X : array-like of shape (n_samples, n_features)
+        Input data.
+    basis_functions : list
+        List of basis function strings (e.g., '1', 'x0', 'x0^2', 'x0 x1').
+    n_features : int
+        Number of input features.
+
+    Returns:
+    --------
+    X_transformed : ndarray of shape (n_samples, n_basis_functions)
+        Transformed data matrix.
+    """
+    X_transformed = np.zeros((X.shape[0], len(basis_functions)))
+    for i, func in enumerate(basis_functions):
+        if func == '1':
+            X_transformed[:, i] = 1
+        elif '^' in func:
+            var, power = func.split('^')
+            idx = int(var[1:])
+            X_transformed[:, i] = X[:, idx] ** int(power)
+        elif ' ' in func:
+            var1, var2 = func.split(' ')
+            idx1 = int(var1[1:])
+            idx2 = int(var2[1:])
+            X_transformed[:, i] = X[:, idx1] * X[:, idx2]
+        else:
+            idx = int(func[1:])
+            X_transformed[:, i] = X[:, idx]
+    return X_transformed
 
-
-"""
-
-
-
-
-
-
-x_tensor = ensure_tensor(x)
-features = []
-for _, func in ADVANCED_LIB.values():
-feat = func(x_tensor)
-features.append(feat)
-features = torch.stack(features, dim=-1)
-return torch.matmul(features, self.weights.unsqueeze(0).T) + self.bias
+def get_features_involved(basis_function):
+    """
+    Extracts the feature indices involved in a basis function string.
+
+    Parameters:
+    -----------
+    basis_function : str
+        String representation of the basis function, e.g., 'x0', 'x0^2', 'x0 x1'.
 
-
-
-
-
-
-
-
-
-
-
-
-
-
+    Returns:
+    --------
+    set : Set of feature indices involved.
+    """
+    if basis_function == '1':  # Constant term involves no features
+        return set()
+    features = set()
+    for part in basis_function.split():  # Split by space for interaction terms
+        if part.startswith('x'):
+            if '^' in part:  # Handle powers, e.g., 'x0^2'
+                var = part.split('^')[0]  # Take 'x0'
+            else:
+                var = part  # Take 'x0' as is
+            idx = int(var[1:])  # Extract index, e.g., 0
+            features.add(idx)
+    return features
```
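`evaluate_basis_functions` splits a name on either `^` or a single space. That covers the degree-2 names scikit-learn's `PolynomialFeatures` emits (`'x0^2'`, `'x0 x1'`), but not higher-degree products: for `'x0^2 x1'` the `'^'` branch would call `int('2 x1')`, and for `'x0 x1 x2'` the two-name unpacking fails. `get_features_involved` already tokenizes on whitespace first. The sketch below applies that same tokenize-then-split idea to evaluation. It is an illustration, not the package's code; it assumes scikit-learn's default `x<i>` feature names and works on plain lists of rows so it needs only the standard library. The names `parse_monomial` and `evaluate_monomials` are hypothetical.

```python
# Hypothetical general evaluator for PolynomialFeatures-style monomial names.


def parse_monomial(name):
    """Map a name like 'x0^2 x1' to {feature_index: power}; '1' maps to {}."""
    powers = {}
    if name == "1":
        return powers
    for part in name.split():  # whitespace separates the factors
        var, _, power = part.partition("^")
        idx = int(var[1:])  # assumes default 'x<i>' names
        powers[idx] = powers.get(idx, 0) + (int(power) if power else 1)
    return powers


def evaluate_monomials(rows, names):
    """Evaluate each named monomial on every row (a list of feature values)."""
    parsed = [parse_monomial(n) for n in names]
    out = []
    for row in rows:
        values = []
        for powers in parsed:
            value = 1.0  # the constant term '1' keeps this initial value
            for idx, p in powers.items():
                value *= row[idx] ** p
            values.append(value)
        out.append(values)
    return out


rows = [[2.0, 3.0, 5.0], [1.0, -1.0, 0.5]]
names = ["1", "x0", "x0^2", "x0 x1", "x0^2 x1", "x0 x1 x2"]
print(evaluate_monomials(rows, names))
```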

oikan-0.0.3.1.dist-info/METADATA

ADDED

```diff
@@ -0,0 +1,233 @@
+Metadata-Version: 2.4
+Name: oikan
+Version: 0.0.3.1
+Summary: OIKAN: Neuro-Symbolic ML for Scientific Discovery
+Author: Arman Zhalgasbayev
+License: MIT
+Classifier: Programming Language :: Python :: 3
+Classifier: License :: OSI Approved :: MIT License
+Classifier: Operating System :: OS Independent
+Requires-Python: >=3.7
+Description-Content-Type: text/markdown
+License-File: LICENSE
+Requires-Dist: torch
+Requires-Dist: numpy
+Requires-Dist: scikit-learn
+Requires-Dist: tqdm
+Dynamic: license-file
+
+<!-- logo in the center -->
+<div align="center">
+<img src="https://raw.githubusercontent.com/silvermete0r/oikan/main/docs/media/oikan_logo.png" alt="OIKAN Logo" width="200"/>
+
+<h1>OIKAN: Neuro-Symbolic ML for Scientific Discovery</h1>
+</div>
+
+## Overview
+
+OIKAN is a neuro-symbolic machine learning framework inspired by Kolmogorov-Arnold representation theorem. It combines the power of modern neural networks with techniques for extracting clear, interpretable symbolic formulas from data. OIKAN is designed to make machine learning models both accurate and Interpretable.
+
+[](https://badge.fury.io/py/oikan)
+[](https://pypistats.org/packages/oikan)
+[](https://pepy.tech/projects/oikan)
+[](https://opensource.org/licenses/MIT)
+[](https://github.com/silvermete0r/oikan/issues)
+[](https://silvermete0r.github.io/oikan/)
+
+> **Important Disclaimer**: OIKAN is an experimental research project. It is not intended for production use or real-world applications. This framework is designed for research purposes, experimentation, and academic exploration of neuro-symbolic machine learning concepts.
+
+## Key Features
+- 🧠 **Neuro-Symbolic ML**: Combines neural network learning with symbolic mathematics
+- 📊 **Automatic Formula Extraction**: Generates human-readable mathematical expressions
+- 🎯 **Scikit-learn Compatible**: Familiar `.fit()` and `.predict()` interface
+- 🔬 **Research-Focused**: Designed for academic exploration and experimentation
+- 📈 **Multi-Task**: Supports both regression and classification problems
+
+## Scientific Foundation
+
+OIKAN implements a modern interpretation of the Kolmogorov-Arnold Representation Theorem through a hybrid neural architecture:
+
+1. **Theoretical Foundation**: The Kolmogorov-Arnold theorem states that any continuous n-dimensional function can be decomposed into a combination of single-variable functions:
+
+```
+f(x₁,...,xₙ) = ∑(j=0 to 2n){ φⱼ( ∑(i=1 to n) ψᵢⱼ(xᵢ) ) }
+```
+
+where φⱼ and ψᵢⱼ are continuous univariate functions.
+
+2. **Neural Implementation**: OIKAN uses a specialized architecture combining:
+- Feature transformation layers with interpretable basis functions
+- Symbolic regression for formula extraction
+- Automatic pruning of insignificant terms
+
+```python
+class OIKANRegressor:
+    def __init__(self, hidden_sizes=[64, 64], activation='relu',
+                 polynomial_degree=2, alpha=0.1):
+        # Neural network for learning complex patterns
+        self.neural_net = TabularNet(input_size, hidden_sizes, activation)
+        # Symbolic regression for interpretable formulas
+        self.symbolic_model = None
+
+```
+
+3. **Basis Functions**: Core set of interpretable transformations:
+```python
+SYMBOLIC_FUNCTIONS = {
+    'linear': 'x', # Direct relationships
+    'quadratic': 'x^2', # Non-linear patterns
+    'interaction': 'x_i x_j', # Feature interactions
+    'higher_order': 'x^n' # Polynomial terms
+}
+```
+
+4. **Formula Extraction Process**:
+- Train neural network on raw data
+- Generate augmented samples for better coverage
+- Perform L1-regularized symbolic regression
+- Prune terms with coefficients below threshold
+- Export human-readable mathematical expressions
+
+## Quick Start
+
+### Installation
+
+#### Method 1: Via PyPI (Recommended)
+```bash
+pip install -qU oikan
+```
+
+#### Method 2: Local Development
+```bash
+git clone https://github.com/silvermete0r/OIKAN.git
+cd OIKAN
+pip install -e . # Install in development mode
+```
+
+### Regression Example
+```python
+from oikan.model import OIKANRegressor
+from sklearn.metrics import mean_squared_error
+
+# Initialize model
+model = OIKANRegressor(
+    hidden_sizes=[32, 32], # Hidden layer sizes
+    activation='relu', # Activation function (other options: 'tanh', 'leaky_relu', 'elu', 'swish', 'gelu')
+    augmentation_factor=5, # Augmentation factor for data generation
+    polynomial_degree=2, # Degree of polynomial basis functions
+    alpha=0.1, # L1 regularization strength
+    sigma=0.1, # Standard deviation of Gaussian noise for data augmentation
+    epochs=100, # Number of training epochs
+    lr=0.001, # Learning rate
+    batch_size=32, # Batch size for training
+    verbose=True # Verbose output during training
+)
+
+# Fit the model
+model.fit(X_train, y_train)
+
+# Make predictions
+y_pred = model.predict(X_test)
+
+# Evaluate performance
+mse = mean_squared_error(y_test, y_pred)
+print("Mean Squared Error:", mse)
+
+# Get symbolic formula
+formula = model.get_formula()
+print("Symbolic Formula:", formula)
+
+# Get feature importances
+importances = model.feature_importances()
+print("Feature Importances:", importances)
+
+# Save the model (optional)
+model.save("outputs/model.json")
+
+# Load the model (optional)
+loaded_model = OIKANRegressor()
+loaded_model.load("outputs/model.json")
+```
+
+*Example of the saved symbolic formula (regression model): [outputs/california_housing_model.json](outputs/california_housing_model.json)*
+
+
+### Classification Example
+```python
+from oikan.model import OIKANClassifier
+from sklearn.metrics import accuracy_score
+
+# Initialize model
+model = OIKANClassifier(
+    hidden_sizes=[32, 32], # Hidden layer sizes
+    activation='relu', # Activation function (other options: 'tanh', 'leaky_relu', 'elu', 'swish', 'gelu')
+    augmentation_factor=10, # Augmentation factor for data generation
+    polynomial_degree=2, # Degree of polynomial basis functions
+    alpha=0.1, # L1 regularization strength
+    sigma=0.1, # Standard deviation of Gaussian noise for data augmentation
+    epochs=100, # # Number of training epochs
+    lr=0.001, # Learning rate
+    batch_size=32, # Batch size for training
+    verbose=True # Verbose output during training
+)
+
+# Fit the model
+model.fit(X_train, y_train)
+
+# Make predictions
+y_pred = model.predict(X_test)
+
+# Evaluate performance
+accuracy = model.score(X_test, y_test)
+print("Accuracy:", accuracy)
+
+# Get symbolic formulas for each class
+formulas = model.get_formula()
+for i, formula in enumerate(formulas):
+    print(f"Class {i} Formula:", formula)
+
+# Get feature importances
+importances = model.feature_importances()
+print("Feature Importances:", importances)
+
+# Save the model (optional)
+model.save("outputs/model.json")
+
+# Load the model (optional)
+loaded_model = OIKANClassifier()
+loaded_model.load("outputs/model.json")
+```
+
+*Example of the saved symbolic formula (classification model): [outputs/iris_model.json](outputs/iris_model.json)*
+
+### Architecture Diagram
+
+*Will be updated soon..*
+
+## Contributing
+
+We welcome contributions! Key areas of interest:
+
+- Model architecture improvements
+- Novel basis function implementations
+- Improved symbolic extraction algorithms
+- Real-world case studies and applications
+- Performance optimizations
+
+Please see [CONTRIBUTING.md](CONTRIBUTING.md) for guidelines.
+
+## Citation
+
+If you use OIKAN in your research, please cite:
+
+```bibtex
+@software{oikan2025,
+  title = {OIKAN: Optimized Interpretable Kolmogorov-Arnold Networks},
+  author = {Zhalgasbayev, Arman},
+  year = {2025},
+  url = {https://github.com/silvermete0r/OIKAN}
+}
+```
+
+## License
+This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.
```
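The Kolmogorov-Arnold formula in the README expresses a continuous multivariate function through sums and compositions of univariate functions. A classic small illustration, not taken from OIKAN, is the product of two inputs: xy = ((x + y)² − (x − y)²) / 4. The inner sums are built from the univariate terms x, y and −y, and the two outer functions are t²/4 and −t²/4. The sketch below checks the identity numerically; it shows only the shape of such a decomposition and is neither a construction of the general theorem nor OIKAN's model. All function names are hypothetical.

```python
import math


def inner_plus(x, y):
    return x + y  # sum of univariate terms psi(x) = x and psi(y) = y


def inner_minus(x, y):
    return x + (-y)  # sum of univariate terms psi(x) = x and psi(y) = -y


def outer_plus(t):
    return t * t / 4  # univariate outer function phi(t) = t^2 / 4


def outer_minus(t):
    return -t * t / 4  # univariate outer function phi(t) = -t^2 / 4


def product_via_univariate(x, y):
    """Compute x * y using only additions and univariate functions."""
    return outer_plus(inner_plus(x, y)) + outer_minus(inner_minus(x, y))


for x, y in [(3.0, 5.0), (-1.5, 2.0), (0.2, 0.7)]:
    approx = product_via_univariate(x, y)
    print(x, y, approx, math.isclose(approx, x * y))
```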

oikan-0.0.3.1.dist-info/RECORD

ADDED

```diff
@@ -0,0 +1,11 @@
+oikan/__init__.py,sha256=zEzhm1GYLT4vNaIQ4CgZcNpUk3uo8SWnoaHYtHW_XSQ,628
+oikan/exceptions.py,sha256=Is0jG4apxO8QJQREIiJQYMjANYWibWeS-103q9KWbfg,192
+oikan/model.py,sha256=-LuvcljM5fqQsqwmhfol_e-_zVQzTAfq8SedQ3HYQQQ,14032
+oikan/neural.py,sha256=wxmGgzmtpwJ3lvH6u6D4i4BiAzg018czrIdw49phSCY,1558
+oikan/symbolic.py,sha256=3gtBndqFFC9ny2-PekKkUgr_t1HEpfkbk68e94yPpbI,2083
+oikan/utils.py,sha256=xMGRa1qhn8BWn9UxpVeJIuGb-UvQmbjiFSsvAdF0bMU,2095
+oikan-0.0.3.1.dist-info/licenses/LICENSE,sha256=75ASVmU-XIpN-M4LbVmJ_ibgbzbvRLVti8FhnR0BTf8,1096
+oikan-0.0.3.1.dist-info/METADATA,sha256=BAYWIvUqQ-al4TPraOnx0tx6eGSFUOvl4_Mxfxo61Qw,8335
+oikan-0.0.3.1.dist-info/WHEEL,sha256=0CuiUZ_p9E4cD6NyLD6UG80LBXYyiSYZOKDm5lp32xk,91
+oikan-0.0.3.1.dist-info/top_level.txt,sha256=XwnwKwTJddZwIvtrUsAz-l-58BJRj6HjAGWrfYi_3QY,6
+oikan-0.0.3.1.dist-info/RECORD,,
```
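Each RECORD line has the form `path,sha256=<digest>,<size in bytes>`, where the digest is the URL-safe base64 encoding of the file's SHA-256 hash with trailing `=` padding removed, as the wheel format specifies. A minimal standard-library sketch of producing one line follows; `record_entry` is a hypothetical helper name. For an empty file it reproduces the `oikan/__init__.py` entry (size `0`) listed in the 0.0.2.5 RECORD.

```python
import base64
import hashlib


def record_entry(path, data):
    """Build a wheel RECORD line for a file whose contents are the bytes `data`."""
    digest = hashlib.sha256(data).digest()
    # URL-safe alphabet ('-' and '_'), with the '=' padding stripped
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(data)}"


print(record_entry("oikan/__init__.py", b""))
```

The RECORD file's own entry (for example `oikan-0.0.3.1.dist-info/RECORD,,`) leaves both the hash and size fields empty, because a file cannot contain its own hash.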

oikan-0.0.2.5.dist-info/METADATA

DELETED

```diff
@@ -1,195 +0,0 @@
-Metadata-Version: 2.4
-Name: oikan
-Version: 0.0.2.5
-Summary: OIKAN: Optimized Interpretable Kolmogorov-Arnold Networks
-Author: Arman Zhalgasbayev
-License: MIT
-Classifier: Programming Language :: Python :: 3
-Classifier: License :: OSI Approved :: MIT License
-Classifier: Operating System :: OS Independent
-Requires-Python: >=3.7
-Description-Content-Type: text/markdown
-License-File: LICENSE
-Requires-Dist: torch
-Requires-Dist: numpy
-Requires-Dist: scikit-learn
-Dynamic: license-file
-
-<!-- logo in the center -->
-<div align="center">
-<img src="https://raw.githubusercontent.com/silvermete0r/oikan/main/docs/media/oikan_logo.png" alt="OIKAN Logo" width="200"/>
-
-<h1>OIKAN: Optimized Interpretable Kolmogorov-Arnold Networks</h1>
-</div>
-
-## Overview
-OIKAN (Optimized Interpretable Kolmogorov-Arnold Networks) is a neuro-symbolic ML framework that combines modern neural networks with classical Kolmogorov-Arnold representation theory. It provides interpretable machine learning solutions through automatic extraction of symbolic mathematical formulas from trained models.
-
-[](https://badge.fury.io/py/oikan)
-[](https://pypistats.org/packages/oikan)
-[](https://pepy.tech/projects/oikan)
-[](https://opensource.org/licenses/MIT)
-[](https://github.com/silvermete0r/oikan/issues)
-[](https://silvermete0r.github.io/oikan/)
-
-> **Important Disclaimer**: OIKAN is an experimental research project. It is not intended for production use or real-world applications. This framework is designed for research purposes, experimentation, and academic exploration of neuro-symbolic machine learning concepts.
-
-## Key Features
-- 🧠 **Neuro-Symbolic ML**: Combines neural network learning with symbolic mathematics
-- 📊 **Automatic Formula Extraction**: Generates human-readable mathematical expressions
-- 🎯 **Scikit-learn Compatible**: Familiar `.fit()` and `.predict()` interface
-- 🔬 **Research-Focused**: Designed for academic exploration and experimentation
-- 📈 **Multi-Task**: Supports both regression and classification problems
-
-## Scientific Foundation
-
-OIKAN implements the Kolmogorov-Arnold Representation Theorem through a novel neural architecture:
-
-1. **Theorem Background**: Any continuous multivariate function f(x1,...,xn) can be represented as:
-```
-f(x1,...,xn) = ∑(j=0 to 2n){ φj( ∑(i=1 to n) ψij(xi) ) }
-```
-where φj and ψij are continuous single-variable functions.
-
-2. **Neural Implementation**:
-```python
-# Pseudo-implementation of KAN architecture
-class KANLayer:
-    def __init__(self, input_dim, output_dim):
-        self.edges = [SymbolicEdge() for _ in range(input_dim * output_dim)]
-        self.weights = initialize_weights(input_dim, output_dim)
-
-    def forward(self, x):
-        # Transform each input through basis functions
-        edge_outputs = [edge(x_i) for x_i, edge in zip(x, self.edges)]
-        # Combine using learned weights
-        return combine_weighted_outputs(edge_outputs, self.weights)
-```
-
-3. **Basis functions**
-```python
-# Edge activation contains interpretable basis functions
-ADVANCED_LIB = {
-    'x': (lambda x: x), # Linear
-    'x^2': (lambda x: x**2), # Quadratic
-    'sin(x)': np.sin, # Periodic
-    'tanh(x)': np.tanh # Bounded
-}
-```
-
-## Quick Start
-
-### Installation
-
-#### Method 1: Via PyPI (Recommended)
-```bash
-pip install -qU oikan
-```
-
-#### Method 2: Local Development
-```bash
-git clone https://github.com/silvermete0r/OIKAN.git
-cd OIKAN
-pip install -e . # Install in development mode
-```
-
-### Regression Example
-```python
-from oikan.model import OIKANRegressor
-from sklearn.model_selection import train_test_split
-
-# Initialize model
-model = OIKANRegressor()
-
-# Fit model (sklearn-style)
-model.fit(X_train, y_train, epochs=100, lr=0.01)
-
-# Get predictions
-y_pred = model.predict(X_test)
-
-# Save interpretable formula to file with auto-generated guidelines
-# The output file will contain:
-# - Detailed symbolic formulas for each feature
-# - Instructions for practical implementation
-# - Recommendations for testing and validation
-model.save_symbolic_formula("regression_formula.txt")
-```
-
-*Example of the saved symbolic formula instructions: [outputs/regression_symbolic_formula.txt](outputs/regression_symbolic_formula.txt)*
-
-
-### Classification Example
-```python
-from oikan.model import OIKANClassifier
-
-# Similar sklearn-style interface for classification
-model = OIKANClassifier()
-model.fit(X_train, y_train, epochs=100, lr=0.01)
-probas = model.predict_proba(X_test)
-
-# Save classification formulas with implementation guidelines
-# The output file will contain:
-# - Decision boundary formulas for each class
-# - Softmax application instructions
-# - Recommendations for testing and validation
-model.save_symbolic_formula("classification_formula.txt")
-```
-
-*Example of the saved symbolic formula instructions: [outputs/classification_symbolic_formula.txt](outputs/classification_symbolic_formula.txt)*
-
-### Architecture Diagram
-
-
-
-### Key Design Principles
-
-1. **Interpretability First**: All transformations maintain clear mathematical meaning
-2. **Scikit-learn Compatibility**: Familiar `.fit()` and `.predict()` interface
-3. **Symbolic Formula Exporting**: Export formulas as lightweight mathematical expressions
-4. **Automatic Simplification**: Remove insignificant terms (|w| < 1e-4)
-
-
-### Key Model Components
-
-1. **EdgeActivation Layer**:
-- Implements interpretable basis function transformations
-- Automatically prunes insignificant terms
-- Maintains mathematical transparency
-
-2. **Formula Extraction**:
-- Combines edge transformations with learned weights
-- Applies symbolic simplification
-- Generates human-readable expressions
-
-3. **Training Process**:
-- Gradient-based optimization of edge weights
-- Automatic feature importance detection
-- Complexity control through regularization
-
-## Contributing
-
-We welcome contributions! Key areas of interest:
-
-- Model architecture improvements
-- Novel basis function implementations
-- Improved symbolic extraction algorithms
-- Real-world case studies and applications
-- Performance optimizations
-
-Please see [CONTRIBUTING.md](CONTRIBUTING.md) for guidelines.
-
-## Citation
-
-If you use OIKAN in your research, please cite:
-
-```bibtex
-@software{oikan2025,
-  title = {OIKAN: Optimized Interpretable Kolmogorov-Arnold Networks},
-  author = {Zhalgasbayev, Arman},
-  year = {2025},
-  url = {https://github.com/silvermete0r/OIKAN}
-}
-```
-
-## License
-This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.
```

oikan-0.0.2.5.dist-info/RECORD

DELETED

```diff
@@ -1,9 +0,0 @@
-oikan/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
-oikan/exceptions.py,sha256=UqT3uTtfiB8QA_3AMvKdHOme9WL9HZD_d7GHIk00LJw,394
-oikan/model.py,sha256=O5ozMUNCG-d7y5du1uG96psEgwMsN6H9CLQDtCg-AmM,21580
-oikan/utils.py,sha256=nLbzycmtNCj8806delPsLcKMaBuFhTHtrKXCf1NDMb0,2062
-oikan-0.0.2.5.dist-info/licenses/LICENSE,sha256=75ASVmU-XIpN-M4LbVmJ_ibgbzbvRLVti8FhnR0BTf8,1096
-oikan-0.0.2.5.dist-info/METADATA,sha256=3AgQFr8-ihylBaSKlYBDbRnU8yAajf_fRW8fJVOAGCM,7283
-oikan-0.0.2.5.dist-info/WHEEL,sha256=CmyFI0kx5cdEMTLiONQRbGQwjIoR1aIYB7eCAQ4KPJ0,91
-oikan-0.0.2.5.dist-info/top_level.txt,sha256=XwnwKwTJddZwIvtrUsAz-l-58BJRj6HjAGWrfYi_3QY,6
-oikan-0.0.2.5.dist-info/RECORD,,
```

File without changes

File without changes