oikan 0.0.2.3__py3-none-any.whl → 0.0.2.5__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- oikan/model.py +78 -35

- oikan/utils.py +21 -15

- {oikan-0.0.2.3.dist-info → oikan-0.0.2.5.dist-info}/METADATA +55 -81

- oikan-0.0.2.5.dist-info/RECORD +9 -0

- oikan/symbolic.py +0 -28

- oikan-0.0.2.3.dist-info/RECORD +0 -10

- {oikan-0.0.2.3.dist-info → oikan-0.0.2.5.dist-info}/WHEEL +0 -0

- {oikan-0.0.2.3.dist-info → oikan-0.0.2.5.dist-info}/licenses/LICENSE +0 -0

- {oikan-0.0.2.3.dist-info → oikan-0.0.2.5.dist-info}/top_level.txt +0 -0

oikan/model.py

CHANGED

@@ -30,7 +30,10 @@ class KANLayer(nn.Module):
             for _ in range(input_dim)
         ])
 
-
+        # Updated initialization using Xavier uniform initialization
+        self.combination_weights = nn.Parameter(
+            nn.init.xavier_uniform_(torch.empty(input_dim, output_dim))
+        )
 
     def forward(self, x):
         x_split = x.split(1, dim=1) # list of (batch, 1) tensors for each input feature
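The hunk above replaces the previous `combination_weights` initializer (the removed line was not captured) with `nn.init.xavier_uniform_`. To illustrate roughly what that initializer does, the NumPy sketch below samples from U(-a, a), where a = gain · sqrt(6 / (fan_in + fan_out)), using gain 1 (PyTorch's default). `xavier_uniform_np` is a hypothetical helper and is not part of OIKAN or PyTorch.

```python
import numpy as np


def xavier_uniform_np(input_dim, output_dim, gain=1.0, seed=0):
    """Hypothetical NumPy sketch of Xavier/Glorot uniform initialization.

    Samples an (input_dim, output_dim) matrix from U(-a, a) with
    a = gain * sqrt(6 / (fan_in + fan_out)). The bound depends only on the
    sum of the two dimensions, so which one counts as fan_in does not matter.
    """
    fan_in, fan_out = input_dim, output_dim
    bound = gain * np.sqrt(6.0 / (fan_in + fan_out))
    rng = np.random.default_rng(seed)
    # rng.uniform samples from the half-open interval [-bound, bound)
    weights = rng.uniform(-bound, bound, size=(input_dim, output_dim))
    return weights, bound


weights, bound = xavier_uniform_np(32, 16)
print(weights.shape, round(float(bound), 4))
```

With the default `hidden_dims=[32, 16]`, the 32 × 16 combination matrix of the second KAN layer gets a bound of sqrt(6/48) ≈ 0.354.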

@@ -49,7 +52,8 @@ class KANLayer(nn.Module):
             for i in range(self.input_dim):
                 weight = self.combination_weights[i, j].item()
                 if abs(weight) > 1e-4:
-
+                    # Pass lower threshold for improved precision
+                    edge_formula = self.edges[i][j].get_symbolic_repr(threshold=1e-6)
                     if edge_formula != "0":
                         terms.append(f"({weight:.4f} * ({edge_formula}))")
             formulas.append(" + ".join(terms) if terms else "0")

@@ -57,15 +61,13 @@ class KANLayer(nn.Module):
 
 class BaseOIKAN(BaseEstimator):
     """Base OIKAN model implementing common functionality"""
-    def __init__(self, hidden_dims=[
+    def __init__(self, hidden_dims=[32, 16], dropout=0.1):
         self.hidden_dims = hidden_dims
-        self.num_basis = num_basis
-        self.degree = degree
         self.dropout = dropout # Dropout probability for uncertainty quantification
         self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') # Auto device chooser
         self.model = None
         self._is_fitted = False
-        self.__name = "OIKAN v0.0.2"
+        self.__name = "OIKAN v0.0.2" # Manual configured version
         self.loss_history = [] # <-- new attribute to store loss values
 
     def _build_network(self, input_dim, output_dim):

@@ -73,7 +75,9 @@ class BaseOIKAN(BaseEstimator):
         prev_dim = input_dim
         for hidden_dim in self.hidden_dims:
             layers.append(KANLayer(prev_dim, hidden_dim))
-            layers.append(nn.
+            layers.append(nn.BatchNorm1d(hidden_dim)) # Added batch normalization
+            layers.append(nn.ReLU()) # Added activation function
+            layers.append(nn.Dropout(self.dropout)) # Apply dropout for uncertainty quantification
             prev_dim = hidden_dim
         layers.append(KANLayer(prev_dim, output_dim))
         return nn.Sequential(*layers).to(self.device)

@@ -85,6 +89,25 @@ class BaseOIKAN(BaseEstimator):
             y = torch.FloatTensor(y)
         return X.to(self.device), (y.to(self.device) if y is not None else None)
 
+    def _process_edge_formula(self, edge_formula, weight):
+        """Helper to scale symbolic formula terms by a given weight"""
+        terms = []
+        for term in edge_formula.split(" + "):
+            if term and term != "0":
+                if "*" in term:
+                    coef_str, rest = term.split("*", 1)
+                    try:
+                        coef = float(coef_str)
+                        terms.append(f"{(coef * weight):.4f}*{rest}")
+                    except Exception:
+                        terms.append(term) # fallback
+                else:
+                    try:
+                        terms.append(f"{(float(term) * weight):.4f}")
+                    except Exception:
+                        terms.append(term)
+        return " + ".join(terms) if terms else "0"
+
     def get_symbolic_formula(self):
         """Generate and cache symbolic formulas for production‐ready inference."""
         if not self._is_fitted:
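`_process_edge_formula` pulls out the term-scaling loop that the classifier branch used to inline. It splits an edge formula on `" + "`, multiplies each numeric coefficient by the combination weight, and leaves any term it cannot parse unchanged. The standalone copy below lets you try that logic without a fitted model. It differs from the method in three ways: it is a free function, the bare `except Exception` is narrowed to `ValueError` (the only error `float()` raises on a string), and the sample formula is invented.

```python
def process_edge_formula(edge_formula, weight):
    """Standalone copy of the BaseOIKAN._process_edge_formula logic."""
    terms = []
    for term in edge_formula.split(" + "):
        if term and term != "0":
            if "*" in term:
                # "coef*basis": scale the numeric coefficient, keep the basis text
                coef_str, rest = term.split("*", 1)
                try:
                    terms.append(f"{(float(coef_str) * weight):.4f}*{rest}")
                except ValueError:
                    terms.append(term)  # non-numeric coefficient: keep as-is
            else:
                # bare constant term
                try:
                    terms.append(f"{(float(term) * weight):.4f}")
                except ValueError:
                    terms.append(term)
    return " + ".join(terms) if terms else "0"


print(process_edge_formula("0.5000*x + -1.2000*sin(x)", 2.0))
print(process_edge_formula("0", 3.0))
```

The regressor branch calls the helper with `weight=1.0`, so in that branch it only re-formats coefficients to four decimals.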

@@ -100,17 +123,9 @@ class BaseOIKAN(BaseEstimator):
                 for j in range(n_classes):
                     weight = first_layer.combination_weights[i, j].item()
                     if abs(weight) > 1e-4:
-
-
-
-                            if term and term != "0":
-                                if "*" in term:
-                                    coef, rest = term.split("*", 1)
-                                    coef = float(coef) * weight
-                                    terms.append(f"{coef:.4f}*{rest}")
-                                else:
-                                    terms.append(f"{float(term)*weight:.4f}")
-                        formulas[i][j] = " + ".join(terms) if terms else "0"
+                        # Use improved threshold for formula extraction
+                        edge_formula = first_layer.edges[i][j].get_symbolic_repr(threshold=1e-6)
+                        formulas[i][j] = self._process_edge_formula(edge_formula, weight)
                     else:
                         formulas[i][j] = "0"
             self.symbolic_formula = formulas

@@ -119,8 +134,9 @@ class BaseOIKAN(BaseEstimator):
             formulas = []
             first_layer = self.model[0]
             for i in range(first_layer.input_dim):
-                formula
-
+                # Use improved threshold for formula extraction in regressor branch
+                edge_formula = first_layer.edges[i][0].get_symbolic_repr(threshold=1e-6)
+                formulas.append(self._process_edge_formula(edge_formula, 1.0))
             self.symbolic_formula = formulas
             return formulas
 

@@ -131,7 +147,7 @@ class BaseOIKAN(BaseEstimator):
           - A header with the version and timestamp
           - The symbolic formulas for each feature (and class for classification)
           - A general formula, including softmax for classification
-          - Recommendations
+          - Recommendations and performance results.
         """
         header = f"Generated by {self.__name} | Timestamp: {dt.now()}\n\n"
         header += "Symbolic Formulas:\n"
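The docstring says the saved file includes a general formula "including softmax for classification". Here is a minimal sketch of that final step. It assumes each class score has already been computed from that class's symbolic formulas (the scores below are invented) and applies a numerically stable softmax:

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    scores = np.asarray(scores, dtype=float)
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# Hypothetical class scores: 2 samples x 3 classes
scores = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0]])
probs = softmax(scores)
print(probs.round(3))
print(probs.argmax(axis=1))
```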

@@ -157,8 +173,14 @@ class BaseOIKAN(BaseEstimator):
         recs = ("\nRecommendations:\n"
                 "• Consider the symbolic formula for lightweight and interpretable inference.\n"
                 "• Validate approximation accuracy against the neural model.\n")
+
+        # Disclaimer regarding experimental usage
+        disclaimer = ("\nDisclaimer:\n"
+                      "This experimental model is intended for research purposes only and is not production-ready. "
+                      "Feel free to fork and build your own project based on this research: "
+                      "https://github.com/silvermete0r/oikan\n")
 
-        output = header + formulas_text + general + recs
+        output = header + formulas_text + general + recs + disclaimer
         with open(filename, "w") as f:
             f.write(output)
         print(f"Symbolic formulas saved to {filename}")

@@ -174,30 +196,51 @@ class BaseOIKAN(BaseEstimator):
     def _eval_formula(self, formula, x):
         """Helper to evaluate a symbolic formula for an input vector x using ADVANCED_LIB basis functions."""
         import re
-
+        from .utils import ensure_tensor
+
+        if isinstance(x, (list, tuple)):
+            x = np.array(x)
+
+        total = torch.zeros_like(ensure_tensor(x))
         pattern = re.compile(r"(-?\d+\.\d+)\*?([\w\(\)\^]+)")
         matches = pattern.findall(formula)
+
         for coef_str, func_name in matches:
             try:
                 coef = float(coef_str)
                 for key, (notation, func) in ADVANCED_LIB.items():
                     if notation.strip() == func_name.strip():
-
+                        result = func(x)
+                        if isinstance(result, torch.Tensor):
+                            total += coef * result
+                        else:
+                            total += coef * ensure_tensor(result)
                         break
-            except Exception:
+            except Exception as e:
+                print(f"Warning: Error evaluating term {coef_str}*{func_name}: {str(e)}")
                 continue
-
+
+        return total.cpu().numpy() if isinstance(total, torch.Tensor) else total
 
     def symbolic_predict(self, X):
         """Predict using only the extracted symbolic formula (regressor)."""
         if not self._is_fitted:
             raise NotFittedError("Model must be fitted before prediction")
+
         X = np.array(X) if not isinstance(X, np.ndarray) else X
-        formulas = self.get_symbolic_formula()
+        formulas = self.get_symbolic_formula()
         predictions = np.zeros((X.shape[0], 1))
-
-
-
+
+        try:
+            for i, formula in enumerate(formulas):
+                x = X[:, i]
+                pred = self._eval_formula(formula, x)
+                if isinstance(pred, torch.Tensor):
+                    pred = pred.cpu().numpy()
+                predictions[:, 0] += pred
+        except Exception as e:
+            raise RuntimeError(f"Error in symbolic prediction: {str(e)}")
+
         return predictions
 
     def compile_symbolic_formula(self, filename="output/final_symbolic_formula.txt"):
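After this change, `_eval_formula` accumulates matched terms into a tensor, and `symbolic_predict` adds each feature's formula output into a single prediction column. The NumPy-only sketch below reuses the same regular expression to show that parse-and-sum flow. `BASIS`, `eval_formula`, and `predict_from_formulas` are hypothetical stand-ins, not the library's methods; the basis table mirrors the four notations in the new `ADVANCED_LIB`.

```python
import re

import numpy as np

# Hypothetical NumPy stand-in for the torch-based ADVANCED_LIB (notation -> function).
BASIS = {
    "x": lambda x: x,
    "x^2": lambda x: x ** 2,
    "sin(x)": np.sin,
    "tanh(x)": np.tanh,
}

# Same pattern as _eval_formula: signed decimal coefficient, optional "*",
# then the basis notation (word characters, parentheses, "^").
TERM = re.compile(r"(-?\d+\.\d+)\*?([\w\(\)\^]+)")


def eval_formula(formula, x):
    """Evaluate a 'c1*basis1 + c2*basis2 + ...' string on a 1-D array x."""
    x = np.asarray(x, dtype=float)
    total = np.zeros_like(x)
    for coef_str, name in TERM.findall(formula):
        func = BASIS.get(name.strip())
        if func is None:
            continue  # unknown notation contributes nothing, as in _eval_formula
        total += float(coef_str) * func(x)
    return total


def predict_from_formulas(formulas, X):
    """Sum each feature's formula (feature i reads column i) into one output column."""
    X = np.asarray(X, dtype=float)
    preds = np.zeros((X.shape[0], 1))
    for i, formula in enumerate(formulas):
        preds[:, 0] += eval_formula(formula, X[:, i])
    return preds


X = np.array([[1.0, 0.0], [2.0, 0.5]])
formulas = ["0.5000*x + 1.0000*x^2", "2.0000*sin(x)"]
print(predict_from_formulas(formulas, X))
```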

@@ -263,7 +306,7 @@ class BaseOIKAN(BaseEstimator):
 
 class OIKANRegressor(BaseOIKAN, RegressorMixin):
     """OIKAN implementation for regression tasks"""
-    def fit(self, X, y, epochs=100, lr=0.01,
+    def fit(self, X, y, epochs=100, lr=0.01, verbose=True):
         X, y = self._validate_data(X, y)
         if len(y.shape) == 1:
             y = y.reshape(-1, 1)

@@ -284,7 +327,7 @@ class OIKANRegressor(BaseOIKAN, RegressorMixin):
             if torch.isnan(loss):
                 print("Warning: NaN loss detected, reinitializing model...")
                 self.model = None
-                return self.fit(X, y, epochs, lr/10,
+                return self.fit(X, y, epochs, lr/10, verbose)
 
             loss.backward()
 
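When the loss is NaN, `fit` resets the model and calls itself again with the learning rate divided by 10, and it now forwards `verbose` as well. This hunk shows no retry limit. The sketch below expresses the same back-off idea as a loop with an explicit cap. `fit_with_lr_backoff`, `train_once`, and `max_retries` are hypothetical names and are not part of the OIKAN API.

```python
import math


def fit_with_lr_backoff(train_once, lr=0.01, factor=10.0, max_retries=3):
    """Call train_once(lr); on a NaN loss, divide lr by `factor` and retry.

    train_once is a hypothetical callable that trains from scratch at the
    given learning rate and returns the final loss. Returns (loss, lr_used).
    """
    for _ in range(max_retries + 1):
        loss = train_once(lr)
        if not math.isnan(loss):
            return loss, lr
        print(f"Warning: NaN loss at lr={lr:g}, retrying with lr={lr / factor:g}")
        lr /= factor
    raise RuntimeError(f"loss was still NaN after {max_retries} retries")


def toy_train(lr):
    """Toy trainer that diverges unless the learning rate is small enough."""
    return float("nan") if lr > 5e-3 else 0.05


print(fit_with_lr_backoff(toy_train))
```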

@@ -312,7 +355,7 @@ class OIKANRegressor(BaseOIKAN, RegressorMixin):
 
 class OIKANClassifier(BaseOIKAN, ClassifierMixin):
     """OIKAN implementation for classification tasks"""
-    def fit(self, X, y, epochs=100, lr=0.01,
+    def fit(self, X, y, epochs=100, lr=0.01, verbose=True):
         X, y = self._validate_data(X, y)
         self.classes_ = torch.unique(y)
         n_classes = len(self.classes_)

@@ -414,8 +457,8 @@ class OIKANClassifier(BaseOIKAN, ClassifierMixin):
                 weight = first_layer.combination_weights[i, j].item()
 
                 if abs(weight) > 1e-4:
-                    #
-                    edge_formula = edge.get_symbolic_repr()
+                    # Improved precision by using a lower threshold
+                    edge_formula = edge.get_symbolic_repr(threshold=1e-6)
                     terms = []
                     for term in edge_formula.split(" + "):
                         if term and term != "0":

oikan/utils.py

CHANGED

@@ -3,41 +3,47 @@ import torch
 import torch.nn as nn
 import numpy as np
 
-
+def ensure_tensor(x):
+    """Helper function to ensure input is a PyTorch tensor."""
+    if isinstance(x, np.ndarray):
+        return torch.from_numpy(x).float()
+    elif isinstance(x, (int, float)):
+        return torch.tensor([x], dtype=torch.float32)
+    elif isinstance(x, torch.Tensor):
+        return x.float()
+    else:
+        raise ValueError(f"Unsupported input type: {type(x)}")
+
+# Updated to handle numpy arrays and scalars
 ADVANCED_LIB = {
-    'x':
-    'x^2':
-    '
-    '
-    'log': ('log(x)', lambda x: np.log(np.abs(x) + 1)),
-    'sqrt': ('sqrt(x)', lambda x: np.sqrt(np.abs(x))),
-    'tanh': ('tanh(x)', lambda x: np.tanh(x)),
-    'sin': ('sin(x)', lambda x: np.sin(np.clip(x, -10*np.pi, 10*np.pi))),
-    'abs': ('abs(x)', lambda x: np.abs(x))
+    'x': ('x', lambda x: ensure_tensor(x)),
+    'x^2': ('x^2', lambda x: torch.pow(ensure_tensor(x), 2)),
+    'sin': ('sin(x)', lambda x: torch.sin(ensure_tensor(x))),
+    'tanh': ('tanh(x)', lambda x: torch.tanh(ensure_tensor(x)))
 }
 
 class EdgeActivation(nn.Module):
-    """Learnable edge-based activation function."""
+    """Learnable edge-based activation function with improved gradient flow."""
     def __init__(self):
         super().__init__()
         self.weights = nn.Parameter(torch.randn(len(ADVANCED_LIB)))
         self.bias = nn.Parameter(torch.zeros(1))
 
     def forward(self, x):
+        x_tensor = ensure_tensor(x)
         features = []
         for _, func in ADVANCED_LIB.values():
-            feat =
-                dtype=torch.float32).to(x.device)
+            feat = func(x_tensor)
             features.append(feat)
         features = torch.stack(features, dim=-1)
         return torch.matmul(features, self.weights.unsqueeze(0).T) + self.bias
 
     def get_symbolic_repr(self, threshold=1e-4):
         """Get symbolic representation of the activation function."""
+        weights_np = self.weights.detach().cpu().numpy()
         significant_terms = []
 
-        for (notation, _), weight in zip(ADVANCED_LIB.values(),
-                                         self.weights.detach().cpu().numpy()):
+        for (notation, _), weight in zip(ADVANCED_LIB.values(), weights_np):
             if abs(weight) > threshold:
                 significant_terms.append(f"{weight:.4f}*{notation}")
 
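After this change, `EdgeActivation.forward` converts its input with `ensure_tensor`, evaluates each `ADVANCED_LIB` basis function, stacks the results on the last axis, and returns their weighted sum plus a bias. `get_symbolic_repr` keeps only the terms with |weight| > `threshold`; the default is 1e-4, and `model.py` now passes 1e-6. The NumPy sketch below mirrors both steps for the four remaining basis functions. It illustrates the behavior and is not the torch module itself:

- The weights are invented.
- The output drops the trailing size-1 axis that `torch.matmul(..., weights.unsqueeze(0).T)` produces.
- The `" + "` joiner and the `"0"` fallback are assumptions, because the return statement is outside the hunk.

```python
import numpy as np

# NumPy stand-ins for the four basis functions left in ADVANCED_LIB: (notation, function).
BASIS = [
    ("x", lambda x: x),
    ("x^2", lambda x: x ** 2),
    ("sin(x)", np.sin),
    ("tanh(x)", np.tanh),
]


def edge_forward(x, weights, bias):
    """Weighted sum of basis features plus bias, like EdgeActivation.forward.

    Returns shape (...,) rather than the (..., 1) the torch version returns.
    """
    x = np.asarray(x, dtype=float)
    features = np.stack([f(x) for _, f in BASIS], axis=-1)  # shape (..., n_basis)
    return features @ weights + bias


def symbolic_repr(weights, threshold=1e-4):
    """Keep terms with |weight| > threshold, formatted like get_symbolic_repr."""
    terms = [f"{w:.4f}*{name}" for (name, _), w in zip(BASIS, weights) if abs(w) > threshold]
    return " + ".join(terms) if terms else "0"  # joiner and fallback assumed


weights = np.array([0.8, 3e-5, -0.25, 0.0])
print(edge_forward([0.0, 1.0], weights, bias=0.1))
print(symbolic_repr(weights))        # default threshold 1e-4
print(symbolic_repr(weights, 1e-6))  # threshold model.py now passes
```

With the lower threshold, a weight of 3e-5 survives pruning but still prints as `0.0000` at four decimals.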

{oikan-0.0.2.3.dist-info → oikan-0.0.2.5.dist-info}/METADATA

CHANGED

@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: oikan
-Version: 0.0.2.
+Version: 0.0.2.5
 Summary: OIKAN: Optimized Interpretable Kolmogorov-Arnold Networks
 Author: Arman Zhalgasbayev
 License: MIT

@@ -32,20 +32,50 @@ OIKAN (Optimized Interpretable Kolmogorov-Arnold Networks) is a neuro-symbolic M
 [](https://github.com/silvermete0r/oikan/issues)
 [](https://silvermete0r.github.io/oikan/)
 
+> **Important Disclaimer**: OIKAN is an experimental research project. It is not intended for production use or real-world applications. This framework is designed for research purposes, experimentation, and academic exploration of neuro-symbolic machine learning concepts.
+
 ## Key Features
 - 🧠 **Neuro-Symbolic ML**: Combines neural network learning with symbolic mathematics
 - 📊 **Automatic Formula Extraction**: Generates human-readable mathematical expressions
 - 🎯 **Scikit-learn Compatible**: Familiar `.fit()` and `.predict()` interface
--
+- 🔬 **Research-Focused**: Designed for academic exploration and experimentation
 - 📈 **Multi-Task**: Supports both regression and classification problems
 
 ## Scientific Foundation
 
-OIKAN
+OIKAN implements the Kolmogorov-Arnold Representation Theorem through a novel neural architecture:
+
+1. **Theorem Background**: Any continuous multivariate function f(x1,...,xn) can be represented as:
+```
+f(x1,...,xn) = ∑(j=0 to 2n){ φj( ∑(i=1 to n) ψij(xi) ) }
+```
+where φj and ψij are continuous single-variable functions.
 
-
-
-
+2. **Neural Implementation**:
+```python
+# Pseudo-implementation of KAN architecture
+class KANLayer:
+    def __init__(self, input_dim, output_dim):
+        self.edges = [SymbolicEdge() for _ in range(input_dim * output_dim)]
+        self.weights = initialize_weights(input_dim, output_dim)
+
+    def forward(self, x):
+        # Transform each input through basis functions
+        edge_outputs = [edge(x_i) for x_i, edge in zip(x, self.edges)]
+        # Combine using learned weights
+        return combine_weighted_outputs(edge_outputs, self.weights)
+```
+
+3. **Basis functions**
+```python
+# Edge activation contains interpretable basis functions
+ADVANCED_LIB = {
+    'x': (lambda x: x), # Linear
+    'x^2': (lambda x: x**2), # Quadratic
+    'sin(x)': np.sin, # Periodic
+    'tanh(x)': np.tanh # Bounded
+}
+```
 
 ## Quick Start
 
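In standard notation, and keeping the README's own symbols, the theorem statement added above sums 2n + 1 outer functions φ_j, each applied to a sum of inner functions ψ_ij. The classical theorem is stated for continuous f on the unit cube [0, 1]^n.

```latex
f(x_1, \dots, x_n) = \sum_{j=0}^{2n} \varphi_j\!\left( \sum_{i=1}^{n} \psi_{ij}(x_i) \right)
```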

@@ -68,11 +98,8 @@ pip install -e . # Install in development mode

|

|

|

68

98

|

from oikan.model import OIKANRegressor

|

|

69

99

|

from sklearn.model_selection import train_test_split

|

|

70

100

|

|

|

71

|

-

# Initialize model

|

|

72

|

-

model = OIKANRegressor(

|

|

73

|

-

hidden_dims=[16, 8], # Network architecture

|

|

74

|

-

dropout=0.1 # Regularization

|

|

75

|

-

)

|

|

101

|

+

# Initialize model

|

|

102

|

+

model = OIKANRegressor()

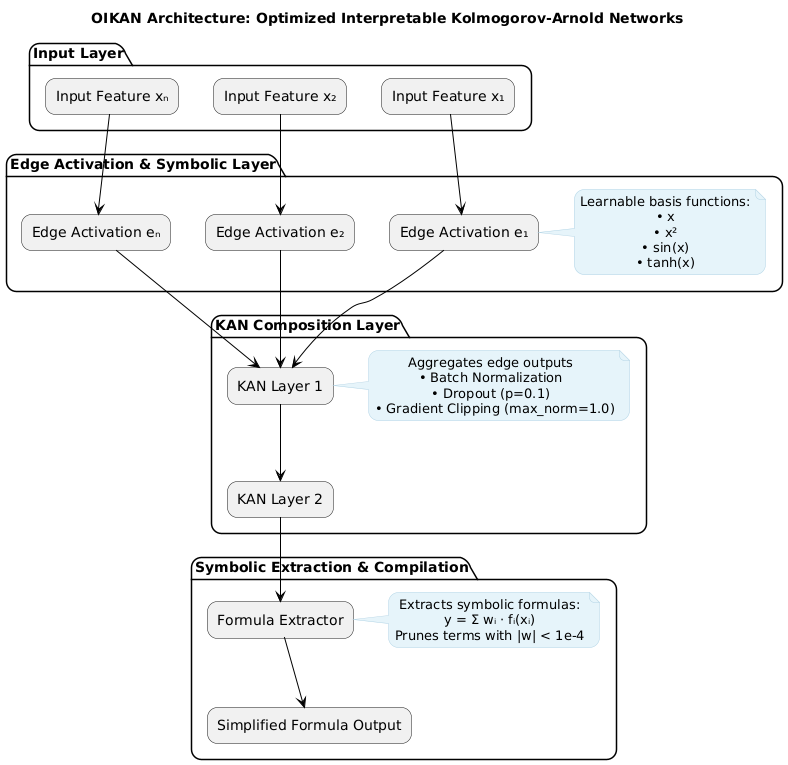

|

|

76

103

|

|

|

77

104

|

# Fit model (sklearn-style)

|

|

78

105

|

model.fit(X_train, y_train, epochs=100, lr=0.01)

|

|

@@ -84,7 +111,7 @@ y_pred = model.predict(X_test)
 # The output file will contain:
 # - Detailed symbolic formulas for each feature
 # - Instructions for practical implementation
-# - Recommendations for
+# - Recommendations for testing and validation
 model.save_symbolic_formula("regression_formula.txt")
 ```
 

@@ -96,7 +123,7 @@ model.save_symbolic_formula("regression_formula.txt")
 from oikan.model import OIKANClassifier
 
 # Similar sklearn-style interface for classification
-model = OIKANClassifier(
+model = OIKANClassifier()
 model.fit(X_train, y_train, epochs=100, lr=0.01)
 probas = model.predict_proba(X_test)
 

@@ -104,46 +131,12 @@ probas = model.predict_proba(X_test)
 # The output file will contain:
 # - Decision boundary formulas for each class
 # - Softmax application instructions
-# -
+# - Recommendations for testing and validation
 model.save_symbolic_formula("classification_formula.txt")
 ```
 
 *Example of the saved symbolic formula instructions: [outputs/classification_symbolic_formula.txt](outputs/classification_symbolic_formula.txt)*
 
-## Architecture Details
-
-OIKAN implements a novel neuro-symbolic architecture based on Kolmogorov-Arnold representation theory through three specialized components:
-
-1. **Edge Symbolic Layer**: Learns interpretable single-variable transformations
-   - Adaptive basis function composition using 9 core functions:
-     ```python
-     ADVANCED_LIB = {
-         'x': ('x', lambda x: x),
-         'x^2': ('x^2', lambda x: x**2),
-         'x^3': ('x^3', lambda x: x**3),
-         'exp': ('exp(x)', lambda x: np.exp(x)),
-         'log': ('log(x)', lambda x: np.log(abs(x) + 1)),
-         'sqrt': ('sqrt(x)', lambda x: np.sqrt(abs(x))),
-         'tanh': ('tanh(x)', lambda x: np.tanh(x)),
-         'sin': ('sin(x)', lambda x: np.sin(x)),
-         'abs': ('abs(x)', lambda x: np.abs(x))
-     }
-     ```
-   - Each input feature is transformed through these basis functions
-   - Learnable weights determine the optimal combination
-
-2. **Neural Composition Layer**: Multi-layer feature aggregation
-   - Direct feature-to-feature connections through KAN layers
-   - Dropout regularization (p=0.1 default) for robust learning
-   - Gradient clipping (max_norm=1.0) for stable training
-   - User-configurable hidden layer dimensions
-
-3. **Symbolic Extraction Layer**: Generates production-ready formulas
-   - Weight-based term pruning (threshold=1e-4)
-   - Automatic coefficient optimization
-   - Human-readable mathematical expressions
-   - Exportable to lightweight production code
-
 ### Architecture Diagram
 
 

@@ -152,45 +145,26 @@ OIKAN implements a novel neuro-symbolic architecture based on Kolmogorov-Arnold
 
 1. **Interpretability First**: All transformations maintain clear mathematical meaning
 2. **Scikit-learn Compatibility**: Familiar `.fit()` and `.predict()` interface
-3. **
+3. **Symbolic Formula Exporting**: Export formulas as lightweight mathematical expressions
 4. **Automatic Simplification**: Remove insignificant terms (|w| < 1e-4)
 
-## Model Components
 
-
-```python
-class EdgeActivation(nn.Module):
-    """Learnable edge activation with basis functions"""
-    def forward(self, x):
-        return sum(self.weights[i] * basis[i](x) for i in range(self.num_basis))
-```
+### Key Model Components
 
-
-
-
-
-    def forward(self, x):
-        edge_outputs = [self.edges[i](x[:,i]) for i in range(self.input_dim)]
-        return self.combine(edge_outputs)
-```
+1. **EdgeActivation Layer**:
+   - Implements interpretable basis function transformations
+   - Automatically prunes insignificant terms
+   - Maintains mathematical transparency
 
-
-
-
-
-        terms = []
-        for i, edge in enumerate(self.edges):
-            if abs(self.weights[i]) > threshold:
-                terms.append(f"{self.weights[i]:.4f} * {edge.formula}")
-        return " + ".join(terms)
-```
-
-### Key Design Principles
+2. **Formula Extraction**:
+   - Combines edge transformations with learned weights
+   - Applies symbolic simplification
+   - Generates human-readable expressions
 
-
--
--
--
+3. **Training Process**:
+   - Gradient-based optimization of edge weights
+   - Automatic feature importance detection
+   - Complexity control through regularization
 
 ## Contributing
 

oikan-0.0.2.5.dist-info/RECORD

@@ -0,0 +1,9 @@
+oikan/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
+oikan/exceptions.py,sha256=UqT3uTtfiB8QA_3AMvKdHOme9WL9HZD_d7GHIk00LJw,394
+oikan/model.py,sha256=O5ozMUNCG-d7y5du1uG96psEgwMsN6H9CLQDtCg-AmM,21580
+oikan/utils.py,sha256=nLbzycmtNCj8806delPsLcKMaBuFhTHtrKXCf1NDMb0,2062
+oikan-0.0.2.5.dist-info/licenses/LICENSE,sha256=75ASVmU-XIpN-M4LbVmJ_ibgbzbvRLVti8FhnR0BTf8,1096
+oikan-0.0.2.5.dist-info/METADATA,sha256=3AgQFr8-ihylBaSKlYBDbRnU8yAajf_fRW8fJVOAGCM,7283
+oikan-0.0.2.5.dist-info/WHEEL,sha256=CmyFI0kx5cdEMTLiONQRbGQwjIoR1aIYB7eCAQ4KPJ0,91
+oikan-0.0.2.5.dist-info/top_level.txt,sha256=XwnwKwTJddZwIvtrUsAz-l-58BJRj6HjAGWrfYi_3QY,6
+oikan-0.0.2.5.dist-info/RECORD,,
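Each RECORD entry has the form `path,sha256=<digest>,<size in bytes>`. The digest is the URL-safe base64 encoding of the file's SHA-256 hash, with the trailing `=` padding stripped. The RECORD file lists itself with empty hash and size fields. The hypothetical helper below builds such a line and reproduces the entry for the empty `oikan/__init__.py`:

```python
import base64
import hashlib


def record_line(path: str, data: bytes) -> str:
    """Build a wheel RECORD entry: path,sha256=<urlsafe b64 digest, no padding>,size."""
    digest = hashlib.sha256(data).digest()
    b64 = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={b64},{len(data)}"


print(record_line("oikan/__init__.py", b""))
# oikan/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
```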

oikan/symbolic.py

DELETED

@@ -1,28 +0,0 @@
-from .utils import ADVANCED_LIB
-
-def symbolic_edge_repr(weights, bias=None, threshold=1e-4):
-    """
-    Given a list of weights (floats) and an optional bias,
-    returns a list of structured terms (coefficient, basis function string).
-    """
-    terms = []
-    # weights should be in the same order as ADVANCED_LIB.items()
-    for (_, (notation, _)), w in zip(ADVANCED_LIB.items(), weights):
-        if abs(w) > threshold:
-            terms.append((w, notation))
-    if bias is not None and abs(bias) > threshold:
-        # use "1" to represent the constant term
-        terms.append((bias, "1"))
-    return terms
-
-def format_symbolic_terms(terms):
-    """
-    Formats a list of structured symbolic terms (coef, basis) to a string.
-    """
-    formatted_terms = []
-    for coef, basis in terms:
-        if basis == "1":
-            formatted_terms.append(f"{coef:.4f}")
-        else:
-            formatted_terms.append(f"{coef:.4f}*{basis}")
-    return " + ".join(formatted_terms) if formatted_terms else "0"
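The deleted module built formulas in two steps. `symbolic_edge_repr` returned `(coefficient, basis)` pairs and encoded the bias as a constant with basis `"1"`. `format_symbolic_terms` then rendered those pairs as a string. Below is a condensed, standalone copy. `NOTATIONS` is a hypothetical stand-in for the notations the module imported from `ADVANCED_LIB`, and the weights are invented.

```python
# Hypothetical stand-in for the ADVANCED_LIB notations, in the same order as the weights.
NOTATIONS = ["x", "x^2", "sin(x)", "tanh(x)"]


def symbolic_edge_repr(weights, bias=None, threshold=1e-4):
    """Return (coefficient, basis) pairs above threshold; the bias uses basis "1"."""
    terms = [(w, notation) for notation, w in zip(NOTATIONS, weights) if abs(w) > threshold]
    if bias is not None and abs(bias) > threshold:
        terms.append((bias, "1"))
    return terms


def format_symbolic_terms(terms):
    """Render pairs as 'c*basis + ...'; constant terms are printed without '*1'."""
    formatted = [f"{c:.4f}" if basis == "1" else f"{c:.4f}*{basis}" for c, basis in terms]
    return " + ".join(formatted) if formatted else "0"


terms = symbolic_edge_repr([1.5, 0.0, -0.3, 5e-5], bias=0.2)
print(terms)
print(format_symbolic_terms(terms))
```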

oikan-0.0.2.3.dist-info/RECORD

DELETED

@@ -1,10 +0,0 @@
-oikan/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
-oikan/exceptions.py,sha256=UqT3uTtfiB8QA_3AMvKdHOme9WL9HZD_d7GHIk00LJw,394
-oikan/model.py,sha256=iHWKjk_n0Kkw47UO2XFTc0faqGYBrQBJhmmRn1Po4qw,19604
-oikan/symbolic.py,sha256=TtalmSpBecf33_g7yE3q-RPuCVRWQNaXWE4LsCNZmfg,1040
-oikan/utils.py,sha256=sivt_8jzATH-eUZ3-P-tsdmyIgKsayibSZeP_MtLTfU,1969
-oikan-0.0.2.3.dist-info/licenses/LICENSE,sha256=75ASVmU-XIpN-M4LbVmJ_ibgbzbvRLVti8FhnR0BTf8,1096
-oikan-0.0.2.3.dist-info/METADATA,sha256=pr8kHktQQPBk9QA_gchl_ynHzCWWv6j9lib9dmXuYi0,8554
-oikan-0.0.2.3.dist-info/WHEEL,sha256=CmyFI0kx5cdEMTLiONQRbGQwjIoR1aIYB7eCAQ4KPJ0,91
-oikan-0.0.2.3.dist-info/top_level.txt,sha256=XwnwKwTJddZwIvtrUsAz-l-58BJRj6HjAGWrfYi_3QY,6
-oikan-0.0.2.3.dist-info/RECORD,,

|

File without changes

|

|

File without changes

|

|

File without changes

|