massgen 0.0.3__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of massgen might be problematic.

- massgen/__init__.py +94 -0

- massgen/agent_config.py +507 -0

- massgen/backend/CLAUDE_API_RESEARCH.md +266 -0

- massgen/backend/Function calling openai responses.md +1161 -0

- massgen/backend/GEMINI_API_DOCUMENTATION.md +410 -0

- massgen/backend/OPENAI_RESPONSES_API_FORMAT.md +65 -0

- massgen/backend/__init__.py +25 -0

- massgen/backend/base.py +180 -0

- massgen/backend/chat_completions.py +228 -0

- massgen/backend/claude.py +661 -0

- massgen/backend/gemini.py +652 -0

- massgen/backend/grok.py +187 -0

- massgen/backend/response.py +397 -0

- massgen/chat_agent.py +440 -0

- massgen/cli.py +686 -0

- massgen/configs/README.md +293 -0

- massgen/configs/creative_team.yaml +53 -0

- massgen/configs/gemini_4o_claude.yaml +31 -0

- massgen/configs/news_analysis.yaml +51 -0

- massgen/configs/research_team.yaml +51 -0

- massgen/configs/single_agent.yaml +18 -0

- massgen/configs/single_flash2.5.yaml +44 -0

- massgen/configs/technical_analysis.yaml +51 -0

- massgen/configs/three_agents_default.yaml +31 -0

- massgen/configs/travel_planning.yaml +51 -0

- massgen/configs/two_agents.yaml +39 -0

- massgen/frontend/__init__.py +20 -0

- massgen/frontend/coordination_ui.py +945 -0

- massgen/frontend/displays/__init__.py +24 -0

- massgen/frontend/displays/base_display.py +83 -0

- massgen/frontend/displays/rich_terminal_display.py +3497 -0

- massgen/frontend/displays/simple_display.py +93 -0

- massgen/frontend/displays/terminal_display.py +381 -0

- massgen/frontend/logging/__init__.py +9 -0

- massgen/frontend/logging/realtime_logger.py +197 -0

- massgen/message_templates.py +431 -0

- massgen/orchestrator.py +1222 -0

- massgen/tests/__init__.py +10 -0

- massgen/tests/multi_turn_conversation_design.md +214 -0

- massgen/tests/multiturn_llm_input_analysis.md +189 -0

- massgen/tests/test_case_studies.md +113 -0

- massgen/tests/test_claude_backend.py +310 -0

- massgen/tests/test_grok_backend.py +160 -0

- massgen/tests/test_message_context_building.py +293 -0

- massgen/tests/test_rich_terminal_display.py +378 -0

- massgen/tests/test_v3_3agents.py +117 -0

- massgen/tests/test_v3_simple.py +216 -0

- massgen/tests/test_v3_three_agents.py +272 -0

- massgen/tests/test_v3_two_agents.py +176 -0

- massgen/utils.py +79 -0

- massgen/v1/README.md +330 -0

- massgen/v1/__init__.py +91 -0

- massgen/v1/agent.py +605 -0

- massgen/v1/agents.py +330 -0

- massgen/v1/backends/gemini.py +584 -0

- massgen/v1/backends/grok.py +410 -0

- massgen/v1/backends/oai.py +571 -0

- massgen/v1/cli.py +351 -0

- massgen/v1/config.py +169 -0

- massgen/v1/examples/fast-4o-mini-config.yaml +44 -0

- massgen/v1/examples/fast_config.yaml +44 -0

- massgen/v1/examples/production.yaml +70 -0

- massgen/v1/examples/single_agent.yaml +39 -0

- massgen/v1/logging.py +974 -0

- massgen/v1/main.py +368 -0

- massgen/v1/orchestrator.py +1138 -0

- massgen/v1/streaming_display.py +1190 -0

- massgen/v1/tools.py +160 -0

- massgen/v1/types.py +245 -0

- massgen/v1/utils.py +199 -0

- massgen-0.0.3.dist-info/METADATA +568 -0

- massgen-0.0.3.dist-info/RECORD +76 -0

- massgen-0.0.3.dist-info/WHEEL +5 -0

- massgen-0.0.3.dist-info/entry_points.txt +2 -0

- massgen-0.0.3.dist-info/licenses/LICENSE +204 -0

- massgen-0.0.3.dist-info/top_level.txt +1 -0

@@ -0,0 +1,1161 @@

Function calling
================

Enable models to fetch data and take actions.

**Function calling** provides a powerful and flexible way for OpenAI models to interface with your code or external services. This guide will explain how to connect the models to your own custom code to fetch data or take action.

Get weather

Function calling example with get\_weather function

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "name": "get_weather",
    "description": "Get current temperature for a given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and country e.g. Bogotá, Colombia"
            }
        },
        "required": [
            "location"
        ],
        "additionalProperties": False
    }
}]

response = client.responses.create(
    model="gpt-4.1",
    input=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools
)

print(response.output)
```

|

|

44

|

+

|

|

45

|

+

```javascript

|

|

46

|

+

import { OpenAI } from "openai";

|

|

47

|

+

|

|

48

|

+

const openai = new OpenAI();

|

|

49

|

+

|

|

50

|

+

const tools = [{

|

|

51

|

+

"type": "function",

|

|

52

|

+

"name": "get_weather",

|

|

53

|

+

"description": "Get current temperature for a given location.",

|

|

54

|

+

"parameters": {

|

|

55

|

+

"type": "object",

|

|

56

|

+

"properties": {

|

|

57

|

+

"location": {

|

|

58

|

+

"type": "string",

|

|

59

|

+

"description": "City and country e.g. Bogotá, Colombia"

|

|

60

|

+

}

|

|

61

|

+

},

|

|

62

|

+

"required": [

|

|

63

|

+

"location"

|

|

64

|

+

],

|

|

65

|

+

"additionalProperties": false

|

|

66

|

+

}

|

|

67

|

+

}];

|

|

68

|

+

|

|

69

|

+

const response = await openai.responses.create({

|

|

70

|

+

model: "gpt-4.1",

|

|

71

|

+

input: [{ role: "user", content: "What is the weather like in Paris today?" }],

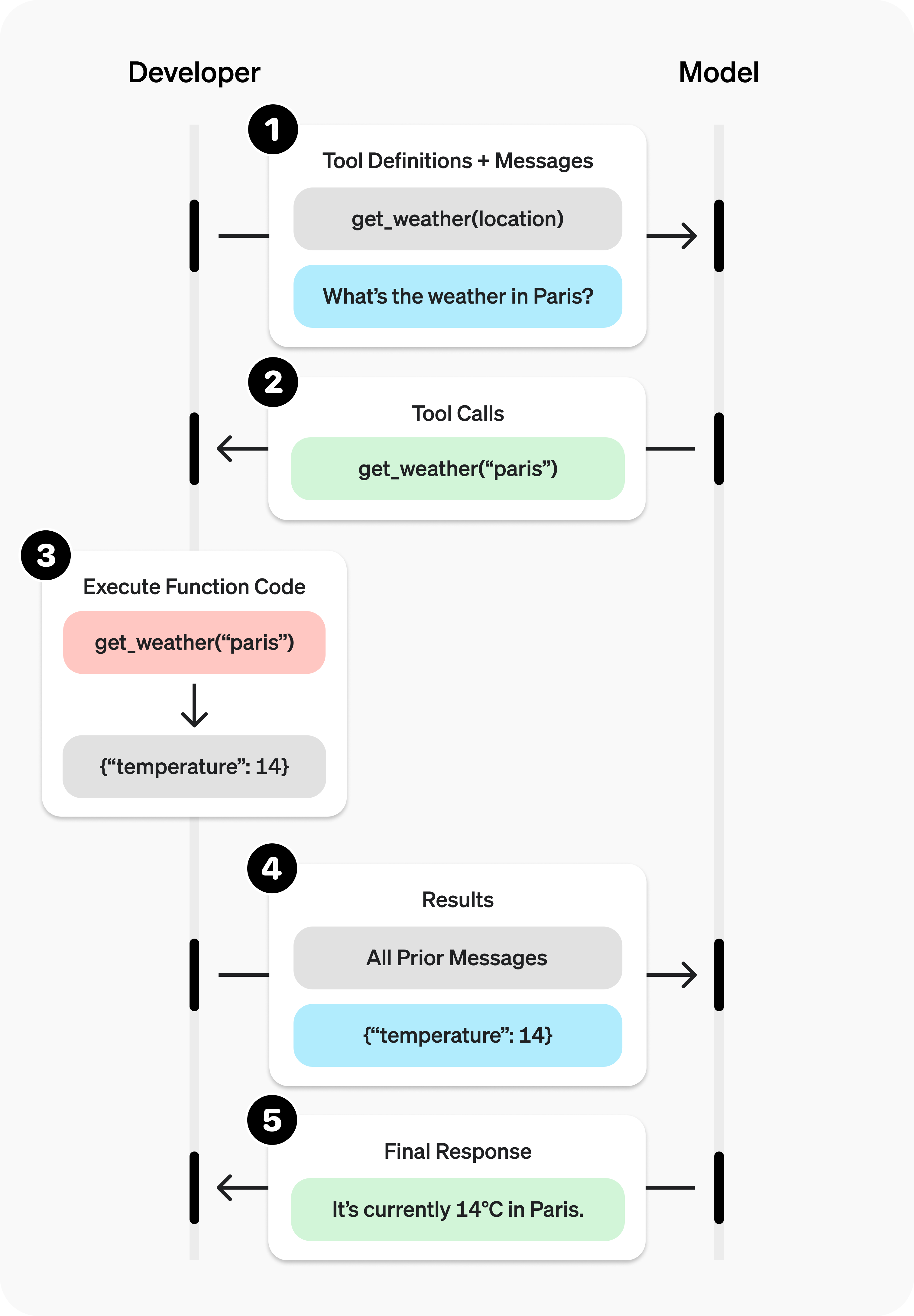

|

|

72

|

+

tools,

|

|

73

|

+

});

|

|

74

|

+

|

|

75

|

+

console.log(response.output);

|

|

76

|

+

```

```bash
curl https://api.openai.com/v1/responses \
-H "Content-Type: application/json" \
-H "Authorization: Bearer $OPENAI_API_KEY" \
-d '{
    "model": "gpt-4.1",
    "input": "What is the weather like in Paris today?",
    "tools": [
        {
            "type": "function",
            "name": "get_weather",
            "description": "Get current temperature for a given location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "City and country e.g. Bogotá, Colombia"
                    }
                },
                "required": [
                    "location"
                ],
                "additionalProperties": false
            }
        }
    ]
}'
```

Output

```json
[{
    "type": "function_call",
    "id": "fc_12345xyz",
    "call_id": "call_12345xyz",
    "name": "get_weather",
    "arguments": "{\"location\":\"Paris, France\"}"
}]
```

|

|

119

|

+

|

|

120

|

+

Send email

|

|

121

|

+

|

|

122

|

+

Function calling example with send\_email function

|

|

123

|

+

|

|

124

|

+

```python

|

|

125

|

+

from openai import OpenAI

|

|

126

|

+

|

|

127

|

+

client = OpenAI()

|

|

128

|

+

|

|

129

|

+

tools = [{

|

|

130

|

+

"type": "function",

|

|

131

|

+

"name": "send_email",

|

|

132

|

+

"description": "Send an email to a given recipient with a subject and message.",

|

|

133

|

+

"parameters": {

|

|

134

|

+

"type": "object",

|

|

135

|

+

"properties": {

|

|

136

|

+

"to": {

|

|

137

|

+

"type": "string",

|

|

138

|

+

"description": "The recipient email address."

|

|

139

|

+

},

|

|

140

|

+

"subject": {

|

|

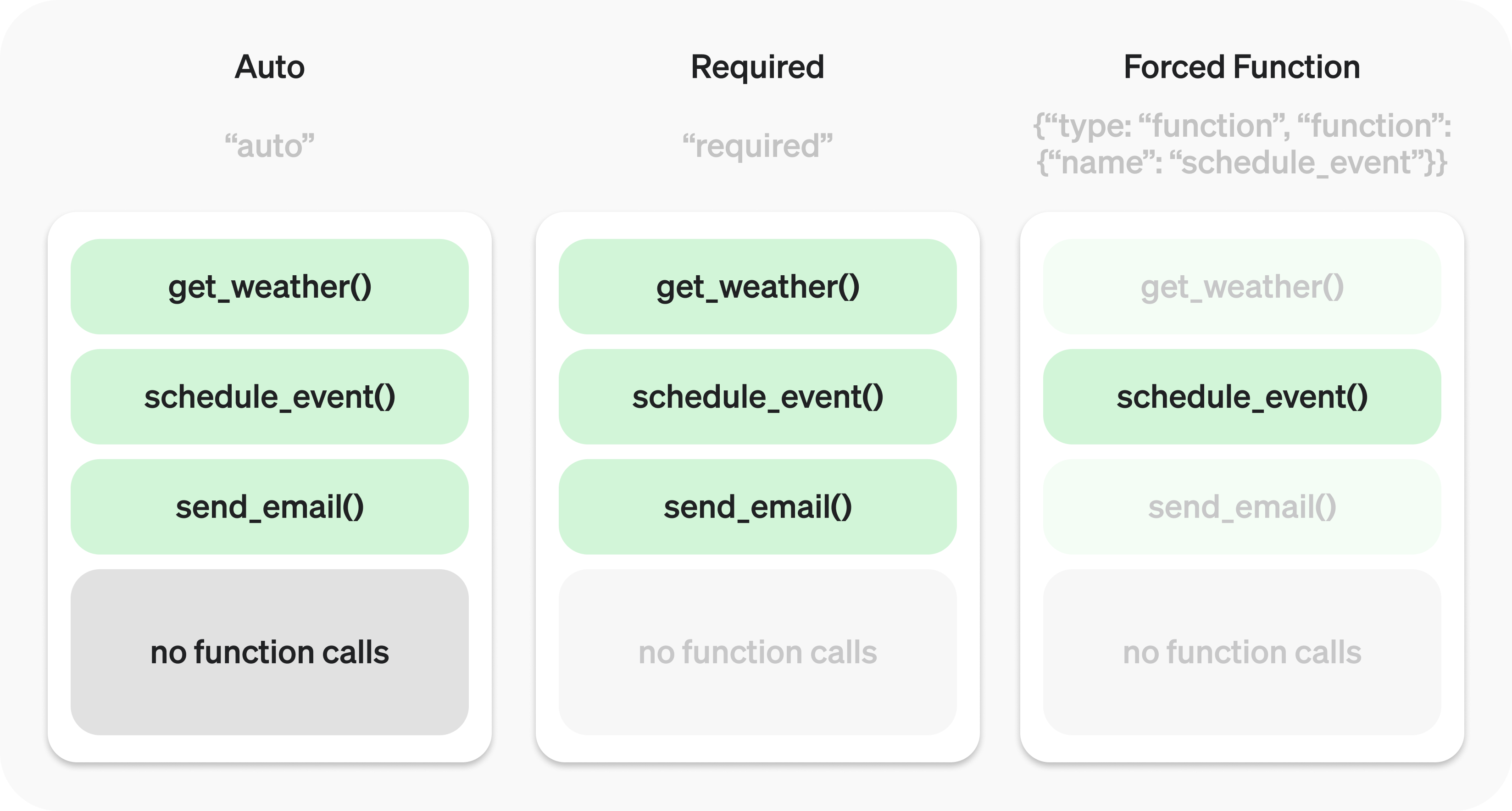

141

|

+

"type": "string",

|

|

142

|

+

"description": "Email subject line."

|

|

143

|

+

},

|

|

144

|

+

"body": {

|

|

145

|

+

"type": "string",

|

|

146

|

+

"description": "Body of the email message."

|

|

147

|

+

}

|

|

148

|

+

},

|

|

149

|

+

"required": [

|

|

150

|

+

"to",

|

|

151

|

+

"subject",

|

|

152

|

+

"body"

|

|

153

|

+

],

|

|

154

|

+

"additionalProperties": False

|

|

155

|

+

}

|

|

156

|

+

}]

|

|

157

|

+

|

|

158

|

+

response = client.responses.create(

|

|

159

|

+

model="gpt-4.1",

|

|

160

|

+

input=[{"role": "user", "content": "Can you send an email to ilan@example.com and katia@example.com saying hi?"}],

|

|

161

|

+

tools=tools

|

|

162

|

+

)

|

|

163

|

+

|

|

164

|

+

print(response.output)

|

|

165

|

+

```

```javascript
import { OpenAI } from "openai";

const openai = new OpenAI();

const tools = [{
    "type": "function",
    "name": "send_email",
    "description": "Send an email to a given recipient with a subject and message.",
    "parameters": {
        "type": "object",
        "properties": {
            "to": {
                "type": "string",
                "description": "The recipient email address."
            },
            "subject": {
                "type": "string",
                "description": "Email subject line."
            },
            "body": {
                "type": "string",
                "description": "Body of the email message."
            }
        },
        "required": [
            "to",
            "subject",
            "body"
        ],
        "additionalProperties": false
    }
}];

const response = await openai.responses.create({
    model: "gpt-4.1",
    input: [{ role: "user", content: "Can you send an email to ilan@example.com and katia@example.com saying hi?" }],
    tools,
});

console.log(response.output);
```

```bash
curl https://api.openai.com/v1/responses \
-H "Content-Type: application/json" \
-H "Authorization: Bearer $OPENAI_API_KEY" \
-d '{
    "model": "gpt-4.1",
    "input": "Can you send an email to ilan@example.com and katia@example.com saying hi?",
    "tools": [
        {
            "type": "function",
            "name": "send_email",
            "description": "Send an email to a given recipient with a subject and message.",
            "parameters": {
                "type": "object",
                "properties": {
                    "to": {
                        "type": "string",
                        "description": "The recipient email address."
                    },
                    "subject": {
                        "type": "string",
                        "description": "Email subject line."
                    },
                    "body": {
                        "type": "string",
                        "description": "Body of the email message."
                    }
                },
                "required": [
                    "to",
                    "subject",
                    "body"
                ],
                "additionalProperties": false
            }
        }
    ]
}'
```

Output

```json
[
    {
        "type": "function_call",
        "id": "fc_12345xyz",
        "call_id": "call_9876abc",
        "name": "send_email",
        "arguments": "{\"to\":\"ilan@example.com\",\"subject\":\"Hello!\",\"body\":\"Just wanted to say hi\"}"
    },
    {
        "type": "function_call",
        "id": "fc_12345xyz",
        "call_id": "call_9876abc",
        "name": "send_email",
        "arguments": "{\"to\":\"katia@example.com\",\"subject\":\"Hello!\",\"body\":\"Just wanted to say hi\"}"
    }
]
```
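
The output above contains two `function_call` items, one per recipient, and each carries its arguments as a JSON-encoded string. A minimal sketch of reading them, assuming the items are available as plain dictionaries shaped like that JSON (the `extract_calls` helper is a hypothetical name, not part of the OpenAI SDK):

```python
import json

# Sample items copied from the JSON output above, as plain dicts (an assumption;
# the SDK returns objects rather than dicts).
output = [
    {
        "type": "function_call",
        "id": "fc_12345xyz",
        "call_id": "call_9876abc",
        "name": "send_email",
        "arguments": '{"to":"ilan@example.com","subject":"Hello!","body":"Just wanted to say hi"}',
    },
    {
        "type": "function_call",
        "id": "fc_12345xyz",
        "call_id": "call_9876abc",
        "name": "send_email",
        "arguments": '{"to":"katia@example.com","subject":"Hello!","body":"Just wanted to say hi"}',
    },
]


def extract_calls(items):
    """Return (name, parsed_arguments) for every function_call item."""
    calls = []
    for item in items:
        if item.get("type") != "function_call":
            continue  # ignore any other kind of output item
        # "arguments" is a JSON string, so it must be decoded before use.
        calls.append((item["name"], json.loads(item["arguments"])))
    return calls


calls = extract_calls(output)
for name, args in calls:
    print(name, args["to"])
```

Decoding each item separately keeps one malformed `arguments` string from being confused with another call's data.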

Search knowledge base

Function calling example with search\_knowledge\_base function

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "name": "search_knowledge_base",
    "description": "Query a knowledge base to retrieve relevant info on a topic.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {
                "type": "string",
                "description": "The user question or search query."
            },
            "options": {
                "type": "object",
                "properties": {
                    "num_results": {
                        "type": "number",
                        "description": "Number of top results to return."
                    },
                    "domain_filter": {
                        "type": [
                            "string",
                            "null"
                        ],
                        "description": "Optional domain to narrow the search (e.g. 'finance', 'medical'). Pass null if not needed."
                    },
                    "sort_by": {
                        "type": [
                            "string",
                            "null"
                        ],
                        "enum": [
                            "relevance",
                            "date",
                            "popularity",
                            "alphabetical"
                        ],
                        "description": "How to sort results. Pass null if not needed."
                    }
                },
                "required": [
                    "num_results",
                    "domain_filter",
                    "sort_by"
                ],
                "additionalProperties": False
            }
        },
        "required": [
            "query",
            "options"
        ],
        "additionalProperties": False
    }
}]

response = client.responses.create(
    model="gpt-4.1",
    input=[{"role": "user", "content": "Can you find information about ChatGPT in the AI knowledge base?"}],
    tools=tools
)

print(response.output)
```

```javascript
import { OpenAI } from "openai";

const openai = new OpenAI();

const tools = [{
    "type": "function",
    "name": "search_knowledge_base",
    "description": "Query a knowledge base to retrieve relevant info on a topic.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {
                "type": "string",
                "description": "The user question or search query."
            },
            "options": {
                "type": "object",
                "properties": {
                    "num_results": {
                        "type": "number",
                        "description": "Number of top results to return."
                    },
                    "domain_filter": {
                        "type": [
                            "string",
                            "null"
                        ],
                        "description": "Optional domain to narrow the search (e.g. 'finance', 'medical'). Pass null if not needed."
                    },
                    "sort_by": {
                        "type": [
                            "string",
                            "null"
                        ],
                        "enum": [
                            "relevance",
                            "date",
                            "popularity",
                            "alphabetical"
                        ],
                        "description": "How to sort results. Pass null if not needed."
                    }
                },
                "required": [
                    "num_results",
                    "domain_filter",
                    "sort_by"
                ],
                "additionalProperties": false
            }
        },
        "required": [
            "query",
            "options"
        ],
        "additionalProperties": false
    }
}];

const response = await openai.responses.create({
    model: "gpt-4.1",
    input: [{ role: "user", content: "Can you find information about ChatGPT in the AI knowledge base?" }],
    tools,
});

console.log(response.output);
```

```bash
curl https://api.openai.com/v1/responses \
-H "Content-Type: application/json" \
-H "Authorization: Bearer $OPENAI_API_KEY" \
-d '{
    "model": "gpt-4.1",
    "input": "Can you find information about ChatGPT in the AI knowledge base?",
    "tools": [
        {
            "type": "function",
            "name": "search_knowledge_base",
            "description": "Query a knowledge base to retrieve relevant info on a topic.",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "The user question or search query."
                    },
                    "options": {
                        "type": "object",
                        "properties": {
                            "num_results": {
                                "type": "number",
                                "description": "Number of top results to return."
                            },
                            "domain_filter": {
                                "type": [
                                    "string",
                                    "null"
                                ],
                                "description": "Optional domain to narrow the search (e.g. 'finance', 'medical'). Pass null if not needed."
                            },
                            "sort_by": {
                                "type": [
                                    "string",
                                    "null"
                                ],
                                "enum": [
                                    "relevance",
                                    "date",
                                    "popularity",
                                    "alphabetical"
                                ],
                                "description": "How to sort results. Pass null if not needed."
                            }
                        },
                        "required": [
                            "num_results",
                            "domain_filter",
                            "sort_by"
                        ],
                        "additionalProperties": false
                    }
                },
                "required": [
                    "query",
                    "options"
                ],
                "additionalProperties": false
            }
        }
    ]
}'
```

Output

```json
[{
    "type": "function_call",
    "id": "fc_12345xyz",
    "call_id": "call_4567xyz",
    "name": "search_knowledge_base",
    "arguments": "{\"query\":\"What is ChatGPT?\",\"options\":{\"num_results\":3,\"domain_filter\":null,\"sort_by\":\"relevance\"}}"
}]
```

Experiment with function calling and [generate function schemas](/docs/guides/prompt-generation) in the [Playground](/playground)!

Overview
--------

You can give the model access to your own custom code through **function calling**. Based on the system prompt and messages, the model may decide to call these functions, **instead of (or in addition to) generating text or audio**.

You'll then execute the function code, send back the results, and the model will incorporate them into its final response.

Function calling has two primary use cases:

|Use case|Description|
|---|---|
|Fetching Data|Retrieve up-to-date information to incorporate into the model's response (RAG). Useful for searching knowledge bases and retrieving specific data from APIs (e.g. current weather data).|
|Taking Action|Perform actions like submitting a form, calling APIs, modifying application state (UI/frontend or backend), or taking agentic workflow actions (like handing off the conversation).|

### Sample function

Let's look at the steps to allow a model to use a real `get_weather` function defined below:

Sample get\_weather function implemented in your codebase

```python
import requests

def get_weather(latitude, longitude):
    response = requests.get(f"https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}&current=temperature_2m,wind_speed_10m&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m")
    data = response.json()
    return data['current']['temperature_2m']
```

```javascript
async function getWeather(latitude, longitude) {
    const response = await fetch(`https://api.open-meteo.com/v1/forecast?latitude=${latitude}&longitude=${longitude}&current=temperature_2m,wind_speed_10m&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m`);
    const data = await response.json();
    return data.current.temperature_2m;
}
```

Unlike the examples earlier, this function expects precise `latitude` and `longitude` instead of a general `location` parameter. (However, our models can automatically determine the coordinates for many locations!)

### Function calling steps

* **Call model with [functions defined](/docs/guides/function-calling#defining-functions)** – along with your system and user messages.

Step 1: Call model with get\_weather tool defined

```python
from openai import OpenAI
import json

client = OpenAI()

tools = [{
    "type": "function",
    "name": "get_weather",
    "description": "Get current temperature for provided coordinates in celsius.",
    "parameters": {
        "type": "object",
        "properties": {
            "latitude": {"type": "number"},
            "longitude": {"type": "number"}
        },
        "required": ["latitude", "longitude"],
        "additionalProperties": False
    },
    "strict": True
}]

input_messages = [{"role": "user", "content": "What's the weather like in Paris today?"}]

response = client.responses.create(
    model="gpt-4.1",
    input=input_messages,
    tools=tools,
)
```

```javascript
import { OpenAI } from "openai";

const openai = new OpenAI();

const tools = [{
    type: "function",
    name: "get_weather",
    description: "Get current temperature for provided coordinates in celsius.",
    parameters: {
        type: "object",
        properties: {
            latitude: { type: "number" },
            longitude: { type: "number" }
        },
        required: ["latitude", "longitude"],
        additionalProperties: false
    },
    strict: true
}];

const input = [
    {
        role: "user",
        content: "What's the weather like in Paris today?"
    }
];

const response = await openai.responses.create({
    model: "gpt-4.1",
    input,
    tools,
});
```

* **Model decides to call function(s)** – model returns the **name** and **input arguments**.

response.output

```json
[{
    "type": "function_call",
    "id": "fc_12345xyz",
    "call_id": "call_12345xyz",
    "name": "get_weather",
    "arguments": "{\"latitude\":48.8566,\"longitude\":2.3522}"
}]
```

* **Execute function code** – parse the model's response and [handle function calls](/docs/guides/function-calling#handling-function-calls).

Step 3: Execute get\_weather function

```python
tool_call = response.output[0]
args = json.loads(tool_call.arguments)

result = get_weather(args["latitude"], args["longitude"])
```

```javascript
const toolCall = response.output[0];
const args = JSON.parse(toolCall.arguments);

const result = await getWeather(args.latitude, args.longitude);
```

* **Supply model with results** – so it can incorporate them into its final response.

Step 4: Supply result and call model again

```python
input_messages.append(tool_call)  # append model's function call message
input_messages.append({           # append result message
    "type": "function_call_output",
    "call_id": tool_call.call_id,
    "output": str(result)
})

response_2 = client.responses.create(
    model="gpt-4.1",
    input=input_messages,
    tools=tools,
)
print(response_2.output_text)
```

```javascript
input.push(toolCall); // append model's function call message
input.push({          // append result message
    type: "function_call_output",
    call_id: toolCall.call_id,
    output: result.toString()
});

const response2 = await openai.responses.create({
    model: "gpt-4.1",
    input,
    tools,
    store: true,
});

console.log(response2.output_text);
```

* **Model responds** – incorporating the result in its output.

response\_2.output\_text

```json
"The current temperature in Paris is 14°C (57.2°F)."
```
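
Steps 3 and 4 above handle a single call, but the same pattern extends to any number of `function_call` items. The sketch below is one possible way to do that, assuming the output items are plain dictionaries shaped like the `response.output` JSON shown earlier. The names `run_function_calls` and `registry`, and the stubbed `get_weather`, are illustrative assumptions rather than SDK features.

```python
import json


def get_weather(latitude, longitude):
    # Stub standing in for the real get_weather defined earlier,
    # so the sketch runs without a network call.
    return 14.0


# Hypothetical registry mapping tool names to local Python callables.
registry = {"get_weather": get_weather}


def run_function_calls(output_items, registry):
    """Execute every function_call item and build function_call_output messages."""
    results = []
    for item in output_items:
        if item.get("type") != "function_call":
            continue  # leave other output items untouched
        func = registry[item["name"]]
        args = json.loads(item["arguments"])  # arguments arrive as a JSON string
        result = func(**args)
        results.append({
            "type": "function_call_output",
            "call_id": item["call_id"],  # ties the result to the originating call
            "output": str(result),
        })
    return results


sample_output = [{
    "type": "function_call",
    "id": "fc_12345xyz",
    "call_id": "call_12345xyz",
    "name": "get_weather",
    "arguments": '{"latitude":48.8566,"longitude":2.3522}',
}]

messages = run_function_calls(sample_output, registry)
print(messages)
```

The returned messages would then be appended to the input list, after the model's own function call items, before calling the model again.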

Defining functions
------------------

Functions can be set in the `tools` parameter of each API request.

A function is defined by its schema, which informs the model what it does and what input arguments it expects. It comprises the following fields:

|Field|Description|
|---|---|
|type|This should always be `function`|
|name|The function's name (e.g. `get_weather`)|
|description|Details on when and how to use the function|
|parameters|JSON schema defining the function's input arguments|
|strict|Whether to enforce strict mode for the function call|

Take a look at this example or generate your own below (or in our [Playground](/playground)).

```json
{
    "type": "function",
    "name": "get_weather",
    "description": "Retrieves current weather for the given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and country e.g. Bogotá, Colombia"
            },
            "units": {
                "type": "string",
                "enum": [
                    "celsius",
                    "fahrenheit"
                ],
                "description": "Units the temperature will be returned in."
            }
        },
        "required": [
            "location",
            "units"
        ],
        "additionalProperties": false
    },
    "strict": true
}
```

|

|

736

|

+

|

|

737

|

+

Because the `parameters` are defined by a [JSON schema](https://json-schema.org/), you can leverage many of its rich features, such as property types, enums, descriptions, nested objects, and recursive objects.
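As a minimal sketch, the same definition can be written as a Python dict and collected into the list passed as `tools`. The `missing_fields` helper is hypothetical (not part of any SDK) and only checks that the five fields from the table are present.

```python
# Tool definition mirroring the JSON example, written as a Python dict.
get_weather_tool = {
    "type": "function",
    "name": "get_weather",
    "description": "Retrieves current weather for the given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and country e.g. Bogotá, Colombia",
            },
            "units": {
                "type": "string",
                "enum": ["celsius", "fahrenheit"],
                "description": "Units the temperature will be returned in.",
            },
        },
        "required": ["location", "units"],
        "additionalProperties": False,
    },
    "strict": True,
}

# The list you would pass as the `tools` parameter of a request.
tools = [get_weather_tool]


def missing_fields(tool):
    """Hypothetical helper: documented fields that are absent from a tool dict."""
    expected = ("type", "name", "description", "parameters", "strict")
    return [field for field in expected if field not in tool]


print(missing_fields(get_weather_tool))  # prints []
```

A check like this is cheap to run at startup, before any request is sent.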

|

|

738

|

+

|

|

739

|

+

### Best practices for defining functions

|

|

740

|

+

|

|

741

|

+

1. **Write clear and detailed function names, parameter descriptions, and instructions.**

|

|

742

|

+

|

|

743

|

+

* **Explicitly describe the purpose of the function and each parameter** (and its format), and what the output represents.

|

|

744

|

+

* **Use the system prompt to describe when (and when not) to use each function.** Generally, tell the model _exactly_ what to do.

|

|

745

|

+

* **Include examples and edge cases**, especially to rectify any recurring failures. (**Note:** Adding examples may hurt performance for [reasoning models](/docs/guides/reasoning).)

|

|

746

|

+

2. **Apply software engineering best practices.**

|

|

747

|

+

|

|

748

|

+

* **Make the functions obvious and intuitive**. ([principle of least surprise](https://en.wikipedia.org/wiki/Principle_of_least_astonishment))

|

|

749

|

+

* **Use enums** and object structure to make invalid states unrepresentable. (e.g. `toggle_light(on: bool, off: bool)` allows for invalid calls)

|

|

750

|

+

* **Pass the intern test.** Can an intern/human correctly use the function given nothing but what you gave the model? (If not, what questions do they ask you? Add the answers to the prompt.)

|

|

751

|

+

3. **Offload the burden from the model and use code where possible.**

|

|

752

|

+

|

|

753

|

+

* **Don't make the model fill arguments you already know.** For example, if you already have an `order_id` based on a previous menu, don't have an `order_id` param – instead, have no params `submit_refund()` and pass the `order_id` with code.

|

|

754

|

+

* **Combine functions that are always called in sequence.** For example, if you always call `mark_location()` after `query_location()`, just move the marking logic into the query function call.

|

|

755

|

+

4. **Keep the number of functions small for higher accuracy.**

|

|

756

|

+

|

|

757

|

+

* **Evaluate your performance** with different numbers of functions.

|

|

758

|

+

* **Aim for fewer than 20 functions** at any one time, though this is just a soft suggestion.

|

|

759

|

+

5. **Leverage OpenAI resources.**

|

|

760

|

+

|

|

761

|

+

* **Generate and iterate on function schemas** in the [Playground](/playground).

|

|

762

|

+

* **Consider [fine-tuning](https://platform.openai.com/docs/guides/fine-tuning) to increase function calling accuracy** for large numbers of functions or difficult tasks. ([cookbook](https://cookbook.openai.com/examples/fine_tuning_for_function_calling))
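Two of the practices above can be sketched in a few lines: replacing `toggle_light(on: bool, off: bool)` with a single enum parameter, and binding an already known `order_id` in code rather than asking the model for it. The parameter name `state`, the `order_123` value, and the body of `submit_refund` are assumptions made for illustration.

```python
from functools import partial

# One enum parameter instead of two booleans: "on and off at once"
# can no longer be expressed.
toggle_light_tool = {
    "type": "function",
    "name": "toggle_light",
    "description": "Switches the light on or off.",
    "parameters": {
        "type": "object",
        "properties": {"state": {"type": "string", "enum": ["on", "off"]}},
        "required": ["state"],
        "additionalProperties": False,
    },
    "strict": True,
}


def submit_refund(order_id):
    """Hypothetical application function; a real one would call your backend."""
    return f"refund submitted for order {order_id}"


# The tool exposed to the model takes no parameters at all.
submit_refund_tool = {
    "type": "function",
    "name": "submit_refund",
    "description": "Refunds the order the user is currently viewing.",
    "parameters": {
        "type": "object",
        "properties": {},
        "required": [],
        "additionalProperties": False,
    },
    "strict": True,
}

current_order_id = "order_123"  # hypothetical value taken from earlier application state
handlers = {"submit_refund": partial(submit_refund, order_id=current_order_id)}

print(handlers["submit_refund"]())  # prints refund submitted for order order_123
```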

|

|

763

|

+

|

|

764

|

+

### Token Usage

|

|

765

|

+

|

|

766

|

+

Under the hood, functions are injected into the system message in a syntax the model has been trained on. This means functions count against the model's context limit and are billed as input tokens. If you run into token limits, we suggest limiting the number of functions or the length of the descriptions you provide for function parameters.

|

|

767

|

+

|

|

768

|

+

It is also possible to use [fine-tuning](/docs/guides/fine-tuning#fine-tuning-examples) to reduce the number of tokens used if you have many functions defined in your tools specification.
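To see how much a description contributes, one rough approach is to compare the serialized size of two tool lists. This is only a proxy: the character count of the JSON is not the billed token count, and the syntax actually injected into the system message is not shown here. The helper names are hypothetical.

```python
import json


def serialized_size(tools):
    """Character count of the compact JSON form of a tools list (a proxy, not a token count)."""
    return len(json.dumps(tools, separators=(",", ":"), ensure_ascii=False))


def weather_tool(description):
    """Build the get_weather tool with a caller-supplied description."""
    return {
        "type": "function",
        "name": "get_weather",
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
            "additionalProperties": False,
        },
        "strict": True,
    }


concise = [weather_tool("Retrieves current weather for the given location.")]
verbose = [weather_tool("Retrieves current weather for the given location. " * 5)]

print(serialized_size(concise) < serialized_size(verbose))  # prints True
```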

|

|

769

|

+

|

|

770

|

+

Handling function calls

|

|

771

|

+

-----------------------

|

|

772

|

+

|

|

773

|

+

When the model calls a function, you must execute it and return the result. Since model responses can include zero, one, or multiple calls, it is best practice to assume there are several.

|

|

774

|

+

|

|

775

|

+

The response `output` array contains one entry with `type` set to `function_call` for each call. Each such entry has a `call_id` (used later to submit the function result), a `name`, and JSON-encoded `arguments`.


|

|

776

|

+

|

|

777

|

+

Sample response with multiple function calls

|

|

778

|

+

|

|

779

|

+

```json

|

|

780

|

+

[

|

|

781

|

+

{

|

|

782

|

+

"id": "fc_12345xyz",

|

|

783

|

+

"call_id": "call_12345xyz",

|

|

784

|

+

"type": "function_call",

|

|

785

|

+

"name": "get_weather",

|

|

786

|

+

"arguments": "{\"location\":\"Paris, France\"}"

|

|

787

|

+

},

|

|

788

|

+

{

|

|

789

|

+

"id": "fc_67890abc",

|

|

790

|

+

"call_id": "call_67890abc",

|

|

791

|

+

"type": "function_call",

|

|

792

|

+

"name": "get_weather",

|

|

793

|

+

"arguments": "{\"location\":\"Bogotá, Colombia\"}"

|

|

794

|

+

},

|

|

795

|

+

{

|

|

796

|

+

"id": "fc_99999def",

|

|

797

|

+

"call_id": "call_99999def",

|

|

798

|

+

"type": "function_call",

|

|

799

|

+

"name": "send_email",

|

|

800

|

+

"arguments": "{\"to\":\"bob@email.com\",\"body\":\"Hi bob\"}"

|

|

801

|

+

}

|

|

802

|

+

]

|

|

803

|

+

```

|

|

804

|

+

|

|

805

|

+

Execute function calls and append results

|

|

806

|

+

|

|

807

|

+

```python

|

|

808

|

+

for tool_call in response.output:

|

|

809

|

+

    if tool_call.type != "function_call":

|

|

810

|

+

        continue

|

|

811

|

+

|

|

812

|

+

    name = tool_call.name

|

|

813

|

+

    args = json.loads(tool_call.arguments)

|

|

814

|

+

|

|

815

|

+

    result = call_function(name, args)

|

|

816

|

+

    input_messages.append({

|

|

817

|

+

        "type": "function_call_output",

|

|

818

|

+

        "call_id": tool_call.call_id,

|

|

819

|

+

        "output": str(result)

|

|

820

|

+

    })

|

|

821

|

+

```

|

|

822

|

+

|

|

823

|

+

```javascript

|

|

824

|

+

for (const toolCall of response.output) {

|

|

825

|

+

if (toolCall.type !== "function_call") {

|

|

826

|

+

continue;

|

|

827

|

+

}

|

|

828

|

+

|

|

829

|

+

const name = toolCall.name;

|

|

830

|

+

const args = JSON.parse(toolCall.arguments);

|

|

831

|

+

|

|

832

|

+

const result = await callFunction(name, args);

|

|

833

|

+

input.push({

|

|

834

|

+

type: "function_call_output",

|

|

835

|

+

call_id: toolCall.call_id,

|

|

836

|

+

output: result.toString()

|

|

837

|

+

});

|

|

838

|

+

}

|

|

839

|

+

```

|

|

840

|

+

|

|

841

|

+

In the example above, we have a hypothetical `call_function` to route each call. Here’s a possible implementation:

|

|

842

|

+

|

|

843

|

+

Execute function calls and append results

|

|

844

|

+

|

|

845

|

+

```python

|

|

846

|

+

def call_function(name, args):

|

|

847

|

+

    if name == "get_weather":

|

|

848

|

+

        return get_weather(**args)

|

|

849

|

+

    if name == "send_email":

|

|

850

|

+

        return send_email(**args)

|

|

851

|

+

```

|

|

852

|

+

|

|

853

|

+

```javascript

|

|

854

|

+

const callFunction = async (name, args) => {

|

|

855

|

+

if (name === "get_weather") {

|

|

856

|

+

return getWeather(args.location, args.units);

|

|

857

|

+

}

|

|

858

|

+

if (name === "send_email") {

|

|

859

|

+

return sendEmail(args.to, args.body);

|

|

860

|

+

}

|

|

861

|

+

};

|

|

862

|

+

```
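The routing and the result loop can also be combined into one self-contained sketch. It assumes a dispatch table instead of chained `if` statements, uses stub implementations of `get_weather` and `send_email`, and represents output entries as plain dicts (an SDK may return objects with attributes instead).

```python
import json


def get_weather(location, units="celsius"):
    """Stub standing in for a real weather lookup."""
    return {"location": location, "temperature": 15, "units": units}


def send_email(to, body):
    """Stub standing in for a real email sender; returns nothing."""
    return None


FUNCTIONS = {"get_weather": get_weather, "send_email": send_email}


def call_function(name, args):
    """Route a call by name and report unknown names instead of returning None silently."""
    handler = FUNCTIONS.get(name)
    if handler is None:
        return f"error: unknown function {name!r}"
    return handler(**args)


# Entries shaped like the sample `output` array, as plain dicts.
output = [
    {"type": "function_call", "call_id": "call_12345xyz", "name": "get_weather",
     "arguments": "{\"location\":\"Paris, France\"}"},
    {"type": "function_call", "call_id": "call_99999def", "name": "send_email",
     "arguments": "{\"to\":\"bob@email.com\",\"body\":\"Hi bob\"}"},
]

input_messages = []
for item in output:
    if item["type"] != "function_call":
        continue
    result = call_function(item["name"], json.loads(item["arguments"]))
    input_messages.append({
        "type": "function_call_output",
        "call_id": item["call_id"],
        "output": str(result),
    })

print([m["call_id"] for m in input_messages])  # prints ['call_12345xyz', 'call_99999def']
```

Returning an error string for an unknown name gives the model something to react to on the next turn, which is one possible design choice among several.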

|

|

863

|

+

|

|

864

|

+

### Formatting results

|

|

865

|

+

|

|

866

|

+

A result must be a string, but the format is up to you (JSON, error codes, plain text, etc.). The model will interpret that string as needed.

|

|

867

|

+

|

|

868

|

+

If your function has no return value (e.g. `send_email`), simply return a string to indicate success or failure (e.g. `"success"`).
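One way to apply this is a small formatter that turns any return value into a string before it is appended as `output`. The `format_result` helper below is hypothetical; it serializes structured values as JSON, which is an assumption about what reads well to the model rather than a requirement.

```python
import json


def format_result(result):
    """Convert a function's return value into the string sent back to the model."""
    if result is None:
        return "success"  # functions with no return value still report an outcome
    if isinstance(result, str):
        return result
    return json.dumps(result, ensure_ascii=False)


print(format_result(None))  # prints success
print(format_result({"temperature": 15, "units": "celsius"}))
# prints {"temperature": 15, "units": "celsius"}
```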

|

|

869

|

+

|

|

870

|

+

### Incorporating results into response

|

|

871

|

+

|

|

872

|

+

After appending the results to your `input`, you can send them back to the model to get a final response.

|

|

873

|

+

|

|

874

|

+

Send results back to model

|

|

875

|

+

|

|

876

|

+

```python

|

|

877

|

+

response = client.responses.create(

|

|

878

|

+

model="gpt-4.1",

|

|

879

|

+

input=input_messages,

|

|

880

|

+

tools=tools,

|

|

881

|

+

)

|

|

882

|

+

```

|

|

883

|

+

|

|

884

|

+

```javascript

|

|

885

|

+

const response = await openai.responses.create({

|

|

886

|

+

model: "gpt-4.1",

|

|

887

|

+

input,

|

|

888

|

+

tools,

|

|

889

|

+

});

|

|

890

|

+

```

|

|

891

|

+

|

|

892

|

+

Final response

|

|

893

|

+

|

|

894

|

+

```json

|

|

895

|

+

"It's about 15°C in Paris, 18°C in Bogotá, and I've sent that email to Bob."

|

|

896

|

+

```

|

|

897

|

+

|

|

898

|

+

Additional configurations

|

|

899

|

+

-------------------------

|

|

900

|

+

|

|

901

|

+

### Tool choice

|

|

902

|

+

|

|

903

|

+

By default, the model determines when and how many tools to use. You can force specific behavior with the `tool_choice` parameter.

|

|

904

|

+

|

|

905

|

+

1. **Auto:** (_Default_) Call zero, one, or multiple functions. `tool_choice: "auto"`

|

|

906

|

+

2. **Required:** Call one or more functions. `tool_choice: "required"`

|

|

907

|

+

|

|

908

|

+

3. **Forced Function:** Call exactly one specific function. `tool_choice: {"type": "function", "name": "get_weather"}`

|

|

909

|

+

|

|

910

|

+

|

|

911

|

+

|

|

912

|

+

You can also set `tool_choice` to `"none"` to imitate the behavior of passing no functions.
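The four settings can be sketched as a helper that builds request keyword arguments. `request_kwargs` and its `mode` argument are hypothetical names; only the `tool_choice` values themselves come from the list above.

```python
def request_kwargs(tools, mode="auto", function_name=None):
    """Hypothetical helper: build keyword arguments that include a tool_choice value."""
    if mode == "function":
        if function_name is None:
            raise ValueError("function_name is required when mode is 'function'")
        tool_choice = {"type": "function", "name": function_name}
    elif mode in ("auto", "required", "none"):
        tool_choice = mode
    else:
        raise ValueError(f"unsupported mode: {mode}")
    return {"tools": tools, "tool_choice": tool_choice}


tools = [{"type": "function", "name": "get_weather"}]  # abbreviated definition

print(request_kwargs(tools, "required")["tool_choice"])  # prints required
print(request_kwargs(tools, "function", "get_weather")["tool_choice"])
# prints {'type': 'function', 'name': 'get_weather'}
```

The returned dict could then be unpacked into a request, for example `client.responses.create(model="gpt-4.1", input=input_messages, **request_kwargs(tools, "required"))`.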

|

|

913

|

+

|

|

914

|

+

### Parallel function calling

|

|

915

|

+

|

|

916

|

+

The model may choose to call multiple functions in a single turn. You can prevent this by setting `parallel_tool_calls` to `false`, which ensures exactly zero or one tool is called.

|

|

917

|

+

|

|

918

|

+

**Note:** Currently, if you are using a fine-tuned model and the model calls multiple functions in one turn, then [strict mode](/docs/guides/function-calling#strict-mode) will be disabled for those calls.

|

|

919

|

+

|

|

920

|

+

**Note for `gpt-4.1-nano-2025-04-14`:** This snapshot of `gpt-4.1-nano` can sometimes include multiple tool calls for the same tool if parallel tool calls are enabled. It is recommended to disable this feature when using this nano snapshot.
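A short sketch of how this might look in application code: the option is passed with the request, and a defensive count confirms that a turn contains at most one function call. The `count_function_calls` helper and the dict-shaped output entries are assumptions for illustration.

```python
# Option to include with the request so that at most one tool is called per turn.
request_options = {"parallel_tool_calls": False}


def count_function_calls(output):
    """Number of entries in an `output` list whose type is function_call."""
    return sum(1 for item in output if item.get("type") == "function_call")


# Illustrative output: one non-call entry followed by a single function call.
output = [
    {"type": "message"},
    {"type": "function_call", "call_id": "call_12345xyz", "name": "get_weather",
     "arguments": "{\"location\":\"Paris, France\"}"},
]

calls = count_function_calls(output)
print(calls)  # prints 1
print(calls <= 1)  # prints True
```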

|

|

921

|

+

|

|

922

|

+

### Strict mode

|

|

923

|

+

|

|

924

|

+

Setting `strict` to `true` will ensure function calls reliably adhere to the function schema, instead of being best effort. We recommend always enabling strict mode.

|

|

925

|

+

|

|

926

|

+

Under the hood, strict mode works by leveraging our [structured outputs](/docs/guides/structured-outputs) feature and therefore introduces a couple of requirements:

|

|

927

|

+

|

|

928

|

+

1. `additionalProperties` must be set to `false` for each object in the `parameters`.

|

|

929

|

+

2. All fields in `properties` must be marked as `required`.

|

|

930

|

+

|

|

931

|

+

You can denote optional fields by adding `null` as a `type` option (see example below).

|

|

932

|

+

|

|

933

|

+

Strict mode enabled

|

|

934

|

+

|

|

935

|

+

```json

|

|

936

|

+

{

|

|

937

|

+

"type": "function",

|

|

938

|

+

"name": "get_weather",

|

|

939

|

+

"description": "Retrieves current weather for the given location.",

|

|

940

|

+

"strict": true,

|

|

941

|

+

"parameters": {

|

|

942

|

+

"type": "object",

|

|

943

|

+

"properties": {

|

|

944

|

+

"location": {

|

|

945

|

+

"type": "string",

|

|

946

|

+

"description": "City and country e.g. Bogotá, Colombia"

|

|

947

|

+

},

|

|

948

|

+

"units": {

|

|

949

|

+

"type": ["string", "null"],

|

|

950

|

+

"enum": ["celsius", "fahrenheit"],

|

|

951

|

+

"description": "Units the temperature will be returned in."

|

|

952

|

+

}

|

|

953

|

+

},

|

|

954

|

+

"required": ["location", "units"],

|

|

955

|

+

"additionalProperties": false

|

|

956

|

+

}

|

|

957

|

+

}

|

|

958

|

+

```
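With a nullable field like `units`, the application decides what `null` means. A minimal sketch, assuming a hypothetical default of `celsius` and a hypothetical `parse_weather_args` helper:

```python
import json

DEFAULT_UNITS = "celsius"  # hypothetical application default


def parse_weather_args(arguments):
    """Decode JSON-encoded arguments and replace a null `units` with the default."""
    args = json.loads(arguments)
    if args.get("units") is None:
        args["units"] = DEFAULT_UNITS
    return args


print(parse_weather_args('{"location": "Paris, France", "units": null}'))
# prints {'location': 'Paris, France', 'units': 'celsius'}
```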

|

|

959

|

+

|

|

960

|

+

Strict mode disabled

|

|

961

|

+

|

|

962

|

+

```json

|

|

963

|

+

{

|

|

964

|

+

"type": "function",

|

|

965

|

+

"name": "get_weather",

|

|

966

|

+

"description": "Retrieves current weather for the given location.",

|

|

967

|

+

"parameters": {

|

|

968

|

+

"type": "object",

|

|

969

|

+

"properties": {

|

|

970

|

+

"location": {

|

|

971

|

+

"type": "string",

|

|

972

|

+

"description": "City and country e.g. Bogotá, Colombia"

|

|

973

|

+

},

|

|

974

|

+

"units": {

|

|

975

|

+

"type": "string",

|

|

976

|

+

"enum": ["celsius", "fahrenheit"],

|

|

977

|

+

"description": "Units the temperature will be returned in."

|

|

978

|

+

}

|

|

979

|

+

},

|

|

980

|

+

"required": ["location"]

|

|

981

|

+

}

|

|

982

|

+

}

|

|

983

|

+

```
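The two requirements can be checked mechanically before a schema is sent. The sketch below uses a hypothetical `strict_violations` helper that walks nested objects and array items; it does not cover other JSON schema constructs, so treat it as a starting point rather than a full validator.

```python
def strict_violations(schema, path="parameters"):
    """List the ways a schema breaks the two strict-mode requirements."""
    problems = []
    declared = schema.get("type")
    types = declared if isinstance(declared, list) else [declared]
    if "object" in types:
        if schema.get("additionalProperties") is not False:
            problems.append(f"{path}: additionalProperties must be false")
        properties = schema.get("properties", {})
        missing = sorted(set(properties) - set(schema.get("required", [])))
        if missing:
            problems.append(f"{path}: not marked required: {', '.join(missing)}")
        for name, sub_schema in properties.items():
            problems.extend(strict_violations(sub_schema, f"{path}.{name}"))
    if "array" in types and isinstance(schema.get("items"), dict):
        problems.extend(strict_violations(schema["items"], f"{path}[]"))
    return problems


strict_parameters = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "units": {"type": ["string", "null"], "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["location", "units"],
    "additionalProperties": False,
}

loose_parameters = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "units": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["location"],
}

print(strict_violations(strict_parameters))  # prints []
print(len(strict_violations(loose_parameters)))  # prints 2
```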

|

|

984

|

+

|

|

985

|

+

All schemas generated in the [Playground](/playground) have strict mode enabled.

|

|

986

|

+

|

|

987

|

+

While we recommend you enable strict mode, it has a few limitations:

|

|

988

|

+

|

|

989

|

+

1. Some features of JSON schema are not supported. (See [supported schemas](/docs/guides/structured-outputs?context=with_parse#supported-schemas).)

|

|

990

|

+

|

|

991

|

+

Specifically for fine-tuned models:

|

|

992

|

+

|

|

993

|

+

1. Schemas undergo additional processing on the first request (and are then cached). If your schemas vary from request to request, this may result in higher latencies.

|

|

994

|

+

2. Schemas are cached for performance, and are not eligible for [zero data retention](/docs/models#how-we-use-your-data).