llms-py 2.0.27__py3-none-any.whl → 2.0.29__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- llms/llms.json +11 -1

- llms/main.py +194 -6

- llms/ui/Analytics.mjs +12 -4

- llms/ui/ChatPrompt.mjs +46 -6

- llms/ui/Main.mjs +18 -3

- llms/ui/ModelSelector.mjs +20 -2

- llms/ui/SystemPromptSelector.mjs +21 -1

- llms/ui/ai.mjs +1 -1

- llms/ui/app.css +16 -70

- llms/ui/lib/servicestack-vue.mjs +4 -4

- llms/ui/typography.css +4 -2

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/METADATA +32 -9

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/RECORD +17 -17

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/WHEEL +0 -0

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/entry_points.txt +0 -0

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/licenses/LICENSE +0 -0

- {llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/top_level.txt +0 -0

llms/ui/typography.css

CHANGED

```diff
@@ -94,16 +94,18 @@
     border-left-style: solid;
 }
 
-.dark .prose :
+.dark .prose :where(td):not(:where([class~="not-prose"] *)) {
     color: rgb(209 213 219); /*text-gray-300*/
 }
 .dark .prose :where(h1,h2,h3,h4,h5,h6,th):not(:where([class~="not-prose"] *)) {
     color: rgb(243 244 246); /*text-gray-100*/
 }
-.dark .prose :where(code):not(:where([class~="not-prose"] *))
+.dark .prose :where(code):not(:where([class~="not-prose"] *)),
+.dark .message em {
     background-color: rgb(30 58 138); /*text-blue-900*/
     color: rgb(243 244 246); /*text-gray-100*/
 }
+
 .dark .prose :where(pre code):not(:where([class~="not-prose"] *)) {
     background-color: unset;
 }
```

{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/METADATA

CHANGED

```diff
@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: llms-py
-Version: 2.0.
+Version: 2.0.29
 Summary: A lightweight CLI tool and OpenAI-compatible server for querying multiple Large Language Model (LLM) providers
 Home-page: https://github.com/ServiceStack/llms
 Author: ServiceStack
```

@@ -50,7 +50,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

50

50

|

|

|

51

51

|

## Features

|

|

52

52

|

|

|

53

|

-

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency

|

|

53

|

+

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency (Pillow optional)

|

|

54

54

|

- **Multi-Provider Support**: OpenRouter, Ollama, Anthropic, Google, OpenAI, Grok, Groq, Qwen, Z.ai, Mistral

|

|

55

55

|

- **OpenAI-Compatible API**: Works with any client that supports OpenAI's chat completion API

|

|

56

56

|

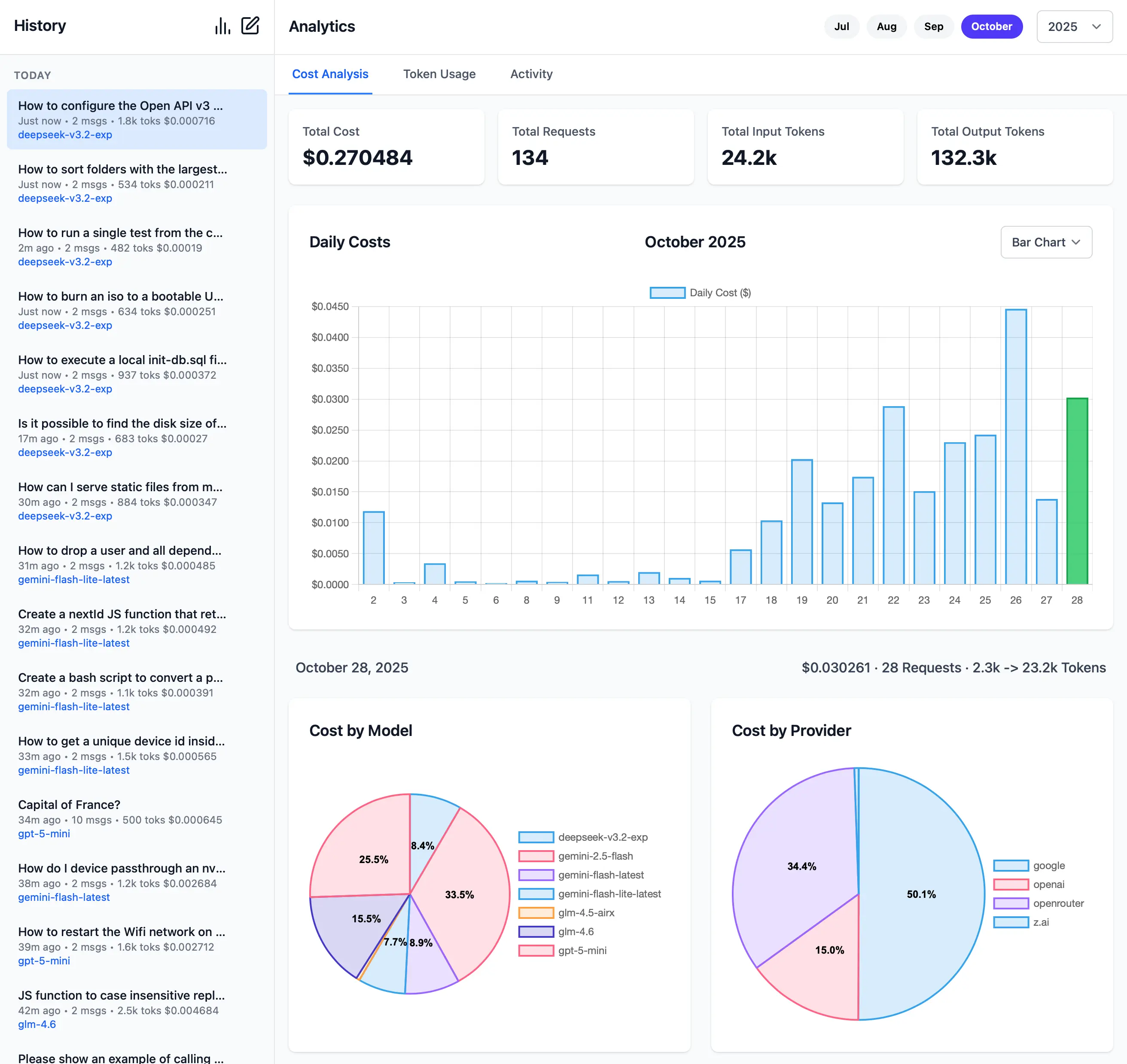

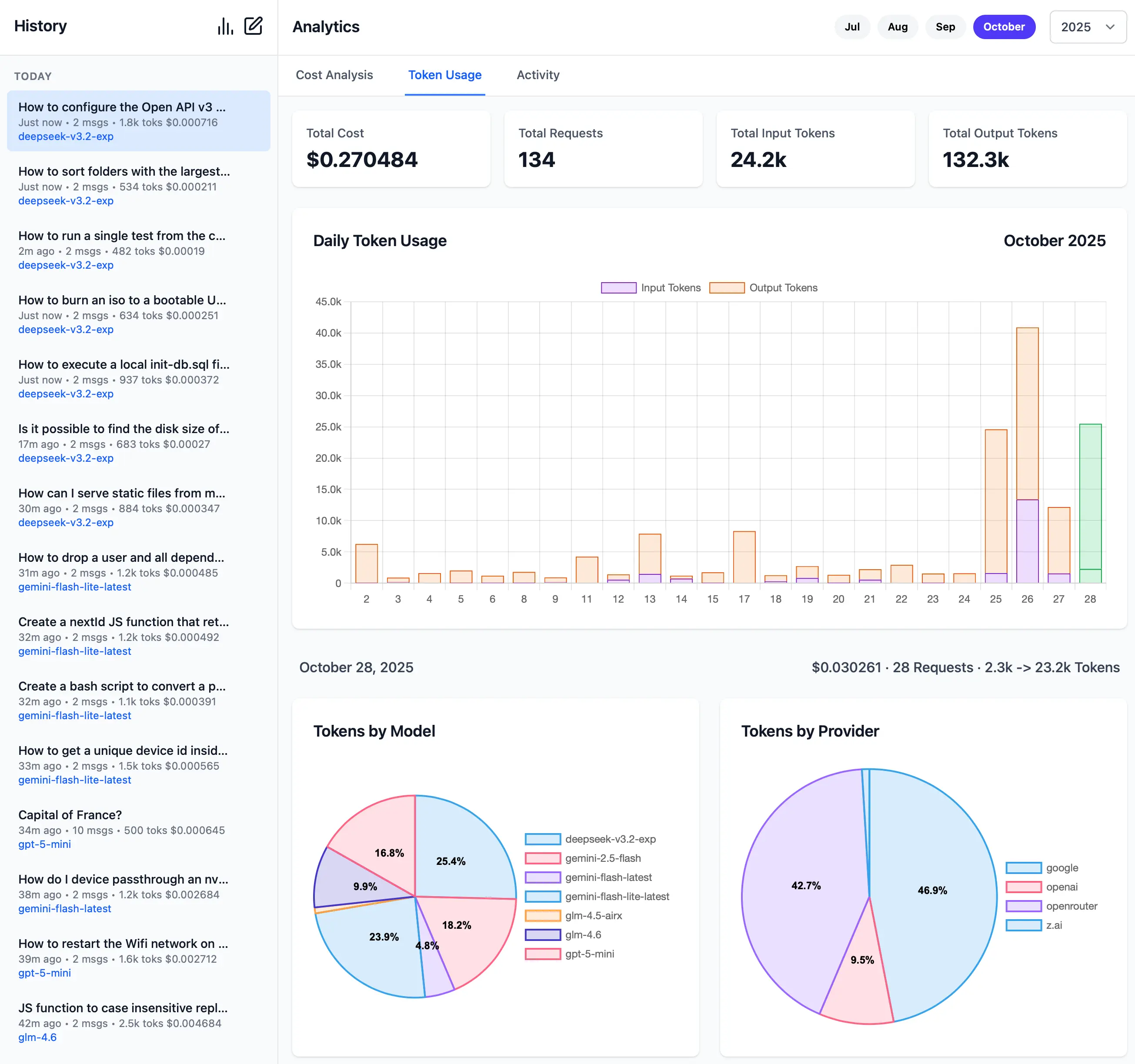

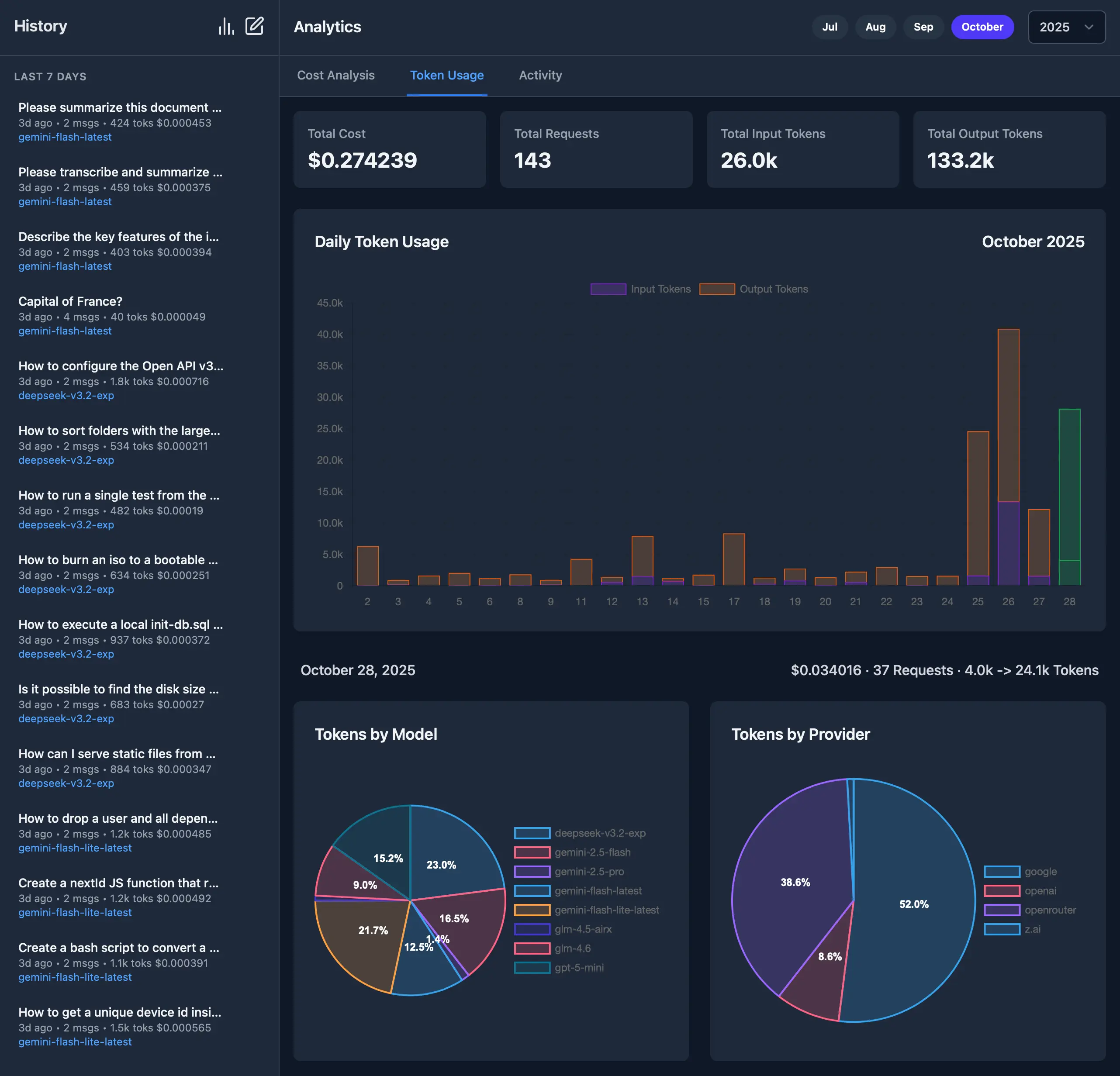

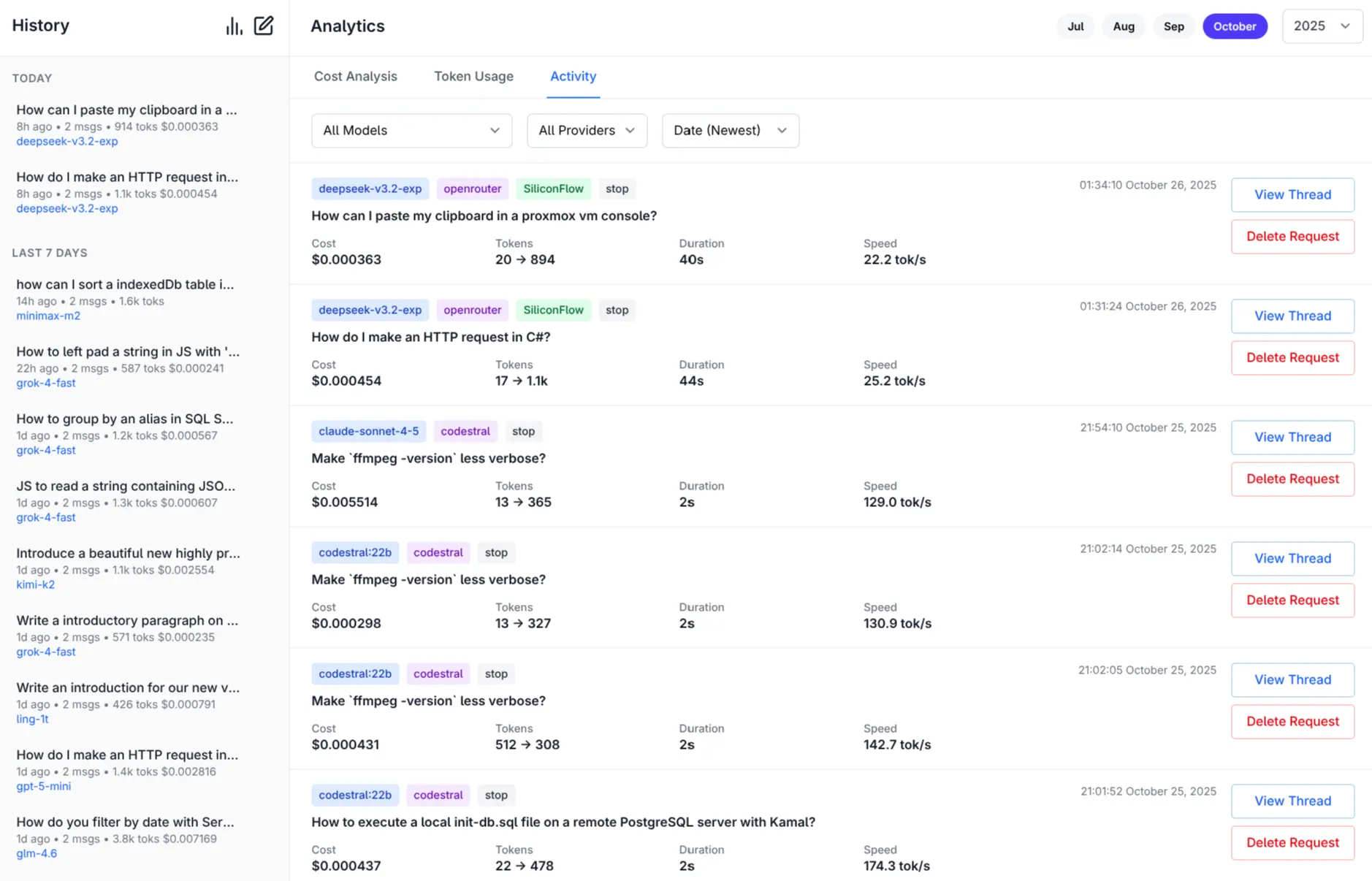

- **Built-in Analytics**: Built-in analytics UI to visualize costs, requests, and token usage

|

|

```diff
@@ -58,6 +58,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)
 - **CLI Interface**: Simple command-line interface for quick interactions
 - **Server Mode**: Run an OpenAI-compatible HTTP server at `http://localhost:{PORT}/v1/chat/completions`
 - **Image Support**: Process images through vision-capable models
+- Auto resizes and converts to webp if exceeds configured limits
 - **Audio Support**: Process audio through audio-capable models
 - **Custom Chat Templates**: Configurable chat completion request templates for different modalities
 - **Auto-Discovery**: Automatically discover available Ollama models
```
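The new sub-bullet under **Image Support** says oversized images are resized and converted to WebP. The Python sketch below shows one way that logic could work. It is not llms.py's implementation. The two limits are hypothetical stand-ins for whatever the `convert` section of `llms.json` actually defines:

- `max_length`: the longest side, in pixels.
- `max_bytes`: the encoded size, in bytes.

Pillow is imported lazily inside `to_webp` because it is an optional dependency.

```python
import io
from typing import Tuple


def fit_within(width: int, height: int, max_length: int) -> Tuple[int, int]:
    """Scale (width, height) so the longest side is at most max_length, keeping the aspect ratio."""
    longest = max(width, height)
    if longest <= max_length:
        return width, height
    scale = max_length / longest
    # Clamp to at least 1 pixel so very thin images never collapse to 0.
    return max(1, round(width * scale)), max(1, round(height * scale))


def needs_conversion(width: int, height: int, num_bytes: int,
                     max_length: int, max_bytes: int) -> bool:
    """Hypothetical rule: convert when either the pixel limit or the byte limit is exceeded."""
    return max(width, height) > max_length or num_bytes > max_bytes


def to_webp(data: bytes, max_length: int, quality: int = 80) -> bytes:
    """Downscale (if needed) and re-encode an image as WebP. Requires the optional Pillow package."""
    from PIL import Image  # lazy import: only needed when a conversion actually happens

    with Image.open(io.BytesIO(data)) as img:
        if img.mode not in ("RGB", "RGBA"):
            img = img.convert("RGBA")  # WebP encodes RGB/RGBA; normalise palette, CMYK, etc.
        size = fit_within(img.width, img.height, max_length)
        if size != img.size:
            img = img.resize(size)
        out = io.BytesIO()
        img.save(out, format="WEBP", quality=quality)
        return out.getvalue()
```

For example, `fit_within(4000, 2000, 2048)` returns `(2048, 1024)`, which keeps the 2:1 aspect ratio. Keeping the sizing arithmetic separate from the Pillow call means the decision logic still works, and can be tested, when Pillow is not installed.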

@@ -68,23 +69,27 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

68

69

|

|

|

69

70

|

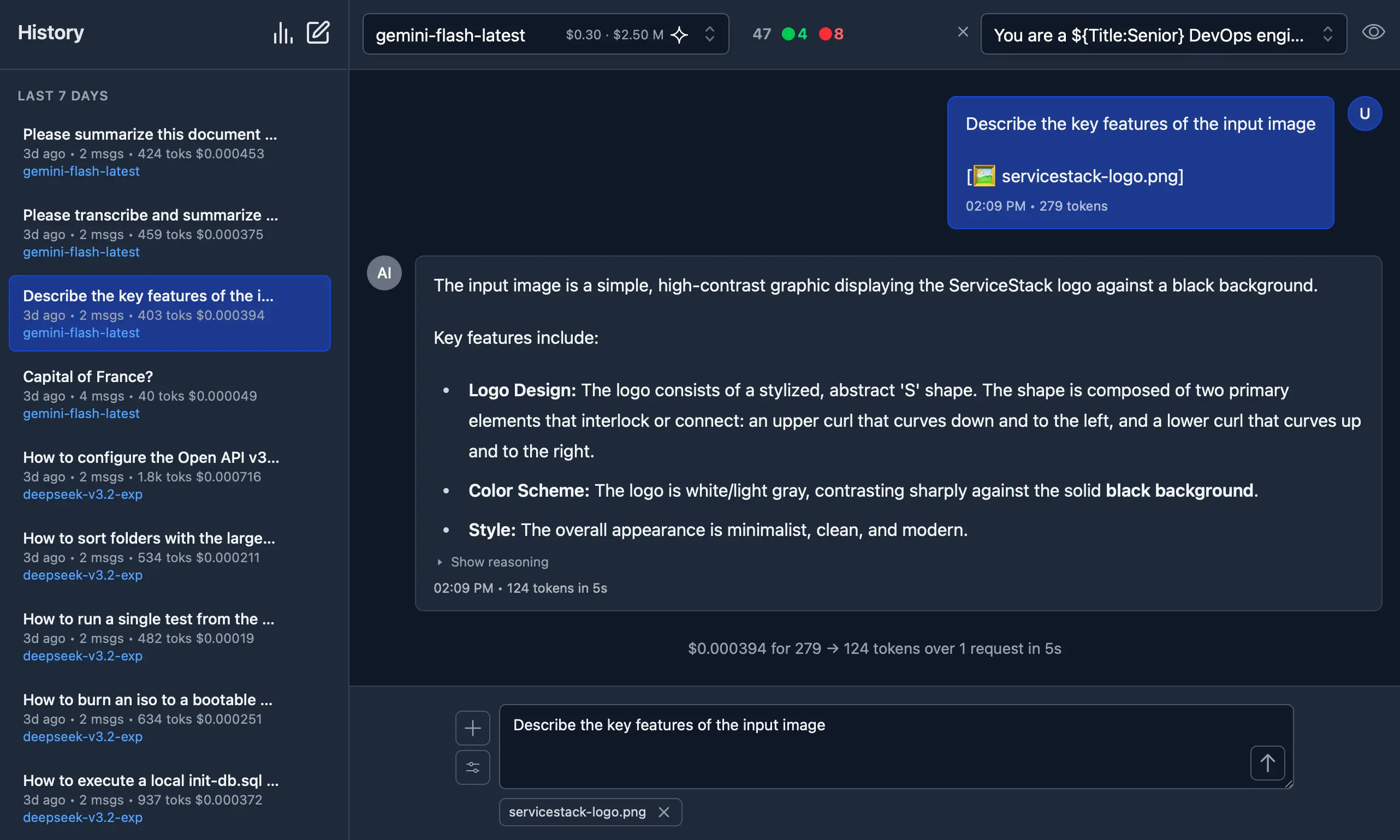

Access all your local all remote LLMs with a single ChatGPT-like UI:

|

|

70

71

|

|

|

71

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

72

73

|

|

|

73

|

-

|

|

74

|

+

#### Dark Mode Support

|

|

75

|

+

|

|

76

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

77

|

+

|

|

78

|

+

#### Monthly Costs Analysis

|

|

74

79

|

|

|

75

80

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

76

81

|

|

|

77

|

-

|

|

82

|

+

#### Monthly Token Usage (Dark Mode)

|

|

78

83

|

|

|

79

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

84

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

80

85

|

|

|

81

|

-

|

|

86

|

+

#### Monthly Activity Log

|

|

82

87

|

|

|

83

88

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

84

89

|

|

|

85

90

|

[More Features and Screenshots](https://servicestack.net/posts/llms-py-ui).

|

|

86

91

|

|

|

87

|

-

|

|

92

|

+

#### Check Provider Reliability and Response Times

|

|

88

93

|

|

|

89

94

|

Check the status of configured providers to test if they're configured correctly, reachable and what their response times is for the simplest `1+1=` request:

|

|

90

95

|

|

|
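The provider check described in this hunk sends the simplest possible `1+1=` prompt to each configured provider. It then reports whether the call succeeded and how long it took.

Below is a rough sketch of that measurement. The `send` callable is a hypothetical stand-in for whatever actually posts the `check` request template to a provider. Treating any exception as a failed check is also an assumption; llms.py's own status logic may differ.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    provider: str
    ok: bool
    elapsed_ms: float
    error: Optional[str] = None


def check_provider(provider: str, send: Callable[[str, str], str]) -> CheckResult:
    """Send the trivial "1+1=" prompt once and time the round trip.

    `send(provider, prompt)` is a hypothetical callable that returns the reply text;
    any exception it raises marks the provider as misconfigured or unreachable.
    """
    start = time.perf_counter()
    try:
        send(provider, "1+1=")
        ok, error = True, None
    except Exception as exc:  # e.g. connection refused, or a 401 from a bad API key
        ok, error = False, str(exc)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return CheckResult(provider, ok, elapsed_ms, error)


if __name__ == "__main__":
    def fake_send(provider: str, prompt: str) -> str:
        if provider == "offline":
            raise ConnectionError("connection refused")
        return "2"

    for name in ("ollama", "offline"):
        r = check_provider(name, fake_send)
        status = "OK" if r.ok else f"FAIL ({r.error})"
        print(f"{r.provider}: {status} in {r.elapsed_ms:.1f} ms")
```

`time.perf_counter` is used because it is monotonic and high-resolution, which suits short latency measurements better than wall-clock time.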

```diff
@@ -230,6 +235,22 @@ See [DOCKER.md](DOCKER.md) for detailed instructions on customizing configuratio
 
 llms.py supports optional GitHub OAuth authentication to secure your web UI and API endpoints. When enabled, users must sign in with their GitHub account before accessing the application.
 
+```json
+{
+    "auth": {
+        "enabled": true,
+        "github": {
+            "client_id": "$GITHUB_CLIENT_ID",
+            "client_secret": "$GITHUB_CLIENT_SECRET",
+            "redirect_uri": "http://localhost:8000/auth/github/callback",
+            "restrict_to": "$GITHUB_USERS"
+        }
+    }
+}
+```
+
+`GITHUB_USERS` is optional but if set will only allow access to the specified users.
+
 See [GITHUB_OAUTH_SETUP.md](GITHUB_OAUTH_SETUP.md) for detailed setup instructions.
 
 ## Configuration
```
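The `auth` block added in this hunk uses `$GITHUB_CLIENT_ID`-style placeholders. Its optional `restrict_to` entry limits sign-in to specific GitHub users.

A minimal sketch of how such a config could be resolved, assuming:

- Any string value starting with `$` is read from an environment variable of that name.
- `GITHUB_USERS` holds a comma- or space-separated list of logins.

Both rules and the helper names `resolve_env` and `is_allowed` are illustrative assumptions, not llms.py's code. `GITHUB_OAUTH_SETUP.md` documents the real behavior.

```python
import os
import re
from typing import Any, Mapping, Optional


def resolve_env(value: Any, env: Mapping[str, str] = os.environ) -> Any:
    """Recursively replace strings of the form "$NAME" with env["NAME"] ("" if unset)."""
    if isinstance(value, dict):
        return {k: resolve_env(v, env) for k, v in value.items()}
    if isinstance(value, list):
        return [resolve_env(v, env) for v in value]
    if isinstance(value, str) and value.startswith("$"):
        return env.get(value[1:], "")
    return value  # booleans, numbers and plain strings pass through unchanged


def is_allowed(login: str, restrict_to: Optional[str]) -> bool:
    """An empty or unset restrict_to allows every GitHub user; otherwise the login must be listed."""
    if not restrict_to:
        return True
    # GitHub logins are case-insensitive, so compare lower-cased names.
    allowed = {u.lower() for u in re.split(r"[,\s]+", restrict_to) if u}
    return login.lower() in allowed
```

With `GITHUB_USERS="alice, Bob"`, only `alice` and `bob` (in any letter case) would pass `is_allowed`. Leaving the variable unset resolves `restrict_to` to `""`, which lets any authenticated GitHub user in, matching the README's "optional but if set" wording.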

```diff
@@ -243,6 +264,8 @@ The configuration file [llms.json](llms/llms.json) is saved to `~/.llms/llms.jso
 - `audio`: Default chat completion request template for audio prompts
 - `file`: Default chat completion request template for file prompts
 - `check`: Check request template for testing provider connectivity
+- `limits`: Override Request size limits
+- `convert`: Max image size and length limits and auto conversion settings
 
 ### Providers
 
```
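The two configuration entries added here, `limits` and `convert`, let `llms.json` override built-in request-size and image-conversion defaults. A common way to apply such overrides is a recursive merge: keys the user supplies win, and unspecified keys keep their defaults.

The sketch below assumes that behavior. The key names in the usage (`client_max_size`, `image`, `max_length`, `format`) are hypothetical placeholders, not the actual `llms.json` schema.

```python
import copy
from typing import Any, Dict


def merge_overrides(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict: nested dicts are merged recursively, any other value is replaced."""
    merged = copy.deepcopy(defaults)  # never mutate the built-in defaults
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_overrides(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


if __name__ == "__main__":
    # Hypothetical defaults and user overrides, for illustration only.
    defaults = {
        "limits": {"client_max_size": 20 * 1024 * 1024},
        "convert": {"image": {"max_length": 2048, "format": "webp"}},
    }
    user = {"convert": {"image": {"max_length": 1024}}}
    print(merge_overrides(defaults, user))
```

A deep merge means a user who wants to change one limit only has to write that one key, rather than copying the whole section.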

```diff
@@ -1211,7 +1234,7 @@ This shows:
 - `llms/main.py` - Main script with CLI and server functionality
 - `llms/llms.json` - Default configuration file
 - `llms/ui.json` - UI configuration file
-- `requirements.txt` - Python dependencies
+- `requirements.txt` - Python dependencies, required: `aiohttp`, optional: `Pillow`
 
 ### Provider Classes
 
```
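The updated dependency line makes `aiohttp` required and `Pillow` optional. A common Python pattern for an optional dependency is to detect it once at startup and degrade gracefully when it is missing.

The sketch assumes images are simply passed through unchanged without Pillow, which may not match what llms.py does. `process_image` and its `convert` argument are hypothetical names.

```python
import importlib.util
from typing import Callable


def has_module(name: str) -> bool:
    """True if a top-level module can be imported; find_spec checks without importing it."""
    return importlib.util.find_spec(name) is not None


# Pillow is installed under the import name "PIL".
HAS_PILLOW = has_module("PIL")


def process_image(data: bytes, convert: Callable[[bytes], bytes]) -> bytes:
    """Run the Pillow-based converter when available, otherwise return the bytes unchanged."""
    return convert(data) if HAS_PILLOW else data
```

Checking with `importlib.util.find_spec` avoids paying Pillow's import cost on startup paths that never touch images. The actual `from PIL import Image` can then happen lazily where it is needed.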

{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/RECORD

CHANGED

```diff
@@ -1,16 +1,16 @@
 llms/__init__.py,sha256=Mk6eHi13yoUxLlzhwfZ6A1IjsfSQt9ShhOdbLXTvffU,53
 llms/__main__.py,sha256=hrBulHIt3lmPm1BCyAEVtB6DQ0Hvc3gnIddhHCmJasg,151
 llms/index.html,sha256=_pkjdzCX95HTf19LgE4gMh6tLittcnf7M_jL2hSEbbM,3250
-llms/llms.json,sha256=
-llms/main.py,sha256=
+llms/llms.json,sha256=oTMlVM3nYeooQgsPbIGN2LQ-1aq0u1v38sjmxHPiGAc,41331
+llms/main.py,sha256=BLS3wUocCGDMUxraahVBXYbFwLnr0WmeFUqhwk-MtVE,90456
 llms/ui.json,sha256=iBOmpNeD5-o8AgUa51ymS-KemovJ7bm9J1fnL0nf8jk,134025
-llms/ui/Analytics.mjs,sha256=
+llms/ui/Analytics.mjs,sha256=r5il9Yvh2lje23e4zbGVUqDu2CG75mtyx84MeMVmHpU,72055
 llms/ui/App.mjs,sha256=95pBXexTt3tgaX-Toh5oS0PdFxrVt3ZU8wu8Ywoz6FI,648
 llms/ui/Avatar.mjs,sha256=TgouwV9bN-Ou1Tf2zCDtVaRiUB21TXZZPFCTlFL-xxQ,3387
 llms/ui/Brand.mjs,sha256=kJn7O4Oo3tbZi87Ifbbcq7AZvhfUqbn_ZcXcyv_WI1A,2075
-llms/ui/ChatPrompt.mjs,sha256=
-llms/ui/Main.mjs,sha256=
-llms/ui/ModelSelector.mjs,sha256=
+llms/ui/ChatPrompt.mjs,sha256=9VXtXz4vHKLujZOC_yRk0XRNP0L1OTtBNOXeEOTmI-M,26941
+llms/ui/Main.mjs,sha256=T_5CmGGDAMc-9tk2hmX6UTUWZ7sDt-A_G22utLndB0Y,42926
+llms/ui/ModelSelector.mjs,sha256=MignHFpyeF1_K6tcHqLLCmQgNGQf60gyiP6lzApQzJs,3112
 llms/ui/OAuthSignIn.mjs,sha256=IdA9Tbswlh74a_-9e9YulOpqLfRpodRLGfCZ9sTZ5jU,4879
 llms/ui/ProviderIcon.mjs,sha256=HTjlgtXEpekn8iNN_S0uswbbvL0iGb20N15-_lXdojk,9054
 llms/ui/ProviderStatus.mjs,sha256=v5_Qx5kX-JbnJHD6Or5THiePzcX3Wf9ODcS4Q-kfQbM,6084
```

```diff
@@ -19,15 +19,15 @@ llms/ui/SettingsDialog.mjs,sha256=vyqLOrACBICwT8qQ10oJAMOYeA1phrAyz93mZygn-9Y,19
 llms/ui/Sidebar.mjs,sha256=lVhVxC74UeQmBdhv_MxUmbdBJCfjWY0P6rtx1yJHywg,10932
 llms/ui/SignIn.mjs,sha256=df3b-7L3ZIneDGbJWUk93K9RGo40gVeuR5StzT1ZH9g,2324
 llms/ui/SystemPromptEditor.mjs,sha256=PffkNPV6hGbm1QZBKPI7yvWPZSBL7qla0d-JEJ4mxYo,1466
-llms/ui/SystemPromptSelector.mjs,sha256=
+llms/ui/SystemPromptSelector.mjs,sha256=A0qZHJGNwsX53X-DVn8ddBGdgn32Gd7wf1rRc1LL0v8,3769
 llms/ui/Welcome.mjs,sha256=r9j7unF9CF3k7gEQBMRMVsa2oSjgHGNn46Oa5l5BwlY,950
-llms/ui/ai.mjs,sha256=
-llms/ui/app.css,sha256=
+llms/ui/ai.mjs,sha256=XroCFHkmtsthJ5LtR5EY2ZoVekRzAEuWMYEEIzcTRFs,4768
+llms/ui/app.css,sha256=0QcB6usfv9o5UieT27lPEBami38akKjhanL3hudnMH8,108326
 llms/ui/fav.svg,sha256=_R6MFeXl6wBFT0lqcUxYQIDWgm246YH_3hSTW0oO8qw,734
 llms/ui/markdown.mjs,sha256=uWSyBZZ8a76Dkt53q6CJzxg7Gkx7uayX089td3Srv8w,6388
 llms/ui/tailwind.input.css,sha256=QInTVDpCR89OTzRo9AePdAa-MX3i66RkhNOfa4_7UAg,12086
 llms/ui/threadStore.mjs,sha256=VeGXAuUlA9-Ie9ZzOsay6InKBK_ewWFK6aTRmLTporg,16543
-llms/ui/typography.css,sha256=
+llms/ui/typography.css,sha256=6o7pbMIamRVlm2GfzSStpcOG4T5eFCK_WcQ3RIHKAsU,19587
 llms/ui/utils.mjs,sha256=cYrP17JwpQk7lLqTWNgVTOD_ZZAovbWnx2QSvKzeB24,5333
 llms/ui/lib/chart.js,sha256=dx8FdDX0Rv6OZtZjr9FQh5h-twFsKjfnb-FvFlQ--cU,196176
 llms/ui/lib/charts.mjs,sha256=MNym9qE_2eoH6M7_8Gj9i6e6-Y3b7zw9UQWCUHRF6x0,1088
```

```diff
@@ -36,13 +36,13 @@ llms/ui/lib/highlight.min.mjs,sha256=sG7wq8bF-IKkfie7S4QSyh5DdHBRf0NqQxMOEH8-MT0
 llms/ui/lib/idb.min.mjs,sha256=CeTXyV4I_pB5vnibvJuyXdMs0iVF2ZL0Z7cdm3w_QaI,3853
 llms/ui/lib/marked.min.mjs,sha256=QRHb_VZugcBJRD2EP6gYlVFEsJw5C2fQ8ImMf_pA2_s,39488
 llms/ui/lib/servicestack-client.mjs,sha256=UVafVbzhJ_0N2lzv7rlzIbzwnWpoqXxGk3N3FSKgOOc,54534
-llms/ui/lib/servicestack-vue.mjs,sha256=
+llms/ui/lib/servicestack-vue.mjs,sha256=EU3cnlQuTzsmPvoK50JFN98t4AO80vVNA-CS2kaS0nI,215162
 llms/ui/lib/vue-router.min.mjs,sha256=fR30GHoXI1u81zyZ26YEU105pZgbbAKSXbpnzFKIxls,30418
 llms/ui/lib/vue.min.mjs,sha256=iXh97m5hotl0eFllb3aoasQTImvp7mQoRJ_0HoxmZkw,163811
 llms/ui/lib/vue.mjs,sha256=dS8LKOG01t9CvZ04i0tbFXHqFXOO_Ha4NmM3BytjQAs,537071
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
+llms_py-2.0.29.dist-info/licenses/LICENSE,sha256=bus9cuAOWeYqBk2OuhSABVV1P4z7hgrEFISpyda_H5w,1532
+llms_py-2.0.29.dist-info/METADATA,sha256=5fJrs4oI-YvVi3yA9Bx34j5K3e2cPXiqxiKnhsyBJ7E,37076
+llms_py-2.0.29.dist-info/WHEEL,sha256=_zCd3N1l69ArxyTb8rzEoP9TpbYXkqRFSNOD5OuxnTs,91
+llms_py-2.0.29.dist-info/entry_points.txt,sha256=WswyE7PfnkZMIxboC-MS6flBD6wm-CYU7JSUnMhqMfM,40
+llms_py-2.0.29.dist-info/top_level.txt,sha256=gC7hk9BKSeog8gyg-EM_g2gxm1mKHwFRfK-10BxOsa4,5
+llms_py-2.0.29.dist-info/RECORD,,
```
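Each RECORD line has the form `path,sha256=<digest>,<size>`. Under the wheel's installed-files specification:

- The digest is the URL-safe base64 encoding of the SHA-256 hash, with trailing `=` padding stripped.
- The size is the file length in bytes.
- RECORD lists itself with empty hash and size fields, hence `RECORD,,`.

The sketch below recomputes an entry so an unpacked wheel can be checked against its listing. It treats each line as a simple comma-separated triple and ignores the CSV quoting that real RECORD files use for paths containing commas.

```python
import base64
import hashlib
from pathlib import Path


def record_hash(data: bytes) -> str:
    """SHA-256 digest in RECORD format: urlsafe base64 with '=' padding removed."""
    digest = hashlib.sha256(data).digest()
    return "sha256=" + base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def record_line(path: str, data: bytes) -> str:
    """Build one RECORD line: path,hash,size-in-bytes."""
    return f"{path},{record_hash(data)},{len(data)}"


def verify(root: Path, line: str) -> bool:
    """Check one RECORD line against the matching file under an unpacked wheel directory."""
    path, hash_field, size_field = line.rsplit(",", 2)
    if not hash_field:  # the RECORD file itself carries no hash or size
        return True
    data = (root / path).read_bytes()
    return hash_field == record_hash(data) and int(size_field) == len(data)
```

For instance, `record_line("a.txt", b"")` yields `a.txt,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0`, the well-known SHA-256 of empty input in this encoding.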

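{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/WHEEL

File without changes

{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/entry_points.txt

File without changes

{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/licenses/LICENSE

File without changes

{llms_py-2.0.27.dist-info → llms_py-2.0.29.dist-info}/top_level.txt

File without changes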