llms-py 2.0.19__py3-none-any.whl → 2.0.20__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- llms/llms.json +0 -1

- llms/main.py +1 -13

- llms/ui/ai.mjs +1 -1

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/METADATA +14 -5

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/RECORD +9 -9

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/WHEEL +0 -0

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/entry_points.txt +0 -0

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/licenses/LICENSE +0 -0

- {llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/top_level.txt +0 -0

llms/llms.json

CHANGED

@@ -261,7 +261,6 @@
 "nova-micro": "amazon/nova-micro-v1",
 "nova-lite": "amazon/nova-lite-v1",
 "nova-pro": "amazon/nova-pro-v1",
-"claude-opus-4-1": "anthropic/claude-opus-4.1",
 "claude-sonnet-4-5": "anthropic/claude-sonnet-4.5",
 "claude-sonnet-4-0": "anthropic/claude-sonnet-4",
 "gpt-5": "openai/gpt-5",
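Removing the `claude-opus-4-1` line drops that alias from the model map. The sketch below shows the effect, assuming the entries in this hunk behave as a plain alias-to-model-id dictionary. The enclosing provider key lies outside the hunk, and `resolve_model` is a hypothetical helper, not an llms.py function.

```python
from typing import Dict, Optional

# Hypothetical alias map mirroring the 2.0.20 entries visible in the hunk.
MODELS_2_0_20: Dict[str, str] = {
    "nova-micro": "amazon/nova-micro-v1",
    "nova-lite": "amazon/nova-lite-v1",
    "nova-pro": "amazon/nova-pro-v1",
    "claude-sonnet-4-5": "anthropic/claude-sonnet-4.5",
    "claude-sonnet-4-0": "anthropic/claude-sonnet-4",
    "gpt-5": "openai/gpt-5",
}


def resolve_model(alias: str, models: Dict[str, str]) -> Optional[str]:
    """Hypothetical lookup: return the provider model id for an alias, or None."""
    return models.get(alias)


if __name__ == "__main__":
    print(resolve_model("claude-sonnet-4-5", MODELS_2_0_20))  # anthropic/claude-sonnet-4.5
    print(resolve_model("claude-opus-4-1", MODELS_2_0_20))    # None: alias removed in 2.0.20
```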

llms/main.py

CHANGED

@@ -22,7 +22,7 @@ from aiohttp import web
 from pathlib import Path
 from importlib import resources # Py≥3.9 (pip install importlib_resources for 3.7/3.8)
 
-VERSION = "2.0.
+VERSION = "2.0.20"
 _ROOT = None
 g_config_path = None
 g_ui_path = None

@@ -938,12 +938,6 @@ async def save_default_config(config_path):
     config_json = await save_text(github_url("llms.json"), config_path)
     g_config = json.loads(config_json)
 
-async def update_llms():
-    """
-    Update llms.py from GitHub
-    """
-    await save_text(github_url("llms.py"), __file__)
-
 def provider_status():
     enabled = list(g_handlers.keys())
     disabled = [provider for provider in g_config['providers'].keys() if provider not in enabled]

@@ -1291,7 +1285,6 @@ def main():
     parser.add_argument('--root', default=None, help='Change root directory for UI files', metavar='PATH')
     parser.add_argument('--logprefix', default="", help='Prefix used in log messages', metavar='PREFIX')
     parser.add_argument('--verbose', action='store_true', help='Verbose output')
-    parser.add_argument('--update', action='store_true', help='Update to latest version')
 
     cli_args, extra_args = parser.parse_known_args()
     if cli_args.verbose:

@@ -1617,11 +1610,6 @@ def main():
         print(f"\nDefault model set to: {default_model}")
         exit(0)
 
-    if cli_args.update:
-        asyncio.run(update_llms())
-        print(f"{__file__} updated")
-        exit(0)
-
     if cli_args.chat is not None or cli_args.image is not None or cli_args.audio is not None or cli_args.file is not None or len(extra_args) > 0:
         try:
             chat = g_config['defaults']['text']
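With `--update` removed from the parser, 2.0.20 no longer has a self-update path. Because `main()` calls `parse_known_args()`, an unrecognised option is not rejected; it is returned in `extra_args`. The sketch rebuilds only the three options visible in this diff (the real parser defines more, including `--chat`, `--image`, `--audio` and `--file`). It assumes no remaining option name begins with `--update`, since argparse would otherwise treat it as an abbreviation. Under those assumptions a leftover `--update` would make `len(extra_args) > 0` true, so the command would appear to take the chat branch shown in the last hunk.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Only the options visible in the 2.0.20 diff context; the real parser has more.
    parser = argparse.ArgumentParser(prog="llms")
    parser.add_argument('--root', default=None, help='Change root directory for UI files', metavar='PATH')
    parser.add_argument('--logprefix', default="", help='Prefix used in log messages', metavar='PREFIX')
    parser.add_argument('--verbose', action='store_true', help='Verbose output')
    return parser


# parse_known_args() returns (namespace, leftovers) instead of erroring on unknown flags.
cli_args, extra_args = build_parser().parse_known_args(['--verbose', '--update'])

print(cli_args.verbose)     # True
print(extra_args)           # ['--update']
print(len(extra_args) > 0)  # True: the condition that gates the chat branch
```

Presumably, upgrading now goes through the package manager like any other wheel, for example `pip install --upgrade llms-py`.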

llms/ui/ai.mjs

CHANGED

{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/METADATA

CHANGED

@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: llms-py
-Version: 2.0.
+Version: 2.0.20
 Summary: A lightweight CLI tool and OpenAI-compatible server for querying multiple Large Language Model (LLM) providers
 Home-page: https://github.com/ServiceStack/llms
 Author: ServiceStack
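This release bumps both the METADATA `Version` field and `VERSION` in `main.py` to 2.0.20. A minimal standard-library sketch for checking which release is installed, assuming the distribution name `llms-py` from the `Name:` field and purely numeric dotted versions (pre-release suffixes such as `rc1` are not handled). `installed_version` and `as_tuple` are hypothetical helpers.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def installed_version(dist: str = "llms-py") -> Optional[str]:
    """Return the installed distribution version, or None if it is not installed."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def as_tuple(v: str) -> Tuple[int, ...]:
    # Compare numerically: as plain strings, "2.0.9" would sort above "2.0.20".
    return tuple(int(part) for part in v.split("."))


if __name__ == "__main__":
    v = installed_version()
    print(v)
    if v is not None:
        print(as_tuple(v) >= as_tuple("2.0.20"))
```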

@@ -40,7 +40,7 @@ Dynamic: requires-python
 
 # llms.py
 
-Lightweight CLI and
+Lightweight CLI, API and ChatGPT-like alternative to Open WebUI for accessing multiple LLMs, entirely offline, with all data kept private in browser storage.
 
 Configure additional providers and models in [llms.json](llms/llms.json)
 - Mix and match local models with models from different API providers

@@ -53,6 +53,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

53

53

|

- **Lightweight**: Single [llms.py](llms.py) Python file with single `aiohttp` dependency

|

|

54

54

|

- **Multi-Provider Support**: OpenRouter, Ollama, Anthropic, Google, OpenAI, Grok, Groq, Qwen, Z.ai, Mistral

|

|

55

55

|

- **OpenAI-Compatible API**: Works with any client that supports OpenAI's chat completion API

|

|

56

|

+

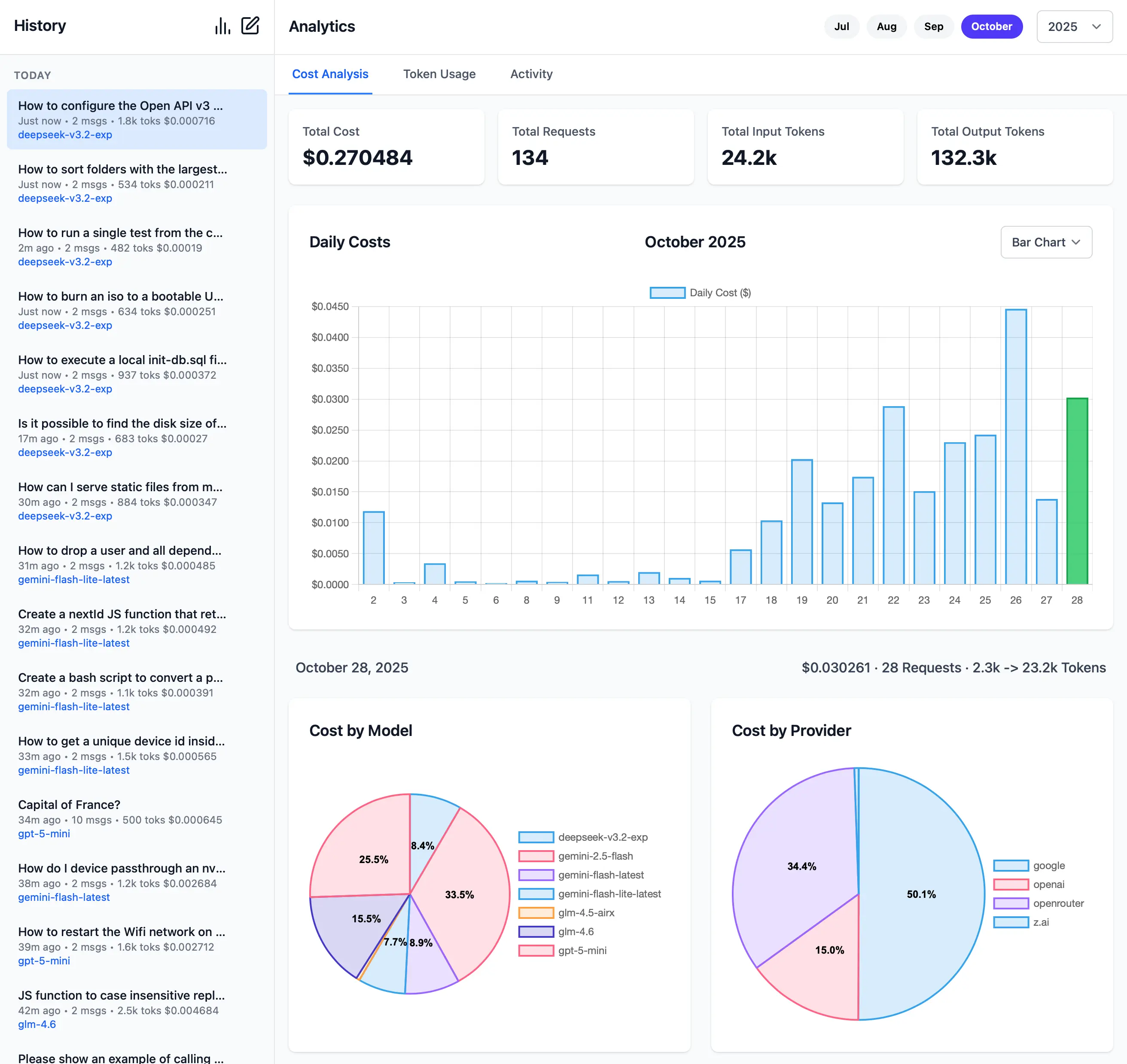

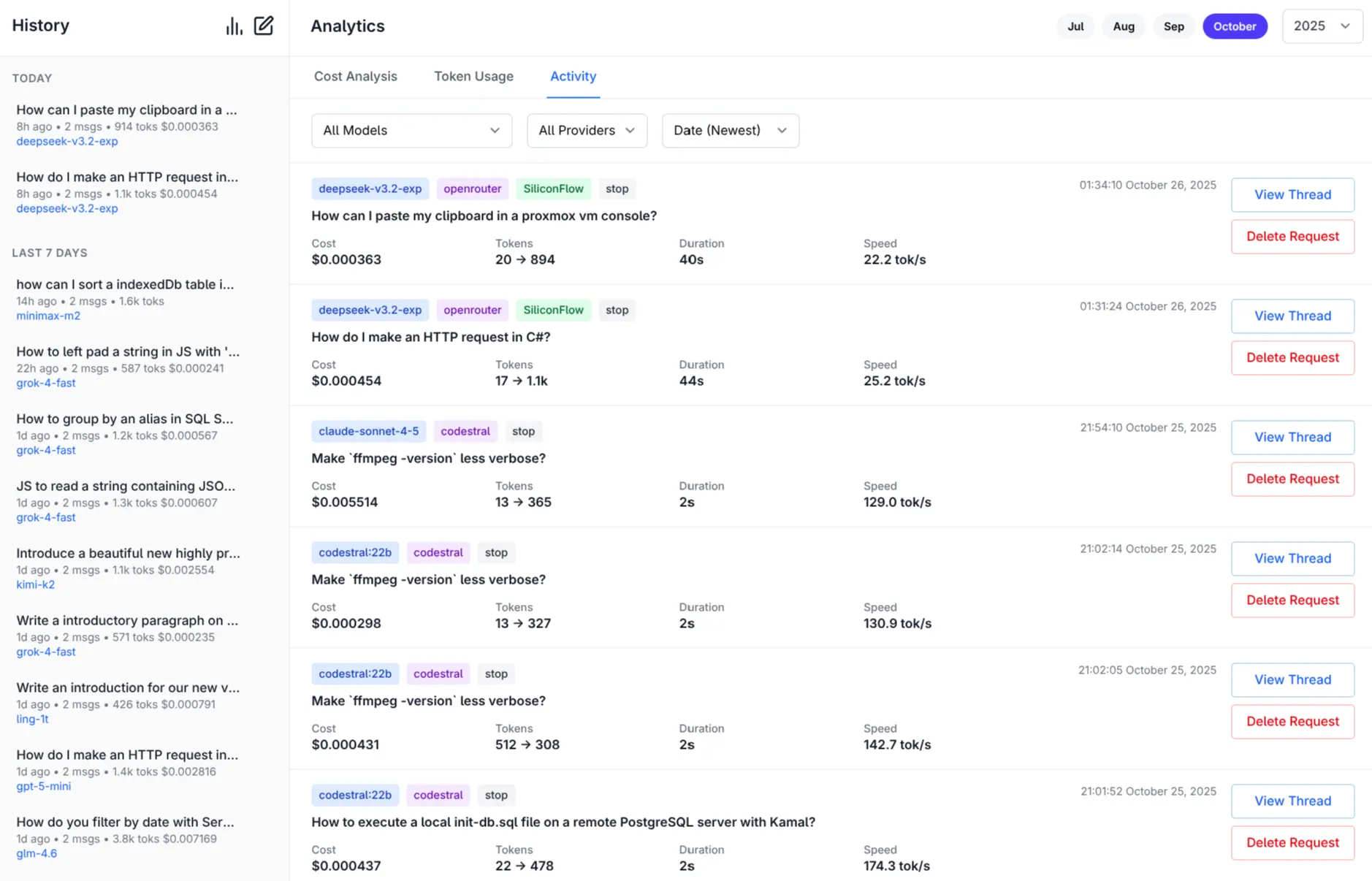

- **Built-in Analytics**: Built-in analytics UI to visualize costs, requests, and token usage

|

|

56

57

|

- **Configuration Management**: Easy provider enable/disable and configuration management

|

|

57

58

|

- **CLI Interface**: Simple command-line interface for quick interactions

|

|

58

59

|

- **Server Mode**: Run an OpenAI-compatible HTTP server at `http://localhost:{PORT}/v1/chat/completions`

|

|
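The **Server Mode** bullet describes an OpenAI-compatible endpoint. A minimal standard-library client sketch follows. It assumes the server is already running on a port you chose (8000 below is an arbitrary placeholder), that the `nova-pro` alias from `llms.json` is enabled, and that responses follow OpenAI's chat completion shape (`choices[0].message.content`). `build_chat_request` and `send` are hypothetical helpers.

```python
import json
import urllib.request


def build_chat_request(port: int, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to the OpenAI-compatible chat completions endpoint."""
    url = f"http://localhost:{port}/v1/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    # Needs a running server; returns the first choice's message text.
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(8000, "nova-pro", "Say hello")  # port and model are placeholders
    print(req.get_method(), req.full_url)
    # print(send(req))  # uncomment once the server is running
```

Keeping request construction separate from `send` lets the payload be inspected without a live server.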

@@ -65,11 +66,19 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

65

66

|

|

|

66

67

|

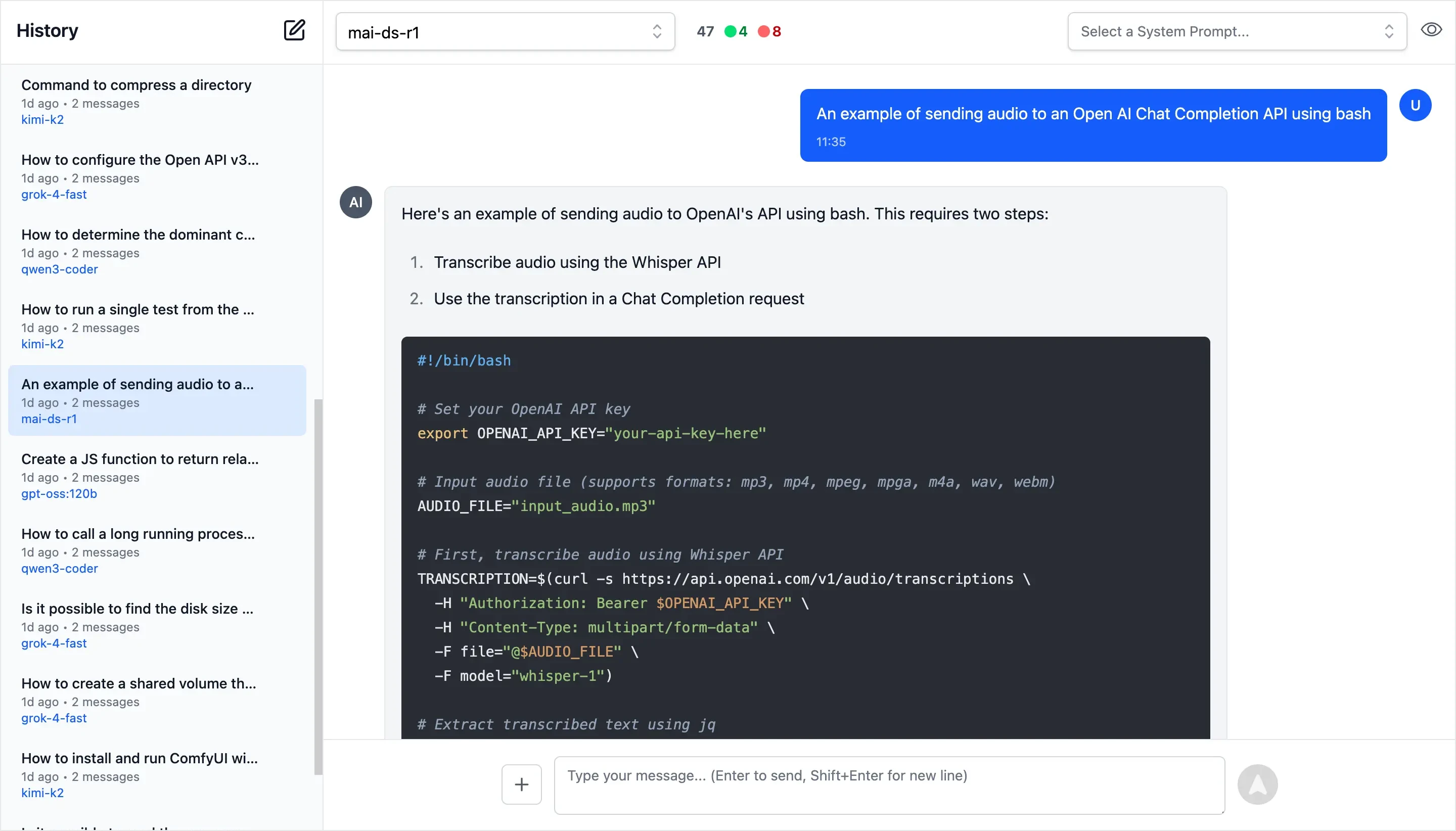

## llms.py UI

|

|

67

68

|

|

|

68

|

-

|

|

69

|

+

Access all your local all remote LLMs with a single ChatGPT-like UI:

|

|

69

70

|

|

|

70

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

71

72

|

|

|

72

|

-

|

|

73

|

+

**Monthly Costs Analysis**

|

|

74

|

+

|

|

75

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

76

|

+

|

|

77

|

+

**Monthly Activity Log**

|

|

78

|

+

|

|

79

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

80

|

+

|

|

81

|

+

[More Features and Screenshots](https://servicestack.net/posts/llms-py-ui).

|

|

73

82

|

|

|

74

83

|

## Installation

|

|

75

84

|

|

|

{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/RECORD

CHANGED

@@ -1,8 +1,8 @@
 llms/__init__.py,sha256=Mk6eHi13yoUxLlzhwfZ6A1IjsfSQt9ShhOdbLXTvffU,53
 llms/__main__.py,sha256=hrBulHIt3lmPm1BCyAEVtB6DQ0Hvc3gnIddhHCmJasg,151
 llms/index.html,sha256=OA9mRmgh-dQrPqb0Z2Jv-cwEZ3YLPRxcWUN7ASjxO8s,2658
-llms/llms.json,sha256=
-llms/main.py,sha256=
+llms/llms.json,sha256=zMSbAMOhWjZDgXpVZOZpjYQs2k-h41yKHGhv_cphuTE,40780
+llms/main.py,sha256=mskL0eRqLtCXNES9GldKofitGWI0cI8S6QuC0Aw2Hpc,71037
 llms/ui.json,sha256=iBOmpNeD5-o8AgUa51ymS-KemovJ7bm9J1fnL0nf8jk,134025
 llms/ui/Analytics.mjs,sha256=mAS5AUQjpnEIMyzGzOGE6fZxwxoVyq5QCitYQSSCEpQ,69151
 llms/ui/App.mjs,sha256=hXtUjaL3GrcIHieEK3BzIG72OVzrorBBS4RkE1DOGc4,439

@@ -20,7 +20,7 @@ llms/ui/SignIn.mjs,sha256=df3b-7L3ZIneDGbJWUk93K9RGo40gVeuR5StzT1ZH9g,2324
 llms/ui/SystemPromptEditor.mjs,sha256=2CyIUvkIubqYPyIp5zC6_I8CMxvYINuYNjDxvMz4VRU,1265
 llms/ui/SystemPromptSelector.mjs,sha256=AuEtRwUf_RkGgene3nVA9bw8AeMb-b5_6ZLJCTWA8KQ,3051
 llms/ui/Welcome.mjs,sha256=QFAxN7sjWlhMvOIJCmHjNFCQcvpM_T-b4ze1ld9Hj7I,912
-llms/ui/ai.mjs,sha256=
+llms/ui/ai.mjs,sha256=1YxowGP9pBOwUkKAgMi7M5fd9XFVdNsIAQidCEfi6xw,2346
 llms/ui/app.css,sha256=e81FHQ-K7TlS7Cr2x_CCHqrvmVvg9I-m0InLQHRT_Dg,98992
 llms/ui/fav.svg,sha256=_R6MFeXl6wBFT0lqcUxYQIDWgm246YH_3hSTW0oO8qw,734
 llms/ui/markdown.mjs,sha256=O5UspOeD8-E23rxOLWcS4eyy2YejMbPwszCYteVtuoU,6221

@@ -28,9 +28,9 @@ llms/ui/tailwind.input.css,sha256=yo_3A50uyiVSUHUWeqAMorXMhCWpZoE5lTO6OJIFlYg,11
 llms/ui/threadStore.mjs,sha256=JKimOl-9c4p9qQ9L93tZZktmKwuzpiudXiWb4N9Ca3U,15557
 llms/ui/typography.css,sha256=Z5Fe2IQWnh7bu1CMXniYt0SkaN2fXOFlOuniXUW8oGM,19325
 llms/ui/utils.mjs,sha256=cYrP17JwpQk7lLqTWNgVTOD_ZZAovbWnx2QSvKzeB24,5333
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
-llms_py-2.0.
+llms_py-2.0.20.dist-info/licenses/LICENSE,sha256=rRryrddGfVftpde-rmAZpW0R8IJihqJ8t8wpfDXoKiQ,1549
+llms_py-2.0.20.dist-info/METADATA,sha256=u2zKuP5T-1i96EQBnzvv7NZuDJYshgLzF1fN84p_C10,28330
+llms_py-2.0.20.dist-info/WHEEL,sha256=_zCd3N1l69ArxyTb8rzEoP9TpbYXkqRFSNOD5OuxnTs,91
+llms_py-2.0.20.dist-info/entry_points.txt,sha256=WswyE7PfnkZMIxboC-MS6flBD6wm-CYU7JSUnMhqMfM,40
+llms_py-2.0.20.dist-info/top_level.txt,sha256=gC7hk9BKSeog8gyg-EM_g2gxm1mKHwFRfK-10BxOsa4,5
+llms_py-2.0.20.dist-info/RECORD,,
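Each RECORD line has the form `path,sha256=<digest>,<size>`. In the wheel format the digest is the URL-safe base64 encoding of the SHA-256 hash with trailing `=` padding stripped, and the size is the file length in bytes. RECORD lists itself as `RECORD,,` with no hash or size, so that line is skipped when verifying. A minimal sketch for regenerating or checking an entry such as the new `llms/main.py` line; `record_entry` and `verify_entry` are hypothetical helpers, and the file path you pass in is up to you.

```python
import base64
import hashlib
from pathlib import Path


def record_entry(data: bytes, arcname: str) -> str:
    """Format a wheel RECORD line: path,sha256=<urlsafe b64 without padding>,<size>."""
    digest = hashlib.sha256(data).digest()
    b64 = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{arcname},sha256={b64},{len(data)}"


def verify_entry(line: str, file_path: Path) -> bool:
    """Recompute a RECORD line from a file on disk and compare it with `line`."""
    arcname = line.rsplit(",", 2)[0]  # path is everything before the last two fields
    return record_entry(file_path.read_bytes(), arcname) == line
```

For example, `verify_entry("llms/main.py,sha256=mskL0eRqLtCXNES9GldKofitGWI0cI8S6QuC0Aw2Hpc,71037", Path("llms/main.py"))` should return `True` when run against the unpacked 2.0.20 wheel.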

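{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/WHEEL

File without changes

{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/entry_points.txt

File without changes

{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/licenses/LICENSE

File without changes

{llms_py-2.0.19.dist-info → llms_py-2.0.20.dist-info}/top_level.txt

File without changes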