lazylabel-gui 1.3.5__py3-none-any.whl → 1.3.7__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- lazylabel/ui/main_window.py +27 -14

- lazylabel/ui/modes/multi_view_mode.py +38 -10

- lazylabel/ui/widgets/channel_threshold_widget.py +18 -4

- lazylabel_gui-1.3.7.dist-info/METADATA +227 -0

- {lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/RECORD +9 -9

- lazylabel_gui-1.3.5.dist-info/METADATA +0 -194

- {lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/WHEEL +0 -0

- {lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/entry_points.txt +0 -0

- {lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/licenses/LICENSE +0 -0

- {lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/top_level.txt +0 -0

lazylabel/ui/main_window.py

CHANGED

|

@@ -1582,6 +1582,19 @@ class MainWindow(QMainWindow):

|

|

|

1582

1582

|

# Reset AI mode state

|

|

1583

1583

|

self.ai_click_start_pos = None

|

|

1584

1584

|

|

|

1585

|

+

# Reset all link states to linked when navigating to new images

|

|

1586

|

+

config = self._get_multi_view_config()

|

|

1587

|

+

num_viewers = config["num_viewers"]

|

|

1588

|

+

self.multi_view_linked = [True] * num_viewers

|

|

1589

|

+

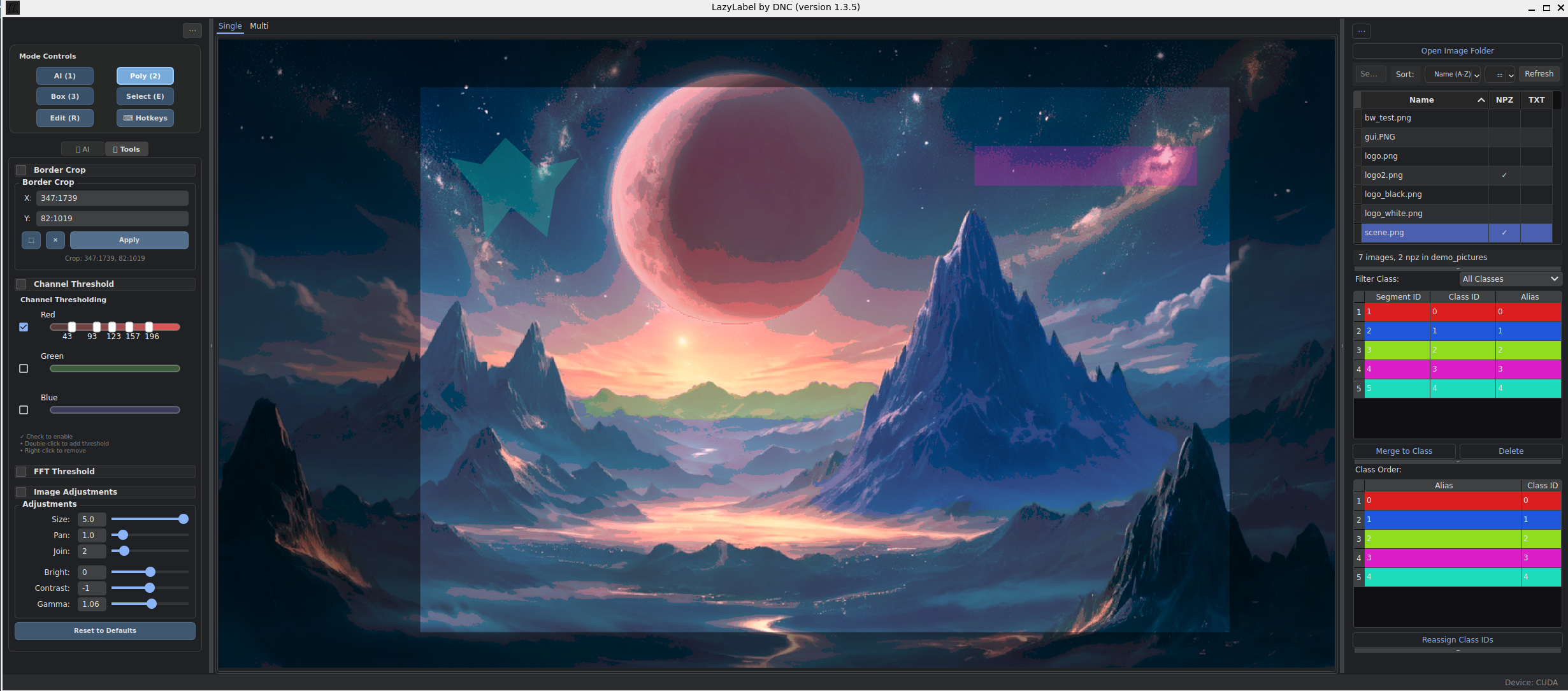

|

|

1590

|

+

# Reset unlink button appearances to default linked state

|

|

1591

|

+

if hasattr(self, "multi_view_unlink_buttons"):

|

|

1592

|

+

for i, button in enumerate(self.multi_view_unlink_buttons):

|

|

1593

|

+

if i < num_viewers:

|

|

1594

|

+

button.setText("X")

|

|

1595

|

+

button.setToolTip("Unlink this image from mirroring")

|

|

1596

|

+

button.setStyleSheet("")

|

|

1597

|

+

|

|

1585

1598

|

# Update UI lists to reflect cleared state

|

|

1586

1599

|

self._update_all_lists()

|

|

1587

1600

|

|

|

```diff
@@ -2829,7 +2842,8 @@ class MainWindow(QMainWindow):
         for i in range(num_viewers):
             image_path = self.multi_view_images[i]
 
-            if
+            # Skip if no image or if this viewer is unlinked
+            if not image_path or not self.multi_view_linked[i]:
                 continue
 
             # Filter segments for this viewer
```

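In the hunk above, the per-viewer loop now skips a viewer when it has no image or has been unlinked. The same check as a standalone predicate, useful for reasoning about which viewers get processed; `viewers_to_process` is a hypothetical helper, not a LazyLabel function:

```python
from __future__ import annotations


def viewers_to_process(images: list[str | None], linked: list[bool]) -> list[int]:
    """Return indices of viewers that have an image and are still linked.

    Hypothetical helper mirroring the 1.3.7 condition
    `if not image_path or not self.multi_view_linked[i]: continue`.
    """
    return [i for i, path in enumerate(images) if path and linked[i]]


# Viewer 1 has no image and viewer 2 is unlinked, so only viewer 0 is processed.
print(viewers_to_process(["a.png", None, "c.png"], [True, True, False]))  # [0]
```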
```diff
@@ -6802,21 +6816,20 @@ class MainWindow(QMainWindow):
 
     def _toggle_multi_view_link(self, image_index):
         """Toggle the link status for a specific image in multi-view."""
-
-
-
-            ]
+        # Check bounds and link status - only allow unlinking when currently linked
+        if (
+            0 <= image_index < len(self.multi_view_linked)
+            and self.multi_view_linked[image_index]
+        ):
+            # Currently linked - allow unlinking
+            self.multi_view_linked[image_index] = False
 
-            # Update button appearance
+            # Update button appearance to show unlinked state
             button = self.multi_view_unlink_buttons[image_index]
-
-
-
-
-            else:
-                button.setText("↪")
-                button.setToolTip("Link this image to mirroring")
-                button.setStyleSheet("background-color: #ffcccc;")
+            button.setText("↪")
+            button.setToolTip("This image is unlinked from mirroring")
+            button.setStyleSheet("background-color: #ff4444; color: white;")
+        # If already unlinked or invalid index, do nothing (prevent re-linking)
 
     def _start_background_image_discovery(self):
         """Start background discovery of all image files."""
```

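Taken together, the `main_window.py` hunks make unlinking one-way within an image: `_toggle_multi_view_link` only moves a linked viewer to the unlinked state, a second click is ignored, and navigating to a new image resets every viewer to linked. A minimal, UI-free sketch of that state machine, assuming a plain list of booleans like `multi_view_linked`; the class and method names are hypothetical and the button styling is left out:

```python
from __future__ import annotations


class MultiViewLinkState:
    """Hypothetical model of MainWindow.multi_view_linked (not LazyLabel's API)."""

    def __init__(self, num_viewers: int) -> None:
        self.linked = [True] * num_viewers

    def reset(self, num_viewers: int) -> None:
        """On image navigation, every viewer starts out linked again."""
        self.linked = [True] * num_viewers

    def toggle(self, image_index: int) -> bool:
        """Unlink a viewer; return True only if the state actually changed."""
        if 0 <= image_index < len(self.linked) and self.linked[image_index]:
            self.linked[image_index] = False
            return True
        # Already unlinked or out of range: do nothing, so there is no re-linking.
        return False


state = MultiViewLinkState(2)
state.toggle(1)  # unlinks viewer 1
state.toggle(1)  # ignored: viewer 1 is already unlinked
print(state.linked)  # [True, False]
state.reset(2)
print(state.linked)  # [True, True]
```

Judging only from these hunks, the way back to a linked viewer in 1.3.7 is to navigate to another image, which triggers the reset in the first hunk.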
lazylabel/ui/modes/multi_view_mode.py

CHANGED

```diff
@@ -369,12 +369,20 @@ class MultiViewModeHandler(BaseModeHandler):
 
         paired_segment = {"type": "Polygon", "views": {}}
 
-        # Add view data for
+        # Add view data for current viewer and mirror to linked viewers only
+        paired_segment["views"][viewer_index] = view_data
+
+        # Mirror to other viewers only if they are linked
         for viewer_idx in range(num_viewers):
-
-
-
-
+            if (
+                viewer_idx != viewer_index
+                and self.main_window.multi_view_linked[viewer_idx]
+                and self.main_window.multi_view_images[viewer_idx] is not None
+            ):
+                paired_segment["views"][viewer_idx] = {
+                    "vertices": view_data["vertices"].copy(),
+                    "mask": None,
+                }
 
         # Add to segment manager
         self.main_window.segment_manager.add_segment(paired_segment)
```

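Both mirroring paths in `multi_view_mode.py` now copy a polygon's vertices only to viewers that are not the source, are linked, and have an image loaded; the source view keeps its full `view_data`, while mirrored views get `"mask": None`. A standalone sketch of that construction, assuming `view_data` is a dict whose `"vertices"` value supports `.copy()` (a list here); `build_paired_segment` is a hypothetical name:

```python
from __future__ import annotations

from typing import Any


def build_paired_segment(
    source: int,
    view_data: dict[str, Any],
    linked: list[bool],
    images: list[str | None],
) -> dict[str, Any]:
    """Hypothetical helper: mirror a polygon from `source` to linked viewers only."""
    segment: dict[str, Any] = {"type": "Polygon", "views": {source: view_data}}
    # len(images) stands in for num_viewers from the diff.
    for idx in range(len(images)):
        if idx != source and linked[idx] and images[idx] is not None:
            segment["views"][idx] = {
                # Shallow copy, as with view_data["vertices"].copy() in the hunk.
                "vertices": view_data["vertices"].copy(),
                "mask": None,
            }
    return segment


seg = build_paired_segment(
    0,
    {"vertices": [(10, 20), (30, 40), (50, 10)], "mask": None},
    linked=[True, False, True, True],
    images=["a.png", "b.png", None, "d.png"],
)
print(sorted(seg["views"]))  # [0, 3]
```

Viewer 1 is skipped because it is unlinked and viewer 2 because it has no image.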
```diff
@@ -783,9 +791,13 @@ class MultiViewModeHandler(BaseModeHandler):
         # Add the current viewer's data
         paired_segment["views"][viewer_index] = view_data
 
-        # Mirror to all other viewers with same coordinates (
+        # Mirror to all other viewers with same coordinates (only if they are linked)
         for other_viewer_index in range(num_viewers):
-            if
+            if (
+                other_viewer_index != viewer_index
+                and self.main_window.multi_view_linked[other_viewer_index]
+                and self.main_window.multi_view_images[other_viewer_index] is not None
+            ):
                 mirrored_view_data = {
                     "vertices": view_data[
                         "vertices"
```

```diff
@@ -807,11 +819,27 @@ class MultiViewModeHandler(BaseModeHandler):
 
         # Update UI
         self.main_window._update_all_lists()
-
-
-
+
+        # Count linked viewers (excluding the source viewer)
+        linked_viewers_count = sum(
+            1
+            for i in range(num_viewers)
+            if i != viewer_index
+            and self.main_window.multi_view_linked[i]
+            and self.main_window.multi_view_images[i] is not None
         )
 
+        if linked_viewers_count == 0:
+            viewer_count_text = "created (no linked viewers to mirror to)"
+        elif linked_viewers_count == 1:
+            viewer_count_text = "created and mirrored to 1 linked viewer"
+        else:
+            viewer_count_text = (
+                f"created and mirrored to {linked_viewers_count} linked viewers"
+            )
+
+        self.main_window._show_notification(f"Polygon {viewer_count_text}.")
+
         # Clear polygon state for this viewer
         self._clear_multi_view_polygon(viewer_index)
 
```

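The notification added in the last hunk counts mirror targets with the same three conditions and picks one of three messages. Pulled out into pure functions (hypothetical names) so the branches can be checked without a running `MainWindow`:

```python
from __future__ import annotations


def count_linked_targets(source: int, linked: list[bool], images: list[str | None]) -> int:
    """Count viewers a new polygon is mirrored to (excluding the source viewer)."""
    return sum(
        1
        for i in range(len(images))
        if i != source and linked[i] and images[i] is not None
    )


def mirror_notification(linked_viewers_count: int) -> str:
    """Reproduce the 1.3.7 notification wording for a given target count."""
    if linked_viewers_count == 0:
        text = "created (no linked viewers to mirror to)"
    elif linked_viewers_count == 1:
        text = "created and mirrored to 1 linked viewer"
    else:
        text = f"created and mirrored to {linked_viewers_count} linked viewers"
    return f"Polygon {text}."


count = count_linked_targets(0, [True, True, False, True], ["a", "b", "c", None])
print(mirror_notification(count))  # Polygon created and mirrored to 1 linked viewer.
```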
lazylabel/ui/widgets/channel_threshold_widget.py

CHANGED

```diff
@@ -372,6 +372,7 @@ class ChannelThresholdWidget(QWidget):
         super().__init__(parent)
         self.current_image_channels = 0 # 0 = no image, 1 = grayscale, 3 = RGB
         self.sliders = {} # Dictionary of channel name -> slider
+        self.is_dragging = False # Track if any slider is being dragged
 
         self.setupUI()
 
```

```diff
@@ -434,10 +435,8 @@ class ChannelThresholdWidget(QWidget):
         for channel_name in channel_names:
             slider_widget = ChannelSliderWidget(channel_name, self)
             slider_widget.valueChanged.connect(self._on_slider_changed)
-            slider_widget.dragStarted.connect(
-
-            ) # Forward drag signals
-            slider_widget.dragFinished.connect(self.dragFinished.emit)
+            slider_widget.dragStarted.connect(self._on_drag_started)
+            slider_widget.dragFinished.connect(self._on_drag_finished)
             self.sliders[channel_name] = slider_widget
             self.sliders_layout.addWidget(slider_widget)
 
```

```diff
@@ -450,6 +449,21 @@ class ChannelThresholdWidget(QWidget):
 
     def _on_slider_changed(self):
         """Handle slider value change."""
+        # Only emit thresholdChanged if not currently dragging
+        # This prevents expensive calculations during drag operations
+        if not self.is_dragging:
+            self.thresholdChanged.emit()
+
+    def _on_drag_started(self):
+        """Handle drag start - suppress expensive calculations during drag."""
+        self.is_dragging = True
+        self.dragStarted.emit()
+
+    def _on_drag_finished(self):
+        """Handle drag finish - perform final calculation."""
+        self.is_dragging = False
+        self.dragFinished.emit()
+        # Emit threshold changed now that dragging is complete
         self.thresholdChanged.emit()
 
     def get_threshold_settings(self):
```

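The `channel_threshold_widget.py` change is a defer-until-release pattern: while any slider is being dragged, value changes no longer emit `thresholdChanged`, and a single emission happens when the drag finishes. A plain-Python sketch with callbacks standing in for the Qt signals, so it runs without PyQt6; `ThresholdDragGate` is a hypothetical name, not LazyLabel's class:

```python
from __future__ import annotations

from typing import Callable


class ThresholdDragGate:
    """Hypothetical, Qt-free model of the 1.3.7 ChannelThresholdWidget drag handling."""

    def __init__(self, on_threshold_changed: Callable[[], None]) -> None:
        self.is_dragging = False
        self._on_threshold_changed = on_threshold_changed  # stands in for thresholdChanged

    def slider_changed(self) -> None:
        # Skip the expensive recalculation while a slider is being dragged.
        if not self.is_dragging:
            self._on_threshold_changed()

    def drag_started(self) -> None:
        self.is_dragging = True

    def drag_finished(self) -> None:
        # Clear the flag, then trigger exactly one recalculation for the final value.
        self.is_dragging = False
        self._on_threshold_changed()


recalcs: list[str] = []
gate = ThresholdDragGate(lambda: recalcs.append("recalculate"))
gate.drag_started()
for _ in range(50):  # 50 intermediate slider positions during one drag
    gate.slider_changed()
gate.drag_finished()
print(len(recalcs))  # 1
```

Changes that arrive outside a drag still recalculate immediately, because `is_dragging` is `False` then. For a stock `QSlider`, `QAbstractSlider.setTracking(False)` is a related built-in (`valueChanged` is emitted only on release); whether that would fit LazyLabel's custom `ChannelSliderWidget` is not visible from this diff.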
lazylabel_gui-1.3.7.dist-info/METADATA

ADDED

```diff
@@ -0,0 +1,227 @@
+Metadata-Version: 2.4
+Name: lazylabel-gui
+Version: 1.3.7
+Summary: An image segmentation GUI for generating ML ready mask tensors and annotations.
+Author-email: "Deniz N. Cakan" <deniz.n.cakan@gmail.com>
+License: MIT License
+
+Copyright (c) 2025 Deniz N. Cakan
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.
+
+Project-URL: Homepage, https://github.com/dnzckn/lazylabel
+Project-URL: Bug Tracker, https://github.com/dnzckn/lazylabel/issues
+Classifier: Programming Language :: Python :: 3
+Classifier: License :: OSI Approved :: MIT License
+Classifier: Operating System :: OS Independent
+Classifier: Topic :: Scientific/Engineering :: Image Processing
+Classifier: Environment :: X11 Applications :: Qt
+Requires-Python: >=3.10
+Description-Content-Type: text/markdown
+License-File: LICENSE
+Requires-Dist: PyQt6>=6.9.0
+Requires-Dist: pyqtdarktheme==2.1.0
+Requires-Dist: torch>=2.7.1
+Requires-Dist: torchvision>=0.22.1
+Requires-Dist: segment-anything==1.0
+Requires-Dist: numpy>=2.1.2
+Requires-Dist: opencv-python>=4.11.0.86
+Requires-Dist: scipy>=1.15.3
+Requires-Dist: requests>=2.32.4
+Requires-Dist: tqdm>=4.67.1
+Provides-Extra: dev
+Requires-Dist: pytest>=7.0.0; extra == "dev"
+Requires-Dist: pytest-cov>=4.0.0; extra == "dev"
+Requires-Dist: pytest-mock>=3.10.0; extra == "dev"
+Requires-Dist: pytest-qt>=4.2.0; extra == "dev"
+Requires-Dist: ruff>=0.8.0; extra == "dev"
+Dynamic: license-file
+
+# LazyLabel
+
+<div align="center">
+<img src="https://raw.githubusercontent.com/dnzckn/LazyLabel/main/src/lazylabel/demo_pictures/logo2.png" alt="LazyLabel Logo" style="height:60px; vertical-align:middle;" />
+<img src="https://raw.githubusercontent.com/dnzckn/LazyLabel/main/src/lazylabel/demo_pictures/logo_black.png" alt="LazyLabel Cursive" style="height:60px; vertical-align:middle;" />
+</div>
+
+**AI-Assisted Image Segmentation for Machine Learning Dataset Preparation**
+
+LazyLabel combines Meta's Segment Anything Model (SAM) with comprehensive manual annotation tools to accelerate the creation of pixel-perfect segmentation masks for computer vision applications.
+
+<div align="center">
+<img src="https://raw.githubusercontent.com/dnzckn/LazyLabel/main/src/lazylabel/demo_pictures/gui.PNG" alt="LazyLabel Screenshot" width="800"/>
+</div>
+
+---
+
+## Quick Start
+
+```bash
+pip install lazylabel-gui
+lazylabel-gui
+```
+
+**From source:**
+```bash
+git clone https://github.com/dnzckn/LazyLabel.git
+cd LazyLabel
+pip install -e .
+lazylabel-gui
+```
+
+**Requirements:** Python 3.10+, 8GB RAM, ~2.5GB disk space (for model weights)
+
+---
+
+## Core Features
+
+### AI-Powered Segmentation
+LazyLabel leverages Meta's SAM for intelligent object detection:
+- Single-click object segmentation
+- Interactive refinement with positive/negative points
+- Support for both SAM 1.0 and SAM 2.1 models
+- GPU acceleration with automatic CPU fallback
+
+### Manual Annotation Tools
+When precision matters:
+- Polygon drawing with vertex-level editing
+- Bounding box annotations for object detection
+- Edit mode for adjusting existing segments
+- Merge tool for combining related segments
+
+### Image Processing & Filtering
+Advanced preprocessing capabilities:
+- **FFT filtering**: Remove noise and enhance edges
+- **Channel thresholding**: Isolate objects by color
+- **Border cropping**: Define crop regions that set pixels outside the area to zero in saved outputs
+- **View adjustments**: Brightness, contrast, gamma correction
+
+### Multi-View Mode
+Process multiple images efficiently:
+- Annotate up to 4 images simultaneously
+- Synchronized zoom and pan across views
+- Mirror annotations to all linked images
+
+---
+
+## Export Formats
+
+### NPZ Format (Semantic Segmentation)
+One-hot encoded masks optimized for deep learning:
+
+```python
+import numpy as np
+
+data = np.load('image.npz')
+mask = data['mask'] # Shape: (height, width, num_classes)
+
+# Each channel represents one class
+sky = mask[:, :, 0]
+boats = mask[:, :, 1]
+cats = mask[:, :, 2]
+dogs = mask[:, :, 3]
+```
+
+### YOLO Format (Object Detection)
+Normalized polygon coordinates for YOLO training:
+```
+0 0.234 0.456 0.289 0.478 0.301 0.523 ...
+1 0.567 0.123 0.598 0.145 0.612 0.189 ...
+```
+
+### Class Aliases (JSON)
+Maintains consistent class naming across datasets:
+```json
+{
+"0": "background",
+"1": "person",
+"2": "vehicle"
+}
+```
+
+---
+
+## Typical Workflow
+
+1. **Open folder** containing your images
+2. **Click objects** to generate AI masks (mode 1)
+3. **Refine** with additional points or manual tools
+4. **Assign classes** and organize in the class table
+5. **Export** as NPZ or YOLO format
+
+### Advanced Preprocessing Workflow
+
+For challenging images:
+1. Apply **FFT filtering** to reduce noise
+2. Use **channel thresholding** to isolate color ranges
+3. Enable **"Operate on View"** to pass filtered images to SAM
+4. Fine-tune with manual tools
+
+---
+
+## Advanced Features
+
+### Multi-View Mode
+Access via the "Multi" tab to process multiple images:
+- 2-view (side-by-side) or 4-view (grid) layouts
+- Annotations mirror across linked views automatically
+- Synchronized zoom maintains alignment
+
+### SAM 2.1 Support
+LazyLabel supports both SAM 1.0 (default) and SAM 2.1 models. SAM 2.1 offers improved segmentation accuracy and better handling of complex boundaries.
+
+To use SAM 2.1 models:
+1. Install the SAM 2 package:
+```bash
+pip install git+https://github.com/facebookresearch/sam2.git
+```
+2. Download a SAM 2.1 model (e.g., `sam2.1_hiera_large.pt`) from the [SAM 2 repository](https://github.com/facebookresearch/sam2)
+3. Place the model file in LazyLabel's models folder:
+- If installed via pip: `~/.local/share/lazylabel/models/` (or equivalent on your system)
+- If running from source: `src/lazylabel/models/`
+4. Select the SAM 2.1 model from the dropdown in LazyLabel's settings
+
+Note: SAM 1.0 models are automatically downloaded on first use.
+
+---
+
+## Key Shortcuts
+
+| Action | Key | Description |
+|--------|-----|-------------|
+| AI Mode | `1` | SAM point-click segmentation |
+| Draw Mode | `2` | Manual polygon creation |
+| Edit Mode | `E` | Modify existing segments |
+| Accept AI Segment | `Space` | Confirm AI segment suggestion |
+| Save | `Enter` | Save annotations |
+| Merge | `M` | Combine selected segments |
+| Pan Mode | `Q` | Enter pan mode |
+| Pan | `WASD` | Navigate image |
+| Delete | `V`/`Delete` | Remove segments |
+| Undo/Redo | `Ctrl+Z/Y` | Action history |
+
+---
+
+## Documentation
+
+- [Usage Manual](src/lazylabel/USAGE_MANUAL.md) - Comprehensive feature guide
+- [Architecture Guide](src/lazylabel/ARCHITECTURE.md) - Technical implementation details
+- [GitHub Issues](https://github.com/dnzckn/LazyLabel/issues) - Report bugs or request features
+
+---
```

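The 1.3.7 README describes the YOLO export as one line per polygon: a class index followed by x y pairs normalized to the image size. A sketch of that encoding and its inverse, assuming x is divided by image width and y by image height (the usual YOLO segmentation-label convention) and three decimal places as in the README sample; LazyLabel's exporter may differ in precision or vertex ordering, and both function names are hypothetical:

```python
from __future__ import annotations


def to_yolo_polygon_line(
    class_id: int,
    points: list[tuple[float, float]],
    width: int,
    height: int,
    precision: int = 3,
) -> str:
    """Encode pixel-space polygon vertices as `class x1 y1 x2 y2 ...` in [0, 1]."""
    coords: list[str] = []
    for x, y in points:
        coords.append(f"{x / width:.{precision}f}")
        coords.append(f"{y / height:.{precision}f}")
    return " ".join([str(class_id), *coords])


def parse_yolo_polygon_line(
    line: str, width: int, height: int
) -> tuple[int, list[tuple[float, float]]]:
    """Decode a label line back into a class index and pixel-space vertices."""
    class_str, *values = line.split()
    nums = [float(v) for v in values]
    points = [(nums[i] * width, nums[i + 1] * height) for i in range(0, len(nums), 2)]
    return int(class_str), points


line = to_yolo_polygon_line(0, [(100, 50), (200, 150), (100, 150)], width=400, height=200)
print(line)  # 0 0.250 0.250 0.500 0.750 0.250 0.750
```

Fixed precision trades file size for accuracy: on a 4000-pixel-wide image, three decimals quantize x to 4-pixel steps.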
{lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/RECORD

CHANGED

```diff
@@ -18,19 +18,19 @@ lazylabel/ui/editable_vertex.py,sha256=ofo3r8ZZ3b8oYV40vgzZuS3QnXYBNzE92ArC2wggJ
 lazylabel/ui/hotkey_dialog.py,sha256=U_B76HLOxWdWkfA4d2XgRUaZTJPAAE_m5fmwf7Rh-5Y,14743
 lazylabel/ui/hoverable_pixelmap_item.py,sha256=UbWVxpmCTaeae_AeA8gMOHYGUmAw40fZBFTS3sZlw48,1821
 lazylabel/ui/hoverable_polygon_item.py,sha256=gZalImJ_PJYM7xON0iiSjQ335ZBREOfSscKLVs-MSh8,2314
-lazylabel/ui/main_window.py,sha256=
+lazylabel/ui/main_window.py,sha256=PFbVzZ1jbOuz2q8MNY3BHjWmJU6YoBqKiPwLvlDdsNI,383320
 lazylabel/ui/numeric_table_widget_item.py,sha256=dQUlIFu9syCxTGAHVIlmbgkI7aJ3f3wmDPBz1AGK9Bg,283
 lazylabel/ui/photo_viewer.py,sha256=3o7Xldn9kJWvWlbpcHDRMk87dnB5xZKbfyAT3oBYlIo,6670
 lazylabel/ui/reorderable_class_table.py,sha256=sxHhQre5O_MXLDFgKnw43QnvXXoqn5xRKMGitgO7muI,2371
 lazylabel/ui/right_panel.py,sha256=D69XgPXpLleflsIl8xCtoBZzFleLqx0SezdwfcEJhUg,14280
 lazylabel/ui/modes/__init__.py,sha256=ikg47aeexLQavSda_3tYn79xGJW38jKoUCLXRe2w8ok,219
 lazylabel/ui/modes/base_mode.py,sha256=0R3AkjN_WpYwetF3uuOvkTxb6Q1HB-Z1NQPvLh9eUTY,1315
-lazylabel/ui/modes/multi_view_mode.py,sha256=
+lazylabel/ui/modes/multi_view_mode.py,sha256=IiInmNhdFEQAcKuY2QY5ABx-EXgOh1lVeTnJVGoLyNM,51713
 lazylabel/ui/modes/single_view_mode.py,sha256=khGUXVQ_lv9cCXkOAewQN8iH7R_CPyIVtQjUqEF1eC0,11852
 lazylabel/ui/widgets/__init__.py,sha256=6VDoMnanqVm3yjOovxie3WggPODuhUsIO75RtxOhQhI,688
 lazylabel/ui/widgets/adjustments_widget.py,sha256=5xldhdEArX3H2P7EmHPjURdwpV-Wa2WULFfspp0gxns,12750
 lazylabel/ui/widgets/border_crop_widget.py,sha256=8NTkHrk_L5_T1psVuXMspVVZRPTTeJyIEYBfVxdGeQA,7529
-lazylabel/ui/widgets/channel_threshold_widget.py,sha256=
+lazylabel/ui/widgets/channel_threshold_widget.py,sha256=DSYDOUTMXo02Fn5P4cjh2OqDawA2Hm_ChYecVGwzwk8,21297
 lazylabel/ui/widgets/fft_threshold_widget.py,sha256=mcbkNoP0aj-Pe57sgIWBnmg4JiEbTikrwG67Vgygnio,20859
 lazylabel/ui/widgets/fragment_threshold_widget.py,sha256=YtToua1eAUtEuJ3EwdCMvI-39TRrFnshkty4tnR4OMU,3492
 lazylabel/ui/widgets/model_selection_widget.py,sha256=k4zyhWszi_7e-0TPa0Go1LLPZpTNGtrYSb-yT7uGiVU,8420
```

```diff
@@ -47,9 +47,9 @@ lazylabel/utils/custom_file_system_model.py,sha256=-3EimlybvevH6bvqBE0qdFnLADVta
 lazylabel/utils/fast_file_manager.py,sha256=kzbWz_xKufG5bP6sjyZV1fmOKRWPPNeL-xLYZEu_8wE,44697
 lazylabel/utils/logger.py,sha256=R7z6ifgA-NY-9ZbLlNH0i19zzwXndJ_gkG2J1zpVEhg,1306
 lazylabel/utils/utils.py,sha256=sYSCoXL27OaLgOZaUkCAhgmKZ7YfhR3Cc5F8nDIa3Ig,414
-lazylabel_gui-1.3.
-lazylabel_gui-1.3.
-lazylabel_gui-1.3.
-lazylabel_gui-1.3.
-lazylabel_gui-1.3.
-lazylabel_gui-1.3.
+lazylabel_gui-1.3.7.dist-info/licenses/LICENSE,sha256=kSDEIgrWAPd1u2UFGGpC9X71dhzrlzBFs8hbDlENnGE,1092
+lazylabel_gui-1.3.7.dist-info/METADATA,sha256=Mo6GD0ppauY9CxGQVxIT3BzwEcryP6D_Gs3alvGu5Eg,7754
+lazylabel_gui-1.3.7.dist-info/WHEEL,sha256=_zCd3N1l69ArxyTb8rzEoP9TpbYXkqRFSNOD5OuxnTs,91
+lazylabel_gui-1.3.7.dist-info/entry_points.txt,sha256=Hd0WwEG9OPTa_ziYjiD0aRh7R6Fupt-wdQ3sspdc1mM,54
+lazylabel_gui-1.3.7.dist-info/top_level.txt,sha256=YN4uIyrpDBq1wiJaBuZLDipIzyZY0jqJOmmXiPIOUkU,10
+lazylabel_gui-1.3.7.dist-info/RECORD,,
```

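Each RECORD line has the form `path,sha256=<digest>,<size>`, where the digest is the file's SHA-256 encoded as URL-safe base64 with the `=` padding stripped and the size is in bytes; RECORD lists itself with both fields empty (`lazylabel_gui-1.3.7.dist-info/RECORD,,`). That is why the three edited modules and the renamed `dist-info` files get new lines in these hunks. A standard-library sketch for producing such an entry; `record_entry` is a hypothetical name:

```python
import base64
import hashlib


def record_entry(path: str, data: bytes) -> str:
    """Format one wheel RECORD line: path,sha256=<urlsafe b64, no padding>,<size>."""
    digest = hashlib.sha256(data).digest()
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(data)}"


print(record_entry("empty.txt", b""))
# empty.txt,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0
```

To check an installed file, pass its bytes (for example `Path(...).read_bytes()`) and compare the result with the corresponding RECORD line.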
lazylabel_gui-1.3.5.dist-info/METADATA

REMOVED

```diff
@@ -1,194 +0,0 @@
-Metadata-Version: 2.4
-Name: lazylabel-gui
-Version: 1.3.5
-Summary: An image segmentation GUI for generating ML ready mask tensors and annotations.
-Author-email: "Deniz N. Cakan" <deniz.n.cakan@gmail.com>
-License: MIT License
-
-Copyright (c) 2025 Deniz N. Cakan
-
-Permission is hereby granted, free of charge, to any person obtaining a copy
-of this software and associated documentation files (the "Software"), to deal
-in the Software without restriction, including without limitation the rights
-to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
-copies of the Software, and to permit persons to whom the Software is
-furnished to do so, subject to the following conditions:
-
-The above copyright notice and this permission notice shall be included in all
-copies or substantial portions of the Software.
-
-THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
-IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
-FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
-AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
-LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
-OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
-SOFTWARE.
-
-Project-URL: Homepage, https://github.com/dnzckn/lazylabel
-Project-URL: Bug Tracker, https://github.com/dnzckn/lazylabel/issues
-Classifier: Programming Language :: Python :: 3
-Classifier: License :: OSI Approved :: MIT License
-Classifier: Operating System :: OS Independent
-Classifier: Topic :: Scientific/Engineering :: Image Processing
-Classifier: Environment :: X11 Applications :: Qt
-Requires-Python: >=3.10
-Description-Content-Type: text/markdown
-License-File: LICENSE
-Requires-Dist: PyQt6>=6.9.0
-Requires-Dist: pyqtdarktheme==2.1.0
-Requires-Dist: torch>=2.7.1
-Requires-Dist: torchvision>=0.22.1
-Requires-Dist: segment-anything==1.0
-Requires-Dist: numpy>=2.1.2
-Requires-Dist: opencv-python>=4.11.0.86
-Requires-Dist: scipy>=1.15.3
-Requires-Dist: requests>=2.32.4
-Requires-Dist: tqdm>=4.67.1
-Provides-Extra: dev
-Requires-Dist: pytest>=7.0.0; extra == "dev"
-Requires-Dist: pytest-cov>=4.0.0; extra == "dev"
-Requires-Dist: pytest-mock>=3.10.0; extra == "dev"
-Requires-Dist: pytest-qt>=4.2.0; extra == "dev"
-Requires-Dist: ruff>=0.8.0; extra == "dev"
-Dynamic: license-file
-
-# <img src="https://raw.githubusercontent.com/dnzckn/LazyLabel/main/src/lazylabel/demo_pictures/logo2.png" alt="LazyLabel Logo" style="height:60px; vertical-align:middle;" /> <img src="https://raw.githubusercontent.com/dnzckn/LazyLabel/main/src/lazylabel/demo_pictures/logo_black.png" alt="LazyLabel Cursive" style="height:60px; vertical-align:middle;" />
-
-**AI-Assisted Image Segmentation Made Simple**
-
-LazyLabel combines Meta's Segment Anything Model (SAM) with intuitive editing tools for fast, precise image labeling. Perfect for machine learning datasets and computer vision research.
-
-
-
----
-
-## 🚀 Quick Start
-
-### Installation
-```bash
-pip install lazylabel-gui
-lazylabel-gui
-```
-
-### Optional: SAM-2 Support
-For advanced SAM-2 models, install manually:
-```bash
-pip install git+https://github.com/facebookresearch/sam2.git
-```
-*Note: SAM-2 is optional - LazyLabel works with SAM 1.0 models by default*
-
-### Usage
-1. **Open Folder** → Select your image directory
-2. **Click on image** → AI generates instant masks
-3. **Fine-tune** → Edit polygons, merge segments
-4. **Export** → Clean `.npz` files ready for ML training
-
----
-
-## ✨ Key Features
-
-- **🧠 One-click AI segmentation** with Meta's SAM and SAM2 models
-- **🎨 Manual polygon drawing** with full vertex control
-- **⚡ Smart editing tools** - merge segments, adjust class names, and class order
-- **📊 ML-ready exports** - One-hot encoded `.npz` format and `.json` for YOLO format
-- **🔧 Image enhancement** - brightness, contrast, gamma adjustment
-- **🔍 Image viewer** - zoom, pan, brightness, contrast, and gamma adjustment
-- **✂️ Edge cropping** - define custom crop areas to focus on specific regions
-- **🔄 Undo/Redo** - full history of all actions
-- **💾 Auto-saving** - Automatic saving of your labels when navigating between images
-- **🎛️ Advanced filtering** - FFT thresholding and color channel thresholding
-- **⌨️ Customizable hotkeys** for all functions
-
----
-
-## ⌨️ Essential Hotkeys
-
-| Action | Key | Description |
-|--------|-----|-------------|
-| **AI Mode** | `1` | Point-click segmentation |
-| **Draw Mode** | `2` | Manual polygon drawing |
-| **Edit Mode** | `E` | Select and modify shapes |
-| **Save Segment** | `Space` | Confirm current mask |
-| **Merge** | `M` | Combine selected segments |
-| **Pan** | `Q` + drag | Navigate large images |
-| **Positive Point** | `Left Click` | Add to segment |
-| **Negative Point** | `Right Click` | Remove from segment |
-
-💡 **All hotkeys customizable** - Click "Hotkeys" button to personalize
-
----
-
-## 📦 Output Format
-
-Perfect for ML training - clean, structured data:
-
-```python
-import numpy as np
-
-# Load your labeled data
-data = np.load('your_image.npz')
-mask = data['mask'] # Shape: (height, width, num_classes)
-
-# Each channel is a binary mask for one class
-class_0_mask = mask[:, :, 0] # Background
-class_1_mask = mask[:, :, 1] # Object type 1
-class_2_mask = mask[:, :, 2] # Object type 2
-```
-
-
-**Ideal for:**
-- Semantic segmentation datasets
-- Instance segmentation training
-- Computer vision research
-- Automated annotation pipelines
-
----
-
-## 🛠️ Development
-
-**Requirements:** Python 3.10+
-**2.5GB** disk space for SAM model (auto-downloaded)
-
-### Installation from Source
-```bash
-git clone https://github.com/dnzckn/LazyLabel.git
-cd LazyLabel
-pip install -e .
-lazylabel-gui
-```
-
-### Testing & Quality
-```bash
-# Run full test suite
-python -m pytest --cov=lazylabel --cov-report=html
-
-# Code formatting & linting
-ruff check . && ruff format .
-```
-
-### Architecture
-- **Modular design** with clean component separation
-- **Signal-based communication** between UI elements
-- **Extensible model system** for new SAM variants
-- **Comprehensive test suite** (150+ tests, 60%+ coverage)
-
----
-
-## 🤝 Contributing
-
-LazyLabel welcomes contributions! Check out:
-- [Architecture Guide](src/lazylabel/ARCHITECTURE.md) for technical details
-- [Hotkey System](src/lazylabel/HOTKEY_FEATURE.md) for customization
-- Issues page for feature requests and bug reports
-
----
-
-## 🙏 Acknowledgments
-
-- [LabelMe](https://github.com/wkentaro/labelme)
-- [Segment-Anything-UI](https://github.com/branislavhesko/segment-anything-ui)
-
----
-
-**Made with ❤️ for the computer vision community**
```

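Both the removed 1.3.5 README and the new 1.3.7 README describe the NPZ export as a one-hot array stored under the key `mask` with shape `(height, width, num_classes)`, one binary channel per class. A sketch that builds a toy array of that shape, round-trips it through `np.savez`/`np.load` in memory, and collapses it to a per-pixel class index; the array sizes, the `-1` marker for unlabeled pixels, and the argmax conversion are illustrative assumptions, not LazyLabel behaviour:

```python
import io

import numpy as np

# Toy 4x4 image with 3 classes; channel k is the binary mask for class k.
height, width, num_classes = 4, 4, 3
mask = np.zeros((height, width, num_classes), dtype=np.uint8)
mask[:2, :, 0] = 1   # class 0: top half
mask[2:, :2, 1] = 1  # class 1: bottom-left quarter
# The bottom-right quarter stays unlabeled (all channels are 0).

buffer = io.BytesIO()
np.savez(buffer, mask=mask)  # stored under the key "mask", as the READMEs read it
buffer.seek(0)
loaded = np.load(buffer)["mask"]

# Collapse one-hot channels into class indices; -1 marks pixels with no class.
# If a pixel had several classes set, argmax would pick the lowest index.
labeled = loaded.any(axis=-1)
class_map = np.where(labeled, loaded.argmax(axis=-1), -1)
print(class_map)
```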
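{lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/WHEEL

File without changes

{lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/entry_points.txt

File without changes

{lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/licenses/LICENSE

File without changes

{lazylabel_gui-1.3.5.dist-info → lazylabel_gui-1.3.7.dist-info}/top_level.txt

File without changes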