lattice-sub 1.3.1__py3-none-any.whl → 1.5.4__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {lattice_sub-1.3.1.dist-info → lattice_sub-1.5.4.dist-info}/METADATA +42 -3

- lattice_sub-1.5.4.dist-info/RECORD +18 -0

- lattice_subtraction/__init__.py +5 -2

- lattice_subtraction/batch.py +13 -11

- lattice_subtraction/cli.py +126 -7

- lattice_subtraction/ui.py +62 -1

- lattice_subtraction/watch.py +478 -0

- lattice_sub-1.3.1.dist-info/RECORD +0 -17

- {lattice_sub-1.3.1.dist-info → lattice_sub-1.5.4.dist-info}/WHEEL +0 -0

- {lattice_sub-1.3.1.dist-info → lattice_sub-1.5.4.dist-info}/entry_points.txt +0 -0

- {lattice_sub-1.3.1.dist-info → lattice_sub-1.5.4.dist-info}/licenses/LICENSE +0 -0

- {lattice_sub-1.3.1.dist-info → lattice_sub-1.5.4.dist-info}/top_level.txt +0 -0

|

@@ -1,13 +1,13 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: lattice-sub

|

|

3

|

-

Version: 1.

|

|

3

|

+

Version: 1.5.4

|

|

4

4

|

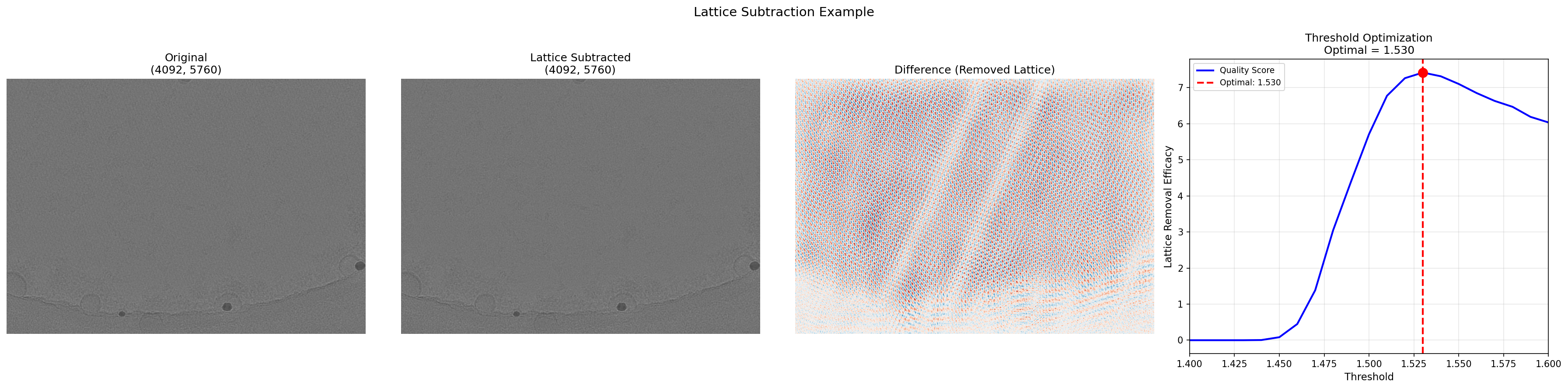

Summary: Lattice subtraction for cryo-EM micrographs - removes periodic crystal signals to reveal non-periodic features

|

|

5

5

|

Author-email: George Stephenson <george.stephenson@colorado.edu>, Vignesh Kasinath <vignesh.kasinath@colorado.edu>

|

|

6

6

|

License: MIT

|

|

7

7

|

Project-URL: Homepage, https://github.com/gsstephenson/cryoem-lattice-subtraction

|

|

8

8

|

Project-URL: Documentation, https://github.com/gsstephenson/cryoem-lattice-subtraction#readme

|

|

9

9

|

Project-URL: Repository, https://github.com/gsstephenson/cryoem-lattice-subtraction

|

|

10

|

-

Keywords: cryo-em,electron-microscopy,lattice-subtraction,image-processing,fft

|

|

10

|

+

Keywords: cryo-em,electron-microscopy,lattice-subtraction,image-processing,fft,live-processing,file-watching

|

|

11

11

|

Classifier: Development Status :: 5 - Production/Stable

|

|

12

12

|

Classifier: Intended Audience :: Science/Research

|

|

13

13

|

Classifier: License :: OSI Approved :: MIT License

|

|

@@ -29,6 +29,7 @@ Requires-Dist: scikit-image>=0.21

|

|

|

29

29

|

Requires-Dist: torch>=2.0

|

|

30

30

|

Requires-Dist: matplotlib>=3.7

|

|

31

31

|

Requires-Dist: kornia>=0.7

|

|

32

|

+

Requires-Dist: watchdog>=3.0

|

|

32

33

|

Provides-Extra: dev

|

|

33

34

|

Requires-Dist: pytest>=7.4; extra == "dev"

|

|

34

35

|

Requires-Dist: pytest-cov; extra == "dev"

|

|

@@ -56,6 +57,8 @@ Dynamic: license-file

|

|

|

56

57

|

|

|

57

58

|

**Remove periodic lattice patterns from cryo-EM micrographs to reveal non-periodic features.**

|

|

58

59

|

|

|

60

|

+

> **NEW in v1.5.0:** Live watch mode with `--live` flag! Automatically process motion-corrected files as they arrive during data collection.

|

|

61

|

+

|

|

59

62

|

|

|

60

63

|

|

|

61

64

|

---

|

|

@@ -88,6 +91,20 @@ lattice-sub process your_image.mrc -o output.mrc --pixel-size 0.56

|

|

|

88

91

|

lattice-sub batch input_folder/ output_folder/ --pixel-size 0.56

|

|

89

92

|

```

|

|

90

93

|

|

|

94

|

+

### Live Watch Mode (NEW in v1.5.0)

|

|

95

|

+

|

|

96

|

+

Process files as they arrive during data collection:

|

|

97

|

+

|

|

98

|

+

```bash

|

|

99

|

+

lattice-sub batch input_folder/ output_folder/ --pixel-size 0.56 --live

|

|

100

|

+

```

|

|

101

|

+

|

|

102

|

+

In live mode:

|

|

103

|

+

- If files already exist: batch processes them first with all GPUs

|

|

104

|

+

- Then switches to watch mode: monitors for new files with single GPU

|

|

105

|

+

- Press Ctrl+C to stop watching

|

|

106

|

+

- Perfect for processing motion-corrected files as they're generated

|

|

107

|

+

|

|

91

108

|

### Generate Comparison Images

|

|

92

109

|

|

|

93

110

|

```bash

|

|

@@ -117,6 +134,7 @@ lattice-sub batch input_folder/ output_folder/ -p 0.56 --vis comparisons/ -n 10

|

|

|

117

134

|

| `-t, --threshold` | Peak detection sensitivity (default: **auto** - optimized per image) |

|

|

118

135

|

| `--vis DIR` | Generate 4-panel comparison PNGs in DIR |

|

|

119

136

|

| `-n, --num-vis N` | Limit visualizations to first N images |

|

|

137

|

+

| `--live` | **Watch mode**: continuously monitor directory for new files (Ctrl+C to stop) |

|

|

120

138

|

| `--cpu` | Force CPU processing (GPU is used by default) |

|

|

121

139

|

| `-q, --quiet` | Hide the banner and progress messages |

|

|

122

140

|

| `-v, --verbose` | Show detailed processing information |

|

|

@@ -191,6 +209,9 @@ When processing batches on systems with multiple GPUs, files are automatically d

|

|

|

191

209

|

```bash

|

|

192

210

|

# Automatically uses all available GPUs

|

|

193

211

|

lattice-sub batch input_folder/ output_folder/ -p 0.56

|

|

212

|

+

|

|

213

|

+

# Or watch for new files in real-time (live mode)

|

|

214

|

+

lattice-sub batch input_folder/ output_folder/ -p 0.56 --live

|

|

194

215

|

```

|

|

195

216

|

|

|

196

217

|

**Example with 2 GPUs and 100 images:**

|

|

@@ -219,7 +240,7 @@ lattice-sub batch input/ output/ -p 0.56

|

|

|

219

240

|

|

|

220

241

|

**Output:**

|

|

221

242

|

```

|

|

222

|

-

Phase-preserving FFT inpainting for cryo-EM | v1.3

|

|

243

|

+

Phase-preserving FFT inpainting for cryo-EM | v1.5.3

|

|

223

244

|

|

|

224

245

|

Configuration

|

|

225

246

|

-------------

|

|

@@ -279,6 +300,23 @@ stats = processor.process_directory("raw/", "processed/")

|

|

|

279

300

|

print(f"Processed {stats.successful}/{stats.total} files")

|

|

280

301

|

```

|

|

281

302

|

|

|

303

|

+

### Live Watch Mode

|

|

304

|

+

|

|

305

|

+

```python

|

|

306

|

+

from lattice_subtraction import LiveBatchProcessor, Config

|

|

307

|

+

|

|

308

|

+

config = Config(pixel_ang=0.56)

|

|

309

|

+

processor = LiveBatchProcessor(

|

|

310

|

+

input_dir="raw/",

|

|

311

|

+

output_dir="processed/",

|

|

312

|

+

config=config,

|

|

313

|

+

num_workers=1

|

|

314

|

+

)

|

|

315

|

+

|

|

316

|

+

# Process existing files, then watch for new ones

|

|

317

|

+

processor.watch_and_process() # Runs until Ctrl+C

|

|

318

|

+

```

|

|

319

|

+

|

|

282

320

|

---

|

|

283

321

|

|

|

284

322

|

## What It Does

|

|

@@ -449,6 +487,7 @@ src/lattice_subtraction/

|

|

|

449

487

|

├── cli.py # Command-line interface

|

|

450

488

|

├── core.py # LatticeSubtractor main class

|

|

451

489

|

├── batch.py # Parallel batch processing

|

|

490

|

+

├── watch.py # Live watch mode (NEW in v1.5.0)

|

|

452

491

|

├── config.py # Configuration dataclass

|

|

453

492

|

├── io.py # MRC file I/O

|

|

454

493

|

├── masks.py # FFT mask generation

|

|
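The README's live-mode steps describe a two-phase loop: handle the backlog already in the folder, then keep picking up files as they appear. The package implements the second phase with watchdog events and a debounce timer in `watch.py`. The sketch below substitutes plain directory polling so it runs on the standard library alone. It illustrates the flow and is not the package's code. The function names are hypothetical, `max_polls` exists only so the function terminates under test (a real watcher would loop until Ctrl+C), and it does not wait for files to finish writing.

```python
import fnmatch
import time
from pathlib import Path
from typing import Callable, List, Set


def list_matching(directory: Path, pattern: str) -> Set[Path]:
    """Files directly inside `directory` whose names match the glob `pattern`."""
    return {p for p in directory.iterdir() if p.is_file() and fnmatch.fnmatch(p.name, pattern)}


def process_existing_then_new(
    directory: Path,
    pattern: str,
    handle: Callable[[Path], None],
    poll_seconds: float = 1.0,
    max_polls: int = 0,
) -> List[Path]:
    """Phase 1: handle the backlog. Phase 2: poll for files that arrive later."""
    seen: Set[Path] = set()
    handled: List[Path] = []

    # Phase 1: everything present when watching starts (the "batch first" step)
    for path in sorted(list_matching(directory, pattern)):
        handle(path)
        seen.add(path)
        handled.append(path)

    # Phase 2: diff successive directory snapshots against what was already handled
    for _ in range(max_polls):
        time.sleep(poll_seconds)
        for path in sorted(list_matching(directory, pattern) - seen):
            handle(path)
            seen.add(path)
            handled.append(path)
    return handled
```

Polling trades latency and some I/O for portability. Event-based watching, as the package does it, reacts faster but needs a stability check so that half-written micrographs are not read.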

lattice_sub-1.5.4.dist-info/RECORD

```diff
@@ -0,0 +1,18 @@
+lattice_sub-1.5.4.dist-info/licenses/LICENSE,sha256=2kPoH0cbEp0cVEGqMpyF2IQX1npxdtQmWJB__HIRSb0,1101
+lattice_subtraction/__init__.py,sha256=1pt-fhNgcIdz-IiQQ4ZAQ_lb4jqgLveUB_T077hmrxU,1828
+lattice_subtraction/batch.py,sha256=G_-sxtNT46gOr22miDcG69uOnud3x6a9hEqNv4HpfwQ,20984
+lattice_subtraction/cli.py,sha256=SvnZ2fqUqhdHe2_jkdbMlX-NYWDzyfIHkvPXwXXSNwk,28606
+lattice_subtraction/config.py,sha256=uzwKb5Zi3phHUk2ZgoiLsQdwFdN-rTiY8n02U91SObc,8426
+lattice_subtraction/core.py,sha256=VzcecSZHRuBuHUc2jHGv8LalINL75RH0aTpABI708y8,16265
+lattice_subtraction/io.py,sha256=uHku6rJ0jeCph7w-gOIDJx-xpNoF6PZcLfb5TBTOiw0,4594
+lattice_subtraction/masks.py,sha256=HIamrACmbQDkaCV4kXhnjMDSwIig4OtQFLig9A8PMO8,11741
+lattice_subtraction/processing.py,sha256=tmnj5K4Z9HCQhRpJ-iMd9Bj_uTRuvDEWyUenh8MCWEM,8341
+lattice_subtraction/threshold_optimizer.py,sha256=yEsGM_zt6YjgEulEZqtRy113xOFB69aHJIETm2xSS6k,15398
+lattice_subtraction/ui.py,sha256=-PdBSE6yF78ze85aHuGPNCziYsrQRyXQDUjYRdiTJFM,11436
+lattice_subtraction/visualization.py,sha256=noRhBXi_Xd1b5deBfVo0Bk0f3d2kqlf3_SQwAPJC0E0,12032
+lattice_subtraction/watch.py,sha256=wIXjdw0ofB71J8OvU1E5piq5BHEQG73R1VVgdzxVIow,17313
+lattice_sub-1.5.4.dist-info/METADATA,sha256=R16yRpGTZEV_JomHmfR-rivmd8RaLctljTXK9WvMGmk,16155
+lattice_sub-1.5.4.dist-info/WHEEL,sha256=wUyA8OaulRlbfwMtmQsvNngGrxQHAvkKcvRmdizlJi0,92
+lattice_sub-1.5.4.dist-info/entry_points.txt,sha256=o8PzJR8kFnXlKZufoYGBIHpiosM-P4PZeKZXJjtPS6Y,61
+lattice_sub-1.5.4.dist-info/top_level.txt,sha256=BOuW-sm4G-fQtsWPRdeLzWn0WS8sDYVNKIMj5I3JXew,20
+lattice_sub-1.5.4.dist-info/RECORD,,
```
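Each RECORD line lists a path, a hash and a size in bytes. In the wheel format the hash is written as `sha256=` followed by the URL-safe base64 encoding of the raw digest with the trailing `=` padding removed. RECORD lists itself with an empty hash and size, which is why the last line ends in `,,`. Below is a minimal sketch for building and checking such lines. The function names are hypothetical, and the sketch assumes paths without commas (RECORD is CSV, so such paths would be quoted).

```python
import base64
import hashlib


def record_entry(path: str, data: bytes) -> str:
    """One RECORD line: path,sha256=<urlsafe base64 digest, no padding>,size."""
    digest = hashlib.sha256(data).digest()
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(data)}"


def verify_entry(line: str, data: bytes) -> bool:
    """Check file contents against a RECORD line (assumes no commas in the path)."""
    path, hash_field, _size = line.rsplit(",", 2)
    if not hash_field:
        return True  # RECORD lists itself without a hash or size
    return record_entry(path, data) == line
```

For example, `verify_entry(line, Path(site_packages, path).read_bytes())` would flag an installed file that no longer matches the wheel.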

lattice_subtraction/__init__.py

CHANGED

```diff
@@ -19,7 +19,7 @@ Example:
 >>> result.save("output.mrc")
 """
 
-__version__ = "1.
+__version__ = "1.5.4"
 __author__ = "George Stephenson & Vignesh Kasinath"
 
 from .config import Config
@@ -38,10 +38,12 @@ from .visualization import (
 )
 from .processing import subtract_background_gpu
 from .ui import TerminalUI, get_ui, is_interactive
+from .watch import LiveBatchProcessor, LiveStats
 
 __all__ = [
     "LatticeSubtractor",
-    "BatchProcessor",
+    "BatchProcessor",
+    "LiveBatchProcessor",
     "Config",
     "read_mrc",
     "write_mrc",
@@ -55,5 +57,6 @@ __all__ = [
     "OptimizationResult",
     "find_optimal_threshold",
     "subtract_background_gpu",
+    "LiveStats",
     "__version__",
 ]
```

lattice_subtraction/batch.py

CHANGED

```diff
@@ -272,6 +272,19 @@ class BatchProcessor:
         available_gpus = _get_available_gpus()
 
         if len(available_gpus) > 1 and total > 1:
+            # Print GPU list
+            try:
+                import torch
+                from .ui import get_ui, Colors
+                ui = get_ui(quiet=self.config._quiet)
+                print()
+                for gpu_id in available_gpus:
+                    gpu_name = torch.cuda.get_device_name(gpu_id)
+                    print(f" {ui._colorize('✓', Colors.GREEN)} GPU {gpu_id}: {gpu_name}")
+                print()
+            except Exception:
+                pass
+
             # Multi-GPU processing
             successful, failed_files = self._process_multi_gpu(
                 file_pairs, available_gpus, show_progress
@@ -407,17 +420,6 @@ class BatchProcessor:
         total = len(file_pairs)
         num_gpus = len(gpu_ids)
 
-        # Print multi-GPU info with GPU names
-        try:
-            import torch
-            gpu_names = [torch.cuda.get_device_name(i) for i in gpu_ids]
-            print(f"✓ Using {num_gpus} GPUs: {', '.join(f'GPU {i}' for i in gpu_ids)}")
-            print("")
-            for i, name in zip(gpu_ids, gpu_names):
-                print(f" ✓ GPU {i}: {name}")
-        except Exception:
-            print(f"✓ Using {num_gpus} GPUs")
-
         # Check GPU memory on first GPU (assume similar for all)
         if file_pairs:
             try:
```

lattice_subtraction/cli.py

CHANGED

```diff
@@ -24,6 +24,7 @@ from .batch import BatchProcessor
 from .visualization import generate_visualizations, save_comparison_visualization
 from .ui import get_ui, get_gpu_name
 from .io import read_mrc
+from .watch import LiveBatchProcessor
 
 
 # CUDA version to PyTorch index URL mapping
@@ -312,9 +313,9 @@ def setup_gpu(yes: bool, force: bool):
 )
 @click.option(
     "--pixel-size", "-p",
-    type=
-    required=
-    help="Pixel size in Angstroms",
+    type=click.FloatRange(min=0.0, min_open=True),
+    required=False,
+    help="Pixel size in Angstroms (must be positive). Required unless --config provides pixel_ang.",
 )
 @click.option(
     "--threshold", "-t",
@@ -396,7 +397,23 @@ def process(
     if config:
         logger.info(f"Loading config from {config}")
         cfg = Config.from_yaml(config)
+        # Override with command-line options if provided
+        if pixel_size is not None:
+            cfg.pixel_ang = pixel_size
+        if threshold is not None:
+            cfg.threshold = threshold
+        if inside_radius != 90.0:  # Non-default value
+            cfg.inside_radius_ang = inside_radius
+        if outside_radius is not None:
+            cfg.outside_radius_ang = outside_radius
+        if cpu:
+            cfg.backend = "numpy"
     else:
+        # No config file - pixel_size is required
+        if pixel_size is None:
+            raise click.UsageError(
+                "--pixel-size is required when --config is not provided"
+            )
         # Use "auto" threshold if not specified (GPU-optimized per-image)
         thresh_value = threshold if threshold is not None else "auto"
         cfg = Config(
```
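Both `process` and `batch` now layer their settings. Values from the `--config` YAML come first, any CLI option the user actually passes overrides them, and `--pixel-size` becomes mandatory only when there is no config file. The sketch below reproduces that precedence in plain Python. `SketchConfig` and `UsageError` are hypothetical stand-ins for the package's `Config` and `click.UsageError`.

One choice differs on purpose. `process` decides whether `inside_radius` was passed by comparing it with its default of 90.0, so a user who explicitly types 90.0 looks the same as one who omitted it. Using `None` as the "not passed" default, as the sketch does for every override, removes that ambiguity. It is also an assumption that a config file without `pixel_ang` should raise the same error; the option's help text suggests this, but the branch shown only checks the no-config case.

```python
from dataclasses import dataclass
from typing import Optional, Union


class UsageError(ValueError):
    """Hypothetical stand-in for click.UsageError so the sketch runs without click."""


@dataclass
class SketchConfig:
    # Hypothetical subset of the Config fields touched in this diff
    pixel_ang: float
    threshold: Union[float, str] = "auto"
    backend: str = "auto"


def resolve_config(
    file_cfg: Optional[dict],
    pixel_size: Optional[float],
    threshold: Optional[float],
    cpu: bool,
) -> SketchConfig:
    """Config-file values form the base layer; explicitly passed CLI options win."""
    if pixel_size is not None and pixel_size <= 0:
        # Same rule as click.FloatRange(min=0.0, min_open=True)
        raise UsageError("--pixel-size must be positive")
    values = dict(file_cfg or {})
    # None means "option not given", so only real overrides are applied
    if pixel_size is not None:
        values["pixel_ang"] = pixel_size
    if threshold is not None:
        values["threshold"] = threshold
    if cpu:
        values["backend"] = "numpy"
    if "pixel_ang" not in values:
        raise UsageError("--pixel-size is required unless the config file sets pixel_ang")
    return SketchConfig(**values)
```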

```diff
@@ -461,9 +478,9 @@ def process(
 @click.argument("output_dir", type=click.Path(file_okay=False))
 @click.option(
     "--pixel-size", "-p",
-    type=
-    required=
-    help="Pixel size in Angstroms",
+    type=click.FloatRange(min=0.0, min_open=True),
+    required=False,
+    help="Pixel size in Angstroms (must be positive). Required unless --config provides pixel_ang.",
 )
 @click.option(
     "--threshold", "-t",
@@ -526,6 +543,11 @@ def process(
     is_flag=True,
     help="Force CPU processing (disable GPU auto-detection)",
 )
+@click.option(
+    "--live",
+    is_flag=True,
+    help="Watch mode: continuously monitor input directory for new files (Press Ctrl+C to stop)",
+)
 def batch(
     input_dir: str,
     output_dir: str,
@@ -541,6 +563,7 @@ def batch(
     verbose: bool,
     quiet: bool,
     cpu: bool,
+    live: bool,
 ):
     """
     Batch process a directory of micrographs.
@@ -549,6 +572,11 @@ def batch(
     INPUT_DIR: Directory containing input MRC files
     OUTPUT_DIR: Directory for processed output files
     """
+    # Validate options
+    if live and recursive:
+        click.echo("Error: --live and --recursive cannot be used together", err=True)
+        sys.exit(1)
+
     # Initialize UI
     ui = get_ui(quiet=quiet)
     ui.print_banner()
@@ -559,7 +587,19 @@ def batch(
     # Load or create config
     if config:
         cfg = Config.from_yaml(config)
+        # Override with command-line options if provided
+        if pixel_size is not None:
+            cfg.pixel_ang = pixel_size
+        if threshold is not None:
+            cfg.threshold = threshold
+        if cpu:
+            cfg.backend = "numpy"
     else:
+        # No config file - pixel_size is required
+        if pixel_size is None:
+            raise click.UsageError(
+                "--pixel-size is required when --config is not provided"
+            )
         # Use "auto" threshold if not specified (GPU-optimized per-image)
         thresh_value = threshold if threshold is not None else "auto"
         cfg = Config(
@@ -568,8 +608,87 @@ def batch(
             backend="numpy" if cpu else "auto",
         )
 
-    #
+    # Print configuration
+    gpu_name = get_gpu_name() if not cpu else None
+    ui.print_config(cfg.pixel_ang, cfg.threshold, cfg.backend, gpu_name)
+
     input_path = Path(input_dir)
+    output_path = Path(output_dir)
+
+    # LIVE WATCH MODE
+    if live:
+        logger.info(f"Starting live watch mode: {input_dir} -> {output_dir}")
+
+        # Determine number of workers
+        # For live mode, default to 1 worker to avoid GPU memory issues
+        # Files typically arrive one at a time, so parallel processing isn't needed
+        if jobs is not None:
+            num_workers = jobs
+        else:
+            num_workers = 1  # Single worker is optimal for live mode
+
+        ui.show_watch_startup(str(input_path))
+        ui.start_timer()
+
+        # Create live processor
+        live_processor = LiveBatchProcessor(
+            config=cfg,
+            output_prefix=prefix,
+            debounce_seconds=2.0,
+        )
+
+        # Start watching and processing
+        stats = live_processor.watch_and_process(
+            input_dir=input_path,
+            output_dir=output_path,
+            pattern=pattern,
+            ui=ui,
+            num_workers=num_workers,
+        )
+
+        # Print summary
+        print()  # Extra newline after counter
+        ui.print_summary(processed=stats.total_processed, failed=stats.total_failed)
+
+        if stats.total_failed > 0:
+            ui.print_warning(f"{stats.total_failed} file(s) failed to process")
+            for file_path, error in stats.failed_files[:5]:
+                ui.print_error(f"{file_path.name}: {error}")
+            if len(stats.failed_files) > 5:
+                ui.print_error(f"... and {len(stats.failed_files) - 5} more failures")
+
+        logger.info(f"Live mode complete: {stats.total_processed} processed, {stats.total_failed} failed")
+
+        # Generate visualizations if requested
+        if vis and stats.total_processed > 0:
+            ui.print_info(f"Generating visualizations in: {vis}")
+            limit_msg = f" (first {num_vis})" if num_vis else ""
+            logger.info(f"Generating visualizations{limit_msg}")
+
+            viz_success, viz_total = generate_visualizations(
+                input_dir=input_dir,
+                output_dir=output_dir,
+                viz_dir=vis,
+                prefix=prefix,
+                pattern=pattern,
+                show_progress=True,
+                limit=num_vis,
+                config=cfg,
+            )
+            logger.info(f"Visualizations: {viz_success}/{viz_total} created")
+
+        # Exit with error code if any files failed
+        if stats.total_failed > 0:
+            sys.exit(1)
+
+        return
+
+    # NORMAL BATCH MODE
+    # Count files first
+    if recursive:
+        files = list(input_path.rglob(pattern))
+    else:
+        files = list(input_path.glob(pattern))
     if recursive:
         files = list(input_path.rglob(pattern))
     else:
```
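In live mode the CLI uses a single worker unless the `jobs` option is set. It lets failed files pass without stopping, prints at most five failures followed by a count of the rest, and exits with status 1 if anything failed. For reference, `cli.py` passes `input_dir`, `output_dir`, `pattern`, `ui` and `num_workers` to `watch_and_process()` and gives the constructor only `config`, `output_prefix` and `debounce_seconds`. The worker loop itself is not part of this excerpt, so the sketch below is an independent illustration, not the package's loop: N threads drain a `Queue`, record each error, and continue. All names are hypothetical. It drains a finite queue and exits when it is empty; a live watcher would instead block on `get(timeout=...)` while a running flag is set.

```python
import threading
from dataclasses import dataclass, field
from pathlib import Path
from queue import Empty, Queue
from typing import Callable, List, Tuple


@dataclass
class RunStats:
    """Hypothetical minimal stats record for this sketch (not the package's LiveStats)."""
    processed: int = 0
    failed: List[Tuple[Path, str]] = field(default_factory=list)


def drain_queue(files: Queue, handle: Callable[[Path], None], num_workers: int = 1) -> RunStats:
    """Process queued paths on `num_workers` threads; one failure never stops the run."""
    stats = RunStats()
    lock = threading.Lock()  # guards `stats`, which every worker updates

    def worker() -> None:
        while True:
            try:
                path = files.get_nowait()
            except Empty:
                return  # queue drained, so this worker exits
            try:
                handle(path)
                with lock:
                    stats.processed += 1
            except Exception as exc:  # record the error and move on to the next file
                with lock:
                    stats.failed.append((path, str(exc)))
            finally:
                files.task_done()

    threads = [threading.Thread(target=worker) for _ in range(max(1, num_workers))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats


def failure_lines(stats: RunStats, limit: int = 5) -> List[str]:
    """First `limit` failures by file name, then a count of the remainder."""
    lines = [f"{path.name}: {error}" for path, error in stats.failed[:limit]]
    if len(stats.failed) > limit:
        lines.append(f"... and {len(stats.failed) - limit} more failures")
    return lines


def exit_status(stats: RunStats) -> int:
    """Non-zero when anything failed, like the CLI's sys.exit(1)."""
    return 1 if stats.failed else 0
```

A caller would print `failure_lines(stats)` and then call `sys.exit(exit_status(stats))`.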

lattice_subtraction/ui.py

CHANGED

```diff
@@ -11,7 +11,7 @@ suppressed to avoid polluting downstream processing.
 
 import sys
 import time
-from typing import Optional
+from typing import Optional, List
 
 
 class Colors:
@@ -230,6 +230,67 @@ class TerminalUI:
         if not self.interactive:
             return
         print(f" {self._colorize('|-', Colors.DIM)} Saved: {path}")
+
+    def show_watch_startup(self, input_dir: str) -> None:
+        """Show live watch mode startup message."""
+        if not self.interactive:
+            return
+
+        print(self._colorize(" Live Watch Mode", Colors.BOLD))
+        print(self._colorize(" ---------------", Colors.DIM))
+        print(f" Watching: {input_dir}")
+        print(f" {self._colorize('Press Ctrl+C to stop watching and finalize', Colors.YELLOW)}")
+        print()
+
+    def show_watch_stopped(self) -> None:
+        """Show message when watching is stopped."""
+        if not self.interactive:
+            return
+        print()  # New line after counter
+        print(f" {self._colorize('[STOPPED]', Colors.YELLOW)} Watch mode stopped")
+        print()
+
+    def show_live_counter_header(self) -> None:
+        """Show header for live counter display."""
+        if not self.interactive:
+            return
+        # No header needed - counter updates in place
+        pass
+
+    def update_live_counter(self, count: int, total: int, avg_time: float, latest: str) -> None:
+        """
+        Update the live processing counter in place.
+
+        Args:
+            count: Number of files processed
+            total: Total number of files in input directory
+            avg_time: Average processing time per file
+            latest: Name of most recently processed file
+        """
+        if not self.interactive:
+            return
+
+        # Format time display
+        if avg_time > 0:
+            time_str = f"{avg_time:.1f}s/file"
+        else:
+            time_str = "--s/file"
+
+        # Truncate filename if too long
+        max_filename_len = 40
+        if len(latest) > max_filename_len:
+            latest = "..." + latest[-(max_filename_len-3):]
+
+        # Build counter line with X/Y format
+        count_str = f"{self._colorize(str(count), Colors.GREEN)}/{total}"
+        counter = f" Processed: {count_str} files"
+        avg = f"Avg: {self._colorize(time_str, Colors.CYAN)}"
+        file_info = f"Latest: {latest}"
+
+        line = f"{counter} | {avg} | {file_info}"
+
+        # Print with carriage return to overwrite previous line
+        print(f"\r{line}", end="", flush=True)
 
 
 def get_ui(quiet: bool = False) -> TerminalUI:
```
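`update_live_counter` builds a single status line and reprints it after a carriage return so that the counter updates in place. Below is a plain-text sketch of the same formatting with the colors dropped. It also handles one caveat: `\r` only moves the cursor back to column 0 and does not clear the line. If a new line is shorter than the previous one, for example when a short filename follows a long one, the tail of the old text stays visible. Padding to the previous length is one simple fix. `CounterLine` is a hypothetical helper, not part of the package. With ANSI color codes included, `len()` also counts the escape characters, which only causes harmless over-padding.

```python
def format_live_counter(count: int, total: int, avg_time: float, latest: str,
                        max_filename_len: int = 40) -> str:
    """Plain-text version of the live counter line (ANSI colors omitted)."""
    time_str = f"{avg_time:.1f}s/file" if avg_time > 0 else "--s/file"
    if len(latest) > max_filename_len:
        # Keep the tail of the name, where per-micrograph numbering usually sits
        latest = "..." + latest[-(max_filename_len - 3):]
    return f" Processed: {count}/{total} files | Avg: {time_str} | Latest: {latest}"


class CounterLine:
    """Rewrites one terminal line in place."""

    def __init__(self) -> None:
        self._last_len = 0

    def render(self, line: str) -> str:
        # The carriage return moves the cursor to column 0; padding with spaces
        # erases leftovers when this line is shorter than the previous one.
        padded = line.ljust(self._last_len)
        self._last_len = len(line)
        return "\r" + padded
```

Usage: `print(counter.render(format_live_counter(n, total, avg, name)), end="", flush=True)`.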

lattice_subtraction/watch.py

```diff
@@ -0,0 +1,478 @@
+"""
+Live watch mode for processing files as they arrive.
+
+This module provides functionality for monitoring a directory and
+processing MRC files as they are created/modified, enabling real-time
+processing pipelines (e.g., from motion correction output).
+"""
+
+import logging
+import time
+import threading
+from pathlib import Path
+from typing import Set, Dict, Optional, List, Tuple
+from queue import Queue, Empty
+from dataclasses import dataclass
+
+from watchdog.observers import Observer
+from watchdog.events import FileSystemEventHandler, FileSystemEvent
+
+from .config import Config
+from .core import LatticeSubtractor
+from .io import write_mrc
+from .ui import TerminalUI
+
+logger = logging.getLogger(__name__)
+
+
+@dataclass
+class LiveStats:
+    """Statistics for live processing."""
+
+    total_processed: int = 0
+    total_failed: int = 0
+    total_time: float = 0.0
+    failed_files: List[Tuple[Path, str]] = None
+
+    def __post_init__(self):
+        if self.failed_files is None:
+            self.failed_files = []
+
+    @property
+    def average_time(self) -> float:
+        """Get average processing time per file."""
+        if self.total_processed == 0:
+            return 0.0
+        return self.total_time / self.total_processed
+
+    def add_success(self, processing_time: float):
+        """Record a successful processing."""
+        self.total_processed += 1
+        self.total_time += processing_time
+
+    def add_failure(self, file_path: Path, error: str):
+        """Record a failed processing."""
+        self.total_failed += 1
+        self.failed_files.append((file_path, error))
+
+
```
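`LiveStats` declares `failed_files` as `List[...] = None` and replaces it with a fresh list in `__post_init__`, since `dataclasses` rejects a plain `[]` default. The standard-library idiom for a per-instance mutable default is `field(default_factory=list)`, which also keeps the annotation accurate. Below is a sketch with the same fields and methods. The class name is hypothetical.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import List, Tuple


@dataclass
class LiveStatsSketch:
    """Same fields as LiveStats, using default_factory instead of None + __post_init__."""
    total_processed: int = 0
    total_failed: int = 0
    total_time: float = 0.0
    # default_factory builds a new list for every instance, so instances never share one
    failed_files: List[Tuple[Path, str]] = field(default_factory=list)

    @property
    def average_time(self) -> float:
        # Only successful files contribute time, so failures do not skew the average
        return self.total_time / self.total_processed if self.total_processed else 0.0

    def add_success(self, processing_time: float) -> None:
        self.total_processed += 1
        self.total_time += processing_time

    def add_failure(self, file_path: Path, error: str) -> None:
        self.total_failed += 1
        self.failed_files.append((file_path, error))
```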

```diff
+class MRCFileHandler(FileSystemEventHandler):
+    """
+    Handles file system events for MRC files.
+
+    Implements debouncing to ensure files are completely written before
+    processing. Files are added to a processing queue after being stable
+    for a specified duration.
+    """
+
+    def __init__(
+        self,
+        pattern: str,
+        file_queue: Queue,
+        processed_files: Set[Path],
+        processor: 'LiveBatchProcessor',
+        debounce_seconds: float = 2.0,
+    ):
+        """
+        Initialize file handler.
+
+        Args:
+            pattern: Glob pattern for matching files (e.g., "*.mrc")
+            file_queue: Queue to add detected files to
+            processed_files: Set of already processed file paths
+            processor: Parent LiveBatchProcessor for updating totals
+            debounce_seconds: Time to wait after last modification before processing
+        """
+        super().__init__()
+        self.pattern = pattern
+        self.file_queue = file_queue
+        self.processed_files = processed_files
+        self.processor = processor
+        self.debounce_seconds = debounce_seconds
+
+        # Track file modification times for debouncing
+        self._pending_files: Dict[Path, float] = {}
+        self._lock = threading.Lock()
+
+        # Start debounce checker thread
+        self._running = True
+        self._checker_thread = threading.Thread(target=self._check_pending_files, daemon=True)
+        self._checker_thread.start()
+
+    def stop(self):
+        """Stop the debounce checker thread."""
+        self._running = False
+        if self._checker_thread.is_alive():
+            self._checker_thread.join(timeout=5.0)
+
+    def _matches_pattern(self, path: Path) -> bool:
+        """Check if file matches the pattern."""
+        import fnmatch
+        return fnmatch.fnmatch(path.name, self.pattern)
+
+    def _check_pending_files(self):
+        """Background thread to check for stable files ready to process."""
+        while self._running:
+            time.sleep(0.5)  # Check every 0.5 seconds
+
+            current_time = time.time()
+            files_to_queue = []
+
+            with self._lock:
+                # Find files that are stable (no modifications for debounce_seconds)
+                for file_path, last_mod in list(self._pending_files.items()):
+                    if current_time - last_mod >= self.debounce_seconds:
+                        files_to_queue.append(file_path)
+                        del self._pending_files[file_path]
+
+            # Queue stable files
+            for file_path in files_to_queue:
+                if file_path not in self.processed_files and file_path.exists():
+                    logger.debug(f"Queueing stable file: {file_path}")
+                    self.file_queue.put(file_path)
+                    # Increment total count when new file is queued
+                    with self.processor._lock:
+                        self.processor.total_files += 1
+
+    def on_created(self, event: FileSystemEvent):
+        """Handle file creation events."""
+        if event.is_directory:
+            return
+
+        file_path = Path(event.src_path)
+
+        if self._matches_pattern(file_path) and file_path not in self.processed_files:
+            logger.debug(f"File created: {file_path}")
+            with self._lock:
+                self._pending_files[file_path] = time.time()
+
+    def on_modified(self, event: FileSystemEvent):
+        """Handle file modification events."""
+        if event.is_directory:
+            return
+
+        file_path = Path(event.src_path)
+
+        if self._matches_pattern(file_path) and file_path not in self.processed_files:
+            logger.debug(f"File modified: {file_path}")
+            with self._lock:
+                self._pending_files[file_path] = time.time()
+
+
```
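`MRCFileHandler` restarts a per-file timer on every created or modified event. A background thread, checking every 0.5 s, queues a file once no event has arrived for `debounce_seconds`. The sketch below pulls that decision out of the threads and the watchdog callbacks and takes explicit timestamps, so it can be tested without sleeping. `Debouncer` is a hypothetical name, not a class in the package.

The handler shown overrides only `on_created` and `on_modified`. watchdog delivers renames through `on_moved`, so acquisition software that writes to a temporary name and renames the file into place may need that event routed to the same timer.

```python
import fnmatch
from pathlib import Path
from typing import Dict, List


class Debouncer:
    """Releases a path once no event has been seen for it for `debounce_seconds`."""

    def __init__(self, pattern: str, debounce_seconds: float = 2.0) -> None:
        self.pattern = pattern
        self.debounce_seconds = debounce_seconds
        self._pending: Dict[Path, float] = {}  # path -> time of its latest event

    def on_event(self, path: Path, now: float) -> None:
        # Created and modified events both (re)start the quiet period
        if fnmatch.fnmatch(path.name, self.pattern):
            self._pending[path] = now

    def pop_stable(self, now: float) -> List[Path]:
        # A file is "stable" when it has been quiet for at least debounce_seconds
        stable = [p for p, t in self._pending.items() if now - t >= self.debounce_seconds]
        for p in stable:
            del self._pending[p]
        return sorted(stable)
```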

|

162

|

+

```python
class LiveBatchProcessor:
    """
    Live batch processor that watches a directory and processes files as they arrive.

    This processor monitors an input directory for new MRC files and processes them
    in real time as they are created (e.g., as motion-correction output).

    Features:
    - File debouncing: a file is queued only after `debounce_seconds` pass with no
      new create/modify event, to avoid reading files that are still being written
    - Real-time progress counter (instead of a progress bar)
    - Resilient error handling (failed files are recorded and not retried)
    - Support for multi-GPU, single-GPU, and CPU processing
    - Deferred visualization generation (after watching stops)
    """

    def __init__(
        self,
        config: Config,
        output_prefix: str = "sub_",
        debounce_seconds: float = 2.0,
    ):
        """
        Initialize live batch processor.

        Args:
            config: Processing configuration
            output_prefix: Prefix for output filenames
            debounce_seconds: Seconds without create/modify events before a file is queued
        """
        self.config = config
        self.output_prefix = output_prefix
        self.debounce_seconds = debounce_seconds

        # Processing state
        self.file_queue: Queue = Queue()
        self.processed_files: Set[Path] = set()
        self.stats = LiveStats()
        self.total_files: int = 0  # Files seen so far: pre-existing plus newly queued

        # Worker threads
        self._workers: List[threading.Thread] = []
        self._running = False
        self._lock = threading.Lock()

        # File system watcher
        self.observer: Optional[Observer] = None
        self.handler: Optional[MRCFileHandler] = None
```

```python
    def _create_subtractor(self, device_id: Optional[int] = None) -> LatticeSubtractor:
        """Create a LatticeSubtractor instance with optional device override."""
        if device_id is not None:
            # Create config copy with specific device
            from dataclasses import replace
            config = replace(self.config, device_id=device_id)
        else:
            config = self.config

        # Enable quiet mode to suppress GPU messages on each file
        config._quiet = True

        return LatticeSubtractor(config)
```
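`_create_subtractor` relies on `dataclasses.replace`, which returns a new instance with the named fields changed and leaves the original untouched. In the `else` branch no copy is made, so `config._quiet = True` is set on the shared `self.config`. The sketch shows the difference using a hypothetical `DemoConfig` dataclass as a stand-in for `Config`.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass
class DemoConfig:
    """Hypothetical stand-in for the package's Config."""
    device_id: Optional[int] = None


if __name__ == "__main__":
    shared = DemoConfig()

    # GPU branch: replace() builds a new object; `shared` is unchanged.
    per_gpu = replace(shared, device_id=1)
    per_gpu._quiet = True                       # attribute set on the copy only
    print(shared.device_id, per_gpu.device_id)  # None 1
    print(hasattr(shared, "_quiet"))            # False

    # CPU branch: no copy is made, so the flag lands on the shared object.
    cpu_config = shared
    cpu_config._quiet = True
    print(hasattr(shared, "_quiet"))            # True
```

If the shared config is reused elsewhere, the quiet flag set in the CPU branch travels with it. Copying in both branches with `replace(self.config)` would avoid this, at the cost of one extra object per file.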

```python
    def _process_worker(
        self,
        output_dir: Path,
        ui: TerminalUI,
        device_id: Optional[int] = None,
    ):
        """
        Worker thread that processes files from the queue.

        Args:
            output_dir: Output directory for processed files
            ui: Terminal UI for displaying progress
            device_id: Optional GPU device ID (for multi-GPU)
        """
        # Set CUDA device for this thread if GPU is being used
        if device_id is not None:
            import torch
            torch.cuda.set_device(device_id)
            logger.debug(f"Worker initialized on GPU {device_id}")

        while self._running:
            try:
                # Get file from queue with a timeout so the loop can re-check _running
                file_path = self.file_queue.get(timeout=0.5)
            except Empty:
                continue

            output_name = f"{self.output_prefix}{file_path.name}"
            output_path = output_dir / output_name

            subtractor = None
            try:
                # Create subtractor on demand for each file to avoid memory buildup.
                # It is built inside the try so a construction error counts as a failed
                # file and task_done() still runs (otherwise file_queue.join() in
                # _shutdown would block forever).
                subtractor = self._create_subtractor(device_id)

                # Process the file
                start_time = time.time()
                result = subtractor.process(file_path)
                result.save(output_path, pixel_size=self.config.pixel_ang)
                processing_time = time.time() - start_time

                with self._lock:
                    self.stats.add_success(processing_time)
                    self.processed_files.add(file_path)

                # Update UI counter
                ui.update_live_counter(
                    count=self.stats.total_processed,
                    total=self.total_files,
                    avg_time=self.stats.average_time,
                    latest=file_path.name,
                )

                # Don't log to console in live mode - it breaks the in-place counter update

            except Exception as e:
                with self._lock:
                    self.stats.add_failure(file_path, str(e))
                    self.processed_files.add(file_path)  # Don't retry

                logger.error(f"Failed to process {file_path.name}: {e}")

            finally:
                # Clean up memory after each file in live mode
                del subtractor
                if device_id is not None:
                    import torch
                    torch.cuda.empty_cache()

                self.file_queue.task_done()
```
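The worker loop combines three standard-library behaviours:

- `Queue.get(timeout=...)` raises `queue.Empty`, so the thread wakes up to re-check its running flag.
- Failures are recorded instead of ending the thread.
- `task_done()` sits in a `finally` block, so `Queue.join()` in `_shutdown` returns even when a file fails.

Below is a self-contained sketch of that pattern. A hypothetical `process` callable stands in for `LatticeSubtractor.process`/`save`, plain lists stand in for `LiveStats`, and a `threading.Event` replaces the bare boolean flag. The shutdown order mirrors `_shutdown`: join the queue first, then clear the flag, then join the threads.

```python
import threading
from queue import Empty, Queue
from typing import Callable, List, Tuple


def run_workers(items: List[str], process: Callable[[str], object], num_workers: int = 2):
    """Drain `items` with worker threads; return (succeeded, failed) lists."""
    q: "Queue[str]" = Queue()
    running = threading.Event()
    running.set()
    lock = threading.Lock()
    succeeded: List[str] = []
    failed: List[Tuple[str, str]] = []

    def worker() -> None:
        while running.is_set():
            try:
                item = q.get(timeout=0.1)  # wake periodically to check the flag
            except Empty:
                continue
            try:
                process(item)
                with lock:
                    succeeded.append(item)
            except Exception as exc:       # record the failure and keep going
                with lock:
                    failed.append((item, str(exc)))
            finally:
                q.task_done()              # always, or q.join() never returns

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for item in items:
        q.put(item)

    q.join()         # 1) wait until every queued item is marked done
    running.clear()  # 2) then tell the workers to exit
    for t in threads:
        t.join(timeout=5.0)
    return succeeded, failed


if __name__ == "__main__":
    def fake_process(name: str) -> None:
        if "bad" in name:
            raise ValueError("corrupt header")

    ok, bad = run_workers(["a.mrc", "bad.mrc", "b.mrc"], fake_process)
    print(sorted(ok))  # ['a.mrc', 'b.mrc']
    print(bad)         # [('bad.mrc', 'corrupt header')]
```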

```python
    def watch_and_process(
        self,
        input_dir: Path,
        output_dir: Path,
        pattern: str,
        ui: TerminalUI,
        num_workers: int = 1,
    ) -> LiveStats:
        """
        Start watching the directory and processing files as they arrive.

        Files already present are processed first with BatchProcessor; the watcher
        then handles new arrivals. This method blocks until KeyboardInterrupt
        (Ctrl+C) is received.

        Args:
            input_dir: Directory to watch for new files
            output_dir: Output directory for processed files
            pattern: Glob pattern for matching files (e.g., "*.mrc")
            ui: Terminal UI for displaying progress
            num_workers: Number of processing workers (for multi-GPU or CPU)

        Returns:
            LiveStats with processing statistics
        """
        # Create output directory
        output_dir.mkdir(parents=True, exist_ok=True)

        # Check for existing files in directory
        all_files = list(input_dir.glob(pattern))

        # If files already exist, process them with batch mode first (multi-GPU)
        if all_files:
            from .batch import BatchProcessor

            ui.print_info(f"Found {len(all_files)} existing files - processing with batch mode first")

            # Mark existing files as handled so the watcher does not queue them again
            self.processed_files.update(all_files)

            # Process with BatchProcessor (will use multi-GPU if available).
            # process_directory() is not given per-file output paths, so pass the
            # real prefix here; an empty prefix would write outputs without it.
            batch_processor = BatchProcessor(
                config=self.config,
                num_workers=num_workers,
                output_prefix=self.output_prefix,
            )

            result = batch_processor.process_directory(
                input_dir=input_dir,
                output_dir=output_dir,
                pattern=pattern,
                recursive=False,
                show_progress=True,
            )

            # Update stats with batch results
            self.stats.total_processed = result.successful
            self.stats.total_failed = result.failed
            self.total_files = len(all_files)  # Set initial total

            ui.print_info(f"Batch processing complete: {result.successful}/{result.total} files")
            print()

            # Check if any new files arrived during batch processing
            current_files = set(input_dir.glob(pattern))
            new_during_batch = current_files - set(all_files)
            if new_during_batch:
                ui.print_info(f"Found {len(new_during_batch)} files added during batch processing - queueing now")
                for file_path in new_during_batch:
                    if file_path not in self.processed_files:
                        self.file_queue.put(file_path)
                        self.total_files += 1  # Increment total for each new file
        else:
            # No existing files, start fresh
            self.total_files = 0

        # Set up file system watcher
        self.handler = MRCFileHandler(
            pattern=pattern,
            file_queue=self.file_queue,
            processed_files=self.processed_files,
            processor=self,
            debounce_seconds=self.debounce_seconds,
        )

        self.observer = Observer()
        self.observer.schedule(self.handler, str(input_dir), recursive=False)
        self.observer.start()

        # Determine GPU setup for workers
        device_ids = self._get_worker_devices(num_workers)

        # Print GPU list at startup (non-dynamic, just info)
        if device_ids and device_ids[0] is not None:
            try:
                import torch
                from .ui import Colors
                unique_gpus = sorted(set(d for d in device_ids if d is not None))
                print()
                for gpu_id in unique_gpus:
                    gpu_name = torch.cuda.get_device_name(gpu_id)
                    print(f" {ui._colorize('✓', Colors.GREEN)} GPU {gpu_id}: {gpu_name}")
                print()
            except Exception:
                pass  # Silently skip if GPU info unavailable

        # Start processing workers
        self._running = True
        for device_id in device_ids:
            worker = threading.Thread(
                target=self._process_worker,
                args=(output_dir, ui, device_id),
                daemon=True,
            )
            worker.start()
            self._workers.append(worker)

        # Show initial counter (includes files already handled by the batch pass)
        ui.show_live_counter_header()
        ui.update_live_counter(
            count=self.stats.total_processed,
            total=self.total_files,
            avg_time=0.0,
            latest="waiting...",
        )

        # Wait for interrupt
        try:
            while True:
                time.sleep(1.0)
        except KeyboardInterrupt:
            ui.show_watch_stopped()

        # Cleanup
        self._shutdown(ui)

        return self.stats
```
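Files that land in `input_dir` while the initial batch pass runs are found by taking a second glob and subtracting the first snapshot. The sketch below shows that set-difference step on a temporary directory, using a hypothetical helper `files_added_since`.

```python
import tempfile
from pathlib import Path
from typing import Iterable, Set


def files_added_since(directory: Path, pattern: str, snapshot: Iterable[Path]) -> Set[Path]:
    """Hypothetical helper: files matching `pattern` now, minus an earlier snapshot."""
    return set(directory.glob(pattern)) - set(snapshot)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        d = Path(tmp)
        (d / "mic_0001.mrc").touch()
        before = list(d.glob("*.mrc"))  # snapshot taken before the batch pass

        (d / "mic_0002.mrc").touch()    # arrives "during" the batch pass
        (d / "notes.txt").touch()       # does not match the pattern

        added = files_added_since(d, "*.mrc", before)
        print(sorted(p.name for p in added))  # ['mic_0002.mrc']
```

Both globs build their paths from the same `directory`, so plain `Path` equality is enough here. Comparing paths from different roots, such as relative and absolute forms, would need `Path.resolve()` first. As far as this hunk shows, the observer starts only after the second glob, so a file written completely in that short gap would be seen by neither mechanism.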

```python
    def _get_worker_devices(self, num_workers: int) -> List[Optional[int]]:
        """
        Determine device IDs for workers based on available GPUs.

        Args:
            num_workers: Number of worker threads to assign

        Returns:
            List of device IDs (None for CPU workers)
        """
        # Check if CPU-only mode is forced
        if self.config.backend == "numpy":
            return [None] * num_workers

        # Check for GPU availability
        try:
            import torch
            if torch.cuda.is_available():
                gpu_count = torch.cuda.device_count()

                if gpu_count > 1 and num_workers > 1:
                    # Multi-GPU: assign workers to GPUs in round-robin
                    return [i % gpu_count for i in range(num_workers)]
                else:
                    # Single GPU (or a single worker): all workers use GPU 0
                    return [0] * num_workers
        except ImportError:
            pass

        # CPU mode: all workers use None (CPU)
        return [None] * num_workers
```
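The device mapping in `_get_worker_devices` is a plain round-robin. The sketch below pulls it out as a pure function, a hypothetical `assign_devices` that takes `gpu_count` as an argument instead of reading `torch.cuda.device_count()`. This makes each branch easy to check without a GPU.

```python
from typing import List, Optional


def assign_devices(num_workers: int, gpu_count: int, force_cpu: bool = False) -> List[Optional[int]]:
    """Hypothetical mirror of the branching in _get_worker_devices; None means CPU."""
    if force_cpu or gpu_count == 0:
        return [None] * num_workers
    if gpu_count > 1 and num_workers > 1:
        # Worker i goes to GPU i mod gpu_count: 0, 1, ..., gpu_count - 1, 0, 1, ...
        return [i % gpu_count for i in range(num_workers)]
    # One GPU, or a single worker: everything on GPU 0
    return [0] * num_workers


if __name__ == "__main__":
    print(assign_devices(5, 2))                  # [0, 1, 0, 1, 0]
    print(assign_devices(3, 1))                  # [0, 0, 0]
    print(assign_devices(1, 4))                  # [0]
    print(assign_devices(2, 4, force_cpu=True))  # [None, None]
```

With fewer workers than GPUs (for example, 2 workers on 4 GPUs), only GPUs 0 and 1 receive work, so `num_workers` should be at least the GPU count to use every device.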

```python
    def _shutdown(self, ui: TerminalUI):
        """Shut down the observer and workers cleanly."""
        # Stop accepting new files
        if self.observer:
            self.observer.stop()
            self.observer.join(timeout=5.0)

        if self.handler:
            self.handler.stop()

        # Wait for queue to be processed
        ui.print_info("Processing remaining queued files...")
        self.file_queue.join()

        # Stop workers
        self._running = False
        for worker in self._workers:
            worker.join(timeout=5.0)
```

@@ -1,17 +0,0 @@
-lattice_sub-1.3.1.dist-info/licenses/LICENSE,sha256=2kPoH0cbEp0cVEGqMpyF2IQX1npxdtQmWJB__HIRSb0,1101
-lattice_subtraction/__init__.py,sha256=KL12LDW3dzz_JSBMGIo6UJ3_9vw7oooF7z7EG5P3i4Y,1737
-lattice_subtraction/batch.py,sha256=zJzvUnr8dznvxE8jaPKDLJ7AcJg8Cbfv5nVo0FzZz1I,20891
-lattice_subtraction/cli.py,sha256=W99XQClUMKaaFQxle0W-ILQ6UuYRFXZVJWD4qXpcIj4,24063
-lattice_subtraction/config.py,sha256=uzwKb5Zi3phHUk2ZgoiLsQdwFdN-rTiY8n02U91SObc,8426
-lattice_subtraction/core.py,sha256=VzcecSZHRuBuHUc2jHGv8LalINL75RH0aTpABI708y8,16265
-lattice_subtraction/io.py,sha256=uHku6rJ0jeCph7w-gOIDJx-xpNoF6PZcLfb5TBTOiw0,4594
-lattice_subtraction/masks.py,sha256=HIamrACmbQDkaCV4kXhnjMDSwIig4OtQFLig9A8PMO8,11741
-lattice_subtraction/processing.py,sha256=tmnj5K4Z9HCQhRpJ-iMd9Bj_uTRuvDEWyUenh8MCWEM,8341
-lattice_subtraction/threshold_optimizer.py,sha256=yEsGM_zt6YjgEulEZqtRy113xOFB69aHJIETm2xSS6k,15398
-lattice_subtraction/ui.py,sha256=Sp_a-yNmBRZJxll8h9T_H5-_KsI13zGYmHcbcpVpbR8,9176
-lattice_subtraction/visualization.py,sha256=noRhBXi_Xd1b5deBfVo0Bk0f3d2kqlf3_SQwAPJC0E0,12032
-lattice_sub-1.3.1.dist-info/METADATA,sha256=qN0VzkEJyNbBe_AN5tKcUXh4VjDzaL1COQCk0W83ZiM,14901
-lattice_sub-1.3.1.dist-info/WHEEL,sha256=wUyA8OaulRlbfwMtmQsvNngGrxQHAvkKcvRmdizlJi0,92
-lattice_sub-1.3.1.dist-info/entry_points.txt,sha256=o8PzJR8kFnXlKZufoYGBIHpiosM-P4PZeKZXJjtPS6Y,61

|

|

16

|

-