langchain-timbr 2.0.3__py3-none-any.whl → 2.1.0__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- langchain_timbr/_version.py +2 -2

- langchain_timbr/utils/timbr_llm_utils.py +21 -16

- langchain_timbr-2.1.0.dist-info/METADATA +213 -0

- {langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/RECORD +6 -6

- langchain_timbr-2.0.3.dist-info/METADATA +0 -163

- {langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/WHEEL +0 -0

- {langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/licenses/LICENSE +0 -0

langchain_timbr/_version.py

CHANGED

```diff
@@ -28,7 +28,7 @@ version_tuple: VERSION_TUPLE
 commit_id: COMMIT_ID
 __commit_id__: COMMIT_ID
 
-__version__ = version = '2.0
-__version_tuple__ = version_tuple = (2,
+__version__ = version = '2.1.0'
+__version_tuple__ = version_tuple = (2, 1, 0)
 
 __commit_id__ = commit_id = None
```
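Version 2.1.0 exposes `__version__ = '2.1.0'` and `__version_tuple__ = (2, 1, 0)` from `langchain_timbr/_version.py`. Code that must behave differently before and after this release (for example, around the new validation-error handling in `timbr_llm_utils.py`) can read the installed distribution's version through the standard `importlib.metadata` API instead of importing the private `_version` module. This is a minimal sketch. The helper names are hypothetical, and the comparison looks only at the leading numeric release components rather than implementing full PEP 440 ordering (`packaging.version.Version` does that).

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def release_tuple(raw: str) -> Tuple[int, ...]:
    """Leading numeric components only: '2.1.0' -> (2, 1, 0), '2.1.0rc1' -> (2, 1)."""
    parts = []
    for piece in raw.split("."):
        if not piece.isdigit():
            break  # stop at the first non-numeric segment such as '0rc1' or 'dev0'
        parts.append(int(piece))
    return tuple(parts)


def installed_timbr_release() -> Optional[Tuple[int, ...]]:
    """Release tuple of the installed langchain-timbr distribution, or None if absent."""
    try:
        return release_tuple(version("langchain-timbr"))
    except PackageNotFoundError:
        return None
```

A caller would then gate on something like `release is not None and release >= (2, 1, 0)`. Because a truncated pre-release tuple such as `(2, 1)` sorts before `(2, 1, 0)`, a 2.1.0 release candidate is treated as "not yet 2.1.0".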

langchain_timbr/utils/timbr_llm_utils.py

```diff
@@ -138,29 +138,30 @@ def _prompt_to_string(prompt: Any) -> str:
 def _calculate_token_count(llm: LLM, prompt: str) -> int:
     """
     Calculate the token count for a given prompt text using the specified LLM.
-    Falls back to
+    Falls back to basic if the LLM doesn't support token counting.
     """
+    import tiktoken
     token_count = 0
+
+    encoding = None
     try:
-        if hasattr(llm,
-
+        if hasattr(llm, 'client') and hasattr(llm.client, 'model_name'):
+            encoding = tiktoken.encoding_for_model(llm.client.model_name)
     except Exception as e:
-
+        print(f"Error with primary token counting: {e}")
         pass
 
-
-
-        try:
-            import tiktoken
+    try:
+        if encoding is None:
             encoding = tiktoken.get_encoding("cl100k_base")
-
-
-
-
-
-
-
-
+        if isinstance(prompt, str):
+            token_count = len(encoding.encode(prompt))
+        else:
+            prompt_text = _prompt_to_string(prompt)
+            token_count = len(encoding.encode(prompt_text))
+    except Exception as e2:
+        #print(f"Error calculating token count with fallback method: {e2}")
+        pass
 
     return token_count
 
```
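In 2.1.0, `_calculate_token_count` resolves a tiktoken encoding in two steps. It first tries `tiktoken.encoding_for_model(llm.client.model_name)` when the LLM object exposes `client.model_name`, and otherwise falls back to `cl100k_base`. If anything fails, the count stays at 0. The standalone sketch below reproduces that order outside the package. `count_prompt_tokens` and `example_llm` are hypothetical names, and the `str()` conversion stands in for the private `_prompt_to_string` helper, whose body the diff does not show. Unlike the diff, the sketch does not print the first error. It also guards the `tiktoken` import, which the diff leaves unguarded because `tiktoken==0.8.0` is a hard requirement.

```python
from types import SimpleNamespace
from typing import Any


def count_prompt_tokens(llm: Any, prompt: Any) -> int:
    """Count prompt tokens: model-specific encoding first, then cl100k_base, else 0."""
    try:
        # Guarded only so this standalone sketch also runs where tiktoken is missing.
        import tiktoken
    except ImportError:
        return 0

    encoding = None
    # Same attribute probe as the diff: llm.client.model_name, if present.
    model_name = getattr(getattr(llm, "client", None), "model_name", None)
    if model_name:
        try:
            # tiktoken raises KeyError for model names it cannot map to an encoding.
            encoding = tiktoken.encoding_for_model(model_name)
        except Exception:
            encoding = None

    try:
        if encoding is None:
            # Default encoding used by the diff; loading it may need network access
            # the first time, and any failure leaves the count at 0 as in the diff.
            encoding = tiktoken.get_encoding("cl100k_base")
        # Hypothetical stand-in for the package's private _prompt_to_string().
        text = prompt if isinstance(prompt, str) else str(prompt)
        return len(encoding.encode(text))
    except Exception:
        return 0


# Any object exposing .client.model_name works; SimpleNamespace stands in for an LLM.
example_llm = SimpleNamespace(client=SimpleNamespace(model_name="gpt-4"))
```

Resolving the encoding once and reusing it for both the `str` and non-`str` branches is what lets 2.1.0 drop the separate second `import tiktoken` block that the old version carried.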

```diff
@@ -179,6 +180,10 @@ def _get_response_text(response: Any) -> str:
     else:
         raise ValueError("Unexpected response format from LLM.")
 
+    if "QUESTION VALIDATION ERROR:" in response_text:
+        err = response_text.split("QUESTION VALIDATION ERROR:", 1)[1].strip()
+        raise ValueError(err)
+
     return response_text
 
 def _extract_usage_metadata(response: Any) -> dict:
```
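With the new check in `_get_response_text`, an LLM reply that contains the literal marker `QUESTION VALIDATION ERROR:` is no longer returned as text. Instead, everything after the first occurrence of the marker, stripped of surrounding whitespace, becomes the message of a `ValueError`. The sketch below mirrors that logic and shows one way a caller might handle it. `check_response_text` and `answer_or_reason` are hypothetical names, and the diff does not show how the public chains or agents surface this exception.

```python
MARKER = "QUESTION VALIDATION ERROR:"


def check_response_text(response_text: str) -> str:
    """Raise ValueError with the text after the first marker; otherwise pass the text through."""
    if MARKER in response_text:
        err = response_text.split(MARKER, 1)[1].strip()
        raise ValueError(err)
    return response_text


def answer_or_reason(response_text: str) -> str:
    """Hypothetical caller: turn a validation failure into a user-facing message."""
    try:
        return check_response_text(response_text)
    except ValueError as exc:
        return f"Question rejected: {exc}"
```

The existing "Unexpected response format from LLM." failure is also a `ValueError`, so the exception type alone does not distinguish the two cases; a caller that needs to tell them apart has to inspect the message. If nothing follows the marker, the message is empty.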

@@ -0,0 +1,213 @@

|

|

|

1

|

+

Metadata-Version: 2.4

|

|

2

|

+

Name: langchain-timbr

|

|

3

|

+

Version: 2.1.0

|

|

4

|

+

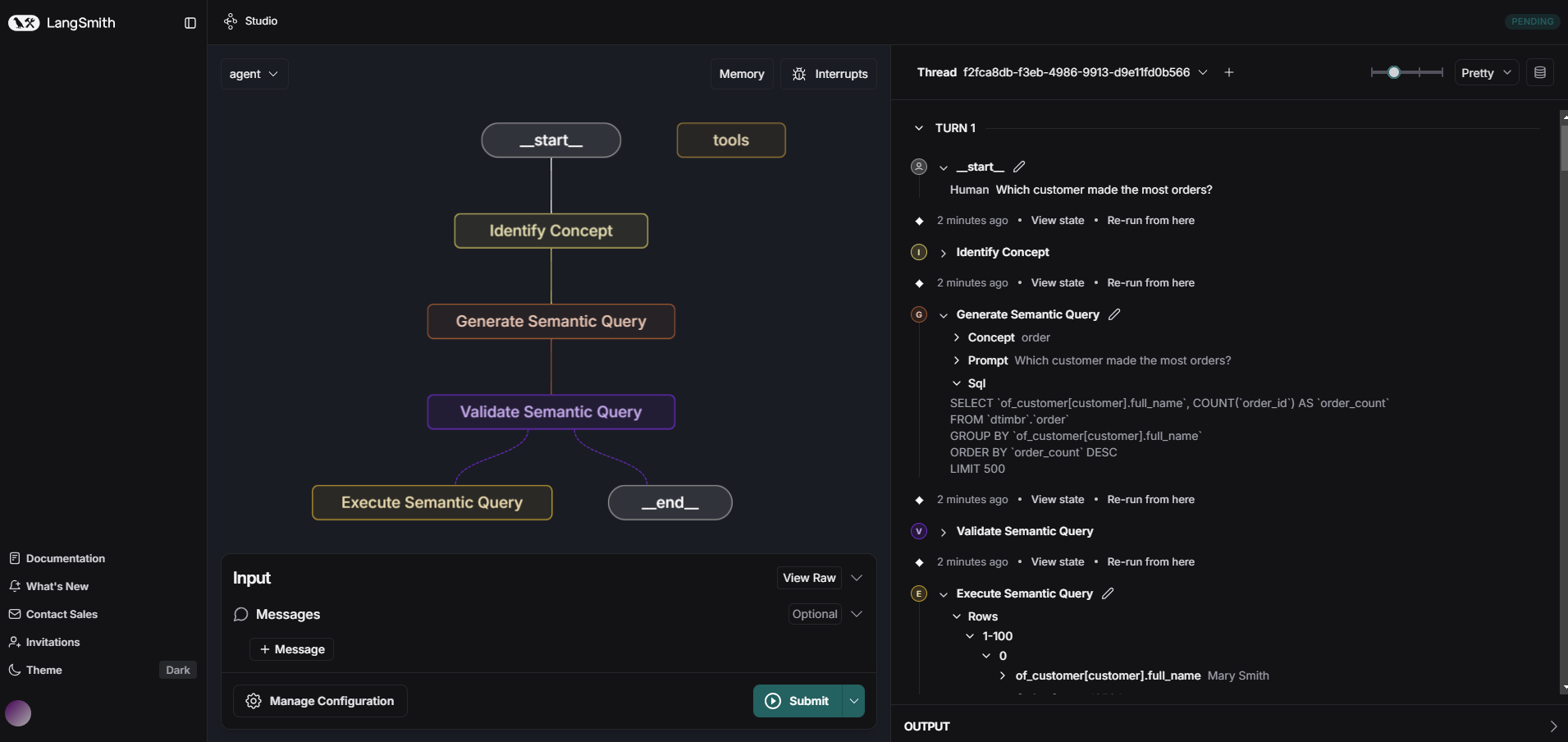

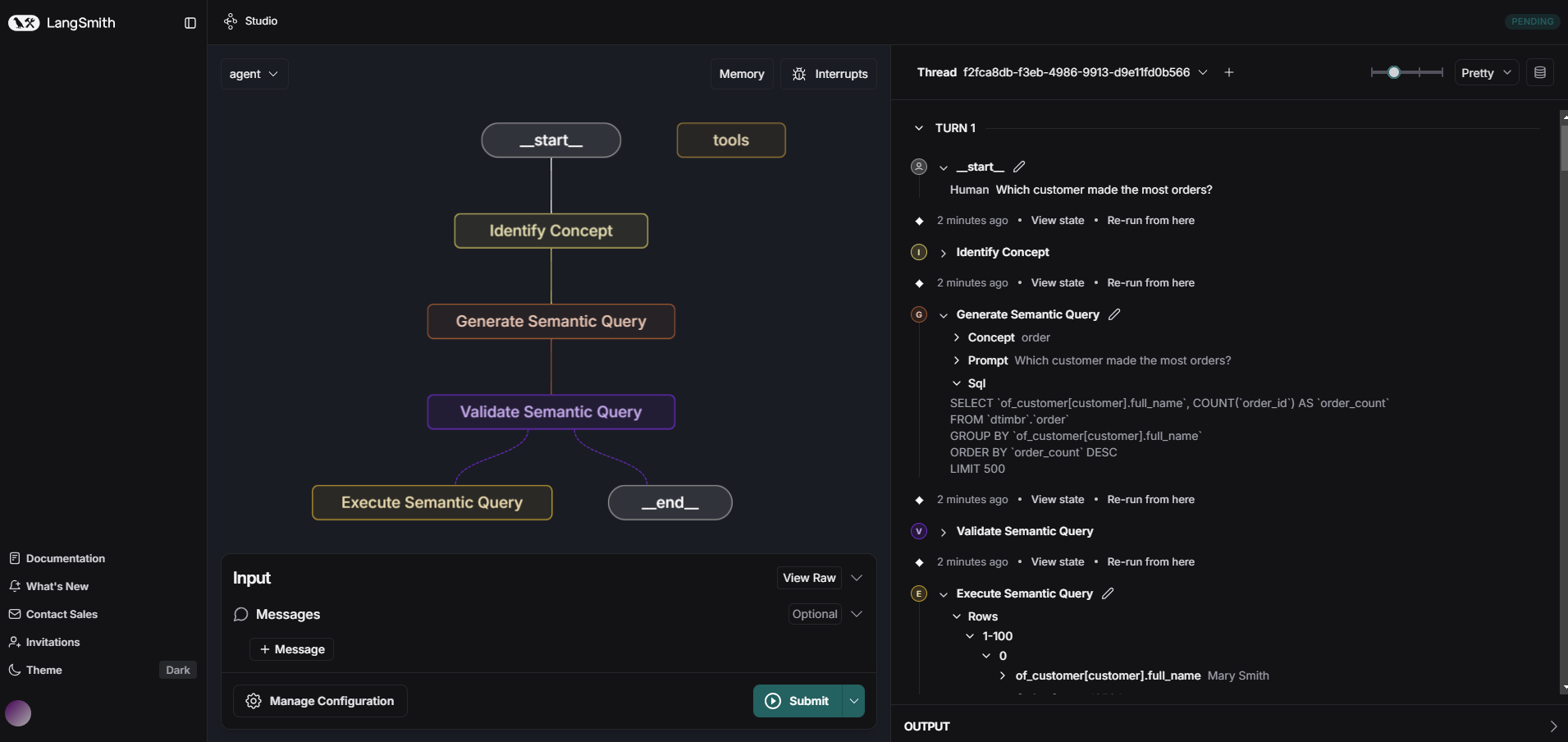

Summary: LangChain & LangGraph extensions that parse LLM prompts into Timbr semantic SQL and execute them.

|

|

5

|

+

Project-URL: Homepage, https://github.com/WPSemantix/langchain-timbr

|

|

6

|

+

Project-URL: Documentation, https://docs.timbr.ai/doc/docs/integration/langchain-sdk/

|

|

7

|

+

Project-URL: Source, https://github.com/WPSemantix/langchain-timbr

|

|

8

|

+

Project-URL: Issues, https://github.com/WPSemantix/langchain-timbr/issues

|

|

9

|

+

Author-email: "Timbr.ai" <contact@timbr.ai>

|

|

10

|

+

License: MIT

|

|

11

|

+

License-File: LICENSE

|

|

12

|

+

Keywords: Agents,Knowledge Graph,LLM,LangChain,LangGraph,SQL,Semantic Layer,Timbr

|

|

13

|

+

Classifier: Intended Audience :: Developers

|

|

14

|

+

Classifier: License :: OSI Approved :: MIT License

|

|

15

|

+

Classifier: Programming Language :: Python :: 3

|

|

16

|

+

Classifier: Programming Language :: Python :: 3 :: Only

|

|

17

|

+

Classifier: Programming Language :: Python :: 3.10

|

|

18

|

+

Classifier: Programming Language :: Python :: 3.11

|

|

19

|

+

Classifier: Programming Language :: Python :: 3.12

|

|

20

|

+

Classifier: Topic :: Scientific/Engineering :: Artificial Intelligence

|

|

21

|

+

Requires-Python: <3.13,>=3.10

|

|

22

|

+

Requires-Dist: anthropic==0.42.0

|

|

23

|

+

Requires-Dist: azure-identity==1.25.0; python_version >= '3.11'

|

|

24

|

+

Requires-Dist: azure-identity>=1.16.1; python_version == '3.10'

|

|

25

|

+

Requires-Dist: cryptography==45.0.7; python_version >= '3.11'

|

|

26

|

+

Requires-Dist: cryptography>=44.0.3; python_version == '3.10'

|

|

27

|

+

Requires-Dist: databricks-langchain==0.7.1

|

|

28

|

+

Requires-Dist: databricks-sdk==0.64.0

|

|

29

|

+

Requires-Dist: google-generativeai==0.8.4

|

|

30

|

+

Requires-Dist: langchain-anthropic==0.3.5; python_version >= '3.11'

|

|

31

|

+

Requires-Dist: langchain-anthropic>=0.3.1; python_version == '3.10'

|

|

32

|

+

Requires-Dist: langchain-community==0.3.30; python_version >= '3.11'

|

|

33

|

+

Requires-Dist: langchain-community>=0.3.20; python_version == '3.10'

|

|

34

|

+

Requires-Dist: langchain-core==0.3.78; python_version >= '3.11'

|

|

35

|

+

Requires-Dist: langchain-core>=0.3.58; python_version == '3.10'

|

|

36

|

+

Requires-Dist: langchain-google-genai==2.0.10; python_version >= '3.11'

|

|

37

|

+

Requires-Dist: langchain-google-genai>=2.0.9; python_version == '3.10'

|

|

38

|

+

Requires-Dist: langchain-google-vertexai==2.1.2; python_version >= '3.11'

|

|

39

|

+

Requires-Dist: langchain-google-vertexai>=2.0.28; python_version == '3.10'

|

|

40

|

+

Requires-Dist: langchain-openai==0.3.34; python_version >= '3.11'

|

|

41

|

+

Requires-Dist: langchain-openai>=0.3.16; python_version == '3.10'

|

|

42

|

+

Requires-Dist: langchain-tests==0.3.22; python_version >= '3.11'

|

|

43

|

+

Requires-Dist: langchain-tests>=0.3.20; python_version == '3.10'

|

|

44

|

+

Requires-Dist: langchain==0.3.27; python_version >= '3.11'

|

|

45

|

+

Requires-Dist: langchain>=0.3.25; python_version == '3.10'

|

|

46

|

+

Requires-Dist: langgraph==0.6.8; python_version >= '3.11'

|

|

47

|

+

Requires-Dist: langgraph>=0.3.20; python_version == '3.10'

|

|

48

|

+

Requires-Dist: openai==2.1.0; python_version >= '3.11'

|

|

49

|

+

Requires-Dist: openai>=1.77.0; python_version == '3.10'

|

|

50

|

+

Requires-Dist: opentelemetry-api==1.38.0; python_version == '3.10'

|

|

51

|

+

Requires-Dist: opentelemetry-sdk==1.38.0; python_version == '3.10'

|

|

52

|

+

Requires-Dist: pydantic==2.10.4

|

|

53

|

+

Requires-Dist: pytest==8.3.4

|

|

54

|

+

Requires-Dist: pytimbr-api==2.0.0; python_version >= '3.11'

|

|

55

|

+

Requires-Dist: pytimbr-api>=2.0.0; python_version == '3.10'

|

|

56

|

+

Requires-Dist: snowflake-snowpark-python==1.39.1; python_version >= '3.11'

|

|

57

|

+

Requires-Dist: snowflake-snowpark-python>=1.39.1; python_version == '3.10'

|

|

58

|

+

Requires-Dist: snowflake==1.8.0; python_version >= '3.11'

|

|

59

|

+

Requires-Dist: snowflake>=1.8.0; python_version == '3.10'

|

|

60

|

+

Requires-Dist: tiktoken==0.8.0

|

|

61

|

+

Requires-Dist: transformers==4.57.0; python_version >= '3.11'

|

|

62

|

+

Requires-Dist: transformers>=4.53; python_version == '3.10'

|

|

63

|

+

Requires-Dist: uvicorn==0.34.0

|

|

64

|

+

Provides-Extra: all

|

|

65

|

+

Requires-Dist: anthropic==0.42.0; extra == 'all'

|

|

66

|

+

Requires-Dist: azure-identity==1.25.0; (python_version >= '3.11') and extra == 'all'

|

|

67

|

+

Requires-Dist: azure-identity>=1.16.1; (python_version == '3.10') and extra == 'all'

|

|

68

|

+

Requires-Dist: databricks-langchain==0.7.1; extra == 'all'

|

|

69

|

+

Requires-Dist: databricks-sdk==0.64.0; extra == 'all'

|

|

70

|

+

Requires-Dist: google-generativeai==0.8.4; extra == 'all'

|

|

71

|

+

Requires-Dist: langchain-anthropic==0.3.5; (python_version >= '3.11') and extra == 'all'

|

|

72

|

+

Requires-Dist: langchain-anthropic>=0.3.1; (python_version == '3.10') and extra == 'all'

|

|

73

|

+

Requires-Dist: langchain-google-genai==2.0.10; (python_version >= '3.11') and extra == 'all'

|

|

74

|

+

Requires-Dist: langchain-google-genai>=2.0.9; (python_version == '3.10') and extra == 'all'

|

|

75

|

+

Requires-Dist: langchain-google-vertexai==2.1.2; (python_version >= '3.11') and extra == 'all'

|

|

76

|

+

Requires-Dist: langchain-google-vertexai>=2.0.28; (python_version == '3.10') and extra == 'all'

|

|

77

|

+

Requires-Dist: langchain-openai==0.3.34; (python_version >= '3.11') and extra == 'all'

|

|

78

|

+

Requires-Dist: langchain-openai>=0.3.16; (python_version == '3.10') and extra == 'all'

|

|

79

|

+

Requires-Dist: langchain-tests==0.3.22; (python_version >= '3.11') and extra == 'all'

|

|

80

|

+

Requires-Dist: langchain-tests>=0.3.20; (python_version == '3.10') and extra == 'all'

|

|

81

|

+

Requires-Dist: openai==2.1.0; (python_version >= '3.11') and extra == 'all'

|

|

82

|

+

Requires-Dist: openai>=1.77.0; (python_version == '3.10') and extra == 'all'

|

|

83

|

+

Requires-Dist: pytest==8.3.4; extra == 'all'

|

|

84

|

+

Requires-Dist: snowflake-snowpark-python==1.39.1; (python_version >= '3.11') and extra == 'all'

|

|

85

|

+

Requires-Dist: snowflake-snowpark-python>=1.39.1; (python_version == '3.10') and extra == 'all'

|

|

86

|

+

Requires-Dist: snowflake==1.8.0; (python_version >= '3.11') and extra == 'all'

|

|

87

|

+

Requires-Dist: snowflake>=1.8.0; (python_version == '3.10') and extra == 'all'

|

|

88

|

+

Requires-Dist: uvicorn==0.34.0; extra == 'all'

|

|

89

|

+

Provides-Extra: anthropic

|

|

90

|

+

Requires-Dist: anthropic==0.42.0; extra == 'anthropic'

|

|

91

|

+

Requires-Dist: langchain-anthropic==0.3.5; (python_version >= '3.11') and extra == 'anthropic'

|

|

92

|

+

Requires-Dist: langchain-anthropic>=0.3.1; (python_version == '3.10') and extra == 'anthropic'

|

|

93

|

+

Provides-Extra: azure-openai

|

|

94

|

+

Requires-Dist: azure-identity==1.25.0; (python_version >= '3.11') and extra == 'azure-openai'

|

|

95

|

+

Requires-Dist: azure-identity>=1.16.1; (python_version == '3.10') and extra == 'azure-openai'

|

|

96

|

+

Requires-Dist: langchain-openai==0.3.34; (python_version >= '3.11') and extra == 'azure-openai'

|

|

97

|

+

Requires-Dist: langchain-openai>=0.3.16; (python_version == '3.10') and extra == 'azure-openai'

|

|

98

|

+

Requires-Dist: openai==2.1.0; (python_version >= '3.11') and extra == 'azure-openai'

|

|

99

|

+

Requires-Dist: openai>=1.77.0; (python_version == '3.10') and extra == 'azure-openai'

|

|

100

|

+

Provides-Extra: databricks

|

|

101

|

+

Requires-Dist: databricks-langchain==0.7.1; extra == 'databricks'

|

|

102

|

+

Requires-Dist: databricks-sdk==0.64.0; extra == 'databricks'

|

|

103

|

+

Provides-Extra: dev

|

|

104

|

+

Requires-Dist: langchain-tests==0.3.22; (python_version >= '3.11') and extra == 'dev'

|

|

105

|

+

Requires-Dist: langchain-tests>=0.3.20; (python_version == '3.10') and extra == 'dev'

|

|

106

|

+

Requires-Dist: pytest==8.3.4; extra == 'dev'

|

|

107

|

+

Requires-Dist: uvicorn==0.34.0; extra == 'dev'

|

|

108

|

+

Provides-Extra: google

|

|

109

|

+

Requires-Dist: google-generativeai==0.8.4; extra == 'google'

|

|

110

|

+

Requires-Dist: langchain-google-genai==2.0.10; (python_version >= '3.11') and extra == 'google'

|

|

111

|

+

Requires-Dist: langchain-google-genai>=2.0.9; (python_version == '3.10') and extra == 'google'

|

|

112

|

+

Provides-Extra: openai

|

|

113

|

+

Requires-Dist: langchain-openai==0.3.34; (python_version >= '3.11') and extra == 'openai'

|

|

114

|

+

Requires-Dist: langchain-openai>=0.3.16; (python_version == '3.10') and extra == 'openai'

|

|

115

|

+

Requires-Dist: openai==2.1.0; (python_version >= '3.11') and extra == 'openai'

|

|

116

|

+

Requires-Dist: openai>=1.77.0; (python_version == '3.10') and extra == 'openai'

|

|

117

|

+

Provides-Extra: snowflake

|

|

118

|

+

Requires-Dist: opentelemetry-api==1.38.0; (python_version < '3.12') and extra == 'snowflake'

|

|

119

|

+

Requires-Dist: opentelemetry-sdk==1.38.0; (python_version < '3.12') and extra == 'snowflake'

|

|

120

|

+

Requires-Dist: snowflake-snowpark-python==1.39.1; (python_version >= '3.11') and extra == 'snowflake'

|

|

121

|

+

Requires-Dist: snowflake-snowpark-python>=1.39.1; (python_version == '3.10') and extra == 'snowflake'

|

|

122

|

+

Requires-Dist: snowflake==1.8.0; (python_version >= '3.11') and extra == 'snowflake'

|

|

123

|

+

Requires-Dist: snowflake>=1.8.0; (python_version == '3.10') and extra == 'snowflake'

|

|

124

|

+

Provides-Extra: vertex-ai

|

|

125

|

+

Requires-Dist: google-generativeai==0.8.4; extra == 'vertex-ai'

|

|

126

|

+

Requires-Dist: langchain-google-vertexai==2.1.2; (python_version >= '3.11') and extra == 'vertex-ai'

|

|

127

|

+

Requires-Dist: langchain-google-vertexai>=2.0.28; (python_version == '3.10') and extra == 'vertex-ai'

|

|

128

|

+

Description-Content-Type: text/markdown

|

|

129

|

+

|

|

130

|

+

|

|

131

|

+

|

|

132

|

+

[](https://app.fossa.com/projects/git%2Bgithub.com%2FWPSemantix%2Flangchain-timbr?ref=badge_shield&issueType=security)

|

|

133

|

+

[](https://app.fossa.com/projects/git%2Bgithub.com%2FWPSemantix%2Flangchain-timbr?ref=badge_shield&issueType=license)

|

|

134

|

+

|

|

135

|

+

|

|

136

|

+

[](https://www.python.org/downloads/release/python-31017/)

|

|

137

|

+

[](https://www.python.org/downloads/release/python-31112/)

|

|

138

|

+

[](https://www.python.org/downloads/release/python-3129/)

|

|

139

|

+

|

|

140

|

+

# Timbr LangChain LLM SDK

|

|

141

|

+

|

|

142

|

+

Timbr LangChain LLM SDK is a Python SDK that extends LangChain and LangGraph with custom agents, chains, and nodes for seamless integration with the Timbr semantic layer. It enables converting natural language prompts into optimized semantic-SQL queries and executing them directly against your data.

|

|

143

|

+

|

|

144

|

+

|

|

145

|

+

|

|

146

|

+

## Dependencies

|

|

147

|

+

|

|

148

|

+

- Access to a timbr-server

|

|

149

|

+

- Python 3.10 or newer

|

|

150

|

+

|

|

151

|

+

## Installation

|

|

152

|

+

|

|

153

|

+

### Using pip

|

|

154

|

+

|

|

155

|

+

```bash

|

|

156

|

+

python -m pip install langchain-timbr

|

|

157

|

+

```

|

|

158

|

+

|

|

159

|

+

### Install with selected LLM providers

|

|

160

|

+

|

|

161

|

+

#### One of: openai, anthropic, google, azure_openai, snowflake, databricks, vertex_ai (or 'all')

|

|

162

|

+

|

|

163

|

+

```bash

|

|

164

|

+

python -m pip install 'langchain-timbr[<your selected providers, separated by comma w/o space>]'

|

|

165

|

+

```

|

|

166

|

+

|

|

167

|

+

### Using pip from github

|

|

168

|

+

|

|

169

|

+

```bash

|

|

170

|

+

pip install git+https://github.com/WPSemantix/langchain-timbr

|

|

171

|

+

```

|

|

172

|

+

|

|

173

|

+

## Documentation

|

|

174

|

+

|

|

175

|

+

For comprehensive documentation and usage examples, please visit:

|

|

176

|

+

|

|

177

|

+

- [Timbr LangChain Documentation](https://docs.timbr.ai/doc/docs/integration/langchain-sdk)

|

|

178

|

+

- [Timbr LangGraph Documentation](https://docs.timbr.ai/doc/docs/integration/langgraph-sdk)

|

|

179

|

+

|

|

180

|

+

## Configuration

|

|

181

|

+

|

|

182

|

+

The SDK uses environment variables for configuration. All configurations are optional - when set, they serve as default values for `langchain-timbr` provided tools. Below are all available configuration options:

|

|

183

|

+

|

|

184

|

+

### Configuration Options

|

|

185

|

+

|

|

186

|

+

#### Timbr Connection Settings

|

|

187

|

+

|

|

188

|

+

- **`TIMBR_URL`** - The URL of your Timbr server

|

|

189

|

+

- **`TIMBR_TOKEN`** - Authentication token for accessing the Timbr server

|

|

190

|

+

- **`TIMBR_ONTOLOGY`** - The ontology to use (also accepts `ONTOLOGY` as an alias)

|

|

191

|

+

- **`IS_JWT`** - Whether the token is a JWT token (true/false)

|

|

192

|

+

- **`JWT_TENANT_ID`** - Tenant ID for JWT authentication

|

|

193

|

+

|

|

194

|

+

#### Cache and Data Processing

|

|

195

|

+

|

|

196

|

+

- **`CACHE_TIMEOUT`** - Timeout for caching operations in seconds

|

|

197

|

+

- **`IGNORE_TAGS`** - Comma-separated list of tags to ignore during processing

|

|

198

|

+

- **`IGNORE_TAGS_PREFIX`** - Comma-separated list of tag prefixes to ignore during processing

|

|

199

|

+

|

|

200

|

+

#### LLM Configuration

|

|

201

|

+

|

|

202

|

+

- **`LLM_TYPE`** - The type of LLM provider to use

|

|

203

|

+

- **`LLM_MODEL`** - The specific model to use with the LLM provider

|

|

204

|

+

- **`LLM_API_KEY`** - API key or client secret for the LLM provider

|

|

205

|

+

- **`LLM_TEMPERATURE`** - Temperature setting for LLM responses (controls randomness)

|

|

206

|

+

- **`LLM_ADDITIONAL_PARAMS`** - Additional parameters to pass to the LLM

|

|

207

|

+

- **`LLM_TIMEOUT`** - Timeout for LLM requests in seconds

|

|

208

|

+

- **`LLM_TENANT_ID`** - LLM provider tenant/directory ID (Used for Service Principal authentication)

|

|

209

|

+

- **`LLM_CLIENT_ID`** - LLM provider client ID (Used for Service Principal authentication)

|

|

210

|

+

- **`LLM_CLIENT_SECRET`** - LLM provider client secret (Used for Service Principal authentication)

|

|

211

|

+

- **`LLM_ENDPOINT`** - LLM provider OpenAI endpoint URL

|

|

212

|

+

- **`LLM_API_VERSION`** - LLM provider API version

|

|

213

|

+

- **`LLM_SCOPE`** - LLM provider authentication scope

|

|
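The README shipped in this METADATA documents every setting as an optional environment variable that supplies defaults for `langchain-timbr` tools. The sketch below collects a subset of them into a plain dictionary, which can help confirm what a process will pick up before any tool is constructed. The function name, the dictionary keys, the precedence of `TIMBR_ONTOLOGY` over its `ONTOLOGY` alias, and the type conversions (integer seconds, float temperature, `true`/`false` parsing for `IS_JWT`) are all assumptions made for illustration. This is not the SDK's own `config.py`, whose contents the diff does not show.

```python
import os
from typing import Any, Dict, List, Mapping, Optional


def _csv_list(value: Optional[str]) -> List[str]:
    """Split a comma-separated setting, dropping blanks: 'a, b,,' -> ['a', 'b']."""
    if not value:
        return []
    return [item.strip() for item in value.split(",") if item.strip()]


def _flag(value: Optional[str]) -> bool:
    """Treat 'true', '1' or 'yes' (any case) as True; anything else, or unset, as False."""
    return (value or "").strip().lower() in {"true", "1", "yes"}


def _number(value: Optional[str], kind: type) -> Optional[Any]:
    """Convert a numeric setting with int or float; unset or empty stays None."""
    return kind(value) if value else None


def read_timbr_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Hypothetical helper: read a subset of the documented variables (default: os.environ)."""
    env = os.environ if env is None else env
    return {
        # Timbr connection settings
        "url": env.get("TIMBR_URL"),
        "token": env.get("TIMBR_TOKEN"),
        "ontology": env.get("TIMBR_ONTOLOGY") or env.get("ONTOLOGY"),  # documented alias
        "is_jwt": _flag(env.get("IS_JWT")),
        "jwt_tenant_id": env.get("JWT_TENANT_ID"),
        # Cache and data processing
        "cache_timeout": _number(env.get("CACHE_TIMEOUT"), int),
        "ignore_tags": _csv_list(env.get("IGNORE_TAGS")),
        "ignore_tags_prefix": _csv_list(env.get("IGNORE_TAGS_PREFIX")),
        # LLM configuration
        "llm_type": env.get("LLM_TYPE"),
        "llm_model": env.get("LLM_MODEL"),
        "llm_temperature": _number(env.get("LLM_TEMPERATURE"), float),
        "llm_timeout": _number(env.get("LLM_TIMEOUT"), int),
    }
```

Passing an explicit mapping instead of relying on `os.environ` keeps the check side-effect free and makes it easy to compare, for example, a `.env` file against what is actually exported.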

{langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/RECORD

```diff
@@ -1,5 +1,5 @@
 langchain_timbr/__init__.py,sha256=gxd6Y6QDmYZtPlYVdXtPIy501hMOZXHjWh2qq4qzt_s,828
-langchain_timbr/_version.py,sha256=
+langchain_timbr/_version.py,sha256=6G8yJbldQAMT0M9ZiFAqGo5OVqUMxGB1aeWeKmrwNIE,704
 langchain_timbr/config.py,sha256=PEtvNgvnA9UseZJjKgup_O6xdG-VYk3N11nH8p8W1Kg,1410
 langchain_timbr/timbr_llm_connector.py,sha256=1jDicBZkW7CKB-PvQiQ1_AMXYm9JJHaoNaPqy54nhh8,13096
 langchain_timbr/langchain/__init__.py,sha256=ejcsZKP9PK0j4WrrCCcvBXpDpP-TeRiVb21OIUJqix8,580
@@ -20,9 +20,9 @@ langchain_timbr/llm_wrapper/timbr_llm_wrapper.py,sha256=sDqDOz0qu8b4WWlagjNceswM
 langchain_timbr/utils/general.py,sha256=MtY-ZExKJrcBzV3EQNn6G1ESKpiQB2hJCp95BrUayUo,5707
 langchain_timbr/utils/prompt_service.py,sha256=QT7kiq72rQno77z1-tvGGD7HlH_wdTQAl_1teSoKEv4,11373
 langchain_timbr/utils/temperature_supported_models.json,sha256=d3UmBUpG38zDjjB42IoGpHTUaf0pHMBRSPY99ao1a3g,1832
-langchain_timbr/utils/timbr_llm_utils.py,sha256=
+langchain_timbr/utils/timbr_llm_utils.py,sha256=g8bHzymnwmrnffYh0KMQ7j2hGvYcbjAqQInZfE9b5io,23122
 langchain_timbr/utils/timbr_utils.py,sha256=p21DwTGhF4iKTLDQBkeBaJDFcXt-Hpu1ij8xzQt00Ng,16958
-langchain_timbr-2.0.
-langchain_timbr-2.0.
-langchain_timbr-2.0.
-langchain_timbr-2.0.
+langchain_timbr-2.1.0.dist-info/METADATA,sha256=RwwECPHKJAGuRb9PcaSVfeMPgbGjudLV6xMjDH0sRQU,12268
+langchain_timbr-2.1.0.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87
+langchain_timbr-2.1.0.dist-info/licenses/LICENSE,sha256=0ITGFk2alkC7-e--bRGtuzDrv62USIiVyV2Crf3_L_0,1065
+langchain_timbr-2.1.0.dist-info/RECORD,,
```
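Each RECORD line has the form `path,sha256=<digest>,<size>`. The digest is the file's SHA-256 hash in URL-safe base64 with the trailing `=` padding removed, and RECORD lists itself with empty hash and size fields (as in `langchain_timbr-2.1.0.dist-info/RECORD,,`). The changed entries above can therefore be re-derived from the file bytes in an unpacked wheel. The sketch below does this with the standard library. The helper names are hypothetical, and `record_line` applies no CSV quoting, so it assumes comma-free paths; parsing goes through the `csv` module.

```python
import base64
import csv
import hashlib


def record_hash(data: bytes) -> str:
    """'sha256=' + URL-safe base64 of the SHA-256 digest, '=' padding stripped."""
    digest = hashlib.sha256(data).digest()
    return "sha256=" + base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def record_line(path: str, data: bytes) -> str:
    """Build a RECORD entry for one file (no CSV quoting: assumes no commas in path)."""
    return f"{path},{record_hash(data)},{len(data)}"


def matches_record(line: str, data: bytes) -> bool:
    """Check file bytes against one RECORD line."""
    _path, hash_field, size_field = next(csv.reader([line]))
    if not hash_field:
        # RECORD cannot contain its own hash, so it is listed as 'path,,'
        return True
    if size_field and int(size_field) != len(data):
        return False
    return hash_field == record_hash(data)
```

For example, calling `matches_record` with the `_version.py` line above and the bytes of `langchain_timbr/_version.py` taken from the 2.1.0 wheel should return `True` if the file is intact (the recorded size is 704 bytes).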

langchain_timbr-2.0.3.dist-info/METADATA

```diff
@@ -1,163 +0,0 @@
-Metadata-Version: 2.4
-Name: langchain-timbr
-Version: 2.0.3
-Summary: LangChain & LangGraph extensions that parse LLM prompts into Timbr semantic SQL and execute them.
-Project-URL: Homepage, https://github.com/WPSemantix/langchain-timbr
-Project-URL: Documentation, https://docs.timbr.ai/doc/docs/integration/langchain-sdk/
-Project-URL: Source, https://github.com/WPSemantix/langchain-timbr
-Project-URL: Issues, https://github.com/WPSemantix/langchain-timbr/issues
-Author-email: "Timbr.ai" <contact@timbr.ai>
-License: MIT
-License-File: LICENSE
-Keywords: Agents,Knowledge Graph,LLM,LangChain,LangGraph,SQL,Semantic Layer,Timbr
-Classifier: Intended Audience :: Developers
-Classifier: License :: OSI Approved :: MIT License
-Classifier: Programming Language :: Python :: 3
-Classifier: Programming Language :: Python :: 3 :: Only
-Classifier: Programming Language :: Python :: 3.9
-Classifier: Programming Language :: Python :: 3.10
-Classifier: Programming Language :: Python :: 3.11
-Classifier: Programming Language :: Python :: 3.12
-Classifier: Topic :: Scientific/Engineering :: Artificial Intelligence
-Requires-Python: <3.13,>=3.9
-Requires-Dist: cryptography>=44.0.3
-Requires-Dist: langchain-community>=0.3.20
-Requires-Dist: langchain-core>=0.3.58
-Requires-Dist: langchain>=0.3.25
-Requires-Dist: langgraph>=0.3.20
-Requires-Dist: pydantic==2.10.4
-Requires-Dist: pytimbr-api>=2.0.0
-Requires-Dist: tiktoken==0.8.0
-Requires-Dist: transformers>=4.53
-Provides-Extra: all
-Requires-Dist: anthropic==0.42.0; extra == 'all'
-Requires-Dist: azure-identity>=1.16.1; extra == 'all'
-Requires-Dist: databricks-langchain==0.3.0; (python_version < '3.10') and extra == 'all'
-Requires-Dist: databricks-langchain==0.7.1; (python_version >= '3.10') and extra == 'all'
-Requires-Dist: databricks-sdk==0.64.0; extra == 'all'
-Requires-Dist: google-generativeai==0.8.4; extra == 'all'
-Requires-Dist: langchain-anthropic>=0.3.1; extra == 'all'
-Requires-Dist: langchain-google-genai>=2.0.9; extra == 'all'
-Requires-Dist: langchain-google-vertexai>=2.0.28; extra == 'all'
-Requires-Dist: langchain-openai>=0.3.16; extra == 'all'
-Requires-Dist: langchain-tests>=0.3.20; extra == 'all'
-Requires-Dist: openai>=1.77.0; extra == 'all'
-Requires-Dist: pyarrow<20.0.0,>=19.0.1; extra == 'all'
-Requires-Dist: pytest==8.3.4; extra == 'all'
-Requires-Dist: snowflake-snowpark-python>=1.6.0; extra == 'all'
-Requires-Dist: snowflake>=0.8.0; extra == 'all'
-Requires-Dist: uvicorn==0.34.0; extra == 'all'
-Provides-Extra: anthropic
-Requires-Dist: anthropic==0.42.0; extra == 'anthropic'
-Requires-Dist: langchain-anthropic>=0.3.1; extra == 'anthropic'
-Provides-Extra: azure-openai
-Requires-Dist: azure-identity>=1.16.1; extra == 'azure-openai'
-Requires-Dist: langchain-openai>=0.3.16; extra == 'azure-openai'
-Requires-Dist: openai>=1.77.0; extra == 'azure-openai'
-Provides-Extra: databricks
-Requires-Dist: databricks-langchain==0.3.0; (python_version < '3.10') and extra == 'databricks'
-Requires-Dist: databricks-langchain==0.7.1; (python_version >= '3.10') and extra == 'databricks'
-Requires-Dist: databricks-sdk==0.64.0; extra == 'databricks'
-Provides-Extra: dev
-Requires-Dist: langchain-tests>=0.3.20; extra == 'dev'
-Requires-Dist: pyarrow<20.0.0,>=19.0.1; extra == 'dev'
-Requires-Dist: pytest==8.3.4; extra == 'dev'
-Requires-Dist: uvicorn==0.34.0; extra == 'dev'
-Provides-Extra: google
-Requires-Dist: google-generativeai==0.8.4; extra == 'google'
-Requires-Dist: langchain-google-genai>=2.0.9; extra == 'google'
-Provides-Extra: openai
-Requires-Dist: langchain-openai>=0.3.16; extra == 'openai'
-Requires-Dist: openai>=1.77.0; extra == 'openai'
-Provides-Extra: snowflake
-Requires-Dist: snowflake-snowpark-python>=1.6.0; extra == 'snowflake'
-Requires-Dist: snowflake>=0.8.0; extra == 'snowflake'
-Provides-Extra: vertex-ai
-Requires-Dist: google-generativeai==0.8.4; extra == 'vertex-ai'
-Requires-Dist: langchain-google-vertexai>=2.0.28; extra == 'vertex-ai'
-Description-Content-Type: text/markdown
-
-
-
-[](https://app.fossa.com/projects/git%2Bgithub.com%2FWPSemantix%2Flangchain-timbr?ref=badge_shield&issueType=security)
-[](https://app.fossa.com/projects/git%2Bgithub.com%2FWPSemantix%2Flangchain-timbr?ref=badge_shield&issueType=license)
-
-[](https://www.python.org/downloads/release/python-3921/)
-[](https://www.python.org/downloads/release/python-31017/)
-[](https://www.python.org/downloads/release/python-31112/)
-[](https://www.python.org/downloads/release/python-3129/)
-
-# Timbr LangChain LLM SDK
-
-Timbr LangChain LLM SDK is a Python SDK that extends LangChain and LangGraph with custom agents, chains, and nodes for seamless integration with the Timbr semantic layer. It enables converting natural language prompts into optimized semantic-SQL queries and executing them directly against your data.
-
-
-
-## Dependencies
-
-- Access to a timbr-server
-- Python 3.9.13 or newer
-
-## Installation
-
-### Using pip
-
-```bash
-python -m pip install langchain-timbr
-```
-
-### Install with selected LLM providers
-
-#### One of: openai, anthropic, google, azure_openai, snowflake, databricks, vertex_ai (or 'all')
-
-```bash
-python -m pip install 'langchain-timbr[<your selected providers, separated by comma w/o space>]'
-```
-
-### Using pip from github
-
-```bash
-pip install git+https://github.com/WPSemantix/langchain-timbr
-```
-
-## Documentation
-
-For comprehensive documentation and usage examples, please visit:
-
-- [Timbr LangChain Documentation](https://docs.timbr.ai/doc/docs/integration/langchain-sdk)
-- [Timbr LangGraph Documentation](https://docs.timbr.ai/doc/docs/integration/langgraph-sdk)
-
-## Configuration
-
-The SDK uses environment variables for configuration. All configurations are optional - when set, they serve as default values for `langchain-timbr` provided tools. Below are all available configuration options:
-
-### Configuration Options
-
-#### Timbr Connection Settings
-
-- **`TIMBR_URL`** - The URL of your Timbr server
-- **`TIMBR_TOKEN`** - Authentication token for accessing the Timbr server
-- **`TIMBR_ONTOLOGY`** - The ontology to use (also accepts `ONTOLOGY` as an alias)
-- **`IS_JWT`** - Whether the token is a JWT token (true/false)
-- **`JWT_TENANT_ID`** - Tenant ID for JWT authentication
-
-#### Cache and Data Processing
-
-- **`CACHE_TIMEOUT`** - Timeout for caching operations in seconds
-- **`IGNORE_TAGS`** - Comma-separated list of tags to ignore during processing
-- **`IGNORE_TAGS_PREFIX`** - Comma-separated list of tag prefixes to ignore during processing
-
-#### LLM Configuration
-
-- **`LLM_TYPE`** - The type of LLM provider to use
-- **`LLM_MODEL`** - The specific model to use with the LLM provider
-- **`LLM_API_KEY`** - API key or client secret for the LLM provider
-- **`LLM_TEMPERATURE`** - Temperature setting for LLM responses (controls randomness)
-- **`LLM_ADDITIONAL_PARAMS`** - Additional parameters to pass to the LLM
-- **`LLM_TIMEOUT`** - Timeout for LLM requests in seconds
-- **`LLM_TENANT_ID`** - LLM provider tenant/directory ID (Used for Service Principal authentication)
-- **`LLM_CLIENT_ID`** - LLM provider client ID (Used for Service Principal authentication)
-- **`LLM_CLIENT_SECRET`** - LLM provider client secret (Used for Service Principal authentication)
-- **`LLM_ENDPOINT`** - LLM provider OpenAI endpoint URL
-- **`LLM_API_VERSION`** - LLM provider API version
-- **`LLM_SCOPE`** - LLM provider authentication scope
```

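{langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/WHEEL

File without changes

{langchain_timbr-2.0.3.dist-info → langchain_timbr-2.1.0.dist-info}/licenses/LICENSE

File without changes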