hud-python 0.4.35__py3-none-any.whl → 0.4.37__py3-none-any.whl


Potentially problematic release.

This version of hud-python might be problematic.

- hud/agents/__init__.py +2 -0

- hud/agents/lite_llm.py +72 -0

- hud/agents/openai_chat_generic.py +21 -7

- hud/agents/tests/test_claude.py +32 -7

- hud/agents/tests/test_openai.py +29 -6

- hud/cli/__init__.py +228 -79

- hud/cli/build.py +26 -6

- hud/cli/dev.py +21 -40

- hud/cli/eval.py +96 -15

- hud/cli/flows/tasks.py +198 -65

- hud/cli/init.py +222 -629

- hud/cli/pull.py +6 -0

- hud/cli/push.py +11 -1

- hud/cli/rl/__init__.py +14 -4

- hud/cli/rl/celebrate.py +187 -0

- hud/cli/rl/config.py +15 -8

- hud/cli/rl/local_runner.py +44 -20

- hud/cli/rl/remote_runner.py +166 -87

- hud/cli/rl/viewer.py +141 -0

- hud/cli/rl/wait_utils.py +89 -0

- hud/cli/tests/test_build.py +3 -27

- hud/cli/tests/test_mcp_server.py +1 -12

- hud/cli/utils/config.py +85 -0

- hud/cli/utils/docker.py +21 -39

- hud/cli/utils/env_check.py +196 -0

- hud/cli/utils/environment.py +4 -3

- hud/cli/utils/interactive.py +2 -1

- hud/cli/utils/local_runner.py +204 -0

- hud/cli/utils/metadata.py +3 -1

- hud/cli/utils/package_runner.py +292 -0

- hud/cli/utils/remote_runner.py +4 -1

- hud/cli/utils/source_hash.py +108 -0

- hud/clients/base.py +1 -1

- hud/clients/fastmcp.py +1 -1

- hud/clients/mcp_use.py +30 -7

- hud/datasets/parallel.py +3 -1

- hud/datasets/runner.py +4 -1

- hud/otel/config.py +1 -1

- hud/otel/context.py +40 -6

- hud/rl/buffer.py +3 -0

- hud/rl/tests/test_learner.py +1 -1

- hud/rl/vllm_adapter.py +1 -1

- hud/server/server.py +234 -7

- hud/server/tests/test_add_tool.py +60 -0

- hud/server/tests/test_context.py +128 -0

- hud/server/tests/test_mcp_server_handlers.py +44 -0

- hud/server/tests/test_mcp_server_integration.py +405 -0

- hud/server/tests/test_mcp_server_more.py +247 -0

- hud/server/tests/test_run_wrapper.py +53 -0

- hud/server/tests/test_server_extra.py +166 -0

- hud/server/tests/test_sigterm_runner.py +78 -0

- hud/settings.py +38 -0

- hud/shared/hints.py +2 -2

- hud/telemetry/job.py +2 -2

- hud/types.py +9 -2

- hud/utils/tasks.py +32 -24

- hud/utils/tests/test_version.py +1 -1

- hud/version.py +1 -1

- {hud_python-0.4.35.dist-info → hud_python-0.4.37.dist-info}/METADATA +43 -23

- {hud_python-0.4.35.dist-info → hud_python-0.4.37.dist-info}/RECORD +63 -46

- {hud_python-0.4.35.dist-info → hud_python-0.4.37.dist-info}/WHEEL +0 -0

- {hud_python-0.4.35.dist-info → hud_python-0.4.37.dist-info}/entry_points.txt +0 -0

- {hud_python-0.4.35.dist-info → hud_python-0.4.37.dist-info}/licenses/LICENSE +0 -0

@@ -0,0 +1,166 @@
+# filename: hud/server/tests/test_server_extra.py
+from __future__ import annotations
+
+import asyncio
+from contextlib import asynccontextmanager, suppress
+
+import anyio
+import pytest
+
+from hud.server import MCPServer
+from hud.server import server as server_mod
+
+
+@asynccontextmanager
+async def _fake_stdio_server():
+    """
+    Stand-in for stdio_server that avoids reading real stdin.
+
+    It yields a pair of in-memory streams (receive, send) so the low-level server
+    can start and idle without touching sys.stdin/sys.stdout.
+    """
+    send_in, recv_in = anyio.create_memory_object_stream(100)
+    send_out, recv_out = anyio.create_memory_object_stream(100)
+    try:
+        yield recv_in, send_out
+    finally:
+        # best-effort close across anyio versions
+        for s in (send_in, recv_in, send_out, recv_out):
+            close = getattr(s, "close", None) or getattr(s, "aclose", None)
+            try:
+                if close is not None:
+                    res = close()
+                    if asyncio.iscoroutine(res):
+                        await res
+            except Exception:
+                pass
+
+
+@pytest.fixture
+def patch_stdio(monkeypatch: pytest.MonkeyPatch):
+    """Patch stdio server to avoid stdin issues during tests."""
+    monkeypatch.setenv("FASTMCP_DISABLE_BANNER", "1")
+    monkeypatch.setattr("mcp.server.stdio.stdio_server", _fake_stdio_server)
+    monkeypatch.setattr("fastmcp.server.server.stdio_server", _fake_stdio_server)
+
+
+@pytest.mark.asyncio
+async def test_sigterm_flag_remains_true_without_shutdown_handler(patch_stdio):
+    """
+    When no @mcp.shutdown is registered, neither the lifespan.finally nor run_async.finally
+    should reset the global SIGTERM flag. This exercises the 'no handler' branches.
+    """
+    mcp = MCPServer(name="NoShutdownHandler")
+
+    task = asyncio.create_task(mcp.run_async(transport="stdio", show_banner=False))
+    try:
+        await asyncio.sleep(0.05)
+        # Simulate SIGTERM path
+        server_mod._sigterm_received = True  # type: ignore[attr-defined]
+    finally:
+        with suppress(asyncio.CancelledError):
+            task.cancel()
+            await task
+
+    # Flag must remain set since no shutdown handler was installed
+    assert getattr(server_mod, "_sigterm_received") is True
+
+    # Always reset for other tests
+    server_mod._sigterm_received = False  # type: ignore[attr-defined]
+
+
+@pytest.mark.asyncio
+async def test_last_shutdown_handler_wins(patch_stdio):
+    """
+    If multiple @mcp.shutdown decorators are applied, the last one should be the one that runs.
+    """
+    mcp = MCPServer(name="ShutdownOverride")
+    calls: list[str] = []
+
+    @mcp.shutdown
+    async def _first() -> None:
+        calls.append("first")
+
+    @mcp.shutdown
+    async def _second() -> None:
+        calls.append("second")
+
+    task = asyncio.create_task(mcp.run_async(transport="stdio", show_banner=False))
+    try:
+        await asyncio.sleep(0.05)
+        server_mod._sigterm_received = True  # type: ignore[attr-defined]
+    finally:
+        with suppress(asyncio.CancelledError):
+            task.cancel()
+            await task
+
+    assert calls == ["second"], "Only the last registered shutdown handler should run"
+    server_mod._sigterm_received = False  # type: ignore[attr-defined]
+
+
+def test__run_with_sigterm_registers_handlers_when_enabled(monkeypatch: pytest.MonkeyPatch):
+    """
+    Verify that _run_with_sigterm attempts to register SIGTERM/SIGINT handlers
+    when the env var does NOT disable the handler. We stub AnyIO's TaskGroup so
+    the watcher doesn't block and the test returns immediately.
+    """
+    # Ensure handler is enabled
+    monkeypatch.delenv("FASTMCP_DISABLE_SIGTERM_HANDLER", raising=False)
+
+    # Record what the server tries to register
+    added_signals: list[int] = []
+
+    import asyncio as _asyncio
+
+    orig_get_running_loop = _asyncio.get_running_loop
+
+    def proxy_get_running_loop():
+        real = orig_get_running_loop()
+
+        class _LoopProxy:
+            __slots__ = ("_inner",)
+
+            def __init__(self, inner):
+                self._inner = inner
+
+            def add_signal_handler(self, signum, callback, *args):
+                added_signals.append(signum)  # don't actually install
+                # no-op: skip calling inner.add_signal_handler to avoid OS constraints
+
+            def __getattr__(self, name):
+                # delegate everything else (create_task, call_soon, etc.)
+                return getattr(self._inner, name)
+
+        return _LoopProxy(real)
+
+    # Patch globally so both the test and hud.server.server see the proxy
+    monkeypatch.setattr(_asyncio, "get_running_loop", proxy_get_running_loop)
+
+    # Dummy TaskGroup that runs the work but skips _watch
+    class _DummyTG:
+        async def __aenter__(self):
+            return self
+
+        async def __aexit__(self, exc_type, exc, tb):
+            return False
+
+        def start_soon(self, fn, *args, **kwargs):
+            if getattr(fn, "__name__", "") == "_watch":
+                return
+            _asyncio.get_running_loop().create_task(fn(*args, **kwargs))
+
+    monkeypatch.setattr("anyio.create_task_group", lambda: _DummyTG())
+
+    # Simple coroutine that should run to completion
+    hit = {"v": False}
+
+    async def work():
+        hit["v"] = True
+
+    server_mod._run_with_sigterm(work)
+    assert hit["v"] is True
+
+    import signal as _signal
+
+    assert _signal.SIGTERM in added_signals
+    assert _signal.SIGINT in added_signals
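The `_LoopProxy` helper in this test file intercepts `add_signal_handler` and forwards every other attribute to the real loop through `__getattr__`. A minimal standard-library sketch of that recording-proxy pattern follows. `FakeLoop` and `RecordingProxy` are hypothetical names used only for illustration; they are not part of hud or asyncio.

```python
from __future__ import annotations


class FakeLoop:
    """Hypothetical stand-in for an event loop (not asyncio's real class)."""

    def add_signal_handler(self, signum, callback, *args):
        # The real call would touch OS signal state, which tests want to avoid.
        raise RuntimeError("would install a real signal handler")

    def time(self) -> float:
        return 42.0


class RecordingProxy:
    """Record add_signal_handler calls and delegate every other attribute."""

    __slots__ = ("_inner", "recorded")

    def __init__(self, inner) -> None:
        self._inner = inner
        self.recorded: list[int] = []

    def add_signal_handler(self, signum, callback, *args) -> None:
        # Intercepted: note the signal number, never call the inner loop.
        self.recorded.append(signum)

    def __getattr__(self, name):
        # Only reached for names that normal lookup on the proxy did not find.
        return getattr(self._inner, name)
```

Because `__getattr__` is consulted only after normal attribute lookup fails, the proxy's own `add_signal_handler` always takes priority over the wrapped object's method.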

@@ -0,0 +1,78 @@
+from __future__ import annotations
+
+import asyncio
+from contextlib import asynccontextmanager, suppress
+
+import anyio
+import pytest
+
+from hud.server import MCPServer
+from hud.server import server as server_mod
+
+
+def test__run_with_sigterm_executes_coro_when_handler_disabled(monkeypatch: pytest.MonkeyPatch):
+    """With FASTMCP_DISABLE_SIGTERM_HANDLER=1, _run_with_sigterm should just run the task."""
+    monkeypatch.setenv("FASTMCP_DISABLE_SIGTERM_HANDLER", "1")
+
+    hit = {"v": False}
+
+    async def work(arg, *, kw=None):
+        assert arg == 123 and kw == "ok"
+        hit["v"] = True
+
+    # Wrapper to exercise kwargs since TaskGroup.start_soon only accepts positional args
+    async def wrapper(arg):
+        await work(arg, kw="ok")
+
+    # Should return cleanly and mark hit
+    server_mod._run_with_sigterm(wrapper, 123)
+    assert hit["v"] is True
+
+
+@asynccontextmanager
+async def _fake_stdio_server():
+    """Stand-in for stdio_server that avoids reading real stdin."""
+    send_in, recv_in = anyio.create_memory_object_stream(100)
+    send_out, recv_out = anyio.create_memory_object_stream(100)
+    try:
+        yield recv_in, send_out
+    finally:
+        for s in (send_in, recv_in, send_out, recv_out):
+            close = getattr(s, "close", None) or getattr(s, "aclose", None)
+            try:
+                if close is not None:
+                    res = close()
+                    if asyncio.iscoroutine(res):
+                        await res
+            except Exception:
+                pass
+
+
+@pytest.fixture
+def patch_stdio(monkeypatch: pytest.MonkeyPatch):
+    """Patch stdio server to avoid stdin issues during tests."""
+    monkeypatch.setenv("FASTMCP_DISABLE_BANNER", "1")
+    monkeypatch.setattr("mcp.server.stdio.stdio_server", _fake_stdio_server)
+    monkeypatch.setattr("fastmcp.server.server.stdio_server", _fake_stdio_server)
+
+
+@pytest.mark.asyncio
+async def test_shutdown_handler_exception_does_not_crash_and_resets_flag(patch_stdio):
+    """If @shutdown raises, run_async must swallow it and still reset the SIGTERM flag."""
+    mcp = MCPServer(name="ShutdownRaises")
+
+    @mcp.shutdown
+    async def _boom() -> None:
+        raise RuntimeError("kaboom")
+
+    task = asyncio.create_task(mcp.run_async(transport="stdio", show_banner=False))
+    try:
+        await asyncio.sleep(0.05)
+        server_mod._sigterm_received = True  # trigger shutdown path
+    finally:
+        with suppress(asyncio.CancelledError):
+            task.cancel()
+            await task
+
+    # No exception propagated; flag must be reset
+    assert not getattr(server_mod, "_sigterm_received")
+
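Taken together, the shutdown tests in these two new files pin down three behaviours: the last registered `@shutdown` handler is the one that runs, an exception raised by the handler is swallowed, and the SIGTERM flag is reset only when a handler is installed. The sketch below shows one way such a contract could be implemented. It is an illustration under stated assumptions, not hud's code: `MiniServer`, `finish`, and the instance-level `sigterm_received` attribute are hypothetical, whereas the real server in this diff tracks a module-level `_sigterm_received` flag.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

ShutdownFn = Callable[[], Awaitable[None]]


class MiniServer:
    """Hypothetical toy server; not hud's MCPServer."""

    def __init__(self) -> None:
        self._shutdown_fn: ShutdownFn | None = None
        self.sigterm_received = False

    def shutdown(self, fn: ShutdownFn) -> ShutdownFn:
        # A single slot: each registration overwrites the previous one.
        self._shutdown_fn = fn
        return fn

    async def finish(self) -> None:
        # Without a SIGTERM or without a handler, leave the flag untouched.
        if not (self.sigterm_received and self._shutdown_fn):
            return
        try:
            await self._shutdown_fn()
        except Exception:
            pass  # swallow handler errors so shutdown cannot crash the caller
        finally:
            self.sigterm_received = False  # reset for the next run
```

Storing the handler in a single attribute rather than a list is what makes "last one wins" fall out naturally; a list-based registry would instead run every handler.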

hud/settings.py

CHANGED

@@ -1,7 +1,10 @@
 from __future__ import annotations
 
+from pathlib import Path
+
 from pydantic import Field
 from pydantic_settings import BaseSettings, SettingsConfigDict
+from pydantic_settings.sources import DotEnvSettingsSource, PydanticBaseSettingsSource
 
 
 class Settings(BaseSettings):
@@ -14,6 +17,41 @@ class Settings(BaseSettings):
 
     model_config = SettingsConfigDict(env_file=".env", env_file_encoding="utf-8", extra="allow")
 
+    @classmethod
+    def settings_customise_sources(
+        cls,
+        settings_cls: type[BaseSettings],
+        init_settings: PydanticBaseSettingsSource,
+        env_settings: PydanticBaseSettingsSource,
+        dotenv_settings: PydanticBaseSettingsSource,
+        file_secret_settings: PydanticBaseSettingsSource,
+    ) -> tuple[PydanticBaseSettingsSource, ...]:
+        """
+        Customize settings source precedence to include a user-level env file.
+
+        Precedence (highest to lowest):
+        - init_settings (explicit kwargs)
+        - env_settings (process environment)
+        - dotenv_settings (project .env)
+        - user_dotenv_settings (~/.hud/.env) ← added
+        - file_secret_settings
+        """
+
+        user_env_path = Path.home() / ".hud" / ".env"
+        user_dotenv_settings = DotEnvSettingsSource(
+            settings_cls,
+            env_file=user_env_path,
+            env_file_encoding="utf-8",
+        )
+
+        return (
+            init_settings,
+            env_settings,
+            dotenv_settings,
+            user_dotenv_settings,
+            file_secret_settings,
+        )
+
     hud_telemetry_url: str = Field(
         default="https://telemetry.hud.so/v3/api",
         description="Base URL for the HUD API",
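The docstring added to `settings_customise_sources` lists the sources from highest to lowest precedence, with the new user-level `~/.hud/.env` sitting below the project `.env`. The standard-library sketch below models that "earlier source wins" rule with plain dictionaries. It does not use pydantic-settings; the `resolve` helper and the sample keys and values are hypothetical and exist only to illustrate the ordering.

```python
from __future__ import annotations

from collections import ChainMap


def resolve(*sources: dict[str, str]) -> dict[str, str]:
    """Merge sources so that earlier ones take precedence over later ones."""
    # ChainMap searches its maps left to right and returns the first hit.
    return dict(ChainMap(*sources))


# Hypothetical values standing in for the sources named in the docstring.
init_kwargs: dict[str, str] = {}
process_env = {"HUD_API_KEY": "from-process-env"}
project_dotenv = {"HUD_API_KEY": "from-project", "HUD_TELEMETRY_URL": "from-project"}
user_dotenv = {"HUD_TELEMETRY_URL": "from-user", "EXTRA": "from-user"}

merged = resolve(init_kwargs, process_env, project_dotenv, user_dotenv)
```

In this model a key set in the user-level file is visible only when no higher-precedence source defines it, which is the behaviour the docstring describes for `~/.hud/.env`.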

hud/shared/hints.py

CHANGED

@@ -37,8 +37,8 @@ HUD_API_KEY_MISSING = Hint(
     title="HUD API key required",
     message="Missing or invalid HUD_API_KEY.",
     tips=[
-        "Set HUD_API_KEY environment
-        "Get a key at https://
+        "Set HUD_API_KEY in your environment or run: hud set HUD_API_KEY=your-key-here",
+        "Get a key at https://hud.so",
         "Check for whitespace or truncation",
     ],
     docs_url=None,

hud/telemetry/job.py

CHANGED

@@ -143,7 +143,7 @@ def _print_job_url(job_id: str, job_name: str) -> None:
     if not (settings.telemetry_enabled and settings.api_key):
         return
 
-    url = f"https://
+    url = f"https://hud.so/jobs/{job_id}"
     header = f"🚀 Job '{job_name}' started:"
 
     # ANSI color codes
@@ -182,7 +182,7 @@ def _print_job_complete_url(job_id: str, job_name: str, error_occurred: bool = F
     if not (settings.telemetry_enabled and settings.api_key):
         return
 
-    url = f"https://
+    url = f"https://hud.so/jobs/{job_id}"
 
     # ANSI color codes
     GREEN = "\033[92m"

hud/types.py

CHANGED

@@ -1,5 +1,6 @@
 from __future__ import annotations
 
+import contextlib
 import json
 import logging
 import uuid
@@ -107,7 +108,13 @@ class Task(BaseModel):
 
         # Start with current environment variables
         mapping = dict(os.environ)
-
+        # Include settings (from process env, project .env, and user .env)
+        settings_dict = settings.model_dump()
+        mapping.update(settings_dict)
+        # Add UPPERCASE aliases for settings keys
+        for _key, _val in settings_dict.items():
+            with contextlib.suppress(Exception):
+                mapping[_key.upper()] = _val
 
         if settings.api_key:
             mapping["HUD_API_KEY"] = settings.api_key
@@ -208,7 +215,7 @@ class AgentResponse(BaseModel):
     tool_calls: list[MCPToolCall] = Field(default_factory=list)
     done: bool = Field(default=False)
 
-    # --- TELEMETRY [
+    # --- TELEMETRY [hud.so] ---
     # Responses
     content: str | None = Field(default=None)
     reasoning: str | None = Field(default=None)
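The `Task` change builds a substitution mapping from the process environment, overlays the dumped settings, and then adds UPPERCASE aliases for each settings key. The sketch below reproduces that layering with the standard library. How hud actually applies the mapping is not visible in this hunk, so the `${VAR}` placeholder syntax and the use of `string.Template` are assumptions, and `build_mapping` and `substitute` are hypothetical helper names.

```python
from __future__ import annotations

import os
from string import Template
from typing import Any


def build_mapping(settings_dict: dict[str, Any]) -> dict[str, Any]:
    """Layer settings over the process environment, then add UPPERCASE aliases."""
    mapping: dict[str, Any] = dict(os.environ)
    mapping.update(settings_dict)
    for key, value in settings_dict.items():
        mapping[key.upper()] = value
    return mapping


def substitute(text: str, mapping: dict[str, Any]) -> str:
    """Assumed substitution step: fill ${NAME} placeholders, leave unknowns as-is."""
    return Template(text).safe_substitute(mapping)
```

With this layering a settings field named `api_key` becomes reachable as both `api_key` and `API_KEY`, so a template written against the environment-variable spelling still resolves when the value came from a `.env` file.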

hud/utils/tasks.py

CHANGED

@@ -9,7 +9,7 @@ from hud.utils.hud_console import HUDConsole
 hud_console = HUDConsole()
 
 
-def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
+def load_tasks(tasks_input: str | list[dict], *, raw: bool = False) -> list[Task] | list[dict]:
     """Load tasks from various sources.
 
     Args:
@@ -18,16 +18,19 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
             - Path to a JSONL file (one task per line)
             - HuggingFace dataset name (format: "username/dataset" or "username/dataset:split")
             - List of task dictionaries
-
+        raw: If True, return raw dicts without validation or env substitution
 
     Returns:
-
+        - If raw=False (default): list[Task]
+        - If raw=True: list[dict]
     """
-    tasks = []
+    tasks: list[Task] | list[dict] = []
 
     if isinstance(tasks_input, list):
         # Direct list of task dicts
         hud_console.info(f"Loading {len(tasks_input)} tasks from provided list")
+        if raw:
+            return [item for item in tasks_input if isinstance(item, dict)]
         for item in tasks_input:
             task = Task(**item)
             tasks.append(task)
@@ -36,7 +39,6 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
         # Check if it's a file path
         if Path(tasks_input).exists():
             file_path = Path(tasks_input)
-            hud_console.info(f"Loading tasks from file: {tasks_input}")
 
             with open(file_path) as f:
                 # Handle JSON files (array of tasks)
@@ -46,31 +48,33 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
                         raise ValueError(
                             f"JSON file must contain an array of tasks, got {type(data)}"
                         )
-
+                    if raw:
+                        return [item for item in data if isinstance(item, dict)]
                     for item in data:
                         task = Task(**item)
                         tasks.append(task)
 
                 # Handle JSONL files (one task per line)
                 else:
+                    raw_items: list[dict] = []
                     for line in f:
                         line = line.strip()
-                        if line:
-
-
-
-                            if isinstance(
-
-
-
-
-
-
-
-
-
-
-
+                        if not line:
+                            continue
+                        item = json.loads(line)
+                        if isinstance(item, list):
+                            raw_items.extend([it for it in item if isinstance(it, dict)])
+                        elif isinstance(item, dict):
+                            raw_items.append(item)
+                        else:
+                            raise ValueError(
+                                f"Invalid JSONL format: expected dict or list of dicts, got {type(item)}"  # noqa: E501
+                            )
+                    if raw:
+                        return raw_items
+                    for it in raw_items:
+                        task = Task(**it)
+                        tasks.append(task)
 
         # Check if it's a HuggingFace dataset
         elif "/" in tasks_input:
@@ -88,6 +92,7 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
                 dataset = load_dataset(dataset_name, split=split)
 
                 # Convert dataset rows to Task objects
+                raw_rows: list[dict] = []
                 for item in dataset:
                     if not isinstance(item, dict):
                         raise ValueError(
@@ -97,7 +102,11 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
                         raise ValueError(
                             f"Invalid HuggingFace dataset: expected mcp_config and prompt, got {item}"  # noqa: E501
                         )
-
+                    raw_rows.append(item)
+                if raw:
+                    return raw_rows
+                for row in raw_rows:
+                    task = Task(**row)
                     tasks.append(task)
 
             except ImportError as e:
@@ -115,5 +124,4 @@ def load_tasks(tasks_input: str | list[dict]) -> list[Task]:
     else:
         raise TypeError(f"tasks_input must be str or list, got {type(tasks_input)}")
 
-    hud_console.info(f"Loaded {len(tasks)} tasks")
     return tasks
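The reworked JSONL branch of `load_tasks` first collects raw dictionaries, accepting either one dict per line or a list of dicts on a line, and only afterwards decides whether to return them as-is (`raw=True`) or validate them into `Task` objects. The collection step can be sketched on its own with the standard library. `parse_jsonl` is a hypothetical helper name, and the sketch deliberately stops before any `Task` validation.

```python
from __future__ import annotations

import json
from typing import Iterable


def parse_jsonl(lines: Iterable[str]) -> list[dict]:
    """Collect task dicts from JSONL lines, skipping blank lines."""
    items: list[dict] = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        item = json.loads(line)
        if isinstance(item, list):
            # A line may hold a list; keep only its dict members.
            items.extend(it for it in item if isinstance(it, dict))
        elif isinstance(item, dict):
            items.append(item)
        else:
            raise ValueError(f"expected dict or list of dicts, got {type(item)}")
    return items
```

Separating collection from validation is what lets the new `raw` flag return early without duplicating the parsing logic for each input type.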

hud/utils/tests/test_version.py

CHANGED

hud/version.py

CHANGED

|

@@ -1,6 +1,6 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: hud-python

|

|

3

|

-

Version: 0.4.

|

|

3

|

+

Version: 0.4.37

|

|

4

4

|

Summary: SDK for the HUD platform.

|

|

5

5

|

Project-URL: Homepage, https://github.com/hud-evals/hud-python

|

|

6

6

|

Project-URL: Bug Tracker, https://github.com/hud-evals/hud-python/issues

|

|

@@ -36,11 +36,13 @@ Classifier: Programming Language :: Python :: 3.12

|

|

|

36

36

|

Classifier: Programming Language :: Python :: 3.13

|

|

37

37

|

Requires-Python: <3.13,>=3.11

|

|

38

38

|

Requires-Dist: anthropic

|

|

39

|

+

Requires-Dist: blessed>=1.20.0

|

|

39

40

|

Requires-Dist: datasets>=2.14.0

|

|

40

41

|

Requires-Dist: httpx<1,>=0.23.0

|

|

41

42

|

Requires-Dist: hud-fastmcp-python-sdk>=0.1.2

|

|

42

43

|

Requires-Dist: hud-mcp-python-sdk>=3.13.2

|

|

43

|

-

Requires-Dist: hud-mcp-use-python-sdk

|

|

44

|

+

Requires-Dist: hud-mcp-use-python-sdk==2.3.19

|

|

45

|

+

Requires-Dist: litellm>=1.55.0

|

|

44

46

|

Requires-Dist: numpy>=1.24.0

|

|

45

47

|

Requires-Dist: openai

|

|

46

48

|

Requires-Dist: opentelemetry-api>=1.34.1

|

|

@@ -50,8 +52,8 @@ Requires-Dist: opentelemetry-sdk>=1.34.1

|

|

|

50

52

|

Requires-Dist: pathspec>=0.12.1

|

|

51

53

|

Requires-Dist: pillow>=11.1.0

|

|

52

54

|

Requires-Dist: prompt-toolkit==3.0.51

|

|

53

|

-

Requires-Dist: pydantic-settings<3,>=2

|

|

54

|

-

Requires-Dist: pydantic<3,>=2

|

|

55

|

+

Requires-Dist: pydantic-settings<3,>=2.2

|

|

56

|

+

Requires-Dist: pydantic<3,>=2.6

|

|

55

57

|

Requires-Dist: questionary==2.1.0

|

|

56

58

|

Requires-Dist: rich>=13.0.0

|

|

57

59

|

Requires-Dist: toml>=0.10.2

|

|

@@ -59,7 +61,9 @@ Requires-Dist: typer>=0.9.0

|

|

|

59

61

|

Requires-Dist: watchfiles>=0.21.0

|

|

60

62

|

Requires-Dist: wrapt>=1.14.0

|

|

61

63

|

Provides-Extra: agent

|

|

64

|

+

Requires-Dist: aiodocker>=0.24.0; extra == 'agent'

|

|

62

65

|

Requires-Dist: dotenv>=0.9.9; extra == 'agent'

|

|

66

|

+

Requires-Dist: inspect-ai>=0.3.80; extra == 'agent'

|

|

63

67

|

Requires-Dist: ipykernel; extra == 'agent'

|

|

64

68

|

Requires-Dist: ipython<9; extra == 'agent'

|

|

65

69

|

Requires-Dist: jupyter-client; extra == 'agent'

|

|

@@ -67,8 +71,21 @@ Requires-Dist: jupyter-core; extra == 'agent'

|

|

|

67

71

|

Requires-Dist: langchain; extra == 'agent'

|

|

68

72

|

Requires-Dist: langchain-anthropic; extra == 'agent'

|

|

69

73

|

Requires-Dist: langchain-openai; extra == 'agent'

|

|

74

|

+

Requires-Dist: pillow>=11.1.0; extra == 'agent'

|

|

75

|

+

Requires-Dist: playwright; extra == 'agent'

|

|

76

|

+

Requires-Dist: pyautogui>=0.9.54; extra == 'agent'

|

|

77

|

+

Requires-Dist: pyright==1.1.401; extra == 'agent'

|

|

78

|

+

Requires-Dist: pytest-asyncio; extra == 'agent'

|

|

79

|

+

Requires-Dist: pytest-cov; extra == 'agent'

|

|

80

|

+

Requires-Dist: pytest-mock; extra == 'agent'

|

|

81

|

+

Requires-Dist: pytest<9,>=8.1.1; extra == 'agent'

|

|

82

|

+

Requires-Dist: ruff>=0.11.8; extra == 'agent'

|

|

83

|

+

Requires-Dist: setuptools; extra == 'agent'

|

|

84

|

+

Requires-Dist: textdistance<5,>=4.5.0; extra == 'agent'

|

|

70

85

|

Provides-Extra: agents

|

|

86

|

+

Requires-Dist: aiodocker>=0.24.0; extra == 'agents'

|

|

71

87

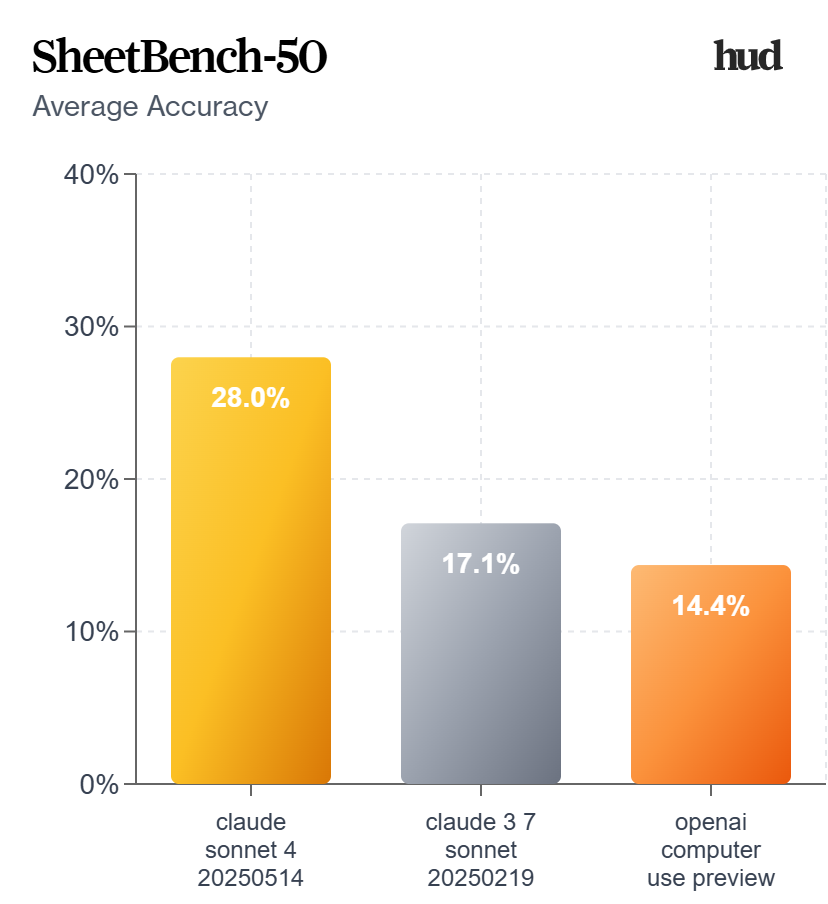

|

Requires-Dist: dotenv>=0.9.9; extra == 'agents'

|

|

88

|

+

Requires-Dist: inspect-ai>=0.3.80; extra == 'agents'

|

|

72

89

|

Requires-Dist: ipykernel; extra == 'agents'

|

|

73

90

|

Requires-Dist: ipython<9; extra == 'agents'

|

|

74

91

|

Requires-Dist: jupyter-client; extra == 'agents'

|

|

@@ -76,6 +93,17 @@ Requires-Dist: jupyter-core; extra == 'agents'

|

|

|

76

93

|

Requires-Dist: langchain; extra == 'agents'

|

|

77

94

|

Requires-Dist: langchain-anthropic; extra == 'agents'

|

|

78

95

|

Requires-Dist: langchain-openai; extra == 'agents'

|

|

96

|

+

Requires-Dist: pillow>=11.1.0; extra == 'agents'

|

|

97

|

+

Requires-Dist: playwright; extra == 'agents'

|

|

98

|

+

Requires-Dist: pyautogui>=0.9.54; extra == 'agents'

|

|

99

|

+

Requires-Dist: pyright==1.1.401; extra == 'agents'

|

|

100

|

+

Requires-Dist: pytest-asyncio; extra == 'agents'

|

|

101

|

+

Requires-Dist: pytest-cov; extra == 'agents'

|

|

102

|

+

Requires-Dist: pytest-mock; extra == 'agents'

|

|

103

|

+

Requires-Dist: pytest<9,>=8.1.1; extra == 'agents'

|

|

104

|

+

Requires-Dist: ruff>=0.11.8; extra == 'agents'

|

|

105

|

+

Requires-Dist: setuptools; extra == 'agents'

|

|

106

|

+

Requires-Dist: textdistance<5,>=4.5.0; extra == 'agents'

|

|

79

107

|

Provides-Extra: dev

|

|

80

108

|

Requires-Dist: aiodocker>=0.24.0; extra == 'dev'

|

|

81

109

|

Requires-Dist: dotenv>=0.9.9; extra == 'dev'

|

|

@@ -100,14 +128,6 @@ Requires-Dist: setuptools; extra == 'dev'

|

|

|

100

128

|

Requires-Dist: textdistance<5,>=4.5.0; extra == 'dev'

|

|

101

129

|

Provides-Extra: rl

|

|

102

130

|

Requires-Dist: bitsandbytes>=0.41.0; (sys_platform == 'linux') and extra == 'rl'

|

|

103

|

-

Requires-Dist: dotenv>=0.9.9; extra == 'rl'

|

|

104

|

-

Requires-Dist: ipykernel; extra == 'rl'

|

|

105

|

-

Requires-Dist: ipython<9; extra == 'rl'

|

|

106

|

-

Requires-Dist: jupyter-client; extra == 'rl'

|

|

107

|

-

Requires-Dist: jupyter-core; extra == 'rl'

|

|

108

|

-

Requires-Dist: langchain; extra == 'rl'

|

|

109

|

-

Requires-Dist: langchain-anthropic; extra == 'rl'

|

|

110

|

-

Requires-Dist: langchain-openai; extra == 'rl'

|

|

111

131

|

Requires-Dist: liger-kernel>=0.5.0; (sys_platform == 'linux') and extra == 'rl'

|

|

112

132

|

Requires-Dist: peft>=0.17.1; extra == 'rl'

|

|

113

133

|

Requires-Dist: vllm==0.10.1.1; extra == 'rl'

|

|

@@ -138,8 +158,8 @@ OSS RL environment + evals toolkit. Wrap software as environments, run benchmark

|

|

|

138

158

|

## Highlights

|

|

139

159

|

|

|

140

160

|

- 🚀 **[MCP environment skeleton](https://docs.hud.so/core-concepts/mcp-protocol)** – any agent can call any environment.

|

|

141

|

-

- ⚡️ **[Live telemetry](https://

|

|

142

|

-

- 🗂️ **[Public benchmarks](https://

|

|

161

|

+

- ⚡️ **[Live telemetry](https://hud.so)** – inspect every tool call, observation, and reward in real time.

|

|

162

|

+

- 🗂️ **[Public benchmarks](https://hud.so/leaderboards)** – OSWorld-Verified, SheetBench-50, and more.

|

|

143

163

|

- 🌱 **[Reinforcement learning built-in](rl/)** – Verifiers gym pipelines for GRPO on any environment.

|

|

144

164

|

- 🌐 **[Cloud browsers](environments/remote_browser/)** – AnchorBrowser, Steel, BrowserBase integrations for browser automation.

|

|

145

165

|

- 🛠️ **[Hot-reload dev loop](environments/README.md#phase-5-hot-reload-development-with-cursor-agent)** – `hud dev` for iterating on environments without rebuilds.

|

|

@@ -185,14 +205,14 @@ from hud.agents import ClaudeAgent

|

|

|

185

205

|

from hud.datasets import Task # See docs: https://docs.hud.so/reference/tasks

|

|

186

206

|

|

|

187

207

|

async def main() -> None:

|

|

188

|

-

with hud.trace("Quick Start 2048"): # All telemetry works for any MCP-based agent (see https://

|

|

208

|

+

with hud.trace("Quick Start 2048"): # All telemetry works for any MCP-based agent (see https://hud.so)

|

|

189

209

|

task = {

|

|

190

210

|

"prompt": "Reach 64 in 2048.",

|

|

191

211

|

"mcp_config": {

|

|

192

212

|

"hud": {

|

|

193

213

|

"url": "https://mcp.hud.so/v3/mcp", # HUD's cloud MCP server (see https://docs.hud.so/core-concepts/architecture)

|

|

194

214

|

"headers": {

|

|

195

|

-

"Authorization": f"Bearer {settings.api_key}", # Get your key at https://

|

|

215

|

+

"Authorization": f"Bearer {settings.api_key}", # Get your key at https://hud.so

|

|

196

216

|

"Mcp-Image": "hudpython/hud-text-2048:v1.2" # Docker image from https://hub.docker.com/u/hudpython

|

|

197

217

|

}

|

|

198

218

|

}

|

|

@@ -219,7 +239,7 @@ async def main() -> None:

|

|

|

219

239

|

asyncio.run(main())

|

|

220

240

|

```

|

|

221

241

|

|

|

222

|

-

The above example lets the agent play 2048 ([See replay](https://

|

|

242

|

+

The above example lets the agent play 2048 ([See replay](https://hud.so/trace/6feed7bd-5f67-4d66-b77f-eb1e3164604f))

|

|

223

243

|

|

|

224

244

|

|

|

225

245

|

|

|

@@ -250,7 +270,7 @@ Supports multi‑turn RL for both:

|

|

|

250

270

|

- Language‑only models (e.g., `Qwen/Qwen2.5-7B-Instruct`)

|

|

251

271

|

- Vision‑Language models (e.g., `Qwen/Qwen2.5-VL-3B-Instruct`)

|

|

252

272

|

|

|

253

|

-

By default, `hud rl` provisions a persistent server and trainer in the cloud, streams telemetry to `

|

|

273

|

+

By default, `hud rl` provisions a persistent server and trainer in the cloud, streams telemetry to `hud.so`, and lets you monitor/manage models at `hud.so/models`. Use `--local` to run entirely on your machines (typically 2+ GPUs: one for vLLM, the rest for training).

|

|

254

274

|

|

|

255

275

|

Any HUD MCP environment and evaluation works with our RL pipeline (including remote configurations). See the guided docs: `https://docs.hud.so/train-agents/quickstart`.

|

|

256

276

|

|

|

@@ -260,7 +280,7 @@ This is Claude Computer Use running on our proprietary financial analyst benchma

|

|

|

260

280

|

|

|

261

281

|

|

|

262

282

|

|

|

263

|

-

> [See this trace on

|

|

283

|

+

> [See this trace on _hud.so_](https://hud.so/trace/9e212e9e-3627-4f1f-9eb5-c6d03c59070a)

|

|

264

284

|

|

|

265

285

|

This example runs the full dataset (only takes ~20 minutes) using [run_evaluation.py](examples/run_evaluation.py):

|

|

266

286

|

|

|

@@ -286,7 +306,7 @@ results = await run_dataset(

|

|

|

286

306

|

print(f"Average reward: {sum(r.reward for r in results) / len(results):.2f}")

|

|

287

307

|

```

|

|

288

308

|

|

|

289

|

-

> Running a dataset creates a job and streams results to the [

|

|

309

|

+

> Running a dataset creates a job and streams results to the [hud.so](https://hud.so) platform for analysis and [leaderboard submission](https://docs.hud.so/evaluate-agents/leaderboards).

|

|

290

310

|

|

|

291

311

|

## Building Environments (MCP)

|

|

292

312

|

|

|

@@ -377,7 +397,7 @@ Tools

|

|

|

377

397

|

hud push # needs docker login, hud api key

|

|

378

398

|

```

|

|

379

399

|

|

|

380

|

-

5. Now you can use `mcp.hud.so` to launch 100s of instances of this environment in parallel with any agent, and see everything live on [

|

|

400

|

+

5. Now you can use `mcp.hud.so` to launch 100s of instances of this environment in parallel with any agent, and see everything live on [hud.so](https://hud.so):

|

|

381

401

|

|

|

382

402

|

```python

|

|

383

403

|

from hud.agents import ClaudeAgent

|

|

@@ -408,7 +428,7 @@ result = await ClaudeAgent().run({ # See all agents: https://docs.hud.so/refere

|

|

|

408

428

|

|

|

409

429

|

## Leaderboards & benchmarks

|

|

410

430

|

|

|

411

|

-

All leaderboards are publicly available on [

|

|

431

|

+

All leaderboards are publicly available on [hud.so/leaderboards](https://hud.so/leaderboards) (see [docs](https://docs.hud.so/evaluate-agents/leaderboards))

|

|

412

432

|

|

|

413

433

|

|

|

414

434

|

|

|

@@ -422,7 +442,7 @@ Using the [`run_dataset`](https://docs.hud.so/reference/tasks#run_dataset) funct

|

|

|

422

442

|

%%{init: {"theme": "neutral", "themeVariables": {"fontSize": "14px"}} }%%

|

|

423

443

|

graph LR

|

|

424

444

|

subgraph "Platform"

|

|

425

|

-

Dashboard["📊

|

|

445

|

+

Dashboard["📊 hud.so"]

|

|

426

446

|

API["🔌 mcp.hud.so"]

|

|

427

447

|

end

|

|

428

448

|

|