hud-python 0.4.30__py3-none-any.whl → 0.4.32__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of hud-python might be problematic.

- hud/agents/openai_chat_generic.py +1 -1

- hud/cli/flows/tasks.py +253 -0

- hud/cli/init.py +2 -2

- hud/cli/push.py +1 -0

- hud/cli/rl/__init__.py +35 -457

- hud/cli/rl/local_runner.py +571 -0

- hud/cli/rl/remote_runner.py +88 -63

- hud/cli/utils/docker.py +94 -0

- hud/rl/train.py +3 -0

- hud/rl/vllm_adapter.py +32 -14

- hud/utils/tests/test_version.py +1 -1

- hud/version.py +1 -1

- {hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/METADATA +26 -27

- {hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/RECORD +17 -16

- {hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/WHEEL +0 -0

- {hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/entry_points.txt +0 -0

- {hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/licenses/LICENSE +0 -0

hud/cli/rl/remote_runner.py

CHANGED

@@ -10,6 +10,7 @@ import os
 import subprocess
 import time
 from pathlib import Path
+import uuid
 
 from rich.console import Console
 

@@ -29,6 +30,43 @@ GPU_PRICING = {

|

|

|

29

30

|

}

|

|

30

31

|

|

|

31

32

|

|

|

33

|

+

def ensure_vllm_deployed(model_name: str, gpu_type: str = "A100", timeout: int = 600) -> None:

|

|

34

|

+

"""Deploy vLLM for a model if needed and wait until it's ready.

|

|

35

|

+

|

|

36

|

+

Args:

|

|

37

|

+

model_name: The name of the model to deploy vLLM for

|

|

38

|

+

gpu_type: GPU type to use for deployment (e.g., A100, H100)

|

|

39

|

+

timeout: Max seconds to wait for vLLM to be ready

|

|

40

|

+

"""

|

|

41

|

+

# Check current model status

|

|

42

|

+

info = rl_api.get_model(model_name)

|

|

43

|

+

if info.vllm_url:

|

|

44

|

+

hud_console.success("vLLM server already running")

|

|

45

|

+

return

|

|

46

|

+

|

|

47

|

+

hud_console.info(f"Deploying vLLM server for {model_name}...")

|

|

48

|

+

rl_api.deploy_vllm(model_name, gpu_type=gpu_type)

|

|

49

|

+

hud_console.success("vLLM deployment started")

|

|

50

|

+

|

|

51

|

+

hud_console.info("Waiting for vLLM server to be ready...")

|

|

52

|

+

start_time = time.time()

|

|

53

|

+

with hud_console.progress() as progress:

|

|

54

|

+

progress.update(

|

|

55

|

+

"Checking deployment status (see live status on https://app.hud.so/models)"

|

|

56

|

+

)

|

|

57

|

+

while True:

|

|

58

|

+

if time.time() - start_time > timeout:

|

|

59

|

+

hud_console.error("Timeout waiting for vLLM deployment")

|

|

60

|

+

raise ValueError("vLLM deployment timeout")

|

|

61

|

+

info = rl_api.get_model(model_name)

|

|

62

|

+

if info.vllm_url or info.status == "ready":

|

|

63

|

+

hud_console.success(

|

|

64

|

+

f"vLLM server ready at http://rl.hud.so/v1/models/{model_name}/vllm"

|

|

65

|

+

)

|

|

66

|

+

break

|

|

67

|

+

time.sleep(5)

|

|

68

|

+

|

|

69

|

+

|

|

32

70

|

def run_remote_training(

|

|

33

71

|

tasks_file: str | None,

|

|

34

72

|

model: str | None,

|

|
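The new `ensure_vllm_deployed` helper in the hunk above replaces the two deploy-then-poll loops that `run_remote_training` previously inlined. The poll-with-timeout pattern it relies on can be sketched as follows. The name `wait_until_ready`, the injectable `clock` and `sleep` parameters, and the use of `TimeoutError` are illustrative assumptions and not part of hud-python, which calls `rl_api.get_model` directly and raises `ValueError` on timeout.

```python
import time
from typing import Callable


def wait_until_ready(
    is_ready: Callable[[], bool],
    timeout: float = 600.0,
    interval: float = 5.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Poll is_ready() until it returns True and return the elapsed seconds.

    Raises TimeoutError once more than `timeout` seconds have passed.
    (Hypothetical helper; the package itself raises ValueError.)
    """
    start = clock()
    while True:
        if is_ready():
            return clock() - start
        if clock() - start > timeout:
            raise TimeoutError(f"not ready after {timeout} seconds")
        sleep(interval)
```

Injecting the clock and sleep functions keeps the loop testable without waiting in real time; `time.monotonic` is used here instead of `time.time` so that wall-clock adjustments cannot shorten or extend the wait.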

@@ -128,49 +166,55 @@ def run_remote_training(

|

|

|

128

166

|

from rich.prompt import Prompt

|

|

129

167

|

|

|

130

168

|

# Ask for model name

|

|

131

|

-

|

|

169

|

+

base_default = model_type.split("/")[-1].lower()

|

|

170

|

+

default_name = base_default

|

|

171

|

+

existing_names = {m.name for m in active_models}

|

|

172

|

+

suffix = 1

|

|

173

|

+

while default_name in existing_names:

|

|

174

|

+

default_name = f"{base_default}-{suffix}"

|

|

175

|

+

suffix += 1

|

|

176

|

+

|

|

132

177

|

hud_console.info(f"Enter model name (default: {default_name}):")

|

|

133

178

|

model_name = Prompt.ask("Model name", default=default_name)

|

|

134

179

|

model_name = model_name.replace("/", "-").lower()

|

|

135

180

|

|

|

136

|

-

# Create the model

|

|

181

|

+

# Create the model with retry on name conflict

|

|

137

182

|

hud_console.info(f"Creating model: {model_name}")

|

|

138

183

|

try:

|

|

139

184

|

rl_api.create_model(model_name, model_type)

|

|

140

185

|

hud_console.success(f"Created model: {model_name}")

|

|

186

|

+

ensure_vllm_deployed(model_name, gpu_type="A100")

|

|

141

187

|

|

|

142

|

-

|

|

143

|

-

|

|

144

|

-

|

|

145

|

-

|

|

146

|

-

|

|

147

|

-

|

|

148

|

-

hud_console.info("Waiting for vLLM server to be ready...")

|

|

149

|

-

max_wait = 600 # 10 minutes

|

|

150

|

-

start_time = time.time()

|

|

151

|

-

|

|

152

|

-

with hud_console.progress() as progress:

|

|

153

|

-

progress.update(

|

|

154

|

-

"Checking deployment status (see live status on https://app.hud.so/models)"

|

|

155

|

-

)

|

|

156

|

-

|

|

188

|

+

except Exception as e:

|

|

189

|

+

# If the name already exists, suggest a new name and prompt once

|

|

190

|

+

message = str(e)

|

|

191

|

+

if "already exists" in message or "409" in message:

|

|

192

|

+

alt_name = f"{model_name}-1"

|

|

193

|

+

i = 1

|

|

157

194

|

while True:

|

|

158

|

-

|

|

159

|

-

|

|

160

|

-

|

|

161

|

-

|

|

162

|

-

model_info = rl_api.get_model(model_name)

|

|

163

|

-

if model_info.status == "ready":

|

|

164

|

-

hud_console.success(

|

|

165

|

-

f"vLLM server ready at http://rl.hud.so/v1/models/{model_name}/vllm"

|

|

166

|

-

)

|

|

195

|

+

candidate = f"{model_name}-{str(uuid.uuid4())[:4]}"

|

|

196

|

+

if candidate not in existing_names:

|

|

197

|

+

alt_name = candidate

|

|

167

198

|

break

|

|

168

|

-

|

|

169

|

-

|

|

170

|

-

|

|

171

|

-

|

|

172

|

-

|

|

173

|

-

|

|

199

|

+

i += 1

|

|

200

|

+

hud_console.warning(

|

|

201

|

+

f"Model '{model_name}' exists. Suggesting '{alt_name}' instead."

|

|

202

|

+

)

|

|

203

|

+

try:

|

|

204

|

+

from rich.prompt import Prompt as _Prompt

|

|

205

|

+

|

|

206

|

+

chosen = _Prompt.ask("Use different name", default=alt_name)

|

|

207

|

+

chosen = chosen.replace("/", "-").lower()

|

|

208

|

+

rl_api.create_model(chosen, model_type)

|

|

209

|

+

hud_console.success(f"Created model: {chosen}")

|

|

210

|

+

model_name = chosen

|

|

211

|

+

ensure_vllm_deployed(model_name, gpu_type="A100")

|

|

212

|

+

except Exception as e2:

|

|

213

|

+

hud_console.error(f"Failed to create model: {e2}")

|

|

214

|

+

raise

|

|

215

|

+

else:

|

|

216

|

+

hud_console.error(f"Failed to create model: {e}")

|

|

217

|

+

raise

|

|

174

218

|

|

|

175

219

|

else:

|

|

176

220

|

# Existing model selected

|

|
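The hunk above picks a default model name by appending a numeric suffix until the name is unused, and falls back to a short random suffix when model creation reports a name conflict. The sketch below isolates both strategies. The function names are hypothetical and do not appear in hud-python.

```python
import uuid


def suggest_numbered_name(base: str, existing: set[str]) -> str:
    """Return `base`, or `base-1`, `base-2`, ... for the first unused name."""
    candidate = base
    suffix = 1
    while candidate in existing:
        candidate = f"{base}-{suffix}"
        suffix += 1
    return candidate


def suggest_random_name(base: str, existing: set[str], length: int = 4) -> str:
    """Return `base-xxxx`, where xxxx is a random hex suffix not already in use."""
    while True:
        candidate = f"{base}-{uuid.uuid4().hex[:length]}"
        if candidate not in existing:
            return candidate
```

The numbered form gives predictable names for the common case, while the random form avoids repeatedly colliding with names that exist on the server but are missing from the locally known set.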

@@ -194,36 +238,7 @@ def run_remote_training(

|

|

|

194

238

|

return

|

|

195

239

|

|

|

196

240

|

# Ensure vLLM is deployed

|

|

197

|

-

|

|

198

|

-

hud_console.info(f"Deploying vLLM server for {model_name}...")

|

|

199

|

-

rl_api.deploy_vllm(model_name, gpu_type="A100")

|

|

200

|

-

hud_console.success("vLLM deployment started")

|

|

201

|

-

|

|

202

|

-

# Wait for deployment

|

|

203

|

-

hud_console.info("Waiting for vLLM server to be ready...")

|

|

204

|

-

max_wait = 600 # 10 minutes

|

|

205

|

-

start_time = time.time()

|

|

206

|

-

|

|

207

|

-

with hud_console.progress() as progress:

|

|

208

|

-

progress.update(

|

|

209

|

-

"Checking deployment status (see live status on https://app.hud.so/models)"

|

|

210

|

-

)

|

|

211

|

-

|

|

212

|

-

while True:

|

|

213

|

-

if time.time() - start_time > max_wait:

|

|

214

|

-

hud_console.error("Timeout waiting for vLLM deployment")

|

|

215

|

-

raise ValueError("vLLM deployment timeout")

|

|

216

|

-

|

|

217

|

-

model_info = rl_api.get_model(model_name)

|

|

218

|

-

if model_info.vllm_url:

|

|

219

|

-

hud_console.success(

|

|

220

|

-

f"vLLM server ready at http://rl.hud.so/v1/models/{model_name}/vllm"

|

|

221

|

-

)

|

|

222

|

-

break

|

|

223

|

-

|

|

224

|

-

time.sleep(5)

|

|

225

|

-

else:

|

|

226

|

-

hud_console.success("vLLM server already running")

|

|

241

|

+

ensure_vllm_deployed(model_name, gpu_type="A100")

|

|

227

242

|

except KeyboardInterrupt:

|

|

228

243

|

hud_console.dim_info("Training cancelled", "")

|

|

229

244

|

return

|

|

@@ -281,7 +296,17 @@ def run_remote_training(

|

|

|

281

296

|

presets=presets,

|

|

282

297

|

)

|

|

283

298

|

|

|

284

|

-

|

|

299

|

+

# Use a short label for tasks (avoid full absolute paths)

|

|

300

|

+

try:

|

|

301

|

+

if tasks_file and Path(tasks_file).exists():

|

|

302

|

+

tasks_label = Path(tasks_file).name

|

|

303

|

+

else:

|

|

304

|

+

# Fallback: last segment of a non-existent path or dataset name

|

|

305

|

+

tasks_label = str(tasks_file).replace("\\", "/").split("/")[-1]

|

|

306

|

+

except Exception:

|

|

307

|

+

tasks_label = str(tasks_file)

|

|

308

|

+

|

|

309

|

+

config.job_name = f"RL {model_name} on {tasks_label}"

|

|

285

310

|

|

|

286

311

|

# Save config for editing

|

|

287

312

|

temp_config_path = Path(f".rl_config_temp_{model_name}.json")

|
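The job-name change in the last hunk above shortens the tasks argument to its final path segment so that absolute paths do not end up in `config.job_name`. A minimal standalone sketch of that labelling logic follows; `short_tasks_label` is a hypothetical name used only for illustration.

```python
from pathlib import Path
from typing import Optional


def short_tasks_label(tasks_file: Optional[str]) -> str:
    """Return the last path segment of a tasks file or dataset identifier."""
    if tasks_file and Path(tasks_file).exists():
        # Existing local file: use its file name.
        return Path(tasks_file).name
    # Non-existent path or dataset name: normalise separators, keep the last part.
    return str(tasks_file).replace("\\", "/").split("/")[-1]
```

As in the diff, a `tasks_file` of `None` falls through to `str(None)`, so the label becomes the literal string `None`.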

hud/cli/utils/docker.py

CHANGED

@@ -3,6 +3,8 @@
 from __future__ import annotations
 
 import json
+import platform
+import shutil
 import subprocess
 
 

@@ -117,3 +119,95 @@ def generate_container_name(identifier: str, prefix: str = "hud") -> str:

|

|

|

117

119

|

# Replace special characters with hyphens

|

|

118

120

|

safe_name = identifier.replace(":", "-").replace("/", "-").replace("\\", "-")

|

|

119

121

|

return f"{prefix}-{safe_name}"

|

|

122

|

+

|

|

123

|

+

|

|

124

|

+

def _emit_docker_hints(error_text: str) -> None:

|

|

125

|

+

"""Parse common Docker connectivity errors and print platform-specific hints."""

|

|

126

|

+

from hud.utils.hud_console import hud_console

|

|

127

|

+

|

|

128

|

+

text = error_text.lower()

|

|

129

|

+

system = platform.system()

|

|

130

|

+

|

|

131

|

+

markers = [

|

|

132

|

+

"cannot connect to the docker daemon",

|

|

133

|

+

"is the docker daemon running",

|

|

134

|

+

"error during connect",

|

|

135

|

+

"permission denied while trying to connect",

|

|

136

|

+

"no such file or directory",

|

|

137

|

+

"pipe/dockerdesktop",

|

|

138

|

+

"dockerdesktoplinuxengine",

|

|

139

|

+

"//./pipe/docker",

|

|

140

|

+

"/var/run/docker.sock",

|

|

141

|

+

]

|

|

142

|

+

|

|

143

|

+

if any(m in text for m in markers):

|

|

144

|

+

hud_console.error("Docker does not appear to be running or accessible")

|

|

145

|

+

if system == "Windows":

|

|

146

|

+

hud_console.hint("Open Docker Desktop and wait until it shows 'Running'")

|

|

147

|

+

hud_console.hint("If using WSL, enable integration for your distro in Docker Desktop")

|

|

148

|

+

elif system == "Linux":

|

|

149

|

+

hud_console.hint(

|

|

150

|

+

"Start the daemon: sudo systemctl start docker (or service docker start)"

|

|

151

|

+

)

|

|

152

|

+

hud_console.hint("If permission denied: sudo usermod -aG docker $USER && re-login")

|

|

153

|

+

elif system == "Darwin":

|

|

154

|

+

hud_console.hint("Open Docker Desktop and wait until it shows 'Running'")

|

|

155

|

+

else:

|

|

156

|

+

hud_console.hint("Start Docker and ensure the daemon is reachable")

|

|

157

|

+

trimmed = error_text.strip()

|

|

158

|

+

if len(trimmed) > 300:

|

|

159

|

+

trimmed = trimmed[:300] + "..."

|

|

160

|

+

hud_console.dim_info("Details", trimmed)

|

|

161

|

+

else:

|

|

162

|

+

from hud.utils.hud_console import hud_console as _hc

|

|

163

|

+

|

|

164

|

+

_hc.error("Docker returned an error")

|

|

165

|

+

trimmed = error_text.strip()

|

|

166

|

+

if len(trimmed) > 300:

|

|

167

|

+

trimmed = trimmed[:300] + "..."

|

|

168

|

+

_hc.dim_info("Details", trimmed)

|

|

169

|

+

_hc.hint("Is Docker running and accessible?")

|

|

170

|

+

|

|

171

|

+

|

|

172

|

+

def require_docker_running() -> None:

|

|

173

|

+

"""Ensure Docker CLI exists and daemon is reachable; print hints and exit if not."""

|

|

174

|

+

import typer

|

|

175

|

+

|

|

176

|

+

from hud.utils.hud_console import hud_console

|

|

177

|

+

|

|

178

|

+

docker_path: str | None = shutil.which("docker")

|

|

179

|

+

if not docker_path:

|

|

180

|

+

hud_console.error("Docker CLI not found")

|

|

181

|

+

hud_console.info("Install Docker Desktop (Windows/macOS) or Docker Engine (Linux)")

|

|

182

|

+

hud_console.hint("After installation, start Docker and re-run this command")

|

|

183

|

+

raise typer.Exit(1)

|

|

184

|

+

|

|

185

|

+

try:

|

|

186

|

+

result = subprocess.run( # noqa: UP022, S603

|

|

187

|

+

[docker_path, "info"],

|

|

188

|

+

stdout=subprocess.PIPE,

|

|

189

|

+

stderr=subprocess.PIPE,

|

|

190

|

+

text=True,

|

|

191

|

+

timeout=8,

|

|

192

|

+

check=False,

|

|

193

|

+

)

|

|

194

|

+

if result.returncode == 0:

|

|

195

|

+

return

|

|

196

|

+

|

|

197

|

+

error_text = (result.stderr or "") + "\n" + (result.stdout or "")

|

|

198

|

+

_emit_docker_hints(error_text)

|

|

199

|

+

raise typer.Exit(1)

|

|

200

|

+

except FileNotFoundError as e:

|

|

201

|

+

hud_console.error("Docker CLI not found on PATH")

|

|

202

|

+

hud_console.hint("Install Docker and ensure 'docker' is on your PATH")

|

|

203

|

+

raise typer.Exit(1) from e

|

|

204

|

+

except subprocess.TimeoutExpired as e:

|

|

205

|

+

hud_console.error("Docker did not respond in time")

|

|

206

|

+

hud_console.hint(

|

|

207

|

+

"Is Docker running? Open Docker Desktop and wait until it reports 'Running'"

|

|

208

|

+

)

|

|

209

|

+

raise typer.Exit(1) from e

|

|

210

|

+

except Exception as e:

|

|

211

|

+

hud_console.error(f"Docker check failed: {e}")

|

|

212

|

+

hud_console.hint("Is the Docker daemon running?")

|

|

213

|

+

raise typer.Exit(1) from e

|
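The two functions added to `hud/cli/utils/docker.py` first locate the `docker` executable, then run `docker info` with a timeout, and finally map the error text to platform-specific hints. The sketch below shows the same three steps in a reduced form. It is not the package's code: the function names, the shortened marker list, and the string status values are assumptions made for illustration, and it returns a status instead of printing hints and exiting.

```python
import shutil
import subprocess

# A subset of the substrings the diff treats as "daemon not reachable".
CONNECTIVITY_MARKERS = (
    "cannot connect to the docker daemon",
    "is the docker daemon running",
    "error during connect",
    "permission denied while trying to connect",
)


def looks_like_daemon_unreachable(error_text: str) -> bool:
    """Case-insensitive substring match against known connectivity errors."""
    text = error_text.lower()
    return any(marker in text for marker in CONNECTIVITY_MARKERS)


def hint_for(system: str) -> str:
    """Map a platform.system() value to a hint, using the wording from the diff."""
    if system in ("Windows", "Darwin"):
        return "Open Docker Desktop and wait until it shows 'Running'"
    if system == "Linux":
        return "Start the daemon: sudo systemctl start docker (or service docker start)"
    return "Start Docker and ensure the daemon is reachable"


def docker_status(timeout: float = 8.0) -> str:
    """Return 'missing', 'unreachable', or 'ok' for the local Docker install."""
    docker_path = shutil.which("docker")
    if docker_path is None:
        return "missing"
    try:
        result = subprocess.run(
            [docker_path, "info"],
            capture_output=True,
            text=True,
            timeout=timeout,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return "unreachable"
    return "ok" if result.returncode == 0 else "unreachable"
```

A caller would pass `platform.system()` to `hint_for` only after `docker_status()` reports `unreachable`, which mirrors the order of checks in `require_docker_running`.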

hud/rl/train.py

CHANGED

@@ -232,6 +232,9 @@ async def train(config: Config, tasks: list[Task]) -> None:
 )
 learner.save(str(checkpoint_path))
 
+# Wait for 6 seconds to ensure the checkpoint is saved
+await asyncio.sleep(6)
+
 adapter_name = f"{config.adapter_prefix}-{checkpoint_id}"
 if vllm.load_adapter(adapter_name, str(checkpoint_path)):
 actor.update_adapter(adapter_name)

hud/rl/vllm_adapter.py

CHANGED

|

@@ -35,20 +35,38 @@ class VLLMAdapter:

|

|

|

35

35

|

url = f"{self.base_url}/load_lora_adapter"

|

|

36

36

|

headers = {"Authorization": f"Bearer {self.api_key}", "Content-Type": "application/json"}

|

|

37

37

|

payload = {"lora_name": adapter_name, "lora_path": adapter_path}

|

|

38

|

-

|

|

39

|

-

|

|

40

|

-

|

|

41

|

-

|

|

42

|

-

|

|

43

|

-

|

|

44

|

-

|

|

45

|

-

|

|

46

|

-

|

|

47

|

-

|

|

48

|

-

|

|

49

|

-

|

|

50

|

-

|

|

51

|

-

|

|

38

|

+

# Implement exponential backoff for retrying the adapter load request.

|

|

39

|

+

max_retries = 5

|

|

40

|

+

backoff_factor = 2

|

|

41

|

+

delay = 1 # initial delay in seconds

|

|

42

|

+

|

|

43

|

+

for attempt in range(1, max_retries + 1):

|

|

44

|

+

try:

|

|

45

|

+

response = requests.post(

|

|

46

|

+

url, headers=headers, data=json.dumps(payload), timeout=timeout

|

|

47

|

+

)

|

|

48

|

+

response.raise_for_status()

|

|

49

|

+

|

|

50

|

+

self.current_adapter = adapter_name

|

|

51

|

+

hud_console.info(f"[VLLMAdapter] Loaded adapter: {adapter_name}")

|

|

52

|

+

return True

|

|

53

|

+

|

|

54

|

+

except requests.exceptions.RequestException as e:

|

|

55

|

+

if attempt == max_retries:

|

|

56

|

+

hud_console.error(

|

|

57

|

+

f"[VLLMAdapter] Failed to load adapter {adapter_name} after {attempt} attempts: {e}" # noqa: E501

|

|

58

|

+

)

|

|

59

|

+

return False

|

|

60

|

+

else:

|

|

61

|

+

hud_console.warning(

|

|

62

|

+

f"[VLLMAdapter] Load adapter {adapter_name} failed (attempt {attempt}/{max_retries}): {e}. Retrying in {delay} seconds...", # noqa: E501

|

|

63

|

+

)

|

|

64

|

+

import time

|

|

65

|

+

|

|

66

|

+

time.sleep(delay)

|

|

67

|

+

delay *= backoff_factor

|

|

68

|

+

|

|

69

|

+

return False

|

|

52

70

|

|

|

53

71

|

def unload_adapter(self, adapter_name: str) -> bool:

|

|

54

72

|

"""

|
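The rewritten `load_adapter` retries the HTTP request up to five times, doubling the delay after each failure (1, 2, 4, then 8 seconds). The generic retry loop below is a sketch of that exponential backoff schedule. `retry_with_backoff` and its injectable `sleep` parameter are hypothetical, and unlike the diff, which logs the failure and returns `False`, this version re-raises the last exception.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_retries: int = 5,
    initial_delay: float = 1.0,
    backoff_factor: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation() until it succeeds or max_retries attempts have failed."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    delay = initial_delay
    for attempt in range(1, max_retries + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_retries:
                raise  # out of attempts: surface the last error
            sleep(delay)
            delay *= backoff_factor
    raise AssertionError("unreachable")
```

With the defaults, a request that never succeeds sleeps for 15 seconds in total before the final failure is reported.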

hud/utils/tests/test_version.py

CHANGED

hud/version.py

CHANGED

{hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/METADATA

CHANGED

@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: hud-python
-Version: 0.4.
+Version: 0.4.32
 Summary: SDK for the HUD platform.
 Project-URL: Homepage, https://github.com/hud-evals/hud-python
 Project-URL: Bug Tracker, https://github.com/hud-evals/hud-python/issues

@@ -35,15 +35,20 @@ Classifier: Programming Language :: Python :: 3.11

|

|

|

35

35

|

Classifier: Programming Language :: Python :: 3.12

|

|

36

36

|

Classifier: Programming Language :: Python :: 3.13

|

|

37

37

|

Requires-Python: <3.13,>=3.11

|

|

38

|

+

Requires-Dist: anthropic

|

|

39

|

+

Requires-Dist: datasets>=2.14.0

|

|

38

40

|

Requires-Dist: httpx<1,>=0.23.0

|

|

39

41

|

Requires-Dist: hud-fastmcp-python-sdk>=0.1.2

|

|

40

42

|

Requires-Dist: hud-mcp-python-sdk>=3.13.2

|

|

41

43

|

Requires-Dist: hud-mcp-use-python-sdk>=2.3.16

|

|

44

|

+

Requires-Dist: numpy>=1.24.0

|

|

45

|

+

Requires-Dist: openai

|

|

42

46

|

Requires-Dist: opentelemetry-api>=1.34.1

|

|

43

47

|

Requires-Dist: opentelemetry-exporter-otlp-proto-http>=1.34.1

|

|

44

48

|

Requires-Dist: opentelemetry-instrumentation-mcp==0.47.0

|

|

45

49

|

Requires-Dist: opentelemetry-sdk>=1.34.1

|

|

46

50

|

Requires-Dist: pathspec>=0.12.1

|

|

51

|

+

Requires-Dist: pillow>=11.1.0

|

|

47

52

|

Requires-Dist: prompt-toolkit==3.0.51

|

|

48

53

|

Requires-Dist: pydantic-settings<3,>=2

|

|

49

54

|

Requires-Dist: pydantic<3,>=2

|

|

@@ -54,8 +59,6 @@ Requires-Dist: typer>=0.9.0

|

|

|

54

59

|

Requires-Dist: watchfiles>=0.21.0

|

|

55

60

|

Requires-Dist: wrapt>=1.14.0

|

|

56

61

|

Provides-Extra: agent

|

|

57

|

-

Requires-Dist: anthropic; extra == 'agent'

|

|

58

|

-

Requires-Dist: datasets>=2.14.0; extra == 'agent'

|

|

59

62

|

Requires-Dist: dotenv>=0.9.9; extra == 'agent'

|

|

60

63

|

Requires-Dist: ipykernel; extra == 'agent'

|

|

61

64

|

Requires-Dist: ipython<9; extra == 'agent'

|

|

@@ -64,12 +67,7 @@ Requires-Dist: jupyter-core; extra == 'agent'

|

|

|

64

67

|

Requires-Dist: langchain; extra == 'agent'

|

|

65

68

|

Requires-Dist: langchain-anthropic; extra == 'agent'

|

|

66

69

|

Requires-Dist: langchain-openai; extra == 'agent'

|

|

67

|

-

Requires-Dist: numpy>=1.24.0; extra == 'agent'

|

|

68

|

-

Requires-Dist: openai; extra == 'agent'

|

|

69

|

-

Requires-Dist: pillow>=11.1.0; extra == 'agent'

|

|

70

70

|

Provides-Extra: agents

|

|

71

|

-

Requires-Dist: anthropic; extra == 'agents'

|

|

72

|

-

Requires-Dist: datasets>=2.14.0; extra == 'agents'

|

|

73

71

|

Requires-Dist: dotenv>=0.9.9; extra == 'agents'

|

|

74

72

|

Requires-Dist: ipykernel; extra == 'agents'

|

|

75

73

|

Requires-Dist: ipython<9; extra == 'agents'

|

|

@@ -78,13 +76,8 @@ Requires-Dist: jupyter-core; extra == 'agents'

|

|

|

78

76

|

Requires-Dist: langchain; extra == 'agents'

|

|

79

77

|

Requires-Dist: langchain-anthropic; extra == 'agents'

|

|

80

78

|

Requires-Dist: langchain-openai; extra == 'agents'

|

|

81

|

-

Requires-Dist: numpy>=1.24.0; extra == 'agents'

|

|

82

|

-

Requires-Dist: openai; extra == 'agents'

|

|

83

|

-

Requires-Dist: pillow>=11.1.0; extra == 'agents'

|

|

84

79

|

Provides-Extra: dev

|

|

85

80

|

Requires-Dist: aiodocker>=0.24.0; extra == 'dev'

|

|

86

|

-

Requires-Dist: anthropic; extra == 'dev'

|

|

87

|

-

Requires-Dist: datasets>=2.14.0; extra == 'dev'

|

|

88

81

|

Requires-Dist: dotenv>=0.9.9; extra == 'dev'

|

|

89

82

|

Requires-Dist: inspect-ai>=0.3.80; extra == 'dev'

|

|

90

83

|

Requires-Dist: ipykernel; extra == 'dev'

|

|

@@ -94,8 +87,6 @@ Requires-Dist: jupyter-core; extra == 'dev'

|

|

|

94

87

|

Requires-Dist: langchain; extra == 'dev'

|

|

95

88

|

Requires-Dist: langchain-anthropic; extra == 'dev'

|

|

96

89

|

Requires-Dist: langchain-openai; extra == 'dev'

|

|

97

|

-

Requires-Dist: numpy>=1.24.0; extra == 'dev'

|

|

98

|

-

Requires-Dist: openai; extra == 'dev'

|

|

99

90

|

Requires-Dist: pillow>=11.1.0; extra == 'dev'

|

|

100

91

|

Requires-Dist: playwright; extra == 'dev'

|

|

101

92

|

Requires-Dist: pyautogui>=0.9.54; extra == 'dev'

|

|

@@ -108,9 +99,7 @@ Requires-Dist: ruff>=0.11.8; extra == 'dev'

|

|

|

108

99

|

Requires-Dist: setuptools; extra == 'dev'

|

|

109

100

|

Requires-Dist: textdistance<5,>=4.5.0; extra == 'dev'

|

|

110

101

|

Provides-Extra: rl

|

|

111

|

-

Requires-Dist: anthropic; extra == 'rl'

|

|

112

102

|

Requires-Dist: bitsandbytes>=0.41.0; (sys_platform == 'linux') and extra == 'rl'

|

|

113

|

-

Requires-Dist: datasets>=2.14.0; extra == 'rl'

|

|

114

103

|

Requires-Dist: dotenv>=0.9.9; extra == 'rl'

|

|

115

104

|

Requires-Dist: ipykernel; extra == 'rl'

|

|

116

105

|

Requires-Dist: ipython<9; extra == 'rl'

|

|

@@ -120,10 +109,7 @@ Requires-Dist: langchain; extra == 'rl'

|

|

|

120

109

|

Requires-Dist: langchain-anthropic; extra == 'rl'

|

|

121

110

|

Requires-Dist: langchain-openai; extra == 'rl'

|

|

122

111

|

Requires-Dist: liger-kernel>=0.5.0; (sys_platform == 'linux') and extra == 'rl'

|

|

123

|

-

Requires-Dist: numpy>=1.24.0; extra == 'rl'

|

|

124

|

-

Requires-Dist: openai; extra == 'rl'

|

|

125

112

|

Requires-Dist: peft>=0.17.1; extra == 'rl'

|

|

126

|

-

Requires-Dist: pillow>=11.1.0; extra == 'rl'

|

|

127

113

|

Requires-Dist: vllm==0.10.1.1; extra == 'rl'

|

|

128

114

|

Description-Content-Type: text/markdown

|

|

129

115

|

|

|
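The METADATA hunks above appear to move `anthropic`, `datasets`, `numpy`, `openai`, and `pillow` from the optional extras into the unconditional requirements. One way to check such a change is to split the `Requires-Dist` lines into base requirements and per-extra requirements. The parser below is a simplified sketch with a hypothetical function name: it only recognises the `extra == '...'` marker form seen in this file and is not a full implementation of the dependency-specifier grammar.

```python
import re

# Matches markers such as: extra == 'rl'
EXTRA_MARKER = re.compile(r"""extra\s*==\s*['"]([^'"]+)['"]""")


def split_requirements(metadata_text: str) -> tuple[list[str], dict[str, list[str]]]:
    """Split Requires-Dist lines into (base requirements, requirements per extra)."""
    base: list[str] = []
    extras: dict[str, list[str]] = {}
    for line in metadata_text.splitlines():
        if not line.startswith("Requires-Dist:"):
            continue
        requirement = line[len("Requires-Dist:"):].strip()
        match = EXTRA_MARKER.search(requirement)
        if match is None:
            # No extra marker: installed unconditionally.
            base.append(requirement)
        else:
            # Keep only the name and version specifier, drop the marker.
            spec = requirement.split(";", 1)[0].strip()
            extras.setdefault(match.group(1), []).append(spec)
    return base, extras
```

Running this over the METADATA text of both versions and comparing the two `base` lists would show which packages became mandatory.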

@@ -239,21 +225,34 @@ The above example let's the agent play 2048 ([See replay](https://app.hud.so/tra

|

|

|

239

225

|

|

|

240

226

|

## Reinforcement Learning with GRPO

|

|

241

227

|

|

|

242

|

-

This is a Qwen

|

|

228

|

+

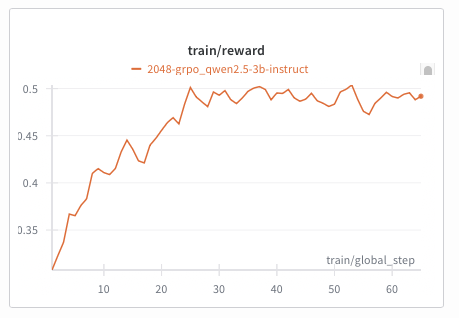

This is a Qwen‑2.5‑VL‑3B agent training a policy on the 2048-basic browser environment:

|

|

243

229

|

|

|

244

230

|

|

|

245

231

|

|

|

246

|

-

|

|

232

|

+

Train with the new interactive `hud rl` flow:

|

|

247

233

|

|

|

248

234

|

```bash

|

|

249

|

-

|

|

250

|

-

|

|

251

|

-

|

|

235

|

+

# Install CLI with RL extras

|

|

236

|

+

uv tool install "hud-python[rl]"

|

|

237

|

+

|

|

238

|

+

# Option A: Run directly from a HuggingFace dataset

|

|

239

|

+

hud rl hud-evals/basic-2048

|

|

240

|

+

|

|

241

|

+

# Option B: Download first, modify, then train

|

|

242

|

+

hud get hud-evals/basic-2048

|

|

243

|

+

hud rl basic-2048.jsonl

|

|

244

|

+

|

|

245

|

+

# Optional: baseline evaluation

|

|

246

|

+

hud eval basic-2048.jsonl

|

|

252

247

|

```

|

|

253

248

|

|

|

254

|

-

|

|

249

|

+

Supports multi‑turn RL for both:

|

|

250

|

+

- Language‑only models (e.g., `Qwen/Qwen2.5-7B-Instruct`)

|

|

251

|

+

- Vision‑Language models (e.g., `Qwen/Qwen2.5-VL-3B-Instruct`)

|

|

252

|

+

|

|

253

|

+

By default, `hud rl` provisions a persistent server and trainer in the cloud, streams telemetry to `app.hud.so`, and lets you monitor/manage models at `app.hud.so/models`. Use `--local` to run entirely on your machines (typically 2+ GPUs: one for vLLM, the rest for training).

|

|

255

254

|

|

|

256

|

-

|

|

255

|

+

Any HUD MCP environment and evaluation works with our RL pipeline (including remote configurations). See the guided docs: `https://docs.hud.so/train-agents/quickstart`.

|

|

257

256

|

|

|

258

257

|

## Benchmarking Agents

|

|

259

258

|

|

|

{hud_python-0.4.30.dist-info → hud_python-0.4.32.dist-info}/RECORD

CHANGED

|

@@ -2,14 +2,14 @@ hud/__init__.py,sha256=JMDFUE1pP0J1Xl_miBdt7ERvoffZmTzSFe8yxz512A8,552

|

|

|

2

2

|

hud/__main__.py,sha256=YR8Dq8OhINOsVfQ55PmRXXg4fEK84Rt_-rMtJ5rvhWo,145

|

|

3

3

|

hud/settings.py,sha256=sMS31iW1m-5VpWk-Blhi5-obLcUA0fwxWE1GgJz-vqU,2708

|

|

4

4

|

hud/types.py,sha256=Cn9suZ_ZitLnxmnknfbCYVvmLsXRWI56kJ3LXtdfI6M,10157

|

|

5

|

-

hud/version.py,sha256=

|

|

5

|

+

hud/version.py,sha256=amJJuAW2iW2bL8JO7j8MSy3wzSXv2pKn1GXk85UJEVM,105

|

|

6

6

|

hud/agents/__init__.py,sha256=UoIkljWdbq4bM0LD-mSaw6w826EqdEjOk7r6glNYwYQ,286

|

|

7

7

|

hud/agents/base.py,sha256=_u1zR3gXzZ1RlTCUYdMcvgHqdJBC4-AB1lZt0yBx8lg,35406

|

|

8

8

|

hud/agents/claude.py,sha256=wHiw8iAnjnRmZyKRKcOhagCDQMhz9Z6rlSBWqH1X--M,15781

|

|

9

9

|

hud/agents/grounded_openai.py,sha256=U-FHjB2Nh1_o0gmlxY5F17lWJ3oHsNRIB2a7z-IKB64,11231

|

|

10

10

|

hud/agents/langchain.py,sha256=1EgCy8jfjunsWxlPC5XfvfLS6_XZVrIF1ZjtHcrvhYw,9584

|

|

11

11

|

hud/agents/openai.py,sha256=ovARRWNuHqKkZ2Q_OCYSVCIZckrh8XY2jUB2p2x1m88,14259

|

|

12

|

-

hud/agents/openai_chat_generic.py,sha256=

|

|

12

|

+

hud/agents/openai_chat_generic.py,sha256=7n7timn3fvNRnL2xzWyOTeNTchej2r9cAL1mU6YnFdY,11605

|

|

13

13

|

hud/agents/misc/__init__.py,sha256=BYi4Ytp9b_vycpZFXnr5Oyw6ncKLNNGml8Jrb7bWUb4,136

|

|

14

14

|

hud/agents/misc/response_agent.py,sha256=OJdQJ76jP9xxQxVYJ-qPcdBxvFr8ABcwbP1f1I5zU5A,3227

|

|

15

15

|

hud/agents/tests/__init__.py,sha256=W-O-_4i34d9TTyEHV-O_q1Ai1gLhzwDaaPo02_TWQIY,34

|

|

@@ -27,20 +27,21 @@ hud/cli/debug.py,sha256=jtFW8J5F_3rhq1Hf1_SkJ7aLS3wjnyIs_LsC8k5cnzc,14200

|

|

|

27

27

|

hud/cli/dev.py,sha256=56vQdH9oe_XGnOcRcFbNIsLEoBnpCl1eANlRFUeddHQ,31734

|

|

28

28

|

hud/cli/eval.py,sha256=W_eY4uoIQwHcSCvxNaQeRfWC10uQA1UhBWiNQzQPuXM,22694

|

|

29

29

|

hud/cli/get.py,sha256=sksKrdzBGZa7ZuSoQkc0haj-CvOGVSSikoVXeaUd3N4,6274

|

|

30

|

-

hud/cli/init.py,sha256=

|

|

30

|

+

hud/cli/init.py,sha256=McZwpxZMXD-It_PXINCUy-SwUaPiQ7jdpSU5-F-caO8,19671

|

|

31

31

|

hud/cli/list_func.py,sha256=EVi2Vc3Lb3glBNJxFx4MPnZknZ4xmuJz1OFg_dc8a_E,7177

|

|

32

32

|

hud/cli/pull.py,sha256=Vd1l1-IwskyACzhtC8Df1SYINUZEYmFxrLl0s9cNN6c,12151

|

|

33

|

-

hud/cli/push.py,sha256=

|

|

33

|

+

hud/cli/push.py,sha256=dmjF-hGlMfq73tquDxsTuM9t50zrkE9PFJqW5vRmYSw,18380

|

|

34

34

|

hud/cli/remove.py,sha256=8vGQyXDqgtjz85_vtusoIG8zurH4RHz6z8UMevQRYM4,6861

|

|

35

35

|

hud/cli/flows/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

|

|

36

|

-

hud/cli/flows/tasks.py,sha256=

|

|

37

|

-

hud/cli/rl/__init__.py,sha256=

|

|

36

|

+

hud/cli/flows/tasks.py,sha256=cOIXs03_iAS68nIYj5NpzqlSh1vSbIZtp3dnt8PEyl4,8855

|

|

37

|

+

hud/cli/rl/__init__.py,sha256=BeqXdmzPwVBptz4j796XJRxSC5B_9tQta5aKd0jDMvo,5000

|

|

38

38

|

hud/cli/rl/config.py,sha256=iNhCxotM33OEiP9gqPvn8A_AxrBVe6fcFCQTvc13xzA,2884

|

|

39

39

|

hud/cli/rl/display.py,sha256=hqJVGmO9csYinladhZwjF-GMvppYWngxDHajTyIJ_gM,5214

|

|

40

40

|

hud/cli/rl/gpu.py,sha256=peXS-NdUF5RyuSs0aZoCzGLboneBUpCy8f9f99WMrG0,2009

|

|

41

41

|

hud/cli/rl/gpu_utils.py,sha256=H5ckPwgj5EVP3yJ5eVihR5R7Y6Gp6pt8ZUfWCCwcLG4,11072

|

|

42

|

+

hud/cli/rl/local_runner.py,sha256=GssmDgCxGfFsi31aFj22vwCiwa9ELllEwQjbActxSXY,21514

|

|

42

43

|

hud/cli/rl/presets.py,sha256=DzOO82xL5QyzdVtlX-Do1CODMvDz9ILMPapjU92jcZg,3051

|

|

43

|

-

hud/cli/rl/remote_runner.py,sha256=

|

|

44

|

+

hud/cli/rl/remote_runner.py,sha256=nSLPkBek1pBQiOYg-yqkc3La5dkQEN9xn9DaauSlYAA,13747

|

|

44

45

|

hud/cli/rl/rl_api.py,sha256=INJobvSa50ccR037u_GPsDa_9WboWyNwqEaoh9hcXj0,4306

|

|

45

46

|

hud/cli/rl/vllm.py,sha256=Gq_M6KsQArGz7FNIdemuM5mk16mu3xe8abpO2GCCuOE,6093

|

|

46

47

|

hud/cli/tests/__init__.py,sha256=ZrGVkmH7DHXGqOvjOSNGZeMYaFIRB2K8c6hwr8FPJ-8,68

|

|

@@ -61,7 +62,7 @@ hud/cli/tests/test_registry.py,sha256=-o9MvQTcBElteqrg0XW8Bg59KrHCt88ZyPqeaAlyyT

|

|

|

61

62

|

hud/cli/tests/test_utils.py,sha256=_oa2lTvgqJxXe0Mtovxb8x-Sug-f6oJJKvG67r5pFtA,13474

|

|

62

63

|

hud/cli/utils/__init__.py,sha256=L6s0oNzY2LugGp9faodCPnjzM-ZUorUH05-HmYOq5hY,35

|

|

63

64

|

hud/cli/utils/cursor.py,sha256=fy850p0rVp5k_1wwOCI7rK1SggbselJrywFInSQ2gio,3009

|

|

64

|

-

hud/cli/utils/docker.py,sha256

|

|

65

|

+

hud/cli/utils/docker.py,sha256=-nAj7wRRIilbezG0-pCHA2-tleoqUJN9sDXHxvMWilU,7331

|

|

65

66

|

hud/cli/utils/environment.py,sha256=y_c0ohxWrM054ZKid0KOQPzs2M2vh985AsumPG2wTPc,4282

|

|

66

67

|

hud/cli/utils/interactive.py,sha256=tcwp9HkAyr2_GiM3Raba4h0P_OgCksQKram80BucPo4,16546

|

|

67

68

|

hud/cli/utils/logging.py,sha256=DyOWuzZUg6HeKCqfs6ufb703XS3bW4G2pzaXVAvDqvA,9018

|

|

@@ -113,10 +114,10 @@ hud/rl/chat_template.jinja,sha256=XTdzI8oFGEcSA-exKxyHaprwRDmX5Am1KEb0VxvUc6U,49

|

|

|

113

114

|

hud/rl/config.py,sha256=PAKYPCsKl8yg_j3gJSE5SJUgLM7j0lFy0K_Vt4-otDM,5384

|

|

114

115

|

hud/rl/distributed.py,sha256=8avhrb0lHYkhW22Z7MfkqSnlczWj5jMrUMEtkcoCf74,2473

|

|

115

116

|

hud/rl/learner.py,sha256=FKIgIIghsNiDr_g090xokOO_BxNmTSj1O-TSJzIq_Uw,24703

|

|

116

|

-

hud/rl/train.py,sha256=

|

|

117

|

+

hud/rl/train.py,sha256=ZigkUKj-I1nsYmFByZprqaoDZ88LVDH-6auYneEPOsA,13555

|

|

117

118

|

hud/rl/types.py,sha256=lrLKo7iaqodYth2EyeuOQfLiuzXfYM2eJjPmpObrD7c,3965

|

|

118

119

|

hud/rl/utils.py,sha256=IsgVUUibxnUzb32a4mu1sYrgJC1CwoG9E-Dd5y5VDOA,19115

|

|

119

|

-

hud/rl/vllm_adapter.py,sha256=

|

|

120

|

+

hud/rl/vllm_adapter.py,sha256=O2_TdTGIyNr9zRGhCw18XWjOKYzEM3049wvlyL2x0sc,4751

|

|

120

121

|

hud/rl/tests/__init__.py,sha256=PXmD3Gs6xOAwaYKb4HnwZERDjX05N1QF-aU6ya0dBtE,27

|

|

121

122

|

hud/rl/tests/test_learner.py,sha256=qfSHFFROteRb98TjBuAKjFmZjCGfuWXPysVvTAWJ7wQ,6025

|

|

122

123

|

hud/rl/utils/start_vllm_server.sh,sha256=ThPokrLK_Qm_uh916fHXXBfMlw1TC97P57-AEI5MuOc,910

|

|

@@ -197,10 +198,10 @@ hud/utils/tests/test_init.py,sha256=2QLQSGgyP9wJhOvPCusm_zjJad0qApOZi1BXpxcdHXQ,

|

|

|

197

198

|

hud/utils/tests/test_mcp.py,sha256=0pUa16mL-bqbZDXp5NHBnt1gO5o10BOg7zTMHZ1DNPM,4023

|

|

198

199

|

hud/utils/tests/test_progress.py,sha256=QSF7Kpi03Ff_l3mAeqW9qs1nhK50j9vBiSobZq7T4f4,7394

|

|

199

200

|

hud/utils/tests/test_telemetry.py,sha256=5jl7bEx8C8b-FfFUko5pf4UY-mPOR-9HaeL98dGtVHM,2781

|

|

200

|

-

hud/utils/tests/test_version.py,sha256=

|

|

201

|

+

hud/utils/tests/test_version.py,sha256=HVazB_GTYA2lJ3Oc10a6KPOvPoBBQOGuYoJl7yIazWw,160

|

|

201

202

|

hud/py.typed,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

|

|

202

|

-

hud_python-0.4.

|

|

203

|

-

hud_python-0.4.

|

|

204

|

-

hud_python-0.4.

|

|

205

|

-

hud_python-0.4.

|

|

206

|

-

hud_python-0.4.

|

|

203

|

+

hud_python-0.4.32.dist-info/METADATA,sha256=Ve-zWpi0dWcv8bjC4wCcNZnQtsrlFTOwAaWs4RmQ_W0,20861

|

|

204

|

+

hud_python-0.4.32.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87

|

|

205

|

+

hud_python-0.4.32.dist-info/entry_points.txt,sha256=jJbodNFg1m0-CDofe5AHvB4zKBq7sSdP97-ohaQ3ae4,63

|

|

206

|

+

hud_python-0.4.32.dist-info/licenses/LICENSE,sha256=yIzBheVUf86FC1bztAcr7RYWWNxyd3B-UJQ3uddg1HA,1078

|

|

207

|

+

hud_python-0.4.32.dist-info/RECORD,,

|

|
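Each RECORD entry above has the form `path,sha256=<digest>,<size>`, where the digest is the SHA-256 hash of the file encoded as URL-safe base64 with the trailing `=` padding removed. The sketch below recomputes such an entry; the helper names are hypothetical. The zero-byte `hud/py.typed` entry listed in the diff serves as a check.

```python
import base64
import hashlib


def record_hash(data: bytes) -> str:
    """Return the RECORD-style hash field for the given file contents."""
    digest = hashlib.sha256(data).digest()
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"sha256={encoded}"


def record_line(path: str, data: bytes) -> str:
    """Return a full RECORD line: path, hash, and size in bytes."""
    return f"{path},{record_hash(data)},{len(data)}"


# An empty file reproduces the hud/py.typed entry shown in the diff.
print(record_line("hud/py.typed", b""))
```

Comparing recomputed lines against RECORD is a quick way to confirm that an unpacked wheel matches what the registry published.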

File without changes

|

|

File without changes

|

|

File without changes

|