cobweb-launcher 1.2.25__py3-none-any.whl → 3.2.20__py3-none-any.whl


- cobweb/__init__.py +4 -1

- cobweb/base/__init__.py +3 -3

- cobweb/base/common_queue.py +37 -16

- cobweb/base/item.py +35 -16

- cobweb/base/{log.py → logger.py} +3 -3

- cobweb/base/request.py +741 -54

- cobweb/base/response.py +380 -13

- cobweb/base/seed.py +96 -48

- cobweb/base/task_queue.py +180 -0

- cobweb/base/test.py +257 -0

- cobweb/constant.py +10 -1

- cobweb/crawlers/crawler.py +12 -155

- cobweb/db/api_db.py +3 -2

- cobweb/db/redis_db.py +117 -28

- cobweb/launchers/__init__.py +4 -3

- cobweb/launchers/distributor.py +141 -0

- cobweb/launchers/launcher.py +95 -157

- cobweb/launchers/uploader.py +68 -0

- cobweb/log_dots/__init__.py +2 -0

- cobweb/log_dots/dot.py +258 -0

- cobweb/log_dots/loghub_dot.py +53 -0

- cobweb/pipelines/__init__.py +1 -1

- cobweb/pipelines/pipeline.py +5 -55

- cobweb/pipelines/pipeline_csv.py +25 -0

- cobweb/pipelines/pipeline_loghub.py +32 -12

- cobweb/schedulers/__init__.py +1 -0

- cobweb/schedulers/scheduler.py +66 -0

- cobweb/schedulers/scheduler_with_redis.py +189 -0

- cobweb/setting.py +27 -40

- cobweb/utils/__init__.py +5 -3

- cobweb/utils/bloom.py +58 -58

- cobweb/{base → utils}/decorators.py +14 -12

- cobweb/utils/dotting.py +300 -0

- cobweb/utils/oss.py +113 -94

- cobweb/utils/tools.py +3 -15

- {cobweb_launcher-1.2.25.dist-info → cobweb_launcher-3.2.20.dist-info}/METADATA +31 -43

- cobweb_launcher-3.2.20.dist-info/RECORD +44 -0

- {cobweb_launcher-1.2.25.dist-info → cobweb_launcher-3.2.20.dist-info}/WHEEL +1 -1

- cobweb/crawlers/base_crawler.py +0 -144

- cobweb/crawlers/file_crawler.py +0 -98

- cobweb/launchers/launcher_air.py +0 -88

- cobweb/launchers/launcher_api.py +0 -221

- cobweb/launchers/launcher_pro.py +0 -222

- cobweb/pipelines/base_pipeline.py +0 -54

- cobweb/pipelines/loghub_pipeline.py +0 -34

- cobweb/pipelines/pipeline_console.py +0 -22

- cobweb_launcher-1.2.25.dist-info/RECORD +0 -40

- {cobweb_launcher-1.2.25.dist-info → cobweb_launcher-3.2.20.dist-info}/LICENSE +0 -0

- {cobweb_launcher-1.2.25.dist-info → cobweb_launcher-3.2.20.dist-info}/top_level.txt +0 -0

|

@@ -1,22 +1,21 @@

|

|

|

1

1

|

Metadata-Version: 2.1

|

|

2

2

|

Name: cobweb-launcher

|

|

3

|

-

Version:

|

|

3

|

+

Version: 3.2.20

|

|

4

4

|

Summary: spider_hole

|

|

5

5

|

Home-page: https://github.com/Juannie-PP/cobweb

|

|

6

6

|

Author: Juannie-PP

|

|

7

7

|

Author-email: 2604868278@qq.com

|

|

8

8

|

License: MIT

|

|

9

|

-

Keywords: cobweb-launcher,

|

|

9

|

+

Keywords: cobweb-launcher,cobweb,spider

|

|

10

10

|

Platform: UNKNOWN

|

|

11

11

|

Classifier: Programming Language :: Python :: 3

|

|

12

|

+

Classifier: License :: OSI Approved :: MIT License

|

|

13

|

+

Classifier: Operating System :: OS Independent

|

|

12

14

|

Requires-Python: >=3.7

|

|

13

15

|

Description-Content-Type: text/markdown

|

|

14

|

-

|

|

15

|

-

Requires-Dist:

|

|

16

|

-

Requires-Dist: oss2 (>=2.18.1)

|

|

17

|

-

Requires-Dist: redis (>=4.4.4)

|

|

16

|

+

Requires-Dist: requests>=2.19.1

|

|

17

|

+

Requires-Dist: redis>=4.4.4

|

|

18

18

|

Requires-Dist: aliyun-log-python-sdk

|

|

19

|

-

Requires-Dist: mmh3

|

|

20

19

|

|

|

21

20

|

# cobweb

|

|

22

21

|

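cobweb is a Python-based distributed crawler scheduling framework. It currently supports distributed and single-machine crawlers, as well as custom databases, custom data storage, and custom data processing.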

|

|

@@ -34,12 +33,12 @@ pip3 install --upgrade cobweb-launcher

|

|

|

34

33

|

```

|

|

35

34

|

## Usage

|

|

36

35

|

### 1. Creating a task

|

|

37

|

-

-

|

|

36

|

+

- Creating a Launcher task

|

|

38

37

|

```python

|

|

39

|

-

from cobweb import

|

|

38

|

+

from cobweb import Launcher

|

|

40

39

|

|

|

41

40

|

# Create the launcher

|

|

42

|

-

app =

|

|

41

|

+

app = Launcher(task="test", project="test")

|

|

43

42

|

|

|

44

43

|

# Set the crawl seeds

|

|

45

44

|

app.SEEDS = [{

|

|

@@ -49,29 +48,15 @@ app.SEEDS = [{

|

|

|

49

48

|

# Start the task

|

|

50

49

|

app.start()

|

|

51

50

|

```

|

|

52

|

-

- Creating a LauncherPro task

|

|

53

|

-

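LauncherPro relies on Redis for distributed scheduling. To use the LauncherPro launcher, configure the environment variables or the Redis settings in a custom setting file; see `2. Custom configuration parameters` for details.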

|

|

54

|

-

```python

|

|

55

|

-

from cobweb import LauncherPro

|

|

56

|

-

|

|

57

|

-

# Create the launcher

|

|

58

|

-

app = LauncherPro(

|

|

59

|

-

task="test",

|

|

60

|

-

project="test"

|

|

61

|

-

)

|

|

62

|

-

...

|

|

63

|

-

# Start the task

|

|

64

|

-

app.start()

|

|

65

|

-

```

|

|

66

51

|

### 2. Custom configuration parameters

|

|

67

52

|

- Using a custom setting file, passed as an import string

|

|

68

53

|

> Default configuration file: import cobweb.setting

|

|

69

54

|

> Not recommended! This currently has bugs; use at your own risk.

|

|

70

55

|

For example, a custom setting.py file created in the same directory.

|

|

71

56

|

```python

|

|

72

|

-

from cobweb import

|

|

57

|

+

from cobweb import Launcher

|

|

73

58

|

|

|

74

|

-

app =

|

|

59

|

+

app = Launcher(

|

|

75

60

|

task="test",

|

|

76

61

|

project="test",

|

|

77

62

|

setting="import setting"

|

|

@@ -83,10 +68,10 @@ app.start()

|

|

|

83

68

|

```

|

|

84

69

|

- Overriding the values of objects in setting

|

|

85

70

|

```python

|

|

86

|

-

from cobweb import

|

|

71

|

+

from cobweb import Launcher

|

|

87

72

|

|

|

88

73

|

# Create the launcher

|

|

89

|

-

app =

|

|

74

|

+

app = Launcher(

|

|

90

75

|

task="test",

|

|

91

76

|

project="test",

|

|

92

77

|

REDIS_CONFIG = {

|

|

@@ -104,10 +89,10 @@ app.start()

|

|

|

104

89

|

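`@app.request` is a decorator that wraps a custom request function. It runs before the request is sent and returns a Request object or an object that inherits from BaseItem; use it to control the request parameters.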

|

|

105

90

|

```python

|

|

106

91

|

from typing import Union

|

|

107

|

-

from cobweb import

|

|

92

|

+

from cobweb import Launcher

|

|

108

93

|

from cobweb.base import Seed, Request, BaseItem

|

|

109

94

|

|

|

110

|

-

app =

|

|

95

|

+

app = Launcher(

|

|

111

96

|

task="test",

|

|

112

97

|

project="test"

|

|

113

98

|

)

|

|

@@ -120,7 +105,7 @@ def request(seed: Seed) -> Union[Request, BaseItem]:

|

|

|

120

105

|

proxies = {"http": ..., "https": ...}

|

|

121

106

|

yield Request(seed.url, seed, ..., proxies=proxies, timeout=15)

|

|

122

107

|

# yield xxxItem(seed, ...) # skip the request and parsing stages and go straight to data storage

|

|

123

|

-

|

|

108

|

+

|

|

124

109

|

...

|

|

125

110

|

|

|

126

111

|

app.start()

|

|

@@ -132,10 +117,10 @@ app.start()

|

|

|

132

117

|

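`@app.download` is a decorator that wraps a custom download function. It runs when the request is made and returns a Response object or an object that inherits from BaseItem; use it to control the request parameters.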

|

|

133

118

|

```python

|

|

134

119

|

from typing import Union

|

|

135

|

-

from cobweb import

|

|

120

|

+

from cobweb import Launcher

|

|

136

121

|

from cobweb.base import Request, Response, BaseItem

|

|

137

122

|

|

|

138

|

-

app =

|

|

123

|

+

app = Launcher(

|

|

139

124

|

task="test",

|

|

140

125

|

project="test"

|

|

141

126

|

)

|

|

@@ -149,7 +134,7 @@ def download(item: Request) -> Union[BaseItem, Response]:

|

|

|

149

134

|

...

|

|

150

135

|

yield Response(item.seed, response, ...) # return a Response object for parsing

|

|

151

136

|

# yield xxxItem(seed, ...) # skip the request and parsing stages and go straight to data storage

|

|

152

|

-

|

|

137

|

+

|

|

153

138

|

...

|

|

154

139

|

|

|

155

140

|

app.start()

|

|

@@ -163,14 +148,14 @@ app.start()

|

|

|

163

148

|

The parse function returns objects that inherit from BaseItem; yield them to drive the data storage flow.

|

|

164

149

|

```python

|

|

165

150

|

from typing import Union

|

|

166

|

-

from cobweb import

|

|

151

|

+

from cobweb import Launcher

|

|

167

152

|

from cobweb.base import Seed, Response, BaseItem

|

|

168

153

|

|

|

169

154

|

class TestItem(BaseItem):

|

|

170

155

|

__TABLE__ = "test_data" # table name

|

|

171

156

|

__FIELDS__ = "field1, field2, field3" # field names

|

|

172

157

|

|

|

173

|

-

app =

|

|

158

|

+

app = Launcher(

|

|

174

159

|

task="test",

|

|

175

160

|

project="test"

|

|

176

161

|

)

|

|

@@ -182,7 +167,7 @@ def parse(item: Response) -> Union[Seed, BaseItem]:

|

|

|

182

167

|

...

|

|

183

168

|

yield TestItem(item.seed, field1=..., field2=..., field3=...)

|

|

184

169

|

# yield Seed(...) # build a new seed and push it to the consumption queue

|

|

185

|

-

|

|

170

|

+

|

|

186

171

|

...

|

|

187

172

|

|

|

188

173

|

app.start()

|

|

@@ -192,14 +177,17 @@ app.start()

|

|

|

192

177

|

> upload_item = item.to_dict

|

|

193

178

|

> upload_item["text"] = item.response.text

|

|

194

179

|

> yield ConsoleItem(item.seed, data=json.dumps(upload_item, ensure_ascii=False))

|

|

195

|

-

##

|

|

196

|

-

- Improve and optimize the queues; use the queue's wait() mechanism to synchronize module execution?

|

|

197

|

-

- Improve logging: in single-machine mode, write scheduling and saved data to files, and emit structured logs for each task

|

|

198

|

-

- Deduplication filtering (Bloom filter, etc.)

|

|

199

|

-

-

|

|

200

|

-

-

|

|

180

|

+

## todo

|

|

181

|

+

- [ ] Improve and optimize the queues; use the queue's wait() mechanism to synchronize module execution?

|

|

182

|

+

- [x] Improve logging: in single-machine mode, write scheduling and saved data to files, and emit structured logs for each task

|

|

183

|

+

- [ ] Deduplication filtering (Bloom filter, etc.)

|

|

184

|

+

- [ ] Request validation

|

|

185

|

+

- [ ] Exception callback

|

|

186

|

+

- [ ] Failure callback

|

|

201

187

|

|

|

202

188

|

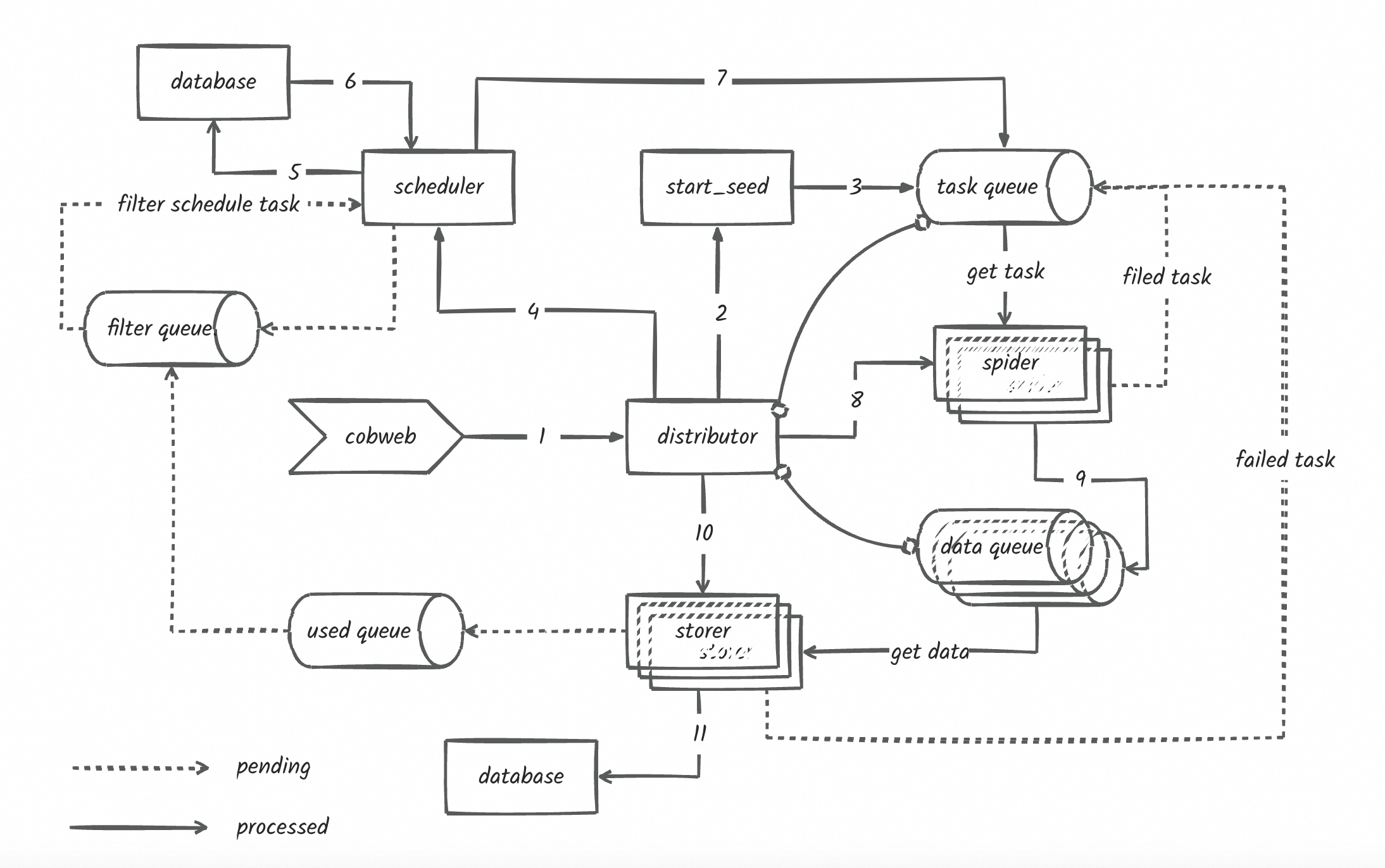

> 未更新流程图!!!

|

|

203

189

|

|

|

204

190

|

|

|

205

191

|

|

|

192

|

+

|

|

193

|

+

|

|
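The README excerpts in the METADATA diff above register user functions with decorators such as `@app.request` and `@app.download`, each written as a generator. As a rough illustration of how that style of registration can work, here is a minimal sketch. `MiniLauncher`, its `seeds` attribute, and the dictionary-based requests and responses are hypothetical stand-ins invented for this note; they are not the cobweb `Launcher` API, and no network access takes place.

```python
from typing import Callable, Dict, Iterator, List


class MiniLauncher:
    """Hypothetical stand-in for illustration; not the cobweb Launcher."""

    def __init__(self, task: str, project: str) -> None:
        self.task = task
        self.project = project
        self.seeds: List[dict] = []
        self._stages: Dict[str, Callable[[dict], Iterator[dict]]] = {}

    def _register(self, stage: str) -> Callable:
        # Return a decorator that stores the function under the stage name.
        def decorator(func):
            self._stages[stage] = func
            return func
        return decorator

    @property
    def request(self) -> Callable:
        return self._register("request")

    @property
    def download(self) -> Callable:
        return self._register("download")

    @property
    def parse(self) -> Callable:
        return self._register("parse")

    def start(self) -> List[dict]:
        # Chain the three generator stages for every seed.
        items: List[dict] = []
        for seed in self.seeds:
            for req in self._stages["request"](seed):
                for resp in self._stages["download"](req):
                    items.extend(self._stages["parse"](resp))
        return items


app = MiniLauncher(task="test", project="test")
app.seeds = [{"url": "https://example.com"}]


@app.request
def request(seed):
    yield {"url": seed["url"], "timeout": 15}


@app.download
def download(req):
    # The "response" is a canned dictionary rather than a real HTTP response.
    yield {"url": req["url"], "text": "hello"}


@app.parse
def parse(resp):
    yield {"url": resp["url"], "length": len(resp["text"])}


results = app.start()
```

Exposing each stage as a property that returns a fresh decorator is one way to allow the bare `@app.request` form, without parentheses, that the README uses.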

@@ -0,0 +1,44 @@

|

|

|

1

|

+

cobweb/__init__.py,sha256=1V2fOFvCncbsPlyzAOo0o6FB9mJfJfaomIVWgNb4hMk,155

|

|

2

|

+

cobweb/constant.py,sha256=u44MFrduzcuITPto9MwPuAGavfDjzi0FKh2looF-9FY,2988

|

|

3

|

+

cobweb/setting.py,sha256=Mte2hQPo1HQUTtOePIfP69GUR7TjeTA2AedaFLndueI,1679

|

|

4

|

+

cobweb/base/__init__.py,sha256=NanSxJr0WsqjqCNOQAlxlkt-vQEsERHYBzacFC057oI,222

|

|

5

|

+

cobweb/base/common_queue.py,sha256=hYdaM70KrWjvACuLKaGhkI2VqFCnd87NVvWzmnfIg8Q,1423

|

|

6

|

+

cobweb/base/item.py,sha256=1bS4U_3vzI2jzSSeoEbLoLT_5CfgLPopWiEYtaahbvw,1674

|

|

7

|

+

cobweb/base/logger.py,sha256=Vsg1bD4LXW91VgY-ANsmaUu-mD88hU_WS83f7jX3qF8,2011

|

|

8

|

+

cobweb/base/request.py,sha256=8CrnQQ9q4R6pX_DQmeaytboVxXquuQsYH-kfS8ECjIw,28845

|

|

9

|

+

cobweb/base/response.py,sha256=L3sX2PskV744uz3BJ8xMuAoAfGCeh20w8h0Cnd9vLo0,11377

|

|

10

|

+

cobweb/base/seed.py,sha256=ddaWCq_KaWwpmPl1CToJlfCxEEnoJ16kjo6azJs9uls,5000

|

|

11

|

+

cobweb/base/task_queue.py,sha256=2MqGpHGNmK5B-kqv7z420RWyihzB9zgDHJUiLsmtzOI,6402

|

|

12

|

+

cobweb/base/test.py,sha256=N8MDGb94KQeI4pC5rCc2QdohE9_5AgcOyGqKjbMsOEs,9588

|

|

13

|

+

cobweb/crawlers/__init__.py,sha256=msvkB9mTpsgyj8JfNMsmwAcpy5kWk_2NrO1Adw2Hkw0,29

|

|

14

|

+

cobweb/crawlers/crawler.py,sha256=ZZVZJ17RWuvzUFGLjqdvyVZpmuq-ynslJwXQzdm_UdQ,709

|

|

15

|

+

cobweb/db/__init__.py,sha256=uZwSkd105EAwYo95oZQXAfofUKHVIAZZIPpNMy-hm2Q,56

|

|

16

|

+

cobweb/db/api_db.py,sha256=qIhEGB-reKPVFtWPIJYFVK16Us32GBgYjgFjcF-V0GM,3036

|

|

17

|

+

cobweb/db/redis_db.py,sha256=X7dUpW50QcmRPjYlYg7b-fXF_fcjuRRk3DBx2ggetXk,7687

|

|

18

|

+

cobweb/exceptions/__init__.py,sha256=E9SHnJBbhD7fOgPFMswqyOf8SKRDrI_i25L0bSpohvk,32

|

|

19

|

+

cobweb/exceptions/oss_db_exception.py,sha256=iP_AImjNHT3-Iv49zCFQ3rdLnlvuHa3h2BXApgrOYpA,636

|

|

20

|

+

cobweb/launchers/__init__.py,sha256=6_v2jd2sgj6YnOB1nPKiYBskuXVb5xpQnq2YaDGJgQ8,100

|

|

21

|

+

cobweb/launchers/distributor.py,sha256=1ooOFqWqZLCn7zIDoraDDGJm9l7aAWzygz-UNUSqxpI,5662

|

|

22

|

+

cobweb/launchers/launcher.py,sha256=Shb6o6MAM38d32ybW2gY6qpGmhuiV7jo9TDh0f7rud8,5694

|

|

23

|

+

cobweb/launchers/uploader.py,sha256=QwJOmG7jq2T5sRzrT386zJ0YYNz-hAv0i6GOpoEaRdU,2075

|

|

24

|

+

cobweb/log_dots/__init__.py,sha256=F-3sNXac5uI80j-tNFGPv0lygy00uEI9XfFvK178RC4,55

|

|

25

|

+

cobweb/log_dots/dot.py,sha256=ng6PAtQwR56F-421jJwablAmvMM_n8CR0gBJBJTBMhM,9927

|

|

26

|

+

cobweb/log_dots/loghub_dot.py,sha256=HGbvvVuJqFCOa17-bDeZokhetOmo8J6YpnhL8prRxn4,1741

|

|

27

|

+

cobweb/pipelines/__init__.py,sha256=rtkaaCZ4u1XcxpkDLHztETQjEcLZ_6DXTHjdfcJlyxQ,97

|

|

28

|

+

cobweb/pipelines/pipeline.py,sha256=OgSEZ2DdqofpZcer1Wj1tuBqn8OHVjrYQ5poqt75czQ,357

|

|

29

|

+

cobweb/pipelines/pipeline_csv.py,sha256=TFqxqgVUqkBF6Jott4zd6fvCSxzG67lpafRQtXPw1eg,807

|

|

30

|

+

cobweb/pipelines/pipeline_loghub.py,sha256=zwIa_pcWBB2UNGd32Cu-i1jKGNruTbo2STdxl1WGwZ0,1829

|

|

31

|

+

cobweb/schedulers/__init__.py,sha256=LEya11fdAv0X28YzbQTeC1LQZ156Fj4cyEMGqQHUWW0,49

|

|

32

|

+

cobweb/schedulers/scheduler.py,sha256=Of-BjbBh679R6glc12Kc8iugeERCSusP7jolpCc1UMI,1740

|

|

33

|

+

cobweb/schedulers/scheduler_with_redis.py,sha256=yz6anTM3eNOQRP5WtlY1Kx6KaVgKgyR_FwvXtlydGqQ,7403

|

|

34

|

+

cobweb/utils/__init__.py,sha256=HV0rDW6PVpIVmN_bKquKufli15zuk50tw1ZZejyZ4Pc,173

|

|

35

|

+

cobweb/utils/bloom.py,sha256=A8xqtHXp7jgRoBuUlpovmq8lhU5y7IEF0FOCjfQDb6s,1855

|

|

36

|

+

cobweb/utils/decorators.py,sha256=ZwVQlz-lYHgXgKf9KRCp15EWPzTDdhoikYUNUCIqNeM,1140

|

|

37

|

+

cobweb/utils/dotting.py,sha256=e0pLEWB8sly1xvXOmJ_uHGQ6Bbw3O9tLcmUBfyNKRmQ,10633

|

|

38

|

+

cobweb/utils/oss.py,sha256=wmToIIVNO8nCQVRmreVaZejk01aCWS35e1NV6cr0yGI,4192

|

|

39

|

+

cobweb/utils/tools.py,sha256=14TCedqt07m4z6bCnFAsITOFixeGr8V3aOKk--L7Cr0,879

|

|

40

|

+

cobweb_launcher-3.2.20.dist-info/LICENSE,sha256=z1rxSIGOyzcSb3orZxFPxzx-0C1vTocmswqBNxpKfEk,1063

|

|

41

|

+

cobweb_launcher-3.2.20.dist-info/METADATA,sha256=GrOWnfJGxsO3LPt3_xWpb2ySDzRrupBYngzOpZ7NSPs,6115

|

|

42

|

+

cobweb_launcher-3.2.20.dist-info/WHEEL,sha256=tZoeGjtWxWRfdplE7E3d45VPlLNQnvbKiYnx7gwAy8A,92

|

|

43

|

+

cobweb_launcher-3.2.20.dist-info/top_level.txt,sha256=4GETBGNsKqiCUezmT-mJn7tjhcDlu7nLIV5gGgHBW4I,7

|

|

44

|

+

cobweb_launcher-3.2.20.dist-info/RECORD,,

|
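Each RECORD row above has the form `path,sha256=<digest>,<size>`. In the wheel format the digest is the URL-safe Base64 encoding of the SHA-256 hash with the trailing `=` padding removed. The sketch below shows how such a row could be recomputed from a file's bytes; `record_entry` is a hypothetical helper name, not part of any packaging tool.

```python
import base64
import hashlib


def record_entry(path: str, data: bytes) -> str:
    """Build a RECORD-style row for the given path and file contents."""
    digest = hashlib.sha256(data).digest()
    # URL-safe Base64 without the trailing "=" padding, as used in RECORD files.
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path},sha256={encoded},{len(data)}"


# An empty file is a convenient case to check by hand.
empty_row = record_entry("pkg/__init__.py", b"")
```

Comparing a recomputed row with the one listed in RECORD is a quick way to confirm that an unpacked file matches what the wheel declared.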

cobweb/crawlers/base_crawler.py

DELETED

|

@@ -1,144 +0,0 @@

|

|

|

1

|

-

import threading

|

|

2

|

-

import time

|

|

3

|

-

import traceback

|

|

4

|

-

|

|

5

|

-

from inspect import isgenerator

|

|

6

|

-

from typing import Union, Callable, Mapping

|

|

7

|

-

|

|

8

|

-

from cobweb.base import Queue, Seed, BaseItem, Request, Response, logger

|

|

9

|

-

from cobweb.constant import DealModel, LogTemplate

|

|

10

|

-

from cobweb.utils import download_log_info

|

|

11

|

-

from cobweb import setting

|

|

12

|

-

|

|

13

|

-

|

|

14

|

-

class Crawler(threading.Thread):

|

|

15

|

-

|

|

16

|

-

def __init__(

|

|

17

|

-

self,

|

|

18

|

-

upload_queue: Queue,

|

|

19

|

-

custom_func: Union[Mapping[str, Callable]],

|

|

20

|

-

launcher_queue: Union[Mapping[str, Queue]],

|

|

21

|

-

):

|

|

22

|

-

super().__init__()

|

|

23

|

-

|

|

24

|

-

self.upload_queue = upload_queue

|

|

25

|

-

for func_name, _callable in custom_func.items():

|

|

26

|

-

if isinstance(_callable, Callable):

|

|

27

|

-

self.__setattr__(func_name, _callable)

|

|

28

|

-

|

|

29

|

-

self.launcher_queue = launcher_queue

|

|

30

|

-

|

|

31

|

-

self.spider_thread_num = setting.SPIDER_THREAD_NUM

|

|

32

|

-

self.max_retries = setting.SPIDER_MAX_RETRIES

|

|

33

|

-

|

|

34

|

-

@staticmethod

|

|

35

|

-

def request(seed: Seed) -> Union[Request, BaseItem]:

|

|

36

|

-

stream = True if setting.DOWNLOAD_MODEL else False

|

|

37

|

-

yield Request(seed.url, seed, stream=stream, timeout=5)

|

|

38

|

-

|

|

39

|

-

@staticmethod

|

|

40

|

-

def download(item: Request) -> Union[Seed, BaseItem, Response, str]:

|

|

41

|

-

response = item.download()

|

|

42

|

-

yield Response(item.seed, response, **item.to_dict)

|

|

43

|

-

|

|

44

|

-

@staticmethod

|

|

45

|

-

def parse(item: Response) -> BaseItem:

|

|

46

|

-

pass

|

|

47

|

-

|

|

48

|

-

def get_seed(self) -> Seed:

|

|

49

|

-

return self.launcher_queue['todo'].pop()

|

|

50

|

-

|

|

51

|

-

def distribute(self, item, seed):

|

|

52

|

-

if isinstance(item, BaseItem):

|

|

53

|

-

self.upload_queue.push(item)

|

|

54

|

-

elif isinstance(item, Seed):

|

|

55

|

-

self.launcher_queue['new'].push(item)

|

|

56

|

-

elif isinstance(item, str) and item == DealModel.poll:

|

|

57

|

-

self.launcher_queue['todo'].push(seed)

|

|

58

|

-

elif isinstance(item, str) and item == DealModel.done:

|

|

59

|

-

self.launcher_queue['done'].push(seed)

|

|

60

|

-

elif isinstance(item, str) and item == DealModel.fail:

|

|

61

|

-

seed.params.seed_status = DealModel.fail

|

|

62

|

-

self.launcher_queue['done'].push(seed)

|

|

63

|

-

else:

|

|

64

|

-

raise TypeError("yield value type error!")

|

|

65

|

-

|

|

66

|

-

def spider(self):

|

|

67

|

-

while True:

|

|

68

|

-

seed = self.get_seed()

|

|

69

|

-

|

|

70

|

-

if not seed:

|

|

71

|

-

continue

|

|

72

|

-

|

|

73

|

-

elif seed.params.retry >= self.max_retries:

|

|

74

|

-

seed.params.seed_status = DealModel.fail

|

|

75

|

-

self.launcher_queue['done'].push(seed)

|

|

76

|

-

continue

|

|

77

|

-

|

|

78

|

-

seed_detail_log_info = download_log_info(seed.to_dict)

|

|

79

|

-

|

|

80

|

-

try:

|

|

81

|

-

request_iterators = self.request(seed)

|

|

82

|

-

|

|

83

|

-

if not isgenerator(request_iterators):

|

|

84

|

-

raise TypeError("request function isn't a generator!")

|

|

85

|

-

|

|

86

|

-

iterator_status = False

|

|

87

|

-

|

|

88

|

-

for request_item in request_iterators:

|

|

89

|

-

|

|

90

|

-

iterator_status = True

|

|

91

|

-

|

|

92

|

-

if isinstance(request_item, Request):

|

|

93

|

-

iterator_status = False

|

|

94

|

-

download_iterators = self.download(request_item)

|

|

95

|

-

if not isgenerator(download_iterators):

|

|

96

|

-

raise TypeError("download function isn't a generator")

|

|

97

|

-

|

|

98

|

-

for download_item in download_iterators:

|

|

99

|

-

iterator_status = True

|

|

100

|

-

if isinstance(download_item, Response):

|

|

101

|

-

iterator_status = False

|

|

102

|

-

logger.info(LogTemplate.download_info.format(

|

|

103

|

-

detail=seed_detail_log_info,

|

|

104

|

-

retry=seed.params.retry,

|

|

105

|

-

priority=seed.params.priority,

|

|

106

|

-

seed_version=seed.params.seed_version,

|

|

107

|

-

identifier=seed.identifier or "",

|

|

108

|

-

status=download_item.response,

|

|

109

|

-

response=download_log_info(download_item.to_dict)

|

|

110

|

-

))

|

|

111

|

-

parse_iterators = self.parse(download_item)

|

|

112

|

-

if not isgenerator(parse_iterators):

|

|

113

|

-

raise TypeError("parse function isn't a generator")

|

|

114

|

-

for parse_item in parse_iterators:

|

|

115

|

-

iterator_status = True

|

|

116

|

-

if isinstance(parse_item, Response):

|

|

117

|

-

raise TypeError("upload_item can't be a Response instance")

|

|

118

|

-

self.distribute(parse_item, seed)

|

|

119

|

-

else:

|

|

120

|

-

self.distribute(download_item, seed)

|

|

121

|

-

else:

|

|

122

|

-

self.distribute(request_item, seed)

|

|

123

|

-

|

|

124

|

-

if not iterator_status:

|

|

125

|

-

raise ValueError("request/download/parse function yield value error!")

|

|

126

|

-

|

|

127

|

-

except Exception as e:

|

|

128

|

-

logger.info(LogTemplate.download_exception.format(

|

|

129

|

-

detail=seed_detail_log_info,

|

|

130

|

-

retry=seed.params.retry,

|

|

131

|

-

priority=seed.params.priority,

|

|

132

|

-

seed_version=seed.params.seed_version,

|

|

133

|

-

identifier=seed.identifier or "",

|

|

134

|

-

exception=''.join(traceback.format_exception(type(e), e, e.__traceback__))

|

|

135

|

-

))

|

|

136

|

-

seed.params.retry += 1

|

|

137

|

-

self.launcher_queue['todo'].push(seed)

|

|

138

|

-

finally:

|

|

139

|

-

time.sleep(0.1)

|

|

140

|

-

|

|

141

|

-

def run(self):

|

|

142

|

-

for index in range(self.spider_thread_num):

|

|

143

|

-

threading.Thread(name=f"spider_{index}", target=self.spider).start()

|

|

144

|

-

|
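The removed `Crawler.spider` loop chains three generator stages (request, download, parse), requeues a seed when an exception is raised, and marks it as failed once its retry count reaches the maximum. The following is a simplified, single-threaded sketch of that control flow under stated assumptions: `run_pipeline` and the `demo_*` functions are hypothetical, plain lists and a `deque` replace the launcher queues, and a seed is marked done automatically rather than through a yielded `DealModel` value.

```python
from collections import deque
from inspect import isgenerator


def run_pipeline(seeds, request, download, parse, max_retries=3):
    """Run request -> download -> parse for each seed, retrying on errors."""
    todo = deque({"seed": seed, "retry": 0} for seed in seeds)
    done, failed, uploaded = [], [], []
    while todo:
        entry = todo.popleft()
        if entry["retry"] >= max_retries:
            failed.append(entry["seed"])
            continue
        try:
            request_iter = request(entry["seed"])
            if not isgenerator(request_iter):
                raise TypeError("request function isn't a generator!")
            items = []
            for req in request_iter:
                for resp in download(req):
                    items.extend(parse(resp))
        except Exception:
            # Count the failure and put the seed back on the queue.
            entry["retry"] += 1
            todo.append(entry)
        else:
            uploaded.extend(items)
            done.append(entry["seed"])
    return done, failed, uploaded


calls = {"flaky": 0}


def demo_request(seed):
    yield {"url": seed}


def demo_download(req):
    if req["url"] == "broken":
        raise IOError("always fails")
    if req["url"] == "flaky":
        calls["flaky"] += 1
        if calls["flaky"] == 1:
            raise IOError("fails once")
    yield {"url": req["url"], "body": "ok"}


def demo_parse(resp):
    yield (resp["url"], resp["body"])


done, failed, uploaded = run_pipeline(
    ["flaky", "good", "broken"], demo_request, demo_download, demo_parse
)
```

Unlike the removed code, the sketch buffers parsed items per seed and only publishes them on success, so a retried seed does not produce duplicate items.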

cobweb/crawlers/file_crawler.py

DELETED

|

@@ -1,98 +0,0 @@

|

|

|

1

|

-

import os

|

|

2

|

-

from typing import Union

|

|

3

|

-

from cobweb import setting

|

|

4

|

-

from cobweb.utils import OssUtil

|

|

5

|

-

from cobweb.crawlers import Crawler

|

|

6

|

-

from cobweb.base import Seed, BaseItem, Request, Response

|

|

7

|

-

from cobweb.exceptions import OssDBPutPartError, OssDBMergeError

|

|

8

|

-

|

|

9

|

-

|

|

10

|

-

oss_util = OssUtil(is_path_style=bool(int(os.getenv("PRIVATE_LINK", 0))))

|

|

11

|

-

|

|

12

|

-

|

|

13

|

-

class FileCrawlerAir(Crawler):

|

|

14

|

-

|

|

15

|

-

@staticmethod

|

|

16

|

-

def download(item: Request) -> Union[Seed, BaseItem, Response, str]:

|

|

17

|

-

seed_dict = item.seed.to_dict

|

|

18

|

-

seed_dict["bucket_name"] = oss_util.bucket

|

|

19

|

-

try:

|

|

20

|

-

seed_dict["oss_path"] = key = item.seed.oss_path or getattr(item, "oss_path")

|

|

21

|

-

|

|

22

|

-

if oss_util.exists(key):

|

|

23

|

-

seed_dict["data_size"] = oss_util.head(key).content_length

|

|

24

|

-

yield Response(item.seed, "exists", **seed_dict)

|

|

25

|

-

|

|

26

|

-

else:

|

|

27

|

-

seed_dict.setdefault("end", "")

|

|

28

|

-

seed_dict.setdefault("start", 0)

|

|

29

|

-

|

|

30

|

-

if seed_dict["end"] or seed_dict["start"]:

|

|

31

|

-

start, end = seed_dict["start"], seed_dict["end"]

|

|

32

|

-

item.request_setting["headers"]['Range'] = f'bytes={start}-{end}'

|

|

33

|

-

|

|

34

|

-

if not item.seed.identifier:

|

|

35

|

-

content = b""

|

|

36

|

-

chunk_size = oss_util.chunk_size

|

|

37

|

-

min_upload_size = oss_util.min_upload_size

|

|

38

|

-

seed_dict.setdefault("position", 1)

|

|

39

|

-

|

|

40

|

-

response = item.download()

|

|

41

|

-

|

|

42

|

-

content_type = response.headers.get("content-type", "").split(";")[0]

|

|

43

|

-

seed_dict["data_size"] = content_length = int(response.headers.get("content-length", 0))

|

|

44

|

-

|

|

45

|

-

if content_type and content_type in setting.FILE_FILTER_CONTENT_TYPE:

|

|

46

|

-

"""Filter by response content type"""

|

|

47

|

-

response.close()

|

|

48

|

-

seed_dict["filter"] = True

|

|

49

|

-

seed_dict["msg"] = f"response content type is {content_type}"

|

|

50

|

-

yield Response(item.seed, response, **seed_dict)

|

|

51

|

-

|

|

52

|

-

elif seed_dict['position'] == 1 and min_upload_size >= content_length > 0:

|

|

53

|

-

"""Flag files that are too small and return"""

|

|

54

|

-

response.close()

|

|

55

|

-

seed_dict["filter"] = True

|

|

56

|

-

seed_dict["msg"] = "file size is too small"

|

|

57

|

-

yield Response(item.seed, response, **seed_dict)

|

|

58

|

-

|

|

59

|

-

elif seed_dict['position'] == 1 and chunk_size > content_length > min_upload_size:

|

|

60

|

-

"""Download small files directly"""

|

|

61

|

-

for part_data in response.iter_content(chunk_size):

|

|

62

|

-

content += part_data

|

|

63

|

-

response.close()

|

|

64

|

-

oss_util.put(key, content)

|

|

65

|

-

yield Response(item.seed, response, **seed_dict)

|

|

66

|

-

|

|

67

|

-

else:

|

|

68

|

-

"""Download medium and large files synchronously in parts"""

|

|

69

|

-

seed_dict.setdefault("upload_id", oss_util.init_part(key).upload_id)

|

|

70

|

-

|

|

71

|

-

for part_data in response.iter_content(chunk_size):

|

|

72

|

-

content += part_data

|

|

73

|

-

if len(content) >= chunk_size:

|

|

74

|

-

upload_data = content[:chunk_size]

|

|

75

|

-

content = content[chunk_size:]

|

|

76

|

-

oss_util.put_part(key, seed_dict["upload_id"], seed_dict['position'], content)

|

|

77

|

-

seed_dict['start'] += len(upload_data)

|

|

78

|

-

seed_dict['position'] += 1

|

|

79

|

-

|

|

80

|

-

response.close()

|

|

81

|

-

|

|

82

|

-

if content:

|

|

83

|

-

oss_util.put_part(key, seed_dict["upload_id"], seed_dict['position'], content)

|

|

84

|

-

oss_util.merge(key, seed_dict["upload_id"])

|

|

85

|

-

seed_dict["data_size"] = oss_util.head(key).content_length

|

|

86

|

-

yield Response(item.seed, response, **seed_dict)

|

|

87

|

-

|

|

88

|

-

elif item.seed.identifier == "merge":

|

|

89

|

-

oss_util.merge(key, seed_dict["upload_id"])

|

|

90

|

-

seed_dict["data_size"] = oss_util.head(key).content_length

|

|

91

|

-

yield Response(item.seed, "merge", **seed_dict)

|

|

92

|

-

|

|

93

|

-

except OssDBPutPartError:

|

|

94

|

-

yield Seed(seed_dict)

|

|

95

|

-

except OssDBMergeError:

|

|

96

|

-

yield Seed(seed_dict, identifier="merge")

|

|

97

|

-

|

|

98

|

-

|
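The removed `FileCrawlerAir.download` buffers streamed chunks and uploads fixed-size parts, then uploads whatever remains before merging. A minimal sketch of that buffering pattern follows. `upload_in_parts` and the `put_part` callback are hypothetical names, and no OSS client is involved. Note that the removed loop passes `content` (the remainder after slicing) to `put_part`, whereas this sketch uploads the sliced part itself, which appears to be the intent.

```python
from typing import Callable, Iterable, List, Tuple


def upload_in_parts(
    chunks: Iterable[bytes],
    part_size: int,
    put_part: Callable[[int, bytes], None],
) -> int:
    """Regroup streamed chunks into parts of part_size bytes and upload them.

    Returns the number of parts uploaded. Part positions start at 1.
    """
    buffer = b""
    position = 1
    for chunk in chunks:
        buffer += chunk
        while len(buffer) >= part_size:
            # Slice off exactly one full part and keep the remainder buffered.
            part, buffer = buffer[:part_size], buffer[part_size:]
            put_part(position, part)
            position += 1
    if buffer:
        # The final part may be shorter than part_size.
        put_part(position, buffer)
        position += 1
    return position - 1


parts: List[Tuple[int, bytes]] = []
count = upload_in_parts(
    [b"abcd", b"efg", b"hij"], 4, lambda pos, data: parts.append((pos, data))
)
```

Taking the upload step as a callback keeps the slicing logic testable without a storage backend.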

cobweb/launchers/launcher_air.py

DELETED

|

@@ -1,88 +0,0 @@

|

|

|

1

|

-

import time

|

|

2

|

-

|

|

3

|

-

from cobweb.base import logger

|

|

4

|

-

from cobweb.constant import LogTemplate

|

|

5

|

-

from .launcher import Launcher, check_pause

|

|

6

|

-

|

|

7

|

-

|

|

8

|

-

class LauncherAir(Launcher):

|

|

9

|

-

|

|

10

|

-

# def _scheduler(self):

|

|

11

|

-

# if self.start_seeds:

|

|

12

|

-

# self.__LAUNCHER_QUEUE__['todo'].push(self.start_seeds)

|

|

13

|

-

|

|

14

|

-

@check_pause

|

|

15

|

-

def _insert(self):

|

|

16

|

-

seeds = {}

|

|

17

|

-

status = self.__LAUNCHER_QUEUE__['new'].length < self._new_queue_max_size

|

|

18

|

-

for _ in range(self._new_queue_max_size):

|

|

19

|

-

seed = self.__LAUNCHER_QUEUE__['new'].pop()

|

|

20

|

-

if not seed:

|

|

21

|

-

break

|

|

22

|

-

seeds[seed.to_string] = seed.params.priority

|

|

23

|

-

if seeds:

|

|

24

|

-

self.__LAUNCHER_QUEUE__['todo'].push(seeds)

|

|

25

|

-

if status:

|

|

26

|

-

time.sleep(self._new_queue_wait_seconds)

|

|

27

|

-

|

|

28

|

-

@check_pause

|

|

29

|

-

def _delete(self):

|

|

30

|

-

seeds = []

|

|

31

|

-

status = self.__LAUNCHER_QUEUE__['done'].length < self._done_queue_max_size

|

|

32

|

-

|

|

33

|

-

for _ in range(self._done_queue_max_size):

|

|

34

|

-

seed = self.__LAUNCHER_QUEUE__['done'].pop()

|

|

35

|

-

if not seed:

|

|

36

|

-

break

|

|

37

|

-

seeds.append(seed.to_string)

|

|

38

|

-

|

|

39

|

-

if seeds:

|

|

40

|

-

self._remove_doing_seeds(seeds)

|

|

41

|

-

|

|

42

|

-

if status:

|

|

43

|

-

time.sleep(self._done_queue_wait_seconds)

|

|

44

|

-

|

|

45

|

-

def _polling(self):

|

|

46

|

-

|

|

47

|

-

check_emtpy_times = 0

|

|

48

|

-

|

|

49

|

-

while not self._stop.is_set():

|

|

50

|

-

|

|

51

|

-

queue_not_empty_count = 0

|

|

52

|

-

pooling_wait_seconds = 30

|

|

53

|

-

|

|

54

|

-

for q in self.__LAUNCHER_QUEUE__.values():

|

|

55

|

-

if q.length != 0:

|

|

56

|

-

queue_not_empty_count += 1

|

|

57

|

-

|

|

58

|

-

if queue_not_empty_count == 0:

|

|

59

|

-

pooling_wait_seconds = 3

|

|

60

|

-

if self._pause.is_set():

|

|

61

|

-

check_emtpy_times = 0

|

|

62

|

-

if not self._task_model:

|

|

63

|

-

logger.info("Done! Ready to close thread...")

|

|

64

|

-

self._stop.set()

|

|

65

|

-

elif check_emtpy_times > 2:

|

|

66

|

-

self.__DOING__ = {}

|

|

67

|

-

self._pause.set()

|

|

68

|

-

else:

|

|

69

|

-

logger.info(

|

|

70

|

-

"check whether the task is complete, "

|

|

71

|

-

f"reset times {3 - check_emtpy_times}"

|

|

72

|

-

)

|

|

73

|

-

check_emtpy_times += 1

|

|

74

|

-

elif self._pause.is_set():

|

|

75

|

-

self._pause.clear()

|

|

76

|

-

self._execute()

|

|

77

|

-

else:

|

|

78

|

-

logger.info(LogTemplate.launcher_air_polling.format(

|

|

79

|

-

task=self.task,

|

|

80

|

-

doing_len=len(self.__DOING__.keys()),

|

|

81

|

-

todo_len=self.__LAUNCHER_QUEUE__['todo'].length,

|

|

82

|

-

done_len=self.__LAUNCHER_QUEUE__['done'].length,

|

|

83

|

-

upload_len=self.__LAUNCHER_QUEUE__['upload'].length,

|

|

84

|

-

))

|

|

85

|

-

|

|

86

|

-

time.sleep(pooling_wait_seconds)

|

|

87

|

-

|

|

88

|

-

|
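The removed `LauncherAir._polling` method treats the task as finished only after the queues have been observed empty on several consecutive checks, and resets the counter as soon as any queue has items again. The sketch below isolates that counting rule. `poll_until_idle` and its parameters are hypothetical, the queue lengths are supplied as plain lists instead of live queues, and the threshold of three is an assumption chosen to mirror the removed code rather than an exact reproduction of its comparison.

```python
from typing import Iterable, Optional, Sequence


def poll_until_idle(
    snapshots: Iterable[Sequence[int]], required_empty_checks: int = 3
) -> Optional[int]:
    """Return the 1-based check at which the queues count as idle, else None.

    Each snapshot holds the lengths of all queues at one polling check.
    """
    empty_checks = 0
    for tick, lengths in enumerate(snapshots, start=1):
        if any(lengths):
            # Work reappeared, so start counting from zero again.
            empty_checks = 0
        else:
            empty_checks += 1
            if empty_checks >= required_empty_checks:
                return tick
    return None


idle_at = poll_until_idle(
    [[1, 0], [0, 0], [0, 0], [2, 0], [0, 0], [0, 0], [0, 0]]
)
never_idle = poll_until_idle([[0], [0]])
```

Requiring consecutive empty checks guards against stopping during a brief gap in which every queue happens to be empty while a worker is still producing new seeds.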