active-vision 0.4.0__py3-none-any.whl → 0.4.1__py3-none-any.whl

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- active_vision/__init__.py +1 -1

- {active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/METADATA +7 -14

- active_vision-0.4.1.dist-info/RECORD +6 -0

- active_vision-0.4.0.dist-info/RECORD +0 -6

- {active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/WHEEL +0 -0

- {active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/licenses/LICENSE +0 -0

active_vision/__init__.py CHANGED

{active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/METADATA CHANGED

    @@ -1,6 +1,6 @@
     Metadata-Version: 2.4
     Name: active-vision
    -Version: 0.4.0
    +Version: 0.4.1
     Summary: Active learning for computer vision.
     Project-URL: Homepage, https://github.com/dnth/active-vision
     Project-URL: Bug Tracker, https://github.com/dnth/active-vision/issues

    @@ -16,6 +16,7 @@ Requires-Dist: loguru>=0.7.3
     Requires-Dist: seaborn>=0.13.2
     Requires-Dist: timm>=1.0.13
     Requires-Dist: transformers>=4.48.0
    +Requires-Dist: xinfer>=0.3.2
     Provides-Extra: dev
     Requires-Dist: black>=22.0; extra == 'dev'
     Requires-Dist: flake8>=4.0; extra == 'dev'

    @@ -42,8 +43,6 @@ Requires-Dist: pygments; extra == 'docs'
     Requires-Dist: pymdown-extensions; extra == 'docs'
     Requires-Dist: sphinx; extra == 'docs'
     Requires-Dist: watchdog; extra == 'docs'
    -Provides-Extra: zero-shot
    -Requires-Dist: x-infer>=0.3.2; extra == 'zero-shot'
     Description-Content-Type: text/markdown
     
     [](https://pypi.org/project/active-vision/)

    @@ -139,6 +138,8 @@ pip install -e .
     [![Open In Colab][colab_badge]](https://colab.research.google.com/github/dnth/active-vision/blob/main/nbs/imagenette/quickstart.ipynb)
     [![Open In Kaggle][kaggle_badge]](https://kaggle.com/kernels/welcome?src=https://github.com/dnth/active-vision/blob/main/nbs/imagenette/quickstart.ipynb)
     
    +The following are code snippets for the active learning loop in active-vision. I recommend running the quickstart notebook in Colab or Kaggle to see the full workflow.
    +
     ```python
     from active_vision import ActiveLearner
     

    @@ -149,11 +150,10 @@ al = ActiveLearner(name="cycle-1")
     al.load_model(model="resnet18", pretrained=True)
     
     # Load dataset
    -train_df = pd.read_parquet("training_samples.parquet")
     al.load_dataset(train_df, filepath_col="filepath", label_col="label", batch_size=8)
     
     # Train model
    -al.train(epochs=10, lr=5e-3
    +al.train(epochs=10, lr=5e-3)
     
     # Evaluate the model on a *labeled* evaluation set
     accuracy = al.evaluate(eval_df, filepath_col="filepath", label_col="label")

@@ -177,8 +177,8 @@ samples = al.sample_combination(

|

|

|

177

177

|

},

|

|

178

178

|

)

|

|

179

179

|

|

|

180

|

-

# Launch a Gradio UI to label the

|

|

181

|

-

al.label(samples, output_filename="

|

|

180

|

+

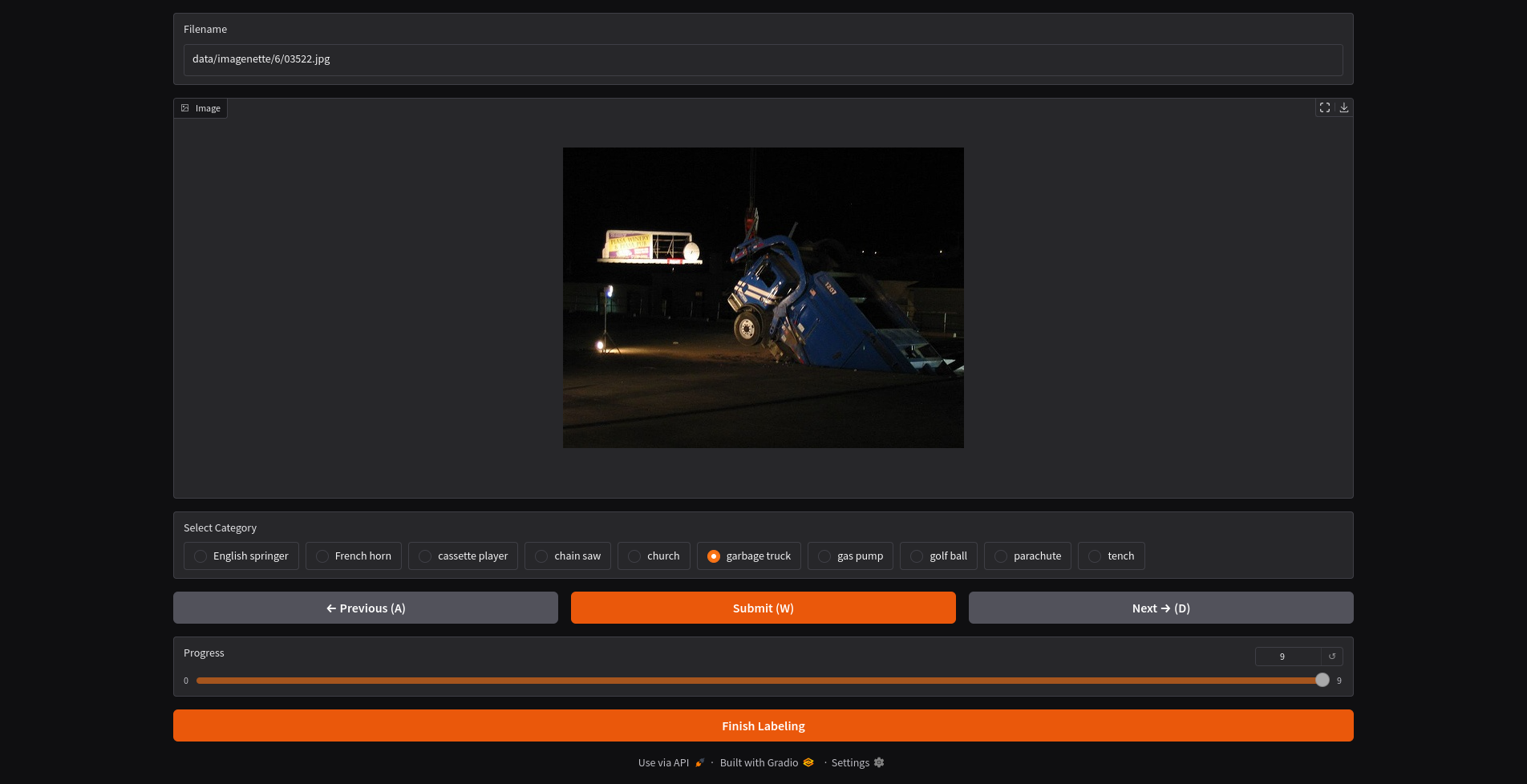

# Launch a Gradio UI to label the samples, save the labeled samples to a file

|

|

181

|

+

al.label(samples, output_filename="samples.parquet")

|

|

182

182

|

```

|

|

183

183

|

|

|

184

184

|

|

|

    @@ -191,18 +191,11 @@ Once complete, the labeled samples will be save into a new df.
     We can now add the newly labeled data to the training set.
     
     ```python
    -# Add newly labeled data to the dataset
     al.add_to_dataset(labeled_df, output_filename="active_labeled.parquet")
     ```
     
     Repeat the process until the model is good enough. Use the dataset to train a larger model and deploy.
     
    -> [!TIP]
    -> For the toy dataset, I got to about 93% accuracy on the evaluation set with 200+ labeled images. The best performing model on the [leaderboard](https://github.com/fastai/imagenette) got 95.11% accuracy training on all 9469 labeled images.
    ->
    -> This took me about 6 iterations of relabeling. Each iteration took about 5 minutes to complete including labeling and model training (resnet18). See the [notebook](./nbs/04_relabel_loop.ipynb) for more details.
    ->
    -> But using the dataset of 200+ images, I trained a more capable model (convnext_small_in22k) and got 99.3% accuracy on the evaluation set. See the [notebook](./nbs/05_retrain_larger.ipynb) for more details.
     
     
     ## 📊 Benchmarks
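
The METADATA hunks add `xinfer>=0.3.2` as a `Requires-Dist` with no marker and drop the `zero-shot` extra, which previously gated `x-infer>=0.3.2`. Core metadata is stored as email-style headers, so the standard library's `email.parser` can read it. The sketch below uses a hypothetical helper, `split_requirements`, that is not part of active-vision or the `packaging` library. It assumes extra markers of the simple form `extra == '<name>'`; real markers can combine conditions and are better evaluated with a proper PEP 508 marker parser.

```python
from email.parser import Parser


# Hypothetical helper (not part of active-vision or the `packaging` library).
# Core metadata uses RFC 822-style headers, so the stdlib email parser can read it.
def split_requirements(metadata_text: str) -> tuple[list[str], dict[str, list[str]]]:
    """Return (requirements not gated on an extra, {extra name: requirements gated on it})."""
    msg = Parser().parsestr(metadata_text, headersonly=True)
    base: list[str] = []
    extras: dict[str, list[str]] = {name: [] for name in msg.get_all("Provides-Extra", [])}
    for req in msg.get_all("Requires-Dist", []):
        spec, _, marker = req.partition(";")
        marker = marker.strip()
        # Simplification: only markers of the exact form `extra == '<name>'` are
        # recognized; any other requirement goes to the first list, marker included.
        if marker.startswith("extra =="):
            name = marker.split("==", 1)[1].strip().strip("'\"")
            extras.setdefault(name, []).append(spec.strip())
        else:
            base.append(req.strip())
    return base, extras


# Trimmed excerpts of the two METADATA versions shown in the hunks above.
OLD_METADATA = """\
Metadata-Version: 2.4
Name: active-vision
Version: 0.4.0
Requires-Dist: transformers>=4.48.0
Provides-Extra: zero-shot
Requires-Dist: x-infer>=0.3.2; extra == 'zero-shot'
"""

NEW_METADATA = """\
Metadata-Version: 2.4
Name: active-vision
Version: 0.4.1
Requires-Dist: transformers>=4.48.0
Requires-Dist: xinfer>=0.3.2
"""

print(split_requirements(OLD_METADATA))  # (['transformers>=4.48.0'], {'zero-shot': ['x-infer>=0.3.2']})
print(split_requirements(NEW_METADATA))  # (['transformers>=4.48.0', 'xinfer>=0.3.2'], {})
```

Running the same helper on the full METADATA files of both wheels would show the dependency now installs by default rather than only through the removed extra.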

active_vision-0.4.1.dist-info/RECORD ADDED

    @@ -0,0 +1,6 @@
    +active_vision/__init__.py,sha256=vauWDAlrr6fiIylIKSzErXOEopRtTsBk8G4hC9418M0,43
    +active_vision/core.py,sha256=ZDRylM3KsoLxy9qA9bld4WxzcKcyCwH8IJ1cFxtz5mE,41607
    +active_vision-0.4.1.dist-info/METADATA,sha256=LpgLc_E7jJVXxUHrIPv-1RZq_CEE3enyb0O2PDZMrJM,17262
    +active_vision-0.4.1.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87
    +active_vision-0.4.1.dist-info/licenses/LICENSE,sha256=xx0jnfkXJvxRnG63LTGOxlggYnIysveWIZ6H3PNdCrQ,11357
    +active_vision-0.4.1.dist-info/RECORD,,

active_vision-0.4.0.dist-info/RECORD REMOVED

    @@ -1,6 +0,0 @@
    -active_vision/__init__.py,sha256=fzly76fAU-lTwO6Ne4bvQjwryPBaB640_waXGbVmEts,43
    -active_vision/core.py,sha256=ZDRylM3KsoLxy9qA9bld4WxzcKcyCwH8IJ1cFxtz5mE,41607
    -active_vision-0.4.0.dist-info/METADATA,sha256=8uGHfmSNjsPOxu6tTfUIs2yVlQ3EKvBVMJTB0owSR0E,17954
    -active_vision-0.4.0.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87
    -active_vision-0.4.0.dist-info/licenses/LICENSE,sha256=xx0jnfkXJvxRnG63LTGOxlggYnIysveWIZ6H3PNdCrQ,11357
    -active_vision-0.4.0.dist-info/RECORD,,

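{active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/WHEEL

File without changes

{active_vision-0.4.0.dist-info → active_vision-0.4.1.dist-info}/licenses/LICENSE

File without changes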
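
Each RECORD lists every file in the wheel as `path,sha256=<digest>,<size>`, where the digest is the URL-safe base64 encoding of the SHA-256 hash with trailing `=` padding removed. Comparing the two RECORD files shows which files actually changed bytes. Here only `active_vision/__init__.py` and `METADATA` differ, while `active_vision/core.py`, `WHEEL`, and `licenses/LICENSE` are byte-identical. The following is a minimal stdlib sketch of that comparison, assuming the RECORD texts have already been read out of both wheels. `record_hash`, `parse_record`, `changed_files`, and the `<dist-info>` placeholder are hypothetical helpers, not an API of pip or any packaging tool.

```python
import base64
import csv
import hashlib
import io


def record_hash(data: bytes) -> str:
    """RECORD-style hash: URL-safe base64 of the SHA-256 digest, '=' padding removed."""
    digest = hashlib.sha256(data).digest()
    return "sha256=" + base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def _normalize(path: str) -> str:
    # Hypothetical normalization: the .dist-info directory name embeds the version,
    # so map it to a fixed placeholder to line entries up across versions.
    head, _, rest = path.partition("/")
    return f"<dist-info>/{rest}" if head.endswith(".dist-info") else path


def parse_record(text: str) -> dict[str, tuple[str, str]]:
    """Map normalized path -> (hash, size); RECORD is a CSV file."""
    entries: dict[str, tuple[str, str]] = {}
    for row in csv.reader(io.StringIO(text)):
        if row:  # skip blank lines
            path, hash_value, size = row
            entries[_normalize(path)] = (hash_value, size)
    return entries


def changed_files(old_record: str, new_record: str) -> set[str]:
    """Paths that were added, removed, or whose hash/size differs."""
    old, new = parse_record(old_record), parse_record(new_record)
    return {p for p in old.keys() | new.keys() if old.get(p) != new.get(p)}


OLD_RECORD = """\
active_vision/__init__.py,sha256=fzly76fAU-lTwO6Ne4bvQjwryPBaB640_waXGbVmEts,43
active_vision/core.py,sha256=ZDRylM3KsoLxy9qA9bld4WxzcKcyCwH8IJ1cFxtz5mE,41607
active_vision-0.4.0.dist-info/METADATA,sha256=8uGHfmSNjsPOxu6tTfUIs2yVlQ3EKvBVMJTB0owSR0E,17954
active_vision-0.4.0.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87
active_vision-0.4.0.dist-info/licenses/LICENSE,sha256=xx0jnfkXJvxRnG63LTGOxlggYnIysveWIZ6H3PNdCrQ,11357
active_vision-0.4.0.dist-info/RECORD,,
"""

NEW_RECORD = """\
active_vision/__init__.py,sha256=vauWDAlrr6fiIylIKSzErXOEopRtTsBk8G4hC9418M0,43
active_vision/core.py,sha256=ZDRylM3KsoLxy9qA9bld4WxzcKcyCwH8IJ1cFxtz5mE,41607
active_vision-0.4.1.dist-info/METADATA,sha256=LpgLc_E7jJVXxUHrIPv-1RZq_CEE3enyb0O2PDZMrJM,17262
active_vision-0.4.1.dist-info/WHEEL,sha256=qtCwoSJWgHk21S1Kb4ihdzI2rlJ1ZKaIurTj_ngOhyQ,87
active_vision-0.4.1.dist-info/licenses/LICENSE,sha256=xx0jnfkXJvxRnG63LTGOxlggYnIysveWIZ6H3PNdCrQ,11357
active_vision-0.4.1.dist-info/RECORD,,
"""

print(sorted(changed_files(OLD_RECORD, NEW_RECORD)))
# ['<dist-info>/METADATA', 'active_vision/__init__.py']
```

`record_hash` can also be applied to a file's bytes to check it against its RECORD entry. The comparison only tells you *which* files changed, not *how*.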