grnsight 6.0.7 → 7.2.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/.eslintrc.yml +4 -4

- package/.github/workflows/node.js.yml +35 -0

- package/README.md +1 -1

- package/database/README.md +218 -97

- package/database/constants.py +42 -0

- package/database/filter_update.py +168 -0

- package/database/grnsettings-database/README.md +52 -0

- package/database/grnsettings-database/schema.sql +4 -0

- package/database/loader.py +30 -0

- package/database/loader_update.py +36 -0

- package/database/network-database/scripts/generate_network.py +15 -23

- package/database/network-database/scripts/generate_new_network_version.py +17 -24

- package/database/protein-protein-database/README.md +71 -0

- package/database/protein-protein-database/schema.sql +37 -0

- package/database/protein-protein-database/scripts/generate_protein_network.py +227 -0

- package/database/protein-protein-database/scripts/remove_duplicates.sh +4 -0

- package/database/utils.py +418 -0

- package/package.json +3 -2

- package/server/app.js +2 -0

- package/server/config/config.js +4 -4

- package/server/controllers/additional-sheet-parser.js +2 -1

- package/server/controllers/constants.js +5 -0

- package/server/controllers/custom-workbook-controller.js +4 -3

- package/server/controllers/demo-workbooks.js +1462 -6

- package/server/controllers/export-constants.js +3 -2

- package/server/controllers/exporters/sif.js +6 -1

- package/server/controllers/exporters/xlsx.js +8 -3

- package/server/controllers/expression-sheet-parser.js +0 -6

- package/server/controllers/grnsettings-database-controller.js +17 -0

- package/server/controllers/importers/sif.js +30 -11

- package/server/controllers/network-database-controller.js +2 -2

- package/server/controllers/network-sheet-parser.js +54 -12

- package/server/controllers/protein-database-controller.js +18 -0

- package/server/controllers/sif-constants.js +11 -4

- package/server/controllers/spreadsheet-controller.js +44 -1

- package/server/controllers/workbook-constants.js +21 -4

- package/server/dals/expression-dal.js +4 -4

- package/server/dals/grnsetting-dal.js +49 -0

- package/server/dals/network-dal.js +14 -15

- package/server/dals/protein-dal.js +106 -0

- package/test/additional-sheet-parser-tests.js +1 -1

- package/test/export-tests.js +136 -9

- package/test/import-sif-tests.js +67 -13

- package/test/test.js +1 -1

- package/test-files/additional-sheet-test-files/optimization-parameters-default.xlsx +0 -0

- package/test-files/demo-files/18_proteins_81_edges_PPI.xlsx +0 -0

- package/test-files/expression-data-test-sheets/expression_sheet_missing_data_ok_export_exact.xlsx +0 -0

- package/web-client/config/config.js +4 -4

- package/web-client/public/js/api/grnsight-api.js +18 -3

- package/web-client/public/js/constants.js +27 -12

- package/web-client/public/js/generateNetwork.js +170 -72

- package/web-client/public/js/graph.js +424 -161

- package/web-client/public/js/grnsight.js +25 -4

- package/web-client/public/js/grnstate.js +4 -1

- package/web-client/public/js/iframe-coordination.js +3 -3

- package/web-client/public/js/setup-handlers.js +76 -61

- package/web-client/public/js/setup-load-and-import-handlers.js +32 -7

- package/web-client/public/js/update-app.js +119 -28

- package/web-client/public/js/upload.js +142 -85

- package/web-client/public/js/warnings.js +25 -0

- package/web-client/public/lib/bootstrap.file-input/bootstrap.file-input.js +0 -1

- package/web-client/public/stylesheets/grnsight.styl +40 -16

- package/web-client/views/components/demo.pug +7 -5

- package/web-client/views/upload.pug +64 -50

- package/database/network-database/scripts/filter_genes.py +0 -76

- package/database/network-database/scripts/loader.py +0 -79

- package/database/network-database/scripts/loader_updates.py +0 -99

package/.eslintrc.yml

CHANGED

|

@@ -4,9 +4,9 @@ env:

|

|

|

4

4

|

jquery: true

|

|

5

5

|

mocha: true

|

|

6

6

|

es6: true

|

|

7

|

-

extends:

|

|

7

|

+

extends: "eslint:recommended"

|

|

8

8

|

parserOptions:

|

|

9

|

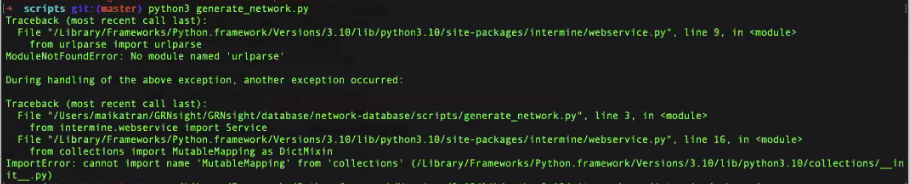

-

ecmaVersion:

|

|

9

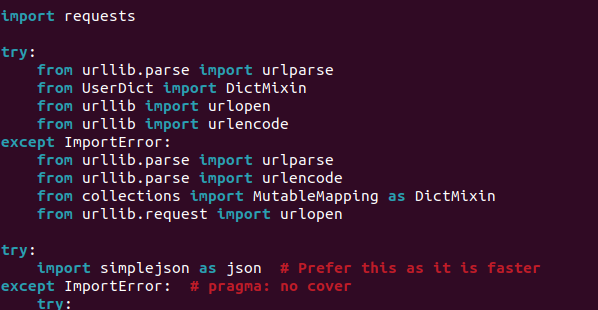

|

+

ecmaVersion: 8

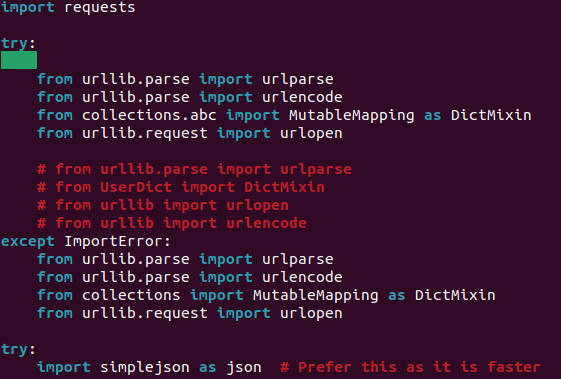

|

|

10

10

|

sourceType: module

|

|

11

11

|

ecmaFeatures:

|

|

12

12

|

jsx: true

|

|

@@ -45,7 +45,7 @@ rules:

|

|

|

45

45

|

brace-style:

|

|

46

46

|

- error

|

|

47

47

|

- 1tbs

|

|

48

|

-

- allowSingleLine: true

|

|

48

|

+

- allowSingleLine: true

|

|

49

49

|

comma-spacing:

|

|

50

50

|

- error

|

|

51

51

|

max-len:

|

|

@@ -63,6 +63,6 @@ rules:

|

|

|

63

63

|

- error

|

|

64

64

|

space-before-function-paren:

|

|

65

65

|

- error

|

|

66

|

-

- anonymous:

|

|

66

|

+

- anonymous: "always"

|

|

67

67

|

no-trailing-spaces:

|

|

68

68

|

- error

|

|

@@ -0,0 +1,35 @@

|

|

|

1

|

+

# This workflow will do a clean installation of node dependencies, cache/restore them, build the source code and run tests across different versions of node

|

|

2

|

+

# For more information see: https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-nodejs

|

|

3

|

+

|

|

4

|

+

name: Node.js CI

|

|

5

|

+

|

|

6

|

+

on: [push]

|

|

7

|

+

|

|

8

|

+

jobs:

|

|

9

|

+

build:

|

|

10

|

+

|

|

11

|

+

runs-on: ubuntu-latest

|

|

12

|

+

|

|

13

|

+

strategy:

|

|

14

|

+

matrix:

|

|

15

|

+

node-version: [18.x, 20.x, 22.x]

|

|

16

|

+

|

|

17

|

+

steps:

|

|

18

|

+

- uses: actions/checkout@v4

|

|

19

|

+

# install system dependencies needed by the 'canvas' package

|

|

20

|

+

- name: Install dependencies for canvas

|

|

21

|

+

run: |

|

|

22

|

+

sudo apt-get update

|

|

23

|

+

sudo apt-get install -y libcairo2-dev libpango1.0-dev libjpeg62 libgif-dev librsvg2-dev

|

|

24

|

+

- name: Use Node.js ${{ matrix.node-version }}

|

|

25

|

+

uses: actions/setup-node@v4

|

|

26

|

+

with:

|

|

27

|

+

node-version: ${{ matrix.node-version }}

|

|

28

|

+

cache: 'npm'

|

|

29

|

+

|

|

30

|

+

- run: npm ci

|

|

31

|

+

- run: npm run lint

|

|

32

|

+

- run: npm run build --if-present

|

|

33

|

+

- run: npm test

|

|

34

|

+

|

|

35

|

+

|

package/README.md

CHANGED

|

@@ -1,7 +1,7 @@

|

|

|

1

1

|

GRNsight

|

|

2

2

|

========

|

|

3

3

|

[](https://zenodo.org/badge/latestdoi/16195791)

|

|

4

|

-

[](https://github.com/dondi/GRNsight/actions/workflows/node.js.yml)

|

|

5

5

|

[](https://coveralls.io/github/dondi/GRNsight?branch=master)

|

|

6

6

|

|

|

7

7

|

http://dondi.github.io/GRNsight/

|

package/database/README.md

CHANGED

|

@@ -1,111 +1,232 @@

|

|

|

1

1

|

# GRNsight Database

|

|

2

|

+

|

|

2

3

|

Here are the files pertaining to both the network and expression databases. Look within the README.md files of both folders for information pertinent to the schema that you intend to be using.

|

|

4

|

+

|

|

3

5

|

## Setting up a local postgres GRNsight Database

|

|

6

|

+

|

|

4

7

|

1. Installing PostgreSQL on your computer

|

|

5

|

-

|

|

6

|

-

|

|

7

|

-

|

|

8

|

-

|

|

9

|

-

|

|

10

|

-

|

|

11

|

-

|

|

12

|

-

|

|

13

|

-

|

|

14

|

-

|

|

15

|

-

|

|

16

|

-

|

|

17

|

-

|

|

18

|

-

|

|

19

|

-

|

|

8

|

+

|

|

9

|

+

- MacOS and Windows can follow these instructions on how to install postgreSQL.

|

|

10

|

+

|

|

11

|

+

- Install the software at this [link](https://www.postgresql.org/download/)

|

|

12

|

+

- > MacOS users: It is recommended to install with homebrew rather than the interactive installation in order to correctly view the `initdb --locale=C -E UTF-8 location-of-cluster` message in the documentation.

|

|

13

|

+

- > Windows users: when prompted for a password at the end of the installation process, save this password. It is the password for the postgres user

|

|

14

|

+

- Initialize the database

|

|

15

|

+

- If your terminal emits a message that looks like `initdb --locale=C -E UTF-8 location-of-cluster` from Step 1B, then your installer has initialized a database for you.

|

|

16

|

+

- Open the terminal and type the command `initdb --locale=C -E UTF-8 location-of-cluster`

|

|

17

|

+

- "Cluster" is the PostgreSQL term for the file structure of a PostgreSQL database instance

|

|

18

|

+

- You will have to modify location-of-cluster to the folder name you want to store the database (you don't need to create a folder, the command will create the folder for you, just create the name)

|

|

19

|

+

- Start and stop the server

|

|

20

|

+

- Additionally, your installer may start the server for you upon installation (You can save this command for further reuse).

|

|

21

|

+

- To start the server yourself run `pg_ctl start -D location-of-cluster` (You can save this command for further reuse).

|

|

22

|

+

- To stop the server run `pg_ctl stop -D location-of-cluster`.

|

|

23

|

+

- After installing with homebrew on MacOS, you may receive an error saying the server cannot be started when you try to start it, while attempting to stop the server makes the terminal state that there is no server running. In this case, you have to directly kill the port that the server is running on.

|

|

24

|

+

- To double check that this is the issue, you can open the Activity Monitor app on your computer and search for the `postgres` activity. If there is one, that means the server is running, and we have to terminate the port that the server is running on.

|

|

25

|

+

- First, we have to check what port the server is running on. Navigate to your homebrew installation, which is the same `location-of-cluster` from when the database was initialized and open that location in VSCode.

|

|

26

|

+

- Search for `port =` in the file `postgresql.conf`. By default, the port should be port 5432, but keep note of this port in case it is different.

|

|

27

|

+

- Refer to this Stack Overflow documentation on how to kill a server:

|

|

28

|

+

- https://stackoverflow.com/questions/4075287/node-express-eaddrinuse-address-already-in-use-kill-server

|

|

29

|

+

- If that doesn't work, then refer to the different methods on this link from Stack Overflow:

|

|

30

|

+

- https://stackoverflow.com/questions/42416527/postgres-app-port-in-use

|

|

31

|

+

|

|

32

|

+

- Linux users

|

|

33

|

+

|

|

34

|

+

- The MacOS and Windows instructions will _probably_ not work for you. You can try at your own risk to check.

|

|

35

|

+

- Linux users can try these [instructions](https://www.geeksforgeeks.org/install-postgresql-on-linux/) and that should work for you (...maybe...). If it doesn't, try googling instructions for your specific operating system. Sorry!

|

|

36

|

+

|

|

20

37

|

2. Loading data to your database

|

|

21

|

-

|

|

22

|

-

|

|

23

|

-

|

|

24

|

-

|

|

25

|

-

|

|

26

|

-

|

|

27

|

-

|

|

28

|

-

|

|

29

|

-

|

|

30

|

-

|

|

31

|

-

|

|

32

|

-

|

|

33

|

-

|

|

34

|

-

|

|

35

|

-

|

|

36

|

-

|

|

37

|

-

|

|

38

|

-

|

|

39

|

-

|

|

40

|

-

|

|

41

|

-

|

|

42

|

-

|

|

43

|

-

|

|

44

|

-

|

|

45

|

-

|

|

46

|

-

|

|

47

|

-

|

|

48

|

-

|

|

49

|

-

|

|

50

|

-

|

|

51

|

-

|

|

52

|

-

|

|

53

|

-

|

|

54

|

-

|

|

55

|

-

|

|

56

|

-

|

|

57

|

-

|

|

58

|

-

|

|

59

|

-

|

|

60

|

-

|

|

61

|

-

|

|

62

|

-

|

|

63

|

-

|

|

64

|

-

|

|

65

|

-

|

|

66

|

-

|

|

67

|

-

|

|

68

|

-

|

|

69

|

-

|

|

70

|

-

|

|

71

|

-

|

|

72

|

-

|

|

73

|

-

|

|

74

|

-

|

|

75

|

-

|

|

76

|

-

|

|

77

|

-

|

|

78

|

-

|

|

79

|

-

|

|

80

|

-

|

|

81

|

-

|

|

82

|

-

|

|

83

|

-

|

|

84

|

-

|

|

85

|

-

|

|

86

|

-

|

|

87

|

-

|

|

88

|

-

|

|

89

|

-

|

|

90

|

-

|

|

38

|

+

|

|

39

|

+

1. Adding the Schemas to your database.

|

|

40

|

+

|

|

41

|

+

1. Go into your database using the following command:

|

|

42

|

+

|

|

43

|

+

```

|

|

44

|

+

psql postgresql://localhost/postgres

|

|

45

|

+

```

|

|

46

|

+

|

|

47

|

+

> For Windows users use this command:

|

|

48

|

+

|

|

49

|

+

```

|

|

50

|

+

psql -U postgres postgresql://localhost/postgres

|

|

51

|

+

```

|

|

52

|

+

|

|

53

|

+

When prompted for the password, use the password you specified earlier during the installation process. For all future commands requiring you to access postgres, you will need to add `-U postgres `

|

|

54

|

+

|

|

55

|

+

From there, create the schemas using the following commands:

|

|

56

|

+

|

|

57

|

+

```

|

|

58

|

+

CREATE SCHEMA gene_regulatory_network;

|

|

59

|

+

```

|

|

60

|

+

|

|

61

|

+

```

|

|

62

|

+

CREATE SCHEMA gene_expression;

|

|

63

|

+

```

|

|

64

|

+

|

|

65

|

+

```

|

|

66

|

+

CREATE SCHEMA protein_protein_interactions;

|

|

67

|

+

```

|

|

68

|

+

|

|

69

|

+

Once they are created you can exit your database using the command `\q`.

|

|

70

|

+

|

|

71

|

+

2. Once your schemas are created, you can add the table specifications using the following commands:

|

|

72

|

+

|

|

73

|

+

```

|

|

74

|

+

psql -f <path to GRNsight/database/network-database>/schema.sql postgresql://localhost/postgres

|

|

75

|

+

```

|

|

76

|

+

|

|

77

|

+

```

|

|

78

|

+

psql -f <path to GRNsight/database/expression-database>/schema.sql postgresql://localhost/postgres

|

|

79

|

+

```

|

|

80

|

+

|

|

81

|

+

```

|

|

82

|

+

psql -f <path to GRNsight/database/protein-protein-database>/schema.sql postgresql://localhost/postgres

|

|

83

|

+

```

|

|

84

|

+

|

|

85

|

+

Your database is now ready to accept expression and network data!

|

|

86

|

+

|

|

87

|

+

3. However, before you load the data, follow the steps of grnsettings-database README.md. Instructions are [located here!](https://github.com/dondi/GRNsight/tree/master/database/grnsettings-database)

|

|

88

|

+

|

|

89

|

+

2. Loading the GRNsight Network Data to your local database

|

|

90

|

+

|

|

91

|

+

1. Getting Data for Network

|

|

92

|

+

|

|

93

|

+

GRNsight generates Network Data from SGD through YeastMine. In order to run the script that generates these Network files, you must pip3 install the dependencies used. If you get an error saying that a module doesn't exist, just run `pip3 install <Module Name>` and it should fix the error. If the error persists and is found in a specific file on your machine, you might have to manually go into that file and alter the naming conventions of the dependencies that are used. _Note: So far this issue has only occurred on Ubuntu 22.04.1 and certain MacOS versions, so you might be lucky and not have to do it!_

|

|

94

|

+

|

|

95

|

+

```

|

|

96

|

+

pip3 install pandas requests intermine tzlocal

|

|

97

|

+

```

|

|

98

|

+

|

|

99

|

+

Once the dependencies have been installed, you can run

|

|

100

|

+

|

|

101

|

+

```

|

|

102

|

+

cd <path to GRNsight/database/network-database/scripts>

|

|

103

|

+

python3 generate_network.py

|

|

104

|

+

```

|

|

105

|

+

|

|

106

|

+

> Windows users should use `py` instead of `python3`.

|

|

107

|

+

|

|

108

|

+

This will take a while to get all of the network data and generate all of the files. This will create a folder full of the processed files in `database/network-database/script-results`.

|

|

109

|

+

|

|

110

|

+

**Note:** If you get the following error:

|

|

111

|

+

ImportError: urllib3 v2.0 only supports OpenSSL 1.1.1+, currently the 'ssl' module is compiled with 'OpenSSL 1.1.0h 27 Mar 2018'. See: Drop support for OpenSSL<1.1.1 urllib3/urllib3#2168

|

|

112

|

+

Run `pip install urllib3==1.26.6`

|

|

113

|

+

|

|

114

|

+

**Note:** If you get an error similar to the following image where it references the in then you are one of the unlucky few who has to edit the intermine.py file directly.

|

|

115

|

+

|

|

116

|

+

|

|

117

|

+

|

|

118

|

+

- Navigate to the referenced file ( \<path specific to your machine>/intermine/webservice.py )

|

|

119

|

+

- The try-catch block should look like this:

|

|

120

|

+

|

|

121

|

+

-

|

|

122

|

+

- Change it to the following, rerun the `generate_network.py` command and it should work! If it doesn't you may need to troubleshoot a bit further (´◕ ᵔ ◕`✿)_ᶜʳᶦᵉˢ_.

|

|

123

|

+

|

|

124

|

+

-

|

|

125

|

+

|

|

126

|

+

2. Getting Data for Expression

|

|

127

|

+

|

|

128

|

+

1. Create a directory (aka folder) in the database/expression-database folder called `source-files`.

|

|

129

|

+

|

|

91

130

|

```

|

|

92

131

|

mkdir <path to GRNsight/database/expression-database>/source-files

|

|

93

132

|

```

|

|

94

|

-

|

|

95

|

-

|

|

96

|

-

|

|

97

|

-

|

|

133

|

+

|

|

134

|

+

2. Download the _"Expression 2020"_ folder from Box located in `GRNsight > GRNsight Expression > Expression 2020` to your newly created `source-files` folder. Your path should look like this: GRNsight > database > expression-database > source-files > Expression 2020 > [the actual csv and xlsx files are here!]

|

|

135

|

+

3. Run the pre-processing script on the data. This will create a folder full of the processed files in `database/expression-database/script-results`.

|

|

136

|

+

|

|

98

137

|

```

|

|

99

138

|

cd <path to GRNsight/database/expression-database/scripts>

|

|

100

139

|

python3 preprocessing.py

|

|

101

140

|

```

|

|

102

|

-

|

|

103

|

-

|

|

104

|

-

|

|

141

|

+

|

|

142

|

+

**Note:** If you receive a UnicodeEncodeError add `-X utf8` to the beginning of the command

|

|

143

|

+

|

|

144

|

+

3. Getting Data for Protein-Protein Interactions

|

|

145

|

+

|

|

146

|

+

1. GRNsight generates Protein-Protein Interactions from SGD through YeastMine. In order to run the script that generates these Network files, you must pip3 install the dependencies used. These are the same dependencies used when creating the Network Database, so if you have completed step 2.2.1, then you should be fine. Once the dependencies have been installed, you can run

|

|

147

|

+

|

|

105

148

|

```

|

|

106

|

-

cd <path to GRNsight/database/

|

|

107

|

-

python3

|

|

149

|

+

cd <path to GRNsight/database/protein-protein-database/scripts>

|

|

150

|

+

python3 generate_protein_network.py

|

|

108

151

|

```

|

|

109

|

-

|

|

110

|

-

This

|

|

111

|

-

|

|

152

|

+

|

|

153

|

+

This will take a while {almost 2 hours (´◕ ᵔ ◕\`✿)_ᶜʳᶦᵉˢ_} to get all of the network data and generate all of the files. This will create a folder full of the processed files in `database/protein-protein-database/script-results`.

|

|

154

|

+

|

|

155

|

+

2. Once you have finished generating the loader files, you need to remove duplicate entries from the physical interactions file. The bash script (`remove_duplicates.sh`) does this for you. The resultant file (`no_dupe.csv`) will be generated in the script-results directory, in the sub-directory processed-loader-files. If your machine doesn't support bash shell scripts, then you have to make a new script that removes duplicate lines from a file and writes the results to a file. Sorry!

|

|

156

|

+

|

|

157

|

+

Run the following:

|

|

158

|

+

|

|

159

|

+

```

|

|

160

|

+

chmod u+x remove_duplicates.sh

|

|

161

|

+

|

|

162

|

+

./remove_duplicates.sh

|

|

163

|

+

```
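For machines without a bash shell, the duplicate-removal step described above could be sketched in Python. This is a minimal sketch and not the project's script: the function name `remove_duplicate_lines` and the file paths you pass to it are hypothetical, and it assumes that exact-duplicate lines are what needs removing, keeping the first occurrence of each line in its original order (the shell script may order its output differently).

```python
from pathlib import Path


def remove_duplicate_lines(source: Path, destination: Path) -> int:
    """Copy source to destination, keeping only the first copy of each line.

    Returns the number of duplicate lines that were dropped.
    """
    seen = set()
    dropped = 0
    with source.open("r", encoding="utf-8") as src, \
            destination.open("w", encoding="utf-8") as dst:
        for line in src:
            # Compare without the trailing newline so a final line that
            # lacks one still matches earlier copies.
            key = line.rstrip("\n")
            if key in seen:
                dropped += 1
                continue
            seen.add(key)
            dst.write(key + "\n")
    return dropped
```

Point `source` at the generated physical interactions file and `destination` at the de-duplicated file name that the loader expects on your setup.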

|

|

164

|

+

|

|

165

|

+

3. Loading all processed files into your local database

|

|

166

|

+

|

|

167

|

+

Go to the `database` folder and run `loader.py`. The script collects the union of genes from the expression, network, and protein-protein interactions data. After that, it loads all of the data from the files generated in the "Getting Data" steps above into the database.

|

|

168

|

+

|

|

169

|

+

```

|

|

170

|

+

cd <path to GRNsight/database>

|

|

171

|

+

```

|

|

172

|

+

|

|

173

|

+

To load to local database

|

|

174

|

+

|

|

175

|

+

```

|

|

176

|

+

python3 loader.py | psql postgresql://localhost/postgres

|

|

177

|

+

```

|

|

178

|

+

|

|

179

|

+

To load to production database

|

|

180

|

+

|

|

181

|

+

```

|

|

182

|

+

python3 loader.py | psql <path to database>

|

|

183

|

+

```

|

|

184

|

+

|

|

185

|

+

This should output a bunch of COPY print statements to your terminal. Once complete your database is now loaded with the expression, network, and protein-protein interactions data.

|

|

186

|

+

|

|

187

|

+

## Instructions for Updating Database

|

|

188

|

+

|

|

189

|

+

1. Getting new data

|

|

190

|

+

|

|

191

|

+

1. Generate a new network from Yeastmine using the script `generate_network.py` inside `network-database` folder.

|

|

192

|

+

|

|

193

|

+

```

|

|

194

|

+

cd <path to GRNsight/database/network-database/scripts>

|

|

195

|

+

python3 generate_network.py

|

|

196

|

+

```

|

|

197

|

+

|

|

198

|

+

2. Generate new Protein-Protein Interactions data from SGD using Yeastmine

|

|

199

|

+

```

|

|

200

|

+

cd <path to GRNsight/database/protein-protein-database/scripts>

|

|

201

|

+

python3 generate_protein_network.py

|

|

202

|

+

```

|

|

203

|

+

|

|

204

|

+

2. Filter the missing and updated genes in both Network and Protein-Protein Interactions, as well as the missing and updated proteins in Protein-Protein Interactions. All of this is done by `filter_update.py`. The script accesses the database to get all of the genes stored within. From there it generates a csv file of all genes that are missing from your database, and of all genes whose display name (standard-like name) has been updated. After running this script, you will see `missing-genes.csv` and `update-genes.csv` in `processed-loader-files` for both `network-database` and `protein-protein-database`, along with `missing-protein.csv` and `update-protein.csv`.

|

|

205

|

+

|

|

206

|

+

```

|

|

207

|

+

cd <path to GRNsight/database/>

|

|

208

|

+

DB_URL="postgresql://[<db_user>:<password>]@<address to database>/<database name>" python3 filter_update.py

|

|

209

|

+

```

|

|

210

|

+

|

|

211

|

+

Ex:

|

|

212

|

+

|

|

213

|

+

```

|

|

214

|

+

DB_URL="postgresql://postgres@localhost/postgres" python3 filter_update.py

|

|

215

|

+

```
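The `DB_URL` value follows the usual PostgreSQL connection-URL layout shown in the template above. As a rough sketch of how its parts map onto user, host, and database name, the standard-library URL parser can split it. `describe_db_url` is a hypothetical helper and not part of `filter_update.py`, which hands the URL to SQLAlchemy as-is.

```python
from urllib.parse import urlsplit


def describe_db_url(db_url: str) -> dict:
    """Split a postgresql:// URL into the parts named in the template."""
    parts = urlsplit(db_url)
    if parts.scheme != "postgresql":
        raise ValueError(f"expected a postgresql:// URL, got {parts.scheme!r}")
    return {
        "user": parts.username,      # None when no user is given
        "password": parts.password,  # None when no password is given
        "host": parts.hostname,
        "port": parts.port,          # None means the client default applies
        "database": parts.path.lstrip("/"),
    }
```

A check like this can catch a mistyped URL before the script tries to connect.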

|

|

216

|

+

|

|

217

|

+

3. Loading all the updates from Network or Protein-Protein Interactions to database.

|

|

218

|

+

|

|

219

|

+

In the command below, the --network option specifies the network source, which can be either GRN or PPI. Ensure you select the correct network type.

|

|

220

|

+

To load to local database

|

|

221

|

+

|

|

222

|

+

```

|

|

223

|

+

python3 loader_update.py --network=[GRN|PPI] | psql postgresql://localhost/postgres

|

|

224

|

+

```

|

|

225

|

+

|

|

226

|

+

To load to production database

|

|

227

|

+

|

|

228

|

+

```

|

|

229

|

+

python3 loader_update.py --network=[GRN|PPI]| psql <path to database>

|

|

230

|

+

```
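The `--network=[GRN|PPI]` option accepts exactly one of two values. The source of `loader_update.py` is not shown here, so the following is only a hypothetical sketch of how such an option can be validated with `argparse`; the function name `parse_network_source` is an assumption, and the real script may parse its arguments differently.

```python
import argparse


def parse_network_source(argv):
    """Parse --network and reject anything other than GRN or PPI."""
    parser = argparse.ArgumentParser(description="Hypothetical update-loader CLI")
    parser.add_argument(
        "--network",
        choices=["GRN", "PPI"],  # the two sources named in the README
        required=True,
        help="network source to load updates for",
    )
    return parser.parse_args(argv).network
```

With `choices`, an unsupported value makes `argparse` print a usage error and exit instead of silently loading the wrong network type.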

|

|

231

|

+

|

|

232

|

+

Continue setting up in the [Initial Setup Wiki page](https://github.com/dondi/GRNsight/wiki/Initial-Setup)

|

|

@@ -0,0 +1,42 @@

|

|

|

1

|

+

class Constants:

|

|

2

|

+

GRN_FOLDER_PATH = 'network-database'

|

|

3

|

+

PPI_FOLDER_PATH = 'protein-protein-database'

|

|

4

|

+

EXPRESSION_FOLDER_PATH = 'expression-database'

|

|

5

|

+

UNION_GENE_FOLDER_PATH = 'union-gene-data/'

|

|

6

|

+

|

|

7

|

+

# Gene data source file path

|

|

8

|

+

GRN_GENE_SOURCE = GRN_FOLDER_PATH + "/script-results/processed-loader-files/gene.csv"

|

|

9

|

+

PPI_GENE_SOURCE = PPI_FOLDER_PATH + "/script-results/processed-loader-files/gene.csv"

|

|

10

|

+

EXPRESSION_GENE_SOURCE = EXPRESSION_FOLDER_PATH + "/script-results/processed-expression/genes.csv"

|

|

11

|

+

|

|

12

|

+

# Union gene data

|

|

13

|

+

GENE_DATA_DIRECTORY = UNION_GENE_FOLDER_PATH + 'union_genes.csv'

|

|

14

|

+

MISSING_GENE_UNION_DIRECTORY = UNION_GENE_FOLDER_PATH + 'union-missing-genes.csv'

|

|

15

|

+

UPDATE_GENE_UNION_DIRECTORY = UNION_GENE_FOLDER_PATH + 'union-update-genes.csv'

|

|

16

|

+

|

|

17

|

+

# Constants name: NETWORK_<table_name>_DATA_DIRECTORY

|

|

18

|

+

GRN_DATABASE_NAMESPACE = 'gene_regulatory_network'

|

|

19

|

+

GRN_SOURCE_TABLE_DATA_DIRECTORY = GRN_FOLDER_PATH + '/script-results/processed-loader-files/source.csv'

|

|

20

|

+

GRN_NETWORK_TABLE_DATA_DIRECTORY = GRN_FOLDER_PATH + '/script-results/processed-loader-files/network.csv'

|

|

21

|

+

|

|

22

|

+

# Protein-protein-interactions

|

|

23

|

+

PPI_DATABASE_NAMESPACE = 'protein_protein_interactions'

|

|

24

|

+

PPI_SOURCE_TABLE_DATA_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/source.csv'

|

|

25

|

+

PPI_NETWORK_TABLE_DATA_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/physical_interaction_no_dupe.csv'

|

|

26

|

+

PPI_PROTEIN_TABLE_DATA_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/protein.csv'

|

|

27

|

+

|

|

28

|

+

# Expression data

|

|

29

|

+

EXPRESISON_DATABASE_NAMESPACE = 'gene_expression'

|

|

30

|

+

EXPRESSION_REFS_TABLE_DATA_DIRECTORY = EXPRESSION_FOLDER_PATH + '/script-results/processed-expression/refs.csv'

|

|

31

|

+

EXPRESSION_METADATA_TABLE_DATA_DIRECTORY = EXPRESSION_FOLDER_PATH + '/script-results/processed-expression/expression-metadata.csv'

|

|

32

|

+

EXPRESSION_EXPRESSION_TABLE_DATA_DIRECTORY = EXPRESSION_FOLDER_PATH + '/script-results/processed-expression/expression-data.csv'

|

|

33

|

+

EXPRESSION_PRODUCTION_RATE_TABLE_DATA_DIRECTORY = EXPRESSION_FOLDER_PATH + '/script-results/processed-expression/production-rates.csv'

|

|

34

|

+

EXPRESSION_DEGRADATION_RATE_TABLE_DATA_DIRECTORY = EXPRESSION_FOLDER_PATH + '/script-results/processed-expression/degradation-rates.csv'

|

|

35

|

+

|

|

36

|

+

# Paths for update files

|

|

37

|

+

PPI_MISSING_GENE_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/missing-genes.csv'

|

|

38

|

+

PPI_UPDATE_GENE_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/update-genes.csv'

|

|

39

|

+

PPI_MISSING_PROTEIN_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/missing-proteins.csv'

|

|

40

|

+

PPI_UPDATE_PROTEIN_DIRECTORY = PPI_FOLDER_PATH + '/script-results/processed-loader-files/update-proteins.csv'

|

|

41

|

+

GRN_MISSING_GENE_DIRECTORY = GRN_FOLDER_PATH + '/script-results/processed-loader-files/missing-genes.csv'

|

|

42

|

+

GRN_UPDATE_GENE_DIRECTORY = GRN_FOLDER_PATH + '/script-results/processed-loader-files/update-genes.csv'

|

|

@@ -0,0 +1,168 @@

|

|

|

1

|

+

import os

|

|

2

|

+

import csv

|

|

3

|

+

from sqlalchemy import create_engine

|

|

4

|

+

from sqlalchemy import text

|

|

5

|

+

from constants import Constants

|

|

6

|

+

from utils import Utils

|

|

7

|

+

|

|

8

|

+

PROTEIN_GENE_HEADER = f'Gene ID\tDisplay Gene ID\tSpecies\tTaxon ID'

|

|

9

|

+

GRN_GENE_HEADER = f'Gene ID\tDisplay Gene ID\tSpecies\tTaxon ID\tRegulator'

|

|

10

|

+

|

|

11

|

+

def _get_all_data_from_database_table(database_namespace, table_name):

|

|

12

|

+

db = create_engine(os.environ['DB_URL'])

|

|

13

|

+

with db.connect() as connection:

|

|

14

|

+

result_set = connection.execute(text(f"SELECT * FROM {database_namespace}.{table_name}"))

|

|

15

|

+

return result_set.fetchall()
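The query above interpolates the schema and table names into the SQL text, which is fine while every caller passes fixed constants. If those names could ever come from outside the script, one option is to check them against an allow-list first, since identifiers cannot be bound as query parameters. This is a sketch of that idea and not code from the package; `ALLOWED_TABLES` and `build_select_all` are hypothetical names, and the allow-list contains only the tables this file is seen to read.

```python
# Hypothetical allow-list: schema name -> tables that may be read from it.
ALLOWED_TABLES = {
    "gene_regulatory_network": {"gene"},
    "protein_protein_interactions": {"gene", "protein"},
}


def build_select_all(namespace: str, table: str) -> str:
    """Return a SELECT * statement only for known schema/table pairs."""
    if table not in ALLOWED_TABLES.get(namespace, set()):
        raise ValueError(f"unexpected table {namespace}.{table}")
    return f"SELECT * FROM {namespace}.{table}"
```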

|

|

16

|

+

|

|

17

|

+

def _get_all_db_genes(database_namespace):

|

|

18

|

+

gene_records = _get_all_data_from_database_table(database_namespace, "gene")

|

|

19

|

+

genes = {}

|

|

20

|

+

for gene in gene_records:

|

|

21

|

+

key = (gene[0], gene[3])

|

|

22

|

+

if len(gene) > 4:

|

|

23

|

+

value = (gene[1], gene[2], gene[4])

|

|

24

|

+

else:

|

|

25

|

+

value = (gene[1], gene[2])

|

|

26

|

+

genes[key] = value

|

|

27

|

+

return genes

|

|

28

|

+

|

|

29

|

+

def _get_all_db_grn_genes():

|

|

30

|

+

return _get_all_db_genes(Constants.GRN_DATABASE_NAMESPACE)

|

|

31

|

+

|

|

32

|

+

def _get_all_db_ppi_genes():

|

|

33

|

+

return _get_all_db_genes(Constants.PPI_DATABASE_NAMESPACE)

|

|

34

|

+

|

|

35

|

+

def _get_all_genes():

|

|

36

|

+

db_grn_genes = _get_all_db_grn_genes()

|

|

37

|

+

db_ppi_genes = _get_all_db_ppi_genes()

|

|

38

|

+

|

|

39

|

+

if not os.path.exists('union-gene-data'):

|

|

40

|

+

os.makedirs('union-gene-data')

|

|

41

|

+

Utils.create_union_file([Constants.PPI_GENE_SOURCE, Constants.GRN_GENE_SOURCE], Constants.GENE_DATA_DIRECTORY)

|

|

42

|

+

genes = db_grn_genes

|

|

43

|

+

|

|

44

|

+

for gene in db_ppi_genes:

|

|

45

|

+

if gene not in genes:

|

|

46

|

+

display_gene_id, species = db_ppi_genes[gene]

|

|

47

|

+

genes[gene] = [display_gene_id, species, False]

|

|

48

|

+

|

|

49

|

+

with open(Constants.GENE_DATA_DIRECTORY, 'r+', encoding="UTF-8") as f:

|

|

50

|

+

i = 0

|

|

51

|

+

reader = csv.reader(f)

|

|

52

|

+

for row in reader:

|

|

53

|

+

if i != 0:

|

|

54

|

+

row = row[0].split('\t')

|

|

55

|

+

gene_id = row[0]

|

|

56

|

+

display_gene_id = row[1]

|

|

57

|

+

species = row[2]

|

|

58

|

+

taxon_id = row[3]

|

|

59

|

+

regulator = row[4].capitalize()

|

|

60

|

+

key = (gene_id, taxon_id)

|

|

61

|

+

value = (display_gene_id, species, regulator)

|

|

62

|

+

if key not in genes:

|

|

63

|

+

genes[key] = value

|

|

64

|

+

elif genes[key][0] != display_gene_id:

|

|

65

|

+

if display_gene_id != "None":

|

|

66

|

+

genes[key] = value

|

|

67

|

+

i+=1

|

|

68

|

+

return genes

+
+
+def get_all_proteins():
+    protein_records = _get_all_data_from_database_table(Constants.PPI_DATABASE_NAMESPACE, "protein")
+    proteins = {}
+    for protein in protein_records:
+        key = (protein[0], protein[5])
+        value = (protein[1], protein[2], protein[3], protein[4])
+        proteins[key] = value
+    return proteins
+
+def processing_grn_gene_file():
+    return _processing_gene_file(_get_all_db_grn_genes(), is_protein=False)
+
+def processing_ppi_gene_file():
+    return _processing_gene_file(_get_all_db_ppi_genes())
+
+def _processing_gene_file(db_genes, is_protein=True):
+    print(f'Processing gene')
+    missing_genes = {}
+    genes_to_update = {}
+    all_genes = _get_all_genes()
+    for gene in all_genes:
+        display_gene_id, species, regulator = all_genes[gene]
+        values_for_ppi = (display_gene_id, species)
+        values_for_grn = (display_gene_id, species, regulator)
+        if gene not in db_genes:
+            if is_protein:
+                missing_genes[gene] = values_for_ppi
+            else:
+                missing_genes[gene] = values_for_grn
+        elif gene in db_genes and db_genes[gene][0] != display_gene_id:
+            if db_genes[gene][0] != "None":
+                if is_protein:
+                    genes_to_update[gene] = values_for_ppi
+                else:
+                    genes_to_update[gene] = values_for_grn
+    return missing_genes, genes_to_update
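Reviewer note: the function above compares source records against the records already in the database and sorts them into two buckets, "missing" and "to update". The sketch below isolates that comparison so it can be tried without a database. The name `classify_records` and the sample keys and values are hypothetical and are not part of the package.

```python
def classify_records(source, existing):
    """Split source records into (missing, to_update) relative to existing.

    Both arguments map (identifier, taxon_id) tuples to value tuples whose
    first element is the display identifier, as in the diff above.
    """
    missing = {}
    to_update = {}
    for key, value in source.items():
        display_id = value[0]
        if key not in existing:
            # Record is absent from the database: it needs an insert.
            missing[key] = value
        elif existing[key][0] != display_id and existing[key][0] != "None":
            # Display identifier differs and the stored one is not the
            # string "None": it needs an update.
            to_update[key] = value
    return missing, to_update


# Made-up sample data, for illustration only.
source = {
    ("GENE_A", "1"): ("DisplayA", "Example species", True),
    ("GENE_B", "1"): ("DisplayB2", "Example species", False),
    ("GENE_C", "1"): ("DisplayC", "Example species", False),
}
existing = {
    ("GENE_B", "1"): ("DisplayB", "Example species", False),
    ("GENE_C", "1"): ("DisplayC", "Example species", False),
}

missing, to_update = classify_records(source, existing)
```

With this sample, `GENE_A` lands in `missing`, `GENE_B` lands in `to_update`, and `GENE_C` is left alone because its display identifier already matches.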

+
+def processing_protein_file(file_path, db_proteins):
+    print(f'Processing file {file_path}')
+    ppi_missing_proteins = {}
+    ppi_proteins_to_update = {}
+    with open(file_path, 'r+', encoding="UTF-8") as f:
+        i = 0
+        reader = csv.reader(f)
+        for row in reader:
+            if i != 0:
+                row = row[0].split('\t')
+                standard_name = row[0]
+                gene_systematic_name = row[1]
+                length = float(row[2]) if row[2] != "None" else 0
+                molecular_weight = float(row[3]) if row[3] != "None" else 0
+                pi = float(row[4]) if row[4] != "None" else 0
+                taxon_id = row[5]
+                key = (standard_name, taxon_id)
+                value = (gene_systematic_name, length, molecular_weight, pi)
+                if key not in db_proteins:
+                    ppi_missing_proteins[key] = value
+                elif db_proteins[key] != value:
+                    ppi_proteins_to_update[key] = value
+            i+=1
+    return ppi_missing_proteins, ppi_proteins_to_update
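Reviewer note: the readers in this file call `csv.reader(f)` with its default comma delimiter and then split `row[0]` on tabs. That works as long as no field contains a comma. The sketch below shows one possible alternative, passing `delimiter="\t"` and skipping the header with `next()`. The helper name `read_tsv_rows` and the sample text are hypothetical, and this is not the package's implementation.

```python
import csv
import io

# Made-up sample: one header line and one record whose second field
# contains a comma.
sample = (
    "Standard Name\tGene Systematic Name\tLength\n"
    "P1\tprotein, putative\t10\n"
)


def read_tsv_rows(handle):
    """Return the data rows of a tab-separated file, skipping the header."""
    reader = csv.reader(handle, delimiter="\t")
    next(reader, None)  # discard the header row if there is one
    return list(reader)


rows = read_tsv_rows(io.StringIO(sample))

# For comparison, the comma-default approach sees only the text before the
# first comma in row[0], so splitting on tabs loses the rest of the record.
comma_rows = list(csv.reader(io.StringIO(sample)))
truncated = comma_rows[1][0].split("\t")
```

Here `rows` holds the full three-field record, while `truncated` stops at the comma inside the second field.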

+
+def create_grn_gene_file(file_path, data):
+    _create_gene_file(file_path, GRN_GENE_HEADER, data, is_protein=False)
+
+def create_ppi_gene_file(file_path, data):
+    _create_gene_file(file_path, PROTEIN_GENE_HEADER, data)
+
+def _create_gene_file(file_path, headers, data, is_protein=True):
+    print(f'Creating {file_path}\n')
+    gene_file = open(file_path, 'w')
+    gene_file.write(f'{headers}\n')
+    for gene in data:
+        if is_protein:
+            gene_file.write(f'{gene[0]}\t{data[gene][0]}\t{data[gene][1]}\t{gene[1]}\n')
+        else:
+            gene_file.write(f'{gene[0]}\t{data[gene][0]}\t{data[gene][1]}\t{gene[1]}\t{data[gene][2]}\n')
+    gene_file.close()
+
+def create_ppi_protein_file(file_path, data):
+    print(f'Creating {file_path}\n')
+    protein_file = open(file_path, 'w')
+    headers = f'Standard Name\tGene Systematic Name\tLength\tMolecular Weight\tPI\tTaxon ID'
+    protein_file.write(f'{headers}\n')
+    for protein in data:
+        protein_file.write(f'{protein[0]}\t{data[protein][0]}\t{data[protein][1]}\t{data[protein][2]}\t{data[protein][3]}\t{protein[1]}\n')
+    protein_file.close()
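Reviewer note: the writers above open the file, write rows with f-strings, and close the handle by hand, so the handle stays open if a write raises. The sketch below shows one possible variant that uses a `with` block and `csv.writer` with a tab delimiter. The function name `write_protein_tsv` and the sample record are hypothetical. Unlike the f-string version, `csv.writer` quotes fields that contain tabs or quote characters, so output can differ for such values.

```python
import csv
import os
import tempfile


def write_protein_tsv(file_path, data):
    """Write {(standard_name, taxon_id): (systematic_name, length, weight, pi)}
    as a tab-separated file with a header row."""
    headers = ["Standard Name", "Gene Systematic Name", "Length",
               "Molecular Weight", "PI", "Taxon ID"]
    # The with block closes the file even if a write fails part-way through.
    with open(file_path, "w", encoding="UTF-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        writer.writerow(headers)
        for (standard_name, taxon_id), values in data.items():
            systematic_name, length, weight, pi = values
            writer.writerow([standard_name, systematic_name,
                             length, weight, pi, taxon_id])


# Made-up record, written to a temporary directory for illustration.
sample = {("P1", "1"): ("G1", 10.0, 1.5, 7.0)}
with tempfile.TemporaryDirectory() as tmp_dir:
    out_path = os.path.join(tmp_dir, "proteins.tsv")
    write_protein_tsv(out_path, sample)
    with open(out_path, encoding="UTF-8") as f:
        written = f.read()
```

Reading the file back gives the header line followed by one tab-separated record, in the same column order the diff uses.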

+
+# Processing gene files
+ppi_missing_genes, ppi_genes_to_update = processing_ppi_gene_file()
+grn_missing_genes, grn_genes_to_update = processing_grn_gene_file()
+ppi_missing_proteins, ppi_proteins_to_update = processing_protein_file(Constants.PPI_PROTEIN_TABLE_DATA_DIRECTORY, get_all_proteins())
+create_grn_gene_file(Constants.GRN_MISSING_GENE_DIRECTORY, grn_missing_genes)
+create_grn_gene_file(Constants.GRN_UPDATE_GENE_DIRECTORY, grn_genes_to_update)
+create_ppi_gene_file(Constants.PPI_MISSING_GENE_DIRECTORY, ppi_missing_genes)
+create_ppi_gene_file(Constants.PPI_UPDATE_GENE_DIRECTORY, ppi_genes_to_update)
+create_ppi_protein_file(Constants.PPI_MISSING_PROTEIN_DIRECTORY, ppi_missing_proteins)
+create_ppi_protein_file(Constants.PPI_UPDATE_PROTEIN_DIRECTORY, ppi_proteins_to_update)

|