fsevents 1.0.10 → 1.0.11

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of fsevents might be problematic. Click here for more details.

- package/node_modules/ansi-styles/package.json +4 -21

- package/node_modules/aws4/node_modules/lru-cache/README.md +1 -1

- package/node_modules/aws4/node_modules/lru-cache/lib/lru-cache.js +1 -0

- package/node_modules/aws4/node_modules/lru-cache/node_modules/pseudomap/package.json +2 -1

- package/node_modules/aws4/node_modules/lru-cache/node_modules/yallist/package.json +2 -1

- package/node_modules/aws4/node_modules/lru-cache/package.json +20 -12

- package/node_modules/aws4/package.json +2 -1

- package/node_modules/bl/package.json +2 -1

- package/node_modules/chalk/package.json +22 -17

- package/node_modules/dashdash/node_modules/assert-plus/package.json +2 -1

- package/node_modules/dashdash/package.json +2 -1

- package/node_modules/escape-string-regexp/package.json +2 -1

- package/node_modules/form-data/package.json +2 -1

- package/node_modules/is-my-json-valid/package.json +2 -1

- package/node_modules/mime-db/package.json +2 -1

- package/node_modules/mime-types/package.json +2 -1

- package/node_modules/node-pre-gyp/CHANGELOG.md +6 -0

- package/node_modules/node-pre-gyp/lib/util/abi_crosswalk.json +8 -0

- package/node_modules/node-pre-gyp/lib/util/versioning.js +14 -2

- package/node_modules/node-pre-gyp/package.json +15 -15

- package/node_modules/oauth-sign/package.json +2 -1

- package/node_modules/once/package.json +1 -1

- package/node_modules/pinkie/package.json +2 -1

- package/node_modules/qs/package.json +2 -1

- package/node_modules/readable-stream/package.json +1 -1

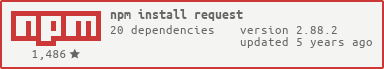

- package/node_modules/request/package.json +2 -1

- package/node_modules/rimraf/node_modules/glob/package.json +2 -1

- package/node_modules/rimraf/package.json +2 -1

- package/node_modules/semver/package.json +2 -1

- package/node_modules/sshpk/package.json +2 -1

- package/node_modules/strip-ansi/package.json +2 -1

- package/node_modules/tar-pack/package.json +1 -1

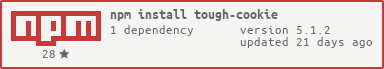

- package/node_modules/tough-cookie/package.json +2 -1

- package/node_modules/tweetnacl/README.md +12 -4

- package/node_modules/tweetnacl/nacl-fast.js +1 -1

- package/node_modules/tweetnacl/nacl-fast.min.js +2 -2

- package/node_modules/tweetnacl/package.json +12 -11

- package/node_modules/verror/package.json +3 -2

- package/package.json +2 -2

- package/node_modules/ansi-styles/license +0 -21

- package/node_modules/aws4/node_modules/lru-cache/.npmignore +0 -4

- package/node_modules/aws4/node_modules/lru-cache/.travis.yml +0 -7

- package/node_modules/aws4/node_modules/lru-cache/CONTRIBUTORS +0 -14

- package/node_modules/aws4/node_modules/lru-cache/LICENSE +0 -15

- package/node_modules/aws4/node_modules/lru-cache/benchmarks/insertion-time.js +0 -32

- package/node_modules/aws4/node_modules/lru-cache/test/basic.js +0 -520

- package/node_modules/aws4/node_modules/lru-cache/test/foreach.js +0 -134

- package/node_modules/aws4/node_modules/lru-cache/test/inspect.js +0 -54

- package/node_modules/aws4/node_modules/lru-cache/test/no-symbol.js +0 -3

- package/node_modules/aws4/node_modules/lru-cache/test/serialize.js +0 -227

|

@@ -1127,6 +1127,10 @@

|

|

|

1127

1127

|

"node_abi": 46,

|

|

1128

1128

|

"v8": "4.5"

|

|

1129

1129

|

},

|

|

1130

|

+

"4.4.1": {

|

|

1131

|

+

"node_abi": 46,

|

|

1132

|

+

"v8": "4.5"

|

|

1133

|

+

},

|

|

1130

1134

|

"5.0.0": {

|

|

1131

1135

|

"node_abi": 47,

|

|

1132

1136

|

"v8": "4.6"

|

|

@@ -1178,5 +1182,9 @@

|

|

|

1178

1182

|

"5.9.0": {

|

|

1179

1183

|

"node_abi": 47,

|

|

1180

1184

|

"v8": "4.6"

|

|

1185

|

+

},

|

|

1186

|

+

"5.9.1": {

|

|

1187

|

+

"node_abi": 47,

|

|

1188

|

+

"v8": "4.6"

|

|

1181

1189

|

}

|

|

1182

1190

|

}

|

|

@@ -25,7 +25,7 @@ function get_electron_abi(runtime, target_version) {

|

|

|

25

25

|

// erroneous CLI call

|

|

26

26

|

throw new Error("Empty target version is not supported if electron is the target.");

|

|

27

27

|

}

|

|

28

|

-

// Electron

|

|

28

|

+

// Electron guarantees that patch version update won't break native modules.

|

|

29

29

|

var sem_ver = semver.parse(target_version);

|

|

30

30

|

return runtime + '-v' + sem_ver.major + '.' + sem_ver.minor;

|

|

31

31

|

}

|

|

@@ -241,6 +241,18 @@ function drop_double_slashes(pathname) {

|

|

|

241

241

|

return pathname.replace(/\/\//g,'/');

|

|

242

242

|

}

|

|

243

243

|

|

|

244

|

+

function get_process_runtime(versions) {

|

|

245

|

+

var runtime = 'node';

|

|

246

|

+

if (versions['node-webkit']) {

|

|

247

|

+

runtime = 'node-webkit';

|

|

248

|

+

} else if (versions.electron) {

|

|

249

|

+

runtime = 'electron';

|

|

250

|

+

}

|

|

251

|

+

return runtime;

|

|

252

|

+

}

|

|

253

|

+

|

|

254

|

+

module.exports.get_process_runtime = get_process_runtime;

|

|

255

|

+

|

|

244

256

|

var default_package_name = '{module_name}-v{version}-{node_abi}-{platform}-{arch}.tar.gz';

|

|

245

257

|

var default_remote_path = '';

|

|

246

258

|

|

|

@@ -249,7 +261,7 @@ module.exports.evaluate = function(package_json,options) {

|

|

|

249

261

|

validate_config(package_json);

|

|

250

262

|

var v = package_json.version;

|

|

251

263

|

var module_version = semver.parse(v);

|

|

252

|

-

var runtime = options.runtime || (process.versions

|

|

264

|

+

var runtime = options.runtime || get_process_runtime(process.versions);

|

|

253

265

|

var opts = {

|

|

254

266

|

name: package_json.name,

|

|

255

267

|

configuration: Boolean(options.debug) ? 'Debug' : 'Release',

|

|

@@ -1,18 +1,18 @@

|

|

|

1

1

|

{

|

|

2

2

|

"_args": [

|

|

3

3

|

[

|

|

4

|

-

"node-pre-gyp

|

|

4

|

+

"node-pre-gyp@0.6.25",

|

|

5

5

|

"/Users/eshanker/Code/fsevents"

|

|

6

6

|

]

|

|

7

7

|

],

|

|

8

|

-

"_from": "node-pre-gyp

|

|

9

|

-

"_id": "node-pre-gyp@0.6.

|

|

8

|

+

"_from": "node-pre-gyp@0.6.25",

|

|

9

|

+

"_id": "node-pre-gyp@0.6.25",

|

|

10

10

|

"_inCache": true,

|

|

11

11

|

"_installable": true,

|

|

12

12

|

"_location": "/node-pre-gyp",

|

|

13

13

|

"_npmOperationalInternal": {

|

|

14

14

|

"host": "packages-12-west.internal.npmjs.com",

|

|

15

|

-

"tmp": "tmp/node-pre-gyp-0.6.

|

|

15

|

+

"tmp": "tmp/node-pre-gyp-0.6.25.tgz_1459446165913_0.5912895421497524"

|

|

16

16

|

},

|

|

17

17

|

"_npmUser": {

|

|

18

18

|

"email": "dane@mapbox.com",

|

|

@@ -22,19 +22,19 @@

|

|

|

22

22

|

"_phantomChildren": {},

|

|

23

23

|

"_requested": {

|

|

24

24

|

"name": "node-pre-gyp",

|

|

25

|

-

"raw": "node-pre-gyp

|

|

26

|

-

"rawSpec": "

|

|

25

|

+

"raw": "node-pre-gyp@0.6.25",

|

|

26

|

+

"rawSpec": "0.6.25",

|

|

27

27

|

"scope": null,

|

|

28

|

-

"spec": "

|

|

29

|

-

"type": "

|

|

28

|

+

"spec": "0.6.25",

|

|

29

|

+

"type": "version"

|

|

30

30

|

},

|

|

31

31

|

"_requiredBy": [

|

|

32

32

|

"/"

|

|

33

33

|

],

|

|

34

|

-

"_resolved": "https://registry.npmjs.org/node-pre-gyp/-/node-pre-gyp-0.6.

|

|

35

|

-

"_shasum": "

|

|

34

|

+

"_resolved": "https://registry.npmjs.org/node-pre-gyp/-/node-pre-gyp-0.6.25.tgz",

|

|

35

|

+

"_shasum": "2c6818775e6f1df5e353ba8024f1c0118726545b",

|

|

36

36

|

"_shrinkwrap": null,

|

|

37

|

-

"_spec": "node-pre-gyp

|

|

37

|

+

"_spec": "node-pre-gyp@0.6.25",

|

|

38

38

|

"_where": "/Users/eshanker/Code/fsevents",

|

|

39

39

|

"author": {

|

|

40

40

|

"email": "dane@mapbox.com",

|

|

@@ -77,10 +77,10 @@

|

|

|

77

77

|

},

|

|

78

78

|

"directories": {},

|

|

79

79

|

"dist": {

|

|

80

|

-

"shasum": "

|

|

81

|

-

"tarball": "https://registry.npmjs.org/node-pre-gyp/-/node-pre-gyp-0.6.

|

|

80

|

+

"shasum": "2c6818775e6f1df5e353ba8024f1c0118726545b",

|

|

81

|

+

"tarball": "https://registry.npmjs.org/node-pre-gyp/-/node-pre-gyp-0.6.25.tgz"

|

|

82

82

|

},

|

|

83

|

-

"gitHead": "

|

|

83

|

+

"gitHead": "92d8e8087b0834b9e6d43b7f7215bd8c42c713b0",

|

|

84

84

|

"homepage": "https://github.com/mapbox/node-pre-gyp",

|

|

85

85

|

"jshintConfig": {

|

|

86

86

|

"globalstrict": true,

|

|

@@ -135,5 +135,5 @@

|

|

|

135

135

|

"test": "jshint lib lib/util scripts bin/node-pre-gyp && mocha -R spec --timeout 500000",

|

|

136

136

|

"update-crosswalk": "node scripts/abi_crosswalk.js"

|

|

137

137

|

},

|

|

138

|

-

"version": "0.6.

|

|

138

|

+

"version": "0.6.25"

|

|

139

139

|

}

|

|

@@ -54,7 +54,8 @@

|

|

|

54

54

|

],

|

|

55

55

|

"name": "oauth-sign",

|

|

56

56

|

"optionalDependencies": {},

|

|

57

|

-

"readme": "

|

|

57

|

+

"readme": "oauth-sign\n==========\n\nOAuth 1 signing. Formerly a vendor lib in mikeal/request, now a standalone module. \n",

|

|

58

|

+

"readmeFilename": "README.md",

|

|

58

59

|

"repository": {

|

|

59

60

|

"url": "git+https://github.com/mikeal/oauth-sign.git"

|

|

60

61

|

},

|

|

@@ -61,7 +61,8 @@

|

|

|

61

61

|

],

|

|

62

62

|

"name": "pinkie",

|

|

63

63

|

"optionalDependencies": {},

|

|

64

|

-

"readme": "

|

|

64

|

+

"readme": "<h1 align=\"center\">\n\t<br>\n\t<img width=\"256\" src=\"media/logo.png\" alt=\"pinkie\">\n\t<br>\n\t<br>\n</h1>\n\n> Itty bitty little widdle twinkie pinkie [ES2015 Promise](https://people.mozilla.org/~jorendorff/es6-draft.html#sec-promise-objects) implementation\n\n[](https://travis-ci.org/floatdrop/pinkie) [](https://coveralls.io/github/floatdrop/pinkie?branch=master)\n\nThere are [tons of Promise implementations](https://github.com/promises-aplus/promises-spec/blob/master/implementations.md#standalone) out there, but all of them focus on browser compatibility and are often bloated with functionality.\n\nThis module is an exact Promise specification polyfill (like [native-promise-only](https://github.com/getify/native-promise-only)), but in Node.js land (it should be browserify-able though).\n\n\n## Install\n\n```\n$ npm install --save pinkie\n```\n\n\n## Usage\n\n```js\nvar fs = require('fs');\nvar Promise = require('pinkie');\n\nnew Promise(function (resolve, reject) {\n\tfs.readFile('foo.json', 'utf8', function (err, data) {\n\t\tif (err) {\n\t\t\treject(err);\n\t\t\treturn;\n\t\t}\n\n\t\tresolve(data);\n\t});\n});\n//=> Promise\n```\n\n\n### API\n\n`pinkie` exports bare [ES2015 Promise](https://people.mozilla.org/~jorendorff/es6-draft.html#sec-promise-objects) implementation and polyfills [Node.js rejection events](https://nodejs.org/api/process.html#process_event_unhandledrejection). In case you forgot:\n\n#### new Promise(executor)\n\nReturns new instance of `Promise`.\n\n##### executor\n\n*Required* \nType: `function`\n\nFunction with two arguments `resolve` and `reject`. The first argument fulfills the promise, the second argument rejects it.\n\n#### pinkie.all(promises)\n\nReturns a promise that resolves when all of the promises in the `promises` Array argument have resolved.\n\n#### pinkie.race(promises)\n\nReturns a promise that resolves or rejects as soon as one of the promises in the `promises` Array resolves or rejects, with the value or reason from that promise.\n\n#### pinkie.reject(reason)\n\nReturns a Promise object that is rejected with the given `reason`.\n\n#### pinkie.resolve(value)\n\nReturns a Promise object that is resolved with the given `value`. If the `value` is a thenable (i.e. has a then method), the returned promise will \"follow\" that thenable, adopting its eventual state; otherwise the returned promise will be fulfilled with the `value`.\n\n\n## Related\n\n- [pinkie-promise](https://github.com/floatdrop/pinkie-promise) - Returns the native Promise or this module\n\n\n## License\n\nMIT © [Vsevolod Strukchinsky](http://github.com/floatdrop)\n",

|

|

65

|

+

"readmeFilename": "readme.md",

|

|

65

66

|

"repository": {

|

|

66

67

|

"type": "git",

|

|

67

68

|

"url": "git+https://github.com/floatdrop/pinkie.git"

|

|

@@ -69,7 +69,8 @@

|

|

|

69

69

|

],

|

|

70

70

|

"name": "qs",

|

|

71

71

|

"optionalDependencies": {},

|

|

72

|

-

"readme": "ERROR: No README data found!",

|

|

72

|

+

"readme": "# qs\n\nA querystring parsing and stringifying library with some added security.\n\n[](http://travis-ci.org/ljharb/qs)\n\nLead Maintainer: [Jordan Harband](https://github.com/ljharb)\n\nThe **qs** module was originally created and maintained by [TJ Holowaychuk](https://github.com/visionmedia/node-querystring).\n\n## Usage\n\n```javascript\nvar qs = require('qs');\nvar assert = require('assert');\n\nvar obj = qs.parse('a=c');\nassert.deepEqual(obj, { a: 'c' });\n\nvar str = qs.stringify(obj);\nassert.equal(str, 'a=c');\n```\n\n### Parsing Objects\n\n[](#preventEval)\n```javascript\nqs.parse(string, [options]);\n```\n\n**qs** allows you to create nested objects within your query strings, by surrounding the name of sub-keys with square brackets `[]`.\nFor example, the string `'foo[bar]=baz'` converts to:\n\n```javascript\nassert.deepEqual(qs.parse('foo[bar]=baz'), {\n foo: {\n bar: 'baz'\n }\n});\n```\n\nWhen using the `plainObjects` option the parsed value is returned as a plain object, created via `Object.create(null)` and as such you should be aware that prototype methods will not exist on it and a user may set those names to whatever value they like:\n\n```javascript\nvar plainObject = qs.parse('a[hasOwnProperty]=b', { plainObjects: true });\nassert.deepEqual(plainObject, { a: { hasOwnProperty: 'b' } });\n```\n\nBy default parameters that would overwrite properties on the object prototype are ignored, if you wish to keep the data from those fields either use `plainObjects` as mentioned above, or set `allowPrototypes` to `true` which will allow user input to overwrite those properties. *WARNING* It is generally a bad idea to enable this option as it can cause problems when attempting to use the properties that have been overwritten. Always be careful with this option.\n\n```javascript\nvar protoObject = qs.parse('a[hasOwnProperty]=b', { allowPrototypes: true });\nassert.deepEqual(protoObject, { a: { hasOwnProperty: 'b' } });\n```\n\nURI encoded strings work too:\n\n```javascript\nassert.deepEqual(qs.parse('a%5Bb%5D=c'), {\n a: { b: 'c' }\n});\n```\n\nYou can also nest your objects, like `'foo[bar][baz]=foobarbaz'`:\n\n```javascript\nassert.deepEqual(qs.parse('foo[bar][baz]=foobarbaz'), {\n foo: {\n bar: {\n baz: 'foobarbaz'\n }\n }\n});\n```\n\nBy default, when nesting objects **qs** will only parse up to 5 children deep. This means if you attempt to parse a string like\n`'a[b][c][d][e][f][g][h][i]=j'` your resulting object will be:\n\n```javascript\nvar expected = {\n a: {\n b: {\n c: {\n d: {\n e: {\n f: {\n '[g][h][i]': 'j'\n }\n }\n }\n }\n }\n }\n};\nvar string = 'a[b][c][d][e][f][g][h][i]=j';\nassert.deepEqual(qs.parse(string), expected);\n```\n\nThis depth can be overridden by passing a `depth` option to `qs.parse(string, [options])`:\n\n```javascript\nvar deep = qs.parse('a[b][c][d][e][f][g][h][i]=j', { depth: 1 });\nassert.deepEqual(deep, { a: { b: { '[c][d][e][f][g][h][i]': 'j' } } });\n```\n\nThe depth limit helps mitigate abuse when **qs** is used to parse user input, and it is recommended to keep it a reasonably small number.\n\nFor similar reasons, by default **qs** will only parse up to 1000 parameters. This can be overridden by passing a `parameterLimit` option:\n\n```javascript\nvar limited = qs.parse('a=b&c=d', { parameterLimit: 1 });\nassert.deepEqual(limited, { a: 'b' });\n```\n\nAn optional delimiter can also be passed:\n\n```javascript\nvar delimited = qs.parse('a=b;c=d', { delimiter: ';' });\nassert.deepEqual(delimited, { a: 'b', c: 'd' });\n```\n\nDelimiters can be a regular expression too:\n\n```javascript\nvar regexed = qs.parse('a=b;c=d,e=f', { delimiter: /[;,]/ });\nassert.deepEqual(regexed, { a: 'b', c: 'd', e: 'f' });\n```\n\nOption `allowDots` can be used to enable dot notation:\n\n```javascript\nvar withDots = qs.parse('a.b=c', { allowDots: true });\nassert.deepEqual(withDots, { a: { b: 'c' } });\n```\n\n### Parsing Arrays\n\n**qs** can also parse arrays using a similar `[]` notation:\n\n```javascript\nvar withArray = qs.parse('a[]=b&a[]=c');\nassert.deepEqual(withArray, { a: ['b', 'c'] });\n```\n\nYou may specify an index as well:\n\n```javascript\nvar withIndexes = qs.parse('a[1]=c&a[0]=b');\nassert.deepEqual(withIndexes, { a: ['b', 'c'] });\n```\n\nNote that the only difference between an index in an array and a key in an object is that the value between the brackets must be a number\nto create an array. When creating arrays with specific indices, **qs** will compact a sparse array to only the existing values preserving\ntheir order:\n\n```javascript\nvar noSparse = qs.parse('a[1]=b&a[15]=c');\nassert.deepEqual(noSparse, { a: ['b', 'c'] });\n```\n\nNote that an empty string is also a value, and will be preserved:\n\n```javascript\nvar withEmptyString = qs.parse('a[]=&a[]=b');\nassert.deepEqual(withEmptyString, { a: ['', 'b'] });\n\nvar withIndexedEmptyString = qs.parse('a[0]=b&a[1]=&a[2]=c');\nassert.deepEqual(withIndexedEmptyString, { a: ['b', '', 'c'] });\n```\n\n**qs** will also limit specifying indices in an array to a maximum index of `20`. Any array members with an index of greater than `20` will\ninstead be converted to an object with the index as the key:\n\n```javascript\nvar withMaxIndex = qs.parse('a[100]=b');\nassert.deepEqual(withMaxIndex, { a: { '100': 'b' } });\n```\n\nThis limit can be overridden by passing an `arrayLimit` option:\n\n```javascript\nvar withArrayLimit = qs.parse('a[1]=b', { arrayLimit: 0 });\nassert.deepEqual(withArrayLimit, { a: { '1': 'b' } });\n```\n\nTo disable array parsing entirely, set `parseArrays` to `false`.\n\n```javascript\nvar noParsingArrays = qs.parse('a[]=b', { parseArrays: false });\nassert.deepEqual(noParsingArrays, { a: { '0': 'b' } });\n```\n\nIf you mix notations, **qs** will merge the two items into an object:\n\n```javascript\nvar mixedNotation = qs.parse('a[0]=b&a[b]=c');\nassert.deepEqual(mixedNotation, { a: { '0': 'b', b: 'c' } });\n```\n\nYou can also create arrays of objects:\n\n```javascript\nvar arraysOfObjects = qs.parse('a[][b]=c');\nassert.deepEqual(arraysOfObjects, { a: [{ b: 'c' }] });\n```\n\n### Stringifying\n\n[](#preventEval)\n```javascript\nqs.stringify(object, [options]);\n```\n\nWhen stringifying, **qs** by default URI encodes output. Objects are stringified as you would expect:\n\n```javascript\nassert.equal(qs.stringify({ a: 'b' }), 'a=b');\nassert.equal(qs.stringify({ a: { b: 'c' } }), 'a%5Bb%5D=c');\n```\n\nThis encoding can be disabled by setting the `encode` option to `false`:\n\n```javascript\nvar unencoded = qs.stringify({ a: { b: 'c' } }, { encode: false });\nassert.equal(unencoded, 'a[b]=c');\n```\n\nExamples beyond this point will be shown as though the output is not URI encoded for clarity. Please note that the return values in these cases *will* be URI encoded during real usage.\n\nWhen arrays are stringified, by default they are given explicit indices:\n\n```javascript\nqs.stringify({ a: ['b', 'c', 'd'] });\n// 'a[0]=b&a[1]=c&a[2]=d'\n```\n\nYou may override this by setting the `indices` option to `false`:\n\n```javascript\nqs.stringify({ a: ['b', 'c', 'd'] }, { indices: false });\n// 'a=b&a=c&a=d'\n```\n\nYou may use the `arrayFormat` option to specify the format of the output array\n\n```javascript\nqs.stringify({ a: ['b', 'c'] }, { arrayFormat: 'indices' })\n// 'a[0]=b&a[1]=c'\nqs.stringify({ a: ['b', 'c'] }, { arrayFormat: 'brackets' })\n// 'a[]=b&a[]=c'\nqs.stringify({ a: ['b', 'c'] }, { arrayFormat: 'repeat' })\n// 'a=b&a=c'\n```\n\nEmpty strings and null values will omit the value, but the equals sign (=) remains in place:\n\n```javascript\nassert.equal(qs.stringify({ a: '' }), 'a=');\n```\n\nProperties that are set to `undefined` will be omitted entirely:\n\n```javascript\nassert.equal(qs.stringify({ a: null, b: undefined }), 'a=');\n```\n\nThe delimiter may be overridden with stringify as well:\n\n```javascript\nassert.equal(qs.stringify({ a: 'b', c: 'd' }, { delimiter: ';' }), 'a=b;c=d');\n```\n\nFinally, you can use the `filter` option to restrict which keys will be included in the stringified output.\nIf you pass a function, it will be called for each key to obtain the replacement value. Otherwise, if you\npass an array, it will be used to select properties and array indices for stringification:\n\n```javascript\nfunction filterFunc(prefix, value) {\n if (prefix == 'b') {\n // Return an `undefined` value to omit a property.\n return;\n }\n if (prefix == 'e[f]') {\n return value.getTime();\n }\n if (prefix == 'e[g][0]') {\n return value * 2;\n }\n return value;\n}\nqs.stringify({ a: 'b', c: 'd', e: { f: new Date(123), g: [2] } }, { filter: filterFunc });\n// 'a=b&c=d&e[f]=123&e[g][0]=4'\nqs.stringify({ a: 'b', c: 'd', e: 'f' }, { filter: ['a', 'e'] });\n// 'a=b&e=f'\nqs.stringify({ a: ['b', 'c', 'd'], e: 'f' }, { filter: ['a', 0, 2] });\n// 'a[0]=b&a[2]=d'\n```\n\n### Handling of `null` values\n\nBy default, `null` values are treated like empty strings:\n\n```javascript\nvar withNull = qs.stringify({ a: null, b: '' });\nassert.equal(withNull, 'a=&b=');\n```\n\nParsing does not distinguish between parameters with and without equal signs. Both are converted to empty strings.\n\n```javascript\nvar equalsInsensitive = qs.parse('a&b=');\nassert.deepEqual(equalsInsensitive, { a: '', b: '' });\n```\n\nTo distinguish between `null` values and empty strings use the `strictNullHandling` flag. In the result string the `null`\nvalues have no `=` sign:\n\n```javascript\nvar strictNull = qs.stringify({ a: null, b: '' }, { strictNullHandling: true });\nassert.equal(strictNull, 'a&b=');\n```\n\nTo parse values without `=` back to `null` use the `strictNullHandling` flag:\n\n```javascript\nvar parsedStrictNull = qs.parse('a&b=', { strictNullHandling: true });\nassert.deepEqual(parsedStrictNull, { a: null, b: '' });\n```\n\nTo completely skip rendering keys with `null` values, use the `skipNulls` flag:\n\n```javascript\nvar nullsSkipped = qs.stringify({ a: 'b', c: null}, { skipNulls: true });\nassert.equal(nullsSkipped, 'a=b');\n```\n",

|

|

73

|

+

"readmeFilename": "README.md",

|

|

73

74

|

"repository": {

|

|

74

75

|

"type": "git",

|

|

75

76

|

"url": "git+https://github.com/ljharb/qs.git"

|

|

@@ -99,7 +99,8 @@

|

|

|

99

99

|

],

|

|

100

100

|

"name": "request",

|

|

101

101

|

"optionalDependencies": {},

|

|

102

|

-

"readme": "ERROR: No README data found!",

|

|

102

|

+

"readme": "\n# Request - Simplified HTTP client\n\n[](https://nodei.co/npm/request/)\n\n[](https://travis-ci.org/request/request)\n[](https://codecov.io/github/request/request?branch=master)\n[](https://coveralls.io/r/request/request)\n[](https://david-dm.org/request/request)\n[](https://gitter.im/request/request?utm_source=badge)\n\n\n## Super simple to use\n\nRequest is designed to be the simplest way possible to make http calls. It supports HTTPS and follows redirects by default.\n\n```js\nvar request = require('request');\nrequest('http://www.google.com', function (error, response, body) {\n if (!error && response.statusCode == 200) {\n console.log(body) // Show the HTML for the Google homepage.\n }\n})\n```\n\n\n## Table of contents\n\n- [Streaming](#streaming)\n- [Forms](#forms)\n- [HTTP Authentication](#http-authentication)\n- [Custom HTTP Headers](#custom-http-headers)\n- [OAuth Signing](#oauth-signing)\n- [Proxies](#proxies)\n- [Unix Domain Sockets](#unix-domain-sockets)\n- [TLS/SSL Protocol](#tlsssl-protocol)\n- [Support for HAR 1.2](#support-for-har-12)\n- [**All Available Options**](#requestoptions-callback)\n\nRequest also offers [convenience methods](#convenience-methods) like\n`request.defaults` and `request.post`, and there are\nlots of [usage examples](#examples) and several\n[debugging techniques](#debugging).\n\n\n---\n\n\n## Streaming\n\nYou can stream any response to a file stream.\n\n```js\nrequest('http://google.com/doodle.png').pipe(fs.createWriteStream('doodle.png'))\n```\n\nYou can also stream a file to a PUT or POST request. This method will also check the file extension against a mapping of file extensions to content-types (in this case `application/json`) and use the proper `content-type` in the PUT request (if the headers don’t already provide one).\n\n```js\nfs.createReadStream('file.json').pipe(request.put('http://mysite.com/obj.json'))\n```\n\nRequest can also `pipe` to itself. When doing so, `content-type` and `content-length` are preserved in the PUT headers.\n\n```js\nrequest.get('http://google.com/img.png').pipe(request.put('http://mysite.com/img.png'))\n```\n\nRequest emits a \"response\" event when a response is received. The `response` argument will be an instance of [http.IncomingMessage](http://nodejs.org/api/http.html#http_http_incomingmessage).\n\n```js\nrequest\n .get('http://google.com/img.png')\n .on('response', function(response) {\n console.log(response.statusCode) // 200\n console.log(response.headers['content-type']) // 'image/png'\n })\n .pipe(request.put('http://mysite.com/img.png'))\n```\n\nTo easily handle errors when streaming requests, listen to the `error` event before piping:\n\n```js\nrequest\n .get('http://mysite.com/doodle.png')\n .on('error', function(err) {\n console.log(err)\n })\n .pipe(fs.createWriteStream('doodle.png'))\n```\n\nNow let’s get fancy.\n\n```js\nhttp.createServer(function (req, resp) {\n if (req.url === '/doodle.png') {\n if (req.method === 'PUT') {\n req.pipe(request.put('http://mysite.com/doodle.png'))\n } else if (req.method === 'GET' || req.method === 'HEAD') {\n request.get('http://mysite.com/doodle.png').pipe(resp)\n }\n }\n})\n```\n\nYou can also `pipe()` from `http.ServerRequest` instances, as well as to `http.ServerResponse` instances. The HTTP method, headers, and entity-body data will be sent. Which means that, if you don't really care about security, you can do:\n\n```js\nhttp.createServer(function (req, resp) {\n if (req.url === '/doodle.png') {\n var x = request('http://mysite.com/doodle.png')\n req.pipe(x)\n x.pipe(resp)\n }\n})\n```\n\nAnd since `pipe()` returns the destination stream in ≥ Node 0.5.x you can do one line proxying. :)\n\n```js\nreq.pipe(request('http://mysite.com/doodle.png')).pipe(resp)\n```\n\nAlso, none of this new functionality conflicts with requests previous features, it just expands them.\n\n```js\nvar r = request.defaults({'proxy':'http://localproxy.com'})\n\nhttp.createServer(function (req, resp) {\n if (req.url === '/doodle.png') {\n r.get('http://google.com/doodle.png').pipe(resp)\n }\n})\n```\n\nYou can still use intermediate proxies, the requests will still follow HTTP forwards, etc.\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## Forms\n\n`request` supports `application/x-www-form-urlencoded` and `multipart/form-data` form uploads. For `multipart/related` refer to the `multipart` API.\n\n\n#### application/x-www-form-urlencoded (URL-Encoded Forms)\n\nURL-encoded forms are simple.\n\n```js\nrequest.post('http://service.com/upload', {form:{key:'value'}})\n// or\nrequest.post('http://service.com/upload').form({key:'value'})\n// or\nrequest.post({url:'http://service.com/upload', form: {key:'value'}}, function(err,httpResponse,body){ /* ... */ })\n```\n\n\n#### multipart/form-data (Multipart Form Uploads)\n\nFor `multipart/form-data` we use the [form-data](https://github.com/form-data/form-data) library by [@felixge](https://github.com/felixge). For the most cases, you can pass your upload form data via the `formData` option.\n\n\n```js\nvar formData = {\n // Pass a simple key-value pair\n my_field: 'my_value',\n // Pass data via Buffers\n my_buffer: new Buffer([1, 2, 3]),\n // Pass data via Streams\n my_file: fs.createReadStream(__dirname + '/unicycle.jpg'),\n // Pass multiple values /w an Array\n attachments: [\n fs.createReadStream(__dirname + '/attachment1.jpg'),\n fs.createReadStream(__dirname + '/attachment2.jpg')\n ],\n // Pass optional meta-data with an 'options' object with style: {value: DATA, options: OPTIONS}\n // Use case: for some types of streams, you'll need to provide \"file\"-related information manually.\n // See the `form-data` README for more information about options: https://github.com/form-data/form-data\n custom_file: {\n value: fs.createReadStream('/dev/urandom'),\n options: {\n filename: 'topsecret.jpg',\n contentType: 'image/jpg'\n }\n }\n};\nrequest.post({url:'http://service.com/upload', formData: formData}, function optionalCallback(err, httpResponse, body) {\n if (err) {\n return console.error('upload failed:', err);\n }\n console.log('Upload successful! Server responded with:', body);\n});\n```\n\nFor advanced cases, you can access the form-data object itself via `r.form()`. This can be modified until the request is fired on the next cycle of the event-loop. (Note that this calling `form()` will clear the currently set form data for that request.)\n\n```js\n// NOTE: Advanced use-case, for normal use see 'formData' usage above\nvar r = request.post('http://service.com/upload', function optionalCallback(err, httpResponse, body) {...})\nvar form = r.form();\nform.append('my_field', 'my_value');\nform.append('my_buffer', new Buffer([1, 2, 3]));\nform.append('custom_file', fs.createReadStream(__dirname + '/unicycle.jpg'), {filename: 'unicycle.jpg'});\n```\nSee the [form-data README](https://github.com/form-data/form-data) for more information & examples.\n\n\n#### multipart/related\n\nSome variations in different HTTP implementations require a newline/CRLF before, after, or both before and after the boundary of a `multipart/related` request (using the multipart option). This has been observed in the .NET WebAPI version 4.0. You can turn on a boundary preambleCRLF or postamble by passing them as `true` to your request options.\n\n```js\n request({\n method: 'PUT',\n preambleCRLF: true,\n postambleCRLF: true,\n uri: 'http://service.com/upload',\n multipart: [\n {\n 'content-type': 'application/json',\n body: JSON.stringify({foo: 'bar', _attachments: {'message.txt': {follows: true, length: 18, 'content_type': 'text/plain' }}})\n },\n { body: 'I am an attachment' },\n { body: fs.createReadStream('image.png') }\n ],\n // alternatively pass an object containing additional options\n multipart: {\n chunked: false,\n data: [\n {\n 'content-type': 'application/json',\n body: JSON.stringify({foo: 'bar', _attachments: {'message.txt': {follows: true, length: 18, 'content_type': 'text/plain' }}})\n },\n { body: 'I am an attachment' }\n ]\n }\n },\n function (error, response, body) {\n if (error) {\n return console.error('upload failed:', error);\n }\n console.log('Upload successful! Server responded with:', body);\n })\n```\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## HTTP Authentication\n\n```js\nrequest.get('http://some.server.com/').auth('username', 'password', false);\n// or\nrequest.get('http://some.server.com/', {\n 'auth': {\n 'user': 'username',\n 'pass': 'password',\n 'sendImmediately': false\n }\n});\n// or\nrequest.get('http://some.server.com/').auth(null, null, true, 'bearerToken');\n// or\nrequest.get('http://some.server.com/', {\n 'auth': {\n 'bearer': 'bearerToken'\n }\n});\n```\n\nIf passed as an option, `auth` should be a hash containing values:\n\n- `user` || `username`\n- `pass` || `password`\n- `sendImmediately` (optional)\n- `bearer` (optional)\n\nThe method form takes parameters\n`auth(username, password, sendImmediately, bearer)`.\n\n`sendImmediately` defaults to `true`, which causes a basic or bearer\nauthentication header to be sent. If `sendImmediately` is `false`, then\n`request` will retry with a proper authentication header after receiving a\n`401` response from the server (which must contain a `WWW-Authenticate` header\nindicating the required authentication method).\n\nNote that you can also specify basic authentication using the URL itself, as\ndetailed in [RFC 1738](http://www.ietf.org/rfc/rfc1738.txt). Simply pass the\n`user:password` before the host with an `@` sign:\n\n```js\nvar username = 'username',\n password = 'password',\n url = 'http://' + username + ':' + password + '@some.server.com';\n\nrequest({url: url}, function (error, response, body) {\n // Do more stuff with 'body' here\n});\n```\n\nDigest authentication is supported, but it only works with `sendImmediately`\nset to `false`; otherwise `request` will send basic authentication on the\ninitial request, which will probably cause the request to fail.\n\nBearer authentication is supported, and is activated when the `bearer` value is\navailable. The value may be either a `String` or a `Function` returning a\n`String`. Using a function to supply the bearer token is particularly useful if\nused in conjunction with `defaults` to allow a single function to supply the\nlast known token at the time of sending a request, or to compute one on the fly.\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## Custom HTTP Headers\n\nHTTP Headers, such as `User-Agent`, can be set in the `options` object.\nIn the example below, we call the github API to find out the number\nof stars and forks for the request repository. This requires a\ncustom `User-Agent` header as well as https.\n\n```js\nvar request = require('request');\n\nvar options = {\n url: 'https://api.github.com/repos/request/request',\n headers: {\n 'User-Agent': 'request'\n }\n};\n\nfunction callback(error, response, body) {\n if (!error && response.statusCode == 200) {\n var info = JSON.parse(body);\n console.log(info.stargazers_count + \" Stars\");\n console.log(info.forks_count + \" Forks\");\n }\n}\n\nrequest(options, callback);\n```\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## OAuth Signing\n\n[OAuth version 1.0](https://tools.ietf.org/html/rfc5849) is supported. The\ndefault signing algorithm is\n[HMAC-SHA1](https://tools.ietf.org/html/rfc5849#section-3.4.2):\n\n```js\n// OAuth1.0 - 3-legged server side flow (Twitter example)\n// step 1\nvar qs = require('querystring')\n , oauth =\n { callback: 'http://mysite.com/callback/'\n , consumer_key: CONSUMER_KEY\n , consumer_secret: CONSUMER_SECRET\n }\n , url = 'https://api.twitter.com/oauth/request_token'\n ;\nrequest.post({url:url, oauth:oauth}, function (e, r, body) {\n // Ideally, you would take the body in the response\n // and construct a URL that a user clicks on (like a sign in button).\n // The verifier is only available in the response after a user has\n // verified with twitter that they are authorizing your app.\n\n // step 2\n var req_data = qs.parse(body)\n var uri = 'https://api.twitter.com/oauth/authenticate'\n + '?' + qs.stringify({oauth_token: req_data.oauth_token})\n // redirect the user to the authorize uri\n\n // step 3\n // after the user is redirected back to your server\n var auth_data = qs.parse(body)\n , oauth =\n { consumer_key: CONSUMER_KEY\n , consumer_secret: CONSUMER_SECRET\n , token: auth_data.oauth_token\n , token_secret: req_data.oauth_token_secret\n , verifier: auth_data.oauth_verifier\n }\n , url = 'https://api.twitter.com/oauth/access_token'\n ;\n request.post({url:url, oauth:oauth}, function (e, r, body) {\n // ready to make signed requests on behalf of the user\n var perm_data = qs.parse(body)\n , oauth =\n { consumer_key: CONSUMER_KEY\n , consumer_secret: CONSUMER_SECRET\n , token: perm_data.oauth_token\n , token_secret: perm_data.oauth_token_secret\n }\n , url = 'https://api.twitter.com/1.1/users/show.json'\n , qs =\n { screen_name: perm_data.screen_name\n , user_id: perm_data.user_id\n }\n ;\n request.get({url:url, oauth:oauth, qs:qs, json:true}, function (e, r, user) {\n console.log(user)\n })\n })\n})\n```\n\nFor [RSA-SHA1 signing](https://tools.ietf.org/html/rfc5849#section-3.4.3), make\nthe following changes to the OAuth options object:\n* Pass `signature_method : 'RSA-SHA1'`\n* Instead of `consumer_secret`, specify a `private_key` string in\n [PEM format](http://how2ssl.com/articles/working_with_pem_files/)\n\nFor [PLAINTEXT signing](http://oauth.net/core/1.0/#anchor22), make\nthe following changes to the OAuth options object:\n* Pass `signature_method : 'PLAINTEXT'`\n\nTo send OAuth parameters via query params or in a post body as described in The\n[Consumer Request Parameters](http://oauth.net/core/1.0/#consumer_req_param)\nsection of the oauth1 spec:\n* Pass `transport_method : 'query'` or `transport_method : 'body'` in the OAuth\n options object.\n* `transport_method` defaults to `'header'`\n\nTo use [Request Body Hash](https://oauth.googlecode.com/svn/spec/ext/body_hash/1.0/oauth-bodyhash.html) you can either\n* Manually generate the body hash and pass it as a string `body_hash: '...'`\n* Automatically generate the body hash by passing `body_hash: true`\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## Proxies\n\nIf you specify a `proxy` option, then the request (and any subsequent\nredirects) will be sent via a connection to the proxy server.\n\nIf your endpoint is an `https` url, and you are using a proxy, then\nrequest will send a `CONNECT` request to the proxy server *first*, and\nthen use the supplied connection to connect to the endpoint.\n\nThat is, first it will make a request like:\n\n```\nHTTP/1.1 CONNECT endpoint-server.com:80\nHost: proxy-server.com\nUser-Agent: whatever user agent you specify\n```\n\nand then the proxy server make a TCP connection to `endpoint-server`\non port `80`, and return a response that looks like:\n\n```\nHTTP/1.1 200 OK\n```\n\nAt this point, the connection is left open, and the client is\ncommunicating directly with the `endpoint-server.com` machine.\n\nSee [the wikipedia page on HTTP Tunneling](https://en.wikipedia.org/wiki/HTTP_tunnel)\nfor more information.\n\nBy default, when proxying `http` traffic, request will simply make a\nstandard proxied `http` request. This is done by making the `url`\nsection of the initial line of the request a fully qualified url to\nthe endpoint.\n\nFor example, it will make a single request that looks like:\n\n```\nHTTP/1.1 GET http://endpoint-server.com/some-url\nHost: proxy-server.com\nOther-Headers: all go here\n\nrequest body or whatever\n```\n\nBecause a pure \"http over http\" tunnel offers no additional security\nor other features, it is generally simpler to go with a\nstraightforward HTTP proxy in this case. However, if you would like\nto force a tunneling proxy, you may set the `tunnel` option to `true`.\n\nYou can also make a standard proxied `http` request by explicitly setting\n`tunnel : false`, but **note that this will allow the proxy to see the traffic\nto/from the destination server**.\n\nIf you are using a tunneling proxy, you may set the\n`proxyHeaderWhiteList` to share certain headers with the proxy.\n\nYou can also set the `proxyHeaderExclusiveList` to share certain\nheaders only with the proxy and not with destination host.\n\nBy default, this set is:\n\n```\naccept\naccept-charset\naccept-encoding\naccept-language\naccept-ranges\ncache-control\ncontent-encoding\ncontent-language\ncontent-length\ncontent-location\ncontent-md5\ncontent-range\ncontent-type\nconnection\ndate\nexpect\nmax-forwards\npragma\nproxy-authorization\nreferer\nte\ntransfer-encoding\nuser-agent\nvia\n```\n\nNote that, when using a tunneling proxy, the `proxy-authorization`\nheader and any headers from custom `proxyHeaderExclusiveList` are\n*never* sent to the endpoint server, but only to the proxy server.\n\n\n### Controlling proxy behaviour using environment variables\n\nThe following environment variables are respected by `request`:\n\n * `HTTP_PROXY` / `http_proxy`\n * `HTTPS_PROXY` / `https_proxy`\n * `NO_PROXY` / `no_proxy`\n\nWhen `HTTP_PROXY` / `http_proxy` are set, they will be used to proxy non-SSL requests that do not have an explicit `proxy` configuration option present. Similarly, `HTTPS_PROXY` / `https_proxy` will be respected for SSL requests that do not have an explicit `proxy` configuration option. It is valid to define a proxy in one of the environment variables, but then override it for a specific request, using the `proxy` configuration option. Furthermore, the `proxy` configuration option can be explicitly set to false / null to opt out of proxying altogether for that request.\n\n`request` is also aware of the `NO_PROXY`/`no_proxy` environment variables. These variables provide a granular way to opt out of proxying, on a per-host basis. It should contain a comma separated list of hosts to opt out of proxying. It is also possible to opt of proxying when a particular destination port is used. Finally, the variable may be set to `*` to opt out of the implicit proxy configuration of the other environment variables.\n\nHere's some examples of valid `no_proxy` values:\n\n * `google.com` - don't proxy HTTP/HTTPS requests to Google.\n * `google.com:443` - don't proxy HTTPS requests to Google, but *do* proxy HTTP requests to Google.\n * `google.com:443, yahoo.com:80` - don't proxy HTTPS requests to Google, and don't proxy HTTP requests to Yahoo!\n * `*` - ignore `https_proxy`/`http_proxy` environment variables altogether.\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## UNIX Domain Sockets\n\n`request` supports making requests to [UNIX Domain Sockets](https://en.wikipedia.org/wiki/Unix_domain_socket). To make one, use the following URL scheme:\n\n```js\n/* Pattern */ 'http://unix:SOCKET:PATH'\n/* Example */ request.get('http://unix:/absolute/path/to/unix.socket:/request/path')\n```\n\nNote: The `SOCKET` path is assumed to be absolute to the root of the host file system.\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## TLS/SSL Protocol\n\nTLS/SSL Protocol options, such as `cert`, `key` and `passphrase`, can be\nset directly in `options` object, in the `agentOptions` property of the `options` object, or even in `https.globalAgent.options`. Keep in mind that, although `agentOptions` allows for a slightly wider range of configurations, the recommended way is via `options` object directly, as using `agentOptions` or `https.globalAgent.options` would not be applied in the same way in proxied environments (as data travels through a TLS connection instead of an http/https agent).\n\n```js\nvar fs = require('fs')\n , path = require('path')\n , certFile = path.resolve(__dirname, 'ssl/client.crt')\n , keyFile = path.resolve(__dirname, 'ssl/client.key')\n , caFile = path.resolve(__dirname, 'ssl/ca.cert.pem')\n , request = require('request');\n\nvar options = {\n url: 'https://api.some-server.com/',\n cert: fs.readFileSync(certFile),\n key: fs.readFileSync(keyFile),\n passphrase: 'password',\n ca: fs.readFileSync(caFile)\n }\n};\n\nrequest.get(options);\n```\n\n### Using `options.agentOptions`\n\nIn the example below, we call an API requires client side SSL certificate\n(in PEM format) with passphrase protected private key (in PEM format) and disable the SSLv3 protocol:\n\n```js\nvar fs = require('fs')\n , path = require('path')\n , certFile = path.resolve(__dirname, 'ssl/client.crt')\n , keyFile = path.resolve(__dirname, 'ssl/client.key')\n , request = require('request');\n\nvar options = {\n url: 'https://api.some-server.com/',\n agentOptions: {\n cert: fs.readFileSync(certFile),\n key: fs.readFileSync(keyFile),\n // Or use `pfx` property replacing `cert` and `key` when using private key, certificate and CA certs in PFX or PKCS12 format:\n // pfx: fs.readFileSync(pfxFilePath),\n passphrase: 'password',\n securityOptions: 'SSL_OP_NO_SSLv3'\n }\n};\n\nrequest.get(options);\n```\n\nIt is able to force using SSLv3 only by specifying `secureProtocol`:\n\n```js\nrequest.get({\n url: 'https://api.some-server.com/',\n agentOptions: {\n secureProtocol: 'SSLv3_method'\n }\n});\n```\n\nIt is possible to accept other certificates than those signed by generally allowed Certificate Authorities (CAs).\nThis can be useful, for example, when using self-signed certificates.\nTo require a different root certificate, you can specify the signing CA by adding the contents of the CA's certificate file to the `agentOptions`.\nThe certificate the domain presents must be signed by the root certificate specified:\n\n```js\nrequest.get({\n url: 'https://api.some-server.com/',\n agentOptions: {\n ca: fs.readFileSync('ca.cert.pem')\n }\n});\n```\n\n[back to top](#table-of-contents)\n\n\n---\n\n## Support for HAR 1.2\n\nThe `options.har` property will override the values: `url`, `method`, `qs`, `headers`, `form`, `formData`, `body`, `json`, as well as construct multipart data and read files from disk when `request.postData.params[].fileName` is present without a matching `value`.\n\na validation step will check if the HAR Request format matches the latest spec (v1.2) and will skip parsing if not matching.\n\n```js\n var request = require('request')\n request({\n // will be ignored\n method: 'GET',\n uri: 'http://www.google.com',\n\n // HTTP Archive Request Object\n har: {\n url: 'http://www.mockbin.com/har',\n method: 'POST',\n headers: [\n {\n name: 'content-type',\n value: 'application/x-www-form-urlencoded'\n }\n ],\n postData: {\n mimeType: 'application/x-www-form-urlencoded',\n params: [\n {\n name: 'foo',\n value: 'bar'\n },\n {\n name: 'hello',\n value: 'world'\n }\n ]\n }\n }\n })\n\n // a POST request will be sent to http://www.mockbin.com\n // with body an application/x-www-form-urlencoded body:\n // foo=bar&hello=world\n```\n\n[back to top](#table-of-contents)\n\n\n---\n\n## request(options, callback)\n\nThe first argument can be either a `url` or an `options` object. The only required option is `uri`; all others are optional.\n\n- `uri` || `url` - fully qualified uri or a parsed url object from `url.parse()`\n- `baseUrl` - fully qualified uri string used as the base url. Most useful with `request.defaults`, for example when you want to do many requests to the same domain. If `baseUrl` is `https://example.com/api/`, then requesting `/end/point?test=true` will fetch `https://example.com/api/end/point?test=true`. When `baseUrl` is given, `uri` must also be a string.\n- `method` - http method (default: `\"GET\"`)\n- `headers` - http headers (default: `{}`)\n\n---\n\n- `qs` - object containing querystring values to be appended to the `uri`\n- `qsParseOptions` - object containing options to pass to the [qs.parse](https://github.com/hapijs/qs#parsing-objects) method. Alternatively pass options to the [querystring.parse](https://nodejs.org/docs/v0.12.0/api/querystring.html#querystring_querystring_parse_str_sep_eq_options) method using this format `{sep:';', eq:':', options:{}}`\n- `qsStringifyOptions` - object containing options to pass to the [qs.stringify](https://github.com/hapijs/qs#stringifying) method. Alternatively pass options to the [querystring.stringify](https://nodejs.org/docs/v0.12.0/api/querystring.html#querystring_querystring_stringify_obj_sep_eq_options) method using this format `{sep:';', eq:':', options:{}}`. For example, to change the way arrays are converted to query strings using the `qs` module pass the `arrayFormat` option with one of `indices|brackets|repeat`\n- `useQuerystring` - If true, use `querystring` to stringify and parse\n querystrings, otherwise use `qs` (default: `false`). Set this option to\n `true` if you need arrays to be serialized as `foo=bar&foo=baz` instead of the\n default `foo[0]=bar&foo[1]=baz`.\n\n---\n\n- `body` - entity body for PATCH, POST and PUT requests. Must be a `Buffer` or `String`, unless `json` is `true`. If `json` is `true`, then `body` must be a JSON-serializable object.\n- `form` - when passed an object or a querystring, this sets `body` to a querystring representation of value, and adds `Content-type: application/x-www-form-urlencoded` header. When passed no options, a `FormData` instance is returned (and is piped to request). See \"Forms\" section above.\n- `formData` - Data to pass for a `multipart/form-data` request. See\n [Forms](#forms) section above.\n- `multipart` - array of objects which contain their own headers and `body`\n attributes. Sends a `multipart/related` request. See [Forms](#forms) section\n above.\n - Alternatively you can pass in an object `{chunked: false, data: []}` where\n `chunked` is used to specify whether the request is sent in\n [chunked transfer encoding](https://en.wikipedia.org/wiki/Chunked_transfer_encoding)\n In non-chunked requests, data items with body streams are not allowed.\n- `preambleCRLF` - append a newline/CRLF before the boundary of your `multipart/form-data` request.\n- `postambleCRLF` - append a newline/CRLF at the end of the boundary of your `multipart/form-data` request.\n- `json` - sets `body` to JSON representation of value and adds `Content-type: application/json` header. Additionally, parses the response body as JSON.\n- `jsonReviver` - a [reviver function](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/parse) that will be passed to `JSON.parse()` when parsing a JSON response body.\n\n---\n\n- `auth` - A hash containing values `user` || `username`, `pass` || `password`, and `sendImmediately` (optional). See documentation above.\n- `oauth` - Options for OAuth HMAC-SHA1 signing. See documentation above.\n- `hawk` - Options for [Hawk signing](https://github.com/hueniverse/hawk). The `credentials` key must contain the necessary signing info, [see hawk docs for details](https://github.com/hueniverse/hawk#usage-example).\n- `aws` - `object` containing AWS signing information. Should have the properties `key`, `secret`. Also requires the property `bucket`, unless you’re specifying your `bucket` as part of the path, or the request doesn’t use a bucket (i.e. GET Services). If you want to use AWS sign version 4 use the parameter `sign_version` with value `4` otherwise the default is version 2. **Note:** you need to `npm install aws4` first.\n- `httpSignature` - Options for the [HTTP Signature Scheme](https://github.com/joyent/node-http-signature/blob/master/http_signing.md) using [Joyent's library](https://github.com/joyent/node-http-signature). The `keyId` and `key` properties must be specified. See the docs for other options.\n\n---\n\n- `followRedirect` - follow HTTP 3xx responses as redirects (default: `true`). This property can also be implemented as function which gets `response` object as a single argument and should return `true` if redirects should continue or `false` otherwise.\n- `followAllRedirects` - follow non-GET HTTP 3xx responses as redirects (default: `false`)\n- `maxRedirects` - the maximum number of redirects to follow (default: `10`)\n- `removeRefererHeader` - removes the referer header when a redirect happens (default: `false`). **Note:** if true, referer header set in the initial request is preserved during redirect chain.\n\n---\n\n- `encoding` - Encoding to be used on `setEncoding` of response data. If `null`, the `body` is returned as a `Buffer`. Anything else **(including the default value of `undefined`)** will be passed as the [encoding](http://nodejs.org/api/buffer.html#buffer_buffer) parameter to `toString()` (meaning this is effectively `utf8` by default). (**Note:** if you expect binary data, you should set `encoding: null`.)\n- `gzip` - If `true`, add an `Accept-Encoding` header to request compressed content encodings from the server (if not already present) and decode supported content encodings in the response. **Note:** Automatic decoding of the response content is performed on the body data returned through `request` (both through the `request` stream and passed to the callback function) but is not performed on the `response` stream (available from the `response` event) which is the unmodified `http.IncomingMessage` object which may contain compressed data. See example below.\n- `jar` - If `true`, remember cookies for future use (or define your custom cookie jar; see examples section)\n\n---\n\n- `agent` - `http(s).Agent` instance to use\n- `agentClass` - alternatively specify your agent's class name\n- `agentOptions` - and pass its options. **Note:** for HTTPS see [tls API doc for TLS/SSL options](http://nodejs.org/api/tls.html#tls_tls_connect_options_callback) and the [documentation above](#using-optionsagentoptions).\n- `forever` - set to `true` to use the [forever-agent](https://github.com/request/forever-agent) **Note:** Defaults to `http(s).Agent({keepAlive:true})` in node 0.12+\n- `pool` - An object describing which agents to use for the request. If this option is omitted the request will use the global agent (as long as your options allow for it). Otherwise, request will search the pool for your custom agent. If no custom agent is found, a new agent will be created and added to the pool. **Note:** `pool` is used only when the `agent` option is not specified.\n - A `maxSockets` property can also be provided on the `pool` object to set the max number of sockets for all agents created (ex: `pool: {maxSockets: Infinity}`).\n - Note that if you are sending multiple requests in a loop and creating\n multiple new `pool` objects, `maxSockets` will not work as intended. To\n work around this, either use [`request.defaults`](#requestdefaultsoptions)\n with your pool options or create the pool object with the `maxSockets`\n property outside of the loop.\n- `timeout` - Integer containing the number of milliseconds to wait for a\nserver to send response headers (and start the response body) before aborting\nthe request. Note that if the underlying TCP connection cannot be established,\nthe OS-wide TCP connection timeout will overrule the `timeout` option ([the\ndefault in Linux can be anywhere from 20-120 seconds][linux-timeout]).\n\n[linux-timeout]: http://www.sekuda.com/overriding_the_default_linux_kernel_20_second_tcp_socket_connect_timeout\n\n---\n\n- `localAddress` - Local interface to bind for network connections.\n- `proxy` - An HTTP proxy to be used. Supports proxy Auth with Basic Auth, identical to support for the `url` parameter (by embedding the auth info in the `uri`)\n- `strictSSL` - If `true`, requires SSL certificates be valid. **Note:** to use your own certificate authority, you need to specify an agent that was created with that CA as an option.\n- `tunnel` - controls the behavior of\n [HTTP `CONNECT` tunneling](https://en.wikipedia.org/wiki/HTTP_tunnel#HTTP_CONNECT_tunneling)\n as follows:\n - `undefined` (default) - `true` if the destination is `https`, `false` otherwise\n - `true` - always tunnel to the destination by making a `CONNECT` request to\n the proxy\n - `false` - request the destination as a `GET` request.\n- `proxyHeaderWhiteList` - A whitelist of headers to send to a\n tunneling proxy.\n- `proxyHeaderExclusiveList` - A whitelist of headers to send\n exclusively to a tunneling proxy and not to destination.\n\n---\n\n- `time` - If `true`, the request-response cycle (including all redirects) is timed at millisecond resolution, and the result provided on the response's `elapsedTime` property.\n- `har` - A [HAR 1.2 Request Object](http://www.softwareishard.com/blog/har-12-spec/#request), will be processed from HAR format into options overwriting matching values *(see the [HAR 1.2 section](#support-for-har-1.2) for details)*\n\nThe callback argument gets 3 arguments:\n\n1. An `error` when applicable (usually from [`http.ClientRequest`](http://nodejs.org/api/http.html#http_class_http_clientrequest) object)\n2. An [`http.IncomingMessage`](http://nodejs.org/api/http.html#http_http_incomingmessage) object\n3. The third is the `response` body (`String` or `Buffer`, or JSON object if the `json` option is supplied)\n\n[back to top](#table-of-contents)\n\n\n---\n\n## Convenience methods\n\nThere are also shorthand methods for different HTTP METHODs and some other conveniences.\n\n\n### request.defaults(options)\n\nThis method **returns a wrapper** around the normal request API that defaults\nto whatever options you pass to it.\n\n**Note:** `request.defaults()` **does not** modify the global request API;\ninstead, it **returns a wrapper** that has your default settings applied to it.\n\n**Note:** You can call `.defaults()` on the wrapper that is returned from\n`request.defaults` to add/override defaults that were previously defaulted.\n\nFor example:\n```js\n//requests using baseRequest() will set the 'x-token' header\nvar baseRequest = request.defaults({\n headers: {'x-token': 'my-token'}\n})\n\n//requests using specialRequest() will include the 'x-token' header set in\n//baseRequest and will also include the 'special' header\nvar specialRequest = baseRequest.defaults({\n headers: {special: 'special value'}\n})\n```\n\n### request.put\n\nSame as `request()`, but defaults to `method: \"PUT\"`.\n\n```js\nrequest.put(url)\n```\n\n### request.patch\n\nSame as `request()`, but defaults to `method: \"PATCH\"`.\n\n```js\nrequest.patch(url)\n```\n\n### request.post\n\nSame as `request()`, but defaults to `method: \"POST\"`.\n\n```js\nrequest.post(url)\n```\n\n### request.head\n\nSame as `request()`, but defaults to `method: \"HEAD\"`.\n\n```js\nrequest.head(url)\n```\n\n### request.del\n\nSame as `request()`, but defaults to `method: \"DELETE\"`.\n\n```js\nrequest.del(url)\n```\n\n### request.get\n\nSame as `request()` (for uniformity).\n\n```js\nrequest.get(url)\n```\n### request.cookie\n\nFunction that creates a new cookie.\n\n```js\nrequest.cookie('key1=value1')\n```\n### request.jar()\n\nFunction that creates a new cookie jar.\n\n```js\nrequest.jar()\n```\n\n[back to top](#table-of-contents)\n\n\n---\n\n\n## Debugging\n\nThere are at least three ways to debug the operation of `request`:\n\n1. Launch the node process like `NODE_DEBUG=request node script.js`\n (`lib,request,otherlib` works too).\n\n2. Set `require('request').debug = true` at any time (this does the same thing\n as #1).\n\n3. Use the [request-debug module](https://github.com/request/request-debug) to\n view request and response headers and bodies.\n\n[back to top](#table-of-contents)\n\n\n---\n\n## Timeouts\n\nMost requests to external servers should have a timeout attached, in case the\nserver is not responding in a timely manner. Without a timeout, your code may\nhave a socket open/consume resources for minutes or more.\n\nThere are two main types of timeouts: **connection timeouts** and **read\ntimeouts**. A connect timeout occurs if the timeout is hit while your client is\nattempting to establish a connection to a remote machine (corresponding to the\n[connect() call][connect] on the socket). A read timeout occurs any time the\nserver is too slow to send back a part of the response.\n\nThese two situations have widely different implications for what went wrong\nwith the request, so it's useful to be able to distinguish them. You can detect\ntimeout errors by checking `err.code` for an 'ETIMEDOUT' value. Further, you\ncan detect whether the timeout was a connection timeout by checking if the\n`err.connect` property is set to `true`.\n\n```js\nrequest.get('http://10.255.255.1', {timeout: 1500}, function(err) {\n console.log(err.code === 'ETIMEDOUT');\n // Set to `true` if the timeout was a connection timeout, `false` or\n // `undefined` otherwise.\n console.log(err.connect === true);\n process.exit(0);\n});\n```\n\n[connect]: http://linux.die.net/man/2/connect\n\n## Examples:\n\n```js\n var request = require('request')\n , rand = Math.floor(Math.random()*100000000).toString()\n ;\n request(\n { method: 'PUT'\n , uri: 'http://mikeal.iriscouch.com/testjs/' + rand\n , multipart:\n [ { 'content-type': 'application/json'\n , body: JSON.stringify({foo: 'bar', _attachments: {'message.txt': {follows: true, length: 18, 'content_type': 'text/plain' }}})\n }\n , { body: 'I am an attachment' }\n ]\n }\n , function (error, response, body) {\n if(response.statusCode == 201){\n console.log('document saved as: http://mikeal.iriscouch.com/testjs/'+ rand)\n } else {\n console.log('error: '+ response.statusCode)\n console.log(body)\n }\n }\n )\n```\n\nFor backwards-compatibility, response compression is not supported by default.\nTo accept gzip-compressed responses, set the `gzip` option to `true`. Note\nthat the body data passed through `request` is automatically decompressed\nwhile the response object is unmodified and will contain compressed data if\nthe server sent a compressed response.\n\n```js\n var request = require('request')\n request(\n { method: 'GET'\n , uri: 'http://www.google.com'\n , gzip: true\n }\n , function (error, response, body) {\n // body is the decompressed response body\n console.log('server encoded the data as: ' + (response.headers['content-encoding'] || 'identity'))\n console.log('the decoded data is: ' + body)\n }\n ).on('data', function(data) {\n // decompressed data as it is received\n console.log('decoded chunk: ' + data)\n })\n .on('response', function(response) {\n // unmodified http.IncomingMessage object\n response.on('data', function(data) {\n // compressed data as it is received\n console.log('received ' + data.length + ' bytes of compressed data')\n })\n })\n```\n\nCookies are disabled by default (else, they would be used in subsequent requests). To enable cookies, set `jar` to `true` (either in `defaults` or `options`).\n\n```js\nvar request = request.defaults({jar: true})\nrequest('http://www.google.com', function () {\n request('http://images.google.com')\n})\n```\n\nTo use a custom cookie jar (instead of `request`’s global cookie jar), set `jar` to an instance of `request.jar()` (either in `defaults` or `options`)\n\n```js\nvar j = request.jar()\nvar request = request.defaults({jar:j})\nrequest('http://www.google.com', function () {\n request('http://images.google.com')\n})\n```\n\nOR\n\n```js\nvar j = request.jar();\nvar cookie = request.cookie('key1=value1');\nvar url = 'http://www.google.com';\nj.setCookie(cookie, url);\nrequest({url: url, jar: j}, function () {\n request('http://images.google.com')\n})\n```\n\nTo use a custom cookie store (such as a\n[`FileCookieStore`](https://github.com/mitsuru/tough-cookie-filestore)\nwhich supports saving to and restoring from JSON files), pass it as a parameter\nto `request.jar()`:\n\n```js\nvar FileCookieStore = require('tough-cookie-filestore');\n// NOTE - currently the 'cookies.json' file must already exist!\nvar j = request.jar(new FileCookieStore('cookies.json'));\nrequest = request.defaults({ jar : j })\nrequest('http://www.google.com', function() {\n request('http://images.google.com')\n})\n```\n\nThe cookie store must be a\n[`tough-cookie`](https://github.com/SalesforceEng/tough-cookie)\nstore and it must support synchronous operations; see the\n[`CookieStore` API docs](https://github.com/SalesforceEng/tough-cookie#cookiestore-api)\nfor details.\n\nTo inspect your cookie jar after a request:\n\n```js\nvar j = request.jar()\nrequest({url: 'http://www.google.com', jar: j}, function () {\n var cookie_string = j.getCookieString(url); // \"key1=value1; key2=value2; ...\"\n var cookies = j.getCookies(url);\n // [{key: 'key1', value: 'value1', domain: \"www.google.com\", ...}, ...]\n})\n```\n\n[back to top](#table-of-contents)\n",

|

|

103

|

+

"readmeFilename": "README.md",

|

|

103

104

|

"repository": {

|

|

104

105

|

"type": "git",

|

|

105

106

|

"url": "git+https://github.com/request/request.git"

|

|

@@ -66,7 +66,8 @@

|

|

|

66

66

|

],

|

|

67

67

|

"name": "glob",

|

|

68

68

|

"optionalDependencies": {},

|

|

69

|

-

"readme": "ERROR: No README data found!",

|

|

69

|

+