e2e-testing-agent 0.1.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/.env.example +3 -0

- package/LICENSE +21 -0

- package/README.md +89 -0

- package/e2e_agent/agent.ts +417 -0

- package/e2e_agent/index.ts +60 -0

- package/e2e_agent/memory.ts +110 -0

- package/e2e_agent/tools.ts +74 -0

- package/examples.test.ts +26 -0

- package/package.json +19 -0

- package/plasmidsaurus.test.ts +39 -0

- package/test_replays/842387e7ddf282738c06ea1d5139c46e.json +100 -0

- package/test_replays/fcfc76e7deac088da1aa2ef5ef7e47c6.json +315 -0

- package/test_replays/null/fcfc76e7deac088da1aa2ef5ef7e47c6.json +369 -0

- package/test_replays/plasmidsaurus.test.ts/842387e7ddf282738c06ea1d5139c46e.json +100 -0

- package/tsconfig.json +29 -0

package/.env.example

ADDED

package/LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2025 Swizec Teller
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

package/README.md

ADDED

|

@@ -0,0 +1,89 @@

|

|

|

1

|

+

# e2e-testing-agent

|

|

2

|

+

|

|

3

|

+

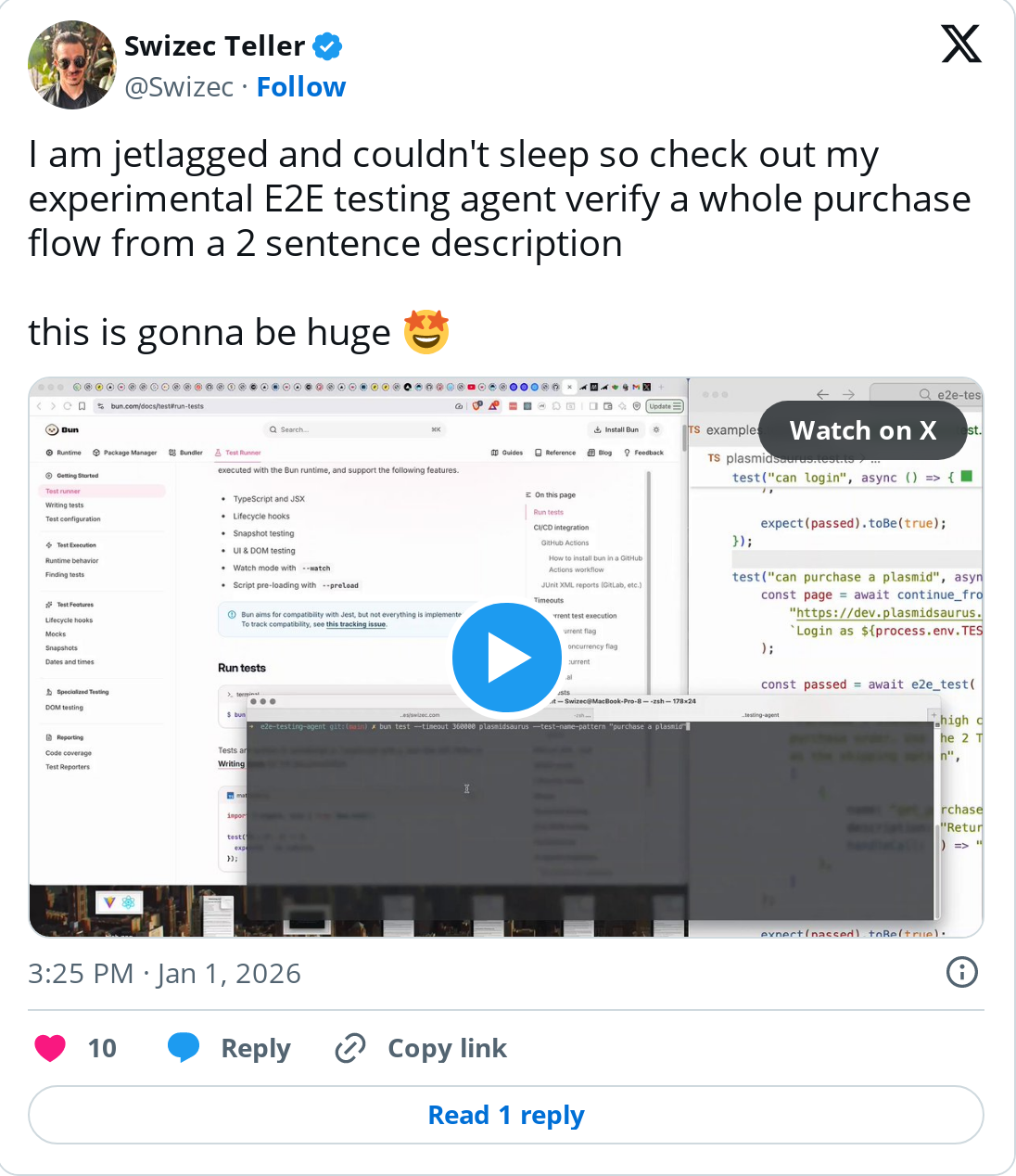

Here’s the idea:

|

|

4

|

+

1. You write plain language goal oriented specs

|

|

5

|

+

2. Agent looks at your UI and tries to follow the spec

|

|

6

|

+

3. Writes cheap-to-run playwright based tests

|

|

7

|

+

|

|

8

|

+

Goal is to make e2e testing ergonomic to use and cheap to update when things change.

|

|

9

|

+

|

|

10

|

+

## What it looks like

|

|

11

|

+

|

|

12

|

+

Here's an example of verifying a checkout flow. The agent figured it out from scratch on first run and recorded every action. Subsequent runs replay those actions on your page and check everything still works.

|

|

13

|

+

|

|

14

|

+

[](https://x.com/Swizec/status/2006748633425985776)

|

|

15

|

+

|

|

16

|

+

You specify the test in plain language. No fiddly Playwright commands, cumbersome testing code to write, or messing around with the DOM – your tests are fully declarative and goal oriented.

|

|

17

|

+

|

|

18

|

+

```typescript

|

|

19

|

+

test("can purchase a plasmid", async () => {

|

|

20

|

+

const page = await continue_from(

|

|

21

|

+

"https://dev.plasmidsaurus.com",

|

|

22

|

+

`Login as ${process.env.TEST_USER_EMAIL}. You'll see a welcome message upon successful login.`

|

|

23

|

+

);

|

|

24

|

+

|

|

25

|

+

const passed = await e2e_test(

|

|

26

|

+

page,

|

|

27

|

+

"Purchase 3 standard high concentration plasmids using a purchase order. Use the 2 Tower Place, San Francisco dropbox as the shipping option",

|

|

28

|

+

[

|

|

29

|

+

{

|

|

30

|

+

name: "get_purchase_order",

|

|

31

|

+

description: "Returns a valid purchase order PO number.",

|

|

32

|

+

handleCall: () => "PO34238124",

|

|

33

|

+

},

|

|

34

|

+

]

|

|

35

|

+

);

|

|

36

|

+

|

|

37

|

+

expect(passed).toBe(true);

|

|

38

|

+

});

|

|

39

|

+

```

|

|

40

|

+

|

|

41

|

+

## How to use

|

|

42

|

+

|

|

43

|

+

`e2e-testing-agent` is extremely early software. Use with caution and please report sharp edges. I have tested with `bun test` but the library should work with every test runner.

|

|

44

|

+

|

|

45

|

+

The core API consists of 2 functions:

|

|

46

|

+

|

|

47

|

+

### e2e_test

|

|

48

|

+

|

|

49

|

+

```typescript

|

|

50

|

+

e2e_test(

|

|

51

|

+

start_location: string | Page,

|

|

52

|

+

goal: string,

|

|

53

|

+

tools: ToolDefinition[] = []

|

|

54

|

+

): Promise<boolean>

|

|

55

|

+

```

|

|

56

|

+

|

|

57

|

+

Accepts a URL or open Playwright page as the starting point. The `goal` specifies what you want the agent to achieve.

|

|

58

|

+

|

|

59

|

+

Returns pass/fail as a boolean.

|

|

60

|

+

|

|

61

|

+

Uses agentic mode on first run then blindly repeats those steps on subsequent runs.

|

|

62

|

+

|

|

63

|

+

### continue_from

|

|

64

|

+

|

|

65

|

+

```typescript

|

|

66

|

+

continue_from(url: string, goal: string): Promise<Page>

|

|

67

|

+

```

|

|

68

|

+

|

|

69

|

+

Accepts url and goal that should match an existing test with a stored replay. Returns a page with those steps replayed.

|

|

70

|

+

|

|

71

|

+

This lets you reuse existing verified steps so your tests are cheaper to run. Similar idea as fixtures.

|

|

72

|

+

|

|

73

|

+

### Provide tools to the agent

|

|

74

|

+

|

|

75

|

+

The agent can use tools (function calls) to do work on the page. You can use this to provide custom values for certain actions or execute custom code that interacts with your system.

|

|

76

|

+

|

|

77

|

+

You pass tools as an array of objects to `e2e_test`:

|

|

78

|

+

|

|

79

|

+

```typescript

|

|

80

|

+

[

|

|

81

|

+

{

|

|

82

|

+

name: "get_password",

|

|

83

|

+

description: "Returns the password for the test user.",

|

|

84

|

+

handleCall: () => process.env.TEST_USER_PASSWORD || "",

|

|

85

|

+

},

|

|

86

|

+

]

|

|

87

|

+

```

|

|

88

|

+

|

|

89

|

+

Every tool needs a `name` and `description` that the agent can use to figure out which tools to use, and `handleCall` which is the function to execute.
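The tool contract described above can be sketched in a few lines of TypeScript. `e2e_agent/tools.ts` is listed in this diff but its contents aren't shown, so the `ToolDefinition` interface below is an assumption reconstructed from the README's description (`name`, `description`, `handleCall`), and `dispatchTool` is a hypothetical helper, not the package's `handleFunctionCalls`.

```typescript
// Assumed shape, inferred from the README; the real type lives in e2e_agent/tools.ts.
interface ToolDefinition {
  name: string; // identifier the model uses when it calls the tool
  description: string; // helps the model decide when the tool is relevant
  handleCall: (args?: Record<string, unknown>) => string; // produces the tool output
}

const tools: ToolDefinition[] = [
  {
    name: "get_purchase_order",
    description: "Returns a valid purchase order PO number.",
    handleCall: () => "PO34238124",
  },
];

// Hypothetical dispatcher: find the tool the model asked for and run it.
function dispatchTool(
  available: ToolDefinition[],
  name: string,
  args: Record<string, unknown> = {}
): string {
  const tool = available.find((t) => t.name === name);
  if (!tool) {
    throw new Error(`Unknown tool: ${name}`);
  }
  return tool.handleCall(args);
}

console.log(dispatchTool(tools, "get_purchase_order")); // PO34238124
```

In this sketch the lookup is by `name`, so names must be unique within the array.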

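The "agentic on the first run, blind replay afterwards" behaviour of `e2e_test` can be modelled in a few lines. This is a simplified, in-memory sketch, not the package's code: `runGoal`, `ReplayStore`, and the `explore`/`replay` callbacks are hypothetical stand-ins for the agent loop (`testFromScratch`) and `replayActions`. The real implementation persists actions as JSON files under `test_replays/` and, on both paths, also asks a model to verify the final state.

```typescript
// A recorded computer action, kept deliberately loose for this sketch.
type Action = { type: string; [key: string]: unknown };

// Hypothetical storage interface; a Map<string, Action[]> satisfies it.
interface ReplayStore {
  get(key: string): Action[] | undefined;
  set(key: string, actions: Action[]): unknown;
}

async function runGoal(
  url: string,
  goal: string,
  store: ReplayStore,
  explore: () => Promise<Action[]>, // stand-in for the agentic first run
  replay: (actions: Action[]) => Promise<void> // stand-in for blind replay
): Promise<"explored" | "replayed"> {
  // Same URL normalisation as memory.ts (trailing slash dropped), minus the hashing.
  const key = url.replace(/\/$/, "") + goal;
  const saved = store.get(key);
  if (saved) {
    await replay(saved);
    return "replayed";
  }
  const actions = await explore();
  store.set(key, actions);
  return "explored";
}

// Usage: the first call explores and records, the second replays.
const store = new Map<string, Action[]>();
const explore = async (): Promise<Action[]> => [{ type: "click", x: 10, y: 20 }];
const replay = async (actions: Action[]): Promise<void> => {
  console.log(`replaying ${actions.length} action(s)`);
};

(async () => {
  console.log(await runGoal("https://example.com/", "Log in", store, explore, replay)); // explored
  console.log(await runGoal("https://example.com", "Log in", store, explore, replay)); // replaying 1 action(s), then replayed
})();
```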

package/e2e_agent/agent.ts

ADDED

@@ -0,0 +1,417 @@
+import OpenAI from "openai";
+import { type Page } from "playwright";
+import {
+  builtinTools,
+  handleFunctionCalls,
+  toolsForModelCall,
+  type ToolDefinition,
+} from "./tools";
+import { getReplay, storeReplay } from "./memory";
+
+const openai = new OpenAI();
+
+async function handleModelAction(
+  page: Page,
+  action:
+    | OpenAI.Responses.ResponseComputerToolCall.Click
+    | OpenAI.Responses.ResponseComputerToolCall.DoubleClick
+    | OpenAI.Responses.ResponseComputerToolCall.Drag
+    | OpenAI.Responses.ResponseComputerToolCall.Keypress
+    | OpenAI.Responses.ResponseComputerToolCall.Move
+    | OpenAI.Responses.ResponseComputerToolCall.Screenshot
+    | OpenAI.Responses.ResponseComputerToolCall.Scroll
+    | OpenAI.Responses.ResponseComputerToolCall.Type
+    | OpenAI.Responses.ResponseComputerToolCall.Wait
+) {
+  // Given a computer action (e.g., click, double_click, scroll, etc.),
+  // execute the corresponding operation on the Playwright page.
+
+  const actionType = action.type;
+
+  try {
+    switch (actionType) {
+      case "click": {
+        const { x, y, button = "left" } = action;
+        console.log(
+          `Action: click at (${x}, ${y}) with button '${button}'`
+        );
+        await page.mouse.click(x, y, { button });
+        break;
+      }
+
+      case "scroll": {
+        const { x, y, scroll_x, scroll_y } = action;
+        console.log(
+          `Action: scroll at (${x}, ${y}) with offsets (scrollX=${scroll_x}, scrollY=${scroll_y})`
+        );
+        await page.mouse.move(x, y);
+        await page.evaluate(
+          `window.scrollBy(${scroll_x}, ${scroll_y})`
+        );
+        break;
+      }
+
+      case "keypress": {
+        const { keys } = action;
+        for (const k of keys) {
+          console.log(`Action: keypress '${k}'`);
+          // A simple mapping for common keys; expand as needed.
+          if (k.includes("ENTER")) {
+            await page.keyboard.press("Enter");
+          } else if (k.includes("SPACE")) {
+            await page.keyboard.press(" ");
+          } else if (k.includes("CMD")) {
+            await page.keyboard.press("ControlOrMeta");
+          } else {
+            await page.keyboard.press(k);
+          }
+        }
+        break;
+      }
+
+      case "type": {
+        const { text } = action;
+        console.log(`Action: type text '${text}'`);
+        await page.keyboard.type(text);
+        break;
+      }
+
+      case "wait": {
+        console.log(`Action: wait`);
+        await page.waitForTimeout(3000);
+        break;
+      }
+
+      case "screenshot": {
+        // Nothing to do as screenshot is taken at each turn
+        console.log(`Action: screenshot`);
+        break;
+      }
+
+      // Other action types (double_click, drag, move) are not handled yet.
+
+      default:
+        console.log("Unrecognized action:", action);
+    }
+  } catch (e) {
+    console.error("Error handling action", action, ":", e);
+  }
+}

+
+async function computerUseLoop(
+  page: Page,
+  response: OpenAI.Responses.Response,
+  availableTools: ToolDefinition[]
+): Promise<[OpenAI.Responses.Response, any[]]> {
+  /**
+   * Run the loop that executes computer actions and tool calls until no 'computer_call' or 'function_call' is found.
+   */
+  const computerCallStack = [];
+
+  while (true) {
+    console.debug(response.output);
+
+    const functionCallOutputs = handleFunctionCalls(
+      response,
+      availableTools
+    );
+
+    const computerCalls = response.output.filter(
+      (item) => item.type === "computer_call"
+    );
+
+    if (computerCalls.length === 0 && functionCallOutputs.length === 0) {
+      console.debug("No computer or tool calls found. Final output:");
+      console.debug(JSON.stringify(response.output, null, 2));
+
+      break; // Exit when the model issues no computer or function calls.
+    }
+
+    let hasNewAction = false;
+
+    if (computerCalls[0]) {
+      computerCallStack.push(computerCalls[0]);
+      hasNewAction = true;
+    }
+
+    // We expect at most one computer call per response.
+    const lastComputerCall =
+      computerCallStack[computerCallStack.length - 1];
+    const lastCallId = lastComputerCall?.call_id;
+    const action = lastComputerCall?.action;
+    const safetyChecks = lastComputerCall?.pending_safety_checks || [];
+
+    console.debug("safety checks", safetyChecks);
+
+    if (hasNewAction && action) {
+      // Execute the action and wait for it before taking the next screenshot.
+      await handleModelAction(page, action);
+      await new Promise((resolve) => setTimeout(resolve, 200)); // Allow time for changes to take effect.
+    }
+
+    // Take a screenshot after the action
+    const screenshotBytes = await page.screenshot();
+    const screenshotBase64 =
+      Buffer.from(screenshotBytes).toString("base64");
+
+    console.debug("Calling model with screenshot and tool outputs...");
+
+    // Send the screenshot back as a computer_call_output
+    response = await openai.responses.create({
+      model: "computer-use-preview",
+      previous_response_id: response.id,
+      tools: [
+        {
+          type: "computer_use_preview",
+          display_width: Number(process.env.DISPLAY_WIDTH),
+          display_height: Number(process.env.DISPLAY_HEIGHT),
+          environment: "browser",
+        },
+        ...toolsForModelCall(availableTools),
+      ],
+      input: [
+        {
+          call_id: lastCallId,
+          type: "computer_call_output",
+          acknowledged_safety_checks: safetyChecks,
+          output: {
+            type: "input_image",
+            image_url: `data:image/png;base64,${screenshotBase64}`,
+          },
+        },
+        ...functionCallOutputs,
+      ],
+      truncation: "auto",
+    });
+  }
+
+  return [response, computerCallStack];
+}

+
+async function verifyGoalAchieved(
+  response: OpenAI.Responses.Response,
+  goal: string
+): Promise<boolean> {
+  /**
+   * Verify if the goal has been achieved based on the model's final response.
+   */
+
+  console.debug(
+    "Verifying response against goal",
+    JSON.stringify(
+      [
+        {
+          role: "user",
+          content: `Based on the following information, did the agent successfully accomplish the goal: "${goal}"? Respond with "yes" or "no" only.`,
+        },
+        {
+          role: "user",
+          content: `Final agent response: ${JSON.stringify(
+            response.output,
+            null,
+            2
+          )}`,
+        },
+      ],
+      null,
+      2
+    )
+  );
+
+  const verificationResponse = await openai.responses.create({
+    model: "gpt-5-nano",
+    input: [
+      {
+        role: "user",
+        content: `Based on the following information, did the agent successfully accomplish the goal: "${goal}"? Respond with "yes" or "no" only.`,
+      },
+      {
+        role: "user",
+        content: `Final agent response: ${JSON.stringify(
+          response.output,
+          null,
+          2
+        )}`,
+      },
+    ],
+  });
+
+  console.debug(JSON.stringify(verificationResponse.output, null, 2));
+
+  const answer = verificationResponse.output
+    .map((item) => {
+      if (item.type === "message") {
+        return item.content
+          .filter((c) => c.type === "output_text")
+          .map((c) => c.text)
+          .join(" ")
+          .toString()
+          .toLowerCase();
+      }
+      return "";
+    })
+    .join(" ");
+
+  console.debug("Verification answer:", answer);
+  return answer.includes("yes") && !answer.includes("no");
+}
+
+async function verifyGoalAchievedFromScreenshot(
+  screenshotBase64: string,
+  goal: string
+): Promise<boolean> {
+  /**
+   * Verify if the goal has been achieved based on a screenshot of the final page state.
+   */
+
+  const verificationResponse = await openai.responses.create({
+    model: "gpt-5-nano",
+    input: [
+      {
+        role: "user",
+        content: [
+          {
+            type: "input_text",
+            text: `Based on the following information, did the agent successfully accomplish the goal: "${goal}"? Respond with "yes" or "no" only.`,
+          },
+          {
+            type: "input_image",
+            image_url: `data:image/png;base64,${screenshotBase64}`,
+            detail: "high",
+          },
+        ],
+      },
+    ],
+  });
+
+  console.debug(JSON.stringify(verificationResponse.output, null, 2));
+
+  const answer = verificationResponse.output
+    .map((item) => {
+      if (item.type === "message") {
+        return item.content
+          .filter((c) => c.type === "output_text")
+          .map((c) => c.text)
+          .join(" ")
+          .toString()
+          .toLowerCase();
+      }
+      return "";
+    })
+    .join(" ");
+
+  console.debug("Verification answer:", answer);
+  return answer.includes("yes") && !answer.includes("no");
+}

+
+export async function replayActions(
+  url: string,
+  goal: string,
+  page: Page
+): Promise<boolean> {
+  const computerCallStack = await getReplay(url, goal);
+
+  if (computerCallStack) {
+    console.debug(
+      `Restoring previous computer call stack with ${computerCallStack.length} actions...`
+    );
+
+    for (const call of computerCallStack) {
+      const action = call.action;
+      if (action) {
+        await handleModelAction(page, action);
+        await new Promise((resolve) => setTimeout(resolve, 500)); // Allow time for changes to take effect.
+      }
+    }
+  }
+
+  return !!computerCallStack;
+}
+
+async function takeScreenshotAsBase64(page: Page): Promise<string> {
+  const screenshotBytes = await page.screenshot();
+  const screenshotBase64 = Buffer.from(screenshotBytes).toString("base64");
+  return screenshotBase64;
+}
+
+export async function testFromReplay(
+  url: string,
+  goal: string,
+  page: Page
+): Promise<boolean> {
+  await replayActions(url, goal, page);
+  const screenshotBase64 = await takeScreenshotAsBase64(page);
+
+  const passed = await verifyGoalAchievedFromScreenshot(
+    screenshotBase64,
+    goal
+  );
+  // browser.close();
+
+  return passed;
+}
+
+export async function testFromScratch(
+  url: string,
+  goal: string,
+  page: Page,
+  tools: ToolDefinition[]
+): Promise<boolean> {
+  const openai = new OpenAI();
+  const availableTools: ToolDefinition[] = [...builtinTools, ...tools];
+
+  const screenshotBase64 = await takeScreenshotAsBase64(page);
+
+  const response = await openai.responses.create({
+    model: "computer-use-preview",
+    tools: [
+      {
+        type: "computer_use_preview",
+        display_width: Number(process.env.DISPLAY_WIDTH),
+        display_height: Number(process.env.DISPLAY_HEIGHT),
+        environment: "browser",
+      },
+      ...toolsForModelCall(availableTools),
+    ],
+    input: [
+      {
+        role: "system",
+        content:
+          "You are an autonomous agent running in a sandbox environment designed to test web applications. Use the browser and provided tools to accomplish the user's goal. Submit forms without asking the user for confirmation.",
+      },
+      {
+        role: "user",
+        content: [
+          {
+            type: "input_text",
+            text: `You are in a browser navigated to ${url}. ${goal}.`,
+          },
+          {
+            type: "input_image",
+            image_url: `data:image/png;base64,${screenshotBase64}`,
+            detail: "high",
+          },
+        ],
+      },
+    ],
+    reasoning: {
+      summary: "concise",
+    },
+    truncation: "auto",
+  });
+
+  const [finalResponse, computerCallStack] = await computerUseLoop(
+    page,
+    response,
+    availableTools
+  );
+
+  await storeReplay(url, goal, computerCallStack);
+
+  // browser.close();
+
+  console.debug("Verifying goal achieved");
+  const passed = await verifyGoalAchieved(finalResponse, goal);
+
+  return passed;
+}
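Both verification helpers in `agent.ts` reduce the verifier's reply to a boolean with `answer.includes("yes") && !answer.includes("no")`. Because that is a substring test, a reply such as "yes, nothing blocked the checkout" counts as a failure ("nothing" contains "no"). A stricter alternative, offered as a hypothetical sketch rather than the package's behaviour, reads only the first word and reports anything else as unparseable:

```typescript
// Hypothetical stricter parser: look only at the first word of the reply.
function parseYesNo(reply: string): boolean | null {
  const first = reply.trim().toLowerCase().match(/^[a-z]+/)?.[0];
  if (first === "yes") return true;
  if (first === "no") return false;
  return null; // unexpected format; the caller decides how to treat it
}

// The substring check from agent.ts, for comparison (expects lower-cased input).
const substringCheck = (answer: string): boolean =>
  answer.includes("yes") && !answer.includes("no");

console.log(substringCheck("yes, nothing blocked the checkout")); // false
console.log(parseYesNo("Yes, nothing blocked the checkout")); // true
console.log(parseYesNo("No.")); // false
console.log(parseYesNo("I cannot tell")); // null
```

Returning `null` lets a caller choose whether an off-format reply should fail the test or trigger a retry.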

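The `keypress` branch of `handleModelAction` presses each entry of `keys` one after another. OpenAI's type definitions describe `keys` as the combination of keys the model wants pressed, so a chord such as `["CTRL", "A"]` arrives as two separate presses. Playwright's `keyboard.press` accepts chords joined with `+` (for example `"Control+A"`), so one option is to build a single chord string. The upper-case names in `KEY_MAP` are assumptions based on the `ENTER`/`SPACE`/`CMD` checks in the code, and `toPlaywrightChord` is a hypothetical helper:

```typescript
// Assumed model key names (upper-case) mapped to Playwright key names.
const KEY_MAP: { [name: string]: string | undefined } = {
  ENTER: "Enter",
  SPACE: " ",
  TAB: "Tab",
  ESC: "Escape",
  BACKSPACE: "Backspace",
  CTRL: "Control",
  CMD: "ControlOrMeta",
  SHIFT: "Shift",
  ALT: "Alt",
  ARROWUP: "ArrowUp",
  ARROWDOWN: "ArrowDown",
  ARROWLEFT: "ArrowLeft",
  ARROWRIGHT: "ArrowRight",
};

// Hypothetical helper: map each key, then join them into one Playwright chord.
// Unknown names (e.g. single characters) are passed through unchanged.
function toPlaywrightChord(keys: string[]): string {
  return keys.map((k) => KEY_MAP[k.toUpperCase()] ?? k).join("+");
}

console.log(toPlaywrightChord(["CTRL", "A"])); // Control+A
console.log(toPlaywrightChord(["enter"])); // Enter
// Inside handleModelAction this would replace the per-key loop:
//   await page.keyboard.press(toPlaywrightChord(action.keys));
```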

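`handleModelAction` accepts `double_click`, `move`, and `drag` in its parameter type but sends them to the `default` branch, which only logs "Unrecognized action", so recorded replays skip them. A sketch of how they could map onto Playwright's `page.mouse` methods (`dblclick`, `move`, `down`, `up`) follows. The action shapes (`x`/`y`, and a `path` of points for `drag`) are modelled on OpenAI's computer-use action types, `handleExtraAction` is hypothetical, and `MouseLike` is a local stand-in so the example runs against a recording mock instead of a browser.

```typescript
// The subset of Playwright's Mouse API used below; page.mouse satisfies it.
interface MouseLike {
  move(x: number, y: number): Promise<void>;
  down(): Promise<void>;
  up(): Promise<void>;
  dblclick(x: number, y: number): Promise<void>;
}

// Assumed shapes of the actions handleModelAction does not handle yet.
type ExtraAction =
  | { type: "double_click"; x: number; y: number }
  | { type: "move"; x: number; y: number }
  | { type: "drag"; path: { x: number; y: number }[] };

async function handleExtraAction(mouse: MouseLike, action: ExtraAction): Promise<void> {
  switch (action.type) {
    case "double_click":
      await mouse.dblclick(action.x, action.y);
      break;
    case "move":
      await mouse.move(action.x, action.y);
      break;
    case "drag": {
      // Press at the first point, move through the rest, release at the last.
      const [start, ...rest] = action.path;
      if (!start) return;
      await mouse.move(start.x, start.y);
      await mouse.down();
      for (const point of rest) {
        await mouse.move(point.x, point.y);
      }
      await mouse.up();
      break;
    }
  }
}

// Recording mock: logs calls instead of driving a browser.
const calls: string[] = [];
const mock: MouseLike = {
  move: async (x, y) => {
    calls.push(`move ${x},${y}`);
  },
  down: async () => {
    calls.push("down");
  },
  up: async () => {
    calls.push("up");
  },
  dblclick: async (x, y) => {
    calls.push(`dblclick ${x},${y}`);
  },
};

(async () => {
  await handleExtraAction(mock, { type: "drag", path: [{ x: 10, y: 10 }, { x: 200, y: 40 }] });
  console.log(calls.join(" | ")); // move 10,10 | down | move 200,40 | up
})();
```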

package/e2e_agent/index.ts

ADDED

@@ -0,0 +1,60 @@
+import { chromium, type Page } from "playwright";
+import { type ToolDefinition } from "./tools";
+import { canReplayActions } from "./memory";
+import { replayActions, testFromReplay, testFromScratch } from "./agent";
+
+async function openBrowser(url: string): Promise<Page> {
+  const browser = await chromium.launch({
+    headless: false,
+    chromiumSandbox: true,
+    env: {},
+    args: ["--disable-extensions", "--disable-filesystem"],
+  });
+
+  const displayWidth = Number(process.env.DISPLAY_WIDTH);
+  const displayHeight = Number(process.env.DISPLAY_HEIGHT);
+
+  const page = await browser.newPage();
+  await page.setViewportSize({
+    width: displayWidth,
+    height: displayHeight,
+  });
+  await page.goto(url);
+
+  return page;
+}
+
+export async function continue_from(url: string, goal: string): Promise<Page> {
+  const page = await openBrowser(url);
+  const replayed = await replayActions(url, goal, page);
+
+  if (!replayed) {
+    throw new Error(
+      "No saved computer call stack found for the given URL and goal."
+    );
+  }
+
+  return page;
+}
+
+export async function e2e_test(
+  start_location: string | Page,
+  goal: string,
+  tools: ToolDefinition[] = []
+): Promise<boolean> {
+  let page: Page;
+
+  if (typeof start_location === "string") {
+    page = await openBrowser(start_location);
+  } else {
+    page = start_location;
+  }
+
+  const startUrl = page.url();
+
+  if (await canReplayActions(startUrl, goal)) {
+    return await testFromReplay(startUrl, goal, page);
+  } else {
+    return await testFromScratch(startUrl, goal, page, tools);
+  }
+}
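`openBrowser` (and both model calls in `agent.ts`) read the viewport size with `Number(process.env.DISPLAY_WIDTH)` and `Number(process.env.DISPLAY_HEIGHT)`. If a variable is missing, `Number(undefined)` is `NaN`, and that value flows into `setViewportSize` and the `computer_use_preview` tool configuration. The contents of `.env.example` aren't shown in this diff, so the expected values are unknown here; a hypothetical guard that fails fast with a readable message could look like this:

```typescript
// Hypothetical guard: parse a positive integer, or fail with a readable error.
function readDimension(name: string, raw: string | undefined): number {
  const value = Number(raw);
  if (!Number.isInteger(value) || value <= 0) {
    throw new Error(`${name} must be a positive integer, got ${JSON.stringify(raw)}`);
  }
  return value;
}

console.log(Number(undefined)); // NaN
console.log(readDimension("DISPLAY_WIDTH", "1280")); // 1280
```

In `openBrowser` it would be called as `readDimension("DISPLAY_WIDTH", process.env.DISPLAY_WIDTH)`, so a misconfigured environment surfaces before a browser is launched.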


package/e2e_agent/memory.ts

ADDED

@@ -0,0 +1,110 @@
+import fs from "fs/promises";
+import path from "path";
+import crypto from "crypto";
+
+function getCallingTestFilename() {
+  const err = new Error();
+  const stack = err.stack?.split("\n") ?? [];
+
+  // Skip internal frames until we leave node_modules / your framework
+  const frame = stack.find(
+    (line) =>
+      // prefer test/spec files but skip node_modules
+      !line.includes("node_modules") &&
+      (line.includes(".test") || line.includes(".spec"))
+  );
+
+  const callingTestInfo = frame?.trim();
+
+  // Examples of frames we expect:
+  //   at async <anonymous> (/path/to/file.test.ts:5:26)
+  //   at /path/to/file.test.ts:5:26
+  const m =
+    callingTestInfo?.match(/\((.*?):(\d+):(\d+)\)$/) ||
+    callingTestInfo?.match(/at (.*?):(\d+):(\d+)$/);
+
+  const filename = m?.[1] ?? null;
+
+  // Return only the basename (file name with extension), not the full path
+  const base = filename ? path.basename(filename) : null;
+
+  return base;
+}
+
+function getFilename(url: string, goal: string): string {
+  const hash = crypto
+    .createHash("md5")
+    .update(url.replace(/\/$/, "") + goal)
+    .digest("hex");
+
+  // const testFilename = getCallingTestFilename();
+  // TODO: use more human readable filenames
+  const filename = `test_replays/${hash}.json`;
+
+  return filename;
+}
+
+export async function storeReplay(
+  url: string,
+  goal: string,
+  computerCallStack: any[]
+) {
+  /**
+   * Store the computer call stack for future reference.
+   */
+  const filename = getFilename(url, goal);
+
+  // Ensure the directory for the filename exists before writing.
+  const dir = path.dirname(filename);
+  await fs.mkdir(dir, { recursive: true });
+
+  await fs.writeFile(
+    filename,
+    JSON.stringify(
+      {
+        url,
+        goal,
+        computerCallStack,
+      },
+      null,
+      2
+    )
+  );
+}
+
+export async function getReplay(
+  url: string,
+  goal: string
+): Promise<any[] | null> {
+  /**
+   * Restore the computer call stack if it exists.
+   */
+  const filename = getFilename(url, goal);
+
+  console.debug({ url, goal, filename });
+
+  try {
+    const data = await fs.readFile(filename, "utf-8");
+    const parsed = JSON.parse(data);
+    return parsed.computerCallStack;
+  } catch (e) {
+    return null;
+  }
+}
+
+export async function canReplayActions(
+  url: string,
+  goal: string
+): Promise<boolean> {
+  /**
+   * Check if a replay exists for the given URL and goal.
+   */
+  const filename = getFilename(url, goal);
+
+  try {
+    await fs.access(filename);
+    return true;
+  } catch (e) {
+    return false;
+  }
+}
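`getFilename` is what ties a replay to a test: the key is the MD5 hash of the URL (with one trailing slash removed) concatenated with the goal text. The standalone re-implementation below uses Node's `createHash`, as `memory.ts` does; `replayPath` is an illustrative name. It shows two consequences: a trailing slash on the URL doesn't matter, but any edit to the goal wording produces a new key, so the old replay is ignored and the next run falls back to agentic mode. Because there is no separator between URL and goal, two different pairs can also map to the same file, which is unlikely in practice but worth knowing.

```typescript
import { createHash } from "node:crypto";

// Same key derivation as getFilename in memory.ts.
function replayPath(url: string, goal: string): string {
  const hash = createHash("md5")
    .update(url.replace(/\/$/, "") + goal)
    .digest("hex");
  return `test_replays/${hash}.json`;
}

const base = replayPath("https://dev.plasmidsaurus.com/", "Log in");
console.log(base === replayPath("https://dev.plasmidsaurus.com", "Log in")); // true
console.log(base === replayPath("https://dev.plasmidsaurus.com", "Log in.")); // false
// No separator: both pairs hash the string "https://a.test/xyz".
console.log(replayPath("https://a.test/x", "yz") === replayPath("https://a.test/xy", "z")); // true
```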

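`getCallingTestFilename` is currently unused (its call in `getFilename` is commented out), but its parsing step is easy to check in isolation. The pure function below applies the same frame filter and the same two regular expressions to a stack string passed in directly. `testFileFromStack` is a hypothetical name, and the last step slices after the final `/` instead of calling `path.basename`, so it assumes POSIX-style paths.

```typescript
function testFileFromStack(stack: string): string | null {
  // First frame from a test/spec file outside node_modules.
  const frame = stack
    .split("\n")
    .find(
      (line) =>
        !line.includes("node_modules") &&
        (line.includes(".test") || line.includes(".spec"))
    );
  const info = frame?.trim();
  // Matches "at fn (/path/file.test.ts:5:26)" or "at /path/file.test.ts:5:26".
  const m =
    info?.match(/\((.*?):(\d+):(\d+)\)$/) ||
    info?.match(/at (.*?):(\d+):(\d+)$/);
  const filename = m?.[1] ?? null;
  // Basename for POSIX-style paths (stand-in for path.basename).
  return filename ? filename.slice(filename.lastIndexOf("/") + 1) : null;
}

const sample = [
  "Error",
  "    at getCallingTestFilename (/app/node_modules/e2e-testing-agent/e2e_agent/memory.ts:6:15)",
  "    at async <anonymous> (/app/plasmidsaurus.test.ts:5:26)",
].join("\n");

console.log(testFileFromStack(sample)); // plasmidsaurus.test.ts
console.log(testFileFromStack("    at /app/examples.test.ts:12:3")); // examples.test.ts
console.log(testFileFromStack("Error\n    at main (/app/index.ts:1:1)")); // null
```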