commitmind 1.0.1 → 1.0.3


- package/README.md +176 -6

- package/bun.lock +3 -0

- package/images/ss1.png +0 -0

- package/package.json +3 -2

- package/src/config.ts +26 -0

- package/src/index.ts +56 -9

- package/src/ollama.ts +23 -13

- package/src/selectModel.ts +46 -0

package/README.md

CHANGED

|

@@ -1,15 +1,185 @@

|

|

|

1

|

-

#

|

|

1

|

+

# CommitMind

|

|

2

2

|

|

|

3

|

-

|

|

3

|

+

|

|

4

|

+

|

|

5

|

+

|

|

6

|

+

|

|

7

|

+

**CommitMind** is an AI-powered Git CLI that automatically generates clean, meaningful commit messages using local LLMs via **Ollama**.

|

|

8

|

+

|

|

9

|

+

Stop wasting time writing commit messages. Let AI handle it — fast, private, and fully local.

|

|

10

|

+

|

|

11

|

+

---

|

|

12

|

+

|

|

13

|

+

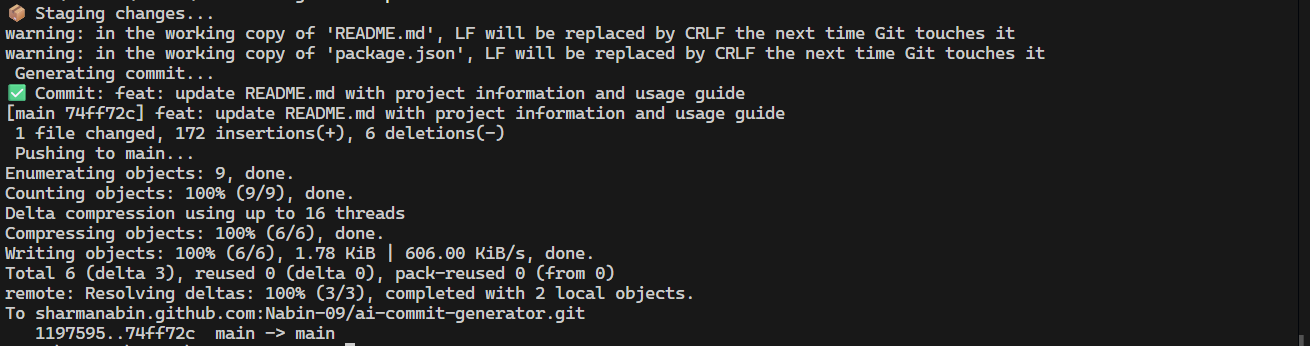

## Demo

|

|

14

|

+

|

|

15

|

+

|

|

16

|

+

|

|

17

|

+

## Features

|

|

18

|

+

|

|

19

|

+

* AI-generated semantic commit messages

|

|

20

|

+

* Fast and local (no cloud, no API keys)

|

|

21

|

+

* Privacy-first — your code never leaves your machine

|

|

22

|

+

* Auto stage, commit, and push

|

|

23

|

+

* Conventional commit format

|

|

24

|

+

* Works in any Git project

|

|

25

|

+

* Cross-platform (Windows, Mac, Linux)

|

|

26

|

+

|

|

27

|

+

---

|

|

28

|

+

|

|

29

|

+

## Installation

|

|

30

|

+

|

|

31

|

+

Install globally:

|

|

32

|

+

|

|

33

|

+

```bash

|

|

34

|

+

npm install -g commitmind

|

|

35

|

+

```

|

|

36

|

+

|

|

37

|

+

Or with Bun:

|

|

38

|

+

|

|

39

|

+

```bash

|

|

40

|

+

bun add -g commitmind

|

|

41

|

+

```

|

|

42

|

+

|

|

43

|

+

---

|

|

44

|

+

|

|

45

|

+

## Requirements

|

|

46

|

+

|

|

47

|

+

CommitMind uses **Ollama** to run AI locally.

|

|

48

|

+

|

|

49

|

+

### Install Ollama

|

|

50

|

+

|

|

51

|

+

Download from:

|

|

52

|

+

👉 https://ollama.com

|

|

53

|

+

|

|

54

|

+

### Start Ollama

|

|

55

|

+

|

|

56

|

+

```bash

|

|

57

|

+

ollama serve

|

|

58

|

+

```

|

|

59

|

+

|

|

60

|

+

### Pull the model

|

|

61

|

+

|

|

62

|

+

```bash

|

|

63

|

+

ollama pull llama3

|

|

64

|

+

```

|

|

65

|

+

|

|

66

|

+

---

|

|

67

|

+

|

|

68

|

+

## Usage

|

|

69

|

+

|

|

70

|

+

### Auto generate commit message

|

|

71

|

+

|

|

72

|

+

```bash

|

|

73

|

+

aic auto

|

|

74

|

+

```

|

|

75

|

+

|

|

76

|

+

This will:

|

|

77

|

+

|

|

78

|

+

1. Stage all changes

|

|

79

|

+

2. Generate an AI commit message

|

|

80

|

+

3. Commit automatically

|

|

81

|

+

|

|

82

|

+

---

|

|

83

|

+

|

|

84

|

+

### Commit and push

|

|

4

85

|

|

|

5

86

|

```bash

|

|

6

|

-

|

|

87

|

+

aic push main

|

|

7

88

|

```

|

|

8

89

|

|

|

9

|

-

|

|

90

|

+

This will:

|

|

91

|

+

|

|

92

|

+

1. Stage changes

|

|

93

|

+

2. Generate commit

|

|

94

|

+

3. Commit

|

|

95

|

+

4. Push to branch

|

|

96

|

+

|

|

97

|

+

---

|

|

98

|

+

|

|

99

|

+

## Example Workflow

|

|

10

100

|

|

|

11

101

|

```bash

|

|

12

|

-

|

|

102

|

+

git checkout -b feature-auth

|

|

103

|

+

# make changes

|

|

104

|

+

aic auto

|

|

105

|

+

aic push main

|

|

13

106

|

```

|

|

14

107

|

|

|

15

|

-

|

|

108

|

+

---

|

|

109

|

+

|

|

110

|

+

## Why CommitMind?

|

|

111

|

+

|

|

112

|

+

Writing commit messages is repetitive and often ignored.

|

|

113

|

+

CommitMind helps maintain:

|

|

114

|

+

|

|

115

|

+

* Clean project history

|

|

116

|

+

* Semantic commits

|

|

117

|

+

* Faster workflow

|

|

118

|

+

* Better collaboration

|

|

119

|

+

|

|

120

|

+

All while keeping your data private.

|

|

121

|

+

|

|

122

|

+

---

|

|

123

|

+

|

|

124

|

+

## Privacy First

|

|

125

|

+

|

|

126

|

+

CommitMind runs entirely on your machine using local models.

|

|

127

|

+

Your source code is never sent to external servers.

|

|

128

|

+

|

|

129

|

+

---

|

|

130

|

+

|

|

131

|

+

## Tech Stack

|

|

132

|

+

|

|

133

|

+

* Node.js CLI

|

|

134

|

+

* TypeScript

|

|

135

|

+

* Ollama (Local LLM)

|

|

136

|

+

* Conventional commits

|

|

137

|

+

|

|

138

|

+

---

|

|

139

|

+

|

|

140

|

+

## Roadmap

|

|

141

|

+

|

|

142

|

+

* Interactive commit preview

|

|

143

|

+

* Smart commit type detection

|

|

144

|

+

* Git hooks integration

|

|

145

|

+

* VS Code extension

|

|

146

|

+

* Multi-model support

|

|

147

|

+

* Config file

|

|

148

|

+

* Streaming AI output

|

|

149

|

+

|

|

150

|

+

---

|

|

151

|

+

|

|

152

|

+

## Contributing

|

|

153

|

+

|

|

154

|

+

Contributions are welcome!

|

|

155

|

+

|

|

156

|

+

1. Fork the repo

|

|

157

|

+

2. Create a feature branch

|

|

158

|

+

3. Submit a pull request

|

|

159

|

+

|

|

160

|

+

---

|

|

161

|

+

|

|

162

|

+

## Support

|

|

163

|

+

|

|

164

|

+

If you like this project:

|

|

165

|

+

|

|

166

|

+

* Star it on GitHub

|

|

167

|

+

* Share with your friends

|

|

168

|

+

* Give feedback

|

|

169

|

+

|

|

170

|

+

---

|

|

171

|

+

|

|

172

|

+

## License

|

|

173

|

+

|

|

174

|

+

MIT © Nabin Sharma

|

|

175

|

+

|

|

176

|

+

---

|

|

177

|

+

|

|

178

|

+

## Links

|

|

179

|

+

|

|

180

|

+

* GitHub: https://github.com/Nabin-09/ai-commit-generator

|

|

181

|

+

* Issues: https://github.com/Nabin-09/ai-commit-generator/issues

|

|

182

|

+

|

|

183

|

+

---

|

|

184

|

+

|

|

185

|

+

Made with ❤️ for developers.

|
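The README above lists "Conventional commit format" as a feature. As a rough illustration of what that format looks like, here is a minimal sketch of a header check. The `HEADER` pattern and `isConventionalHeader` are hypothetical names, not part of the package, and the pattern is a simplification (lowercase types only) rather than a full implementation of the convention.

```typescript
// Assumed header shape: type, optional (scope), optional "!", then ": description".
// This is a simplified pattern for illustration, not the package's own logic.
const HEADER = /^([a-z]+)(\([^()\s]+\))?(!)?: (\S.*)$/;

// Hypothetical helper: checks only the first line of a commit message.
function isConventionalHeader(message: string): boolean {
  const firstLine = message.split("\n")[0] ?? "";
  return HEADER.test(firstLine);
}

console.log(isConventionalHeader("feat(auth): add login flow"));
console.log(isConventionalHeader("updated some stuff"));
```

A check like this could, in principle, be run on the model's output before committing, since a local LLM is not guaranteed to follow the requested format.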

package/bun.lock

CHANGED

@@ -6,6 +6,7 @@
 "name": "ai-commit-gen",
 "dependencies": {
   "commitmind": "^1.0.0",
+  "readline": "^1.3.0",
 },
 "devDependencies": {
   "@types/node": "^25.3.0",
@@ -18,6 +19,8 @@
 
 "commitmind": ["commitmind@1.0.0", "", { "bin": { "aic": "dist/index.js" } }, "sha512-yxLwQAf4QV8cLAs5ObPoLmf0Qc1mE5v1t0BmD9rCKboSesy2S1ExQp2cngd/4xjPefy6X3SX/ysvlIj+nSXlmA=="],
 
+"readline": ["readline@1.3.0", "", {}, "sha512-k2d6ACCkiNYz222Fs/iNze30rRJ1iIicW7JuX/7/cozvih6YCkFZH+J6mAFDVgv0dRBaAyr4jDqC95R2y4IADg=="],
+
 "typescript": ["typescript@5.9.3", "", { "bin": { "tsc": "bin/tsc", "tsserver": "bin/tsserver" } }, "sha512-jl1vZzPDinLr9eUt3J/t7V6FgNEw9QjvBPdysz9KfQDD41fQrC2Y4vKQdiaUpFT4bXlb1RHhLpp8wtm6M5TgSw=="],
 
 "undici-types": ["undici-types@7.18.2", "", {}, "sha512-AsuCzffGHJybSaRrmr5eHr81mwJU3kjw6M+uprWvCXiNeN9SOGwQ3Jn8jb8m3Z6izVgknn1R0FTCEAP2QrLY/w=="],

package/images/ss1.png

ADDED

Binary file

package/package.json

CHANGED

@@ -1,6 +1,6 @@
 {
   "name": "commitmind",
-  "version": "1.0.
+  "version": "1.0.3",
   "author": "Nabin Sharma",
   "bin": {
     "aic": "./dist/index.js",
@@ -24,6 +24,7 @@
     "typescript": "^5.9.3"
   },
   "dependencies": {
-    "commitmind": "^1.0.0"
+    "commitmind": "^1.0.0",
+    "readline": "^1.3.0"
   }
 }

package/src/config.ts

ADDED

@@ -0,0 +1,26 @@
+import { existsSync, mkdirSync , readFileSync , writeFileSync } from "node:fs";
+import { homedir } from "node:os";
+import path from "node:path";
+
+
+const CONFIG_DIR = path.join(homedir() , '.commitmind')
+const CONFIG_FILE = path.join(CONFIG_DIR , 'config.json')
+
+type Config = {
+  model : string;
+};
+
+export function getConfig() : Config | null{
+  try{
+    if(!existsSync(CONFIG_FILE)) return null;
+    return JSON.parse(readFileSync(CONFIG_FILE , 'utf-8'));
+  }catch(err){
+    return null;
+  }
+}
+export function saveConfig(config: Config) {
+  if (!existsSync(CONFIG_DIR)) {
+    mkdirSync(CONFIG_DIR);
+  }
+  writeFileSync(CONFIG_FILE, JSON.stringify(config, null, 2));
+}
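The new `config.ts` stores the chosen model as JSON under `~/.commitmind/config.json` and returns `null` when the file is missing or unreadable. The sketch below shows the same round trip with two changes that are assumptions of this example, not part of the diff: the directory is passed in as a parameter so the behavior can be exercised in a temporary folder, and the parsed JSON is checked for a string `model` field before it is trusted. The names `isConfig`, `loadConfig`, and `storeConfig` are hypothetical.

```typescript
import { existsSync, mkdirSync, mkdtempSync, readFileSync, writeFileSync } from "node:fs";
import { tmpdir } from "node:os";
import path from "node:path";

type Config = { model: string };

// Hypothetical guard: narrows parsed JSON to Config instead of trusting its shape.
function isConfig(value: unknown): value is Config {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { model?: unknown }).model === "string"
  );
}

// Same idea as getConfig() in the diff, with the directory as a parameter.
function loadConfig(dir: string): Config | null {
  const file = path.join(dir, "config.json");
  if (!existsSync(file)) return null;
  try {
    const parsed: unknown = JSON.parse(readFileSync(file, "utf-8"));
    return isConfig(parsed) ? parsed : null;
  } catch {
    return null; // unreadable or malformed JSON is treated as "no config"
  }
}

// Same idea as saveConfig(); `recursive: true` also creates missing parent folders
// and does nothing if the directory already exists.
function storeConfig(dir: string, config: Config): void {
  mkdirSync(dir, { recursive: true });
  writeFileSync(path.join(dir, "config.json"), JSON.stringify(config, null, 2));
}

// Usage: round-trip through a throwaway directory.
const demoDir = path.join(mkdtempSync(path.join(tmpdir(), "commitmind-")), ".commitmind");
storeConfig(demoDir, { model: "llama3.1:latest" });
console.log(loadConfig(demoDir));
```

The shape check matters mostly for hand-edited files: without it, a config such as `{"model": 42}` would be returned as if it were valid.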

package/src/index.ts

CHANGED

@@ -2,9 +2,31 @@
 
 import { stageAll, getDiff, commit, push } from "./git.js";
 import { generateCommit } from "./ollama.js";
+import { getConfig , saveConfig } from "./config.js";
+import { selectModel } from "./selectModel.js";
 
+
+async function resolveModel(cliModel?: string): Promise<string> {
+  // 1. CLI flag wins (old users safe)
+  if (cliModel) {
+    saveConfig({ model: cliModel });
+    return cliModel;
+  }
+
+
+  const config = getConfig();
+  if (config?.model) return config.model;
+
+  if (!process.stdout.isTTY) {
+    return "llama3.1:latest";
+  }
+
+  console.log("No model configured.");
+  return await selectModel();
+}
 async function main() {
-  const
+  const args = process.argv.slice(2);
+  const command = args[0];
 
   if (!command) {
     console.log(`
@@ -12,6 +34,10 @@ Usage:
 aic auto
 aic push <branch>
 
+Model:
+aic model set
+aic model get
+
 Examples:
 aic auto
 aic push main
@@ -20,24 +46,45 @@ aic push main
   }
 
 
+  if (command === "model") {
+    const sub = args[1];
+
+    if (sub === "set") {
+      await selectModel();
+      return;
+    }
+
+    if (sub === "get") {
+      const config = getConfig();
+      console.log("Current model:", config?.model || "Not set");
+      return;
+    }
+  }
+
+  // -------- model flag --------
+  let cliModel: string | undefined;
+  const modelIndex = args.indexOf("--model");
+  if (modelIndex !== -1 && args[modelIndex + 1]) {
+    cliModel = args[modelIndex + 1];
+  }
+
+  const model = await resolveModel(cliModel);
+
   console.log("📦 Staging changes...");
   await stageAll();
 
   const diff = await getDiff();
 
+  console.log(`Generating commit using ${model}...`);
+  const message = await generateCommit(diff, model);
 
-  console.log("
-  const message = await generateCommit(diff);
-
-  console.log("✅ Commit:", message);
-
+  console.log("Commit:", message);
 
   await commit(message);
 
-
   if (command === "push") {
-    const branch =
-    console.log(`
+    const branch = args[1] || "main";
+    console.log(`Pushing to ${branch}...`);
     await push(branch);
   }
 }
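The `index.ts` changes above resolve the model in a fixed order: a `--model` flag first, then the saved config, then a hard-coded fallback when stdout is not a TTY, and finally an interactive prompt. The sketch below restates that order as a pure function so it can be read and tested without a terminal. `pickModel`, `Resolution`, and `FALLBACK_MODEL` are hypothetical names introduced for illustration; `parseModelFlag` mirrors the flag lookup in the diff.

```typescript
// Fallback string copied from the diff; the constant name is an assumption.
const FALLBACK_MODEL = "llama3.1:latest";

// Mirrors the diff: the value after "--model", if present and non-empty.
function parseModelFlag(args: string[]): string | undefined {
  const i = args.indexOf("--model");
  return i !== -1 && args[i + 1] ? args[i + 1] : undefined;
}

type Resolution =
  | { kind: "model"; model: string; source: "flag" | "config" | "fallback" }
  | { kind: "prompt" };

// Hypothetical pure version of the lookup order in resolveModel().
function pickModel(
  cliModel: string | undefined,
  savedModel: string | undefined,
  interactive: boolean
): Resolution {
  if (cliModel) return { kind: "model", model: cliModel, source: "flag" };
  if (savedModel) return { kind: "model", model: savedModel, source: "config" };
  if (!interactive) return { kind: "model", model: FALLBACK_MODEL, source: "fallback" };
  return { kind: "prompt" }; // caller would show the model menu here
}

console.log(pickModel(parseModelFlag(["auto", "--model", "mistral"]), "llama3", true));
```

Two behaviors of the diffed code are worth keeping in mind when reading this sketch: the flag value is also written to the config, so it persists for later runs, and the flag is not removed from `args`, so `aic push --model mistral` appears to read `--model` as the branch name.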

package/src/ollama.ts

CHANGED

@@ -1,8 +1,11 @@
 type OllamaResponse = {
-
+  response: string;
 };
 
-export async function generateCommit(
+export async function generateCommit(
+  diff: string,
+  model: string = 'llama3.1:latest'
+): Promise<string> {
   if (!diff) {
     console.log(`No changes done`)
     process.exit(0);
@@ -52,18 +55,25 @@ Now generate the commit message.
 Diff:
 ${diff}
 `
+  try {
+    const res = await fetch('http://localhost:11434/api/generate', {
+      method: 'POST',
+      body: JSON.stringify({
+        model,
+        prompt,
+        stream: false,
+      })
+    })
+    const data = (await res.json()) as OllamaResponse;
+    return data.response.trim();
+  }catch(err){
+    console.log(`Failed to connect to Ollama`);
+    process.exit(1);
+
+  }
+
+
 
-  const res = await fetch('http://localhost:11434/api/generate', {
-    method : 'POST',
-    body : JSON.stringify({
-      model : 'llama3.1:latest',
-      prompt,
-      stream : false,
-    })
-  })
-
-  const data = await res.json() as OllamaResponse;
-  return data.response.trim() ;
 }
 
 
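In the `ollama.ts` change above, the whole request sits in one `try`/`catch`, so any failure inside it is reported as "Failed to connect to Ollama". That includes a reply whose JSON has no `response` field, where `data.response.trim()` would throw. The sketch below separates those cases. It is an illustration under stated assumptions, not the package's code: `buildGenerateBody`, `extractMessage`, and `generate` are hypothetical names, and the `Content-Type` header is an addition of this example.

```typescript
type OllamaResponse = { response: string };

// Builds the same JSON body the diff sends to /api/generate.
function buildGenerateBody(model: string, prompt: string): string {
  return JSON.stringify({ model, prompt, stream: false });
}

// Hypothetical guard: returns the trimmed message, or throws a readable error
// when the payload does not have the expected shape.
function extractMessage(data: unknown): string {
  if (
    typeof data !== "object" ||
    data === null ||
    typeof (data as Partial<OllamaResponse>).response !== "string"
  ) {
    throw new Error("Unexpected response shape from Ollama");
  }
  return (data as OllamaResponse).response.trim();
}

// Sketch of the call itself; requires a local Ollama server to actually run.
async function generate(model: string, prompt: string): Promise<string> {
  const res = await fetch("http://localhost:11434/api/generate", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: buildGenerateBody(model, prompt),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  return extractMessage(await res.json());
}
```

With this split, a caller can print a connection hint only when `fetch` itself rejects, and a different message when the server answered but the model name was wrong or the payload was unexpected.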

package/src/selectModel.ts

ADDED

@@ -0,0 +1,46 @@
+
+import { saveConfig } from "./config";
+import readline from "readline";
+
+async function getOllamaModels():Promise<string[]> {
+  try {
+    const res = await fetch('http://localhost:11434/api/tags');
+    const data = await res.json();
+    return data.models.map((m : any)=> m.name)
+  }catch(err){
+    console.log(`Ollama is not running ${err}`)
+    process.exit(1);
+  }
+}
+
+export async function selectModel() : Promise<string>{
+  const models = await getOllamaModels();
+  if(!models.length){
+    console.log(`No Ollama models found ! `);
+    process.exit(1);
+  }
+  console.log('Choose an Ollama model : ');
+  models.forEach((m , i)=> console.log(`${i + 1}.${m}`));
+
+  const rl = readline.createInterface({
+    input: process.stdin,
+    output : process.stdout,
+  })
+
+  const choice = await new Promise<number>((res)=>{
+    rl.question('Enter number : ' , (ans)=>{
+      rl.close();
+      res(Number(ans))
+    })
+  })
+
+  const selected = models[choice - 1];
+  if(!selected){
+    console.log(`Invalid Choice`);
+    process.exit(1);
+  }
+
+  saveConfig({model : selected});
+  console.log(`Model saved : ${selected}`);
+  return selected;
+}
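The model picker above converts the typed answer with `Number(ans)` and relies on `models[choice - 1]` being `undefined` to detect bad input. A small sketch of a more explicit check follows. `parseChoice` is a hypothetical helper, not part of the package, and the model names in the usage lines are placeholders.

```typescript
// Hypothetical helper: maps the raw answer to a zero-based index,
// or null when the answer is not a whole number within the menu range.
function parseChoice(answer: string, optionCount: number): number | null {
  const trimmed = answer.trim();
  if (!/^\d+$/.test(trimmed)) return null; // rejects "", "abc", "1.5", "-1"
  const n = Number(trimmed);
  return n >= 1 && n <= optionCount ? n - 1 : null;
}

// Usage with placeholder model names.
const models = ["llama3.1:latest", "mistral:latest"];
const index = parseChoice("2", models.length);
console.log(index === null ? "Invalid choice" : models[index]); // prints "mistral:latest"
```

One more observation on the diffed file, offered with some caution: `readline` is also the name of a Node.js core module, and core modules normally take precedence over installed packages with the same bare name, so `import readline from "readline"` would be expected to load the built-in rather than the `readline@1.3.0` package added to `package.json`.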