braidfs 0.0.57 → 0.0.58


- package/README.md +122 -44

- package/index.js +150 -34

- package/package.json +1 -1

package/README.md

CHANGED

|

@@ -1,88 +1,146 @@

|

|

|

1

1

|

# BraidFS: Braid your Filesystem with the Web

|

|

2

2

|

|

|

3

|

-

|

|

4

|

-

- Using collaborative CRDTs and the Braid extensions for HTTP.

|

|

3

|

+

Synchronize ***WWW Pages*** with your ***OS Filesystem***.

|

|

5

4

|

|

|

6

|

-

The `braidfs` daemon performs bi-directional synchronization between remote

|

|

5

|

+

The `braidfs` daemon performs bi-directional synchronization between remote

|

|

6

|

+

[Braided](https://braid.org) HTTP resources and your local filesystem. It uses

|

|

7

|

+

the [braid-text](https://github.com/braid-org/braid-text) library for

|

|

8

|

+

high-performance, peer-to-peer collaborative text synchronization over HTTP,

|

|

9

|

+

and keeps your filesystem two-way synchronized with the fully-consistent CRDT.

|

|

7

10

|

|

|

8

|

-

###

|

|

11

|

+

### Sync the web into your `~/http` folder

|

|

9

12

|

|

|

10

|

-

|

|

13

|

+

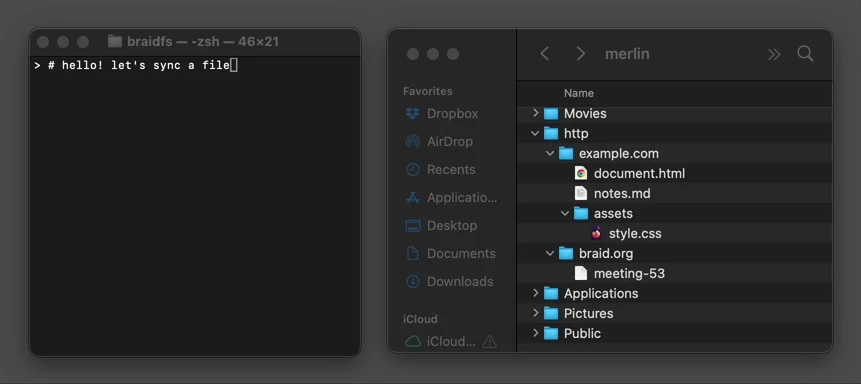

Braidfs synchronizes webpages to a local `~/http` folder:

|

|

11

14

|

|

|

12

15

|

```

|

|

13

16

|

~/http/

|

|

14

|

-

├──

|

|

15

|

-

|

|

16

|

-

|

|

17

|

-

|

|

18

|

-

|

|

19

|

-

|

|

20

|

-

|

|

17

|

+

├── braid.org/

|

|

18

|

+

| └── meeting-53

|

|

19

|

+

└── example.com/

|

|

20

|

+

├── document.html

|

|

21

|

+

├── notes.md

|

|

22

|

+

└── assets/

|

|

23

|

+

└── style.css

|

|

21

24

|

```

|

|

22

25

|

|

|

26

|

+

Add a new page with the `braidfs sync <url>` command:

|

|

23

27

|

|

|

24

|

-

https://

|

|

28

|

+

|

|

25

29

|

|

|

30

|

+

Unsync a page with `braidfs unsync <url>`.

|

|

26

31

|

|

|

32

|

+

### Edit remote state as a local file—and vice versa

|

|

27

33

|

|

|

28

|

-

|

|

34

|

+

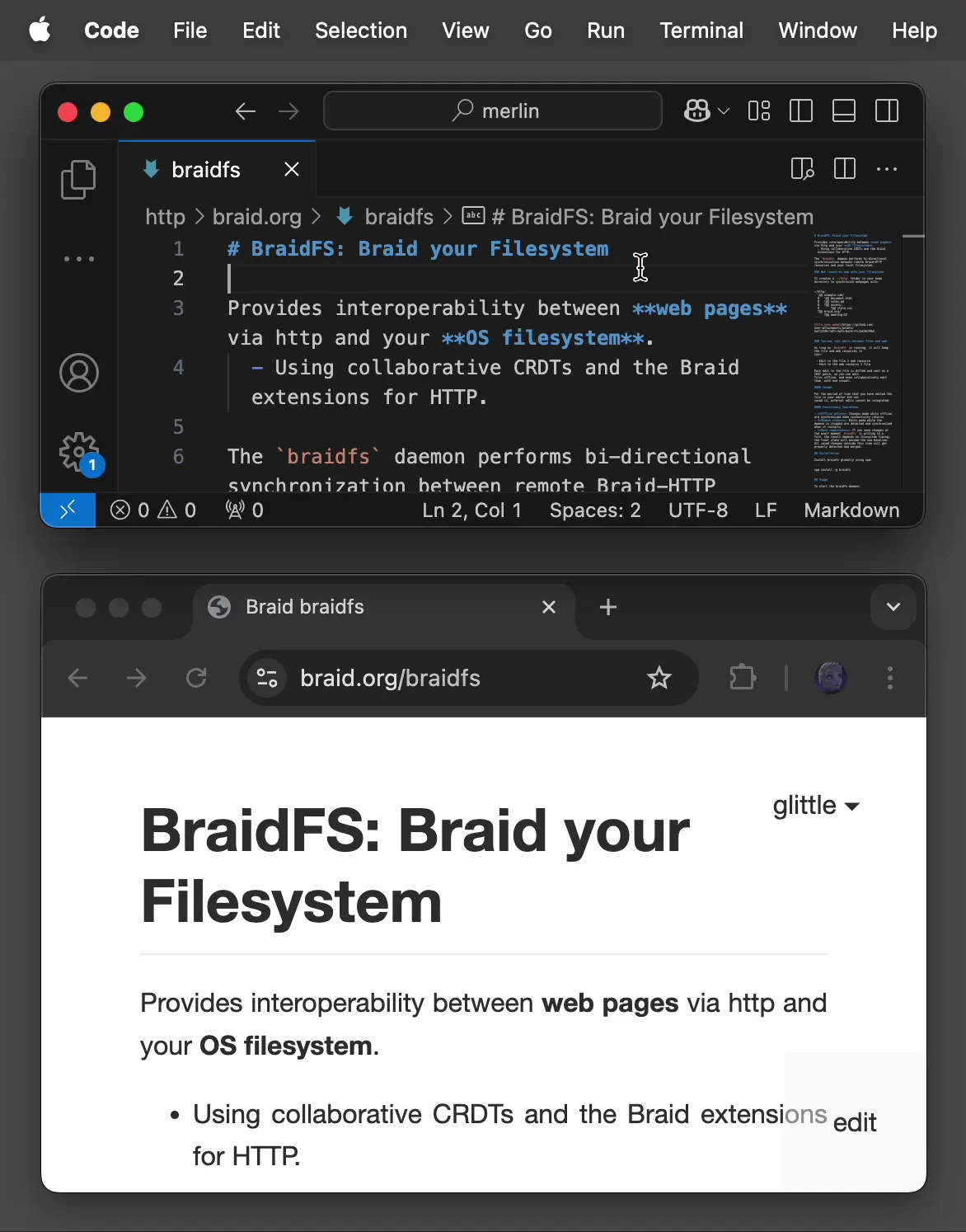

Any synced page can be edited with your favorite local text editor—like

|

|

35

|

+

VSCode, Emacs, Vim, Sublime, TextWrangler, BBEdit, Pico, Nano, or Notepad—and

|

|

36

|

+

edits propagate live to the web, and vice-versa.

|

|

29

37

|

|

|

30

|

-

|

|

31

|

-

sync!

|

|

38

|

+

Here's a demo of VSCode editing [braid.org/braidfs](https://braid.org/braidfs):

|

|

32

39

|

|

|

33

|

-

|

|

34

|

-

- Edit to the web resource → file

|

|

40

|

+

|

|

35

41

|

|

|

36

|

-

|

|

37

|

-

|

|

42

|

+

After VSCode saves the file, braidfs immediately computes a diff of the edits

|

|

43

|

+

and sends them as patches over Braid HTTP to https://braid.org/braidfs:

|

|

38

44

|

|

|

39

|

-

|

|

45

|

+

```

|

|

46

|

+

PUT /braidfs

|

|

47

|

+

Version: "n0j5kg9g23-100"

|

|

48

|

+

Parents: "ercurwxmz7g-37"

|

|

49

|

+

Content-Length: 9

|

|

50

|

+

Content-Range: text [32:32]

|

|

40

51

|

|

|

41

|

-

|

|

42

|

-

saved it, external edits cannot be integrated.

|

|

52

|

+

with the

|

|

43

53

|

|

|

44

|

-

|

|

54

|

+

```

|

|

45

55

|

|

|

46

|

-

|

|

56

|

+

Conversely, remote edits instantly update the local filesystem, and thus the

|

|

57

|

+

editor.

|

|

47

58

|

|

|

48

59

|

```

|

|

49

|

-

|

|

60

|

+

HTTP 200 OK

|

|

61

|

+

Version: "ercurwxmz7g-41"

|

|

62

|

+

Parents: "n0j5kg9g23-100"

|

|

63

|

+

Content-Length: 4

|

|

64

|

+

Content-Range: text [41:41]

|

|

65

|

+

|

|

66

|

+

Web

|

|

67

|

+

|

|

50

68

|

```

|

|

51

69

|

|

|

52

|

-

|

|

70

|

+

Edits are formatted as simple

|

|

71

|

+

[Braid-HTTP](https://github.com/braid-org/braid-spec). To participate in the

|

|

72

|

+

network, you can even author these messages by hand, from your own code, using

|

|

73

|

+

simple GETs and PUTs.

|

|

74

|

+

|

|

75

|

+

### Conflict-Free Collaborative Editing

|

|

76

|

+

|

|

77

|

+

Braidfs has a full [braid-text](https://github.com/braid-org/braid-text) peer

|

|

78

|

+

within it, providing high-performance collaborative text editing on the

|

|

79

|

+

diamond-types CRDT over the Braid HTTP protocol, guaranteeing conflict-free

|

|

80

|

+

editing with multiple editors, whether online or offline.

|

|

81

|

+

|

|

82

|

+

A novel trick using [Time Machines](https://braid.org/time-machines) lets us

|

|

83

|

+

making regular text editors conflict-free, as well, without speaking CRDT!

|

|

84

|

+

This means that you can edit a file in Emacs, even while other people edit the

|

|

85

|

+

same file, without write conflicts, and without adding CRDT code to Emacs.

|

|

86

|

+

(Still under development.)

|

|

87

|

+

|

|

88

|

+

# Installation and Usage

|

|

89

|

+

|

|

90

|

+

Install the `braidfs` command onto your computer with npm:

|

|

91

|

+

|

|

92

|

+

```

|

|

93

|

+

npm install -g braidfs

|

|

94

|

+

```

|

|

53

95

|

|

|

54

|

-

|

|

96

|

+

Then you can start the braidfs daemon with:

|

|

55

97

|

|

|

56

98

|

```

|

|

57

99

|

braidfs run

|

|

58

100

|

```

|

|

59

101

|

|

|

60

|

-

To

|

|

102

|

+

To run it automatically as a background service on MacOS, use:

|

|

61

103

|

|

|

62

104

|

```

|

|

63

|

-

|

|

105

|

+

# Todo: fix this. Not working yet.

|

|

106

|

+

# launchctl submit -l org.braid.braidfs -- braidfs run

|

|

64

107

|

```

|

|

65

108

|

|

|

66

|

-

|

|

109

|

+

### Adding and removing URLs

|

|

67

110

|

|

|

68

|

-

|

|

69

|

-

- It creates a local file at `~/http/example.com/path/file.txt`

|

|

111

|

+

Sync a URL with:

|

|

70

112

|

|

|

71

|

-

|

|

113

|

+

```

|

|

114

|

+

braidfs sync <url>

|

|

115

|

+

```

|

|

116

|

+

|

|

117

|

+

Unsync a URL with:

|

|

72

118

|

|

|

73

119

|

```

|

|

74

120

|

braidfs unsync <url>

|

|

75

121

|

```

|

|

76

122

|

|

|

77

|

-

|

|

123

|

+

URLs map to files with a simple pattern:

|

|

78

124

|

|

|

79

|

-

|

|

125

|

+

- url: `https://<domain>/<path>`

|

|

126

|

+

- file: `~/http/<domain>/<path>`

|

|

80

127

|

|

|

81

|

-

- `sync`: An object where the keys are URLs to sync, and the values are simply `true`

|

|

82

|

-

- `cookies`: An object for setting domain-specific cookies for authentication

|

|

83

|

-

- `port`: The port number for the internal daemon (default: 45678)

|

|

84

128

|

|

|

85

|

-

|

|

129

|

+

Examples:

|

|

130

|

+

|

|

131

|

+

| URL | File |

|

|

132

|

+

| --- | --- |

|

|

133

|

+

| `https://example.com/path/file.txt` | `~/http/example.com/path/file.txt` |

|

|

134

|

+

| `https://braid.org:8939/path` | `~/http/braid.org:8939/path` |

|

|

135

|

+

| `https://braid.org/` | `~/http/braid.org/index` |

|

|

136

|

+

|

|

137

|

+

If you sync a URL path to a directory containing items within it, the

|

|

138

|

+

directory will be named `/index`.

|

|

139

|

+

|

|

140

|

+

|

|

141

|

+

### Configuration

|

|

142

|

+

|

|

143

|

+

The config file lives at `~/http/.braidfs/config`. It looks like this:

|

|

86

144

|

|

|

87

145

|

```json

|

|

88

146

|

{

|

|
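The URL-to-file rule in the README above can be expressed as a small function. This is a minimal sketch, not braidfs's own code: the diff calls a `normalize_url` helper whose body isn't shown, so `url_to_local_path` is a hypothetical name, query strings and fragments are ignored, and percent-escapes in the path are left as-is.

```javascript
const os = require('os')
const path = require('path')

// Hypothetical helper (not braidfs's own code): map a synced URL to its
// local file, following https://<domain>/<path> -> ~/http/<domain>/<path>.
function url_to_local_path(url, base = path.join(os.homedir(), 'http')) {
    const u = new URL(url)
    // `host` includes a non-default port, e.g. "braid.org:8939"
    let p = u.pathname
    // Assumption: a path ending in "/" maps to an `index` file, as in the
    // README's `https://braid.org/` -> `~/http/braid.org/index` example
    if (p.endsWith('/')) p += 'index'
    return path.join(base, u.host, p)
}

// On POSIX this prints /home/me/http/braid.org:8939/path
console.log(url_to_local_path('https://braid.org:8939/path', '/home/me/http'))
```

Using `URL.host` rather than `URL.hostname` is what keeps the `:8939` port in the directory name, matching the README's second example.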

````diff
@@ -91,14 +149,34 @@ Example `config`:
     "https://example.com/document2.txt": true
   },
   "cookies": {
-    "example.com": "secret_pass"
+    "example.com": "secret_pass",
+    "braid.org": "client=hsu238s88adhab3afhalkj3jasdhfdf"
   },
   "port": 45678
 }
 ```
 
-
+These are the options:
+- `sync`: A set of URLs to synchronize. Each one maps to `true`.
+- `cookies`: Braidfs will use these cookie when connecting to the domains.
+  - Put your login session cookies here.
+  - To find your cookie for a website:
+    - Log into the website with a browser
+    - Open the Javascript console and run `document.cookie`
+    - That's your cookie. Copy paste it into your config file.
+- `port`: The port number for the internal daemon (default: 45678)
+
+This config file live-updates, too. Changes are automatically detected and
+applied to the running braidfs daemon. The only exception is the `port`
+setting, which requires restarting the daemon after a change.
+
 
-##
+## Limitations & Future Work
 
-
+- Doesn't sync binary yet. Just text text mime-types:
+  `text/*`, `application/html`, and `application/json`
+  - Binary blob support would be pretty easy and nice to add.
+  - Contact us if you'd like to add it!
+- Doesn't update your editor's text with remote updates until you save
+  - It's not hard to make it live-update, though, so that you can see your edits integrated with others' before you save.
+  - Contact us if you'd like to help! It would be a fun project!
````
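The README says you can author Braid-HTTP messages from your own code with plain GETs and PUTs. The sketch below builds the arguments for the example PUT shown in the README diff, using standard `fetch` options. Assumptions: `braid_put_request` is a hypothetical helper; the body is taken to be ` with the` with a leading space (one reading consistent with `Content-Length: 9`); and any further headers a particular server may expect are not covered.

```javascript
// Hypothetical helper: build fetch() arguments for a single-range Braid-HTTP
// PUT, modeled on the README's example message. Header names come from that
// example; the helper's name and argument shape are illustrative only.
function braid_put_request(url, { version, parents, range, text }) {
    const [start, end] = range
    // Version and Parents carry quoted ids, comma-separated when there are several
    const quote = ids => ids.map(id => JSON.stringify(id)).join(', ')
    return {
        url,
        init: {
            method: 'PUT',
            headers: {
                'Version': quote(version),
                'Parents': quote(parents),
                // Replace the text between start and end with the body
                // (an insertion when start === end)
                'Content-Range': `text [${start}:${end}]`,
            },
            // fetch derives Content-Length from the body, so it is not set here
            body: text,
        },
    }
}

const req = braid_put_request('https://braid.org/braidfs', {
    version: ['n0j5kg9g23-100'],
    parents: ['ercurwxmz7g-37'],
    range: [32, 32],
    text: ' with the',
})
// To send it (Node 18+ or a browser): await fetch(req.url, req.init)
```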

package/index.js

CHANGED

```diff
@@ -1,14 +1,9 @@
 #!/usr/bin/env node
 
-console.log(`braidfs version: ${require(`${__dirname}/package.json`).version}`)
-
 var { diff_main } = require(`${__dirname}/diff.js`),
     braid_text = require("braid-text"),
     braid_fetch = require('braid-http').fetch
 
-process.on("unhandledRejection", (x) => console.log(`unhandledRejection: ${x.stack}`))
-process.on("uncaughtException", (x) => console.log(`uncaughtException: ${x.stack}`))
-
 var proxy_base = `${require('os').homedir()}/http`,
     braidfs_config_dir = `${proxy_base}/.braidfs`,
     braidfs_config_file = `${braidfs_config_dir}/config`,
```

```diff
@@ -57,6 +52,41 @@ let to_run_in_background = process.platform === 'darwin' ? `
 To run daemon in background:
 launchctl submit -l org.braid.braidfs -- braidfs run` : ''
 let argv = process.argv.slice(2)
+
+if (argv[0] === 'editing') {
+    return (async () => {
+        var filename = argv[1]
+        if (!require('path').isAbsolute(filename))
+            filename = require('path').resolve(process.cwd(), filename)
+        var input_string = await new Promise(done => {
+            const chunks = []
+            process.stdin.on('data', chunk => chunks.push(chunk))
+            process.stdin.on('end', () => done(Buffer.concat(chunks).toString()))
+        })
+
+        var r = await fetch(`http://localhost:${config.port}/.braidfs/get_version/${encodeURIComponent(filename)}/${encodeURIComponent(sha256(input_string))}`)
+        if (!r.ok) throw new Error(`bad status: ${r.status}`)
+        console.log(await r.text())
+    })()
+} else if (argv[0] === 'edited') {
+    return (async () => {
+        var filename = argv[1]
+        if (!require('path').isAbsolute(filename))
+            filename = require('path').resolve(process.cwd(), filename)
+        var parent_version = argv[2]
+        var input_string = await new Promise(done => {
+            const chunks = []
+            process.stdin.on('data', chunk => chunks.push(chunk))
+            process.stdin.on('end', () => done(Buffer.concat(chunks).toString()))
+        })
+        var r = await fetch(`http://localhost:${config.port}/.braidfs/set_version/${encodeURIComponent(filename)}/${encodeURIComponent(parent_version)}`, { method: 'PUT', body: input_string })
+        if (!r.ok) throw new Error(`bad status: ${r.status}`)
+        console.log(await r.text())
+    })()
+}
+
+console.log(`braidfs version: ${require(`${__dirname}/package.json`).version}`)
+
 if (argv.length === 1 && argv[0].match(/^(run|serve)$/)) {
     return main()
 } else if (argv.length && argv.length % 2 == 0 && argv.every((x, i) => i % 2 != 0 || x.match(/^(sync|unsync)$/))) {
```
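The new `editing` subcommand hashes the editor's buffer and asks the daemon which version that content corresponds to. A sketch of how that request URL is built, assuming Node's built-in `crypto` and `path`; `get_version_url` is a hypothetical name. Both path segments are percent-encoded because base64 digests can contain `/`, `+` and `=`, and the daemon's route regex splits segments on `/`.

```javascript
const crypto = require('crypto')
const path = require('path')

// Same digest as the sha256() helper the diff adds: SHA-256, base64-encoded
function sha256(x) {
    return crypto.createHash('sha256').update(x).digest('base64')
}

// Hypothetical helper mirroring the URL that `braidfs editing <file>` requests.
// path.resolve returns an absolute filename unchanged (apart from
// normalization) and resolves a relative one against the current directory.
function get_version_url(port, filename, text) {
    const abs = path.resolve(process.cwd(), filename)
    // Percent-encode both segments: base64 may contain '/', '+' and '='
    return `http://localhost:${port}/.braidfs/get_version/` +
        `${encodeURIComponent(abs)}/${encodeURIComponent(sha256(text))}`
}

console.log(get_version_url(45678, 'notes.md', 'hello'))
```

In the daemon code below, the reply is `JSON.stringify(version ?? null)`. The `edited` subcommand passes its third argument back as the parents, which the daemon `JSON.parse`s, so that argument is presumably the JSON string that `editing` printed.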

```diff
@@ -94,28 +124,82 @@ You can run it with:
 }
 
 async function main() {
+    process.on("unhandledRejection", (x) => console.log(`unhandledRejection: ${x.stack}`))
+    process.on("uncaughtException", (x) => console.log(`uncaughtException: ${x.stack}`))
     require('http').createServer(async (req, res) => {
-
+        try {
+            console.log(`${req.method} ${req.url}`)
 
-
+            if (req.url === '/favicon.ico') return
 
-
-
-
-
+            if (req.socket.remoteAddress !== '127.0.0.1' && req.socket.remoteAddress !== '::1') {
+                res.writeHead(403, { 'Content-Type': 'text/plain' })
+                return res.end('Access denied: only accessible from localhost')
+            }
 
-
-
-
+            // Free the CORS
+            free_the_cors(req, res)
+            if (req.method === 'OPTIONS') return
 
-
+            var url = req.url.slice(1)
 
-
-
-
-
+            var m = url.match(/^\.braidfs\/get_version\/([^\/]*)\/([^\/]*)/)
+            if (m) {
+                var fullpath = decodeURIComponent(m[1])
+                var hash = decodeURIComponent(m[2])
+
+                var path = require('path').relative(proxy_base, fullpath)
+                var proxy = await proxy_url.cache[normalize_url(path)]
+                var version = proxy?.hash_to_version_cache.get(hash)?.version
+                return res.end(JSON.stringify(version ?? null))
+            }
+
+            var m = url.match(/^\.braidfs\/set_version\/([^\/]*)\/([^\/]*)/)
+            if (m) {
+                var fullpath = decodeURIComponent(m[1])
+                var path = require('path').relative(proxy_base, fullpath)
+                var proxy = await proxy_url.cache[normalize_url(path)]
+
+                var parents = JSON.parse(decodeURIComponent(m[2]))
+                var parent_text = (await braid_text.get(proxy.url,
+                    { parents })).body
+
+                var text = await new Promise(done => {
+                    const chunks = []
+                    req.on('data', chunk => chunks.push(chunk))
+                    req.on('end', () => done(Buffer.concat(chunks).toString()))
+                })
+
+                var patches = diff(parent_text, text)
 
-
+                if (patches.length) {
+                    var peer = Math.random().toString(36).slice(2)
+                    var char_count = patches_to_code_points(patches, parent_text)
+                    var version = [peer + "-" + char_count]
+                    await braid_text.put(proxy.url, { version, parents, patches, peer, merge_type: 'dt' })
+
+                    // may be able to do this more efficiently.. we want to make sure we're capturing a file write that is after our version was written.. there may be a way we can avoid calling file_needs_writing here
+                    var stat = await new Promise(done => {
+                        proxy.file_written_cbs.push(done)
+                        proxy.signal_file_needs_writing()
+                    })
+
+                    res.writeHead(200, { 'Content-Type': 'application/json' })
+                    return res.end(stat.mtimeMs.toString())
+                } else return res.end('null')
+            }
+
+            if (url !== '.braidfs/config' && url !== '.braidfs/errors') {
+                res.writeHead(404, { 'Content-Type': 'text/html' })
+                return res.end('Nothing to see here. You can go to <a href=".braidfs/config">.braidfs/config</a> or <a href=".braidfs/errors">.braidfs/errors</a>')
+            }
+
+            braid_text.serve(req, res, { key: normalize_url(url) })
+        } catch (e) {
+            console.log(`e = ${e.stack}`)
+            res.writeHead(500, { 'Error-Message': '' + e })
+            res.end('' + e)
+        }
     }).listen(config.port, () => {
         console.log(`daemon started on port ${config.port}`)
         if (!config.allow_remote_access) console.log('!! only accessible from localhost !!')
```
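The daemon now rejects requests whose `req.socket.remoteAddress` is neither `127.0.0.1` nor `::1`. One caveat worth checking: `.listen(config.port)` is called without a host, and in that case Node may listen on a dual-stack IPv6 socket, where IPv4 clients appear as `::ffff:127.0.0.1`. The sketch below is a hypothetical guard that also accepts that form; it is not the package's code.

```javascript
const http = require('http')

// Hypothetical localhost-only guard, in the spirit of the check the diff adds.
// Assumption: besides '127.0.0.1' and '::1' it also accepts the IPv4-mapped
// form '::ffff:127.0.0.1', which Node reports for IPv4 clients on a
// dual-stack IPv6 socket.
const LOOPBACK_ADDRESSES = new Set(['127.0.0.1', '::1', '::ffff:127.0.0.1'])

function is_loopback(address) {
    return LOOPBACK_ADDRESSES.has(address)
}

const server = http.createServer((req, res) => {
    if (!is_loopback(req.socket.remoteAddress)) {
        res.writeHead(403, { 'Content-Type': 'text/plain' })
        return res.end('Access denied: only accessible from localhost')
    }
    res.end('ok')
})
// server.listen(45678) would start it on the README's default port
```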

```diff
@@ -263,6 +347,9 @@ async function scan_files() {
     scan_files.timeout = setTimeout(scan_files, config.scan_interval_ms ?? (20 * 1000))
 
     async function f(fullpath) {
+        path = require('path').relative(proxy_base, fullpath)
+        if (skip_file(path)) return
+
         let stat = await require('fs').promises.stat(fullpath, { bigint: true })
         if (stat.isDirectory()) {
             let found
```

```diff
@@ -270,9 +357,6 @@ async function scan_files() {
                 found ||= await f(`${fullpath}/${file}`)
             return found
         } else {
-            path = require('path').relative(proxy_base, fullpath)
-            if (skip_file(path)) return
-
             var proxy = await proxy_url.cache[normalize_url(path)]
             if (!proxy) return await trash_file(fullpath, path)
 
```

```diff
@@ -343,7 +427,7 @@ async function proxy_url(url) {
     }
     await old_unproxy
 
-    var self = {}
+    var self = {url}
 
     console.log(`proxy_url: ${url}`)
 
```

```diff
@@ -373,6 +457,23 @@ async function proxy_url(url) {
     var file_needs_reading = true,
         file_needs_writing = null,
         file_loop_pump_lock = 0
+    self.file_written_cbs = []
+
+    // store a recent mapping of content-hashes to their versions,
+    // to support the command line: braidfs editing filename < file
+    self.hash_to_version_cache = new Map()
+    function add_to_version_cache(text, version) {
+        var hash = sha256(text)
+        self.hash_to_version_cache.delete(hash)
+        self.hash_to_version_cache.set(hash, { version, time: Date.now() })
+
+        var too_old = Date.now() - 30000
+        for (var [key, value] of self.hash_to_version_cache) {
+            if (value.time > too_old ||
+                self.hash_to_version_cache.size <= 1) break
+            self.hash_to_version_cache.delete(key)
+        }
+    }
 
     self.signal_file_needs_reading = () => {
         if (freed) return
```
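`add_to_version_cache` keeps recently seen content hashes mapped to their versions, so that `braidfs editing` can look up the version of whatever text the editor holds. A standalone sketch of the same eviction logic; `make_version_cache` is a hypothetical wrapper, and the clock is injectable for testing (an assumption, since the diff calls `Date.now()` directly).

```javascript
const crypto = require('crypto')

const sha256 = x => crypto.createHash('sha256').update(x).digest('base64')

// Standalone sketch of the recent hash -> version cache added in the diff.
// A Map iterates in insertion order, so delete-then-set moves a hash to the
// newest position, and eviction can stop at the first entry still fresh.
function make_version_cache({ max_age_ms = 30000, now = Date.now } = {}) {
    const cache = new Map()
    return {
        cache,
        add(text, version) {
            const hash = sha256(text)
            cache.delete(hash)
            cache.set(hash, { version, time: now() })
            const too_old = now() - max_age_ms
            for (const [key, value] of cache) {
                // Stop at the first fresh entry; always keep at least one
                if (value.time > too_old || cache.size <= 1) break
                cache.delete(key)
            }
        },
        lookup(text) {
            return cache.get(sha256(text))?.version
        },
    }
}
```

Stopping at the first fresh entry relies on entries sitting in time order, which holds as long as the clock does not move backwards.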

```diff
@@ -380,7 +481,7 @@ async function proxy_url(url) {
         file_loop_pump()
     }
 
-
+    self.signal_file_needs_writing = () => {
         if (freed) return
         file_needs_writing = true
         file_loop_pump()
```

```diff
@@ -434,7 +535,7 @@ async function proxy_url(url) {
             file_needs_writing = !v_eq(file_last_version, (await braid_text.get(url, {})).version)
 
             // sanity check
-            if (file_last_digest &&
+            if (file_last_digest && sha256(self.file_last_text) != file_last_digest) throw new Error('file_last_text does not match file_last_digest')
         } else if (await require('fs').promises.access(fullpath).then(() => 1, () => 0)) {
             // file exists, but not meta file
             file_last_version = []
```
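The diff replaces inline hashing (the removed `.update(self.file_last_text).digest('base64')` line) with the shared `sha256()` helper added in the file's last hunk, and uses it both to write the meta file's `digest` and for the sanity check shown above. A standalone sketch of that write-then-verify pair; `make_meta` and `check_meta` are hypothetical names, and the real code keeps the text in `self.file_last_text` rather than passing it in.

```javascript
const crypto = require('crypto')

// Same digest as the diff's sha256() helper
const sha256 = x => crypto.createHash('sha256').update(x).digest('base64')

// Hypothetical helpers illustrating the meta-file integrity check: the meta
// file records the last synced version and a digest of the text at that
// version; text that no longer hashes to the stored digest means the two
// are out of step.
function make_meta(version, text) {
    return JSON.stringify({ version, digest: sha256(text) })
}

function check_meta(meta_json, text) {
    const { digest } = JSON.parse(meta_json)
    // As in the diff, a meta file without a digest skips the check
    if (digest && sha256(text) !== digest)
        throw new Error('file_last_text does not match file_last_digest')
    return true
}
```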

```diff
@@ -479,12 +580,16 @@ async function proxy_url(url) {
                         var parents = file_last_version
                         file_last_version = version
 
+                        add_to_version_cache(text, version)
+
                         send_out({ version, parents, patches, peer })
 
                         await braid_text.put(url, { version, parents, patches, peer, merge_type: 'dt' })
 
-                        await require('fs').promises.writeFile(meta_path, JSON.stringify({ version: file_last_version, digest:
+                        await require('fs').promises.writeFile(meta_path, JSON.stringify({ version: file_last_version, digest: sha256(self.file_last_text) }))
                     } else {
+                        add_to_version_cache(text, file_last_version)
+
                         console.log(`no changes found in: ${fullpath}`)
                         if (stat_eq(stat, self.file_last_stat)) {
                             if (Date.now() > (self.file_ignore_until ?? 0))
```

```diff
@@ -511,6 +616,8 @@ async function proxy_url(url) {
 
                         console.log(`writing file ${fullpath}`)
 
+                        add_to_version_cache(body, version)
+
                         try { if (await is_read_only(fullpath)) await set_read_only(fullpath, false) } catch (e) { }
 
                         file_last_version = version
```

```diff
@@ -521,8 +628,7 @@ async function proxy_url(url) {
 
                         await require('fs').promises.writeFile(meta_path, JSON.stringify({
                             version: file_last_version,
-                            digest:
-                                .update(self.file_last_text).digest('base64')
+                            digest: sha256(self.file_last_text)
                         }))
                     }
 
```

```diff
@@ -532,6 +638,9 @@ async function proxy_url(url) {
                     }
 
                     self.file_last_stat = await require('fs').promises.stat(fullpath, { bigint: true })
+
+                    for (var cb of self.file_written_cbs) cb(self.file_last_stat)
+                    self.file_written_cbs = []
                 }
             }
         })
```
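The hunk above flushes `self.file_written_cbs` after each write, and the `set_version` handler earlier in the diff pushes a resolver onto that list and then signals a write, so it can reply with the new file's `mtimeMs`. A minimal sketch of that "wait for the next write" pattern; `make_writer` and the injected `write_file` are hypothetical, and unlike the real daemon (which sets a flag and lets its file loop decide when to write) this version writes on every signal.

```javascript
// Sketch of the "wait for the next file write" pattern used by set_version.
// `make_writer` and `write_file` are hypothetical stand-ins.
function make_writer(write_file) {
    const self = { file_written_cbs: [] }

    self.signal_file_needs_writing = async () => {
        const stat = await write_file()
        // Take the pending batch before calling it, so a callback that
        // registers again waits for a later write (the diff clears the
        // list after looping instead)
        const cbs = self.file_written_cbs
        self.file_written_cbs = []
        for (const cb of cbs) cb(stat)
    }

    // Resolve with the stat of the first write that completes after this call
    self.wait_for_write = () => new Promise(done => {
        self.file_written_cbs.push(done)
        self.signal_file_needs_writing()
    })

    return self
}
```

Note that a write already in flight when the waiter registers can be the one that resolves it, which is the concern the diff's own comment in the `set_version` handler raises.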

```diff
@@ -568,7 +677,7 @@ async function proxy_url(url) {
                     console.log(` editable = ${res.headers.get('editable')}`)
 
                     self.file_read_only = res.headers.get('editable') === 'false'
-                    signal_file_needs_writing()
+                    self.signal_file_needs_writing()
                 }
             },
             heartbeats: 120,
```

```diff
@@ -593,7 +702,7 @@ async function proxy_url(url) {
                 await braid_text.put(url, { ...update, peer, merge_type: 'dt' })
 
 
-                signal_file_needs_writing()
+                self.signal_file_needs_writing()
                 finish_something()
             }, e => (e?.name !== "AbortError") && crash(e))
         }).catch(e => (e?.name !== "AbortError") && crash(e))
```

```diff
@@ -628,20 +737,23 @@ async function proxy_url(url) {
                 merge_type: 'dt',
                 peer,
                 subscribe: async (u) => {
-                    if (u.version.length)
+                    if (u.version.length) {
+                        self.signal_file_needs_writing()
+                        chain = chain.then(() => send_out({...u, peer}))
+                    }
                 },
             })
         } catch (e) {
             if (e?.name !== "AbortError") crash(e)
         }
-        finish_something()
+        finish_something()
     }
 
     // for config and errors file, listen for web changes
     if (!is_external_link) braid_text.get(url, braid_text_get_options = {
         merge_type: 'dt',
         peer,
-        subscribe: signal_file_needs_writing,
+        subscribe: self.signal_file_needs_writing,
     })
 
     return self
```
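In the hunk above, the subscribe callback now signals a file write and appends each `send_out` to `chain`, so outgoing updates are sent one after another. A sketch of that promise-chain idiom, with `make_serial_sender` as a hypothetical wrapper; how `chain` is initialized, and whether its rejections are handled elsewhere, isn't visible in this hunk.

```javascript
// Sketch of the `chain = chain.then(...)` idiom in the diff's subscribe
// callback: each update is appended to one promise chain, so sends run one
// at a time and in arrival order even though each send is async.
function make_serial_sender(send_out) {
    let chain = Promise.resolve()
    return update => {
        // Design choice not visible in the diff: log and swallow a failed
        // send so one rejection does not skip every later send on the chain
        chain = chain
            .then(() => send_out(update))
            .catch(e => console.log(`send failed: ${e}`))
        return chain
    }
}
```

Without the `.catch`, a single rejected `send_out` would leave `chain` rejected, and every later `.then` callback on it would be skipped.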

```diff
@@ -818,3 +930,7 @@ async function file_exists(fullpath) {
         return x.isFile()
     } catch (e) { }
 }
+
+function sha256(x) {
+    return require('crypto').createHash('sha256').update(x).digest('base64')
+}
```