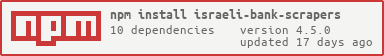

@tomerh2001/israeli-bank-scrapers 6.3.11

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/LICENSE +21 -0

- package/README.md +425 -0

- package/lib/assertNever.d.ts +1 -0

- package/lib/assertNever.js +10 -0

- package/lib/constants.d.ts +10 -0

- package/lib/constants.js +17 -0

- package/lib/definitions.d.ts +105 -0

- package/lib/definitions.js +116 -0

- package/lib/helpers/browser.d.ts +10 -0

- package/lib/helpers/browser.js +21 -0

- package/lib/helpers/dates.d.ts +2 -0

- package/lib/helpers/dates.js +22 -0

- package/lib/helpers/debug.d.ts +2 -0

- package/lib/helpers/debug.js +12 -0

- package/lib/helpers/elements-interactions.d.ts +17 -0

- package/lib/helpers/elements-interactions.js +111 -0

- package/lib/helpers/fetch.d.ts +6 -0

- package/lib/helpers/fetch.js +111 -0

- package/lib/helpers/navigation.d.ts +6 -0

- package/lib/helpers/navigation.js +39 -0

- package/lib/helpers/storage.d.ts +2 -0

- package/lib/helpers/storage.js +14 -0

- package/lib/helpers/transactions.d.ts +5 -0

- package/lib/helpers/transactions.js +47 -0

- package/lib/helpers/waiting.d.ts +13 -0

- package/lib/helpers/waiting.js +58 -0

- package/lib/index.d.ts +7 -0

- package/lib/index.js +85 -0

- package/lib/scrapers/amex.d.ts +6 -0

- package/lib/scrapers/amex.js +17 -0

- package/lib/scrapers/amex.test.d.ts +1 -0

- package/lib/scrapers/amex.test.js +49 -0

- package/lib/scrapers/base-beinleumi-group.d.ts +66 -0

- package/lib/scrapers/base-beinleumi-group.js +428 -0

- package/lib/scrapers/base-isracard-amex.d.ts +23 -0

- package/lib/scrapers/base-isracard-amex.js +324 -0

- package/lib/scrapers/base-scraper-with-browser.d.ts +57 -0

- package/lib/scrapers/base-scraper-with-browser.js +291 -0

- package/lib/scrapers/base-scraper-with-browser.test.d.ts +1 -0

- package/lib/scrapers/base-scraper-with-browser.test.js +53 -0

- package/lib/scrapers/base-scraper.d.ts +19 -0

- package/lib/scrapers/base-scraper.js +91 -0

- package/lib/scrapers/behatsdaa.d.ts +11 -0

- package/lib/scrapers/behatsdaa.js +113 -0

- package/lib/scrapers/behatsdaa.test.d.ts +1 -0

- package/lib/scrapers/behatsdaa.test.js +46 -0

- package/lib/scrapers/beinleumi.d.ts +7 -0

- package/lib/scrapers/beinleumi.js +15 -0

- package/lib/scrapers/beinleumi.test.d.ts +1 -0

- package/lib/scrapers/beinleumi.test.js +47 -0

- package/lib/scrapers/beyahad-bishvilha.d.ts +30 -0

- package/lib/scrapers/beyahad-bishvilha.js +149 -0

- package/lib/scrapers/beyahad-bishvilha.test.d.ts +1 -0

- package/lib/scrapers/beyahad-bishvilha.test.js +47 -0

- package/lib/scrapers/discount.d.ts +22 -0

- package/lib/scrapers/discount.js +120 -0

- package/lib/scrapers/discount.test.d.ts +1 -0

- package/lib/scrapers/discount.test.js +49 -0

- package/lib/scrapers/errors.d.ts +16 -0

- package/lib/scrapers/errors.js +32 -0

- package/lib/scrapers/factory.d.ts +2 -0

- package/lib/scrapers/factory.js +70 -0

- package/lib/scrapers/factory.test.d.ts +1 -0

- package/lib/scrapers/factory.test.js +19 -0

- package/lib/scrapers/hapoalim.d.ts +24 -0

- package/lib/scrapers/hapoalim.js +198 -0

- package/lib/scrapers/hapoalim.test.d.ts +1 -0

- package/lib/scrapers/hapoalim.test.js +47 -0

- package/lib/scrapers/interface.d.ts +186 -0

- package/lib/scrapers/interface.js +6 -0

- package/lib/scrapers/isracard.d.ts +6 -0

- package/lib/scrapers/isracard.js +17 -0

- package/lib/scrapers/isracard.test.d.ts +1 -0

- package/lib/scrapers/isracard.test.js +49 -0

- package/lib/scrapers/leumi.d.ts +21 -0

- package/lib/scrapers/leumi.js +200 -0

- package/lib/scrapers/leumi.test.d.ts +1 -0

- package/lib/scrapers/leumi.test.js +47 -0

- package/lib/scrapers/massad.d.ts +7 -0

- package/lib/scrapers/massad.js +15 -0

- package/lib/scrapers/max.d.ts +37 -0

- package/lib/scrapers/max.js +299 -0

- package/lib/scrapers/max.test.d.ts +1 -0

- package/lib/scrapers/max.test.js +64 -0

- package/lib/scrapers/mercantile.d.ts +20 -0

- package/lib/scrapers/mercantile.js +18 -0

- package/lib/scrapers/mercantile.test.d.ts +1 -0

- package/lib/scrapers/mercantile.test.js +45 -0

- package/lib/scrapers/mizrahi.d.ts +35 -0

- package/lib/scrapers/mizrahi.js +265 -0

- package/lib/scrapers/mizrahi.test.d.ts +1 -0

- package/lib/scrapers/mizrahi.test.js +56 -0

- package/lib/scrapers/one-zero-queries.d.ts +2 -0

- package/lib/scrapers/one-zero-queries.js +560 -0

- package/lib/scrapers/one-zero.d.ts +36 -0

- package/lib/scrapers/one-zero.js +238 -0

- package/lib/scrapers/one-zero.test.d.ts +1 -0

- package/lib/scrapers/one-zero.test.js +51 -0

- package/lib/scrapers/otsar-hahayal.d.ts +7 -0

- package/lib/scrapers/otsar-hahayal.js +15 -0

- package/lib/scrapers/otsar-hahayal.test.d.ts +1 -0

- package/lib/scrapers/otsar-hahayal.test.js +47 -0

- package/lib/scrapers/pagi.d.ts +7 -0

- package/lib/scrapers/pagi.js +15 -0

- package/lib/scrapers/pagi.test.d.ts +1 -0

- package/lib/scrapers/pagi.test.js +47 -0

- package/lib/scrapers/union-bank.d.ts +23 -0

- package/lib/scrapers/union-bank.js +242 -0

- package/lib/scrapers/union-bank.test.d.ts +1 -0

- package/lib/scrapers/union-bank.test.js +47 -0

- package/lib/scrapers/visa-cal.d.ts +20 -0

- package/lib/scrapers/visa-cal.js +318 -0

- package/lib/scrapers/visa-cal.test.d.ts +1 -0

- package/lib/scrapers/visa-cal.test.js +49 -0

- package/lib/scrapers/yahav.d.ts +25 -0

- package/lib/scrapers/yahav.js +247 -0

- package/lib/scrapers/yahav.test.d.ts +1 -0

- package/lib/scrapers/yahav.test.js +49 -0

- package/lib/transactions.d.ts +47 -0

- package/lib/transactions.js +17 -0

- package/package.json +91 -0

package/LICENSE

ADDED

@@ -0,0 +1,21 @@

| Line | Change | Content |
| --- | --- | --- |
| 1 | + | MIT License |
| 2 | + | |
| 3 | + | Copyright (c) 2017 Elad Shaham |
| 4 | + | |
| 5 | + | Permission is hereby granted, free of charge, to any person obtaining a copy |
| 6 | + | of this software and associated documentation files (the "Software"), to deal |
| 7 | + | in the Software without restriction, including without limitation the rights |
| 8 | + | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell |
| 9 | + | copies of the Software, and to permit persons to whom the Software is |
| 10 | + | furnished to do so, subject to the following conditions: |
| 11 | + | |
| 12 | + | The above copyright notice and this permission notice shall be included in all |
| 13 | + | copies or substantial portions of the Software. |
| 14 | + | |
| 15 | + | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR |
| 16 | + | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, |
| 17 | + | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE |
| 18 | + | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER |
| 19 | + | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, |
| 20 | + | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE |
| 21 | + | SOFTWARE. |

package/README.md

ADDED

|

@@ -0,0 +1,425 @@

|

|

|

1

|

+

# Israeli Bank Scrapers - Get closer to your own data!

|

|

2

|

+

|

|

3

|

+

<img src="./logo.png" width="100" height="100" alt="Logo" align="left" />

|

|

4

|

+

|

|

5

|

+

[](https://nodei.co/npm/israeli-bank-scrapers/)

|

|

6

|

+

|

|

7

|

+

[](https://badge.fury.io/js/israeli-bank-scrapers)

|

|

8

|

+

[](https://david-dm.org/eshaham/israeli-bank-scrapers)

|

|

9

|

+

[](https://david-dm.org/eshaham/israeli-bank-scrapers?type=dev)

|

|

10

|

+

[](https://discord.gg/2UvGM7aX4p)

|

|

11

|

+

|

|

12

|

+

> Important!

|

|

13

|

+

>

|

|

14

|

+

> The scrapers are set to use timezone `Asia/Jerusalem` to avoid conflicts in case you're running the scrapers outside Israel.

|
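To illustrate why a pinned timezone matters, here is a minimal sketch that renders an instant as a calendar date in `Asia/Jerusalem` using the standard `Intl.DateTimeFormat` API. It is not taken from the library; the helper name `toJerusalemDate` is hypothetical.

```typescript
// Hypothetical helper: render an instant as a YYYY-MM-DD calendar date in Israel time.
function toJerusalemDate(instant: Date): string {
  const parts = new Intl.DateTimeFormat('en-US', {
    timeZone: 'Asia/Jerusalem',
    year: 'numeric',
    month: '2-digit',
    day: '2-digit',
  }).formatToParts(instant);
  // Pick out the numeric fields; literal separators are ignored.
  const get = (type: string): string =>
    parts.find((p) => p.type === type)?.value ?? '';
  return `${get('year')}-${get('month')}-${get('day')}`;
}

// 22:30 UTC on 30 April 2020 is already 1 May in Israel (UTC+3 during summer time).
const lateEvening = new Date('2020-04-30T22:30:00Z');
const localDate = toJerusalemDate(lateEvening);
```

A machine running in UTC would assign this transaction to 30 April, while Israel time assigns it to 1 May, which is the kind of mismatch a fixed timezone avoids.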

|

15

|

+

|

|

16

|

+

# What's here?

|

|

17

|

+

Here you will find scrapers for all major Israeli banks and credit card companies. That's the plan at least.

|

|

18

|

+

Currently only the following banks are supported:

|

|

19

|

+

- Bank Hapoalim (thanks [@sebikaplun](https://github.com/sebikaplun))

|

|

20

|

+

- Leumi Bank (thanks [@esakal](https://github.com/esakal))

|

|

21

|

+

- Discount Bank

|

|

22

|

+

- Mercantile Bank (thanks [@ezzatq](https://github.com/ezzatq) and [@kfirarad](https://github.com/kfirarad))

|

|

23

|

+

- Mizrahi Bank (thanks [@baruchiro](https://github.com/baruchiro))

|

|

24

|

+

- Otsar Hahayal Bank (thanks [@matanelgabsi](https://github.com/matanelgabsi))

|

|

25

|

+

- Visa Cal (thanks [@erikash](https://github.com/erikash), [@esakal](https://github.com/esakal) and [@nirgin](https://github.com/nirgin))

|

|

26

|

+

- Max (Formerly Leumi Card)

|

|

27

|

+

- Isracard

|

|

28

|

+

- Amex (thanks [@erezd](https://github.com/erezd))

|

|

29

|

+

- Union Bank (Thanks to Intuit FDP OpenSource Team [@dratler](https://github.com/dratler),[@kalinoy](https://github.com/kalinoy),[@shanigad](https://github.com/shanigad),[@dudiventura](https://github.com/dudiventura) and [@NoamGoren](https://github.com/NoamGoren))

|

|

30

|

+

- Beinleumi (Thanks to [@dudiventura](https://github.com/dudiventura) from the Intuit FDP OpenSource Team)

|

|

31

|

+

- Massad

|

|

32

|

+

- Yahav (Thanks to [@gczobel](https://github.com/gczobel))

|

|

33

|

+

- Beyhad Bishvilha - [ביחד בשבילך](https://www.hist.org.il/) (thanks [@esakal](https://github.com/esakal))

|

|

34

|

+

- OneZero (Experimental) (thanks [@orzarchi](https://github.com/orzarchi))

|

|

35

|

+

- Behatsdaa - [בהצדעה](behatsdaa.org.il) (thanks [@daniel-hauser](https://github.com/daniel-hauser))

|

|

36

|

+

|

|

37

|

+

# Prerequisites

|

|

38

|

+

To use this you will need to have [Node.js](https://nodejs.org) >= 16.x installed.

|

|

39

|

+

|

|

40

|

+

# Getting started

|

|

41

|

+

To use these scrapers you'll need to install the package from npm:

|

|

42

|

+

```sh

|

|

43

|

+

npm install israeli-bank-scrapers --save

|

|

44

|

+

```

|

|

45

|

+

Then you can simply import and use it in your node module:

|

|

46

|

+

|

|

47

|

+

```node

|

|

48

|

+

import { CompanyTypes, createScraper } from 'israeli-bank-scrapers';

|

|

49

|

+

|

|

50

|

+

(async function() {

|

|

51

|

+

try {

|

|

52

|

+

// read documentation below for available options

|

|

53

|

+

const options = {

|

|

54

|

+

companyId: CompanyTypes.leumi,

|

|

55

|

+

startDate: new Date('2020-05-01'),

|

|

56

|

+

combineInstallments: false,

|

|

57

|

+

showBrowser: true

|

|

58

|

+

};

|

|

59

|

+

|

|

60

|

+

// read documentation below for information about credentials

|

|

61

|

+

const credentials = {

|

|

62

|

+

username: 'vr29485',

|

|

63

|

+

password: 'sometingsomething'

|

|

64

|

+

};

|

|

65

|

+

|

|

66

|

+

const scraper = createScraper(options);

|

|

67

|

+

const scrapeResult = await scraper.scrape(credentials);

|

|

68

|

+

|

|

69

|

+

if (scrapeResult.success) {

|

|

70

|

+

scrapeResult.accounts.forEach((account) => {

|

|

71

|

+

console.log(`found ${account.txns.length} transactions for account number ${account.accountNumber}`);

|

|

72

|

+

});

|

|

73

|

+

}

|

|

74

|

+

else {

|

|

75

|

+

throw new Error(scrapeResult.errorType);

|

|

76

|

+

}

|

|

77

|

+

} catch(e) {

|

|

78

|

+

console.error(`scraping failed for the following reason: ${e.message}`);

|

|

79

|

+

}

|

|

80

|

+

})();

|

|

81

|

+

```

|

|

82

|

+

|

|

83

|

+

Check the options declaration [here](./src/scrapers/interface.ts#L29) for available options.

|

|

84

|

+

|

|

85

|

+

Regarding credentials, you should provide the relevant credentials for the chosen company. See [this file](./src/definitions.ts) for the list of credentials per company.

|

|

86

|

+

|

|

87

|

+

The structure of the result object is as follows:

|

|

88

|

+

|

|

89

|

+

```node

|

|

90

|

+

{

|

|

91

|

+

success: boolean,

|

|

92

|

+

accounts: [{

|

|

93

|

+

accountNumber: string,

|

|

94

|

+

balance?: number, // Account balance. Not implemented for all accounts.

|

|

95

|

+

txns: [{

|

|

96

|

+

type: string, // can be either 'normal' or 'installments'

|

|

97

|

+

identifier: int, // only if exists

|

|

98

|

+

date: string, // ISO date string

|

|

99

|

+

processedDate: string, // ISO date string

|

|

100

|

+

originalAmount: double,

|

|

101

|

+

originalCurrency: string,

|

|

102

|

+

chargedAmount: double,

|

|

103

|

+

description: string,

|

|

104

|

+

memo: string, // can be null or empty

|

|

105

|

+

installments: { // only if exists

|

|

106

|

+

number: int, // the current installment number

|

|

107

|

+

total: int, // the total number of installments

|

|

108

|

+

},

|

|

109

|

+

status: string //can either be 'completed' or 'pending'

|

|

110

|

+

}],

|

|

111

|

+

}],

|

|

112

|

+

errorType: "INVALID_PASSWORD"|"CHANGE_PASSWORD"|"ACCOUNT_BLOCKED"|"UNKNOWN_ERROR"|"TIMEOUT"|"GENERIC", // only on success=false

|

|

113

|

+

errorMessage: string, // only on success=false

|

|

114

|

+

}

|

|

115

|

+

```

|
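As a rough illustration of consuming the result object described above, the sketch below sums the charged amounts of completed transactions per account. The interfaces are a local, trimmed-down mirror of the documented structure rather than the library's exported types, and the sample data is made up.

```typescript
// Local mirror of the result shape documented above; not imported from the library.
interface Txn {
  chargedAmount: number;
  status: 'completed' | 'pending';
}
interface Account {
  accountNumber: string;
  txns: Txn[];
}
interface ScrapeResult {
  success: boolean;
  accounts?: Account[];
  errorType?: string;
  errorMessage?: string;
}

// Sum the charged amounts of completed transactions for each account.
function totalsByAccount(result: ScrapeResult): Map<string, number> {
  if (!result.success) {
    throw new Error(`${result.errorType ?? 'UNKNOWN'}: ${result.errorMessage ?? ''}`);
  }
  const totals = new Map<string, number>();
  for (const account of result.accounts ?? []) {
    const total = account.txns
      .filter((txn) => txn.status === 'completed')
      .reduce((sum, txn) => sum + txn.chargedAmount, 0);
    totals.set(account.accountNumber, total);
  }
  return totals;
}

// Made-up sample data for illustration only.
const sample: ScrapeResult = {
  success: true,
  accounts: [{
    accountNumber: '123-456',
    txns: [
      { chargedAmount: -100, status: 'completed' },
      { chargedAmount: -50, status: 'pending' },
      { chargedAmount: 200, status: 'completed' },
    ],
  }],
};
const totals = totalsByAccount(sample);
```

Checking `success` first mirrors the documented contract that `errorType` and `errorMessage` are only present when `success` is false.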

|

116

|

+

|

|

117

|

+

You can also use the `SCRAPERS` list to get scraper metadata:

|

|

118

|

+

```node

|

|

119

|

+

import { SCRAPERS } from 'israeli-bank-scrapers';

|

|

120

|

+

```

|

|

121

|

+

|

|

122

|

+

The return value is a list of scraper metadata:

|

|

123

|

+

```node

|

|

124

|

+

{

|

|

125

|

+

<companyId>: {

|

|

126

|

+

name: string, // the name of the scraper

|

|

127

|

+

loginFields: [ // a list of login field required by this scraper

|

|

128

|

+

'<some field>' // the name of the field

|

|

129

|

+

]

|

|

130

|

+

}

|

|

131

|

+

}

|

|

132

|

+

```

|
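One possible use of this metadata is to check credentials before starting a scrape. The sketch below assumes only the documented shape (`name`, `loginFields`); the catalog entry `exampleBank` and the helper `missingLoginFields` are hypothetical and not part of the library.

```typescript
// Local mirror of the documented metadata shape; the entry below is made up.
interface ScraperMeta {
  name: string;
  loginFields: string[];
}
type ScraperCatalog = Record<string, ScraperMeta>;

// Return the login fields that are missing or empty in the given credentials.
function missingLoginFields(
  catalog: ScraperCatalog,
  companyId: string,
  credentials: Record<string, string>,
): string[] {
  const meta = catalog[companyId];
  if (!meta) {
    throw new Error(`Unknown company id: ${companyId}`);
  }
  return meta.loginFields.filter((field) => !credentials[field]);
}

const exampleCatalog: ScraperCatalog = {
  exampleBank: { name: 'Example Bank', loginFields: ['id', 'password', 'num'] },
};
const missing = missingLoginFields(exampleCatalog, 'exampleBank', {
  id: '000000000',
  password: 'secret',
});
```

In real code the catalog argument would presumably be the imported `SCRAPERS` object, assuming it matches the shape shown above.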

|

133

|

+

|

|

134

|

+

## Advanced options

|

|

135

|

+

|

|

136

|

+

### ExternalBrowserOptions

|

|

137

|

+

|

|

138

|

+

This option allows you to provide an externally created browser instance. You can get a browser directly from puppeteer via `puppeteer.launch()`.

|

|

139

|

+

Note that for backwards compatibility, the browser will be closed by the library after the scraper finishes unless `skipCloseBrowser` is set to true.

|

|

140

|

+

|

|

141

|

+

Example:

|

|

142

|

+

|

|

143

|

+

```typescript

|

|

144

|

+

import puppeteer from 'puppeteer';

|

|

145

|

+

import { CompanyTypes, createScraper } from 'israeli-bank-scrapers';

|

|

146

|

+

|

|

147

|

+

const browser = await puppeteer.launch();

|

|

148

|

+

const options = {

|

|

149

|

+

companyId: CompanyTypes.leumi,

|

|

150

|

+

startDate: new Date('2020-05-01'),

|

|

151

|

+

browser,

|

|

152

|

+

skipCloseBrowser: true, // Or false [default] if you want it to auto-close

|

|

153

|

+

};

|

|

154

|

+

const scraper = createScraper(options);

|

|

155

|

+

const scrapeResult = await scraper.scrape({ username: 'vr29485', password: 'sometingsomething' });

|

|

156

|

+

await browser.close(); // Or not if `skipCloseBrowser` is false

|

|

157

|

+

```

|

|

158

|

+

|

|

159

|

+

### ExternalBrowserContextOptions

|

|

160

|

+

|

|

161

|

+

This option allows you to provide a [browser context](https://pptr.dev/api/puppeteer.browsercontext). This is useful if you don't want to share cookies with other scrapers (i.e. multiple parallel runs of the same scraper with different users) without creating a new browser for each scraper.

|

|

162

|

+

|

|

163

|

+

Example:

|

|

164

|

+

|

|

165

|

+

```typescript

|

|

166

|

+

import puppeteer from 'puppeteer';

|

|

167

|

+

import { CompanyTypes, createScraper } from 'israeli-bank-scrapers';

|

|

168

|

+

|

|

169

|

+

const browser = await puppeteer.launch();

|

|

170

|

+

const browserContext = await browser.createBrowserContext();

|

|

171

|

+

const options = {

|

|

172

|

+

companyId: CompanyTypes.leumi,

|

|

173

|

+

startDate: new Date('2020-05-01'),

|

|

174

|

+

browserContext

|

|

175

|

+

};

|

|

176

|

+

const scraper = createScraper(options);

|

|

177

|

+

const scrapeResult = await scraper.scrape({ username: 'vr29485', password: 'sometingsomething' });

|

|

178

|

+

await browser.close();

|

|

179

|

+

```

|

|

180

|

+

|

|

181

|

+

### OptIn Features

|

|

182

|

+

|

|

183

|

+

Some scrapers support opt-in features that can be enabled by passing the `optInFeatures` option when creating the scraper.

|

|

184

|

+

Opt-in features are usually used for breaking changes that are not enabled by default to avoid breaking existing users.

|

|

185

|

+

|

|

186

|

+

See the [OptInFeatures](https://github.com/eshaham/israeli-bank-scrapers/blob/master/src/scrapers/interface.ts#:~:text=export-,type%20OptInFeatures) interface for available features.

|

|

187

|

+

|

|

188

|

+

## Two-Factor Authentication Scrapers

|

|

189

|

+

|

|

190

|

+

Some companies require two-factor authentication, and as such the scraper cannot be fully automated. When using the relevant scrapers, you have two options:

|

|

191

|

+

1. Provide an async callback that knows how to retrieve real time secrets like OTP codes.

|

|

192

|

+

2. When supported by the scraper - provide a "long term token". These are usually available if the financial provider only requires Two-Factor authentication periodically, and not on every login. You can retrieve your long term token from the relevant credit/banking app using reverse engineering and a MITM proxy, or use helper functions that are provided by some Two-Factor Auth scrapers (e.g. OneZero).

|

|

193

|

+

|

|

194

|

+

|

|

195

|

+

```node

|

|

196

|

+

import { CompanyTypes, createScraper } from 'israeli-bank-scrapers';

|

|

197

|

+

import { prompt } from 'enquirer';

|

|

198

|

+

|

|

199

|

+

// Option 1 - Provide a callback

|

|

200

|

+

|

|

201

|

+

const result = await scraper.login({

|

|

202

|

+

email: relevantAccount.credentials.email,

|

|

203

|

+

password: relevantAccount.credentials.password,

|

|

204

|

+

phoneNumber,

|

|

205

|

+

otpCodeRetriever: async () => {

|

|

206

|

+

let otpCode;

|

|

207

|

+

while (!otpCode) {

|

|

208

|

+

otpCode = await questions('OTP Code?'); // `questions` is not defined in this snippet; substitute your own prompt helper

|

|

209

|

+

}

|

|

210

|

+

|

|

211

|

+

return otpCode[0];

|

|

212

|

+

}

|

|

213

|

+

});

|

|

214

|

+

|

|

215

|

+

// Option 2 - Retrieve a long term otp token (OneZero)

|

|

216

|

+

await scraper.triggerTwoFactorAuth(phoneNumber);

|

|

217

|

+

|

|

218

|

+

// OTP is sent, retrieve it somehow

|

|

219

|

+

const otpCode='...';

|

|

220

|

+

|

|

221

|

+

const result = await scraper.getLongTermTwoFactorToken(otpCode);

|

|

222

|

+

/*

|

|

223

|

+

result = {

|

|

224

|

+

success: true;

|

|

225

|

+

longTermTwoFactorAuthToken: 'eyJraWQiOiJiNzU3OGM5Yy0wM2YyLTRkMzktYjBm...';

|

|

226

|

+

}

|

|

227

|

+

*/

|

|

228

|

+

```

|
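The callback in Option 1 loops until a code is entered. A bounded variant might look like the sketch below, which assumes (as in the example above) that `otpCodeRetriever` takes no arguments and resolves to a string. `makeOtpRetriever` and `AskForCode` are hypothetical names, and `fakeAsk` stands in for a real prompt.

```typescript
// `ask` is any async function that obtains a code from the user (console prompt, SMS hook, ...).
type AskForCode = () => Promise<string>;

// Build a retriever that retries on empty input and gives up after maxAttempts.
function makeOtpRetriever(ask: AskForCode, maxAttempts = 3): () => Promise<string> {
  return async () => {
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      const code = (await ask()).trim();
      if (code.length > 0) {
        return code;
      }
    }
    throw new Error(`No OTP code received after ${maxAttempts} attempts`);
  };
}

// Stand-in for a real prompt: empty on the first call, then a code.
const answers = ['', ' 123456 '];
const fakeAsk: AskForCode = async () => answers.shift() ?? '';
const otpCodeRetriever = makeOtpRetriever(fakeAsk);
```

Giving up after a few attempts keeps an unattended run from hanging forever when nobody is available to enter the code.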

|

229

|

+

|

|

230

|

+

# Getting deployed version of latest changes in master

|

|

231

|

+

This library is deployed automatically to NPM with any change merged into the master branch.

|

|

232

|

+

|

|

233

|

+

# `Israeli-bank-scrapers-core` library

|

|

234

|

+

|

|

235

|

+

> TL;DR this is the same library as the default library. The only difference is that it is using `puppeteer-core` instead of `puppeteer` which is useful if you are using frameworks like Electron to pack your application.

|

|

236

|

+

>

|

|

237

|

+

> In most cases you will probably want to use the default library (read [Getting Started](#getting-started) section).

|

|

238

|

+

|

|

239

|

+

The Israeli bank scrapers library is published in two variations:

|

|

240

|

+

1. [israeli-bank-scrapers](https://www.npmjs.com/package/israeli-bank-scrapers) - the default variation, great for common usage as a Node dependency in a server application or CLI.

|

|

241

|

+

2. [israeli-bank-scrapers-core](https://www.npmjs.com/package/israeli-bank-scrapers-core) - extremely useful for applications that bundle `node_modules` like Electron applications.

|

|

242

|

+

|

|

243

|

+

## Differences between default and core variations

|

|

244

|

+

|

|

245

|

+

The default variation [israeli-bank-scrapers](https://www.npmjs.com/package/israeli-bank-scrapers) uses [puppeteer](https://www.npmjs.com/package/puppeteer), which handles the installation of a local Chromium on its own. This is handy because it takes care of the hard work of figuring out which Chromium build to download and managing the download itself. As a side effect, it increases the size of `node_modules` by several hundred megabytes.

|

|

246

|

+

|

|

247

|

+

The core variation [israeli-bank-scrapers-core](https://www.npmjs.com/package/israeli-bank-scrapers-core) uses [puppeteer-core](https://www.npmjs.com/package/puppeteer-core), which is exactly the same library as `puppeteer` except that it doesn't download Chromium when installed by npm. It is up to you to make sure the specific version of Chromium is installed locally and to provide a path to that version. This is useful in Electron applications because it doesn't bloat the application size, and you can provide a much friendlier experience, such as loading the application first and downloading Chromium later when needed.

|

|

248

|

+

|

|

249

|

+

To install `israeli-bank-scrapers-core`:

|

|

250

|

+

```sh

|

|

251

|

+

npm install israeli-bank-scrapers-core --save

|

|

252

|

+

```

|

|

253

|

+

|

|

254

|

+

## Getting chromium version used by puppeteer-core

|

|

255

|

+

When using `israeli-bank-scrapers-core`, it is up to you to make sure the relevant chromium version exists. You must:

|

|

256

|

+

1. query for the specific chromium revision required by the `puppeteer-core` library being used.

|

|

257

|

+

2. make sure that you have a local version of that revision.

|

|

258

|

+

3. provide an absolute path to `israeli-bank-scrapers-core` scrapers.

|

|

259

|

+

|

|

260

|

+

Please read the following to learn more about the process:

|

|

261

|

+

1. To get the required chromium revision use the following code:

|

|

262

|

+

```

|

|

263

|

+

import { getPuppeteerConfig } from 'israeli-bank-scrapers-core';

|

|

264

|

+

|

|

265

|

+

const chromiumVersion = getPuppeteerConfig().chromiumRevision;

|

|

266

|

+

```

|

|

267

|

+

|

|

268

|

+

2. Once you have the chromium revision, you can either download it manually or use other libraries like [download-chromium](https://www.npmjs.com/package/download-chromium) to fetch that version. The mentioned library is very handy as it caches the download and provides useful helpers like download progress information.

|

|

269

|

+

|

|

270

|

+

3. Provide the path to chromium to the library using the option key `executablePath`.

|

|

271

|

+

|

|

272

|

+

# Specific definitions per scraper

|

|

273

|

+

|

|

274

|

+

## Bank Hapoalim scraper

|

|

275

|

+

This scraper expects the following credentials object:

|

|

276

|

+

```node

|

|

277

|

+

const credentials = {

|

|

278

|

+

userCode: <user identification code>,

|

|

279

|

+

password: <user password>

|

|

280

|

+

};

|

|

281

|

+

```

|

|

282

|

+

This scraper supports fetching transactions from up to one year back.

|
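Several scrapers in this section state a maximum look-back window (one year, or six months for Yahav). How the library reacts to an older `startDate` is not described here, so a caller may want to clamp the date up front. The following is a minimal sketch under that assumption; `clampStartDate` is a hypothetical helper, not a library function.

```typescript
// Return the later of the requested start date and (now minus maxMonthsBack months).
// Caveat: plain month arithmetic overflows at month ends (e.g. 31 March minus one month).
function clampStartDate(requested: Date, now: Date, maxMonthsBack: number): Date {
  const earliest = new Date(Date.UTC(
    now.getUTCFullYear(),
    now.getUTCMonth() - maxMonthsBack,
    now.getUTCDate(),
  ));
  return requested.getTime() < earliest.getTime() ? earliest : requested;
}

// Fixed "now" so the example is deterministic.
const now = new Date('2021-06-15T00:00:00Z');
const tooOld = clampStartDate(new Date('2019-01-01T00:00:00Z'), now, 12); // clamped to 2020-06-15
const recent = clampStartDate(new Date('2021-01-01T00:00:00Z'), now, 12); // returned unchanged
```

The clamped value can then be passed as `startDate` in the scraper options; use `6` instead of `12` for a six-month window.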

|

283

|

+

|

|

284

|

+

## Bank Leumi scraper

|

|

285

|

+

This scraper expects the following credentials object:

|

|

286

|

+

```node

|

|

287

|

+

const credentials = {

|

|

288

|

+

username: <user name>,

|

|

289

|

+

password: <user password>

|

|

290

|

+

};

|

|

291

|

+

```

|

|

292

|

+

This scraper supports fetching transactions from up to one year back.

|

|

293

|

+

|

|

294

|

+

## Discount scraper

|

|

295

|

+

This scraper expects the following credentials object:

|

|

296

|

+

```node

|

|

297

|

+

const credentials = {

|

|

298

|

+

id: <user identification number>,

|

|

299

|

+

password: <user password>,

|

|

300

|

+

num: <user identification code>

|

|

301

|

+

};

|

|

302

|

+

```

|

|

303

|

+

This scraper supports fetching transactions from up to one year back (minus 1 day).

|

|

304

|

+

|

|

305

|

+

## Mercantile scraper

|

|

306

|

+

This scraper expects the following credentials object:

|

|

307

|

+

```node

|

|

308

|

+

const credentials = {

|

|

309

|

+

id: <user identification number>,

|

|

310

|

+

password: <user password>,

|

|

311

|

+

num: <user identification code>

|

|

312

|

+

};

|

|

313

|

+

```

|

|

314

|

+

This scraper supports fetching transactions from up to one year back (minus 1 day).

|

|

315

|

+

|

|

316

|

+

|

|

317

|

+

### Known Limitations

|

|

318

|

+

- Missing memo field

|

|

319

|

+

|

|

320

|

+

## Mizrahi scraper

|

|

321

|

+

This scraper expects the following credentials object:

|

|

322

|

+

```node

|

|

323

|

+

const credentials = {

|

|

324

|

+

username: <user identification number>,

|

|

325

|

+

password: <user password>

|

|

326

|

+

};

|

|

327

|

+

```

|

|

328

|

+

This scraper supports fetching transactions from up to one year back.

|

|

329

|

+

|

|

330

|

+

## Beinleumi & Massad

|

|

331

|

+

These scrapers are essentially identical and expect the following credentials object:

|

|

332

|

+

```node

|

|

333

|

+

const credentials = {

|

|

334

|

+

username: <user name>,

|

|

335

|

+

password: <user password>

|

|

336

|

+

};

|

|

337

|

+

```

|

|

338

|

+

|

|

339

|

+

## Bank Otsar Hahayal scraper

|

|

340

|

+

This scraper expects the following credentials object:

|

|

341

|

+

```node

|

|

342

|

+

const credentials = {

|

|

343

|

+

username: <user name>,

|

|

344

|

+

password: <user password>

|

|

345

|

+

};

|

|

346

|

+

```

|

|

347

|

+

This scraper supports fetching transactions from up to one year back.

|

|

348

|

+

|

|

349

|

+

## Visa Cal scraper

|

|

350

|

+

This scraper expects the following credentials object:

|

|

351

|

+

```node

|

|

352

|

+

const credentials = {

|

|

353

|

+

username: <user name>,

|

|

354

|

+

password: <user password>

|

|

355

|

+

};

|

|

356

|

+

```

|

|

357

|

+

This scraper supports fetching transactions from up to one year back.

|

|

358

|

+

|

|

359

|

+

## Max scraper (Formerly Leumi-Card)

|

|

360

|

+

This scraper expects the following credentials object:

|

|

361

|

+

```node

|

|

362

|

+

const credentials = {

|

|

363

|

+

username: <user name>,

|

|

364

|

+

password: <user password>

|

|

365

|

+

};

|

|

366

|

+

```

|

|

367

|

+

This scraper supports fetching transactions from up to one year back.

|

|

368

|

+

|

|

369

|

+

## Isracard scraper

|

|

370

|

+

This scraper expects the following credentials object:

|

|

371

|

+

```node

|

|

372

|

+

const credentials = {

|

|

373

|

+

id: <user identification number>,

|

|

374

|

+

card6Digits: <6 last digits of card>,

|

|

375

|

+

password: <user password>

|

|

376

|

+

};

|

|

377

|

+

```

|

|

378

|

+

This scraper supports fetching transactions from up to one year back.

|

|

379

|

+

|

|

380

|

+

## Amex scraper

|

|

381

|

+

This scraper expects the following credentials object:

|

|

382

|

+

```node

|

|

383

|

+

const credentials = {

|

|

384

|

+

username: <user identification number>,

|

|

385

|

+

card6Digits: <6 last digits of card>,

|

|

386

|

+

password: <user password>

|

|

387

|

+

};

|

|

388

|

+

```

|

|

389

|

+

This scraper supports fetching transactions from up to one year back.

|

|

390

|

+

|

|

391

|

+

## Yahav

|

|

392

|

+

This scraper expects the following credentials object:

|

|

393

|

+

```node

|

|

394

|

+

const credentials = {

|

|

395

|

+

username: <user name>,

|

|

396

|

+

password: <user password>,

|

|

397

|

+

nationalID: <user national ID>

|

|

398

|

+

};

|

|

399

|

+

```

|

|

400

|

+

This scraper supports fetching transactions from up to six months back.

|

|

401

|

+

|

|

402

|

+

## Beyhad Bishvilha

|

|

403

|

+

This scraper expects the following credentials object:

|

|

404

|

+

```node

|

|

405

|

+

const credentials = {

|

|

406

|

+

id: <user identification number>,

|

|

407

|

+

password: <user password>

|

|

408

|

+

};

|

|

409

|

+

```

|
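The per-scraper credential objects above can be summarized in a single lookup table. The sketch below transcribes the field names from these sections; the keys are illustrative labels and not necessarily the library's `CompanyTypes` values, and scrapers without a dedicated section here are omitted.

```typescript
// Required credential fields per scraper, transcribed from the README sections above.
// The keys are illustrative labels, not necessarily the library's CompanyTypes values.
const requiredCredentialFields: Record<string, readonly string[]> = {
  hapoalim: ['userCode', 'password'],
  leumi: ['username', 'password'],
  discount: ['id', 'password', 'num'],
  mercantile: ['id', 'password', 'num'],
  mizrahi: ['username', 'password'],
  beinleumi: ['username', 'password'],
  massad: ['username', 'password'],
  otsarHahayal: ['username', 'password'],
  visaCal: ['username', 'password'],
  max: ['username', 'password'],
  isracard: ['id', 'card6Digits', 'password'],
  amex: ['username', 'card6Digits', 'password'],
  yahav: ['username', 'password', 'nationalID'],
  beyahadBishvilha: ['id', 'password'],
};

// List the scrapers whose credentials object includes a given field.
function scrapersRequiring(field: string): string[] {
  return Object.entries(requiredCredentialFields)
    .filter(([, fields]) => fields.includes(field))
    .map(([label]) => label);
}

const cardScrapers = scrapersRequiring('card6Digits'); // ['isracard', 'amex']
```

A table like this makes it easy to see which scrapers share a credentials shape and which need an extra field such as `num` or `nationalID`.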

|

410

|

+

|

|

411

|

+

# Known projects

|

|

412

|

+

These are the projects known to be using this module:

|

|

413

|

+

- [Israeli YNAB updater](https://github.com/eshaham/israeli-ynab-updater) - A command line tool for exporting banks data to CSVs, formatted specifically for [YNAB](https://www.youneedabudget.com)

|

|

414

|

+

- [Caspion](https://github.com/brafdlog/caspion) - An app for automatically sending transactions from Israeli banks and credit cards to budget tracking apps

|

|

415

|

+

- [Finance Notifier](https://github.com/LiranBri/finance-notifier) - A simple script with the ability to send custom financial alerts to multiple contacts and platforms

|

|

416

|

+

- [Moneyman](https://github.com/daniel-hauser/moneyman) - Automatically save transactions from all major Israeli banks and credit card companies, using GitHub actions (or a self hosted docker image)

|

|

417

|

+

- [Firefly iii Importer](https://github.com/itairaz1/israeli-bank-firefly-importer) - A tool to import your banks data into [Firefly iii](https://www.firefly-iii.org/), a free and open source financial manager.

|

|

418

|

+

- [Actual Budget Importer](https://github.com/tomerh2001/israeli-banks-actual-budget-importer) - A tool to import your banks data into [Actual Budget](https://actualbudget.com/), a free and open source financial manager.

|

|

419

|

+

- [Clarify](https://github.com/tomyweiss/clarify-expences) - A full-stack personal finance app for tracking income and expenses.

|

|

420

|

+

- [Asher MCP](https://github.com/shlomiuziel/asher-mcp) - Scrape & access your financial data with LLM using the Model Context Protocol.

|

|

421

|

+

|

|

422

|

+

Built something interesting you want to share here? [Let me know](https://goo.gl/forms/5Fb9JAjvzMIpmzqo2).

|

|

423

|

+

|

|

424

|

+

# License

|

|

425

|

+

The MIT License

|

package/lib/assertNever.d.ts

ADDED

@@ -0,0 +1 @@

| Line | Change | Content |
| --- | --- | --- |
| 1 | + | `export declare function assertNever(x: never, error?: string): never;` |

|

@@ -0,0 +1,10 @@

|

|

|

1

|

+

"use strict";

|

|

2

|

+

|

|

3

|

+

Object.defineProperty(exports, "__esModule", {

|

|

4

|

+

value: true

|

|

5

|

+

});

|

|

6

|

+

exports.assertNever = assertNever;

|

|

7

|

+

function assertNever(x, error = '') {

|

|

8

|

+

throw new Error(error || `Unexpected object: ${x}`);

|

|

9

|

+

}

|

|

10

|

+

//# sourceMappingURL=data:application/json;charset=utf-8;base64,eyJ2ZXJzaW9uIjozLCJuYW1lcyI6WyJhc3NlcnROZXZlciIsIngiLCJlcnJvciIsIkVycm9yIl0sInNvdXJjZXMiOlsiLi4vc3JjL2Fzc2VydE5ldmVyLnRzIl0sInNvdXJjZXNDb250ZW50IjpbImV4cG9ydCBmdW5jdGlvbiBhc3NlcnROZXZlcih4OiBuZXZlciwgZXJyb3IgPSAnJyk6IG5ldmVyIHtcbiAgdGhyb3cgbmV3IEVycm9yKGVycm9yIHx8IGBVbmV4cGVjdGVkIG9iamVjdDogJHt4IGFzIGFueX1gKTtcbn1cbiJdLCJtYXBwaW5ncyI6Ijs7Ozs7O0FBQU8sU0FBU0EsV0FBV0EsQ0FBQ0MsQ0FBUSxFQUFFQyxLQUFLLEdBQUcsRUFBRSxFQUFTO0VBQ3ZELE1BQU0sSUFBSUMsS0FBSyxDQUFDRCxLQUFLLElBQUksc0JBQXNCRCxDQUFDLEVBQVMsQ0FBQztBQUM1RCIsImlnbm9yZUxpc3QiOltdfQ==

package/lib/constants.d.ts

ADDED

@@ -0,0 +1,10 @@
+export declare const SHEKEL_CURRENCY_SYMBOL = "\u20AA";
+export declare const SHEKEL_CURRENCY_KEYWORD = "\u05E9\"\u05D7";
+export declare const ALT_SHEKEL_CURRENCY = "NIS";
+export declare const SHEKEL_CURRENCY = "ILS";
+export declare const DOLLAR_CURRENCY_SYMBOL = "$";
+export declare const DOLLAR_CURRENCY = "USD";
+export declare const EURO_CURRENCY_SYMBOL = "\u20AC";
+export declare const EURO_CURRENCY = "EUR";
+export declare const ISO_DATE_FORMAT = "YYYY-MM-DD[T]HH:mm:ss.SSS[Z]";
+export declare const ISO_DATE_REGEX: RegExp;


package/lib/constants.js

ADDED

@@ -0,0 +1,17 @@
+"use strict";
+
+Object.defineProperty(exports, "__esModule", {
+  value: true
+});
+exports.SHEKEL_CURRENCY_SYMBOL = exports.SHEKEL_CURRENCY_KEYWORD = exports.SHEKEL_CURRENCY = exports.ISO_DATE_REGEX = exports.ISO_DATE_FORMAT = exports.EURO_CURRENCY_SYMBOL = exports.EURO_CURRENCY = exports.DOLLAR_CURRENCY_SYMBOL = exports.DOLLAR_CURRENCY = exports.ALT_SHEKEL_CURRENCY = void 0;
+const SHEKEL_CURRENCY_SYMBOL = exports.SHEKEL_CURRENCY_SYMBOL = '₪';
+const SHEKEL_CURRENCY_KEYWORD = exports.SHEKEL_CURRENCY_KEYWORD = 'ש"ח';
+const ALT_SHEKEL_CURRENCY = exports.ALT_SHEKEL_CURRENCY = 'NIS';
+const SHEKEL_CURRENCY = exports.SHEKEL_CURRENCY = 'ILS';
+const DOLLAR_CURRENCY_SYMBOL = exports.DOLLAR_CURRENCY_SYMBOL = '$';
+const DOLLAR_CURRENCY = exports.DOLLAR_CURRENCY = 'USD';
+const EURO_CURRENCY_SYMBOL = exports.EURO_CURRENCY_SYMBOL = '€';
+const EURO_CURRENCY = exports.EURO_CURRENCY = 'EUR';
+const ISO_DATE_FORMAT = exports.ISO_DATE_FORMAT = 'YYYY-MM-DD[T]HH:mm:ss.SSS[Z]';
+const ISO_DATE_REGEX = exports.ISO_DATE_REGEX = /^[0-9]{4}-(0[1-9]|1[0-2])-(0[1-9]|[1-2][0-9]|3[0-1])T([0-1][0-9]|2[0-3])(:[0-5][0-9]){2}\.[0-9]{3}Z$/;

+

//# sourceMappingURL=data:application/json;charset=utf-8;base64,eyJ2ZXJzaW9uIjozLCJuYW1lcyI6WyJTSEVLRUxfQ1VSUkVOQ1lfU1lNQk9MIiwiZXhwb3J0cyIsIlNIRUtFTF9DVVJSRU5DWV9LRVlXT1JEIiwiQUxUX1NIRUtFTF9DVVJSRU5DWSIsIlNIRUtFTF9DVVJSRU5DWSIsIkRPTExBUl9DVVJSRU5DWV9TWU1CT0wiLCJET0xMQVJfQ1VSUkVOQ1kiLCJFVVJPX0NVUlJFTkNZX1NZTUJPTCIsIkVVUk9fQ1VSUkVOQ1kiLCJJU09fREFURV9GT1JNQVQiLCJJU09fREFURV9SRUdFWCJdLCJzb3VyY2VzIjpbIi4uL3NyYy9jb25zdGFudHMudHMiXSwic291cmNlc0NvbnRlbnQiOlsiZXhwb3J0IGNvbnN0IFNIRUtFTF9DVVJSRU5DWV9TWU1CT0wgPSAn4oKqJztcbmV4cG9ydCBjb25zdCBTSEVLRUxfQ1VSUkVOQ1lfS0VZV09SRCA9ICfXqVwi15cnO1xuZXhwb3J0IGNvbnN0IEFMVF9TSEVLRUxfQ1VSUkVOQ1kgPSAnTklTJztcbmV4cG9ydCBjb25zdCBTSEVLRUxfQ1VSUkVOQ1kgPSAnSUxTJztcblxuZXhwb3J0IGNvbnN0IERPTExBUl9DVVJSRU5DWV9TWU1CT0wgPSAnJCc7XG5leHBvcnQgY29uc3QgRE9MTEFSX0NVUlJFTkNZID0gJ1VTRCc7XG5cbmV4cG9ydCBjb25zdCBFVVJPX0NVUlJFTkNZX1NZTUJPTCA9ICfigqwnO1xuZXhwb3J0IGNvbnN0IEVVUk9fQ1VSUkVOQ1kgPSAnRVVSJztcblxuZXhwb3J0IGNvbnN0IElTT19EQVRFX0ZPUk1BVCA9ICdZWVlZLU1NLUREW1RdSEg6bW06c3MuU1NTW1pdJztcblxuZXhwb3J0IGNvbnN0IElTT19EQVRFX1JFR0VYID1cbiAgL15bMC05XXs0fS0oMFsxLTldfDFbMC0yXSktKDBbMS05XXxbMS0yXVswLTldfDNbMC0xXSlUKFswLTFdWzAtOV18MlswLTNdKSg6WzAtNV1bMC05XSl7Mn1cXC5bMC05XXszfVokLztcbiJdLCJtYXBwaW5ncyI6Ijs7Ozs7O0FBQU8sTUFBTUEsc0JBQXNCLEdBQUFDLE9BQUEsQ0FBQUQsc0JBQUEsR0FBRyxHQUFHO0FBQ2xDLE1BQU1FLHVCQUF1QixHQUFBRCxPQUFBLENBQUFDLHVCQUFBLEdBQUcsS0FBSztBQUNyQyxNQUFNQyxtQkFBbUIsR0FBQUYsT0FBQSxDQUFBRSxtQkFBQSxHQUFHLEtBQUs7QUFDakMsTUFBTUMsZUFBZSxHQUFBSCxPQUFBLENBQUFHLGVBQUEsR0FBRyxLQUFLO0FBRTdCLE1BQU1DLHNCQUFzQixHQUFBSixPQUFBLENBQUFJLHNCQUFBLEdBQUcsR0FBRztBQUNsQyxNQUFNQyxlQUFlLEdBQUFMLE9BQUEsQ0FBQUssZUFBQSxHQUFHLEtBQUs7QUFFN0IsTUFBTUMsb0JBQW9CLEdBQUFOLE9BQUEsQ0FBQU0sb0JBQUEsR0FBRyxHQUFHO0FBQ2hDLE1BQU1DLGFBQWEsR0FBQVAsT0FBQSxDQUFBTyxhQUFBLEdBQUcsS0FBSztBQUUzQixNQUFNQyxlQUFlLEdBQUFSLE9BQUEsQ0FBQVEsZUFBQSxHQUFHLDhCQUE4QjtBQUV0RCxNQUFNQyxjQUFjLEdBQUFULE9BQUEsQ0FBQVMsY0FBQSxHQUN6QixzR0FBc0ciLCJpZ25vcmVMaXN0IjpbXX0=
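
`ISO_DATE_REGEX` checks the shape of a UTC timestamp (four-digit year, millisecond precision, trailing `Z`); it does not check that the day exists in the given month. A minimal sketch of how it might be used, with the regex copied from the diff and `looksLikeIsoDate` as a hypothetical helper name that is not part of the package:

```typescript
// Copied verbatim from constants.js in the diff.
const ISO_DATE_REGEX =
  /^[0-9]{4}-(0[1-9]|1[0-2])-(0[1-9]|[1-2][0-9]|3[0-1])T([0-1][0-9]|2[0-3])(:[0-5][0-9]){2}\.[0-9]{3}Z$/;

// Hypothetical helper: true when the string has the expected ISO shape.
function looksLikeIsoDate(value: string): boolean {
  return ISO_DATE_REGEX.test(value);
}

// Date.prototype.toISOString() produces this shape for four-digit years.
const fromDate = looksLikeIsoDate(new Date(0).toISOString());

// A date-only string lacks the time part and is rejected.
const dateOnly = looksLikeIsoDate('2024-01-15');

// Month 13 is rejected, but the pattern is purely syntactic:
// February 31st passes because any month may carry days 01-31.
const badMonth = looksLikeIsoDate('2024-13-01T00:00:00.000Z');
const impossibleDay = looksLikeIsoDate('2024-02-31T00:00:00.000Z');
```

Callers that need calendar validity would have to parse the value as well; the regex alone only guards the format.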

package/lib/definitions.d.ts

ADDED

@@ -0,0 +1,105 @@
+export declare const PASSWORD_FIELD = "password";
+export declare enum CompanyTypes {
+    hapoalim = "hapoalim",
+    beinleumi = "beinleumi",
+    union = "union",
+    amex = "amex",
+    isracard = "isracard",
+    visaCal = "visaCal",
+    max = "max",
+    otsarHahayal = "otsarHahayal",
+    discount = "discount",
+    mercantile = "mercantile",
+    mizrahi = "mizrahi",
+    leumi = "leumi",
+    massad = "massad",
+    yahav = "yahav",
+    behatsdaa = "behatsdaa",
+    beyahadBishvilha = "beyahadBishvilha",
+    oneZero = "oneZero",
+    pagi = "pagi"
+}
+export declare const SCRAPERS: {
+    hapoalim: {
+        name: string;
+        loginFields: string[];
+    };
+    leumi: {
+        name: string;
+        loginFields: string[];
+    };
+    mizrahi: {
+        name: string;
+        loginFields: string[];
+    };
+    discount: {
+        name: string;
+        loginFields: string[];
+    };
+    mercantile: {
+        name: string;
+        loginFields: string[];
+    };
+    otsarHahayal: {
+        name: string;
+        loginFields: string[];
+    };
+    max: {
+        name: string;
+        loginFields: string[];
+    };
+    visaCal: {
+        name: string;
+        loginFields: string[];
+    };
+    isracard: {
+        name: string;
+        loginFields: string[];
+    };
+    amex: {
+        name: string;
+        loginFields: string[];
+    };
+    union: {
+        name: string;
+        loginFields: string[];
+    };
+    beinleumi: {
+        name: string;
+        loginFields: string[];
+    };
+    massad: {
+        name: string;
+        loginFields: string[];
+    };
+    yahav: {
+        name: string;
+        loginFields: string[];
+    };
+    beyahadBishvilha: {
+        name: string;
+        loginFields: string[];
+    };
+    oneZero: {
+        name: string;
+        loginFields: string[];
+    };
+    behatsdaa: {
+        name: string;
+        loginFields: string[];
+    };
+    pagi: {
+        name: string;
+        loginFields: string[];
+    };
+};
+export declare enum ScraperProgressTypes {
+    Initializing = "INITIALIZING",
+    StartScraping = "START_SCRAPING",
+    LoggingIn = "LOGGING_IN",
+    LoginSuccess = "LOGIN_SUCCESS",
+    LoginFailed = "LOGIN_FAILED",
+    ChangePassword = "CHANGE_PASSWORD",
+    EndScraping = "END_SCRAPING",
+    Terminating = "TERMINATING"
+}
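
Each `SCRAPERS` entry declared above has a `name` and a `loginFields` list, which suggests a caller could check a credentials object against that list before starting a scraper. The sketch below assumes that usage. The declaration file does not reveal the real field values, so `exampleDefinition` holds placeholder data, and `ScraperDefinition` and `missingLoginFields` are hypothetical names that are not part of the package.

```typescript
// Same value as the PASSWORD_FIELD declaration in the diff.
const PASSWORD_FIELD = 'password';

// Mirrors the shape of each SCRAPERS entry in the declaration file.
interface ScraperDefinition {
  name: string;
  loginFields: string[];
}

// Placeholder entry for illustration; real names and fields are not shown in the .d.ts.
const exampleDefinition: ScraperDefinition = {
  name: 'Example Bank',
  loginFields: ['username', PASSWORD_FIELD],
};

// Hypothetical helper: returns the login fields that are absent or empty.
function missingLoginFields(
  definition: ScraperDefinition,
  credentials: Record<string, string | undefined>,
): string[] {
  return definition.loginFields.filter((field) => !credentials[field]);
}

const incomplete = missingLoginFields(exampleDefinition, { username: 'alice' });
const complete = missingLoginFields(exampleDefinition, {
  username: 'alice',
  password: 'secret',
});
```

Here `incomplete` lists the `password` field and `complete` is empty, so a caller can fail early with a clear message instead of launching a browser session with partial credentials.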