@pgflow/edge-worker 0.0.5 → 0.0.7-prealpha.1

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/package.json +10 -4

- package/.envrc +0 -2

- package/CHANGELOG.md +0 -10

- package/deno.lock +0 -336

- package/deno.test.json +0 -32

- package/dist/LICENSE.md +0 -660

- package/dist/README.md +0 -46

- package/dist/index.js +0 -972

- package/dist/index.js.map +0 -7

- package/mod.ts +0 -7

- package/pkgs/edge-worker/dist/index.js +0 -953

- package/pkgs/edge-worker/dist/index.js.map +0 -7

- package/pkgs/edge-worker/dist/pkgs/edge-worker/LICENSE.md +0 -660

- package/pkgs/edge-worker/dist/pkgs/edge-worker/README.md +0 -46

- package/project.json +0 -164

- package/scripts/concatenate-migrations.sh +0 -22

- package/scripts/wait-for-localhost +0 -17

- package/sql/990_active_workers.sql +0 -11

- package/sql/991_inactive_workers.sql +0 -12

- package/sql/992_spawn_worker.sql +0 -68

- package/sql/benchmarks/max_concurrency.sql +0 -32

- package/sql/queries/debug_connections.sql +0 -0

- package/sql/queries/debug_processing_gaps.sql +0 -115

- package/src/EdgeWorker.ts +0 -172

- package/src/core/BatchProcessor.ts +0 -38

- package/src/core/ExecutionController.ts +0 -51

- package/src/core/Heartbeat.ts +0 -23

- package/src/core/Logger.ts +0 -42

- package/src/core/Queries.ts +0 -44

- package/src/core/Worker.ts +0 -102

- package/src/core/WorkerLifecycle.ts +0 -93

- package/src/core/WorkerState.ts +0 -85

- package/src/core/types.ts +0 -47

- package/src/flow/FlowWorkerLifecycle.ts +0 -81

- package/src/flow/StepTaskExecutor.ts +0 -87

- package/src/flow/StepTaskPoller.ts +0 -51

- package/src/flow/createFlowWorker.ts +0 -105

- package/src/flow/types.ts +0 -1

- package/src/index.ts +0 -15

- package/src/queue/MessageExecutor.ts +0 -105

- package/src/queue/Queue.ts +0 -92

- package/src/queue/ReadWithPollPoller.ts +0 -35

- package/src/queue/createQueueWorker.ts +0 -145

- package/src/queue/types.ts +0 -14

- package/src/spawnNewEdgeFunction.ts +0 -33

- package/supabase/call +0 -23

- package/supabase/cli +0 -3

- package/supabase/config.toml +0 -42

- package/supabase/functions/cpu_intensive/index.ts +0 -20

- package/supabase/functions/creating_queue/index.ts +0 -5

- package/supabase/functions/failing_always/index.ts +0 -13

- package/supabase/functions/increment_sequence/index.ts +0 -14

- package/supabase/functions/max_concurrency/index.ts +0 -17

- package/supabase/functions/serial_sleep/index.ts +0 -16

- package/supabase/functions/utils.ts +0 -13

- package/supabase/seed.sql +0 -2

- package/tests/db/compose.yaml +0 -20

- package/tests/db.ts +0 -71

- package/tests/e2e/README.md +0 -54

- package/tests/e2e/_helpers.ts +0 -135

- package/tests/e2e/performance.test.ts +0 -60

- package/tests/e2e/restarts.test.ts +0 -56

- package/tests/helpers.ts +0 -22

- package/tests/integration/_helpers.ts +0 -43

- package/tests/integration/creating_queue.test.ts +0 -32

- package/tests/integration/flow/minimalFlow.test.ts +0 -121

- package/tests/integration/maxConcurrent.test.ts +0 -76

- package/tests/integration/retries.test.ts +0 -78

- package/tests/integration/starting_worker.test.ts +0 -35

- package/tests/sql.ts +0 -46

- package/tests/unit/WorkerState.test.ts +0 -74

- package/tsconfig.lib.json +0 -23

|

@@ -1,46 +0,0 @@

|

|

|

1

|

-

<div align="center">

|

|

2

|

-

<h1>Edge Worker</h1>

|

|

3

|

-

<a href="https://pgflow.dev">

|

|

4

|

-

<h3>📚 Documentation @ pgflow.dev</h3>

|

|

5

|

-

</a>

|

|

6

|

-

|

|

7

|

-

<h4>⚠️ <strong>ADVANCED PROOF of CONCEPT - NOT PRODUCTION READY</strong> ⚠️</h4>

|

|

8

|

-

</div>

|

|

9

|

-

|

|

10

|

-

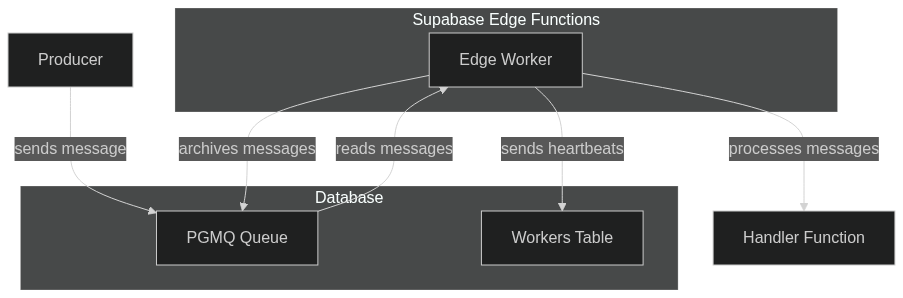

A task queue worker for Supabase Edge Functions that extends background tasks with useful features.

|

|

11

|

-

|

|

12

|

-

> [!NOTE]

|

|

13

|

-

> This project is licensed under [AGPL v3](./LICENSE.md) license and is part of **pgflow** stack.

|

|

14

|

-

> See [LICENSING_OVERVIEW.md](../../LICENSING_OVERVIEW.md) in root of this monorepo for more details.

|

|

15

|

-

|

|

16

|

-

## What is Edge Worker?

|

|

17

|

-

|

|

18

|

-

Edge Worker processes messages from a queue and executes user-defined functions with their payloads. It builds upon [Supabase Background Tasks](https://supabase.com/docs/guides/functions/background-tasks) to add reliability features like retries, concurrency control and monitoring.

|

|

19

|

-

|

|

20

|

-

## Key Features

|

|

21

|

-

|

|

22

|

-

- ⚡ **Reliable Processing**: Retries with configurable delays

|

|

23

|

-

- 🔄 **Concurrency Control**: Limit parallel task execution

|

|

24

|

-

- 📊 **Observability**: Built-in heartbeats and logging

|

|

25

|

-

- 📈 **Horizontal Scaling**: Deploy multiple edge functions for the same queue

|

|

26

|

-

- 🛡️ **Edge-Native**: Designed for Edge Functions' CPU/clock limits

|

|

27

|

-

|

|

28

|

-

## How It Works

|

|

29

|

-

|

|

30

|

-

[](https://mermaid.live/edit#pako:eNplkcFugzAMhl8lyrl9AQ47VLBxqdSqlZAGHEziASokyEkmTaXvvoR0o1VziGL_n_9Y9pULLZEnvFItwdSxc1op5o9xTUxU_OQmaMAgy2SL7N0pYXutTMUjGU5WlItYaLog1VFAJSv14paCXdweyw8f-2MZLnZ06LBelXxXRk_DztAM-Gp9KA-kpRP-W7bdvs3Ga4aNaAy0OC_WdzD4B4IQVsLMvvkIZMUiA4mu_8ZHYjW5MxNp4dUnKC9zUHJA-h9R_VQTG-sQyDYINlTs-IaPSCP00q_gGvCK2w5HP53EPyXQJczp5jlwVp9-lOCJJYcbTtq13V_gJgkW0x78lEeefMFgfHYC9an1GqPsraZ9XPiy99svlAqmtA)

|

|

31

|

-

|

|

32

|

-

## Edge Function Optimization

|

|

33

|

-

|

|

34

|

-

Edge Worker is specifically designed to handle Edge Function limitations:

|

|

35

|

-

|

|

36

|

-

- Stops polling near CPU/clock limits

|

|

37

|

-

- Gracefully aborts pending tasks

|

|

38

|

-

- Uses PGMQ's visibility timeout to prevent message loss

|

|

39

|

-

- Auto-spawns new instances for continuous operation

|

|

40

|

-

- Monitors worker health with database heartbeats

|

|

41

|

-

|

|

42

|

-

|

|

43

|

-

## Documentation

|

|

44

|

-

|

|

45

|

-

For detailed documentation and getting started guide, visit [pgflow.dev](https://pgflow.dev).

|

|

46

|

-

|
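The README lists retries with configurable delays and PGMQ's visibility timeout among Edge Worker's features. The deleted `MessageExecutor.ts` is not shown in this diff, so the following is only a hypothetical sketch of such a retry decision. It assumes that `read_ct` counts every read of a message (the debug queries in this diff compute retries as `read_ct - 1`) and reuses the `retryLimit` and `retryDelay` option names from `EdgeWorker.ts`; `onFailure`, `RetryOptions`, and `FailureOutcome` are illustrative names.

```typescript
// Hypothetical retry decision. MessageExecutor.ts is deleted in this diff but its
// body is not shown, so this is NOT the package's actual logic.
interface RetryOptions {
  retryLimit: number; // how many times to retry a failed job
  retryDelay: number; // seconds to wait before retrying
}

type FailureOutcome =
  | { action: 'retry'; attempt: number; delaySeconds: number }
  | { action: 'give-up' };

// Assumes readCt behaves like PGMQ's read_ct: incremented on every read,
// so the retries already used are readCt - 1.
function onFailure(readCt: number, options: RetryOptions): FailureOutcome {
  const retriesUsed = readCt - 1;
  if (retriesUsed < options.retryLimit) {
    // Keep the message hidden for retryDelay seconds so it is read again later.
    return { action: 'retry', attempt: retriesUsed + 1, delaySeconds: options.retryDelay };
  }
  // Retry budget exhausted; what happens next is not shown in this diff.
  return { action: 'give-up' };
}
```

With the defaults from `EdgeWorker.ts` (`retryLimit: 5`), this model allows up to six reads of a message in total: the first attempt plus five retries.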

package/project.json

DELETED

```diff
@@ -1,164 +0,0 @@
-{
-  "name": "edge-worker",
-  "$schema": "../../node_modules/nx/schemas/project-schema.json",
-  "sourceRoot": "pkgs/edge-worker",
-  "projectType": "library",
-  "targets": {
-    "build": {
-      "executor": "@nx/esbuild:esbuild",
-      "outputs": ["{options.outputPath}"],
-      "options": {
-        "outputPath": "pkgs/edge-worker/dist",
-        "main": "pkgs/edge-worker/src/index.ts",
-        "tsConfig": "pkgs/edge-worker/tsconfig.lib.json",
-        "platform": "node",
-        "format": ["esm"],
-        "assets": ["pkgs/edge-worker/*.md"],
-        "external": ["@jsr/*", "postgres"],
-        "bundle": true,
-        "sourcemap": true
-      }
-    },
-    "test:nx-deno": {
-      "executor": "@axhxrx/nx-deno:test",
-      "outputs": ["{workspaceRoot}/coverage/pkgs/edge-worker"],
-      "options": {
-        "coverageDirectory": "coverage/pkgs/edge-worker",
-        "denoConfig": "pkgs/edge-worker/deno.json",
-        "allowNone": false,
-        "check": "local"
-      }
-    },
-    "lint": {
-      "executor": "@axhxrx/nx-deno:lint",
-      "options": {
-        "denoConfig": "pkgs/edge-worker/deno.json",
-        "ignore": "pkgs/edge-worker/supabase/functions/_src/"
-      }
-    },
-    "supabase:start": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["supabase start"],
-        "parallel": false
-      }
-    },
-    "supabase:stop": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["supabase stop --no-backup"],
-        "parallel": false
-      }
-    },
-    "supabase:status": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["supabase status"],
-        "parallel": false
-      }
-    },
-    "supabase:restart": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["supabase stop --no-backup", "supabase start"],
-        "parallel": false
-      }
-    },
-    "supabase:reset": {
-      "dependsOn": ["supabase:prepare"],
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "rm -f supabase/migrations/*.sql",
-          "cp ../core/supabase/migrations/*.sql supabase/migrations/",
-          "cp sql/*_*.sql supabase/migrations/",
-          "supabase db reset"
-        ],
-        "parallel": false
-      }
-    },
-    "supabase:prepare-edge-fn": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "rm -f supabase/functions/_src/*.ts",
-          "rm -f supabase/functions/_src/deno.*",
-          "cp -r src/* ./supabase/functions/_src/",
-          "cp deno.* ./supabase/functions/_src/",
-          "find supabase/functions -maxdepth 1 -mindepth 1 -type d -not -name '_src' -exec cp deno.* {} \\;"
-        ],
-        "parallel": false
-      }
-    },
-    "supabase:functions-serve": {
-      "dependsOn": ["supabase:start", "supabase:prepare-edge-fn"],
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "supabase functions serve --env-file supabase/functions/.env"
-        ],
-        "parallel": false
-      }
-    },
-    "db:ensure": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["deno task db:ensure"],
-        "parallel": false
-      }
-    },
-    "test:unit": {
-      "dependsOn": ["db:ensure"],
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "deno test --allow-all --env=supabase/functions/.env tests/unit/"
-        ],
-        "parallel": false
-      }
-    },
-    "test:integration": {
-      "dependsOn": ["db:ensure"],
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "deno test --allow-all --env=supabase/functions/.env tests/integration/"
-        ],
-        "parallel": false
-      }
-    },
-    "test:e2e": {
-      "dependsOn": ["supabase:prepare-edge-fn"],
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": [
-          "deno test --allow-all --env=supabase/functions/.env tests/e2e/"
-        ],
-        "parallel": false
-      }
-    },
-    "test": {
-      "dependsOn": ["test:unit", "test:integration"]
-    },
-    "jsr:publish": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "pkgs/edge-worker",
-        "commands": ["pnpm dlx jsr publish --allow-slow-types"],
-        "parallel": false
-      }
-    }
-  },
-  "tags": []
-}
```
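Several targets in the deleted `project.json` chain through `dependsOn`: `test` needs `test:unit` and `test:integration`, both of which need `db:ensure`, and `supabase:functions-serve` needs `supabase:start` and `supabase:prepare-edge-fn`. The sketch below resolves a run order for one target with a depth-first traversal. It is a simplified model, not how Nx schedules tasks (Nx supports more `dependsOn` forms and can run independent tasks in parallel); `runOrder` and the trimmed `dependsOn` map are illustrative. It also surfaces that `supabase:reset` depends on `supabase:prepare`, which is not defined among the targets in this file; the sketch treats that as an error, and how Nx itself handles it is not shown here.

```typescript
// Trimmed copy of the same-project "dependsOn" entries from the deleted project.json.
const dependsOn: Record<string, string[]> = {
  'supabase:start': [],
  'supabase:prepare-edge-fn': [],
  'supabase:functions-serve': ['supabase:start', 'supabase:prepare-edge-fn'],
  'supabase:reset': ['supabase:prepare'],
  'db:ensure': [],
  'test:unit': ['db:ensure'],
  'test:integration': ['db:ensure'],
  'test:e2e': ['supabase:prepare-edge-fn'],
  test: ['test:unit', 'test:integration'],
};

// Depth-first post-order: every dependency appears before the target that needs it.
function runOrder(target: string, graph: Record<string, string[]>): string[] {
  const order: string[] = [];
  const done = new Set<string>();
  const inProgress = new Set<string>();

  const visit = (name: string): void => {
    if (done.has(name)) return;
    if (!Object.prototype.hasOwnProperty.call(graph, name)) {
      throw new Error(`unknown target: ${name}`);
    }
    if (inProgress.has(name)) throw new Error(`dependency cycle at: ${name}`);
    inProgress.add(name);
    for (const dep of graph[name]) visit(dep);
    inProgress.delete(name);
    done.add(name);
    order.push(name);
  };

  visit(target);
  return order;
}
```

Shared dependencies such as `db:ensure` are listed only once, which is why the `done` set is checked before anything else.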

package/scripts/concatenate-migrations.sh

DELETED

```diff
@@ -1,22 +0,0 @@
-#!/bin/bash
-
-# Create or clear the target file
-target_file="./tests/db/migrations/edge_worker.sql"
-mkdir -p $(dirname "$target_file")
-echo "-- Combined migrations file" > "$target_file"
-echo "-- Generated on $(date)" >> "$target_file"
-echo "" >> "$target_file"
-
-# Also add core migrations
-for f in $(find ../core/supabase/migrations -name '*.sql' | sort); do
-  echo "-- From file: $(basename $f)" >> "$target_file"
-  cat "$f" >> "$target_file"
-  echo "" >> "$target_file"
-  echo "" >> "$target_file"
-done
-
-# And copy the pgflow_tests
-echo "-- From file: seed.sql" >> "$target_file"
-cat "../core/supabase/seed.sql" >> "$target_file"
-echo "" >> "$target_file"
-echo "" >> "$target_file"
```
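`concatenate-migrations.sh` builds `tests/db/migrations/edge_worker.sql` from a header, every core migration in sorted order, and `../core/supabase/seed.sql`, with two blank lines after each file. A pure-function sketch of that layout, assuming the file contents are already loaded (reading files and producing the `$(date)` string are left to the caller; `combineMigrations` and `SqlFile` are illustrative names). One caveat: the script's `sort` may use locale collation, while this sketch compares by code unit, so ordering can differ for unusual file names.

```typescript
// Pure-function model of concatenate-migrations.sh: no file system access.
interface SqlFile {
  name: string; // basename, as printed by `$(basename $f)`
  contents: string; // copied verbatim, like `cat "$f"`
}

function combineMigrations(migrations: SqlFile[], seedContents: string, generatedOn: string): string {
  // Header lines written before the loop (each `echo` appends a newline).
  let out = '-- Combined migrations file\n';
  out += `-- Generated on ${generatedOn}\n`;
  out += '\n';

  // `find ... | sort`: sorted by name (code-unit order in this sketch).
  const sorted = [...migrations].sort((a, b) => (a.name < b.name ? -1 : a.name > b.name ? 1 : 0));
  for (const f of sorted) {
    out += `-- From file: ${f.name}\n`;
    out += f.contents; // includes the file's own trailing newline, if any
    out += '\n\n'; // the two `echo ""` lines
  }

  // The seed file is always announced as "seed.sql".
  out += '-- From file: seed.sql\n';
  out += seedContents;
  out += '\n\n';
  return out;
}
```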

package/scripts/wait-for-localhost

DELETED

```diff
@@ -1,17 +0,0 @@
-#!/bin/bash
-port_number=$1
-
-# exit if port is not provided
-if [ -z "$port_number" ]; then
-  echo "Port number is not provided"
-  exit 1
-fi
-
-echo "Waiting for localhost:$port_number..."
-
-until nc -z localhost "$port_number" 2>/dev/null; do
-  echo -n "."
-  sleep 0.1
-done
-
-echo -e "\nPort $port_number is available!"
```
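`wait-for-localhost` runs `nc -z localhost <port>` every 0.1 s until the port accepts connections, with no upper bound on how long it waits. The sketch below expresses the same loop in TypeScript and adds a timeout, which the script does not have. The `probe` callback is an assumption standing in for the `nc -z` check, so the loop can be exercised without a network; `waitFor` is an illustrative name.

```typescript
// Polling loop modelled on wait-for-localhost, plus a timeout the script lacks.
// `probe` stands in for `nc -z localhost <port>` and should resolve to true
// once the port accepts connections.
async function waitFor(
  probe: () => Promise<boolean>,
  intervalMs = 100, // matches `sleep 0.1`
  timeoutMs = 30000, // hypothetical limit, not present in the original script
): Promise<number> {
  const deadline = Date.now() + timeoutMs;
  let attempts = 0;
  while (true) {
    attempts++;
    if (await probe()) return attempts; // like leaving the `until` loop
    if (Date.now() >= deadline) {
      throw new Error(`gave up after ${attempts} attempts`);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}
```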

package/sql/990_active_workers.sql

DELETED

```diff
@@ -1,11 +0,0 @@
--- Active workers are workers that have sent a heartbeat in the last 6 seconds
-create or replace view edge_worker.active_workers as
-select
-  worker_id,
-  queue_name,
-  function_name,
-  started_at,
-  stopped_at,
-  last_heartbeat_at
-from edge_worker.workers
-where last_heartbeat_at > now() - make_interval(secs => 6);
```

package/sql/991_inactive_workers.sql

DELETED

```diff
@@ -1,12 +0,0 @@
--- Inactive workers are workers that have not sent
--- a heartbeat in the last 6 seconds
-create or replace view edge_worker.inactive_workers as
-select
-  worker_id,
-  queue_name,
-  function_name,
-  started_at,
-  stopped_at,
-  last_heartbeat_at
-from edge_worker.workers
-where last_heartbeat_at < now() - make_interval(secs => 6);
```
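`active_workers` keeps rows with `last_heartbeat_at > now() - 6 s` and `inactive_workers` keeps rows with `last_heartbeat_at < now() - 6 s`. Because each view uses a strict comparison, a heartbeat exactly on the cutoff appears in neither view, and so would a NULL `last_heartbeat_at` if the column allows it (the `edge_worker.workers` definition is not part of this diff). The sketch models the two predicates with millisecond timestamps; `classify` and `WorkerStatus` are illustrative names.

```typescript
// Models the WHERE clauses of edge_worker.active_workers and
// edge_worker.inactive_workers. Timestamps are milliseconds since the epoch.
type WorkerStatus = 'active' | 'inactive' | 'neither';

const HEARTBEAT_WINDOW_MS = 6000; // make_interval(secs => 6)

function classify(lastHeartbeatAt: number | null, now: number): WorkerStatus {
  // In SQL, comparisons with NULL are never true, so NULL matches neither view.
  if (lastHeartbeatAt === null) return 'neither';
  const cutoff = now - HEARTBEAT_WINDOW_MS;
  if (lastHeartbeatAt > cutoff) return 'active'; // active_workers uses `>`
  if (lastHeartbeatAt < cutoff) return 'inactive'; // inactive_workers uses `<`
  return 'neither'; // exactly on the cutoff
}
```

Since `edge_worker.spawn()` in `992_spawn_worker.sql` counts only `active_workers`, a worker in the `'neither'` state would not prevent a new one from being spawned.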

package/sql/992_spawn_worker.sql

DELETED

```diff
@@ -1,68 +0,0 @@
-create extension if not exists pg_net;
-
--- Calls edge function asynchronously, requires Vault secrets to be set:
--- - supabase_anon_key
--- - app_url
-create or replace function edge_worker.call_edgefn_async(
-  function_name text,
-  body text
-)
-returns bigint
-language plpgsql
-volatile
-set search_path to edge_worker
-as $$
-declare
-  request_id bigint;
-begin
-  IF function_name IS NULL OR function_name = '' THEN
-    raise exception 'function_name cannot be null or empty';
-  END IF;
-
-  WITH secret as (
-    select decrypted_secret AS supabase_anon_key
-    from vault.decrypted_secrets
-    where name = 'supabase_anon_key'
-  ),
-  settings AS (
-    select decrypted_secret AS app_url
-    from vault.decrypted_secrets
-    where name = 'app_url'
-  )
-  select net.http_post(
-    url => (select app_url from settings) || '/functions/v1/' || function_name,
-    body => jsonb_build_object('body', body),
-    headers := jsonb_build_object(
-      'Authorization', 'Bearer ' || (select supabase_anon_key from secret)
-    )
-  ) into request_id;
-
-  return request_id;
-end;
-$$;
-
--- Spawn a new worker asynchronously via edge function
---
--- It is intended to be used in a cron job that ensures continuos operation
-create or replace function edge_worker.spawn(
-  function_name text
-) returns integer as $$
-declare
-  p_function_name text := function_name;
-  v_active_count integer;
-begin
-  SELECT COUNT(*)
-  INTO v_active_count
-  FROM edge_worker.active_workers AS aw
-  WHERE aw.function_name = p_function_name;
-
-  IF v_active_count < 1 THEN
-    raise notice 'Spawning new worker: %', p_function_name;
-    PERFORM edge_worker.call_edgefn_async(p_function_name, '');
-    return 1;
-  ELSE
-    raise notice 'Worker Exists for queue: NOT spawning new worker for queue: %', p_function_name;
-    return 0;
-  END IF;
-end;
-$$ language plpgsql;
```
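`edge_worker.spawn()` calls `call_edgefn_async()` only when `active_workers` has no row for the function, and `call_edgefn_async()` uses pg_net's `net.http_post` to POST to `<app_url>/functions/v1/<function_name>` with the Vault anon key as a bearer token. The sketch below reproduces that decision and the request shape without sending anything; `planSpawn`, `SpawnRequest`, and passing the secrets as arguments are assumptions for illustration. In the SQL, a missing Vault secret makes the `||` concatenation NULL, a case the sketch does not model.

```typescript
// Models edge_worker.spawn() + edge_worker.call_edgefn_async() as a pure function.
interface ActiveWorker {
  function_name: string;
}

interface SpawnRequest {
  url: string;
  body: { body: string }; // jsonb_build_object('body', body)
  headers: { Authorization: string };
}

function planSpawn(
  functionName: string,
  activeWorkers: ActiveWorker[], // rows of edge_worker.active_workers
  appUrl: string, // Vault secret 'app_url'
  anonKey: string, // Vault secret 'supabase_anon_key'
): SpawnRequest | null {
  // spawn(): spawn only when no active worker exists for this function.
  const activeCount = activeWorkers.filter((w) => w.function_name === functionName).length;
  if (activeCount >= 1) return null; // "NOT spawning new worker"

  // call_edgefn_async(): reject an empty function name.
  if (!functionName) throw new Error('function_name cannot be null or empty');

  return {
    url: `${appUrl}/functions/v1/${functionName}`,
    body: { body: '' }, // spawn() always passes an empty body
    headers: { Authorization: `Bearer ${anonKey}` },
  };
}
```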

package/sql/benchmarks/max_concurrency.sql

DELETED

```diff
@@ -1,32 +0,0 @@
--- select * from pgmq.create('max_concurrency');
--- select * from pgmq.drop_queue('max_concurrency');
-WITH
-params AS (
-  SELECT
-    2000000 as msg_count,
-    1000 as batch_size
-),
-batch_nums AS (
-  SELECT generate_series(0, msg_count/batch_size - 1) as batch_num
-  FROM params
-),
-batch_ranges AS (
-  SELECT
-    batch_num,
-    batch_num * batch_size + 1 as start_id,
-    (batch_num + 1) * batch_size as end_id
-  FROM batch_nums
-  CROSS JOIN params
-),
-batches AS (
-  SELECT
-    batch_num,
-    array_agg(jsonb_build_object('id', i)) as msg_array
-  FROM batch_ranges,
-  generate_series(start_id, end_id) i
-  GROUP BY batch_num
-)
-SELECT pgmq.send_batch('max_concurrency', msg_array)
-FROM batches
-ORDER BY batch_num;
-
```
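The benchmark query splits `msg_count` ids into `msg_count / batch_size` batches and enqueues each one with `pgmq.send_batch`. This sketch reproduces the range arithmetic; `batchRanges` and `BatchRange` are illustrative names. Because Postgres integer division truncates, a `msg_count` that is not a multiple of `batch_size` silently loses the remainder: with 2500 messages and batches of 1000, only 2000 ids are generated.

```typescript
// Reproduces batch_nums / batch_ranges from the benchmark query.
interface BatchRange {
  batchNum: number;
  startId: number; // batch_num * batch_size + 1
  endId: number; // (batch_num + 1) * batch_size
}

function batchRanges(msgCount: number, batchSize: number): BatchRange[] {
  // msg_count/batch_size on integers truncates in Postgres.
  const batchCount = Math.trunc(msgCount / batchSize);
  const ranges: BatchRange[] = [];
  // generate_series(0, batchCount - 1) is empty when batchCount is 0.
  for (let batchNum = 0; batchNum < batchCount; batchNum++) {
    ranges.push({
      batchNum,
      startId: batchNum * batchSize + 1,
      endId: (batchNum + 1) * batchSize,
    });
  }
  return ranges;
}
```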

package/sql/queries/debug_connections.sql

File without changes

package/sql/queries/debug_processing_gaps.sql

DELETED

```diff
@@ -1,115 +0,0 @@
--- select count(*) from pgmq.a_max_concurrency;
--- select read_ct, count(*) from pgmq.q_max_concurrency group by read_ct;
-select read_ct - 1 as retries_count, count(*)
-from pgmq.a_max_concurrency group by read_ct order by read_ct;
-
-select * from pgmq.metrics('max_concurrency');
-
-select * from pgmq.a_max_concurrency limit 10;
-select EXTRACT(EPOCH FROM (max(archived_at) - min(enqueued_at))) as total_seconds from pgmq.a_max_concurrency;
-
--- Processing time ranges per read_ct
-SELECT
-  read_ct - 1 as retry_count,
-  COUNT(*) as messages,
-  round(avg(EXTRACT(EPOCH FROM (vt - make_interval(secs => 3) - enqueued_at))), 2) as avg_s,
-  round(min(EXTRACT(EPOCH FROM (vt - make_interval(secs => 3) - enqueued_at))), 2) as min_s,
-  round(max(EXTRACT(EPOCH FROM (vt - make_interval(secs => 3) - enqueued_at))), 2) as max_s
-FROM pgmq.a_max_concurrency
-GROUP BY read_ct
-ORDER BY read_ct;
-
--- Processing time percentiles
-WITH processing_times AS (
-  SELECT archived_at - (vt - make_interval(secs=>3)) as processing_time
-  FROM pgmq.a_max_concurrency
-)
-SELECT
-  ROUND(EXTRACT(epoch FROM percentile_cont(0.50) WITHIN GROUP (ORDER BY processing_time)) * 1000) as p50_ms,
-  ROUND(EXTRACT(epoch FROM percentile_cont(0.75) WITHIN GROUP (ORDER BY processing_time)) * 1000) as p75_ms,
-  ROUND(EXTRACT(epoch FROM percentile_cont(0.90) WITHIN GROUP (ORDER BY processing_time)) * 1000) as p90_ms,
-  ROUND(EXTRACT(epoch FROM percentile_cont(0.95) WITHIN GROUP (ORDER BY processing_time)) * 1000) as p95_ms,
-  ROUND(EXTRACT(epoch FROM percentile_cont(0.99) WITHIN GROUP (ORDER BY processing_time)) * 1000) as p99_ms,
-  ROUND(EXTRACT(epoch FROM MIN(processing_time)) * 1000) as min_ms,
-  ROUND(EXTRACT(epoch FROM MAX(processing_time)) * 1000) as max_ms
-FROM processing_times;
-
--- Total processing time for messages with read_ct 1 or 2
-SELECT
-  round(sum(EXTRACT(EPOCH FROM (archived_at - enqueued_at))), 2) as total_processing_seconds
-FROM pgmq.a_max_concurrency
-WHERE read_ct IN (1, 2);
-
--- Distribution of processing times in configurable intervals
-WITH
-interval_conf AS (
-  SELECT 1 as interval_seconds
-),
-processing_times AS (
-  SELECT
-    EXTRACT(EPOCH FROM (archived_at - enqueued_at)) as seconds
-  FROM pgmq.a_max_concurrency
-)
-SELECT
-  ((floor(seconds / interval_seconds) * interval_seconds) || '-' ||
-  (floor(seconds / interval_seconds) * interval_seconds + interval_seconds) || 's')::text as time_bucket,
-  COUNT(*) as message_count,
-  round((COUNT(*)::numeric / interval_seconds), 1) as messages_per_second,
-  SUM(COUNT(*)) OVER (ORDER BY floor(seconds / interval_seconds)) as total_processed_so_far
-FROM processing_times, interval_conf
-GROUP BY floor(seconds / interval_seconds), interval_seconds
-ORDER BY floor(seconds / interval_seconds);
-
-
--- First let's check the raw distribution
-WITH processing_times AS (
-  SELECT
-    EXTRACT(EPOCH FROM (archived_at - enqueued_at)) as seconds
-  FROM pgmq.a_max_concurrency
-)
-SELECT
-  floor(seconds) as seconds,
-  COUNT(*) as message_count
-FROM processing_times
-WHERE seconds BETWEEN 165 AND 381
-GROUP BY floor(seconds)
-ORDER BY floor(seconds);
-
-
--- Examine messages around the gap
-WITH processing_times AS (
-  SELECT
-    msg_id,
-    enqueued_at,
-    archived_at,
-    EXTRACT(EPOCH FROM (archived_at - enqueued_at)) as processing_time,
-    read_ct
-  FROM pgmq.a_max_concurrency
-)
-SELECT
-  msg_id,
-  enqueued_at,
-  archived_at,
-  round(processing_time::numeric, 2) as processing_seconds,
-  read_ct
-FROM processing_times
-WHERE
-  processing_time BETWEEN 164 AND 380
-ORDER BY processing_time;
-
--- Show processing time distribution by retry count
-WITH processing_times AS (
-  SELECT
-    EXTRACT(EPOCH FROM (archived_at - enqueued_at)) as processing_time,
-    read_ct,
-    width_bucket(EXTRACT(EPOCH FROM (archived_at - enqueued_at)), 0, 400, 20) as time_bucket
-  FROM pgmq.a_max_concurrency
-)
-SELECT
-  ((time_bucket - 1) * 20) || '-' || (time_bucket * 20) || 's' as time_range,
-  read_ct,
-  COUNT(*) as message_count
-FROM processing_times
-GROUP BY time_bucket, read_ct
-ORDER BY time_bucket, read_ct;
-
```
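The last query in `debug_processing_gaps.sql` groups archived messages with `width_bucket(..., 0, 400, 20)` and labels bucket `b` as `(b - 1) * 20` to `b * 20` seconds. The sketch mirrors PostgreSQL's `width_bucket` for this ascending case, including the underflow bucket 0 and the overflow bucket `count + 1`, together with the label expression; `widthBucket` and `timeRangeLabel` are illustrative names. It shows that overflow bucket 21, which collects every message that took 400 s or more, is labelled `400-420s`. Separately, several queries estimate read time as `vt - 3 s`, which appears to assume the default `visibilityTimeout` of 3.

```typescript
// PostgreSQL width_bucket(operand, low, high, count) for low < high.
function widthBucket(operand: number, low: number, high: number, count: number): number {
  if (operand < low) return 0; // underflow bucket
  if (operand >= high) return count + 1; // overflow bucket
  return Math.floor((count * (operand - low)) / (high - low)) + 1;
}

// ((time_bucket - 1) * 20) || '-' || (time_bucket * 20) || 's'
function timeRangeLabel(bucket: number): string {
  return `${(bucket - 1) * 20}-${bucket * 20}s`;
}
```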

package/src/EdgeWorker.ts

DELETED

````diff
@@ -1,172 +0,0 @@
-import type { Worker } from './core/Worker.ts';
-import spawnNewEdgeFunction from './spawnNewEdgeFunction.ts';
-import type { Json } from './core/types.ts';
-import { getLogger, setupLogger } from './core/Logger.ts';
-import {
-  createQueueWorker,
-  type QueueWorkerConfig,
-} from './queue/createQueueWorker.ts';
-
-/**
- * Configuration options for the EdgeWorker.
- */
-export type EdgeWorkerConfig = QueueWorkerConfig;
-
-/**
- * EdgeWorker is the main entry point for creating and starting edge workers.
- *
- * It provides a simple interface for starting a worker that processes messages from a queue.
- *
- * @example
- * ```typescript
- * import { EdgeWorker } from '@pgflow/edge-worker';
- *
- * EdgeWorker.start(async (message) => {
- *   // Process the message
- *   console.log('Processing message:', message);
- * }, {
- *   queueName: 'my-queue',
- *   maxConcurrent: 5,
- *   retryLimit: 3
- * });
- * ```
- */
-export class EdgeWorker {
-  private static logger = getLogger('EdgeWorker');
-  private static wasCalled = false;
-
-  /**
-   * Start the EdgeWorker with the given message handler and configuration.
-   *
-   * @param handler - Function that processes each message from the queue
-   * @param config - Configuration options for the worker
-   *
-   * @example
-   * ```typescript
-   * EdgeWorker.start(handler, {
-   *   // name of the queue to poll for messages
-   *   queueName: 'tasks',
-   *
-   *   // how many tasks are processed at the same time
-   *   maxConcurrent: 10,
-   *
-   *   // how many connections to the database are opened
-   *   maxPgConnections: 4,
-   *
-   *   // in-worker polling interval
-   *   maxPollSeconds: 5,
-   *
-   *   // in-database polling interval
-   *   pollIntervalMs: 200,
-   *
-   *   // how long to wait before retrying a failed job
-   *   retryDelay: 5,
-   *
-   *   // how many times to retry a failed job
-   *   retryLimit: 5,
-   *
-   *   // how long a job is invisible after reading
-   *   // if not successful, will reappear after this time
-   *   visibilityTimeout: 3,
-   * });
-   * ```
-   */
-  static start<TPayload extends Json = Json>(
-    handler: (message: TPayload) => Promise<void> | void,
-    config: EdgeWorkerConfig = {}
-  ) {
-    this.ensureFirstCall();
-
-    // Get connection string from config or environment
-    const connectionString =
-      config.connectionString || this.getConnectionString();
-
-    // Create a complete configuration object with defaults
-    const completeConfig: EdgeWorkerConfig = {
-      // Pass through any config options first
-      ...config,
-
-      // Then override with defaults for missing values
-      queueName: config.queueName || 'tasks',
-      maxConcurrent: config.maxConcurrent ?? 10,
-      maxPgConnections: config.maxPgConnections ?? 4,
-      maxPollSeconds: config.maxPollSeconds ?? 5,
-      pollIntervalMs: config.pollIntervalMs ?? 200,
-      retryDelay: config.retryDelay ?? 5,
-      retryLimit: config.retryLimit ?? 5,
-      visibilityTimeout: config.visibilityTimeout ?? 3,
-
-      // Ensure connectionString is always set
-      connectionString,
-    };
-
-    this.setupRequestHandler(handler, completeConfig);
-  }
-
-  private static ensureFirstCall() {
-    if (this.wasCalled) {
-      throw new Error('EdgeWorker.start() can only be called once');
-    }
-    this.wasCalled = true;
-  }
-
-  private static getConnectionString(): string {
-    // @ts-ignore - TODO: fix the types
-    const connectionString = Deno.env.get('EDGE_WORKER_DB_URL');
-    if (!connectionString) {
-      const message =
-        'EDGE_WORKER_DB_URL is not set!\n' +
-        'See https://pgflow.pages.dev/edge-worker/prepare-environment/#prepare-connection-string';
-      throw new Error(message);
-    }
-    return connectionString;
-  }
-
-  private static setupShutdownHandler(worker: Worker) {
-    globalThis.onbeforeunload = async () => {
-      if (worker.edgeFunctionName) {
-        await spawnNewEdgeFunction(worker.edgeFunctionName);
-      }
-
-      worker.stop();
-    };
-
-    // use waitUntil to prevent the function from exiting
-    // @ts-ignore: TODO: fix the types
-    EdgeRuntime.waitUntil(new Promise(() => {}));
-  }
-
-  private static setupRequestHandler<TPayload extends Json>(
-    handler: (message: TPayload) => Promise<void> | void,
-    workerConfig: EdgeWorkerConfig
-  ) {
-    let worker: Worker | null = null;
-
-    Deno.serve({}, (req) => {
-      if (!worker) {
-        const edgeFunctionName = this.extractFunctionName(req);
-        const sbExecutionId = Deno.env.get('SB_EXECUTION_ID')!;
-        setupLogger(sbExecutionId);
-
-        this.logger.info(`HTTP Request: ${edgeFunctionName}`);
-        // Create the worker with all configuration options
-
-        worker = createQueueWorker(handler, workerConfig);
-        worker.startOnlyOnce({
-          edgeFunctionName,
-          workerId: sbExecutionId,
-        });
-
-        this.setupShutdownHandler(worker);
-      }
-
-      return new Response('ok', {
-        headers: { 'Content-Type': 'application/json' },
-      });
-    });
-  }
-
-  private static extractFunctionName(req: Request): string {
-    return new URL(req.url).pathname.replace(/^\/+|\/+$/g, '');
-  }
-}
````
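`EdgeWorker.start()` merges the caller's config with defaults using two different operators: `||` for `queueName` and `connectionString`, and `??` for the numeric options. The distinction matters: an explicit `maxConcurrent: 0` survives, while an empty `queueName` is replaced by `'tasks'`. The sketch reproduces that merge and the `extractFunctionName()` expression as standalone functions. `WorkerOptions` is an assumed stand-in for `QueueWorkerConfig`, whose definition is not shown in this diff, and `getEnvConnectionString` stands in for the lazy `EDGE_WORKER_DB_URL` lookup.

```typescript
// Assumed stand-in for QueueWorkerConfig (not shown in this diff).
interface WorkerOptions {
  queueName?: string;
  maxConcurrent?: number;
  maxPgConnections?: number;
  maxPollSeconds?: number;
  pollIntervalMs?: number;
  retryDelay?: number;
  retryLimit?: number;
  visibilityTimeout?: number;
  connectionString?: string;
}

function withDefaults(
  config: WorkerOptions,
  getEnvConnectionString: () => string, // only called when config has no connection string
): Required<WorkerOptions> {
  return {
    ...config,
    // `||` replaces any falsy value, so '' becomes 'tasks'
    queueName: config.queueName || 'tasks',
    // `??` replaces only null/undefined, so an explicit 0 is kept
    maxConcurrent: config.maxConcurrent ?? 10,
    maxPgConnections: config.maxPgConnections ?? 4,
    maxPollSeconds: config.maxPollSeconds ?? 5,
    pollIntervalMs: config.pollIntervalMs ?? 200,
    retryDelay: config.retryDelay ?? 5,
    retryLimit: config.retryLimit ?? 5,
    visibilityTimeout: config.visibilityTimeout ?? 3,
    connectionString: config.connectionString || getEnvConnectionString(),
  };
}

// Same expression as extractFunctionName(): strip leading and trailing slashes only.
function extractFunctionName(url: string): string {
  return new URL(url).pathname.replace(/^\/+|\/+$/g, '');
}
```

Only the outer slashes are removed, so a nested path such as `/a/b/` would yield `a/b`.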