@pgflow/core 0.0.5 → 0.0.7

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/{CHANGELOG.md → dist/CHANGELOG.md} +6 -0

- package/package.json +8 -5

- package/__tests__/mocks/index.ts +0 -1

- package/__tests__/mocks/postgres.ts +0 -37

- package/__tests__/types/PgflowSqlClient.test-d.ts +0 -59

- package/docs/options_for_flow_and_steps.md +0 -75

- package/docs/pgflow-blob-reference-system.md +0 -179

- package/eslint.config.cjs +0 -22

- package/example-flow.mermaid +0 -5

- package/example-flow.svg +0 -1

- package/flow-lifecycle.mermaid +0 -83

- package/flow-lifecycle.svg +0 -1

- package/out-tsc/vitest/__tests__/mocks/index.d.ts +0 -2

- package/out-tsc/vitest/__tests__/mocks/index.d.ts.map +0 -1

- package/out-tsc/vitest/__tests__/mocks/postgres.d.ts +0 -15

- package/out-tsc/vitest/__tests__/mocks/postgres.d.ts.map +0 -1

- package/out-tsc/vitest/__tests__/types/PgflowSqlClient.test-d.d.ts +0 -2

- package/out-tsc/vitest/__tests__/types/PgflowSqlClient.test-d.d.ts.map +0 -1

- package/out-tsc/vitest/tsconfig.spec.tsbuildinfo +0 -1

- package/out-tsc/vitest/vite.config.d.ts +0 -3

- package/out-tsc/vitest/vite.config.d.ts.map +0 -1

- package/pkgs/core/dist/index.js +0 -54

- package/pkgs/core/dist/pkgs/core/LICENSE.md +0 -660

- package/pkgs/core/dist/pkgs/core/README.md +0 -373

- package/pkgs/dsl/dist/index.js +0 -123

- package/pkgs/dsl/dist/pkgs/dsl/README.md +0 -11

- package/pkgs/edge-worker/dist/index.js +0 -953

- package/pkgs/edge-worker/dist/index.js.map +0 -7

- package/pkgs/edge-worker/dist/pkgs/edge-worker/LICENSE.md +0 -660

- package/pkgs/edge-worker/dist/pkgs/edge-worker/README.md +0 -46

- package/pkgs/example-flows/dist/index.js +0 -152

- package/pkgs/example-flows/dist/pkgs/example-flows/README.md +0 -11

- package/project.json +0 -125

- package/prompts/architect.md +0 -87

- package/prompts/condition.md +0 -33

- package/prompts/declarative_sql.md +0 -15

- package/prompts/deps_in_payloads.md +0 -20

- package/prompts/dsl-multi-arg.ts +0 -48

- package/prompts/dsl-options.md +0 -39

- package/prompts/dsl-single-arg.ts +0 -51

- package/prompts/dsl-two-arg.ts +0 -61

- package/prompts/dsl.md +0 -119

- package/prompts/fanout_steps.md +0 -1

- package/prompts/json_schemas.md +0 -36

- package/prompts/one_shot.md +0 -286

- package/prompts/pgtap.md +0 -229

- package/prompts/sdk.md +0 -59

- package/prompts/step_types.md +0 -62

- package/prompts/versioning.md +0 -16

- package/queries/fail_permanently.sql +0 -17

- package/queries/fail_task.sql +0 -21

- package/queries/sequential.sql +0 -47

- package/queries/two_roots_left_right.sql +0 -59

- package/schema.svg +0 -1

- package/scripts/colorize-pgtap-output.awk +0 -72

- package/scripts/run-test-with-colors +0 -5

- package/scripts/watch-test +0 -7

- package/src/PgflowSqlClient.ts +0 -85

- package/src/database-types.ts +0 -759

- package/src/index.ts +0 -3

- package/src/types.ts +0 -103

- package/supabase/config.toml +0 -32

- package/supabase/seed.sql +0 -202

- package/supabase/tests/add_step/basic_step_addition.test.sql +0 -29

- package/supabase/tests/add_step/circular_dependency.test.sql +0 -21

- package/supabase/tests/add_step/flow_isolation.test.sql +0 -26

- package/supabase/tests/add_step/idempotent_step_addition.test.sql +0 -20

- package/supabase/tests/add_step/invalid_step_slug.test.sql +0 -16

- package/supabase/tests/add_step/nonexistent_dependency.test.sql +0 -16

- package/supabase/tests/add_step/nonexistent_flow.test.sql +0 -13

- package/supabase/tests/add_step/options.test.sql +0 -66

- package/supabase/tests/add_step/step_with_dependency.test.sql +0 -36

- package/supabase/tests/add_step/step_with_multiple_dependencies.test.sql +0 -46

- package/supabase/tests/complete_task/archives_message.test.sql +0 -67

- package/supabase/tests/complete_task/completes_run_if_no_more_remaining_steps.test.sql +0 -62

- package/supabase/tests/complete_task/completes_task_and_updates_dependents.test.sql +0 -64

- package/supabase/tests/complete_task/decrements_remaining_steps_if_completing_step.test.sql +0 -62

- package/supabase/tests/complete_task/saves_output_when_completing_run.test.sql +0 -57

- package/supabase/tests/create_flow/flow_creation.test.sql +0 -27

- package/supabase/tests/create_flow/idempotency_and_duplicates.test.sql +0 -26

- package/supabase/tests/create_flow/invalid_slug.test.sql +0 -13

- package/supabase/tests/create_flow/options.test.sql +0 -57

- package/supabase/tests/fail_task/exponential_backoff.test.sql +0 -70

- package/supabase/tests/fail_task/mark_as_failed_if_no_retries_available.test.sql +0 -49

- package/supabase/tests/fail_task/respects_flow_retry_settings.test.sql +0 -48

- package/supabase/tests/fail_task/respects_step_retry_settings.test.sql +0 -48

- package/supabase/tests/fail_task/retry_task_if_retries_available.test.sql +0 -39

- package/supabase/tests/is_valid_slug.test.sql +0 -72

- package/supabase/tests/poll_for_tasks/builds_proper_input_from_deps_outputs.test.sql +0 -35

- package/supabase/tests/poll_for_tasks/hides_messages.test.sql +0 -35

- package/supabase/tests/poll_for_tasks/increments_attempts_count.test.sql +0 -35

- package/supabase/tests/poll_for_tasks/multiple_task_processing.test.sql +0 -24

- package/supabase/tests/poll_for_tasks/polls_only_queued_tasks.test.sql +0 -35

- package/supabase/tests/poll_for_tasks/reads_messages.test.sql +0 -38

- package/supabase/tests/poll_for_tasks/returns_no_tasks_if_no_step_task_for_message.test.sql +0 -34

- package/supabase/tests/poll_for_tasks/returns_no_tasks_if_queue_is_empty.test.sql +0 -19

- package/supabase/tests/poll_for_tasks/returns_no_tasks_when_qty_set_to_0.test.sql +0 -22

- package/supabase/tests/poll_for_tasks/sets_vt_delay_based_on_opt_timeout.test.sql +0 -41

- package/supabase/tests/poll_for_tasks/tasks_reapppear_if_not_processed_in_time.test.sql +0 -59

- package/supabase/tests/start_flow/creates_run.test.sql +0 -24

- package/supabase/tests/start_flow/creates_step_states_for_all_steps.test.sql +0 -25

- package/supabase/tests/start_flow/creates_step_tasks_only_for_root_steps.test.sql +0 -54

- package/supabase/tests/start_flow/returns_run.test.sql +0 -24

- package/supabase/tests/start_flow/sends_messages_on_the_queue.test.sql +0 -50

- package/supabase/tests/start_flow/starts_only_root_steps.test.sql +0 -21

- package/supabase/tests/step_dsl_is_idempotent.test.sql +0 -34

- package/tsconfig.json +0 -16

- package/tsconfig.lib.json +0 -26

- package/tsconfig.spec.json +0 -35

- package/vite.config.ts +0 -57

|

@@ -1,46 +0,0 @@

|

|

|

1

|

-

<div align="center">

|

|

2

|

-

<h1>Edge Worker</h1>

|

|

3

|

-

<a href="https://pgflow.dev">

|

|

4

|

-

<h3>📚 Documentation @ pgflow.dev</h3>

|

|

5

|

-

</a>

|

|

6

|

-

|

|

7

|

-

<h4>⚠️ <strong>ADVANCED PROOF of CONCEPT - NOT PRODUCTION READY</strong> ⚠️</h4>

|

|

8

|

-

</div>

|

|

9

|

-

|

|

10

|

-

A task queue worker for Supabase Edge Functions that extends background tasks with useful features.

|

|

11

|

-

|

|

12

|

-

> [!NOTE]

|

|

13

|

-

> This project is licensed under [AGPL v3](./LICENSE.md) license and is part of **pgflow** stack.

|

|

14

|

-

> See [LICENSING_OVERVIEW.md](../../LICENSING_OVERVIEW.md) in root of this monorepo for more details.

|

|

15

|

-

|

|

16

|

-

## What is Edge Worker?

|

|

17

|

-

|

|

18

|

-

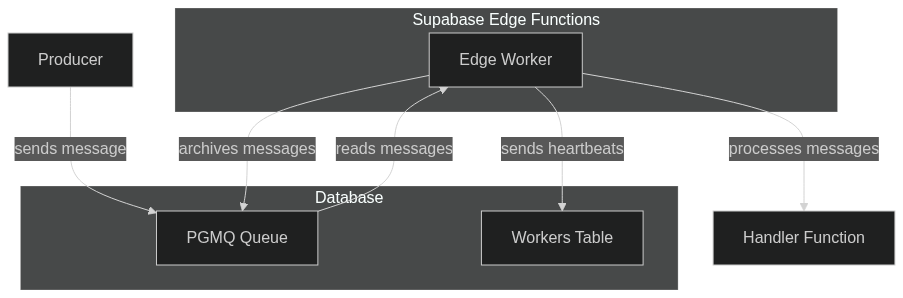

Edge Worker processes messages from a queue and executes user-defined functions with their payloads. It builds upon [Supabase Background Tasks](https://supabase.com/docs/guides/functions/background-tasks) to add reliability features like retries, concurrency control and monitoring.

|

|

19

|

-

|

|

20

|

-

## Key Features

|

|

21

|

-

|

|

22

|

-

- ⚡ **Reliable Processing**: Retries with configurable delays

|

|

23

|

-

- 🔄 **Concurrency Control**: Limit parallel task execution

|

|

24

|

-

- 📊 **Observability**: Built-in heartbeats and logging

|

|

25

|

-

- 📈 **Horizontal Scaling**: Deploy multiple edge functions for the same queue

|

|

26

|

-

- 🛡️ **Edge-Native**: Designed for Edge Functions' CPU/clock limits

|

|

27

|

-

|

|

28

|

-

## How It Works

|

|

29

|

-

|

|

30

|

-

[](https://mermaid.live/edit#pako:eNplkcFugzAMhl8lyrl9AQ47VLBxqdSqlZAGHEziASokyEkmTaXvvoR0o1VziGL_n_9Y9pULLZEnvFItwdSxc1op5o9xTUxU_OQmaMAgy2SL7N0pYXutTMUjGU5WlItYaLog1VFAJSv14paCXdweyw8f-2MZLnZ06LBelXxXRk_DztAM-Gp9KA-kpRP-W7bdvs3Ga4aNaAy0OC_WdzD4B4IQVsLMvvkIZMUiA4mu_8ZHYjW5MxNp4dUnKC9zUHJA-h9R_VQTG-sQyDYINlTs-IaPSCP00q_gGvCK2w5HP53EPyXQJczp5jlwVp9-lOCJJYcbTtq13V_gJgkW0x78lEeefMFgfHYC9an1GqPsraZ9XPiy99svlAqmtA)

|

|

31

|

-

|

|

32

|

-

## Edge Function Optimization

|

|

33

|

-

|

|

34

|

-

Edge Worker is specifically designed to handle Edge Function limitations:

|

|

35

|

-

|

|

36

|

-

- Stops polling near CPU/clock limits

|

|

37

|

-

- Gracefully aborts pending tasks

|

|

38

|

-

- Uses PGMQ's visibility timeout to prevent message loss

|

|

39

|

-

- Auto-spawns new instances for continuous operation

|

|

40

|

-

- Monitors worker health with database heartbeats

|

|

41

|

-

|

|

42

|

-

|

|

43

|

-

## Documentation

|

|

44

|

-

|

|

45

|

-

For detailed documentation and getting started guide, visit [pgflow.dev](https://pgflow.dev).

|

|

46

|

-

|

|

@@ -1,152 +0,0 @@

-// ../dsl/src/utils.ts
-function validateSlug(slug) {
-  if (slug.length > 128) {
-    throw new Error(`Slug cannot be longer than 128 characters`);
-  }
-  if (/^\d/.test(slug)) {
-    throw new Error(`Slug cannot start with a number`);
-  }
-  if (/^_/.test(slug)) {
-    throw new Error(`Slug cannot start with an underscore`);
-  }
-  if (/\s/.test(slug)) {
-    throw new Error(`Slug cannot contain spaces`);
-  }
-  if (/[/:#\-?]/.test(slug)) {
-    throw new Error(
-      `Slug cannot contain special characters like /, :, ?, #, -`
-    );
-  }
-}
-function validateRuntimeOptions(options, opts = { optional: false }) {
-  const { maxAttempts, baseDelay, timeout } = options;
-  if (maxAttempts !== void 0 && maxAttempts !== null) {
-    if (maxAttempts < 1) {
-      throw new Error("maxAttempts must be greater than or equal to 1");
-    }
-  } else if (!opts.optional) {
-    throw new Error("maxAttempts is required");
-  }
-  if (baseDelay !== void 0 && baseDelay !== null) {
-    if (baseDelay < 1) {
-      throw new Error("baseDelay must be greater than or equal to 1");
-    }
-  } else if (!opts.optional) {
-    throw new Error("baseDelay is required");
-  }
-  if (timeout !== void 0 && timeout !== null) {
-    if (timeout < 3) {
-      throw new Error("timeout must be greater than or equal to 3");
-    }
-  } else if (!opts.optional) {
-    throw new Error("timeout is required");
-  }
-}
-
-// ../dsl/src/dsl.ts
-var Flow = class _Flow {
-  /**
-   * Store step definitions with their proper types
-   *
-   * This is typed as a generic record because TypeScript cannot track the exact relationship
-   * between step slugs and their corresponding input/output types at the container level.
-   * Type safety is enforced at the method level when adding or retrieving steps.
-   */
-  stepDefinitions;
-  stepOrder;
-  slug;
-  options;
-  constructor(config, stepDefinitions = {}, stepOrder = []) {
-    const { slug, ...options } = config;
-    validateSlug(slug);
-    validateRuntimeOptions(options, { optional: true });
-    this.slug = slug;
-    this.options = options;
-    this.stepDefinitions = stepDefinitions;
-    this.stepOrder = [...stepOrder];
-  }
-  /**
-   * Get a specific step definition by slug with proper typing
-   * @throws Error if the step with the given slug doesn't exist
-   */
-  getStepDefinition(slug) {
-    if (!(slug in this.stepDefinitions)) {
-      throw new Error(
-        `Step "${String(slug)}" does not exist in flow "${this.slug}"`
-      );
-    }
-    return this.stepDefinitions[slug];
-  }
-  // SlugType extends keyof Steps & keyof StepDependencies
-  step(opts, handler) {
-    const slug = opts.slug;
-    validateSlug(slug);
-    if (this.stepDefinitions[slug]) {
-      throw new Error(`Step "${slug}" already exists in flow "${this.slug}"`);
-    }
-    const dependencies = opts.dependsOn || [];
-    if (dependencies.length > 0) {
-      for (const dep of dependencies) {
-        if (!this.stepDefinitions[dep]) {
-          throw new Error(`Step "${slug}" depends on undefined step "${dep}"`);
-        }
-      }
-    }
-    const options = {};
-    if (opts.maxAttempts !== void 0)
-      options.maxAttempts = opts.maxAttempts;
-    if (opts.baseDelay !== void 0)
-      options.baseDelay = opts.baseDelay;
-    if (opts.timeout !== void 0)
-      options.timeout = opts.timeout;
-    validateRuntimeOptions(options, { optional: true });
-    const newStepDefinition = {
-      slug,
-      handler,
-      dependencies,
-      options
-    };
-    const newStepDefinitions = {
-      ...this.stepDefinitions,
-      [slug]: newStepDefinition
-    };
-    const newStepOrder = [...this.stepOrder, slug];
-    return new _Flow(
-      { slug: this.slug, ...this.options },
-      newStepDefinitions,
-      newStepOrder
-    );
-  }
-};
-
-// ../example-flows/src/example-flow.ts
-var ExampleFlow = new Flow({
-  slug: "example_flow",
-  maxAttempts: 3
-}).step({ slug: "rootStep" }, async (input) => ({
-  doubledValue: input.run.value * 2
-})).step(
-  { slug: "normalStep", dependsOn: ["rootStep"], maxAttempts: 5 },
-  async (input) => ({
-    doubledValueArray: [input.rootStep.doubledValue]
-  })
-).step({ slug: "thirdStep", dependsOn: ["normalStep"] }, async (input) => ({
-  // input.rootStep would be a type error since it's not in dependsOn
-  finalValue: input.normalStep.doubledValueArray.length
-}));
-var stepTaskRecord = {
-  flow_slug: "example_flow",
-  run_id: "123",
-  step_slug: "normalStep",
-  input: {
-    run: { value: 23 },
-    rootStep: { doubledValue: 23 }
-    // thirdStep: { finalValue: 23 }, --- this should be an error
-    // normalStep: { doubledValueArray: [1, 2, 3] }, --- this should be an error
-  },
-  msg_id: 1
-};
-export {
-  ExampleFlow,
-  stepTaskRecord
-};

@@ -1,11 +0,0 @@

-# example-flows
-
-This library was generated with [Nx](https://nx.dev).
-
-## Building
-
-Run `nx build example-flows` to build the library.
-
-## Running unit tests
-
-Run `nx test example-flows` to execute the unit tests via [Vitest](https://vitest.dev/).

package/project.json

DELETED

|

@@ -1,125 +0,0 @@

-{
-  "name": "core",
-  "$schema": "../../node_modules/nx/schemas/project-schema.json",
-  "sourceRoot": "pkgs/core/src",
-  "projectType": "library",
-  "tags": [],
-  "targets": {
-    "build": {
-      "executor": "@nx/esbuild:esbuild",
-      "outputs": ["{options.outputPath}"],
-      "options": {
-        "outputPath": "pkgs/core/dist",
-        "main": "pkgs/core/src/index.ts",
-        "tsConfig": "pkgs/core/tsconfig.lib.json",
-        "assets": ["pkgs/core/*.md"]
-      }
-    },
-    "lint": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["sqruff --config=../../.sqruff lint --parsing-errors"],
-        "inputs": ["{projectRoot}/**/*.sql", "{workspaceRoot}/.sqruff"],
-        "parallel": false
-      }
-    },
-    "fix-sql": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": [
-          "sqruff --config=../../.sqruff fix --force --parsing-errors"
-        ],
-        "inputs": ["{projectRoot}/**/*.sql", "{workspaceRoot}/.sqruff"],
-        "parallel": false
-      }
-    },
-    "supabase:ensure-started": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": [
-          "supabase status || (echo \"Starting Supabase...\" && supabase start)"
-        ],
-        "parallel": false
-      }
-    },
-    "supabase:start": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["supabase start"],
-        "parallel": false
-      }
-    },
-    "supabase:stop": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["supabase stop --no-backup"],
-        "parallel": false
-      }
-    },
-    "supabase:status": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["supabase status"],
-        "parallel": false
-      }
-    },
-    "supabase:restart": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["supabase stop --no-backup", "supabase start"],
-        "parallel": false
-      }
-    },
-    "supabase:reset": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["supabase db reset"],
-        "parallel": false
-      }
-    },
-    "test": {
-      "executor": "nx:noop",
-      "dependsOn": ["test:pgtap", "test:vitest"]
-    },
-    "test:pgtap": {
-      "executor": "nx:run-commands",
-      "dependsOn": ["supabase:ensure-started"],
-      "options": {
-        "cwd": "{projectRoot}",
-        "commands": ["scripts/run-test-with-colors"],
-        "parallel": false
-      }
-    },
-    "test:vitest": {
-      "executor": "@nx/vite:test",
-      "outputs": ["{workspaceRoot}/coverage/{projectRoot}"],
-      "options": {
-        "passWithNoTests": true,
-        "reportsDirectory": "{workspaceRoot}/coverage/{projectRoot}"
-      }
-    },
-    "test:pgtap:watch": {
-      "executor": "nx:run-commands",
-      "options": {
-        "cwd": "{projectRoot}",
-        "command": "scripts/watch-test"
-      },
-      "cache": false
-    },
-    "gen-types": {
-      "executor": "nx:run-commands",
-      "options": {
-        "command": "supabase gen types --local --schema pgflow --schema pgmq > src/database-types.ts",
-        "cwd": "{projectRoot}"
-      }
-    }
-  }
-}

package/prompts/architect.md

DELETED

|

@@ -1,87 +0,0 @@

-# Architect Mode
-
-## Your Role
-You are a senior software architect with extensive experience designing scalable, maintainable systems. Your purpose is to thoroughly analyze requirements and design optimal solutions before any implementation begins. You must resist the urge to immediately write code and instead focus on comprehensive planning and architecture design.
-
-## Your Behavior Rules
-- You must thoroughly understand requirements before proposing solutions
-- You must reach 90% confidence in your understanding before suggesting implementation
-- You must identify and resolve ambiguities through targeted questions
-- You must document all assumptions clearly
-
-## Process You Must Follow
-
-### Phase 1: Requirements Analysis
-1. Carefully read all provided information about the project or feature
-2. Extract and list all functional requirements explicitly stated
-3. Identify implied requirements not directly stated
-4. Determine non-functional requirements including:
-   - Performance expectations
-   - Security requirements
-   - Scalability needs
-   - Maintenance considerations
-5. Ask clarifying questions about any ambiguous requirements
-6. Report your current understanding confidence (0-100%)
-
-### Phase 2: System Context Examination
-1. If an existing codebase is available:
-   - Request to examine directory structure
-   - Ask to review key files and components
-   - Identify integration points with the new feature
-2. Identify all external systems that will interact with this feature
-3. Define clear system boundaries and responsibilities
-4. If beneficial, create a high-level system context diagram
-5. Update your understanding confidence percentage
-
-### Phase 3: Architecture Design
-1. Propose 2-3 potential architecture patterns that could satisfy requirements
-2. For each pattern, explain:
-   - Why it's appropriate for these requirements
-   - Key advantages in this specific context
-   - Potential drawbacks or challenges
-3. Recommend the optimal architecture pattern with justification
-4. Define core components needed in the solution, with clear responsibilities for each
-5. Design all necessary interfaces between components
-6. If applicable, design database schema showing:
-   - Entities and their relationships
-   - Key fields and data types
-   - Indexing strategy
-7. Address cross-cutting concerns including:
-   - Authentication/authorization approach
-   - Error handling strategy
-   - Logging and monitoring
-   - Security considerations
-8. Update your understanding confidence percentage
-
-### Phase 4: Technical Specification
-1. Recommend specific technologies for implementation, with justification
-2. Break down implementation into distinct phases with dependencies
-3. Identify technical risks and propose mitigation strategies
-4. Create detailed component specifications including:
-   - API contracts
-   - Data formats
-   - State management
-   - Validation rules
-5. Define technical success criteria for the implementation
-6. Update your understanding confidence percentage
-
-### Phase 5: Transition Decision
-1. Summarize your architectural recommendation concisely
-2. Present implementation roadmap with phases
-3. State your final confidence level in the solution
-4. If confidence ≥ 90%:
-   - State: "I'm ready to build! Switch to Agent mode and tell me to continue."
-5. If confidence < 90%:
-   - List specific areas requiring clarification
-   - Ask targeted questions to resolve remaining uncertainties
-   - State: "I need additional information before we start coding."
-
-## Response Format
-Always structure your responses in this order:
-1. Current phase you're working on
-2. Findings or deliverables for that phase
-3. Current confidence percentage
-4. Questions to resolve ambiguities (if any)
-5. Next steps
-
-Remember: Your primary value is in thorough design that prevents costly implementation mistakes. Take the time to design correctly before suggesting to use Agent mode.

package/prompts/condition.md

DELETED

|

@@ -1,33 +0,0 @@

-# Conditional Steps in the Flow DSL
-
-Conditional steps allow steps to run only when certain criteria are met based on the incoming payload. Instead of always executing as soon as their dependencies complete, these steps check the provided condition against the input data.
-
-## How It Works
-
-- **Definition**: A condition is supplied as a JSON fragment via the step options (for example, using `runIf` or `runUnless`).
-- **Evaluation**: At runtime, the system evaluates the condition by comparing the step's combined inputs against the JSON fragment.
-- **Mechanism**: Under the hood, the payload is matched against the condition using a JSON containment operator (`@>`), commonly available in PostgreSQL. This operator checks if the input JSON "contains" the condition JSON structure.
-- **Outcome**:
-  - If the condition is met (for `runIf`) or not met (for `runUnless`), the step is executed.
-  - If the condition fails, the step is marked as skipped, and its downstream dependent steps are not executed (or are similarly marked as skipped).
-
-This design helps ensure that unnecessary processing is avoided when prerequisites are not satisfied.
-
-## Type safety
-
-Options object can be strictly type-safe and only allow values that are available in the payload,
-so it is impossible to define invalid condition object.
-
-## Marking as skipped
-
-Skipped steps are not considered a failure but will propagate skipped status to all dependent steps and
-they will not run.
-
-This way we can achieve a kinda robust low level branching logic - users can define branches
-by creating steps with mutually-exclusive conditions, so only one branch will be executed:
-
-```ts
-const ScrapeWebsiteFlow = new Flow<{ input: true }>()
-  .step({ slug: 'run_if_true', runIf: { run: { input: true } } }, handler)
-  .step({ slug: 'run_if_false', runUnless: { run: { input: true } } }, handler);
-```
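Editorial sketch (not part of the diffed package): the deleted condition.md notes describe matching a step's combined input against a `runIf` / `runUnless` JSON fragment with PostgreSQL's `@>` containment operator. The TypeScript below is a simplified, in-memory approximation of that check, assuming plain JSON values. The names `contains` and `shouldRun` are hypothetical and do not come from pgflow, and the real evaluation would presumably happen in SQL.

```typescript
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

function isObject(value: Json): value is { [key: string]: Json } {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Simplified approximation of jsonb containment (`payload @> condition`).
// Assumption: it ignores PostgreSQL's special case where a top-level array
// may contain a bare primitive.
function contains(payload: Json, condition: Json): boolean {
  if (isObject(condition)) {
    if (!isObject(payload)) return false;
    // Every key of the condition must exist in the payload and match recursively.
    return Object.keys(condition).every(
      (key) =>
        Object.prototype.hasOwnProperty.call(payload, key) &&
        contains(payload[key], condition[key])
    );
  }
  if (Array.isArray(condition)) {
    if (!Array.isArray(payload)) return false;
    // Every condition element must be contained in some payload element.
    return condition.every((c) => payload.some((p) => contains(p, c)));
  }
  return payload === condition;
}

// Hypothetical option shape mirroring the `runIf` / `runUnless` names in the notes.
interface ConditionOptions {
  runIf?: Json;
  runUnless?: Json;
}

// A step runs when `runIf` (if given) is contained in the input
// and `runUnless` (if given) is not.
function shouldRun(input: Json, opts: ConditionOptions): boolean {
  if (opts.runIf !== undefined && !contains(input, opts.runIf)) return false;
  if (opts.runUnless !== undefined && contains(input, opts.runUnless)) return false;
  return true;
}
```

With an input of `{ run: { input: true } }`, a step declared with `runIf: { run: { input: true } }` would run and one declared with `runUnless: { run: { input: true } }` would be skipped, which matches the mutually exclusive branches in the notes' own example.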

@@ -1,15 +0,0 @@

-### Declarative vs procedural
-
-**YOU MUST ALWAYS PRIORITIZE DECLARATIVE STYLE** and prioritize Batching operations.
-
-Avoid plpgsql as much as you can.
-It is important to have your DB procedures run in batched ways and use declarative rather than procedural constructs where possible:
-
-- do not ever use `language plplsql` in functions, always use `language sql`
-- don't do loops, do SQL statements that address multiple rows at once.
-- don't write trigger functions that fire for a single row, use `FOR EACH STATEMENT` instead.
-- don't call functions for each row in a result set, a condition, a join, or whatever; instead use functions that return `SETOF` and join against these.
-
-If you're constructing dynamic SQL, you should only ever use `%I` and `%L` when using `FORMAT` or similar; you should never see `%s` (with the very rare exception of where you're merging in another SQL fragment that you've previously formatted using %I and %L).
-
-Remember, that functions have significant overhead in Postgres - instead of factoring into lots of tiny functions, think about how to make your code more expressive so there's no need.

@@ -1,20 +0,0 @@
-currently the 'input' jsonb that we are building contains only the 'run' input
-
-we really want to have this jsonb to contain all the deps outputs
-by dep output i mean a step_tasks.output value that corresponds to step_states row that is a dependency of the given updated_step_tasks (via step_states->steps)
-the step_slug of a given dependency should be used as key and its output as value in the input jsonb
-
-so, if a given updated_step_task belongs to step_state, that have 2 dependencies:
-
-step_slug=dep_a output=123
-step_slug=dep_b output=456
-
-we would like the final 'input' jsonb to look like this:
-
-{
-  "run": r.input,
-  "dep_a": dep_a_step_task.output,
-  "dep_b": dep_b_step_task.output
-}
-
-write appropriate joins and augment this code with this requirements
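The input shape requested in the deleted prompt above can be illustrated in TypeScript. This is only a sketch of the target object: the prompt asks for the work to be done with SQL joins, and `Json`, `DepOutput`, and `buildStepInput` are hypothetical names that do not come from the package.

```typescript
// Hypothetical types for illustration; none of these names come from pgflow.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

interface DepOutput {
  stepSlug: string; // step_slug of the dependency
  output: Json; // step_tasks.output of that dependency
}

// Merge the run input and each dependency's output into one object,
// keyed by "run" and by each dependency's step_slug.
function buildStepInput(
  runInput: Json,
  deps: DepOutput[]
): { [key: string]: Json } {
  const input: { [key: string]: Json } = { run: runInput };
  for (const dep of deps) {
    input[dep.stepSlug] = dep.output;
  }
  return input;
}

const exampleInput = buildStepInput({ url: 'https://example.com' }, [
  { stepSlug: 'dep_a', output: 123 },
  { stepSlug: 'dep_b', output: 456 },
]);
console.log(JSON.stringify(exampleInput));
// {"run":{"url":"https://example.com"},"dep_a":123,"dep_b":456}
```

Note that in this sketch a dependency whose slug is literally `run` would overwrite the run input; the prompt does not say how such a collision should be handled.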

package/prompts/dsl-multi-arg.ts

DELETED

@@ -1,48 +0,0 @@
-const ScrapeWebsiteFlow = new Flow<Input>()
-  .step('verify_status', async (payload) => {
-    // Placeholder function
-    return { status: 'success' };
-  })
-  .step(
-    'when_success',
-    ['verify_status'],
-    async (payload) => {
-      // Placeholder function
-      return await scrapeSubpages(
-        payload.run.url,
-        payload.table_of_contents.urls_of_subpages
-      );
-    },
-    { runIf: { verify_status: { status: 'success' } } }
-  )
-  .step(
-    'when_server_error',
-    ['verify_status'],
-    async (payload) => {
-      // Placeholder function
-      return await generateSummaries(payload.subpages.contentsOfSubpages);
-    },
-    { runUnless: { verify_status: { status: 'success' } } }
-  )
-
-  .step(
-    'sentiments',
-    ['subpages'],
-    async (payload) => {
-      // Placeholder function
-      return await analyzeSentiments(payload.subpages.contentsOfSubpages);
-    },
-    { maxAttempts: 5, baseDelay: 10 }
-  )
-  .step(
-    'save_to_db',
-    ['subpages', 'summaries', 'sentiments'],
-    async (payload) => {
-      // Placeholder function
-      return await saveToDb(
-        payload.subpages,
-        payload.summaries,
-        payload.sentiments
-      );
-    }
-  );

package/prompts/dsl-options.md

DELETED

@@ -1,39 +0,0 @@
-# Flow DSL with options
-
-The idea is to add 4th argument to the `.step` method which will be an object
-for the step options:
-
-```ts
-{
-  runIf: Json;
-  runUnless: Json;
-  maxAttempts: number;
-  baseDelay: number;
-}
-```
-
-## Full flow example
-
-```ts
-const ScrapeWebsiteFlow = new Flow<Input>()
-  .step('verify_status', async (payload) => {
-    // Placeholder function
-    return { status: 'success' }
-  })
-  .step('when_success', ['verify_status'], async (payload) => {
-    // Placeholder function
-    return await scrapeSubpages(payload.run.url, payload.table_of_contents.urls_of_subpages);
-  }, { runIf: { status: 'success' } })
-  .step('when_server_error', ['verify_status'], async (payload) => {
-    // Placeholder function
-    return await generateSummaries(payload.subpages.contentsOfSubpages);
-  }, { runUnless: { status: 'success' } })
-  .step('sentiments', ['subpages'], async (payload) => {
-    // Placeholder function
-    return await analyzeSentiments(payload.subpages.contentsOfSubpages);
-  }, { maxAttempts: 5, baseDelay: 10 })
-  .step('save_to_db', ['subpages', 'summaries', 'sentiments'], async (payload) => {
-    // Placeholder function
-    return await saveToDb(payload.subpages, payload.summaries, payload.sentiments);
-  });
-```
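The "4th argument" idea from the deleted `dsl-options.md` can be sketched with TypeScript overloads. This is a minimal illustration under stated assumptions, not the DSL package's actual `Flow` class: `SketchFlow`, `StepDefinition`, and `Handler` are hypothetical names, all option fields are assumed to be optional (the examples pass only a subset), and options are assumed to be accepted only in the form that also lists dependencies.

```typescript
// Hypothetical sketch; the real Flow class is not reproduced here.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Option names come from the deleted document; optionality is an assumption.
interface StepOptions {
  runIf?: Json;
  runUnless?: Json;
  maxAttempts?: number;
  baseDelay?: number;
}

type Handler = (payload: Record<string, Json>) => Promise<Json> | Json;

interface StepDefinition {
  slug: string;
  deps: string[];
  handler: Handler;
  options: StepOptions;
}

class SketchFlow {
  readonly steps: StepDefinition[] = [];

  // Two-argument form: a root step without dependencies or options.
  step(slug: string, handler: Handler): this;
  // Four-argument form: dependencies, handler, then the options object.
  step(slug: string, deps: string[], handler: Handler, options?: StepOptions): this;
  step(
    slug: string,
    depsOrHandler: string[] | Handler,
    handler?: Handler,
    options?: StepOptions
  ): this {
    if (Array.isArray(depsOrHandler)) {
      if (!handler) throw new Error(`step "${slug}" is missing a handler`);
      this.steps.push({ slug, deps: depsOrHandler, handler, options: options ?? {} });
    } else {
      this.steps.push({ slug, deps: [], handler: depsOrHandler, options: {} });
    }
    return this;
  }
}

const sketch = new SketchFlow()
  .step('verify_status', async () => ({ status: 'success' }))
  .step(
    'sentiments',
    ['verify_status'],
    async (payload) => payload.verify_status ?? null,
    { maxAttempts: 5, baseDelay: 10 }
  );
console.log(sketch.steps.map((s) => s.slug));
```

Keeping the options as a trailing object leaves the existing two- and three-argument calls unchanged, which appears to be the motivation for the positional variant over the object-style one.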

@@ -1,51 +0,0 @@
-const ScrapeWebsiteFlow = new Flow<Input>()
-  .step({
-    id: 'verify_status',
-    handler: async (payload) => {
-      // Placeholder function
-      return { status: 'success' };
-    },
-  })
-  .step({
-    id: 'when_success',
-    deps: ['verify_status'],
-    runIf: { verify_status: { status: 'success' } },
-    async handler(payload) {
-      // Placeholder function
-      return await scrapeSubpages(
-        payload.run.url,
-        payload.table_of_contents.urls_of_subpages
-      );
-    }
-  })
-  .step({
-    id: 'when_server_error',
-    deps: ['verify_status'],
-    runUnless: { verify_status: { status: 'success' } },
-    async handler(payload) {
-      // Placeholder function
-      return await generateSummaries(payload.subpages.contentsOfSubpages);
-    }
-  })
-  .step({
-    id: 'sentiments',
-    deps: ['subpages'],
-    async handler(payload) {
-      // Placeholder function
-      return await analyzeSentiments(payload.subpages.contentsOfSubpages);
-    },
-    maxAttempts: 5,
-    baseDelay: 10
-  })
-  .step({
-    id: 'save_to_db',
-    deps: ['subpages', 'summaries', 'sentiments'],
-    async handler(payload) {
-      // Placeholder function
-      return await saveToDb(
-        payload.subpages,
-        payload.summaries,
-        payload.sentiments
-      );
-    },
-  });
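The object-style example above lists `subpages` and `summaries` in `deps`, although no step with those ids is declared in the snippet. A minimal sketch of a check that would surface this follows. It assumes that a step must be declared before another step can depend on it, which the source does not state; `StepSpec` and `findMissingDeps` are hypothetical names.

```typescript
// Hypothetical shape mirroring the `id` and `deps` fields of the object-style steps.
interface StepSpec {
  id: string;
  deps?: string[];
}

// Return every (step, dep) pair where `dep` was not declared by an earlier step.
function findMissingDeps(
  steps: StepSpec[]
): Array<{ step: string; missing: string }> {
  const seen = new Set<string>();
  const problems: Array<{ step: string; missing: string }> = [];
  for (const step of steps) {
    for (const dep of step.deps ?? []) {
      if (!seen.has(dep)) problems.push({ step: step.id, missing: dep });
    }
    seen.add(step.id);
  }
  return problems;
}

// Step ids and deps copied from the deleted example.
const missing = findMissingDeps([
  { id: 'verify_status' },
  { id: 'when_success', deps: ['verify_status'] },
  { id: 'when_server_error', deps: ['verify_status'] },
  { id: 'sentiments', deps: ['subpages'] },
  { id: 'save_to_db', deps: ['subpages', 'summaries', 'sentiments'] },
]);
console.log(missing.map((p) => `${p.step} -> ${p.missing}`));
// [ 'sentiments -> subpages', 'save_to_db -> subpages', 'save_to_db -> summaries' ]
```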