@lobehub/chat 1.99.6 → 1.100.1

- package/.cursor/rules/testing-guide/testing-guide.mdc +173 -0

- package/.github/workflows/desktop-pr-build.yml +3 -3

- package/.github/workflows/release-desktop-beta.yml +3 -3

- package/CHANGELOG.md +50 -0

- package/apps/desktop/package.json +5 -2

- package/apps/desktop/src/main/controllers/AuthCtr.ts +310 -111

- package/apps/desktop/src/main/controllers/NetworkProxyCtr.ts +1 -1

- package/apps/desktop/src/main/controllers/RemoteServerConfigCtr.ts +50 -3

- package/apps/desktop/src/main/controllers/RemoteServerSyncCtr.ts +188 -23

- package/apps/desktop/src/main/controllers/__tests__/NetworkProxyCtr.test.ts +37 -18

- package/apps/desktop/src/main/types/store.ts +1 -0

- package/apps/desktop/src/preload/electronApi.ts +2 -1

- package/apps/desktop/src/preload/streamer.ts +58 -0

- package/changelog/v1.json +18 -0

- package/docs/development/database-schema.dbml +9 -0

- package/docs/self-hosting/environment-variables/model-provider.mdx +25 -0

- package/docs/self-hosting/environment-variables/model-provider.zh-CN.mdx +25 -0

- package/docs/self-hosting/faq/vercel-ai-image-timeout.mdx +65 -0

- package/docs/self-hosting/faq/vercel-ai-image-timeout.zh-CN.mdx +63 -0

- package/docs/usage/providers/fal.mdx +6 -6

- package/docs/usage/providers/fal.zh-CN.mdx +6 -6

- package/locales/ar/electron.json +3 -0

- package/locales/ar/oauth.json +8 -4

- package/locales/bg-BG/electron.json +3 -0

- package/locales/bg-BG/oauth.json +8 -4

- package/locales/de-DE/electron.json +3 -0

- package/locales/de-DE/oauth.json +9 -5

- package/locales/en-US/electron.json +3 -0

- package/locales/en-US/oauth.json +8 -4

- package/locales/es-ES/electron.json +3 -0

- package/locales/es-ES/oauth.json +9 -5

- package/locales/fa-IR/electron.json +3 -0

- package/locales/fa-IR/oauth.json +8 -4

- package/locales/fr-FR/electron.json +3 -0

- package/locales/fr-FR/oauth.json +8 -4

- package/locales/it-IT/electron.json +3 -0

- package/locales/it-IT/oauth.json +9 -5

- package/locales/ja-JP/electron.json +3 -0

- package/locales/ja-JP/oauth.json +8 -4

- package/locales/ko-KR/electron.json +3 -0

- package/locales/ko-KR/oauth.json +8 -4

- package/locales/nl-NL/electron.json +3 -0

- package/locales/nl-NL/oauth.json +9 -5

- package/locales/pl-PL/electron.json +3 -0

- package/locales/pl-PL/oauth.json +8 -4

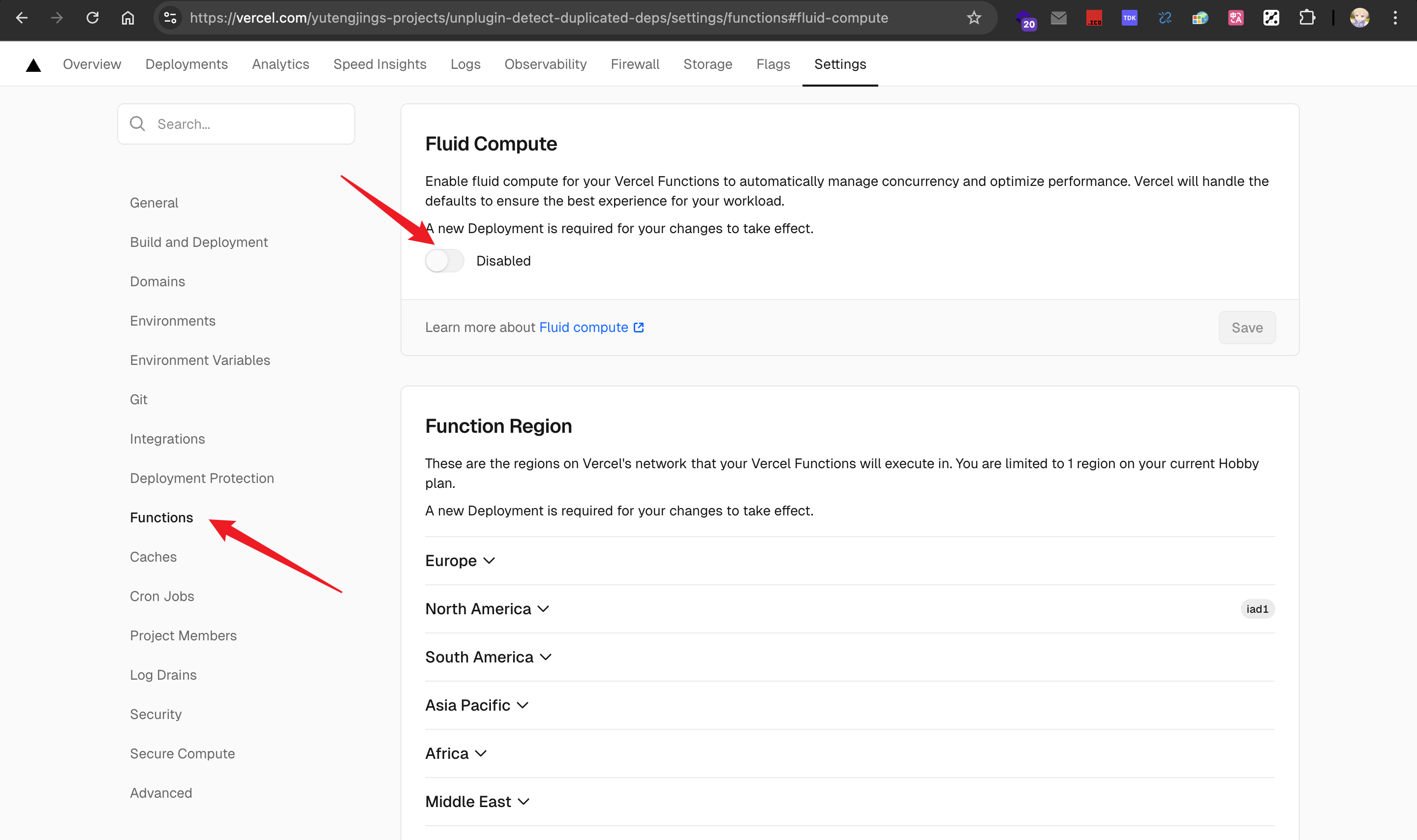

- package/locales/pt-BR/electron.json +3 -0

- package/locales/pt-BR/oauth.json +8 -4

- package/locales/ru-RU/electron.json +3 -0

- package/locales/ru-RU/oauth.json +8 -4

- package/locales/tr-TR/electron.json +3 -0

- package/locales/tr-TR/oauth.json +8 -4

- package/locales/vi-VN/electron.json +3 -0

- package/locales/vi-VN/oauth.json +9 -5

- package/locales/zh-CN/electron.json +3 -0

- package/locales/zh-CN/oauth.json +8 -4

- package/locales/zh-TW/electron.json +3 -0

- package/locales/zh-TW/oauth.json +8 -4

- package/package.json +3 -3

- package/packages/electron-client-ipc/src/dispatch.ts +14 -2

- package/packages/electron-client-ipc/src/index.ts +1 -0

- package/packages/electron-client-ipc/src/streamInvoke.ts +62 -0

- package/packages/electron-client-ipc/src/types/proxyTRPCRequest.ts +5 -0

- package/packages/electron-client-ipc/src/utils/headers.ts +27 -0

- package/packages/electron-client-ipc/src/utils/request.ts +28 -0

- package/src/app/(backend)/oidc/callback/desktop/route.ts +58 -0

- package/src/app/(backend)/oidc/handoff/route.ts +46 -0

- package/src/app/[variants]/oauth/callback/error/page.tsx +55 -0

- package/src/app/[variants]/oauth/callback/layout.tsx +12 -0

- package/src/app/[variants]/oauth/callback/loading.tsx +3 -0

- package/src/app/[variants]/oauth/{consent/[uid] → callback}/success/page.tsx +10 -1

- package/src/app/[variants]/oauth/consent/[uid]/Consent.tsx +7 -1

- package/src/database/client/migrations.json +8 -0

- package/src/database/migrations/0028_oauth_handoffs.sql +8 -0

- package/src/database/migrations/meta/0028_snapshot.json +6055 -0

- package/src/database/migrations/meta/_journal.json +7 -0

- package/src/database/models/oauthHandoff.ts +94 -0

- package/src/database/repositories/tableViewer/index.test.ts +1 -1

- package/src/database/schemas/oidc.ts +46 -0

- package/src/features/ElectronTitlebar/Connection/Waiting.tsx +59 -115

- package/src/features/ElectronTitlebar/Connection/WaitingAnim.tsx +114 -0

- package/src/libs/model-runtime/utils/openaiCompatibleFactory/index.test.ts +1 -1

- package/src/libs/model-runtime/utils/openaiCompatibleFactory/index.ts +2 -1

- package/src/libs/oidc-provider/config.ts +16 -17

- package/src/libs/oidc-provider/jwt.ts +135 -0

- package/src/libs/oidc-provider/provider.ts +22 -38

- package/src/libs/trpc/client/async.ts +1 -2

- package/src/libs/trpc/client/edge.ts +1 -2

- package/src/libs/trpc/client/lambda.ts +1 -1

- package/src/libs/trpc/client/tools.ts +1 -2

- package/src/libs/trpc/lambda/context.ts +9 -16

- package/src/locales/default/electron.ts +3 -0

- package/src/locales/default/oauth.ts +8 -4

- package/src/middleware.ts +10 -4

- package/src/server/globalConfig/genServerAiProviderConfig.test.ts +235 -0

- package/src/server/globalConfig/genServerAiProviderConfig.ts +9 -10

- package/src/server/services/oidc/index.ts +0 -71

- package/src/services/chat.ts +5 -1

- package/src/services/electron/remoteServer.ts +0 -7

- package/src/store/aiInfra/slices/aiProvider/action.ts +2 -1

- package/src/{libs/trpc/client/helpers → utils/electron}/desktopRemoteRPCFetch.ts +22 -7

- package/src/utils/getFallbackModelProperty.test.ts +193 -0

- package/src/utils/getFallbackModelProperty.ts +36 -0

- package/src/utils/parseModels.test.ts +150 -48

- package/src/utils/parseModels.ts +26 -11

- package/src/utils/server/auth.ts +22 -0

- package/src/app/[variants]/oauth/consent/[uid]/failed/page.tsx +0 -36

- package/src/app/[variants]/oauth/handoff/Client.tsx +0 -98

- package/src/app/[variants]/oauth/handoff/page.tsx +0 -13

|

@@ -1,12 +1,17 @@

|

|

|

1

1

|

import {

|

|

2

2

|

ProxyTRPCRequestParams,

|

|

3

3

|

ProxyTRPCRequestResult,

|

|

4

|

-

|

|

4

|

+

ProxyTRPCStreamRequestParams,

|

|

5

|

+

} from '@lobechat/electron-client-ipc';

|

|

6

|

+

import { IpcMainEvent, WebContents, ipcMain } from 'electron';

|

|

7

|

+

import { HttpProxyAgent } from 'http-proxy-agent';

|

|

8

|

+

import { HttpsProxyAgent } from 'https-proxy-agent';

|

|

5

9

|

import { Buffer } from 'node:buffer';

|

|

6

10

|

import http, { IncomingMessage, OutgoingHttpHeaders } from 'node:http';

|

|

7

11

|

import https from 'node:https';

|

|

8

12

|

import { URL } from 'node:url';

|

|

9

13

|

|

|

14

|

+

import { defaultProxySettings } from '@/const/store';

|

|

10

15

|

import { createLogger } from '@/utils/logger';

|

|

11

16

|

|

|

12

17

|

import RemoteServerConfigCtr from './RemoteServerConfigCtr';

|

|

@@ -41,6 +46,137 @@ export default class RemoteServerSyncCtr extends ControllerModule {

|

|

|

41

46

|

afterAppReady() {

|

|

42

47

|

logger.info('RemoteServerSyncCtr initialized (IPC based)');

|

|

43

48

|

// No need to register protocol handler anymore

|

|

49

|

+

ipcMain.on('stream:start', this.handleStreamRequest);

|

|

50

|

+

}

|

|

51

|

+

|

|

52

|

+

/**

|

|

53

|

+

* Handles the IPC call for streaming requests

|

|

54

|

+

*/

|

|

55

|

+

private handleStreamRequest = async (event: IpcMainEvent, args: ProxyTRPCStreamRequestParams) => {

|

|

56

|

+

const { requestId } = args;

|

|

57

|

+

const logPrefix = `[StreamProxy ${args.method} ${args.urlPath}][${requestId}]`;

|

|

58

|

+

logger.debug(`${logPrefix} Received stream:start IPC call`);

|

|

59

|

+

|

|

60

|

+

try {

|

|

61

|

+

const config = await this.remoteServerConfigCtr.getRemoteServerConfig();

|

|

62

|

+

if (!config.active || (config.storageMode === 'selfHost' && !config.remoteServerUrl)) {

|

|

63

|

+

logger.warn(`${logPrefix} Remote server sync not active or configured.`);

|

|

64

|

+

event.sender.send(

|

|

65

|

+

`stream:error:${requestId}`,

|

|

66

|

+

new Error('Remote server sync not active or configured'),

|

|

67

|

+

);

|

|

68

|

+

return;

|

|

69

|

+

}

|

|

70

|

+

|

|

71

|

+

const remoteServerUrl = await this.remoteServerConfigCtr.getRemoteServerUrl();

|

|

72

|

+

const token = await this.remoteServerConfigCtr.getAccessToken();

|

|

73

|

+

|

|

74

|

+

if (!token) {

|

|

75

|

+

// 401 Unauthorized

|

|

76

|

+

event.sender.send(`stream:response:${requestId}`, {

|

|

77

|

+

headers: {},

|

|

78

|

+

status: 401,

|

|

79

|

+

statusText: 'Authentication required, missing token',

|

|

80

|

+

});

|

|

81

|

+

event.sender.send(`stream:end:${requestId}`);

|

|

82

|

+

return;

|

|

83

|

+

}

|

|

84

|

+

|

|

85

|

+

// Call the new stream forwarding method

|

|

86

|

+

await this.forwardStreamRequest(event.sender, {

|

|

87

|

+

...args,

|

|

88

|

+

accessToken: token,

|

|

89

|

+

remoteServerUrl,

|

|

90

|

+

});

|

|

91

|

+

} catch (error) {

|

|

92

|

+

logger.error(`${logPrefix} Unhandled error processing stream request:`, error);

|

|

93

|

+

event.sender.send(

|

|

94

|

+

`stream:error:${requestId}`,

|

|

95

|

+

error instanceof Error ? error : new Error('Unknown error'),

|

|

96

|

+

);

|

|

97

|

+

}

|

|

98

|

+

};

|

|

99

|

+

|

|

100

|

+

/**

|

|

101

|

+

* Performs the actual forwarding of the streaming request

|

|

102

|

+

*/

|

|

103

|

+

private async forwardStreamRequest(

|

|

104

|

+

sender: WebContents,

|

|

105

|

+

args: ProxyTRPCStreamRequestParams & { accessToken: string; remoteServerUrl: string },

|

|

106

|

+

) {

|

|

107

|

+

const {

|

|

108

|

+

urlPath,

|

|

109

|

+

method,

|

|

110

|

+

headers: originalHeaders,

|

|

111

|

+

body: requestBody,

|

|

112

|

+

accessToken,

|

|

113

|

+

remoteServerUrl,

|

|

114

|

+

requestId,

|

|

115

|

+

} = args;

|

|

116

|

+

const targetUrl = new URL(urlPath, remoteServerUrl);

|

|

117

|

+

const logPrefix = `[ForwardStream ${method} ${targetUrl.pathname}][${requestId}]`;

|

|

118

|

+

|

|

119

|

+

const { requestOptions, requester } = this.createRequester({

|

|

120

|

+

accessToken,

|

|

121

|

+

headers: originalHeaders,

|

|

122

|

+

method,

|

|

123

|

+

url: targetUrl,

|

|

124

|

+

});

|

|

125

|

+

|

|

126

|

+

const clientReq = requester.request(requestOptions, (clientRes: IncomingMessage) => {

|

|

127

|

+

logger.debug(`${logPrefix} Received response with status ${clientRes.statusCode}`);

|

|

128

|

+

|

|

129

|

+

// Add debug information

|

|

130

|

+

logger.debug(`${logPrefix} Response details:`, {

|

|

131

|

+

headers: clientRes.headers,

|

|

132

|

+

statusCode: clientRes.statusCode,

|

|

133

|

+

statusMessage: clientRes.statusMessage,

|

|

134

|

+

});

|

|

135

|

+

|

|

136

|

+

// 1. Send the response headers and status code immediately

|

|

137

|

+

const responseData = {

|

|

138

|

+

headers: clientRes.headers || {},

|

|

139

|

+

status: clientRes.statusCode || 500,

|

|

140

|

+

statusText: clientRes.statusMessage || 'Unknown Status',

|

|

141

|

+

};

|

|

142

|

+

|

|

143

|

+

logger.debug(`${logPrefix} Sending response data:`, responseData);

|

|

144

|

+

sender.send(`stream:response:${requestId}`, responseData);

|

|

145

|

+

|

|

146

|

+

// 2. Listen for data chunks and forward them

|

|

147

|

+

clientRes.on('data', (chunk: Buffer) => {

|

|

148

|

+

if (sender.isDestroyed()) return;

|

|

149

|

+

logger.debug(`${logPrefix} Received data chunk, size: ${chunk.length}. Forwarding...`);

|

|

150

|

+

sender.send(`stream:data:${requestId}`, chunk);

|

|

151

|

+

});

|

|

152

|

+

|

|

153

|

+

// 3. Listen for the end signal and forward it

|

|

154

|

+

clientRes.on('end', () => {

|

|

155

|

+

logger.debug(`${logPrefix} Stream ended. Forwarding end signal...`);

|

|

156

|

+

if (sender.isDestroyed()) return;

|

|

157

|

+

sender.send(`stream:end:${requestId}`);

|

|

158

|

+

});

|

|

159

|

+

|

|

160

|

+

// 4. Listen for response stream errors and forward them

|

|

161

|

+

clientRes.on('error', (error) => {

|

|

162

|

+

logger.error(`${logPrefix} Error reading response stream:`, error);

|

|

163

|

+

if (sender.isDestroyed()) return;

|

|

164

|

+

sender.send(`stream:error:${requestId}`, error);

|

|

165

|

+

});

|

|

166

|

+

});

|

|

167

|

+

|

|

168

|

+

// 5. Listen for errors on the request itself (e.g. DNS resolution failure)

|

|

169

|

+

clientReq.on('error', (error) => {

|

|

170

|

+

logger.error(`${logPrefix} Error forwarding request:`, error);

|

|

171

|

+

if (sender.isDestroyed()) return;

|

|

172

|

+

sender.send(`stream:error:${requestId}`, error);

|

|

173

|

+

});

|

|

174

|

+

|

|

175

|

+

if (requestBody) {

|

|

176

|

+

clientReq.write(Buffer.from(requestBody));

|

|

177

|

+

}

|

|

178

|

+

|

|

179

|

+

clientReq.end();

|

|

44

180

|

}

|

|

45

181

|

|

|

46

182

|

/**

|

|

@@ -85,28 +221,12 @@ export default class RemoteServerSyncCtr extends ControllerModule {

|

|

|

85

221

|

|

|

86

222

|

// 1. Determine target URL and prepare request options

|

|

87

223

|

const targetUrl = new URL(urlPath, remoteServerUrl); // Combine base URL and path

|

|

88

|

-

|

|

89

|

-

|

|

90

|

-

|

|

91

|

-

|

|

92

|

-

|

|

93

|

-

|

|

94

|

-

delete requestHeaders['host'];

|

|

95

|

-

delete requestHeaders['connection']; // Often causes issues

|

|

96

|

-

// delete requestHeaders['content-length']; // Let node handle it based on body

|

|

97

|

-

|

|

98

|

-

const requestOptions: https.RequestOptions | http.RequestOptions = {

|

|

99

|

-

// Use union type

|

|

100

|

-

headers: requestHeaders,

|

|

101

|

-

hostname: targetUrl.hostname,

|

|

102

|

-

method: method,

|

|

103

|

-

path: targetUrl.pathname + targetUrl.search,

|

|

104

|

-

port: targetUrl.port || (targetUrl.protocol === 'https:' ? 443 : 80),

|

|

105

|

-

protocol: targetUrl.protocol,

|

|

106

|

-

// agent: false, // Consider for keep-alive issues if they arise

|

|

107

|

-

};

|

|

108

|

-

|

|

109

|

-

const requester = targetUrl.protocol === 'https:' ? https : http;

|

|

224

|

+

const { requestOptions, requester } = this.createRequester({

|

|

225

|

+

accessToken,

|

|

226

|

+

headers: originalHeaders,

|

|

227

|

+

method,

|

|

228

|

+

url: targetUrl,

|

|

229

|

+

});

|

|

110

230

|

|

|

111

231

|

// 2. Make the request and capture response

|

|

112

232

|

return new Promise((resolve) => {

|

|

@@ -176,6 +296,51 @@ export default class RemoteServerSyncCtr extends ControllerModule {

|

|

|

176

296

|

});

|

|

177

297

|

}

|

|

178

298

|

|

|

299

|

+

private createRequester({

|

|

300

|

+

headers,

|

|

301

|

+

accessToken,

|

|

302

|

+

method,

|

|

303

|

+

url,

|

|

304

|

+

}: {

|

|

305

|

+

accessToken: string;

|

|

306

|

+

headers: Record<string, string>;

|

|

307

|

+

method: string;

|

|

308

|

+

url: URL;

|

|

309

|

+

}) {

|

|

310

|

+

// Prepare headers, cloning and adding Oidc-Auth

|

|

311

|

+

const requestHeaders: OutgoingHttpHeaders = { ...headers }; // Use OutgoingHttpHeaders

|

|

312

|

+

requestHeaders['Oidc-Auth'] = accessToken;

|

|

313

|

+

|

|

314

|

+

// Let node handle Host, Content-Length etc. Remove potentially problematic headers

|

|

315

|

+

delete requestHeaders['host'];

|

|

316

|

+

delete requestHeaders['connection']; // Often causes issues

|

|

317

|

+

// delete requestHeaders['content-length']; // Let node handle it based on body

|

|

318

|

+

|

|

319

|

+

// Read the proxy configuration

|

|

320

|

+

const proxyConfig = this.app.storeManager.get('networkProxy', defaultProxySettings);

|

|

321

|

+

|

|

322

|

+

let agent;

|

|

323

|

+

if (proxyConfig?.enableProxy && proxyConfig.proxyServer) {

|

|

324

|

+

const proxyUrl = `${proxyConfig.proxyType}://${proxyConfig.proxyServer}${proxyConfig.proxyPort ? `:${proxyConfig.proxyPort}` : ''}`;

|

|

325

|

+

agent =

|

|

326

|

+

url.protocol === 'https:' ? new HttpsProxyAgent(proxyUrl) : new HttpProxyAgent(proxyUrl);

|

|

327

|

+

}

|

|

328

|

+

|

|

329

|

+

const requestOptions: https.RequestOptions | http.RequestOptions = {

|

|

330

|

+

agent,

|

|

331

|

+

// Use union type

|

|

332

|

+

headers: requestHeaders,

|

|

333

|

+

hostname: url.hostname,

|

|

334

|

+

method: method,

|

|

335

|

+

path: url.pathname + url.search,

|

|

336

|

+

port: url.port || (url.protocol === 'https:' ? 443 : 80),

|

|

337

|

+

protocol: url.protocol,

|

|

338

|

+

};

|

|

339

|

+

|

|

340

|

+

const requester = url.protocol === 'https:' ? https : http;

|

|

341

|

+

return { requestOptions, requester };

|

|

342

|

+

}

|

|

343

|

+

|

|

179

344

|

/**

|

|

180

345

|

* Handles the 'proxy-trpc-request' IPC call from the renderer process.

|

|

181

346

|

* This method should be invoked by the ipcMain.handle setup in your main process entry point.

|

|
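The header preparation and proxy URL construction performed by `createRequester` in the hunk above can be sketched in isolation. This is a minimal illustration under stated assumptions, not the package's code: `ProxySettingsSketch`, `prepareHeaders`, and `buildProxyUrl` are hypothetical names, and the settings shape is inferred only from how `proxyConfig` is used in the diff.

```typescript
// Assumed shape: only the fields that createRequester reads from proxyConfig.
// The real NetworkProxySettings type is not shown in this diff.
interface ProxySettingsSketch {
  enableProxy: boolean;
  proxyPort?: string;
  proxyServer?: string;
  proxyType: string; // e.g. 'http' or 'socks5' (assumed example values)
}

// Hypothetical helper: clones the incoming headers, attaches the access token
// as Oidc-Auth, and drops the lowercase 'host' and 'connection' keys so that
// Node can set them itself, mirroring the deletes in the diff.
function prepareHeaders(
  headers: Record<string, string>,
  accessToken: string,
): Record<string, string> {
  const result: Record<string, string> = { ...headers, 'Oidc-Auth': accessToken };
  delete result['host'];
  delete result['connection'];
  return result;
}

// Hypothetical helper: builds the proxy URL with the same template as the
// diff, or returns undefined when the proxy is disabled or has no server.
function buildProxyUrl(config?: ProxySettingsSketch): string | undefined {
  if (!config?.enableProxy || !config.proxyServer) return undefined;
  const port = config.proxyPort ? `:${config.proxyPort}` : '';
  return `${config.proxyType}://${config.proxyServer}${port}`;
}
```

In the diff, the resulting URL is passed to `HttpsProxyAgent` or `HttpProxyAgent` depending on the target protocol; the sketch stops before that step so that it stays free of external dependencies.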

@@ -15,11 +15,12 @@ vi.mock('@/utils/logger', () => ({

|

|

|

15

15

|

}),

|

|

16

16

|

}));

|

|

17

17

|

|

|

18

|

-

// Mock undici

|

|

18

|

+

// Mock undici - create the mocks directly with vi.fn()

|

|

19

19

|

vi.mock('undici', () => ({

|

|

20

20

|

fetch: vi.fn(),

|

|

21

21

|

getGlobalDispatcher: vi.fn(),

|

|

22

22

|

setGlobalDispatcher: vi.fn(),

|

|

23

|

+

Agent: vi.fn(),

|

|

23

24

|

ProxyAgent: vi.fn(),

|

|

24

25

|

}));

|

|

25

26

|

|

|

@@ -35,9 +36,6 @@ vi.mock('@/const/store', () => ({

|

|

|

35

36

|

},

|

|

36

37

|

}));

|

|

37

38

|

|

|

38

|

-

// Mock fetch

|

|

39

|

-

global.fetch = vi.fn();

|

|

40

|

-

|

|

41

39

|

// Mock App and its dependencies

|

|

42

40

|

const mockStoreManager = {

|

|

43

41

|

get: vi.fn(),

|

|

@@ -51,12 +49,31 @@ const mockApp = {

|

|

|

51

49

|

describe('NetworkProxyCtr', () => {

|

|

52

50

|

let networkProxyCtr: NetworkProxyCtr;

|

|

53

51

|

|

|

54

|

-

|

|

52

|

+

// Dynamically import the undici mock

|

|

53

|

+

let mockUndici: any;

|

|

54

|

+

|

|

55

|

+

beforeEach(async () => {

|

|

55

56

|

vi.clearAllMocks();

|

|

57

|

+

|

|

58

|

+

// Dynamically import the undici mock

|

|

59

|

+

mockUndici = await import('undici');

|

|

60

|

+

|

|

56

61

|

networkProxyCtr = new NetworkProxyCtr(mockApp);

|

|

57

62

|

|

|

58

|

-

//

|

|

59

|

-

(

|

|

63

|

+

// Set default return values for the undici mocks

|

|

64

|

+

vi.mocked(mockUndici.Agent).mockReturnValue({});

|

|

65

|

+

vi.mocked(mockUndici.ProxyAgent).mockReturnValue({});

|

|

66

|

+

vi.mocked(mockUndici.getGlobalDispatcher).mockReturnValue({

|

|

67

|

+

destroy: vi.fn().mockResolvedValue(undefined),

|

|

68

|

+

});

|

|

69

|

+

vi.mocked(mockUndici.setGlobalDispatcher).mockReturnValue(undefined);

|

|

70

|

+

|

|

71

|

+

// Set the default return value for the fetch mock

|

|

72

|

+

vi.mocked(mockUndici.fetch).mockResolvedValue({

|

|

73

|

+

ok: true,

|

|

74

|

+

status: 200,

|

|

75

|

+

statusText: 'OK',

|

|

76

|

+

});

|

|

60

77

|

});

|

|

61

78

|

|

|

62

79

|

describe('ProxyConfigValidator', () => {

|

|

@@ -213,12 +230,12 @@ describe('NetworkProxyCtr', () => {

|

|

|

213

230

|

statusText: 'OK',

|

|

214

231

|

};

|

|

215

232

|

|

|

216

|

-

(

|

|

233

|

+

vi.mocked(mockUndici.fetch).mockResolvedValueOnce(mockResponse);

|

|

217

234

|

|

|

218

235

|

const result = await networkProxyCtr.testProxyConnection('https://www.google.com');

|

|

219

236

|

|

|

220

237

|

expect(result).toEqual({ success: true });

|

|

221

|

-

expect(

|

|

238

|

+

expect(mockUndici.fetch).toHaveBeenCalledWith('https://www.google.com', expect.any(Object));

|

|

222

239

|

});

|

|

223

240

|

|

|

224

241

|

it('should throw error for failed connection', async () => {

|

|

@@ -228,13 +245,13 @@ describe('NetworkProxyCtr', () => {

|

|

|

228

245

|

statusText: 'Not Found',

|

|

229

246

|

};

|

|

230

247

|

|

|

231

|

-

(

|

|

248

|

+

vi.mocked(mockUndici.fetch).mockResolvedValueOnce(mockResponse);

|

|

232

249

|

|

|

233

250

|

await expect(networkProxyCtr.testProxyConnection('https://www.google.com')).rejects.toThrow();

|

|

234

251

|

});

|

|

235

252

|

|

|

236

253

|

it('should throw error for network error', async () => {

|

|

237

|

-

(

|

|

254

|

+

vi.mocked(mockUndici.fetch).mockRejectedValueOnce(new Error('Network error'));

|

|

238

255

|

|

|

239

256

|

await expect(networkProxyCtr.testProxyConnection('https://www.google.com')).rejects.toThrow();

|

|

240

257

|

});

|

|

@@ -257,7 +274,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

257

274

|

statusText: 'OK',

|

|

258

275

|

};

|

|

259

276

|

|

|

260

|

-

(

|

|

277

|

+

vi.mocked(mockUndici.fetch).mockResolvedValueOnce(mockResponse);

|

|

261

278

|

|

|

262

279

|

const result = await networkProxyCtr.testProxyConfig({ config: validConfig });

|

|

263

280

|

|

|

@@ -289,7 +306,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

289

306

|

statusText: 'OK',

|

|

290

307

|

};

|

|

291

308

|

|

|

292

|

-

(

|

|

309

|

+

vi.mocked(mockUndici.fetch).mockResolvedValueOnce(mockResponse);

|

|

293

310

|

|

|

294

311

|

const result = await networkProxyCtr.testProxyConfig({ config: disabledConfig });

|

|

295

312

|

|

|

@@ -297,7 +314,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

297

314

|

});

|

|

298

315

|

|

|

299

316

|

it('should return failure for connection error', async () => {

|

|

300

|

-

(

|

|

317

|

+

vi.mocked(mockUndici.fetch).mockRejectedValueOnce(new Error('Connection failed'));

|

|

301

318

|

|

|

302

319

|

const result = await networkProxyCtr.testProxyConfig({ config: validConfig });

|

|

303

320

|

|

|

@@ -306,7 +323,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

306

323

|

});

|

|

307

324

|

});

|

|

308

325

|

|

|

309

|

-

describe('

|

|

326

|

+

describe('beforeAppReady', () => {

|

|

310

327

|

it('should apply stored proxy settings on app ready', async () => {

|

|

311

328

|

const storedConfig: NetworkProxySettings = {

|

|

312

329

|

enableProxy: true,

|

|

@@ -319,7 +336,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

319

336

|

|

|

320

337

|

mockStoreManager.get.mockReturnValue(storedConfig);

|

|

321

338

|

|

|

322

|

-

await networkProxyCtr.

|

|

339

|

+

await networkProxyCtr.beforeAppReady();

|

|

323

340

|

|

|

324

341

|

expect(mockStoreManager.get).toHaveBeenCalledWith('networkProxy', expect.any(Object));

|

|

325

342

|

});

|

|

@@ -336,7 +353,7 @@ describe('NetworkProxyCtr', () => {

|

|

|

336

353

|

|

|

337

354

|

mockStoreManager.get.mockReturnValue(invalidConfig);

|

|

338

355

|

|

|

339

|

-

await networkProxyCtr.

|

|

356

|

+

await networkProxyCtr.beforeAppReady();

|

|

340

357

|

|

|

341

358

|

expect(mockStoreManager.get).toHaveBeenCalledWith('networkProxy', expect.any(Object));

|

|

342

359

|

});

|

|

@@ -347,7 +364,9 @@ describe('NetworkProxyCtr', () => {

|

|

|

347

364

|

});

|

|

348

365

|

|

|

349

366

|

// Should not throw an error

|

|

350

|

-

await expect(networkProxyCtr.

|

|

367

|

+

await expect(networkProxyCtr.beforeAppReady()).resolves.not.toThrow();

|

|

368

|

+

|

|

369

|

+

mockStoreManager.get.mockReset();

|

|

351

370

|

});

|

|

352

371

|

});

|

|

353

372

|

|

|

@@ -2,6 +2,7 @@ import { electronAPI } from '@electron-toolkit/preload';

|

|

|

2

2

|

import { contextBridge } from 'electron';

|

|

3

3

|

|

|

4

4

|

import { invoke } from './invoke';

|

|

5

|

+

import { onStreamInvoke } from './streamer';

|

|

5

6

|

|

|

6

7

|

export const setupElectronApi = () => {

|

|

7

8

|

// Use `contextBridge` APIs to expose Electron APIs to

|

|

@@ -14,5 +15,5 @@ export const setupElectronApi = () => {

|

|

|

14

15

|

console.error(error);

|

|

15

16

|

}

|

|

16

17

|

|

|

17

|

-

contextBridge.exposeInMainWorld('electronAPI', { invoke });

|

|

18

|

+

contextBridge.exposeInMainWorld('electronAPI', { invoke, onStreamInvoke });

|

|

18

19

|

};

|

|

@@ -0,0 +1,58 @@

|

|

|

1

|

+

import type { ProxyTRPCRequestParams } from '@lobechat/electron-client-ipc';

|

|

2

|

+

import { ipcRenderer } from 'electron';

|

|

3

|

+

import { v4 as uuid } from 'uuid';

|

|

4

|

+

|

|

5

|

+

interface StreamResponse {

|

|

6

|

+

headers: Record<string, string>;

|

|

7

|

+

status: number;

|

|

8

|

+

statusText: string;

|

|

9

|

+

}

|

|

10

|

+

|

|

11

|

+

export interface StreamerCallbacks {

|

|

12

|

+

onData: (chunk: Uint8Array) => void;

|

|

13

|

+

onEnd: () => void;

|

|

14

|

+

onError: (error: Error) => void;

|

|

15

|

+

onResponse: (response: StreamResponse) => void;

|

|

16

|

+

}

|

|

17

|

+

|

|

18

|

+

/**

|

|

19

|

+

* Calls the main process method and handles the stream response via callbacks.

|

|

20

|

+

* @param params The request parameters.

|

|

21

|

+

* @param callbacks The callbacks to handle stream events.

|

|

22

|

+

*/

|

|

23

|

+

export const onStreamInvoke = (

|

|

24

|

+

params: ProxyTRPCRequestParams,

|

|

25

|

+

callbacks: StreamerCallbacks,

|

|

26

|

+

): (() => void) => {

|

|

27

|

+

const requestId = uuid();

|

|

28

|

+

|

|

29

|

+

const cleanup = () => {

|

|

30

|

+

ipcRenderer.removeAllListeners(`stream:data:${requestId}`);

|

|

31

|

+

ipcRenderer.removeAllListeners(`stream:end:${requestId}`);

|

|

32

|

+

ipcRenderer.removeAllListeners(`stream:error:${requestId}`);

|

|

33

|

+

ipcRenderer.removeAllListeners(`stream:response:${requestId}`);

|

|

34

|

+

};

|

|

35

|

+

|

|

36

|

+

ipcRenderer.on(`stream:data:${requestId}`, (_, chunk: Buffer) => {

|

|

37

|

+

callbacks.onData(new Uint8Array(chunk));

|

|

38

|

+

});

|

|

39

|

+

|

|

40

|

+

ipcRenderer.once(`stream:end:${requestId}`, () => {

|

|

41

|

+

callbacks.onEnd();

|

|

42

|

+

cleanup();

|

|

43

|

+

});

|

|

44

|

+

|

|

45

|

+

ipcRenderer.once(`stream:error:${requestId}`, (_, error: Error) => {

|

|

46

|

+

callbacks.onError(error);

|

|

47

|

+

cleanup();

|

|

48

|

+

});

|

|

49

|

+

|

|

50

|

+

ipcRenderer.once(`stream:response:${requestId}`, (_, response: StreamResponse) => {

|

|

51

|

+

callbacks.onResponse(response);

|

|

52

|

+

});

|

|

53

|

+

|

|

54

|

+

ipcRenderer.send('stream:start', { ...params, requestId });

|

|

55

|

+

|

|

56

|

+

// Return a cleanup function to be called on cancellation

|

|

57

|

+

return cleanup;

|

|

58

|

+

};

|
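The callback contract of `onStreamInvoke` above (one `onResponse`, any number of `onData` chunks, then `onEnd` or `onError`) can be adapted to a standard `ReadableStream` on the renderer side. The following is a sketch under assumptions, not the package's `streamInvoke.ts`: `StreamInvoker` and `streamToReadable` are hypothetical names, and the two interfaces are copied from the diff so that the example is self-contained.

```typescript
// Copied from the streamer.ts diff so the sketch compiles on its own.
interface StreamResponse {
  headers: Record<string, string>;
  status: number;
  statusText: string;
}

interface StreamerCallbacks {
  onData: (chunk: Uint8Array) => void;
  onEnd: () => void;
  onError: (error: Error) => void;
  onResponse: (response: StreamResponse) => void;
}

// Hypothetical type: any function with the same contract as onStreamInvoke,
// i.e. it starts the request and returns a cleanup function.
type StreamInvoker<P> = (params: P, callbacks: StreamerCallbacks) => () => void;

// Hypothetical helper: resolves once the response head arrives and exposes
// the forwarded chunks as a ReadableStream.
function streamToReadable<P>(
  invoke: StreamInvoker<P>,
  params: P,
): Promise<{ body: ReadableStream<Uint8Array>; head: StreamResponse }> {
  return new Promise((resolve, reject) => {
    let controller!: ReadableStreamDefaultController<Uint8Array>;
    let headReceived = false;
    let cleanup: () => void = () => {};

    const body = new ReadableStream<Uint8Array>({
      // start() runs synchronously inside the constructor, so the controller
      // is available before any callback can fire.
      start(c) {
        controller = c;
      },
      // Cancelling the stream removes the IPC listeners.
      cancel() {
        cleanup();
      },
    });

    cleanup = invoke(params, {
      onData: (chunk) => controller.enqueue(chunk),
      onEnd: () => controller.close(),
      onError: (error) => {
        // Before the head arrives the promise fails; afterwards the stream does.
        if (headReceived) controller.error(error);
        else reject(error);
      },
      onResponse: (head) => {
        headReceived = true;
        resolve({ body, head });
      },
    });
  });
}
```

With this shape, a caller could build a `Response` from `head` and `body`; whether the package does so is not shown in this part of the diff.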

package/changelog/v1.json

CHANGED

|

@@ -1,4 +1,22 @@

|

|

|

1

1

|

[

|

|

2

|

+

{

|

|

3

|

+

"children": {

|

|

4

|

+

"fixes": [

|

|

5

|

+

"Use server env config image models."

|

|

6

|

+

]

|

|

7

|

+

},

|

|

8

|

+

"date": "2025-07-17",

|

|

9

|

+

"version": "1.100.1"

|

|

10

|

+

},

|

|

11

|

+

{

|

|

12

|

+

"children": {

|

|

13

|

+

"features": [

|

|

14

|

+

"Refactor desktop oauth and use JWTs token to support remote chat."

|

|

15

|

+

]

|

|

16

|

+

},

|

|

17

|

+

"date": "2025-07-17",

|

|

18

|

+

"version": "1.100.0"

|

|

19

|

+

},

|

|

2

20

|

{

|

|

3

21

|

"children": {

|

|

4

22

|

"fixes": [

|

|

@@ -431,6 +431,15 @@ table nextauth_verificationtokens {

|

|

|

431

431

|

}

|

|

432

432

|

}

|

|

433

433

|

|

|

434

|

+

table oauth_handoffs {

|

|

435

|

+

id text [pk, not null]

|

|

436

|

+

client varchar(50) [not null]

|

|

437

|

+

payload jsonb [not null]

|

|

438

|

+

accessed_at "timestamp with time zone" [not null, default: `now()`]

|

|

439

|

+

created_at "timestamp with time zone" [not null, default: `now()`]

|

|

440

|

+

updated_at "timestamp with time zone" [not null, default: `now()`]

|

|

441

|

+

}

|

|

442

|

+

|

|

434

443

|

table oidc_access_tokens {

|

|

435

444

|

id varchar(255) [pk, not null]

|

|

436

445

|

data jsonb [not null]

|

|

@@ -625,4 +625,29 @@ If you need to use Azure OpenAI to provide model services, you can refer to the

|

|

|

625

625

|

- Default: `-`

|

|

626

626

|

- Example: `-all,+qwq-32b,+deepseek-r1`

|

|

627

627

|

|

|

628

|

+

## FAL

|

|

629

|

+

|

|

630

|

+

### `ENABLED_FAL`

|

|

631

|

+

|

|

632

|

+

- Type: Optional

|

|

633

|

+

- Description: Enables FAL as a model provider by default. Set to `0` to disable the FAL service.

|

|

634

|

+

- Default: `1`

|

|

635

|

+

- Example: `0`

|

|

636

|

+

|

|

637

|

+

### `FAL_API_KEY`

|

|

638

|

+

|

|

639

|

+

- Type: Required

|

|

640

|

+

- Description: This is the API key you applied for in the FAL service.

|

|

641

|

+

- Default: -

|

|

642

|

+

- Example: `fal-xxxxxx...xxxxxx`

|

|

643

|

+

|

|

644

|

+

### `FAL_MODEL_LIST`

|

|

645

|

+

|

|

646

|

+

- Type: Optional

|

|

647

|

+

- Description: Used to control the FAL model list. Use `+` to add a model, `-` to hide a model, and `model_name=display_name` to customize the display name of a model. Separate multiple entries with commas. The definition syntax follows the same rules as other providers' model lists.

|

|

648

|

+

- Default: `-`

|

|

649

|

+

- Example: `-all,+fal-model-1,+fal-model-2=fal-special`

|

|

650

|

+

|

|

651

|

+

The above example disables all models first, then enables `fal-model-1` and `fal-model-2` (displayed as `fal-special`).

|

|

652

|

+

|

|

628

653

|

[model-list]: /docs/self-hosting/advanced/model-list

|

|
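The `FAL_MODEL_LIST` syntax described above (`+` to add, `-` to hide, `model_name=display_name` to rename, entries separated by commas) can be illustrated with a small parser. This is a simplified sketch, not the package's `parseModels` implementation: `ModelListSketch` and `parseModelListSketch` are hypothetical names, and treating an entry without a prefix as an addition is an assumption.

```typescript
// Hypothetical result shape for illustration only.
interface ModelListSketch {
  added: { displayName?: string; id: string }[];
  hideAll: boolean;
  removed: string[];
}

// Hypothetical parser for a value such as
// "-all,+fal-model-1,+fal-model-2=fal-special".
function parseModelListSketch(value: string): ModelListSketch {
  const result: ModelListSketch = { added: [], hideAll: false, removed: [] };

  for (const raw of value.split(',')) {
    const entry = raw.trim();
    if (!entry) continue;

    // "-all" hides every model before later entries re-enable some of them.
    if (entry === '-all') {
      result.hideAll = true;
      continue;
    }

    // "-name" hides a single model.
    if (entry.startsWith('-')) {
      result.removed.push(entry.slice(1));
      continue;
    }

    // Assumption: an entry without a prefix behaves like a "+" entry.
    const body = entry.startsWith('+') ? entry.slice(1) : entry;
    const eq = body.indexOf('=');
    if (eq === -1) {
      result.added.push({ id: body });
    } else {
      // "name=display" customizes the display name.
      result.added.push({ displayName: body.slice(eq + 1), id: body.slice(0, eq) });
    }
  }

  return result;
}
```

For the documented example, the sketch reports that all models are hidden first and that `fal-model-1` and `fal-model-2` are added back, the latter with the display name `fal-special`.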

@@ -624,4 +624,29 @@ LobeChat 在部署时提供了丰富的模型服务商相关的环境变量,

|

|

|

624

624

|

- 默认值:`-`

|

|

625

625

|

- 示例:`-all,+qwq-32b,+deepseek-r1`

|

|

626

626

|

|

|

627

|

+

## FAL

|

|

628

|

+

|

|

629

|

+

### `ENABLED_FAL`

|

|

630

|

+

|

|

631

|

+

- 类型:可选

|

|

632

|

+

- 描述:默认启用 FAL 作为模型供应商,当设为 0 时关闭 FAL 服务

|

|

633

|

+

- 默认值:`1`

|

|

634

|

+

- 示例:`0`

|

|

635

|

+

|

|

636

|

+

### `FAL_API_KEY`

|

|

637

|

+

|

|

638

|

+

- 类型:必选

|

|

639

|

+

- 描述:这是你在 FAL 服务中申请的 API 密钥

|

|

640

|

+

- 默认值:-

|

|

641

|

+

- 示例:`fal-xxxxxx...xxxxxx`

|

|

642

|

+

|

|

643

|

+

### `FAL_MODEL_LIST`

|

|

644

|

+

|

|

645

|

+

- 类型:可选

|

|

646

|

+

- 描述:用来控制 FAL 模型列表,使用 `+` 增加一个模型,使用 `-` 来隐藏一个模型,使用 `模型名=展示名` 来自定义模型的展示名,用英文逗号隔开。模型定义语法规则与其他 provider 保持一致。

|

|

647

|

+

- 默认值:`-`

|

|

648

|

+

- 示例:`-all,+fal-model-1,+fal-model-2=fal-special`

|

|

649

|

+

|

|

650

|

+

上述示例表示先禁用所有模型,再启用 `fal-model-1` 和 `fal-model-2`(显示名为 `fal-special`)。

|

|

651

|

+

|

|

627

652

|

[model-list]: /zh/docs/self-hosting/advanced/model-list

|

|

@@ -0,0 +1,65 @@

|

|

|

1

|

+

---

|

|

2

|

+

title: Resolving AI Image Generation Timeout on Vercel

|

|

3

|

+

description: >-

|

|

4

|

+

Learn how to resolve timeout issues when using AI image generation models like gpt-image-1 on Vercel by enabling Fluid Compute for extended execution time.

|

|

5

|

+

|

|

6

|

+

tags:

|

|

7

|

+

- Vercel

|

|

8

|

+

- AI Image Generation

|

|

9

|

+

- Timeout

|

|

10

|

+

- Fluid Compute

|

|

11

|

+

- gpt-image-1

|

|

12

|

+

---

|

|

13

|

+

|

|

14

|

+

# Resolving AI Image Generation Timeout on Vercel

|

|

15

|

+

|

|

16

|

+

## Problem Description

|

|

17

|

+

|

|

18

|

+

When using AI image generation models (such as `gpt-image-1`) on Vercel, you may encounter timeout errors. This occurs because AI image generation typically requires more than 1 minute to complete, which exceeds Vercel's default function execution time limit.

|

|

19

|

+

|

|

20

|

+

Common error symptoms include:

|

|

21

|

+

|

|

22

|

+

- Function timeout errors during image generation

|

|

23

|

+

- Failed image generation requests after approximately 60 seconds

|

|

24

|

+

- "Function execution timed out" messages

|

|

25

|

+

|

|

26

|

+

### Typical Log Symptoms

|

|

27

|

+

|

|

28

|

+

In your Vercel function logs, you may see entries like this:

|

|

29

|

+

|

|

30

|

+

```plaintext

|

|

31

|

+

JUL 16 18:39:09.51 POST 504 /trpc/async/image.createImage

|

|

32

|

+

Provider runtime map found for provider: openai

|

|

33

|

+

```

|

|

34

|

+

|

|

35

|

+

The key indicators are:

|

|

36

|

+

|

|

37

|

+

- **Status Code**: `504` (Gateway Timeout)

|

|

38

|

+

- **Endpoint**: `/trpc/async/image.createImage` or similar image generation endpoints

|

|

39

|

+

- **Timing**: Usually occurs around 60 seconds after the request starts

|

|

40

|

+

|

|

41

|

+

## Solution: Enable Fluid Compute

|

|

42

|

+

|

|

43

|

+

For projects created before Vercel's dashboard update, you can resolve this issue by enabling Fluid Compute, which extends the maximum execution duration to 300 seconds.

|

|

44

|

+

|

|

45

|

+

### Steps to Enable Fluid Compute (Legacy Vercel Dashboard)

|

|

46

|

+

|

|

47

|

+

1. Go to your project dashboard on Vercel

|

|

48

|

+

2. Navigate to the **Settings** tab

|

|

49

|

+

3. Find the **Functions** section

|

|

50

|

+

4. Enable **Fluid Compute** as shown in the screenshot below:

|

|

51

|

+

|

|

52

|

+

|

|

53

|

+

|

|

54

|

+

5. After enabling, the maximum execution duration will be extended to 300 seconds by default

|

|

55

|

+

|

|

56

|

+

### Important Notes

|

|

57

|

+

|

|

58

|

+

- **For new projects**: Newer Vercel projects have Fluid Compute enabled by default, so this issue primarily affects legacy projects

|

|

59

|

+

|

|

60

|

+

## Additional Resources

|

|

61

|

+

|

|

62

|

+

For more information about Vercel's function limitations and Fluid Compute:

|

|

63

|

+

|

|

64

|

+

- [Vercel Fluid Compute Documentation](https://vercel.com/docs/fluid-compute)

|

|

65

|

+

- [Vercel Functions Limitations](https://vercel.com/docs/functions/limitations#max-duration)

|