@lobehub/chat 1.100.0 → 1.100.1

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- package/.cursor/rules/testing-guide/testing-guide.mdc +173 -0

- package/CHANGELOG.md +25 -0

- package/changelog/v1.json +9 -0

- package/docs/self-hosting/environment-variables/model-provider.mdx +25 -0

- package/docs/self-hosting/environment-variables/model-provider.zh-CN.mdx +25 -0

- package/docs/self-hosting/faq/vercel-ai-image-timeout.mdx +65 -0

- package/docs/self-hosting/faq/vercel-ai-image-timeout.zh-CN.mdx +63 -0

- package/docs/usage/providers/fal.mdx +6 -6

- package/docs/usage/providers/fal.zh-CN.mdx +6 -6

- package/package.json +1 -1

- package/src/libs/model-runtime/utils/openaiCompatibleFactory/index.test.ts +1 -1

- package/src/libs/model-runtime/utils/openaiCompatibleFactory/index.ts +2 -1

- package/src/server/globalConfig/genServerAiProviderConfig.test.ts +235 -0

- package/src/server/globalConfig/genServerAiProviderConfig.ts +9 -10

- package/src/store/aiInfra/slices/aiProvider/action.ts +2 -1

- package/src/utils/getFallbackModelProperty.test.ts +193 -0

- package/src/utils/getFallbackModelProperty.ts +36 -0

- package/src/utils/parseModels.test.ts +150 -48

- package/src/utils/parseModels.ts +26 -11

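package/.cursor/rules/testing-guide/testing-guide.mdc

@@ -214,6 +214,176 @@ describe('<UserProfile />', () => {

**Fix**: Updated the mock data structure in the test file to match the latest API response format. Specifically, the structure of the `mockUserData` object in `user.test.ts` was changed.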

|

|

215

215

|

```

|

|

216

216

|

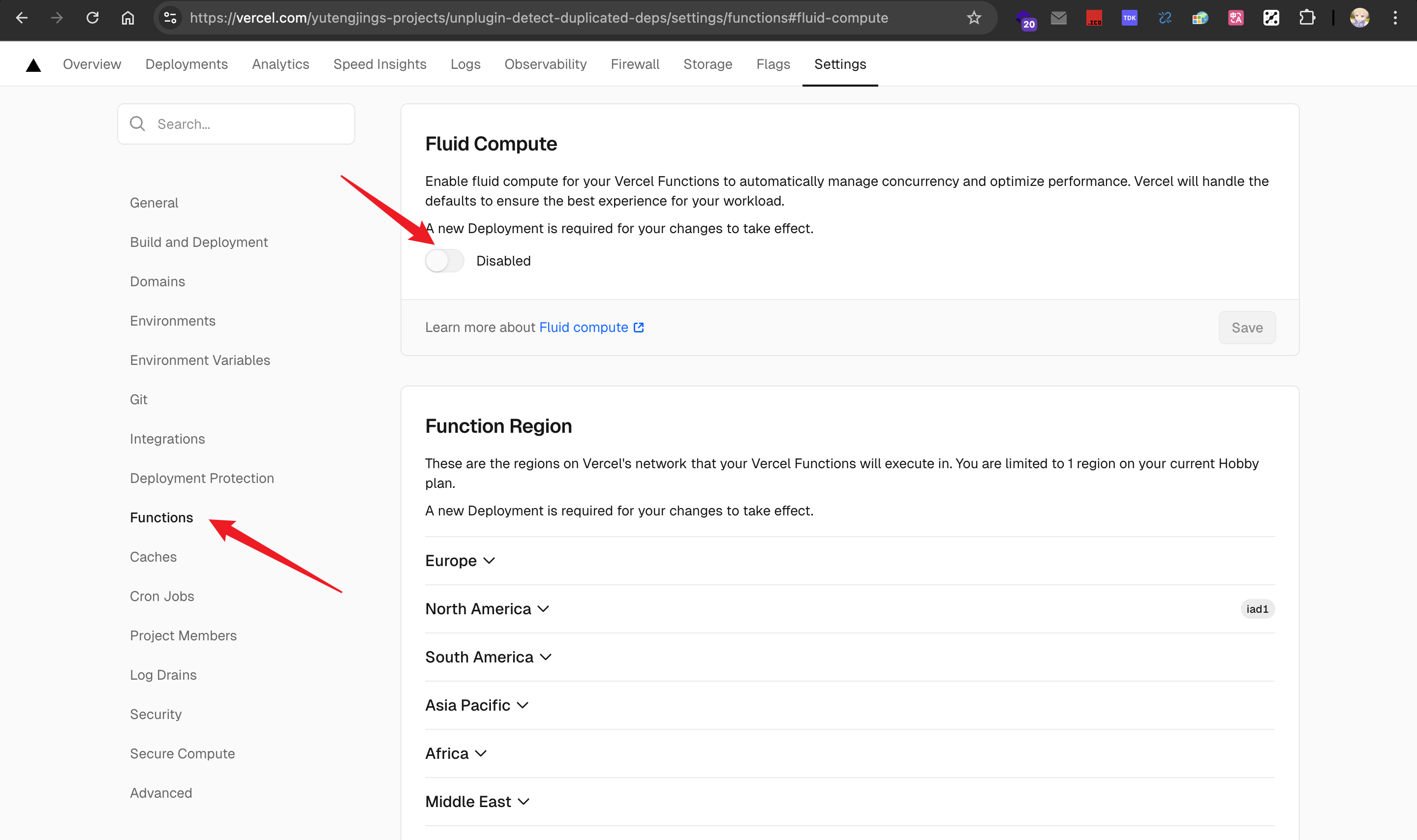

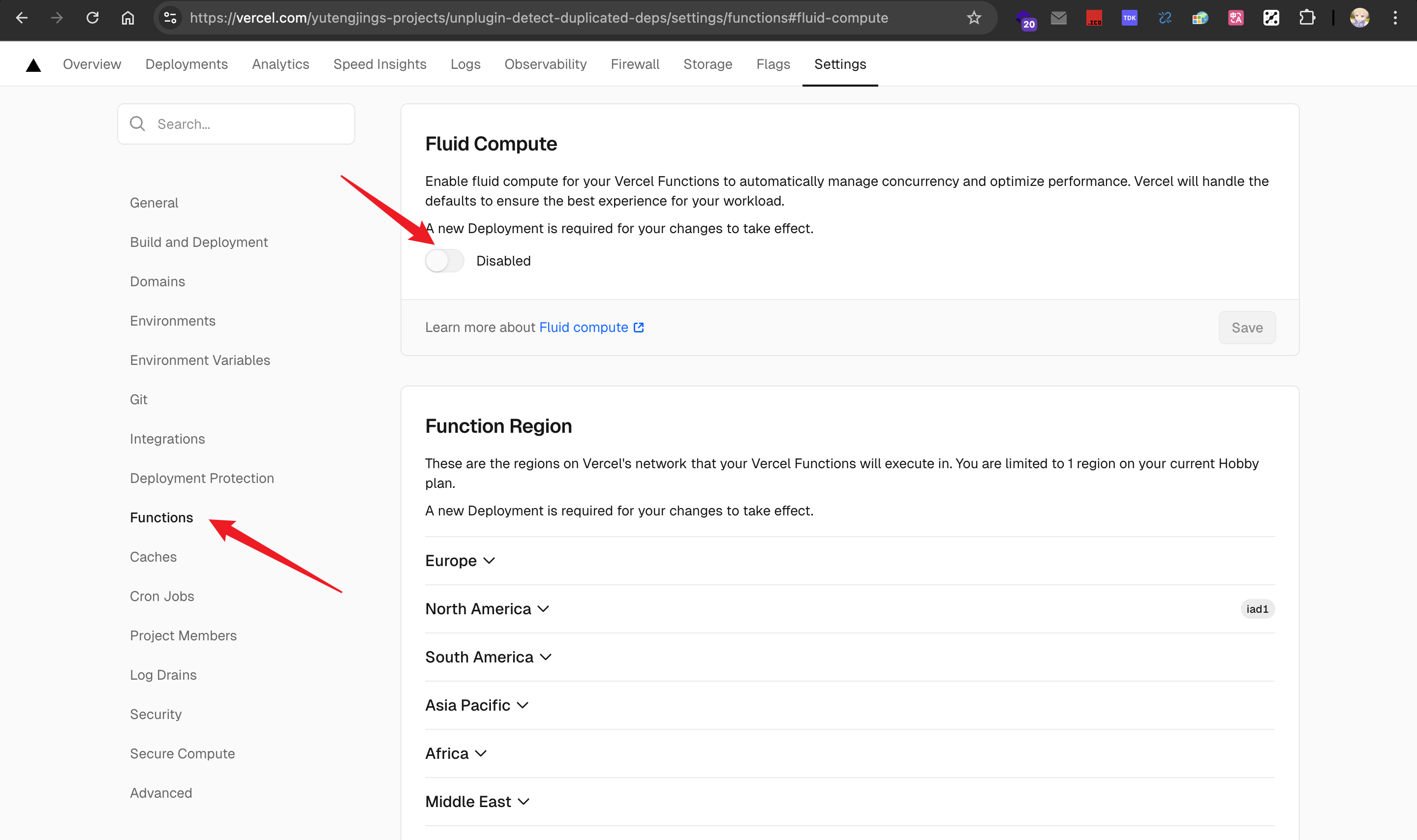

|

|

217

|

+

## 🎯 测试编写最佳实践

### Mock Data Strategy: Aim for "Low-Cost Realism" 📋

**Core principle**: Test data should be realistic by default; simplify it only when realism would introduce a "high test cost".

#### What Counts as a "High Test Cost"?

A "high cost" means the test pulls in external dependencies that make it slow, flaky, or complex:

- **File I/O**: reading and writing files on disk
- **Network requests**: HTTP calls, database connections
- **System calls**: reading the system time, environment variables, etc.

#### ✅ Recommended: Mock the Dependencies, Keep the Data Real

```typescript
// ✅ Good: mock the I/O operation, but use realistic file content
describe('parseContentType', () => {
  beforeEach(() => {
    // Mock file reads (avoids real I/O)
    vi.spyOn(fs, 'readFileSync').mockImplementation((path) => {
      // ...but return content in the real file formats
      if (path.includes('.pdf')) return '%PDF-1.4\n%âãÏÓ'; // real PDF file header
      if (path.includes('.png')) return '\x89PNG\r\n\x1a\n'; // real PNG file signature
      return '';
    });
  });

  it('should detect PDF content type correctly', () => {
    const result = parseContentType('/path/to/file.pdf');
    expect(result).toBe('application/pdf');
  });
});

// ❌ Oversimplified: uses unrealistic data
describe('parseContentType', () => {
  it('should detect PDF content type correctly', () => {
    // Simplified data like this has no testing value
    const result = parseContentType('fake-pdf-content');
    expect(result).toBe('application/pdf');
  });
});
```
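The realistic headers used in the mock above are standard file signatures ("magic numbers"): a PDF starts with the ASCII bytes `%PDF-`, and a PNG with the 8-byte signature `89 50 4E 47 0D 0A 1A 0A`. As a minimal sketch of a header-based detector (the name `detectContentType` and its signature are hypothetical, not the guide's `parseContentType`), the function below compares the leading bytes of a buffer against those signatures. `fs.readFileSync` called without an encoding returns a `Buffer`, which is a `Uint8Array`, so real file contents could be passed in directly.

```typescript
// Hypothetical helper: detects a MIME type from a file's leading bytes.
// Only two signatures are listed; real detectors know many more.
interface Signature {
  mime: string;
  bytes: readonly number[];
}

const SIGNATURES: readonly Signature[] = [
  // "%PDF-" in ASCII
  { mime: 'application/pdf', bytes: [0x25, 0x50, 0x44, 0x46, 0x2d] },
  // "\x89PNG\r\n\x1a\n"
  { mime: 'image/png', bytes: [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a] },
];

function detectContentType(header: Uint8Array): string | undefined {
  for (const { mime, bytes } of SIGNATURES) {
    // Every signature byte must match the byte at the same offset.
    const matches =
      header.length >= bytes.length && bytes.every((byte, index) => header[index] === byte);
    if (matches) return mime;
  }
  return undefined; // unknown format or input shorter than any signature
}

// Realistic inputs: actual signature bytes, not placeholder strings.
const pdfHeader = new TextEncoder().encode('%PDF-1.4\n');
const pngHeader = Uint8Array.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

console.log(detectContentType(pdfHeader)); // → application/pdf
console.log(detectContentType(pngHeader)); // → image/png
console.log(detectContentType(new TextEncoder().encode('fake-pdf-content'))); // → undefined
```

Because the inputs are real signatures, a test built on this sketch would fail if the detector started guessing from arbitrary text, a regression the placeholder `'fake-pdf-content'` version cannot catch.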

#### 🎯 The Value of Real Identifiers

```typescript
// ✅ Use real provider identifiers
it('should parse OpenAI model list correctly', () => {
  const result = parseModelString('openai', '+gpt-4,+gpt-3.5-turbo');
  expect(result.add).toHaveLength(2);
  expect(result.add[0].id).toBe('gpt-4');
});

// ❌ Placeholder identifiers (less valuable)
it('should parse model list correctly', () => {
  const result = parseModelString('test-provider', '+model1,+model2');
  expect(result.add).toHaveLength(2);
  // A test like this says little about real-world scenarios
});
```

### Error Handling Tests: Test "Behavior", Not "Text" ⚠️

**Core principle**: Tests should verify that the program behaves predictably when an error occurs, not check error message text that is likely to change.

#### ✅ Recommended Ways to Test Errors

```typescript
// ✅ Test that an error is thrown
it('should throw error when invalid input provided', () => {
  expect(() => processInput(null)).toThrow();
});

// ✅ Test the error type (most recommended)
it('should throw ValidationError for invalid data', () => {
  expect(() => validateUser({})).toThrow(ValidationError);
});

// ✅ Test error properties instead of the message text
it('should throw error with correct error code', () => {
  expect(() => processPayment({})).toThrow(
    expect.objectContaining({
      code: 'INVALID_PAYMENT_DATA',
      statusCode: 400,
    }),
  );
});
```
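Asserting on the type and on stable fields only works if the code under test throws errors that carry them. A minimal sketch, assuming a hypothetical `PaymentError` class and `processPayment` function (neither comes from the guide's codebase): the error exposes a machine-readable `code` and an HTTP-style `statusCode`, so a test can check `instanceof` and those fields while the human-readable message stays free to change.

```typescript
// Hypothetical error type with stable, machine-readable fields.
class PaymentError extends Error {
  readonly code: string;
  readonly statusCode: number;

  constructor(message: string, code: string, statusCode: number) {
    super(message);
    this.name = 'PaymentError';
    this.code = code;
    this.statusCode = statusCode;
  }
}

interface PaymentInput {
  amount?: number;
  currency?: string;
}

// Hypothetical function under test.
function processPayment(input: PaymentInput): string {
  if (typeof input.amount !== 'number' || input.amount <= 0 || !input.currency) {
    // The message can be reworded freely; code and statusCode are the contract.
    throw new PaymentError('Payment data is invalid', 'INVALID_PAYMENT_DATA', 400);
  }
  return `charged ${input.amount} ${input.currency}`;
}

// Runs fn and returns whatever it throws (or undefined if nothing is thrown).
function captureError(fn: () => unknown): unknown {
  try {
    fn();
  } catch (error) {
    return error;
  }
  return undefined;
}

const error = captureError(() => processPayment({}));
console.log(error instanceof PaymentError); // → true
if (error instanceof PaymentError) {
  console.log(error.code, error.statusCode); // → INVALID_PAYMENT_DATA 400
}
```

In a Vitest test the same contract can be checked with `expect(() => processPayment({})).toThrow(PaymentError)`, which keeps passing when the message wording changes.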

#### ❌ What to Avoid

```typescript
// ❌ Over-reliance on the exact error message text
it('should throw specific error message', () => {
  expect(() => processUser({})).toThrow('User data cannot be empty, please check the input parameters');
  // This test is brittle: any small change to the error wording makes it fail
});
```

#### 🎯 Exceptions: When It Is OK to Test Error Messages

```typescript
// ✅ Test standard API errors (they are part of the contract)
it('should return proper HTTP error for API', () => {
  expect(response.statusCode).toBe(400);
  expect(response.error).toBe('Bad Request');
});

// ✅ Test the key part of the error message (using a regex)
it('should include field name in validation error', () => {
  expect(() => validateField('email', '')).toThrow(/email/i);
});
```

### Troubleshooting: Watch Out for Module Pollution 🚨

**Warning signs**: When your tests show any of the following "mysterious" symptoms, suspect module pollution first:

- A test passes when run on its own but fails when run together with other tests
- The order in which tests run changes the results
- The mock setup looks correct, but an old version of the mock is actually in use

#### Typical Scenario: Dynamically Mocking the Same Module

```typescript
// ❌ Prone to module pollution
describe('ConfigService', () => {
  it('should work in development mode', async () => {
    vi.doMock('./config', () => ({ isDev: true }));
    const { getSettings } = await import('./configService'); // first load
    expect(getSettings().debugMode).toBe(true);
  });

  it('should work in production mode', async () => {
    vi.doMock('./config', () => ({ isDev: false }));
    const { getSettings } = await import('./configService'); // may get the stale cached version!
    expect(getSettings().debugMode).toBe(false); // ❌ may fail
  });
});

// ✅ Use resetModules to fix module pollution
describe('ConfigService', () => {
  beforeEach(() => {
    vi.resetModules(); // clear the module cache so every test starts from a clean state
  });

  it('should work in development mode', async () => {
    vi.doMock('./config', () => ({ isDev: true }));
    const { getSettings } = await import('./configService');
    expect(getSettings().debugMode).toBe(true);
  });

  it('should work in production mode', async () => {
    vi.doMock('./config', () => ({ isDev: false }));
    const { getSettings } = await import('./configService');
    expect(getSettings().debugMode).toBe(false); // ✅ passes
  });
});
```
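Why the first version can fail becomes clearer in a deliberately simplified model of a module cache. The sketch below is a toy: `ToyModuleRegistry` and its methods are hypothetical stand-ins that only mimic the behavior described above, not Vitest's internals. The point it illustrates is that mocking `./config` again does not re-evaluate `./configService`, which already captured the first mock; only clearing the whole cache (the role `vi.resetModules()` plays) forces a fresh load.

```typescript
type Loader = (id: string) => unknown;
type Factory = (load: Loader) => unknown;

// Toy module registry with a cache; a simplified model, not Vitest's implementation.
class ToyModuleRegistry {
  private factories = new Map<string, Factory>();
  private cache = new Map<string, unknown>();

  define(id: string, factory: Factory): void {
    this.factories.set(id, factory);
  }

  // Rough analogue of vi.doMock: replaces one module and evicts only that module.
  doMock(id: string, exports: unknown): void {
    this.factories.set(id, () => exports);
    this.cache.delete(id);
  }

  // Rough analogue of vi.resetModules: forgets every evaluated module.
  resetModules(): void {
    this.cache.clear();
  }

  // Returns cached exports, evaluating a module only on its first load.
  load = (id: string): unknown => {
    if (this.cache.has(id)) return this.cache.get(id);
    const factory = this.factories.get(id);
    if (!factory) throw new Error(`Unknown module: ${id}`);
    const exports = factory(this.load);
    this.cache.set(id, exports);
    return exports;
  };
}

interface ConfigService {
  getSettings: () => { debugMode: boolean };
}

const registry = new ToyModuleRegistry();
registry.define('./config', () => ({ isDev: false }));
// configService reads config once, at load time, and keeps that reference.
registry.define('./configService', (load) => {
  const config = load('./config') as { isDev: boolean };
  return { getSettings: () => ({ debugMode: config.isDev }) };
});

registry.doMock('./config', { isDev: true });
const first = registry.load('./configService') as ConfigService;
console.log(first.getSettings().debugMode); // → true

registry.doMock('./config', { isDev: false });
const stale = registry.load('./configService') as ConfigService;
console.log(stale.getSettings().debugMode); // → true (cached configService is reused)

registry.resetModules();
const fresh = registry.load('./configService') as ConfigService;
console.log(fresh.getSettings().debugMode); // → false
```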

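#### 🔧 Diagnosis and Fix Steps

1. **Identify the problem**: When a test fails, first ask yourself: "Are several tests mocking the same module?"
2. **Add isolation**: Call `vi.resetModules()` in `beforeEach`
3. **Verify the fix**: Re-run the tests and confirm the problem is gone

**Remember**: `vi.resetModules()` is the go-to fix for "mysterious" test failures caused by module pollution; when the usual debugging methods don't help, it often resolves the problem in one step.

## 📂 Test File Organization

### File Naming Conventions

@@ -320,4 +490,7 @@ git show HEAD -- path/to/component.ts | cat # view the changes in the latest commit

- **Fix principles**: Ask for help after 1-2 failed attempts; name tests after behavior, not implementation details
- **Debugging workflow**: Reproduce → Analyze → Hypothesize → Fix → Verify → Summarize
- **File organization**: Prefer adding tests to existing `describe` blocks; avoid creating redundant top-level blocks
- **Data strategy**: Realistic by default; simplify only when realism is costly (I/O, network, etc.)
- **Error tests**: Test error types and behavior; avoid relying on exact error message text
- **Module pollution**: When tests fail "mysteriously", suspect module pollution first and fix it with `vi.resetModules()`
- **Security requirements**: Model tests must include permission checks and must pass in both environments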

package/CHANGELOG.md

CHANGED

@@ -2,6 +2,31 @@

# Changelog

### [Version 1.100.1](https://github.com/lobehub/lobe-chat/compare/v1.100.0...v1.100.1)

<sup>Released on **2025-07-17**</sup>

#### 🐛 Bug Fixes

- **misc**: Use server env config image models.

<br/>

<details>
<summary><kbd>Improvements and Fixes</kbd></summary>

#### What's fixed

- **misc**: Use server env config image models, closes [#8478](https://github.com/lobehub/lobe-chat/issues/8478) ([768ee2b](https://github.com/lobehub/lobe-chat/commit/768ee2b))

</details>

<div align="right">

[](#readme-top)

</div>

## [Version 1.100.0](https://github.com/lobehub/lobe-chat/compare/v1.99.6...v1.100.0)

<sup>Released on **2025-07-17**</sup>

package/changelog/v1.json

CHANGED

package/docs/self-hosting/environment-variables/model-provider.mdx

@@ -625,4 +625,29 @@ If you need to use Azure OpenAI to provide model services, you can refer to the

- Default: `-`
- Example: `-all,+qwq-32b,+deepseek-r1`

## FAL

### `ENABLED_FAL`

- Type: Optional
- Description: Enables FAL as a model provider by default. Set to `0` to disable the FAL service.
- Default: `1`
- Example: `0`

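The `ENABLED_FAL` rule described above (enabled unless explicitly set to `0`) can be expressed as a small helper. This is a sketch under assumptions: `isProviderEnabled` is a hypothetical name, treating an empty value like an unset one is a choice made here, and LobeChat's own handling of this variable is not part of this diff.

```typescript
type Env = Record<string, string | undefined>;

// Hypothetical helper: reads ENABLED_<PROVIDER> with an "on by default" rule.
function isProviderEnabled(env: Env, provider: string, enabledByDefault = true): boolean {
  const raw = env[`ENABLED_${provider.toUpperCase()}`]?.trim();
  // Unset or empty (assumption): fall back to the documented default `1`, i.e. enabled.
  if (raw === undefined || raw === '') return enabledByDefault;
  // The documentation only defines `0` as the "off" value.
  return raw !== '0';
}

console.log(isProviderEnabled({}, 'fal')); // → true
console.log(isProviderEnabled({ ENABLED_FAL: '0' }, 'fal')); // → false
console.log(isProviderEnabled({ ENABLED_FAL: '1' }, 'fal')); // → true
```

On a server this would be called with the real environment, for example `isProviderEnabled(process.env, 'fal')`.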

### `FAL_API_KEY`

- Type: Required
- Description: This is the API key you applied for in the FAL service.
- Default: -
- Example: `fal-xxxxxx...xxxxxx`

### `FAL_MODEL_LIST`

- Type: Optional
- Description: Used to control the FAL model list. Use `+` to add a model, `-` to hide a model, and `model_name=display_name` to customize the display name of a model. Separate multiple entries with commas. The definition syntax follows the same rules as other providers' model lists.
- Default: `-`
- Example: `-all,+fal-model-1,+fal-model-2=fal-special`

The above example disables all models first, then enables `fal-model-1` and `fal-model-2` (displayed as `fal-special`).

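To make the `FAL_MODEL_LIST` syntax concrete, here is a minimal sketch of how such a string could be parsed and applied to a provider's default list. It is not LobeChat's implementation; the names `parseModelList` and `applyModelList`, and the choice to treat an unprefixed entry like a `+` entry, are assumptions for illustration.

```typescript
interface ModelEntry {
  id: string;
  displayName: string;
}

interface ModelListConfig {
  removeAll: boolean;
  remove: string[];
  add: { id: string; displayName?: string }[];
}

// Parses strings such as "-all,+fal-model-1,+fal-model-2=fal-special".
function parseModelList(value: string): ModelListConfig {
  const config: ModelListConfig = { removeAll: false, remove: [], add: [] };
  for (const rawEntry of value.split(',')) {
    const entry = rawEntry.trim();
    if (entry === '') continue;
    if (entry === '-all') {
      config.removeAll = true;
    } else if (entry.startsWith('-')) {
      config.remove.push(entry.slice(1));
    } else {
      // "+id" or "+id=Display Name" (bare "id" is accepted too, as an assumption).
      const body = entry.startsWith('+') ? entry.slice(1) : entry;
      const eq = body.indexOf('=');
      config.add.push(
        eq === -1 ? { id: body } : { id: body.slice(0, eq), displayName: body.slice(eq + 1) },
      );
    }
  }
  return config;
}

// Applies the parsed config to a provider's default model ids, keeping order.
function applyModelList(defaults: string[], config: ModelListConfig): ModelEntry[] {
  const kept = config.removeAll ? [] : defaults.filter((id) => !config.remove.includes(id));
  const result: ModelEntry[] = kept.map((id) => ({ id, displayName: id }));
  for (const { id, displayName } of config.add) {
    const existing = result.find((model) => model.id === id);
    if (existing) {
      if (displayName) existing.displayName = displayName;
    } else {
      result.push({ id, displayName: displayName ?? id });
    }
  }
  return result;
}

// 'some-default-model' is a hypothetical default id; "-all" hides it anyway.
const parsed = parseModelList('-all,+fal-model-1,+fal-model-2=fal-special');
const models = applyModelList(['some-default-model'], parsed);
console.log(models.map((m) => `${m.id} (${m.displayName})`).join(', '));
// → fal-model-1 (fal-model-1), fal-model-2 (fal-special)
```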

[model-list]: /docs/self-hosting/advanced/model-list

package/docs/self-hosting/environment-variables/model-provider.zh-CN.mdx

@@ -624,4 +624,29 @@ LobeChat provides a rich set of model-provider-related environment variables for deployment,

- Default: `-`
- Example: `-all,+qwq-32b,+deepseek-r1`

## FAL

### `ENABLED_FAL`

- Type: Optional
- Description: Enables FAL as a model provider by default; set to `0` to disable the FAL service
- Default: `1`
- Example: `0`

### `FAL_API_KEY`

- Type: Required
- Description: The API key you obtained from the FAL service
- Default: -
- Example: `fal-xxxxxx...xxxxxx`

### `FAL_MODEL_LIST`

- Type: Optional
- Description: Controls the FAL model list. Use `+` to add a model, `-` to hide a model, and `model_name=display_name` to customize a model's display name; separate entries with commas. The model definition syntax is the same as for other providers.
- Default: `-`
- Example: `-all,+fal-model-1,+fal-model-2=fal-special`

The example above first disables all models, then enables `fal-model-1` and `fal-model-2` (displayed as `fal-special`).

[model-list]: /zh/docs/self-hosting/advanced/model-list

package/docs/self-hosting/faq/vercel-ai-image-timeout.mdx

@@ -0,0 +1,65 @@

---
title: Resolving AI Image Generation Timeout on Vercel
description: >-
  Learn how to resolve timeout issues when using AI image generation models like gpt-image-1 on Vercel by enabling Fluid Compute for extended execution time.

tags:
  - Vercel
  - AI Image Generation
  - Timeout
  - Fluid Compute
  - gpt-image-1
---

# Resolving AI Image Generation Timeout on Vercel

## Problem Description

When using AI image generation models (such as `gpt-image-1`) on Vercel, you may encounter timeout errors. This occurs because AI image generation typically requires more than 1 minute to complete, which exceeds Vercel's default function execution time limit.

Common error symptoms include:

- Function timeout errors during image generation
- Failed image generation requests after approximately 60 seconds
- "Function execution timed out" messages

### Typical Log Symptoms

In your Vercel function logs, you may see entries like this:

```plaintext
JUL 16 18:39:09.51 POST 504 /trpc/async/image.createImage
Provider runtime map found for provider: openai
```

The key indicators are:

- **Status Code**: `504` (Gateway Timeout)
- **Endpoint**: `/trpc/async/image.createImage` or similar image generation endpoints
- **Timing**: Usually occurs around 60 seconds after the request starts

## Solution: Enable Fluid Compute

For projects created before Vercel's dashboard update, you can resolve this issue by enabling Fluid Compute, which extends the maximum execution duration to 300 seconds.

### Steps to Enable Fluid Compute (Legacy Vercel Dashboard)

1. Go to your project dashboard on Vercel
2. Navigate to the **Settings** tab
3. Find the **Functions** section
4. Enable **Fluid Compute** as shown in the screenshot below:

5. After enabling, the maximum execution duration will be extended to 300 seconds by default

### Important Notes

- **For new projects**: Newer Vercel projects have Fluid Compute enabled by default, so this issue primarily affects legacy projects

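If you deploy your own build or fork and want a longer limit to be explicit for a specific route rather than implied by the project default, Next.js also accepts a `maxDuration` route segment config export, which Vercel uses as that route's execution limit. The fragment below is a sketch: which file serves `/trpc/async/*` in your checkout is an assumption you need to verify, and the value only takes effect up to what your Vercel plan and Fluid Compute setting allow.

```typescript
// Hypothetical location: the route file that serves /trpc/async/* in your project.
// Next.js route segment config: maximum execution time for this route, in seconds.
// Assumption: your Vercel plan (with Fluid Compute enabled) permits 300 seconds.
export const maxDuration = 300;
```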

## Additional Resources

For more information about Vercel's function limitations and Fluid Compute:

- [Vercel Fluid Compute Documentation](https://vercel.com/docs/fluid-compute)
- [Vercel Functions Limitations](https://vercel.com/docs/functions/limitations#max-duration)

package/docs/self-hosting/faq/vercel-ai-image-timeout.zh-CN.mdx

@@ -0,0 +1,63 @@

---
title: Resolving AI Image Generation Timeouts on Vercel
description: Learn how to resolve the timeout issues you may hit when using AI image models such as gpt-image-1 on Vercel by enabling Fluid Compute.
tags:
  - Vercel
  - AI image generation
  - Timeout issues
  - Fluid Compute
  - gpt-image-1
---

# Resolving AI Image Generation Timeouts on Vercel

## Problem Description

When using AI image generation models (such as `gpt-image-1`) on Vercel, you may encounter timeout errors. This is because AI image generation usually takes more than 1 minute, which exceeds Vercel's default function execution time limit.

Common error symptoms include:

- Function timeout errors during image generation
- Image generation requests failing after about 60 seconds
- "Function execution timed out" error messages

### Typical Log Symptoms

In your Vercel function logs, you may see entries like this:

```plaintext
JUL 16 18:39:09.51 POST 504 /trpc/async/image.createImage
Provider runtime map found for provider: openai
```

Key indicators include:

- **Status code**: `504` (Gateway Timeout)
- **Endpoint**: `/trpc/async/image.createImage` or a similar image generation endpoint
- **Timing**: Usually appears about 60 seconds after the request starts

## Solution: Enable Fluid Compute

For projects created before the Vercel dashboard update, you can resolve this issue by enabling Fluid Compute, which extends the maximum execution duration to 300 seconds.

### Steps to Enable Fluid Compute (Legacy Vercel Dashboard)

1. Go to your project dashboard on Vercel
2. Open the **Settings** tab
3. Find the **Functions** section
4. Enable **Fluid Compute** as shown in the screenshot below:

5. After enabling, the maximum execution duration will be extended to 300 seconds by default

### Important Notes

- **New projects**: Newer Vercel projects have Fluid Compute enabled by default, so this issue mainly affects older projects

## Additional Resources

For more information about Vercel function limits and Fluid Compute:

- [Vercel Fluid Compute documentation](https://vercel.com/docs/fluid-compute)
- [Vercel function limits](https://vercel.com/docs/functions/limitations#max-duration)

package/docs/usage/providers/fal.mdx

@@ -13,7 +13,7 @@ tags:

# Using Fal in LobeChat

- <Image alt={'Using Fal in LobeChat'} cover src={'https://
+ <Image alt={'Using Fal in LobeChat'} cover src={'https://hub-apac-1.lobeobjects.space/docs/f253e749baaa2ccac498014178f93091.png'} />

[Fal.ai](https://fal.ai/) is a lightning-fast inference platform specialized in AI media generation, hosting state-of-the-art models for image and video creation including FLUX, Kling, HiDream, and other cutting-edge generative models. This document will guide you on how to use Fal in LobeChat:

@@ -28,7 +28,7 @@ tags:

alt={'Open the creation window'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/3f3676e7f9c04a55603bc1174b636b45.png'
}
/>

@@ -36,7 +36,7 @@ tags:

alt={'Create API Key'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/214cc5019d9c0810951b33215349136e.png'
}
/>

@@ -44,7 +44,7 @@ tags:

alt={'Retrieve API Key'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/499a447e98dcc79407d56495d0305e2a.png'
}
/>

@@ -53,12 +53,12 @@ tags:

- Visit the `Settings` page in LobeChat.
- Under **AI Service Provider**, locate the **Fal** configuration section.

- <Image alt={'Enter API Key'} inStep src={'https://
+ <Image alt={'Enter API Key'} inStep src={'https://hub-apac-1.lobeobjects.space/docs/fa056feecba0133c76abe1ad12706c05.png'} />

- Paste the API key you obtained.
- Choose a Fal model (e.g. `fal-ai/flux-pro`, `fal-ai/kling-video`, `fal-ai/hidream-i1-fast`) for image or video generation.

- <Image alt={'Select Fal model for media generation'} inStep src={'https://
+ <Image alt={'Select Fal model for media generation'} inStep src={'https://hub-apac-1.lobeobjects.space/docs/7560502f31b8500032922103fc22e69b.png'} />

<Callout type={'warning'}>
During usage, you may incur charges according to Fal's pricing policy. Please review Fal's

package/docs/usage/providers/fal.zh-CN.mdx

@@ -13,7 +13,7 @@ tags:

# Using Fal in LobeChat

- <Image alt={'Using Fal in LobeChat'} cover src={'https://
+ <Image alt={'Using Fal in LobeChat'} cover src={'https://hub-apac-1.lobeobjects.space/docs/f253e749baaa2ccac498014178f93091.png'} />

[Fal.ai](https://fal.ai/) is a fast inference platform specializing in AI media generation, offering state-of-the-art image and video generation models including FLUX, Kling, and HiDream. This article guides you through using Fal in LobeChat:

@@ -28,7 +28,7 @@ tags:

alt={'Open the creation window'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/3f3676e7f9c04a55603bc1174b636b45.png'
}
/>

@@ -36,7 +36,7 @@ tags:

alt={'Create API Key'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/214cc5019d9c0810951b33215349136e.png'
}
/>

@@ -44,7 +44,7 @@ tags:

alt={'Get API Key'}
inStep
src={
- 'https://
+ 'https://hub-apac-1.lobeobjects.space/docs/499a447e98dcc79407d56495d0305e2a.png'
}
/>

@@ -53,12 +53,12 @@ tags:

- Visit the `Settings` page in LobeChat.
- Under `AI Service Provider`, find the `Fal` settings.

- <Image alt={'Enter API key'} inStep src={'https://
+ <Image alt={'Enter API key'} inStep src={'https://hub-apac-1.lobeobjects.space/docs/fa056feecba0133c76abe1ad12706c05.png'} />

- Paste the API key you obtained.
- Choose a Fal model (e.g. `fal-ai/flux-pro`, `fal-ai/kling-video`, `fal-ai/hidream-i1-fast`) for image or video generation.

- <Image alt={'Select a Fal model for media generation'} inStep src={'https://
+ <Image alt={'Select a Fal model for media generation'} inStep src={'https://hub-apac-1.lobeobjects.space/docs/7560502f31b8500032922103fc22e69b.png'} />

<Callout type={'warning'}>
You may need to pay Fal for usage; please review Fal's official pricing policy before making a large number of calls.

package/package.json

CHANGED

@@ -1,6 +1,6 @@

{
"name": "@lobehub/chat",
- "version": "1.100.
+ "version": "1.100.1",
"description": "Lobe Chat - an open-source, high-performance chatbot framework that supports speech synthesis, multimodal, and extensible Function Call plugin system. Supports one-click free deployment of your private ChatGPT/LLM web application.",
"keywords": [
"framework",

package/src/libs/model-runtime/utils/openaiCompatibleFactory/index.ts

@@ -7,6 +7,7 @@ import { Stream } from 'openai/streaming';

import { LOBE_DEFAULT_MODEL_LIST } from '@/config/aiModels';
import { RuntimeImageGenParamsValue } from '@/libs/standard-parameters/meta-schema';
import type { ChatModelCard } from '@/types/llm';
+ import { getModelPropertyWithFallback } from '@/utils/getFallbackModelProperty';

import { LobeRuntimeAI } from '../../BaseAI';
import { AgentRuntimeErrorType, ILobeAgentRuntimeErrorType } from '../../error';

@@ -462,7 +463,7 @@ export const createOpenAICompatibleRuntime = <T extends Record<string, any> = an

return resultModels.map((model) => {
return {
...model,
- type: model.type ||
+ type: model.type || getModelPropertyWithFallback(model.id, 'type'),
};
}) as ChatModelCard[];
}

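The changed line keeps a model's `type` when the provider's API supplies one and otherwise falls back to a lookup by `model.id`. The implementation of `getModelPropertyWithFallback` is not part of this excerpt, so the following is only a sketch of that precedence under assumptions: the default list is passed in explicitly, the model types are a hypothetical subset, and `'chat'` is used as the final default.

```typescript
type ModelType = 'chat' | 'embedding' | 'image';

interface DefaultModelCard {
  id: string;
  type: ModelType;
}

interface FetchedModel {
  id: string;
  type?: ModelType;
}

// Precedence: API-provided type, then a known default entry, then 'chat' (assumption).
function resolveModelType(model: FetchedModel, defaults: readonly DefaultModelCard[]): ModelType {
  // Mirrors `model.type || ...`: a truthy type from the provider wins.
  if (model.type) return model.type;
  const known = defaults.find((card) => card.id === model.id);
  return known?.type ?? 'chat';
}

// Hypothetical default list standing in for a bundled model registry.
const defaults: DefaultModelCard[] = [
  { id: 'gpt-image-1', type: 'image' },
  { id: 'text-embedding-3-small', type: 'embedding' },
];

console.log(resolveModelType({ id: 'gpt-image-1' }, defaults)); // → image
console.log(resolveModelType({ id: 'gpt-image-1', type: 'chat' }, defaults)); // → chat
console.log(resolveModelType({ id: 'unknown-model' }, defaults)); // → chat
```

Keeping the lookup in its own function makes the precedence easy to test in isolation, as the three calls show.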