@huggingface/tasks 0.0.4 → 0.0.6


- package/dist/index.d.ts +19 -1

- package/dist/index.js +50 -0

- package/dist/index.mjs +41 -0

- package/package.json +4 -4

- package/src/image-to-image/about.md +2 -2

- package/src/index.ts +15 -2

- package/src/modelLibraries.ts +4 -0

- package/src/pipelines.ts +18 -0

- package/src/tags.ts +15 -0

package/dist/index.d.ts

CHANGED

@@ -40,9 +40,19 @@ declare enum ModelLibrary {
     "mindspore" = "MindSpore"
 }
 type ModelLibraryKey = keyof typeof ModelLibrary;
+declare const ALL_DISPLAY_MODEL_LIBRARY_KEYS: string[];

 declare const MODALITIES: readonly ["cv", "nlp", "audio", "tabular", "multimodal", "rl", "other"];
 type Modality = (typeof MODALITIES)[number];
+declare const MODALITY_LABELS: {
+    multimodal: string;
+    nlp: string;
+    audio: string;
+    cv: string;
+    rl: string;
+    tabular: string;
+    other: string;
+};
 /**
  * Public interface for a sub task.
  *

@@ -400,6 +410,9 @@ declare const PIPELINE_DATA: {
     };
 };
 type PipelineType = keyof typeof PIPELINE_DATA;
+declare const PIPELINE_TYPES: ("other" | "text-classification" | "token-classification" | "table-question-answering" | "question-answering" | "zero-shot-classification" | "translation" | "summarization" | "conversational" | "feature-extraction" | "text-generation" | "text2text-generation" | "fill-mask" | "sentence-similarity" | "text-to-speech" | "text-to-audio" | "automatic-speech-recognition" | "audio-to-audio" | "audio-classification" | "voice-activity-detection" | "depth-estimation" | "image-classification" | "object-detection" | "image-segmentation" | "text-to-image" | "image-to-text" | "image-to-image" | "unconditional-image-generation" | "video-classification" | "reinforcement-learning" | "robotics" | "tabular-classification" | "tabular-regression" | "tabular-to-text" | "table-to-text" | "multiple-choice" | "text-retrieval" | "time-series-forecasting" | "text-to-video" | "visual-question-answering" | "document-question-answering" | "zero-shot-image-classification" | "graph-ml")[];
+declare const SUBTASK_TYPES: string[];
+declare const PIPELINE_TYPES_SET: Set<"other" | "text-classification" | "token-classification" | "table-question-answering" | "question-answering" | "zero-shot-classification" | "translation" | "summarization" | "conversational" | "feature-extraction" | "text-generation" | "text2text-generation" | "fill-mask" | "sentence-similarity" | "text-to-speech" | "text-to-audio" | "automatic-speech-recognition" | "audio-to-audio" | "audio-classification" | "voice-activity-detection" | "depth-estimation" | "image-classification" | "object-detection" | "image-segmentation" | "text-to-image" | "image-to-text" | "image-to-image" | "unconditional-image-generation" | "video-classification" | "reinforcement-learning" | "robotics" | "tabular-classification" | "tabular-regression" | "tabular-to-text" | "table-to-text" | "multiple-choice" | "text-retrieval" | "time-series-forecasting" | "text-to-video" | "visual-question-answering" | "document-question-answering" | "zero-shot-image-classification" | "graph-ml">;

 interface ExampleRepo {
     description: string;

@@ -454,4 +467,9 @@ interface TaskData {

 declare const TASKS_DATA: Record<PipelineType, TaskData | undefined>;

-
+declare const TAG_NFAA_CONTENT = "not-for-all-audiences";
+declare const OTHER_TAGS_SUGGESTIONS: string[];
+declare const TAG_TEXT_GENERATION_INFERENCE = "text-generation-inference";
+declare const TAG_CUSTOM_CODE = "custom_code";
+
+export { ALL_DISPLAY_MODEL_LIBRARY_KEYS, ExampleRepo, MODALITIES, MODALITY_LABELS, Modality, ModelLibrary, ModelLibraryKey, OTHER_TAGS_SUGGESTIONS, PIPELINE_DATA, PIPELINE_TYPES, PIPELINE_TYPES_SET, PipelineData, PipelineType, SUBTASK_TYPES, TAG_CUSTOM_CODE, TAG_NFAA_CONTENT, TAG_TEXT_GENERATION_INFERENCE, TASKS_DATA, TaskData, TaskDemo, TaskDemoEntry };
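The declarations above type `PIPELINE_TYPES_SET` as a `Set` of the pipeline-type union. One possible use is narrowing an untrusted string to that union. The sketch below is only an illustration under stated assumptions: `KNOWN_TYPES`, `KnownType`, `KNOWN_TYPES_SET` and `isKnownType` are hypothetical stand-ins defined locally, not exports of the package.

```typescript
// Hypothetical miniature of the pipeline-type union; the real union is much longer.
const KNOWN_TYPES = ["text-classification", "translation", "other"] as const;
type KnownType = (typeof KNOWN_TYPES)[number];

// Mirrors the shape of PIPELINE_TYPES_SET: a Set typed with the literal union.
const KNOWN_TYPES_SET: Set<KnownType> = new Set(KNOWN_TYPES);

// Hypothetical type guard: narrows an arbitrary string to the union.
function isKnownType(value: string): value is KnownType {
	// Set<KnownType>.has only accepts KnownType, so the set is widened for the lookup.
	return (KNOWN_TYPES_SET as Set<string>).has(value);
}

const fromUser: string = "translation";
if (isKnownType(fromUser)) {
	// Inside this branch, fromUser has type KnownType.
	console.log(`recognised pipeline type: ${fromUser}`);
}
```

The cast to `Set<string>` is a design choice for this sketch; without it, calling `has` with a plain `string` does not type-check against a set of literal types.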

package/dist/index.js

CHANGED

@@ -20,15 +20,33 @@ var __toCommonJS = (mod) => __copyProps(__defProp({}, "__esModule", { value: tru
 // src/index.ts
 var src_exports = {};
 __export(src_exports, {
+  ALL_DISPLAY_MODEL_LIBRARY_KEYS: () => ALL_DISPLAY_MODEL_LIBRARY_KEYS,
   MODALITIES: () => MODALITIES,
+  MODALITY_LABELS: () => MODALITY_LABELS,
   ModelLibrary: () => ModelLibrary,
+  OTHER_TAGS_SUGGESTIONS: () => OTHER_TAGS_SUGGESTIONS,
   PIPELINE_DATA: () => PIPELINE_DATA,
+  PIPELINE_TYPES: () => PIPELINE_TYPES,
+  PIPELINE_TYPES_SET: () => PIPELINE_TYPES_SET,
+  SUBTASK_TYPES: () => SUBTASK_TYPES,
+  TAG_CUSTOM_CODE: () => TAG_CUSTOM_CODE,
+  TAG_NFAA_CONTENT: () => TAG_NFAA_CONTENT,
+  TAG_TEXT_GENERATION_INFERENCE: () => TAG_TEXT_GENERATION_INFERENCE,
   TASKS_DATA: () => TASKS_DATA
 });
 module.exports = __toCommonJS(src_exports);

 // src/pipelines.ts
 var MODALITIES = ["cv", "nlp", "audio", "tabular", "multimodal", "rl", "other"];
+var MODALITY_LABELS = {
+  multimodal: "Multimodal",
+  nlp: "Natural Language Processing",
+  audio: "Audio",
+  cv: "Computer Vision",
+  rl: "Reinforcement Learning",
+  tabular: "Tabular",
+  other: "Other"
+};
 var PIPELINE_DATA = {
   "text-classification": {
     name: "Text Classification",

@@ -574,6 +592,9 @@ var PIPELINE_DATA = {
     hideInDatasets: true
   }
 };
+var PIPELINE_TYPES = Object.keys(PIPELINE_DATA);
+var SUBTASK_TYPES = Object.values(PIPELINE_DATA).flatMap((data) => "subtasks" in data ? data.subtasks : []).map((s) => s.type);
+var PIPELINE_TYPES_SET = new Set(PIPELINE_TYPES);

 // src/audio-classification/data.ts
 var taskData = {

@@ -3132,10 +3153,39 @@ var ModelLibrary = /* @__PURE__ */ ((ModelLibrary2) => {
   ModelLibrary2["mindspore"] = "MindSpore";
   return ModelLibrary2;
 })(ModelLibrary || {});
+var ALL_DISPLAY_MODEL_LIBRARY_KEYS = Object.keys(ModelLibrary).filter(
+  (k) => !["doctr", "k2", "mindspore", "tensorflowtts"].includes(k)
+);
+
+// src/tags.ts
+var TAG_NFAA_CONTENT = "not-for-all-audiences";
+var OTHER_TAGS_SUGGESTIONS = [
+  "chemistry",
+  "biology",
+  "finance",
+  "legal",
+  "music",
+  "art",
+  "code",
+  "climate",
+  "medical",
+  TAG_NFAA_CONTENT
+];
+var TAG_TEXT_GENERATION_INFERENCE = "text-generation-inference";
+var TAG_CUSTOM_CODE = "custom_code";
 // Annotate the CommonJS export names for ESM import in node:
 0 && (module.exports = {
+  ALL_DISPLAY_MODEL_LIBRARY_KEYS,
   MODALITIES,
+  MODALITY_LABELS,
   ModelLibrary,
+  OTHER_TAGS_SUGGESTIONS,
   PIPELINE_DATA,
+  PIPELINE_TYPES,
+  PIPELINE_TYPES_SET,
+  SUBTASK_TYPES,
+  TAG_CUSTOM_CODE,
+  TAG_NFAA_CONTENT,
+  TAG_TEXT_GENERATION_INFERENCE,
   TASKS_DATA
 });
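The hunk above builds `ALL_DISPLAY_MODEL_LIBRARY_KEYS` by taking the keys of the `ModelLibrary` enum and filtering out a fixed list. The following is a minimal sketch of the same pattern on a made-up enum; `MiniModelLibrary`, its display values, `HIDDEN_KEYS` and `DISPLAY_KEYS` are assumptions for illustration and do not come from the package.

```typescript
// Hypothetical miniature enum; member names and display values are illustrative only.
enum MiniModelLibrary {
	"transformers" = "Transformers",
	"doctr" = "docTR",
	"mindspore" = "MindSpore",
}

// Keys that should not be surfaced, analogous to the inline array in the diff.
const HIDDEN_KEYS = ["doctr", "mindspore"];

// String enums compile to plain objects without reverse mappings,
// so Object.keys yields only the member names.
const DISPLAY_KEYS = Object.keys(MiniModelLibrary).filter((k) => !HIDDEN_KEYS.includes(k));

console.log(DISPLAY_KEYS);
```

This relies on the enum having only string values. A numeric enum would also expose its reverse-mapping keys through `Object.keys`, so the same filter would behave differently there.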

package/dist/index.mjs

CHANGED

@@ -1,5 +1,14 @@
 // src/pipelines.ts
 var MODALITIES = ["cv", "nlp", "audio", "tabular", "multimodal", "rl", "other"];
+var MODALITY_LABELS = {
+  multimodal: "Multimodal",
+  nlp: "Natural Language Processing",
+  audio: "Audio",
+  cv: "Computer Vision",
+  rl: "Reinforcement Learning",
+  tabular: "Tabular",
+  other: "Other"
+};
 var PIPELINE_DATA = {
   "text-classification": {
     name: "Text Classification",

@@ -545,6 +554,9 @@ var PIPELINE_DATA = {
     hideInDatasets: true
   }
 };
+var PIPELINE_TYPES = Object.keys(PIPELINE_DATA);
+var SUBTASK_TYPES = Object.values(PIPELINE_DATA).flatMap((data) => "subtasks" in data ? data.subtasks : []).map((s) => s.type);
+var PIPELINE_TYPES_SET = new Set(PIPELINE_TYPES);

 // src/audio-classification/data.ts
 var taskData = {

@@ -3103,9 +3115,38 @@ var ModelLibrary = /* @__PURE__ */ ((ModelLibrary2) => {
   ModelLibrary2["mindspore"] = "MindSpore";
   return ModelLibrary2;
 })(ModelLibrary || {});
+var ALL_DISPLAY_MODEL_LIBRARY_KEYS = Object.keys(ModelLibrary).filter(
+  (k) => !["doctr", "k2", "mindspore", "tensorflowtts"].includes(k)
+);
+
+// src/tags.ts
+var TAG_NFAA_CONTENT = "not-for-all-audiences";
+var OTHER_TAGS_SUGGESTIONS = [
+  "chemistry",
+  "biology",
+  "finance",
+  "legal",
+  "music",
+  "art",
+  "code",
+  "climate",
+  "medical",
+  TAG_NFAA_CONTENT
+];
+var TAG_TEXT_GENERATION_INFERENCE = "text-generation-inference";
+var TAG_CUSTOM_CODE = "custom_code";
 export {
+  ALL_DISPLAY_MODEL_LIBRARY_KEYS,
   MODALITIES,
+  MODALITY_LABELS,
   ModelLibrary,
+  OTHER_TAGS_SUGGESTIONS,
   PIPELINE_DATA,
+  PIPELINE_TYPES,
+  PIPELINE_TYPES_SET,
+  SUBTASK_TYPES,
+  TAG_CUSTOM_CODE,
+  TAG_NFAA_CONTENT,
+  TAG_TEXT_GENERATION_INFERENCE,
   TASKS_DATA
 };

package/package.json

CHANGED

@@ -1,8 +1,8 @@
 {
   "name": "@huggingface/tasks",
-  "packageManager": "pnpm@8.
-  "version": "0.0.
-  "description": "List of
+  "packageManager": "pnpm@8.10.5",
+  "version": "0.0.6",
+  "description": "List of ML tasks for huggingface.co/tasks",
   "repository": "https://github.com/huggingface/huggingface.js.git",
   "publishConfig": {
     "access": "public"

@@ -39,6 +39,6 @@
     "format": "prettier --write .",
     "format:check": "prettier --check .",
     "build": "tsup src/index.ts --format cjs,esm --clean --dts",
-    "
+    "check": "tsc"
   }
 }

@@ -65,9 +65,9 @@ Pix2Pix is a popular model used for image to image translation tasks. It is base

|

|

|

65

65

|

|

|

66

66

|

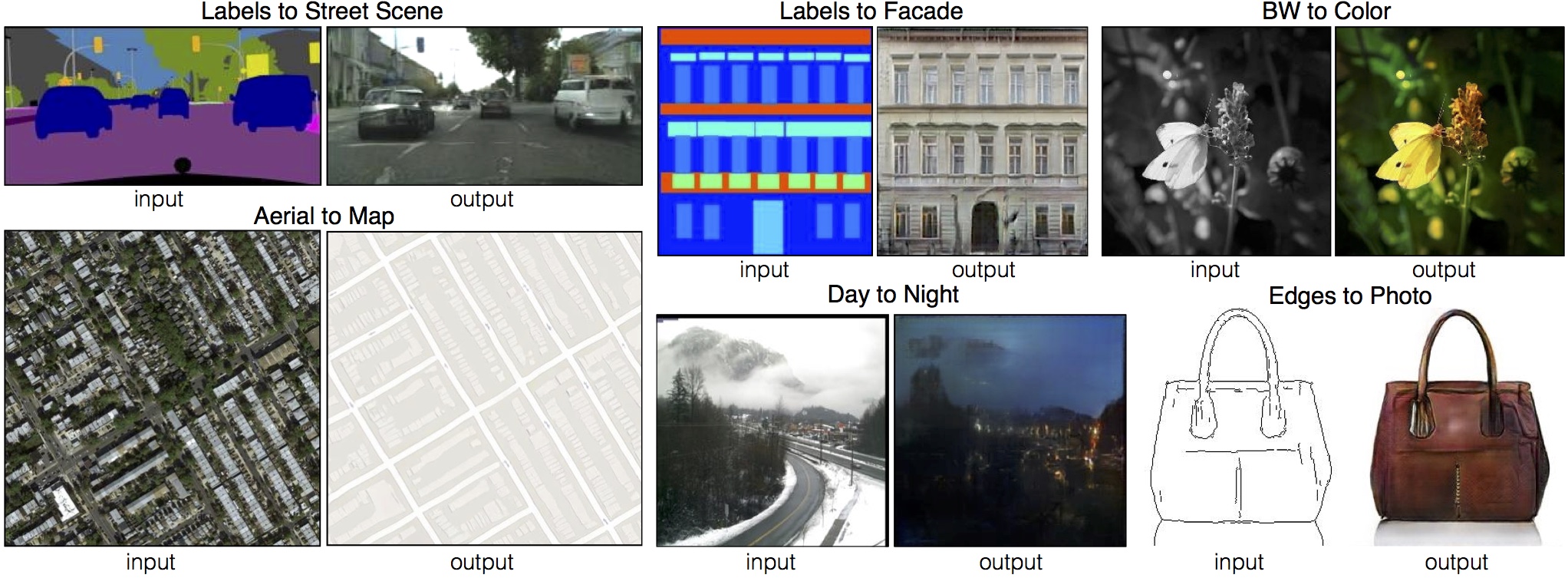

Below images show some of the examples shared in the paper that can be obtained using Pix2Pix. There are various cases this model can be applied on. It is capable of relatively simpler things, e.g. converting a grayscale image to its colored version. But more importantly, it can generate realistic pictures from rough sketches (can be seen in the purse example) or from painting-like images (can be seen in the street and facade examples below).

|

|

67

67

|

|

|

68

|

-

|

|

68

|

+

|

|

69

69

|

|

|

70

|

-

##

|

|

70

|

+

## Useful Resources

|

|

71

71

|

|

|

72

72

|

- [Train your ControlNet with diffusers 🧨](https://huggingface.co/blog/train-your-controlnet)

|

|

73

73

|

- [Ultra fast ControlNet with 🧨 Diffusers](https://huggingface.co/blog/controlnet)

|

package/src/index.ts

CHANGED

@@ -1,4 +1,17 @@
 export type { TaskData, TaskDemo, TaskDemoEntry, ExampleRepo } from "./Types";
 export { TASKS_DATA } from "./tasksData";
-export {
-
+export {
+	PIPELINE_DATA,
+	PIPELINE_TYPES,
+	type PipelineType,
+	type PipelineData,
+	type Modality,
+	MODALITIES,
+	MODALITY_LABELS,
+	SUBTASK_TYPES,
+	PIPELINE_TYPES_SET,
+} from "./pipelines";
+export { ModelLibrary, ALL_DISPLAY_MODEL_LIBRARY_KEYS } from "./modelLibraries";
+export type { ModelLibraryKey } from "./modelLibraries";
+
+export { TAG_NFAA_CONTENT, OTHER_TAGS_SUGGESTIONS, TAG_TEXT_GENERATION_INFERENCE, TAG_CUSTOM_CODE } from "./tags";

package/src/modelLibraries.ts

CHANGED

package/src/pipelines.ts

CHANGED

@@ -2,6 +2,16 @@ export const MODALITIES = ["cv", "nlp", "audio", "tabular", "multimodal", "rl",

 export type Modality = (typeof MODALITIES)[number];

+export const MODALITY_LABELS = {
+	multimodal: "Multimodal",
+	nlp: "Natural Language Processing",
+	audio: "Audio",
+	cv: "Computer Vision",
+	rl: "Reinforcement Learning",
+	tabular: "Tabular",
+	other: "Other",
+} satisfies Record<Modality, string>;
+
 /**
  * Public interface for a sub task.
  *
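The hunk above declares `MODALITY_LABELS` with `satisfies Record<Modality, string>`, which asks the compiler to check that every modality has a label. A minimal sketch of that pattern follows, assuming TypeScript 4.9 or later; `MINI_MODALITIES`, `MiniModality`, `MINI_LABELS` and `labelFor` are hypothetical names used only for this illustration.

```typescript
// Hypothetical miniature of MODALITIES; the real tuple has seven entries.
const MINI_MODALITIES = ["cv", "nlp", "other"] as const;
type MiniModality = (typeof MINI_MODALITIES)[number];

// `satisfies` checks the object against the Record type without changing
// its inferred type: a missing or misspelled key becomes a compile error.
const MINI_LABELS = {
	cv: "Computer Vision",
	nlp: "Natural Language Processing",
	other: "Other",
} satisfies Record<MiniModality, string>;

// Hypothetical lookup helper for a display label.
function labelFor(modality: MiniModality): string {
	return MINI_LABELS[modality];
}

const labels = MINI_MODALITIES.map(labelFor);
console.log(labels);
```

Removing the `other` entry from `MINI_LABELS` in this sketch should make the `satisfies` check fail at compile time, which is the property the label table appears to rely on.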

@@ -606,3 +616,11 @@ export const PIPELINE_DATA = {
 } satisfies Record<string, PipelineData>;

 export type PipelineType = keyof typeof PIPELINE_DATA;
+
+export const PIPELINE_TYPES = Object.keys(PIPELINE_DATA) as PipelineType[];
+
+export const SUBTASK_TYPES = Object.values(PIPELINE_DATA)
+	.flatMap((data) => ("subtasks" in data ? data.subtasks : []))
+	.map((s) => s.type);
+
+export const PIPELINE_TYPES_SET = new Set(PIPELINE_TYPES);
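The three constants added above are all derived from `PIPELINE_DATA`. The sketch below reproduces the same derivation on a two-entry stand-in object to show what each expression yields. `MINI_PIPELINE_DATA` and the `MINI_*` names are assumptions for illustration, and the subtask entries are invented sample data.

```typescript
// Hypothetical miniature of PIPELINE_DATA; the real object has many more entries.
const MINI_PIPELINE_DATA = {
	"text-classification": {
		name: "Text Classification",
		subtasks: [
			{ type: "sentiment-analysis", name: "Sentiment Analysis" },
			{ type: "topic-classification", name: "Topic Classification" },
		],
	},
	translation: { name: "Translation" },
};

type MiniPipelineType = keyof typeof MINI_PIPELINE_DATA;

// Object.keys returns string[], so the cast narrows it to the known keys.
const MINI_PIPELINE_TYPES = Object.keys(MINI_PIPELINE_DATA) as MiniPipelineType[];

// Entries without a `subtasks` field contribute an empty array to flatMap.
const MINI_SUBTASK_TYPES = Object.values(MINI_PIPELINE_DATA)
	.flatMap((data) => ("subtasks" in data ? data.subtasks : []))
	.map((s) => s.type);

// A Set gives constant-time membership checks over the same keys.
const MINI_PIPELINE_TYPES_SET = new Set(MINI_PIPELINE_TYPES);

console.log(MINI_PIPELINE_TYPES, MINI_SUBTASK_TYPES, MINI_PIPELINE_TYPES_SET.has("translation"));
```

Because the lists are computed from the data object, adding a pipeline or subtask entry updates all three without further edits, which is presumably why they are derived rather than written out by hand.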

package/src/tags.ts

ADDED

@@ -0,0 +1,15 @@
+export const TAG_NFAA_CONTENT = "not-for-all-audiences";
+export const OTHER_TAGS_SUGGESTIONS = [
+	"chemistry",
+	"biology",
+	"finance",
+	"legal",
+	"music",
+	"art",
+	"code",
+	"climate",
+	"medical",
+	TAG_NFAA_CONTENT,
+];
+export const TAG_TEXT_GENERATION_INFERENCE = "text-generation-inference";
+export const TAG_CUSTOM_CODE = "custom_code";
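The new `tags.ts` file only defines string constants, so how they are consumed is not visible in this diff. As one hedged possibility, a caller could compare a repository's tag list against them. In the sketch below the two constants are copied locally so the block is self-contained, and `describeTags` is a hypothetical helper, not part of the package.

```typescript
// Local copies of two constants from the diff, so the sketch runs on its own.
const TAG_NFAA_CONTENT = "not-for-all-audiences";
const TAG_CUSTOM_CODE = "custom_code";

// Hypothetical helper: reports which of the special tags a tag list contains.
function describeTags(tags: string[]): { nfaa: boolean; customCode: boolean } {
	return {
		nfaa: tags.includes(TAG_NFAA_CONTENT),
		customCode: tags.includes(TAG_CUSTOM_CODE),
	};
}

// Made-up tag list for illustration.
const flags = describeTags(["text-generation", "custom_code"]);
console.log(flags);
```

Referring to the constants instead of repeating the string literals keeps spelling differences such as the underscore in `custom_code` versus the hyphens in `not-for-all-audiences` in one place.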