waterdrop 2.0.0 → 2.4.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- checksums.yaml +4 -4

- checksums.yaml.gz.sig +0 -0

- data/.github/workflows/ci.yml +33 -6

- data/.ruby-version +1 -1

- data/CHANGELOG.md +80 -0

- data/Gemfile +0 -2

- data/Gemfile.lock +36 -87

- data/MIT-LICENSE +18 -0

- data/README.md +180 -46

- data/certs/mensfeld.pem +21 -21

- data/config/errors.yml +29 -5

- data/docker-compose.yml +2 -1

- data/lib/{water_drop → waterdrop}/config.rb +47 -19

- data/lib/waterdrop/contracts/config.rb +40 -0

- data/lib/waterdrop/contracts/message.rb +60 -0

- data/lib/waterdrop/instrumentation/callbacks/delivery.rb +30 -0

- data/lib/waterdrop/instrumentation/callbacks/error.rb +36 -0

- data/lib/waterdrop/instrumentation/callbacks/statistics.rb +41 -0

- data/lib/waterdrop/instrumentation/callbacks/statistics_decorator.rb +77 -0

- data/lib/waterdrop/instrumentation/callbacks_manager.rb +39 -0

- data/lib/{water_drop/instrumentation/stdout_listener.rb → waterdrop/instrumentation/logger_listener.rb} +17 -26

- data/lib/waterdrop/instrumentation/monitor.rb +20 -0

- data/lib/{water_drop/instrumentation/monitor.rb → waterdrop/instrumentation/notifications.rb} +12 -13

- data/lib/waterdrop/instrumentation/vendors/datadog/dashboard.json +1 -0

- data/lib/waterdrop/instrumentation/vendors/datadog/listener.rb +210 -0

- data/lib/waterdrop/instrumentation.rb +20 -0

- data/lib/waterdrop/patches/rdkafka/bindings.rb +42 -0

- data/lib/waterdrop/patches/rdkafka/producer.rb +28 -0

- data/lib/{water_drop → waterdrop}/producer/async.rb +2 -2

- data/lib/{water_drop → waterdrop}/producer/buffer.rb +15 -8

- data/lib/waterdrop/producer/builder.rb +28 -0

- data/lib/{water_drop → waterdrop}/producer/sync.rb +2 -2

- data/lib/{water_drop → waterdrop}/producer.rb +29 -15

- data/lib/{water_drop → waterdrop}/version.rb +1 -1

- data/lib/waterdrop.rb +33 -2

- data/waterdrop.gemspec +12 -10

- data.tar.gz.sig +0 -0

- metadata +64 -97

- metadata.gz.sig +0 -0

- data/.github/FUNDING.yml +0 -1

- data/LICENSE +0 -165

- data/lib/water_drop/contracts/config.rb +0 -26

- data/lib/water_drop/contracts/message.rb +0 -41

- data/lib/water_drop/instrumentation.rb +0 -7

- data/lib/water_drop/producer/builder.rb +0 -63

- data/lib/water_drop/producer/statistics_decorator.rb +0 -71

- data/lib/water_drop.rb +0 -30

- /data/lib/{water_drop → waterdrop}/contracts.rb +0 -0

- /data/lib/{water_drop → waterdrop}/errors.rb +0 -0

- /data/lib/{water_drop → waterdrop}/producer/dummy_client.rb +0 -0

- /data/lib/{water_drop → waterdrop}/producer/status.rb +0 -0

data/README.md

CHANGED

|

@@ -1,33 +1,52 @@

|

|

|

1

1

|

# WaterDrop

|

|

2

2

|

|

|

3

|

-

**Note**: Documentation presented here refers to WaterDrop `2.

|

|

3

|

+

**Note**: Documentation presented here refers to WaterDrop `2.x`.

|

|

4

4

|

|

|

5

|

-

WaterDrop `2.

|

|

5

|

+

WaterDrop `2.x` works with Karafka `2.*` and aims to either work as a standalone producer or as a part of the Karafka `2.*`.

|

|

6

6

|

|

|

7

|

-

Please refer to [this](https://github.com/karafka/waterdrop/tree/1.4) branch and

|

|

7

|

+

Please refer to [this](https://github.com/karafka/waterdrop/tree/1.4) branch and its documentation for details about WaterDrop `1.*` usage.

|

|

8

8

|

|

|

9

9

|

[](https://github.com/karafka/waterdrop/actions?query=workflow%3Aci)

|

|

10

|

-

[](http://badge.fury.io/rb/waterdrop)

|

|

11

|

+

[](https://slack.karafka.io)

|

|

11

12

|

|

|

12

|

-

|

|

13

|

+

A gem to send messages to Kafka easily with an extra validation layer. It is a part of the [Karafka](https://github.com/karafka/karafka) ecosystem.

|

|

13

14

|

|

|

14

15

|

It:

|

|

15

16

|

|

|

16

|

-

- Is thread

|

|

17

|

+

- Is thread-safe

|

|

17

18

|

- Supports sync producing

|

|

18

19

|

- Supports async producing

|

|

19

20

|

- Supports buffering

|

|

20

21

|

- Supports producing messages to multiple clusters

|

|

21

22

|

- Supports multiple delivery policies

|

|

22

|

-

- Works with Kafka 1.0

|

|

23

|

+

- Works with Kafka `1.0+` and Ruby `2.7+`

|

|

24

|

+

|

|

25

|

+

## Table of contents

|

|

26

|

+

|

|

27

|

+

- [Installation](#installation)

|

|

28

|

+

- [Setup](#setup)

|

|

29

|

+

* [WaterDrop configuration options](#waterdrop-configuration-options)

|

|

30

|

+

* [Kafka configuration options](#kafka-configuration-options)

|

|

31

|

+

- [Usage](#usage)

|

|

32

|

+

* [Basic usage](#basic-usage)

|

|

33

|

+

* [Using WaterDrop across the application and with Ruby on Rails](#using-waterdrop-across-the-application-and-with-ruby-on-rails)

|

|

34

|

+

* [Using WaterDrop with a connection-pool](#using-waterdrop-with-a-connection-pool)

|

|

35

|

+

* [Buffering](#buffering)

|

|

36

|

+

+ [Using WaterDrop to buffer messages based on the application logic](#using-waterdrop-to-buffer-messages-based-on-the-application-logic)

|

|

37

|

+

+ [Using WaterDrop with rdkafka buffers to achieve periodic auto-flushing](#using-waterdrop-with-rdkafka-buffers-to-achieve-periodic-auto-flushing)

|

|

38

|

+

- [Instrumentation](#instrumentation)

|

|

39

|

+

* [Usage statistics](#usage-statistics)

|

|

40

|

+

* [Error notifications](#error-notifications)

|

|

41

|

+

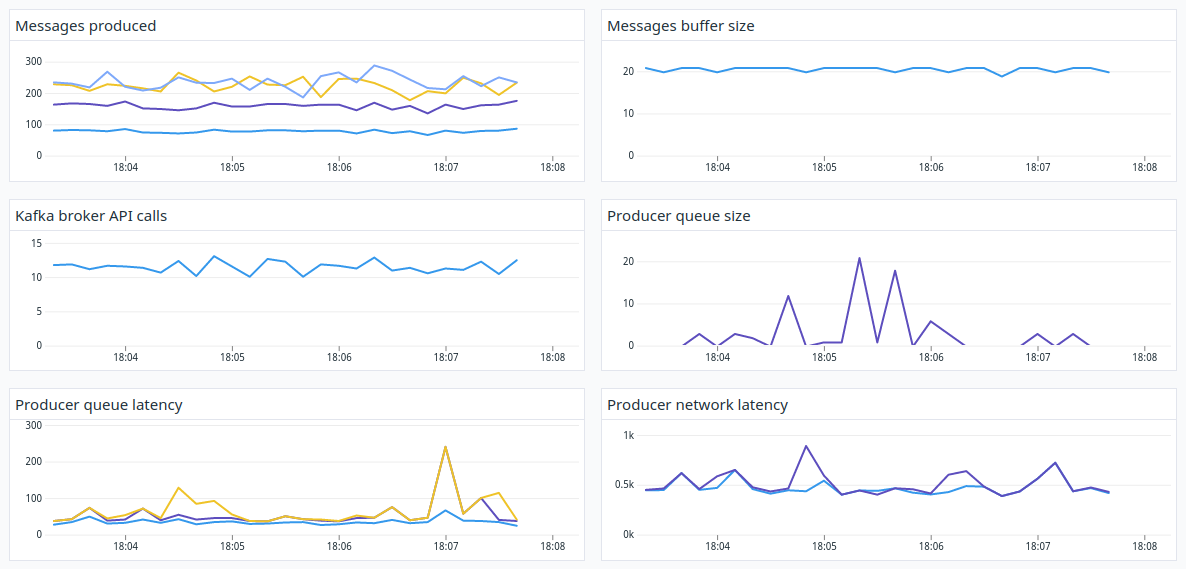

* [Datadog and StatsD integration](#datadog-and-statsd-integration)

|

|

42

|

+

* [Forking and potential memory problems](#forking-and-potential-memory-problems)

|

|

43

|

+

- [Note on contributions](#note-on-contributions)

|

|

23

44

|

|

|

24

45

|

## Installation

|

|

25

46

|

|

|

26

|

-

|

|

27

|

-

gem install waterdrop

|

|

28

|

-

```

|

|

47

|

+

**Note**: If you want to both produce and consume messages, please use [Karafka](https://github.com/karafka/karafka/). It integrates WaterDrop automatically.

|

|

29

48

|

|

|

30

|

-

|

|

49

|

+

Add this to your Gemfile:

|

|

31

50

|

|

|

32

51

|

```ruby

|

|

33

52

|

gem 'waterdrop'

|

|

````diff
@@ -41,10 +60,10 @@ bundle install

 ## Setup

-WaterDrop is a complex tool
+WaterDrop is a complex tool that contains multiple configuration options. To keep everything organized, all the configuration options were divided into two groups:

-- WaterDrop options - options directly related to
-- Kafka driver options - options related to `
+- WaterDrop options - options directly related to WaterDrop and its components
+- Kafka driver options - options related to `rdkafka`

 To apply all those configuration options, you need to create a producer instance and use the ```#setup``` method:

````

````diff
@@ -88,8 +107,6 @@ You can create producers with different `kafka` settings. Documentation of the a

 ## Usage

-Please refer to the [documentation](https://www.rubydoc.info/github/karafka/waterdrop) in case you're interested in the more advanced API.
-
 ### Basic usage

 To send Kafka messages, just create a producer and use it:
````

````diff
@@ -130,20 +147,60 @@ Each message that you want to publish, will have its value checked.

 Here are all the things you can provide in the message hash:

-| Option
-
-| `topic`
-| `payload`
-| `key`
-| `partition`
-| `
-| `
+| Option | Required | Value type | Description |
+|-----------------|----------|---------------|----------------------------------------------------------|
+| `topic` | true | String | The Kafka topic that should be written to |
+| `payload` | true | String | Data you want to send to Kafka |
+| `key` | false | String | The key that should be set in the Kafka message |
+| `partition` | false | Integer | A specific partition number that should be written to |
+| `partition_key` | false | String | Key to indicate the destination partition of the message |
+| `timestamp` | false | Time, Integer | The timestamp that should be set on the message |
+| `headers` | false | Hash | Headers for the message |

 Keep in mind, that message you want to send should be either binary or stringified (to_s, to_json, etc).

+### Using WaterDrop across the application and with Ruby on Rails
+
+If you plan to both produce and consume messages using Kafka, you should install and use [Karafka](https://github.com/karafka/karafka). It integrates automatically with Ruby on Rails applications and auto-configures WaterDrop producer to make it accessible via `Karafka#producer` method.
+
+If you want to only produce messages from within your application, since WaterDrop is thread-safe you can create a single instance in an initializer like so:
+
+```ruby
+KAFKA_PRODUCER = WaterDrop::Producer.new
+
+KAFKA_PRODUCER.setup do |config|
+  config.kafka = { 'bootstrap.servers': 'localhost:9092' }
+end
+
+# And just dispatch messages
+KAFKA_PRODUCER.produce_sync(topic: 'my-topic', payload: 'my message')
+```
+
+### Using WaterDrop with a connection-pool
+
+While WaterDrop is thread-safe, there is no problem in using it with a connection pool inside high-intensity applications. The only thing worth keeping in mind, is that WaterDrop instances should be shutdown before the application is closed.
+
+```ruby
+KAFKA_PRODUCERS_CP = ConnectionPool.new do
+  WaterDrop::Producer.new do |config|
+    config.kafka = { 'bootstrap.servers': 'localhost:9092' }
+  end
+end
+
+KAFKA_PRODUCERS_CP.with do |producer|
+  producer.produce_async(topic: 'my-topic', payload: 'my message')
+end
+
+KAFKA_PRODUCERS_CP.shutdown { |producer| producer.close }
+```
+
 ### Buffering

-WaterDrop producers support buffering
+WaterDrop producers support buffering messages in their internal buffers and on the `rdkafka` level via `queue.buffering.*` set of settings.
+
+This means that depending on your use case, you can achieve both granular buffering and flushing control when needed with context awareness and periodic and size-based flushing functionalities.
+
+#### Using WaterDrop to buffer messages based on the application logic

 ```ruby
 producer = WaterDrop::Producer.new
````
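The README requires payloads to be strings (or binary) before they reach the producer. The sketch below is a hedged illustration of that step. It builds a message hash in the shape of the options table from plain Ruby data. `build_message` is a hypothetical helper and is not part of WaterDrop. The resulting hash is what you would then pass to `produce_sync` or `produce_async`, which are omitted here so the sketch runs without Kafka.

```ruby
require 'json'

# Hypothetical helper: turns plain Ruby data into a message hash with a String payload
def build_message(topic, data, key: nil, headers: nil)
  message = { topic: topic, payload: JSON.generate(data) }
  message[:key] = key.to_s if key
  # Stringify header names and values so every header is a String => String pair
  message[:headers] = headers.to_h { |name, value| [name.to_s, value.to_s] } if headers
  message
end

message = build_message('events', { id: 1, state: 'started' }, key: 42, headers: { source: :billing })
```

Converting header names and values up front fits the 2.4.0 message contract, which rejects header keys and values that are not Strings.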

````diff
@@ -152,16 +209,41 @@ producer.setup do |config|
   config.kafka = { 'bootstrap.servers': 'localhost:9092' }
 end

-
+# Simulating some events states of a transaction - notice, that the messages will be flushed to
+# kafka only upon arrival of the `finished` state.
+%w[
+  started
+  processed
+  finished
+].each do |state|
+  producer.buffer(topic: 'events', payload: state)
+
+  puts "The messages buffer size #{producer.messages.size}"
+  producer.flush_sync if state == 'finished'
+  puts "The messages buffer size #{producer.messages.size}"
+end
+
+producer.close
+```
+
+#### Using WaterDrop with rdkafka buffers to achieve periodic auto-flushing
+
+```ruby
+producer = WaterDrop::Producer.new

-
-
-
+producer.setup do |config|
+  config.kafka = {
+    'bootstrap.servers': 'localhost:9092',
+    # Accumulate messages for at most 10 seconds
+    'queue.buffering.max.ms': 10_000
+  }
 end

-
-
-
+# WaterDrop will flush messages minimum once every 10 seconds
+30.times do |i|
+  producer.produce_async(topic: 'events', payload: i.to_s)
+  sleep(1)
+end

 producer.close
 ```
````
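The first buffering example follows a buffer-then-flush flow. The minimal in-memory sketch below makes that flow concrete without a Kafka cluster. `FakeBufferingProducer` is hypothetical. It only mimics the `#buffer`, `#messages` and `#flush_sync` calls used in the README and makes no claim about how WaterDrop implements them internally.

```ruby
# Hypothetical stand-in for a producer: mimics the README's buffer/flush calls
# without talking to Kafka
class FakeBufferingProducer
  attr_reader :messages, :delivered

  def initialize
    @messages = []  # messages waiting in the internal buffer
    @delivered = [] # messages "sent" by flushes so far
    @mutex = Mutex.new
  end

  # Adds a message to the internal buffer without dispatching it
  def buffer(message)
    @mutex.synchronize { @messages << message }
  end

  # Takes everything buffered so far, clears the buffer and "delivers" the batch
  def flush_sync
    batch = @mutex.synchronize do
      current = @messages.dup
      @messages.clear
      current
    end
    @delivered.concat(batch)
    batch
  end
end

producer = FakeBufferingProducer.new
sizes = %w[started processed finished].map do |state|
  producer.buffer(topic: 'events', payload: state)
  producer.flush_sync if state == 'finished'
  producer.messages.size
end
```

In this sketch the buffer grows with each state and is emptied only when `finished` arrives. The second README example works differently: it leaves batching to librdkafka through `queue.buffering.max.ms`, so it makes no explicit flush call before `producer.close`.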

````diff
@@ -240,27 +322,79 @@ producer.close

 Note: The metrics returned may not be completely consistent between brokers, toppars and totals, due to the internal asynchronous nature of librdkafka. E.g., the top level tx total may be less than the sum of the broker tx values which it represents.

-###
+### Datadog and StatsD integration

-
+WaterDrop comes with (optional) full Datadog and StatsD integration that you can use. To use it:

-
+```ruby
+# require datadog/statsd and the listener as it is not loaded by default
+require 'datadog/statsd'
+require 'waterdrop/instrumentation/vendors/datadog/listener'
+
+# initialize your producer with statistics.interval.ms enabled so the metrics are published
+producer = WaterDrop::Producer.new do |config|
+  config.deliver = true
+  config.kafka = {
+    'bootstrap.servers': 'localhost:9092',
+    'statistics.interval.ms': 1_000
+  }
+end

-
+# initialize the listener with statsd client
+listener = ::WaterDrop::Instrumentation::Vendors::Datadog::Listener.new do |config|
+  config.client = Datadog::Statsd.new('localhost', 8125)
+  # Publish host as a tag alongside the rest of tags
+  config.default_tags = ["host:#{Socket.gethostname}"]
+end

-
-
-
-* [WaterDrop Coditsu](https://app.coditsu.io/karafka/repositories/waterdrop)
+# Subscribe with your listener to your producer and you should be ready to go!
+producer.monitor.subscribe(listener)
+```

-
+You can also find [here](https://github.com/karafka/waterdrop/blob/master/lib/waterdrop/instrumentation/vendors/datadog/dashboard.json) a ready to import DataDog dashboard configuration file that you can use to monitor all of your producers.
+
+
+
+### Error notifications
+
+WaterDrop allows you to listen to all errors that occur while producing messages and in its internal background threads. Things like reconnecting to Kafka upon network errors and others unrelated to publishing messages are all available under `error.occurred` notification key. You can subscribe to this event to ensure your setup is healthy and without any problems that would otherwise go unnoticed as long as messages are delivered.

-
+```ruby
+producer = WaterDrop::Producer.new do |config|
+  # Note invalid connection port...
+  config.kafka = { 'bootstrap.servers': 'localhost:9090' }
+end
+
+producer.monitor.subscribe('error.occurred') do |event|
+  error = event[:error]
+
+  p "WaterDrop error occurred: #{error}"
+end
+
+# Run this code without Kafka cluster
+loop do
+  producer.produce_async(topic: 'events', payload: 'data')
+
+  sleep(1)
+end

-
+# After you stop your Kafka cluster, you will see a lot of those:
+#
+# WaterDrop error occurred: Local: Broker transport failure (transport)
+#
+# WaterDrop error occurred: Local: Broker transport failure (transport)
+```
+
+### Forking and potential memory problems
+
+If you work with forked processes, make sure you **don't** use the producer before the fork. You can easily configure the producer and then fork and use it.
+
+To tackle this [obstacle](https://github.com/appsignal/rdkafka-ruby/issues/15) related to rdkafka, WaterDrop adds finalizer to each of the producers to close the rdkafka client before the Ruby process is shutdown. Due to the [nature of the finalizers](https://www.mikeperham.com/2010/02/24/the-trouble-with-ruby-finalizers/), this implementation prevents producers from being GCed (except upon VM shutdown) and can cause memory leaks if you don't use persistent/long-lived producers in a long-running process or if you don't use the `#close` method of a producer when it is no longer needed. Creating a producer instance for each message is anyhow a rather bad idea, so we recommend not to.
+
+## Note on contributions

-
+First, thank you for considering contributing to the Karafka ecosystem! It's people like you that make the open source community such a great community!

-
+Each pull request must pass all the RSpec specs, integration tests and meet our quality requirements.

-
+Fork it, update and wait for the Github Actions results.
````
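The README subscribes to `error.occurred`, which is a plain publish/subscribe pattern. The sketch below reproduces that pattern with a hypothetical `TinyMonitor` class so it can run without Kafka. It is not WaterDrop's monitor. It also passes a plain Hash, whereas WaterDrop passes an event object that the README reads with `event[:error]`.

```ruby
# Hypothetical minimal notifications bus illustrating subscribe/instrument;
# it is not WaterDrop::Instrumentation::Monitor
class TinyMonitor
  def initialize
    @listeners = Hash.new { |hash, key| hash[key] = [] }
  end

  # Registers a block to be called for a given event name
  def subscribe(event_id, &block)
    @listeners[event_id] << block
  end

  # Calls every block subscribed to event_id with the payload hash
  def instrument(event_id, payload = {})
    @listeners[event_id].each { |listener| listener.call(payload) }
  end
end

monitor = TinyMonitor.new
seen = []
monitor.subscribe('error.occurred') { |event| seen << "WaterDrop error occurred: #{event[:error]}" }

# Only the error event reaches the subscriber; the acknowledgement is ignored
monitor.instrument('error.occurred', error: 'Local: Broker transport failure (transport)')
monitor.instrument('message.acknowledged', offset: 0, partition: 0)
```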

data/certs/mensfeld.pem

CHANGED

```diff
@@ -1,25 +1,25 @@
 -----BEGIN CERTIFICATE-----
 MIIEODCCAqCgAwIBAgIBATANBgkqhkiG9w0BAQsFADAjMSEwHwYDVQQDDBhtYWNp
-
-
-
-
-
-
-
-
-
-
-
-
+ZWovREM9bWVuc2ZlbGQvREM9cGwwHhcNMjEwODExMTQxNTEzWhcNMjIwODExMTQx
+NTEzWjAjMSEwHwYDVQQDDBhtYWNpZWovREM9bWVuc2ZlbGQvREM9cGwwggGiMA0G
+CSqGSIb3DQEBAQUAA4IBjwAwggGKAoIBgQDV2jKH4Ti87GM6nyT6D+ESzTI0MZDj
+ak2/TEwnxvijMJyCCPKT/qIkbW4/f0VHM4rhPr1nW73sb5SZBVFCLlJcOSKOBdUY
+TMY+SIXN2EtUaZuhAOe8LxtxjHTgRHvHcqUQMBENXTISNzCo32LnUxweu66ia4Pd
+1mNRhzOqNv9YiBZvtBf7IMQ+sYdOCjboq2dlsWmJiwiDpY9lQBTnWORnT3mQxU5x
+vPSwnLB854cHdCS8fQo4DjeJBRZHhEbcE5sqhEMB3RZA3EtFVEXOxlNxVTS3tncI
+qyNXiWDaxcipaens4ObSY1C2HTV7OWb7OMqSCIybeYTSfkaSdqmcl4S6zxXkjH1J
+tnjayAVzD+QVXGijsPLE2PFnJAh9iDET2cMsjabO1f6l1OQNyAtqpcyQcgfnyW0z
+g7tGxTYD+6wJHffM9d9txOUw6djkF6bDxyqB8lo4Z3IObCx18AZjI9XPS9QG7w6q
+LCWuMG2lkCcRgASqaVk9fEf9yMc2xxz5o3kCAwEAAaN3MHUwCQYDVR0TBAIwADAL
+BgNVHQ8EBAMCBLAwHQYDVR0OBBYEFBqUFCKCOe5IuueUVqOB991jyCLLMB0GA1Ud
 EQQWMBSBEm1hY2llakBtZW5zZmVsZC5wbDAdBgNVHRIEFjAUgRJtYWNpZWpAbWVu
-
-
-
-
-
-
-
-
-
+c2ZlbGQucGwwDQYJKoZIhvcNAQELBQADggGBADD0/UuTTFgW+CGk2U0RDw2RBOca
+W2LTF/G7AOzuzD0Tc4voc7WXyrgKwJREv8rgBimLnNlgmFJLmtUCh2U/MgxvcilH
+yshYcbseNvjkrtYnLRlWZR4SSB6Zei5AlyGVQLPkvdsBpNegcG6w075YEwzX/38a
+8V9B/Yri2OGELBz8ykl7BsXUgNoUPA/4pHF6YRLz+VirOaUIQ4JfY7xGj6fSOWWz
+/rQ/d77r6o1mfJYM/3BRVg73a3b7DmRnE5qjwmSaSQ7u802pJnLesmArch0xGCT/
+fMmRli1Qb+6qOTl9mzD6UDMAyFR4t6MStLm0mIEqM0nBO5nUdUWbC7l9qXEf8XBE
+2DP28p3EqSuS+lKbAWKcqv7t0iRhhmaod+Yn9mcrLN1sa3q3KSQ9BCyxezCD4Mk2
+R2P11bWoCtr70BsccVrN8jEhzwXngMyI2gVt750Y+dbTu1KgRqZKp/ECe7ZzPzXj
+pIy9vHxTANKYVyI4qj8OrFdEM5BQNu8oQpL0iQ==
 -----END CERTIFICATE-----
```

data/config/errors.yml

CHANGED

```diff
@@ -1,6 +1,30 @@
 en:
-
-
-
-
-
+  validations:
+    config:
+      missing: must be present
+      logger_format: must be present
+      deliver_format: must be boolean
+      id_format: must be a non-empty string
+      max_payload_size_format: must be an integer that is equal or bigger than 1
+      wait_timeout_format: must be a numeric that is bigger than 0
+      max_wait_timeout_format: must be an integer that is equal or bigger than 0
+      kafka_format: must be a hash with symbol based keys
+      kafka_key_must_be_a_symbol: All keys under the kafka settings scope need to be symbols
+
+    message:
+      missing: must be present
+      partition_format: must be an integer greater or equal to -1
+      topic_format: 'does not match the topic allowed format'
+      partition_key_format: must be a non-empty string
+      timestamp_format: must be either time or integer
+      payload_format: must be string
+      headers_format: must be a hash
+      key_format: must be a non-empty string
+      payload_max_size: is more than `max_payload_size` config value
+      headers_invalid_key_type: all headers keys need to be of type String
+      headers_invalid_value_type: all headers values need to be of type String
+
+    test:
+      missing: must be present
+      nested.id_format: 'is invalid'
+      nested.id2_format: 'is invalid'
```
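Both new contracts load their messages from this file with `YAML.safe_load(...).fetch('en').fetch('validations').fetch(scope)`. The sketch below applies the same lookup chain to an inline excerpt of the 2.4.0 file, copied from the diff above, so it runs without the gem. `error_messages` is a hypothetical helper name.

```ruby
require 'yaml'

# Excerpt of the 2.4.0 config/errors.yml, inlined so the sketch is self-contained
ERRORS_YML = <<~ERRORS
  en:
    validations:
      config:
        missing: must be present
        kafka_key_must_be_a_symbol: All keys under the kafka settings scope need to be symbols
      message:
        missing: must be present
        payload_max_size: is more than `max_payload_size` config value
ERRORS

# Hypothetical helper using the same fetch chain as the contracts' configure blocks
def error_messages(scope)
  YAML.safe_load(ERRORS_YML).fetch('en').fetch('validations').fetch(scope)
end

config_messages = error_messages('config')
message_messages = error_messages('message')
```

Because the chain uses `fetch` rather than `[]`, a missing scope raises `KeyError` when the messages are loaded instead of silently yielding `nil`.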

data/docker-compose.yml

CHANGED

```diff
@@ -5,7 +5,7 @@ services:
     ports:
       - "2181:2181"
   kafka:
-    image: wurstmeister/kafka:
+    image: wurstmeister/kafka:2.12-2.5.0
     ports:
       - "9092:9092"
     environment:
@@ -13,5 +13,6 @@ services:
       KAFKA_ADVERTISED_PORT: 9092
       KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
       KAFKA_AUTO_CREATE_TOPICS_ENABLE: 'true'
+      KAFKA_CREATE_TOPICS: 'example_topic:1:1'
     volumes:
       - /var/run/docker.sock:/var/run/docker.sock
```

data/lib/{water_drop → waterdrop}/config.rb

```diff
@@ -5,35 +5,55 @@
 module WaterDrop
   # Configuration object for setting up all options required by WaterDrop
   class Config
-    include
+    include ::Karafka::Core::Configurable
+
+    # Defaults for kafka settings, that will be overwritten only if not present already
+    KAFKA_DEFAULTS = {
+      'client.id': 'waterdrop'
+    }.freeze
+
+    private_constant :KAFKA_DEFAULTS

     # WaterDrop options
     #
     # option [String] id of the producer. This can be helpful when building producer specific
-    # instrumentation or loggers. It is not the kafka
-
+    # instrumentation or loggers. It is not the kafka client id. It is an id that should be
+    # unique for each of the producers
+    setting(
+      :id,
+      default: false,
+      constructor: ->(id) { id || SecureRandom.uuid }
+    )
     # option [Instance] logger that we want to use
     # @note Due to how rdkafka works, this setting is global for all the producers
-    setting(
+    setting(
+      :logger,
+      default: false,
+      constructor: ->(logger) { logger || Logger.new($stdout, level: Logger::WARN) }
+    )
     # option [Instance] monitor that we want to use. See instrumentation part of the README for
     # more details
-    setting(
+    setting(
+      :monitor,
+      default: false,
+      constructor: ->(monitor) { monitor || WaterDrop::Instrumentation::Monitor.new }
+    )
     # option [Integer] max payload size allowed for delivery to Kafka
-    setting :max_payload_size, 1_000_012
+    setting :max_payload_size, default: 1_000_012
     # option [Integer] Wait that long for the delivery report or raise an error if this takes
     # longer than the timeout.
-    setting :max_wait_timeout, 5
+    setting :max_wait_timeout, default: 5
     # option [Numeric] how long should we wait between re-checks on the availability of the
     # delivery report. In a really robust systems, this describes the min-delivery time
     # for a single sync message when produced in isolation
-    setting :wait_timeout, 0.005 # 5 milliseconds
+    setting :wait_timeout, default: 0.005 # 5 milliseconds
     # option [Boolean] should we send messages. Setting this to false can be really useful when
     # testing and or developing because when set to false, won't actually ping Kafka but will
     # run all the validations, etc
-    setting :deliver, true
+    setting :deliver, default: true
     # rdkafka options
     # @see https://github.com/edenhill/librdkafka/blob/master/CONFIGURATION.md
-    setting :kafka, {}
+    setting :kafka, default: {}

     # Configuration method
     # @yield Runs a block of code providing a config singleton instance to it
```
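The `id`, `logger` and `monitor` settings now pair `default: false` with a `constructor` lambda. The plain-Ruby sketch below illustrates that idea. It assumes the lambda receives the configured value, or the `false` default when nothing was set. The `resolve` helper is hypothetical and does not describe how `Karafka::Core::Configurable` is implemented.

```ruby
require 'securerandom'
require 'logger'

# The same constructor lambdas as in the new config.rb
ID_CONSTRUCTOR = ->(id) { id || SecureRandom.uuid }
LOGGER_CONSTRUCTOR = ->(logger) { logger || Logger.new($stdout, level: Logger::WARN) }

# Hypothetical resolver: passes the user value, or the `false` default, through the lambda
def resolve(constructor, user_value = false)
  constructor.call(user_value)
end

explicit_id = resolve(ID_CONSTRUCTOR, 'orders-producer')
generated_id = resolve(ID_CONSTRUCTOR)
default_logger = resolve(LOGGER_CONSTRUCTOR)
```

In this sketch an unset `id` becomes a fresh UUID. That is consistent with the new config contract, which requires `id` to be a non-empty String.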

```diff
@@ -41,21 +61,29 @@ module WaterDrop
     def setup
       configure do |config|
         yield(config)
-
+
+        merge_kafka_defaults!(config)
+
+        Contracts::Config.new.validate!(config.to_h, Errors::ConfigurationInvalidError)
+
+        ::Rdkafka::Config.logger = config.logger
       end
+
+      self
     end

     private

-    #
-    #
-    #
-    #
-    def
-
-
+    # Propagates the kafka setting defaults unless they are already present
+    # This makes it easier to set some values that users usually don't change but still allows them
+    # to overwrite the whole hash if they want to
+    # @param config [WaterDrop::Configurable::Node] config of this producer
+    def merge_kafka_defaults!(config)
+      KAFKA_DEFAULTS.each do |key, value|
+        next if config.kafka.key?(key)

-
+        config.kafka[key] = value
+      end
     end
   end
 end
```
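`merge_kafka_defaults!` only fills in keys the user did not set. The sketch below copies its loop onto a hypothetical `ConfigStub` struct that stands in for the real config node. It shows that `'client.id'` defaults to `waterdrop` while an explicit value wins.

```ruby
# Same defaults constant as in the diff
KAFKA_DEFAULTS = { 'client.id': 'waterdrop' }.freeze

# Hypothetical stand-in for the config node; only #kafka is used
ConfigStub = Struct.new(:kafka)

# Same loop as the new private merge_kafka_defaults! method
def merge_kafka_defaults!(config)
  KAFKA_DEFAULTS.each do |key, value|
    next if config.kafka.key?(key)

    config.kafka[key] = value
  end
end

plain = ConfigStub.new({ 'bootstrap.servers': 'localhost:9092' })
custom = ConfigStub.new({ 'bootstrap.servers': 'localhost:9092', 'client.id': 'billing' })
[plain, custom].each { |config| merge_kafka_defaults!(config) }
```

The lookup uses the Symbol key `:'client.id'`, so a String key `'client.id'` would not be recognized as an override. The new config contract rejects String keys under `kafka` in any case.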

data/lib/waterdrop/contracts/config.rb

```diff
@@ -0,0 +1,40 @@
+# frozen_string_literal: true
+
+module WaterDrop
+  module Contracts
+    # Contract with validation rules for WaterDrop configuration details
+    class Config < ::Karafka::Core::Contractable::Contract
+      configure do |config|
+        config.error_messages = YAML.safe_load(
+          File.read(
+            File.join(WaterDrop.gem_root, 'config', 'errors.yml')
+          )
+        ).fetch('en').fetch('validations').fetch('config')
+      end
+
+      required(:id) { |val| val.is_a?(String) && !val.empty? }
+      required(:logger) { |val| !val.nil? }
+      required(:deliver) { |val| [true, false].include?(val) }
+      required(:max_payload_size) { |val| val.is_a?(Integer) && val >= 1 }
+      required(:max_wait_timeout) { |val| val.is_a?(Numeric) && val >= 0 }
+      required(:wait_timeout) { |val| val.is_a?(Numeric) && val.positive? }
+      required(:kafka) { |val| val.is_a?(Hash) && !val.empty? }
+
+      # rdkafka allows both symbols and strings as keys for config but then casts them to strings
+      # This can be confusing, so we expect all keys to be symbolized
+      virtual do |config, errors|
+        next true unless errors.empty?
+
+        errors = []
+
+        config
+          .fetch(:kafka)
+          .keys
+          .reject { |key| key.is_a?(Symbol) }
+          .each { |key| errors << [[:kafka, key], :kafka_key_must_be_a_symbol] }
+
+        errors
+      end
+    end
+  end
+end
```
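In Ruby, `{ 'bootstrap.servers': 'x' }` produces a Symbol key, while `{ 'bootstrap.servers' => 'x' }` produces a String key. That is exactly the distinction the virtual rule above enforces. Below is a standalone sketch of that rule with a hypothetical method name and without the `Karafka::Core::Contractable` machinery.

```ruby
# Hypothetical standalone version of the virtual rule in Contracts::Config:
# one [[:kafka, key], :kafka_key_must_be_a_symbol] entry per non-Symbol key
def kafka_key_errors(kafka)
  kafka
    .keys
    .reject { |key| key.is_a?(Symbol) }
    .map { |key| [[:kafka, key], :kafka_key_must_be_a_symbol] }
end

ok = kafka_key_errors({ 'bootstrap.servers': 'localhost:9092' })
mixed = kafka_key_errors({ 'bootstrap.servers' => 'localhost:9092', 'client.id': 'app' })
```

Using `map` instead of appending to a local array keeps the sketch side-effect free. The error tuples have the same shape as in the contract.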

data/lib/waterdrop/contracts/message.rb

```diff
@@ -0,0 +1,60 @@
+# frozen_string_literal: true
+
+module WaterDrop
+  module Contracts
+    # Contract with validation rules for validating that all the message options that
+    # we provide to producer ale valid and usable
+    class Message < ::Karafka::Core::Contractable::Contract
+      configure do |config|
+        config.error_messages = YAML.safe_load(
+          File.read(
+            File.join(WaterDrop.gem_root, 'config', 'errors.yml')
+          )
+        ).fetch('en').fetch('validations').fetch('message')
+      end
+
+      # Regex to check that topic has a valid format
+      TOPIC_REGEXP = /\A(\w|-|\.)+\z/
+
+      private_constant :TOPIC_REGEXP
+
+      attr_reader :max_payload_size
+
+      # @param max_payload_size [Integer] max payload size
+      def initialize(max_payload_size:)
+        super()
+        @max_payload_size = max_payload_size
+      end
+
+      required(:topic) { |val| val.is_a?(String) && TOPIC_REGEXP.match?(val) }
+      required(:payload) { |val| val.is_a?(String) }
+      optional(:key) { |val| val.nil? || (val.is_a?(String) && !val.empty?) }
+      optional(:partition) { |val| val.is_a?(Integer) && val >= -1 }
+      optional(:partition_key) { |val| val.nil? || (val.is_a?(String) && !val.empty?) }
+      optional(:timestamp) { |val| val.nil? || (val.is_a?(Time) || val.is_a?(Integer)) }
+      optional(:headers) { |val| val.nil? || val.is_a?(Hash) }
+
+      virtual do |config, errors|
+        next true unless errors.empty?
+        next true unless config.key?(:headers)
+        next true if config[:headers].nil?
+
+        errors = []
+
+        config.fetch(:headers).each do |key, value|
+          errors << [%i[headers], :invalid_key_type] unless key.is_a?(String)
+          errors << [%i[headers], :invalid_value_type] unless value.is_a?(String)
+        end
+
+        errors
+      end
+
+      virtual do |config, errors, validator|
+        next true unless errors.empty?
+        next true if config[:payload].bytesize <= validator.max_payload_size
+
+        [[%i[payload], :max_size]]
+      end
+    end
+  end
+end
```
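A plain-Ruby approximation of several `Contracts::Message` rules shows which messages would pass. It covers topic format, String payload, String header keys and values, and byte-based payload size. `message_errors` is hypothetical and simplified. It returns the matching keys from `errors.yml` instead of raising, and it skips the `key`, `partition`, `partition_key` and `timestamp` rules.

```ruby
# Same pattern as TOPIC_REGEXP in the diff: word characters, dashes and dots only
TOPIC_REGEXP = /\A(\w|-|\.)+\z/

# Hypothetical, simplified check mirroring some Message contract rules;
# returns errors.yml keys instead of raising
def message_errors(message, max_payload_size:)
  errors = []

  topic = message[:topic]
  errors << :topic_format unless topic.is_a?(String) && TOPIC_REGEXP.match?(topic)

  payload = message[:payload]
  errors << :payload_format unless payload.is_a?(String)

  # Header keys and values must both be Strings
  (message[:headers] || {}).each do |key, value|
    errors << :headers_invalid_key_type unless key.is_a?(String)
    errors << :headers_invalid_value_type unless value.is_a?(String)
  end

  # As in the contract, the size rule only runs when nothing else failed,
  # and it measures bytes rather than characters
  errors << :payload_max_size if errors.empty? && payload.bytesize > max_payload_size

  errors
end

valid = message_errors({ topic: 'events', payload: 'data' }, max_payload_size: 1_000_012)
bad_topic = message_errors({ topic: 'my topic', payload: 'data' }, max_payload_size: 100)
bad_headers = message_errors({ topic: 'events', payload: 'data', headers: { id: 1 } }, max_payload_size: 100)
too_big = message_errors({ topic: 'events', payload: "\u00e9" * 4 }, max_payload_size: 6)
```

The payload limit is checked with `bytesize`, so a multi-byte UTF-8 character counts as more than one unit. For example, four `é` characters take 8 bytes and exceed a limit of 6.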

data/lib/waterdrop/instrumentation/callbacks/delivery.rb

```diff
@@ -0,0 +1,30 @@
+# frozen_string_literal: true
+
+module WaterDrop
+  module Instrumentation
+    module Callbacks
+      # Creates a callable that we want to run upon each message delivery or failure
+      #
+      # @note We don't have to provide client_name here as this callback is per client instance
+      class Delivery
+        # @param producer_id [String] id of the current producer
+        # @param monitor [WaterDrop::Instrumentation::Monitor] monitor we are using
+        def initialize(producer_id, monitor)
+          @producer_id = producer_id
+          @monitor = monitor
+        end
+
+        # Emits delivery details to the monitor
+        # @param delivery_report [Rdkafka::Producer::DeliveryReport] delivery report
+        def call(delivery_report)
+          @monitor.instrument(
+            'message.acknowledged',
+            producer_id: @producer_id,
+            offset: delivery_report.offset,
+            partition: delivery_report.partition
+          )
+        end
+      end
+    end
+  end
+end
```
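`Delivery#call` relies only on `#instrument` from the monitor and on `#offset` and `#partition` from the delivery report, so plain test doubles are enough to exercise it. In this sketch `RecordingMonitor` and `FakeDeliveryReport` are hypothetical stand-ins. `Delivery` is a copy of the class from the diff, placed outside the `WaterDrop` namespace.

```ruby
# Hypothetical test doubles: a monitor that records calls and a struct standing in
# for Rdkafka::Producer::DeliveryReport (only offset and partition are used)
RecordingMonitor = Struct.new(:events) do
  def instrument(event_id, payload)
    events << [event_id, payload]
  end
end
FakeDeliveryReport = Struct.new(:offset, :partition)

# Copy of the Delivery callback from the diff, outside the WaterDrop namespace
class Delivery
  def initialize(producer_id, monitor)
    @producer_id = producer_id
    @monitor = monitor
  end

  # Forwards the acknowledged offset and partition to the monitor
  def call(delivery_report)
    @monitor.instrument(
      'message.acknowledged',
      producer_id: @producer_id,
      offset: delivery_report.offset,
      partition: delivery_report.partition
    )
  end
end

monitor = RecordingMonitor.new([])
callback = Delivery.new('producer-1', monitor)
callback.call(FakeDeliveryReport.new(42, 3))
```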