vexor 0.19.0a1__tar.gz → 0.21.0__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {vexor-0.19.0a1 → vexor-0.21.0}/PKG-INFO +42 -30

- {vexor-0.19.0a1 → vexor-0.21.0}/README.md +41 -29

- {vexor-0.19.0a1 → vexor-0.21.0}/plugins/vexor/.claude-plugin/plugin.json +1 -1

- {vexor-0.19.0a1 → vexor-0.21.0}/plugins/vexor/skills/vexor-cli/SKILL.md +1 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/__init__.py +4 -2

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/api.py +87 -1

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/cache.py +483 -275

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/cli.py +78 -5

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/config.py +240 -2

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/providers/gemini.py +79 -13

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/providers/openai.py +79 -13

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/config_service.py +14 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/index_service.py +285 -4

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/search_service.py +235 -24

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/text.py +14 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/.gitignore +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/LICENSE +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/gui/README.md +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/plugins/vexor/README.md +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/plugins/vexor/skills/vexor-cli/references/install-vexor.md +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/pyproject.toml +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/__main__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/modes.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/output.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/providers/__init__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/providers/local.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/search.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/__init__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/cache_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/content_extract_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/init_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/js_parser.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/keyword_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/skill_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/services/system_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.21.0}/vexor/utils.py +0 -0

|

@@ -1,6 +1,6 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: vexor

|

|

3

|

-

Version: 0.

|

|

3

|

+

Version: 0.21.0

|

|

4

4

|

Summary: A vector-powered CLI for semantic search over files.

|

|

5

5

|

Project-URL: Repository, https://github.com/scarletkc/vexor

|

|

6

6

|

Author: scarletkc

|

|

@@ -69,9 +69,8 @@ Description-Content-Type: text/markdown

|

|

|

69

69

|

|

|

70

70

|

---

|

|

71

71

|

|

|

72

|

-

**Vexor** is a

|

|

73

|

-

|

|

74

|

-

|

|

72

|

+

**Vexor** is a semantic search engine that builds reusable indexes over files and code.

|

|

73

|

+

It supports configurable embedding and reranking providers, and exposes the same core through a Python API, a CLI tool, and an optional desktop frontend.

|

|

75

74

|

|

|

76

75

|

<video src="https://github.com/user-attachments/assets/4d53eefd-ab35-4232-98a7-f8dc005983a9" controls="controls" style="max-width: 600px;">

|

|

77

76

|

Vexor Demo Video

|

|

@@ -98,18 +97,13 @@ vexor init

|

|

|

98

97

|

```

|

|

99

98

|

The wizard also runs automatically on first use when no config exists.

|

|

100

99

|

|

|

101

|

-

### 1.

|

|

102

|

-

```bash

|

|

103

|

-

vexor config --set-api-key "YOUR_KEY"

|

|

104

|

-

```

|

|

105

|

-

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

106

|

-

|

|

107

|

-

### 2. Search

|

|

100

|

+

### 1. Search

|

|

108

101

|

```bash

|

|

109

|

-

vexor "api client config" # defaults to search

|

|

110

|

-

vexor search "api client config" # searches current directory

|

|

102

|

+

vexor "api client config" # defaults to search current directory

|

|

111

103

|

# or explicit path:

|

|

112

104

|

vexor search "api client config" --path ~/projects/demo --top 5

|

|

105

|

+

# in-memory search only:

|

|

106

|

+

vexor search "api client config" --no-cache

|

|

113

107

|

```

|

|

114

108

|

|

|

115

109

|

Vexor auto-indexes on first search. Example output:

|

|

@@ -122,7 +116,7 @@ Vexor semantic file search results

|

|

|

122

116

|

3 0.809 ./tests/test_config_loader.py - tests for config loader

|

|

123

117

|

```

|

|

124

118

|

|

|

125

|

-

###

|

|

119

|

+

### 2. Explicit Index (Optional)

|

|

126

120

|

```bash

|

|

127

121

|

vexor index # indexes current directory

|

|

128

122

|

# or explicit path:

|

|

@@ -130,6 +124,15 @@ vexor index --path ~/projects/demo --mode code

|

|

|

130

124

|

```

|

|

131

125

|

Useful for CI warmup or when `auto_index` is disabled.

|

|

132

126

|

|

|

127

|

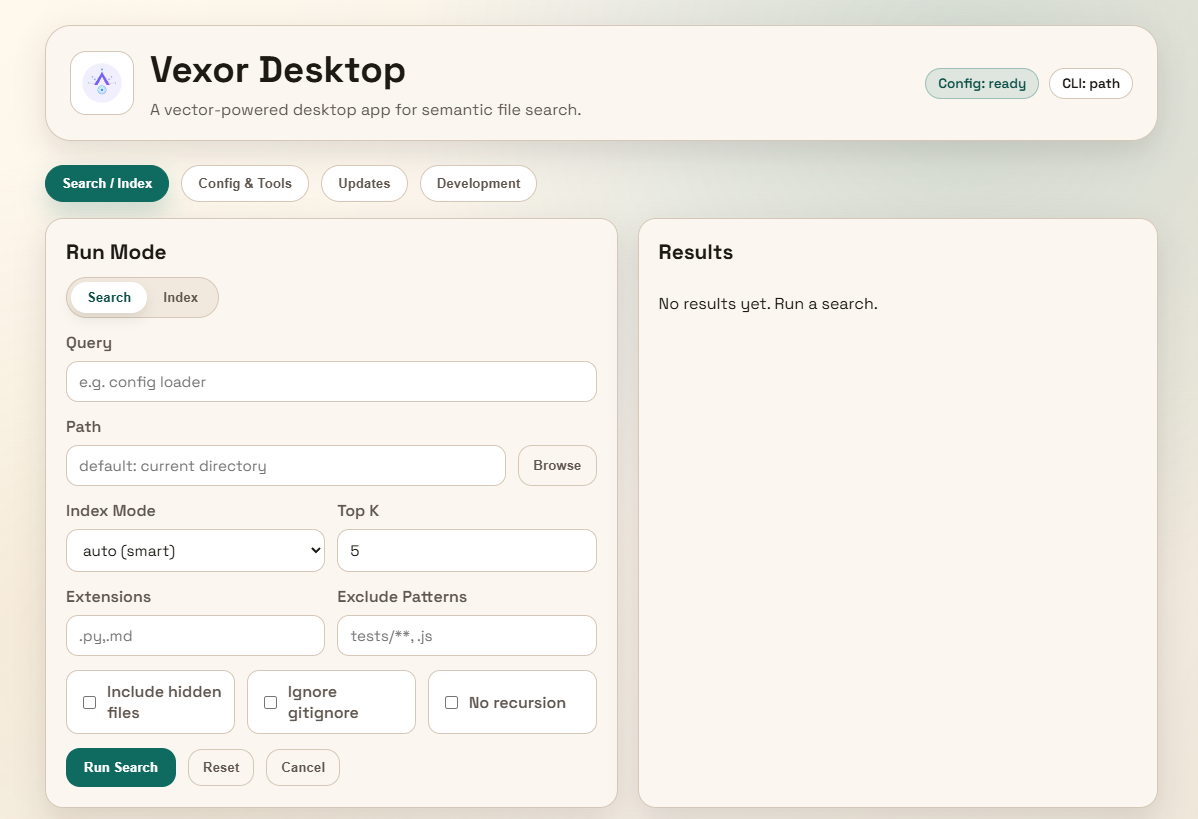

+

## Desktop App (Experimental)

|

|

128

|

+

|

|

129

|

+

> The desktop app is experimental and not actively maintained.

|

|

130

|

+

> It may be unstable. For production use, prefer the CLI.

|

|

131

|

+

|

|

132

|

+

|

|

133

|

+

|

|

134

|

+

Download the desktop app from [releases](https://github.com/scarletkc/vexor/releases).

|

|

135

|

+

|

|

133

136

|

## Python API

|

|

134

137

|

|

|

135

138

|

Vexor can also be imported and used directly from Python:

|

|

@@ -144,8 +147,19 @@ for hit in response.results:

|

|

|

144

147

|

print(hit.path, hit.score)

|

|

145

148

|

```

|

|

146

149

|

|

|

147

|

-

By default it reads `~/.vexor/config.json`.

|

|

148

|

-

|

|

150

|

+

By default it reads `~/.vexor/config.json`. For runtime config overrides, cache

|

|

151

|

+

controls, and per-call options, see [`docs/api/python.md`](https://github.com/scarletkc/vexor/tree/main/docs/api/python.md).

|

|

152

|

+

|

|

153

|

+

## AI Agent Skill

|

|

154

|

+

|

|

155

|

+

This repo includes a skill for AI agents to use Vexor effectively:

|

|

156

|

+

|

|

157

|

+

```bash

|

|

158

|

+

vexor install --skills claude # Claude Code

|

|

159

|

+

vexor install --skills codex # Codex

|

|

160

|

+

```

|

|

161

|

+

|

|

162

|

+

Skill source: [`plugins/vexor/skills/vexor-cli`](https://github.com/scarletkc/vexor/raw/refs/heads/main/plugins/vexor/skills/vexor-cli/SKILL.md)

|

|

149

163

|

|

|

150

164

|

## Configuration

|

|

151

165

|

|

|

@@ -153,7 +167,9 @@ set `use_config=False`.

|

|

|

153

167

|

vexor config --set-provider openai # default; also supports gemini/custom/local

|

|

154

168

|

vexor config --set-model text-embedding-3-small

|

|

155

169

|

vexor config --set-batch-size 0 # 0 = single request

|

|

156

|

-

vexor config --set-embed-concurrency

|

|

170

|

+

vexor config --set-embed-concurrency 4 # parallel embedding requests

|

|

171

|

+

vexor config --set-extract-concurrency 4 # parallel file extraction workers

|

|

172

|

+

vexor config --set-extract-backend auto # auto|thread|process (default: auto)

|

|

157

173

|

vexor config --set-auto-index true # auto-index before search (default)

|

|

158

174

|

vexor config --rerank bm25 # optional BM25 rerank for top-k results

|

|

159

175

|

vexor config --rerank flashrank # FlashRank rerank (requires optional extra)

|

|

@@ -175,10 +191,16 @@ FlashRank requires `pip install "vexor[flashrank]"` and caches models under `~/.

|

|

|

175

191

|

|

|

176

192

|

Config stored in `~/.vexor/config.json`.

|

|

177

193

|

|

|

194

|

+

### Configure API Key

|

|

195

|

+

```bash

|

|

196

|

+

vexor config --set-api-key "YOUR_KEY"

|

|

197

|

+

```

|

|

198

|

+

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

199

|

+

|

|

178

200

|

### Rerank

|

|

179

201

|

|

|

180

|

-

Rerank reorders the semantic results with a secondary ranker.

|

|

181

|

-

|

|

202

|

+

Rerank reorders the semantic results with a secondary ranker. Candidate sizing uses

|

|

203

|

+

`clamp(int(--top * 2), 20, 150)`.

|

|

182

204

|

|

|

183

205

|

Recommended defaults:

|

|

184

206

|

- Keep `off` unless you want extra precision.

|

|
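The candidate-sizing rule quoted in this hunk, `clamp(int(--top * 2), 20, 150)`, can be read as the minimal Python sketch below. Only the formula comes from the README; the function name `rerank_candidate_count` is hypothetical and is not taken from the vexor source.

```python
def rerank_candidate_count(top: int) -> int:
    """Number of candidates handed to the reranker for a given --top value.

    Mirrors the README formula clamp(int(top * 2), 20, 150). The function
    name is illustrative and not part of vexor.
    """
    doubled = int(top * 2)
    # Clamp into [20, 150]: never fewer than 20 candidates, never more than 150.
    return max(20, min(150, doubled))


if __name__ == "__main__":
    for top in (5, 30, 100):
        print(top, rerank_candidate_count(top))
```

Under this reading, small `--top` values still rerank a pool of at least 20 candidates, and very large values are capped at 150.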

@@ -285,20 +307,10 @@ Re-running `vexor index` only re-embeds changed files; >50% changes trigger full

|

|

|

285

307

|

| `--no-respect-gitignore` | Include gitignored files |

|

|

286

308

|

| `--format porcelain` | Script-friendly TSV output |

|

|

287

309

|

| `--format porcelain-z` | NUL-delimited output |

|

|

310

|

+

| `--no-cache` | In-memory only; do not read/write index cache |

|

|

288

311

|

|

|

289

312

|

Porcelain output fields: `rank`, `similarity`, `path`, `chunk_index`, `start_line`, `end_line`, `preview` (line fields are `-` when unavailable).

|

|

290

313

|

|

|

291

|

-

## AI Agent Skill

|

|

292

|

-

|

|

293

|

-

This repo includes a skill for AI agents to use Vexor effectively:

|

|

294

|

-

|

|

295

|

-

```bash

|

|

296

|

-

vexor install --skills claude # Claude Code

|

|

297

|

-

vexor install --skills codex # Codex

|

|

298

|

-

```

|

|

299

|

-

|

|

300

|

-

Skill source: [`plugins/vexor/skills/vexor-cli`](https://github.com/scarletkc/vexor/raw/refs/heads/main/plugins/vexor/skills/vexor-cli/SKILL.md)

|

|

301

|

-

|

|

302

314

|

## Documentation

|

|

303

315

|

|

|

304

316

|

See [docs](https://github.com/scarletkc/vexor/tree/main/docs) for more details.

|

|

@@ -14,9 +14,8 @@

|

|

|

14

14

|

|

|

15

15

|

---

|

|

16

16

|

|

|

17

|

-

**Vexor** is a

|

|

18

|

-

|

|

19

|

-

|

|

17

|

+

**Vexor** is a semantic search engine that builds reusable indexes over files and code.

|

|

18

|

+

It supports configurable embedding and reranking providers, and exposes the same core through a Python API, a CLI tool, and an optional desktop frontend.

|

|

20

19

|

|

|

21

20

|

<video src="https://github.com/user-attachments/assets/4d53eefd-ab35-4232-98a7-f8dc005983a9" controls="controls" style="max-width: 600px;">

|

|

22

21

|

Vexor Demo Video

|

|

@@ -43,18 +42,13 @@ vexor init

|

|

|

43

42

|

```

|

|

44

43

|

The wizard also runs automatically on first use when no config exists.

|

|

45

44

|

|

|

46

|

-

### 1.

|

|

47

|

-

```bash

|

|

48

|

-

vexor config --set-api-key "YOUR_KEY"

|

|

49

|

-

```

|

|

50

|

-

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

51

|

-

|

|

52

|

-

### 2. Search

|

|

45

|

+

### 1. Search

|

|

53

46

|

```bash

|

|

54

|

-

vexor "api client config" # defaults to search

|

|

55

|

-

vexor search "api client config" # searches current directory

|

|

47

|

+

vexor "api client config" # defaults to search current directory

|

|

56

48

|

# or explicit path:

|

|

57

49

|

vexor search "api client config" --path ~/projects/demo --top 5

|

|

50

|

+

# in-memory search only:

|

|

51

|

+

vexor search "api client config" --no-cache

|

|

58

52

|

```

|

|

59

53

|

|

|

60

54

|

Vexor auto-indexes on first search. Example output:

|

|

@@ -67,7 +61,7 @@ Vexor semantic file search results

|

|

|

67

61

|

3 0.809 ./tests/test_config_loader.py - tests for config loader

|

|

68

62

|

```

|

|

69

63

|

|

|

70

|

-

###

|

|

64

|

+

### 2. Explicit Index (Optional)

|

|

71

65

|

```bash

|

|

72

66

|

vexor index # indexes current directory

|

|

73

67

|

# or explicit path:

|

|

@@ -75,6 +69,15 @@ vexor index --path ~/projects/demo --mode code

|

|

|

75

69

|

```

|

|

76

70

|

Useful for CI warmup or when `auto_index` is disabled.

|

|

77

71

|

|

|

72

|

+

## Desktop App (Experimental)

|

|

73

|

+

|

|

74

|

+

> The desktop app is experimental and not actively maintained.

|

|

75

|

+

> It may be unstable. For production use, prefer the CLI.

|

|

76

|

+

|

|

77

|

+

|

|

78

|

+

|

|

79

|

+

Download the desktop app from [releases](https://github.com/scarletkc/vexor/releases).

|

|

80

|

+

|

|

78

81

|

## Python API

|

|

79

82

|

|

|

80

83

|

Vexor can also be imported and used directly from Python:

|

|

@@ -89,8 +92,19 @@ for hit in response.results:

|

|

|

89

92

|

print(hit.path, hit.score)

|

|

90

93

|

```

|

|

91

94

|

|

|

92

|

-

By default it reads `~/.vexor/config.json`.

|

|

93

|

-

|

|

95

|

+

By default it reads `~/.vexor/config.json`. For runtime config overrides, cache

|

|

96

|

+

controls, and per-call options, see [`docs/api/python.md`](https://github.com/scarletkc/vexor/tree/main/docs/api/python.md).

|

|

97

|

+

|

|

98

|

+

## AI Agent Skill

|

|

99

|

+

|

|

100

|

+

This repo includes a skill for AI agents to use Vexor effectively:

|

|

101

|

+

|

|

102

|

+

```bash

|

|

103

|

+

vexor install --skills claude # Claude Code

|

|

104

|

+

vexor install --skills codex # Codex

|

|

105

|

+

```

|

|

106

|

+

|

|

107

|

+

Skill source: [`plugins/vexor/skills/vexor-cli`](https://github.com/scarletkc/vexor/raw/refs/heads/main/plugins/vexor/skills/vexor-cli/SKILL.md)

|

|

94

108

|

|

|

95

109

|

## Configuration

|

|

96

110

|

|

|

@@ -98,7 +112,9 @@ set `use_config=False`.

|

|

|

98

112

|

vexor config --set-provider openai # default; also supports gemini/custom/local

|

|

99

113

|

vexor config --set-model text-embedding-3-small

|

|

100

114

|

vexor config --set-batch-size 0 # 0 = single request

|

|

101

|

-

vexor config --set-embed-concurrency

|

|

115

|

+

vexor config --set-embed-concurrency 4 # parallel embedding requests

|

|

116

|

+

vexor config --set-extract-concurrency 4 # parallel file extraction workers

|

|

117

|

+

vexor config --set-extract-backend auto # auto|thread|process (default: auto)

|

|

102

118

|

vexor config --set-auto-index true # auto-index before search (default)

|

|

103

119

|

vexor config --rerank bm25 # optional BM25 rerank for top-k results

|

|

104

120

|

vexor config --rerank flashrank # FlashRank rerank (requires optional extra)

|

|

@@ -120,10 +136,16 @@ FlashRank requires `pip install "vexor[flashrank]"` and caches models under `~/.

|

|

|

120

136

|

|

|

121

137

|

Config stored in `~/.vexor/config.json`.

|

|

122

138

|

|

|

139

|

+

### Configure API Key

|

|

140

|

+

```bash

|

|

141

|

+

vexor config --set-api-key "YOUR_KEY"

|

|

142

|

+

```

|

|

143

|

+

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

144

|

+

|

|

123

145

|

### Rerank

|

|

124

146

|

|

|

125

|

-

Rerank reorders the semantic results with a secondary ranker.

|

|

126

|

-

|

|

147

|

+

Rerank reorders the semantic results with a secondary ranker. Candidate sizing uses

|

|

148

|

+

`clamp(int(--top * 2), 20, 150)`.

|

|

127

149

|

|

|

128

150

|

Recommended defaults:

|

|

129

151

|

- Keep `off` unless you want extra precision.

|

|

@@ -230,20 +252,10 @@ Re-running `vexor index` only re-embeds changed files; >50% changes trigger full

|

|

|

230

252

|

| `--no-respect-gitignore` | Include gitignored files |

|

|

231

253

|

| `--format porcelain` | Script-friendly TSV output |

|

|

232

254

|

| `--format porcelain-z` | NUL-delimited output |

|

|

255

|

+

| `--no-cache` | In-memory only; do not read/write index cache |

|

|

233

256

|

|

|

234

257

|

Porcelain output fields: `rank`, `similarity`, `path`, `chunk_index`, `start_line`, `end_line`, `preview` (line fields are `-` when unavailable).

|

|

235

258

|

|

|

236

|

-

## AI Agent Skill

|

|

237

|

-

|

|

238

|

-

This repo includes a skill for AI agents to use Vexor effectively:

|

|

239

|

-

|

|

240

|

-

```bash

|

|

241

|

-

vexor install --skills claude # Claude Code

|

|

242

|

-

vexor install --skills codex # Codex

|

|

243

|

-

```

|

|

244

|

-

|

|

245

|

-

Skill source: [`plugins/vexor/skills/vexor-cli`](https://github.com/scarletkc/vexor/raw/refs/heads/main/plugins/vexor/skills/vexor-cli/SKILL.md)

|

|

246

|

-

|

|

247

259

|

## Documentation

|

|

248

260

|

|

|

249

261

|

See [docs](https://github.com/scarletkc/vexor/tree/main/docs) for more details.

|

|
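The porcelain field list in the README hunk above (`rank`, `similarity`, `path`, `chunk_index`, `start_line`, `end_line`, `preview`, with `-` for unavailable line fields) suggests a parser along the following lines. This is a sketch under stated assumptions: one tab-separated record per line, fields in the listed order, no header row, and an integer `chunk_index`. The names `PorcelainHit` and `parse_porcelain_line` and the sample record are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Field order as listed in the README; the TSV layout itself is an assumption.
FIELDS = ("rank", "similarity", "path", "chunk_index",
          "start_line", "end_line", "preview")


@dataclass
class PorcelainHit:
    rank: int
    similarity: float
    path: str
    chunk_index: int
    start_line: Optional[int]
    end_line: Optional[int]
    preview: str


def _line_number(value: str) -> Optional[int]:
    # The README says line fields are "-" when unavailable.
    return None if value == "-" else int(value)


def parse_porcelain_line(line: str) -> PorcelainHit:
    """Parse one assumed tab-separated record of `--format porcelain` output."""
    # Limit the split so tabs inside the preview (if any) stay in the last field.
    parts = line.rstrip("\n").split("\t", len(FIELDS) - 1)
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    rank, similarity, path, chunk_index, start, end, preview = parts
    return PorcelainHit(
        rank=int(rank),
        similarity=float(similarity),
        path=path,
        chunk_index=int(chunk_index),
        start_line=_line_number(start),
        end_line=_line_number(end),
        preview=preview,
    )


if __name__ == "__main__":
    # Hypothetical record, loosely modeled on the README's example output.
    sample = "3\t0.809\t./tests/test_config_loader.py\t0\t-\t-\ttests for config loader"
    print(parse_porcelain_line(sample))
```

For `--format porcelain-z` the README only states that output is NUL-delimited; the exact record layout is not shown in this diff, so it is not covered here.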

@@ -31,6 +31,7 @@ vexor "<QUERY>" [--path <ROOT>] [--mode <MODE>] [--ext .py,.md] [--exclude-patte

|

|

|

31

31

|

- `--no-respect-gitignore`: include ignored files

|

|

32

32

|

- `--no-recursive`: only the top directory

|

|

33

33

|

- `--format`: `rich` (default) or `porcelain`/`porcelain-z` for scripts

|

|

34

|

+

- `--no-cache`: in-memory only, do not read/write index cache

|

|

34

35

|

|

|

35

36

|

## Modes (pick the cheapest that works)

|

|

36

37

|

|

|

@@ -2,7 +2,7 @@

|

|

|

2

2

|

|

|

3

3

|

from __future__ import annotations

|

|

4

4

|

|

|

5

|

-

from .api import VexorError, clear_index, index, search

|

|

5

|

+

from .api import VexorError, clear_index, index, search, set_config_json, set_data_dir

|

|

6

6

|

|

|

7

7

|

__all__ = [

|

|

8

8

|

"__version__",

|

|

@@ -11,9 +11,11 @@ __all__ = [

|

|

|

11

11

|

"get_version",

|

|

12

12

|

"index",

|

|

13

13

|

"search",

|

|

14

|

+

"set_config_json",

|

|

15

|

+

"set_data_dir",

|

|

14

16

|

]

|

|

15

17

|

|

|

16

|

-

__version__ = "0.

|

|

18

|

+

__version__ = "0.21.0"

|

|

17

19

|

|

|

18

20

|

|

|

19

21

|

def get_version() -> str:

|

|

@@ -4,6 +4,7 @@ from __future__ import annotations

|

|

|

4

4

|

|

|

5

5

|

from dataclasses import dataclass

|

|

6

6

|

from pathlib import Path

|

|

7

|

+

from collections.abc import Mapping

|

|

7

8

|

from typing import Sequence

|

|

8

9

|

|

|

9

10

|

from .config import (

|

|

@@ -13,9 +14,12 @@ from .config import (

|

|

|

13

14

|

Config,

|

|

14

15

|

RemoteRerankConfig,

|

|

15

16

|

SUPPORTED_RERANKERS,

|

|

17

|

+

config_from_json,

|

|

16

18

|

load_config,

|

|

17

19

|

resolve_default_model,

|

|

20

|

+

set_config_dir,

|

|

18

21

|

)

|

|

22

|

+

from .cache import set_cache_dir

|

|

19

23

|

from .modes import available_modes, get_strategy

|

|

20

24

|

from .services.index_service import IndexResult, build_index, clear_index_entries

|

|

21

25

|

from .services.search_service import SearchRequest, SearchResponse, perform_search

|

|

@@ -38,6 +42,8 @@ class RuntimeSettings:

|

|

|

38

42

|

model_name: str

|

|

39

43

|

batch_size: int

|

|

40

44

|

embed_concurrency: int

|

|

45

|

+

extract_concurrency: int

|

|

46

|

+

extract_backend: str

|

|

41

47

|

base_url: str | None

|

|

42

48

|

api_key: str | None

|

|

43

49

|

local_cuda: bool

|

|

@@ -47,6 +53,30 @@ class RuntimeSettings:

|

|

|

47

53

|

remote_rerank: RemoteRerankConfig | None

|

|

48

54

|

|

|

49

55

|

|

|

56

|

+

_RUNTIME_CONFIG: Config | None = None

|

|

57

|

+

|

|

58

|

+

|

|

59

|

+

def set_data_dir(path: Path | str | None) -> None:

|

|

60

|

+

"""Set the base directory for config and cache data."""

|

|

61

|

+

set_config_dir(path)

|

|

62

|

+

set_cache_dir(path)

|

|

63

|

+

|

|

64

|

+

|

|

65

|

+

def set_config_json(

|

|

66

|

+

payload: Mapping[str, object] | str | None, *, replace: bool = False

|

|

67

|

+

) -> None:

|

|

68

|

+

"""Set in-memory config for API calls from a JSON string or mapping."""

|

|

69

|

+

global _RUNTIME_CONFIG

|

|

70

|

+

if payload is None:

|

|

71

|

+

_RUNTIME_CONFIG = None

|

|

72

|

+

return

|

|

73

|

+

base = None if replace else (_RUNTIME_CONFIG or load_config())

|

|

74

|

+

try:

|

|

75

|

+

_RUNTIME_CONFIG = config_from_json(payload, base=base)

|

|

76

|

+

except ValueError as exc:

|

|

77

|

+

raise VexorError(str(exc)) from exc

|

|

78

|

+

|

|

79

|

+

|

|

50

80

|

def search(

|

|

51

81

|

query: str,

|

|

52

82

|

*,

|

|
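The `set_config_json` function added in this hunk keeps a module-level runtime config, resets it when the payload is `None`, and otherwise builds on the current runtime config (or the loaded config) unless `replace=True`. The sketch below mimics that control flow with plain dictionaries. It is a simplified stand-in, not vexor's implementation: `DEFAULTS`, `load_from_disk`, `set_runtime_config`, `get_runtime_config`, and `ConfigError` are hypothetical names, and the key-by-key merge is an assumption about what `config_from_json(payload, base=base)` does, including that `base=None` means starting from defaults.

```python
import json
from collections.abc import Mapping
from typing import Optional, Union

# Hypothetical defaults; the README names openai and auto as the defaults.
DEFAULTS = {"provider": "openai", "extract_backend": "auto"}

_runtime_config: Optional[dict] = None


class ConfigError(Exception):
    """Stand-in for VexorError."""


def load_from_disk() -> dict:
    """Stand-in for load_config(); returns fixed values for the demo."""
    return {"provider": "gemini", "extract_backend": "thread"}


def set_runtime_config(
    payload: Union[Mapping, str, None], *, replace: bool = False
) -> None:
    """Mimic the control flow of set_config_json with plain dicts."""
    global _runtime_config
    if payload is None:
        _runtime_config = None  # drop the in-memory config entirely
        return
    try:
        updates = json.loads(payload) if isinstance(payload, str) else dict(payload)
    except ValueError as exc:  # json.JSONDecodeError subclasses ValueError
        raise ConfigError(str(exc)) from exc
    # replace=True ignores any existing state (assumed: start from defaults).
    base = dict(DEFAULTS) if replace else dict(_runtime_config or load_from_disk())
    base.update(updates)
    _runtime_config = base


def get_runtime_config() -> Optional[dict]:
    return _runtime_config


if __name__ == "__main__":
    set_runtime_config({"extract_backend": "process"})
    print(get_runtime_config())
    set_runtime_config('{"provider": "custom"}', replace=True)
    print(get_runtime_config())
```

The point of the `replace` flag in this reading is that successive calls accumulate overrides by default, while `replace=True` gives a clean slate that does not depend on earlier calls or on the on-disk config.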

@@ -62,11 +92,16 @@ def search(

|

|

|

62

92

|

model: str | None = None,

|

|

63

93

|

batch_size: int | None = None,

|

|

64

94

|

embed_concurrency: int | None = None,

|

|

95

|

+

extract_concurrency: int | None = None,

|

|

96

|

+

extract_backend: str | None = None,

|

|

65

97

|

base_url: str | None = None,

|

|

66

98

|

api_key: str | None = None,

|

|

67

99

|

local_cuda: bool | None = None,

|

|

68

100

|

auto_index: bool | None = None,

|

|

69

101

|

use_config: bool = True,

|

|

102

|

+

config: Config | Mapping[str, object] | str | None = None,

|

|

103

|

+

temporary_index: bool = False,

|

|

104

|

+

no_cache: bool = False,

|

|

70

105

|

) -> SearchResponse:

|

|

71

106

|

"""Run a semantic search and return ranked results."""

|

|

72

107

|

|

|

@@ -90,11 +125,15 @@ def search(

|

|

|

90

125

|

model=model,

|

|

91

126

|

batch_size=batch_size,

|

|

92

127

|

embed_concurrency=embed_concurrency,

|

|

128

|

+

extract_concurrency=extract_concurrency,

|

|

129

|

+

extract_backend=extract_backend,

|

|

93

130

|

base_url=base_url,

|

|

94

131

|

api_key=api_key,

|

|

95

132

|

local_cuda=local_cuda,

|

|

96

133

|

auto_index=auto_index,

|

|

97

134

|

use_config=use_config,

|

|

135

|

+

runtime_config=_RUNTIME_CONFIG,

|

|

136

|

+

config_override=config,

|

|

98

137

|

)

|

|

99

138

|

|

|

100

139

|

request = SearchRequest(

|

|

@@ -108,6 +147,8 @@ def search(

|

|

|

108

147

|

model_name=settings.model_name,

|

|

109

148

|

batch_size=settings.batch_size,

|

|

110

149

|

embed_concurrency=settings.embed_concurrency,

|

|

150

|

+

extract_concurrency=settings.extract_concurrency,

|

|

151

|

+

extract_backend=settings.extract_backend,

|

|

111

152

|

provider=settings.provider,

|

|

112

153

|

base_url=settings.base_url,

|

|

113

154

|

api_key=settings.api_key,

|

|

@@ -115,6 +156,8 @@ def search(

|

|

|

115

156

|

exclude_patterns=normalized_excludes,

|

|

116

157

|

extensions=normalized_exts,

|

|

117

158

|

auto_index=settings.auto_index,

|

|

159

|

+

temporary_index=temporary_index,

|

|

160

|

+

no_cache=no_cache,

|

|

118

161

|

rerank=settings.rerank,

|

|

119

162

|

flashrank_model=settings.flashrank_model,

|

|

120

163

|

remote_rerank=settings.remote_rerank,

|

|

@@ -135,10 +178,13 @@ def index(

|

|

|

135

178

|

model: str | None = None,

|

|

136

179

|

batch_size: int | None = None,

|

|

137

180

|

embed_concurrency: int | None = None,

|

|

181

|

+

extract_concurrency: int | None = None,

|

|

182

|

+

extract_backend: str | None = None,

|

|

138

183

|

base_url: str | None = None,

|

|

139

184

|

api_key: str | None = None,

|

|

140

185

|

local_cuda: bool | None = None,

|

|

141

186

|

use_config: bool = True,

|

|

187

|

+

config: Config | Mapping[str, object] | str | None = None,

|

|

142

188

|

) -> IndexResult:

|

|

143

189

|

"""Build or refresh the index for the given directory."""

|

|

144

190

|

|

|

@@ -154,11 +200,15 @@ def index(

|

|

|

154

200

|

model=model,

|

|

155

201

|

batch_size=batch_size,

|

|

156

202

|

embed_concurrency=embed_concurrency,

|

|

203

|

+

extract_concurrency=extract_concurrency,

|

|

204

|

+

extract_backend=extract_backend,

|

|

157

205

|

base_url=base_url,

|

|

158

206

|

api_key=api_key,

|

|

159

207

|

local_cuda=local_cuda,

|

|

160

208

|

auto_index=None,

|

|

161

209

|

use_config=use_config,

|

|

210

|

+

runtime_config=_RUNTIME_CONFIG,

|

|

211

|

+

config_override=config,

|

|

162

212

|

)

|

|

163

213

|

|

|

164

214

|

return build_index(

|

|

@@ -170,6 +220,8 @@ def index(

|

|

|

170

220

|

model_name=settings.model_name,

|

|

171

221

|

batch_size=settings.batch_size,

|

|

172

222

|

embed_concurrency=settings.embed_concurrency,

|

|

223

|

+

extract_concurrency=settings.extract_concurrency,

|

|

224

|

+

extract_backend=settings.extract_backend,

|

|

173

225

|

provider=settings.provider,

|

|

174

226

|

base_url=settings.base_url,

|

|

175

227

|

api_key=settings.api_key,

|

|

@@ -220,6 +272,8 @@ def _validate_mode(mode: str) -> str:

|

|

|

220

272

|

return mode

|

|

221

273

|

|

|

222

274

|

|

|

275

|

+

|

|

276

|

+

|

|

223

277

|

def _normalize_extensions(values: Sequence[str] | str | None) -> tuple[str, ...]:

|

|

224

278

|

return normalize_extensions(_coerce_iterable(values))

|

|

225

279

|

|

|

@@ -242,13 +296,23 @@ def _resolve_settings(

|

|

|

242

296

|

model: str | None,

|

|

243

297

|

batch_size: int | None,

|

|

244

298

|

embed_concurrency: int | None,

|

|

299

|

+

extract_concurrency: int | None,

|

|

300

|

+

extract_backend: str | None,

|

|

245

301

|

base_url: str | None,

|

|

246

302

|

api_key: str | None,

|

|

247

303

|

local_cuda: bool | None,

|

|

248

304

|

auto_index: bool | None,

|

|

249

305

|

use_config: bool,

|

|

306

|

+

runtime_config: Config | None = None,

|

|

307

|

+

config_override: Config | Mapping[str, object] | str | None = None,

|

|

250

308

|

) -> RuntimeSettings:

|

|

251

|

-

config =

|

|

309

|

+

config = (

|

|

310

|

+

runtime_config if (use_config and runtime_config is not None) else None

|

|

311

|

+

)

|

|

312

|

+

if config is None:

|

|

313

|

+

config = load_config() if use_config else Config()

|

|

314

|

+

if config_override is not None:

|

|

315

|

+

config = _apply_config_override(config, config_override)

|

|

252

316

|

provider_value = (provider or config.provider or DEFAULT_PROVIDER).lower()

|

|

253

317

|

rerank_value = (config.rerank or DEFAULT_RERANK).strip().lower()

|

|

254

318

|

if rerank_value not in SUPPORTED_RERANKERS:

|

|
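The new opening of `_resolve_settings` in this hunk picks a base config in a fixed order: the runtime config if `use_config` is true and one is set, otherwise the loaded config (or a default `Config()` when `use_config` is false), with a per-call `config_override` applied last. The sketch below reproduces that order with a two-field stand-in. The `Config` dataclass, `load_config` body, and `resolve_config` name are hypothetical, the mapping merge via `dataclasses.replace` is an assumption about `config_from_json`, and JSON-string overrides are omitted for brevity.

```python
from collections.abc import Mapping
from dataclasses import dataclass, replace
from typing import Optional, Union


@dataclass(frozen=True)
class Config:
    """Simplified stand-in for vexor.config.Config (two fields only)."""
    provider: str = "openai"
    extract_concurrency: int = 1  # hypothetical default


def load_config() -> Config:
    """Stand-in for reading the on-disk config."""
    return Config(provider="gemini", extract_concurrency=2)


def resolve_config(
    *,
    use_config: bool,
    runtime_config: Optional[Config] = None,
    config_override: Union[Config, Mapping[str, object], None] = None,
) -> Config:
    # 1. The runtime config wins, but only when use_config is true.
    config = runtime_config if (use_config and runtime_config is not None) else None
    # 2. Otherwise fall back to the loaded config, or to bare defaults.
    if config is None:
        config = load_config() if use_config else Config()
    # 3. A per-call override is applied last.
    if config_override is not None:
        if isinstance(config_override, Config):
            return config_override  # a full Config replaces the base outright
        config = replace(config, **config_override)  # assumed field-wise merge
    return config


if __name__ == "__main__":
    print(resolve_config(use_config=True))
    print(resolve_config(use_config=False, runtime_config=Config(provider="local")))
    print(resolve_config(use_config=True, config_override={"extract_concurrency": 4}))
```

One consequence visible in the diff itself: with `use_config=False` the runtime config is skipped along with the on-disk config, but a per-call `config` argument is still applied.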

@@ -265,11 +329,21 @@ def _resolve_settings(

|

|

|

265

329

|

embed_value = (

|

|

266

330

|

embed_concurrency if embed_concurrency is not None else config.embed_concurrency

|

|

267

331

|

)

|

|

332

|

+

extract_value = (

|

|

333

|

+

extract_concurrency

|

|

334

|

+

if extract_concurrency is not None

|

|

335

|

+

else config.extract_concurrency

|

|

336

|

+

)

|

|

337

|

+

extract_backend_value = (

|

|

338

|

+

extract_backend if extract_backend is not None else config.extract_backend

|

|

339

|

+

)

|

|

268

340

|

return RuntimeSettings(

|

|

269

341

|

provider=provider_value,

|

|

270

342

|

model_name=model_name,

|

|

271

343

|

batch_size=batch_value,

|

|

272

344

|

embed_concurrency=embed_value,

|

|

345

|

+

extract_concurrency=extract_value,

|

|

346

|

+

extract_backend=extract_backend_value,

|

|

273

347

|

base_url=base_url if base_url is not None else config.base_url,

|

|

274

348

|

api_key=api_key if api_key is not None else config.api_key,

|

|

275

349

|

local_cuda=bool(local_cuda if local_cuda is not None else config.local_cuda),

|

|
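The `embed_value`, `extract_value`, and `extract_backend_value` expressions in this hunk all use an explicit `is not None` check rather than `or`. A short sketch of why that distinction can matter, using hypothetical helper names `pick` and `pick_with_or`: falsy but meaningful arguments such as `0` survive the `is not None` form and are silently replaced by the `or` form. Whether any of these particular settings accepts `0` is not shown in this hunk; the README does document `0` as a meaningful batch size.

```python
from typing import Optional, TypeVar

T = TypeVar("T")


def pick(explicit: Optional[T], fallback: T) -> T:
    """Per-call argument wins unless it was left as None (pattern from the diff)."""
    return explicit if explicit is not None else fallback


def pick_with_or(explicit, fallback):
    """Tempting shortcut that also discards falsy values such as 0 or ''."""
    return explicit or fallback


if __name__ == "__main__":
    print(pick(0, 8))          # explicit 0 is kept
    print(pick_with_or(0, 8))  # explicit 0 is lost to the fallback
    print(pick(None, 8))       # None falls through to the configured value
```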

@@ -278,3 +352,15 @@ def _resolve_settings(

|

|

|

278

352

|

flashrank_model=config.flashrank_model,

|

|

279

353

|

remote_rerank=config.remote_rerank,

|

|

280

354

|

)

|

|

355

|

+

|

|

356

|

+

|

|

357

|

+

def _apply_config_override(

|

|

358

|

+

base: Config,

|

|

359

|

+

override: Config | Mapping[str, object] | str,

|

|

360

|

+

) -> Config:

|

|

361

|

+

if isinstance(override, Config):

|

|

362

|

+

return override

|

|

363

|

+

try:

|

|

364

|

+

return config_from_json(override, base=base)

|

|

365

|

+

except ValueError as exc:

|

|

366

|

+

raise VexorError(str(exc)) from exc

|