vexor 0.19.0a1__tar.gz → 0.20.0__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {vexor-0.19.0a1 → vexor-0.20.0}/PKG-INFO +28 -18

- {vexor-0.19.0a1 → vexor-0.20.0}/README.md +27 -17

- {vexor-0.19.0a1 → vexor-0.20.0}/plugins/vexor/.claude-plugin/plugin.json +1 -1

- {vexor-0.19.0a1 → vexor-0.20.0}/plugins/vexor/skills/vexor-cli/SKILL.md +1 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/__init__.py +4 -2

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/api.py +61 -1

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/cache.py +13 -1

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/cli.py +25 -5

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/config.py +186 -1

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/index_service.py +154 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/search_service.py +156 -12

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/text.py +4 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/.gitignore +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/LICENSE +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/gui/README.md +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/plugins/vexor/README.md +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/plugins/vexor/skills/vexor-cli/references/install-vexor.md +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/pyproject.toml +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/__main__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/modes.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/output.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/providers/__init__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/providers/gemini.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/providers/local.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/providers/openai.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/search.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/__init__.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/cache_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/config_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/content_extract_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/init_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/js_parser.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/keyword_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/skill_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/services/system_service.py +0 -0

- {vexor-0.19.0a1 → vexor-0.20.0}/vexor/utils.py +0 -0

|

@@ -1,6 +1,6 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: vexor

|

|

3

|

-

Version: 0.

|

|

3

|

+

Version: 0.20.0

|

|

4

4

|

Summary: A vector-powered CLI for semantic search over files.

|

|

5

5

|

Project-URL: Repository, https://github.com/scarletkc/vexor

|

|

6

6

|

Author: scarletkc

|

|

@@ -69,9 +69,8 @@ Description-Content-Type: text/markdown

|

|

|

69

69

|

|

|

70

70

|

---

|

|

71

71

|

|

|

72

|

-

**Vexor** is a

|

|

73

|

-

|

|

74

|

-

|

|

72

|

+

**Vexor** is a semantic search engine that builds reusable indexes over files and code.

|

|

73

|

+

It supports configurable embedding and reranking providers, and exposes the same core through a Python API, a CLI tool, and an optional desktop frontend.

|

|

75

74

|

|

|

76

75

|

<video src="https://github.com/user-attachments/assets/4d53eefd-ab35-4232-98a7-f8dc005983a9" controls="controls" style="max-width: 600px;">

|

|

77

76

|

Vexor Demo Video

|

|

@@ -98,18 +97,13 @@ vexor init

|

|

|

98

97

|

```

|

|

99

98

|

The wizard also runs automatically on first use when no config exists.

|

|

100

99

|

|

|

101

|

-

### 1.

|

|

100

|

+

### 1. Search

|

|

102

101

|

```bash

|

|

103

|

-

vexor config

|

|

104

|

-

```

|

|

105

|

-

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

106

|

-

|

|

107

|

-

### 2. Search

|

|

108

|

-

```bash

|

|

109

|

-

vexor "api client config" # defaults to search

|

|

110

|

-

vexor search "api client config" # searches current directory

|

|

102

|

+

vexor "api client config" # defaults to search current directory

|

|

111

103

|

# or explicit path:

|

|

112

104

|

vexor search "api client config" --path ~/projects/demo --top 5

|

|

105

|

+

# in-memory search only:

|

|

106

|

+

vexor search "api client config" --no-cache

|

|

113

107

|

```

|

|

114

108

|

|

|

115

109

|

Vexor auto-indexes on first search. Example output:

|

|

@@ -122,7 +116,7 @@ Vexor semantic file search results

|

|

|

122

116

|

3 0.809 ./tests/test_config_loader.py - tests for config loader

|

|

123

117

|

```

|

|

124

118

|

|

|

125

|

-

###

|

|

119

|

+

### 2. Explicit Index (Optional)

|

|

126

120

|

```bash

|

|

127

121

|

vexor index # indexes current directory

|

|

128

122

|

# or explicit path:

|

|

@@ -130,6 +124,15 @@ vexor index --path ~/projects/demo --mode code

|

|

|

130

124

|

```

|

|

131

125

|

Useful for CI warmup or when `auto_index` is disabled.

|

|

132

126

|

|

|

127

|

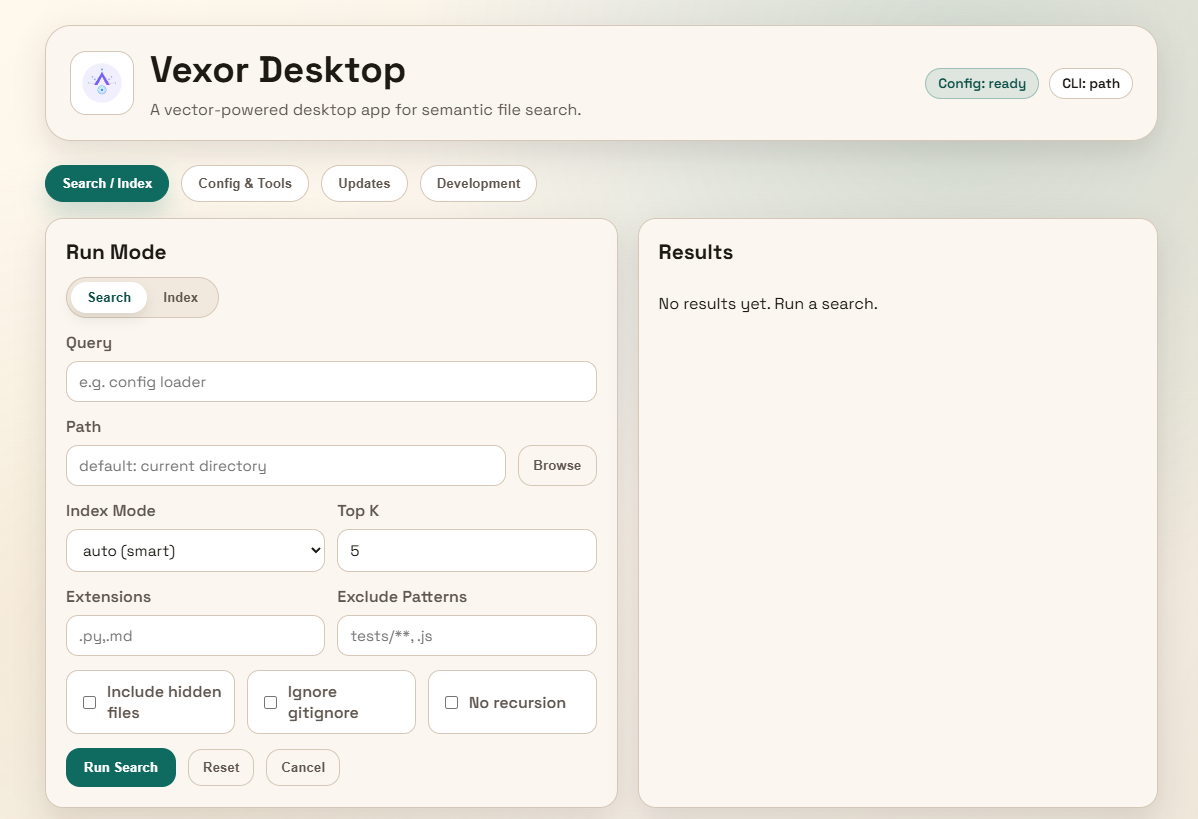

+

## Desktop App (Experimental)

|

|

128

|

+

|

|

129

|

+

> The desktop app is experimental and not actively maintained.

|

|

130

|

+

> It may be unstable. For production use, prefer the CLI.

|

|

131

|

+

|

|

132

|

+

|

|

133

|

+

|

|

134

|

+

Download the desktop app from [releases](https://github.com/scarletkc/vexor/releases).

|

|

135

|

+

|

|

133

136

|

## Python API

|

|

134

137

|

|

|

135

138

|

Vexor can also be imported and used directly from Python:

|

|

@@ -144,8 +147,8 @@ for hit in response.results:

|

|

|

144

147

|

print(hit.path, hit.score)

|

|

145

148

|

```

|

|

146

149

|

|

|

147

|

-

By default it reads `~/.vexor/config.json`.

|

|

148

|

-

|

|

150

|

+

By default it reads `~/.vexor/config.json`. For runtime config overrides, cache

|

|

151

|

+

controls, and per-call options, see [`docs/api/python.md`](https://github.com/scarletkc/vexor/tree/main/docs/api/python.md).

|

|

149

152

|

|

|

150

153

|

## Configuration

|

|

151

154

|

|

|

@@ -175,10 +178,16 @@ FlashRank requires `pip install "vexor[flashrank]"` and caches models under `~/.

|

|

|

175

178

|

|

|

176

179

|

Config stored in `~/.vexor/config.json`.

|

|

177

180

|

|

|

181

|

+

### Configure API Key

|

|

182

|

+

```bash

|

|

183

|

+

vexor config --set-api-key "YOUR_KEY"

|

|

184

|

+

```

|

|

185

|

+

Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.

|

|

186

|

+

|

|

178

187

|

### Rerank

|

|

179

188

|

|

|

180

|

-

Rerank reorders the semantic results with a secondary ranker.

|

|

181

|

-

|

|

189

|

+

Rerank reorders the semantic results with a secondary ranker. Candidate sizing uses

|

|

190

|

+

`clamp(int(--top * 2), 20, 150)`.

|

|

182

191

|

|

|

183

192

|

Recommended defaults:

|

|

184

193

|

- Keep `off` unless you want extra precision.

|

|

@@ -285,6 +294,7 @@ Re-running `vexor index` only re-embeds changed files; >50% changes trigger full

|

|

|

285

294

|

| `--no-respect-gitignore` | Include gitignored files |

|

|

286

295

|

| `--format porcelain` | Script-friendly TSV output |

|

|

287

296

|

| `--format porcelain-z` | NUL-delimited output |

|

|

297

|

+

| `--no-cache` | In-memory only; do not read/write index cache |

|

|

288

298

|

|

|

289

299

|

Porcelain output fields: `rank`, `similarity`, `path`, `chunk_index`, `start_line`, `end_line`, `preview` (line fields are `-` when unavailable).

|

|

290

300

|

|

|

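The new Rerank sentence gives the candidate pool size as `clamp(int(--top * 2), 20, 150)`. Below is a minimal sketch of that formula. It assumes `clamp(x, lo, hi)` means `min(max(x, lo), hi)` and that `--top` is a positive integer. The helper name `rerank_candidate_count` is hypothetical and is not part of Vexor's API.

```python
def clamp(value: int, low: int, high: int) -> int:
    """Bound value to the inclusive range [low, high]."""
    return min(max(value, low), high)


def rerank_candidate_count(top: int) -> int:
    """Hypothetical helper: how many semantic hits are handed to the reranker.

    Follows the README formula clamp(int(--top * 2), 20, 150): fetch twice
    the requested result count, but never fewer than 20 or more than 150.
    """
    return clamp(int(top * 2), 20, 150)


for top in (5, 10, 30, 100):
    print(top, rerank_candidate_count(top))
# 5 20
# 10 20
# 30 60
# 100 150
```

Small `--top` values therefore still give the reranker a pool of 20 to reorder. Large values are capped at 150, which presumably bounds reranking cost.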
````diff
--- vexor-0.19.0a1/README.md
+++ vexor-0.20.0/README.md
@@ -14,9 +14,8 @@

 ---

-**Vexor** is a
-
-
+**Vexor** is a semantic search engine that builds reusable indexes over files and code.
+It supports configurable embedding and reranking providers, and exposes the same core through a Python API, a CLI tool, and an optional desktop frontend.

 <video src="https://github.com/user-attachments/assets/4d53eefd-ab35-4232-98a7-f8dc005983a9" controls="controls" style="max-width: 600px;">
 Vexor Demo Video
@@ -43,18 +42,13 @@ vexor init
 ```
 The wizard also runs automatically on first use when no config exists.

-### 1.
+### 1. Search
 ```bash
-vexor config
-```
-Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.
-
-### 2. Search
-```bash
-vexor "api client config" # defaults to search
-vexor search "api client config" # searches current directory
+vexor "api client config" # defaults to search current directory
 # or explicit path:
 vexor search "api client config" --path ~/projects/demo --top 5
+# in-memory search only:
+vexor search "api client config" --no-cache
 ```

 Vexor auto-indexes on first search. Example output:
@@ -67,7 +61,7 @@ Vexor semantic file search results
 3 0.809 ./tests/test_config_loader.py - tests for config loader
 ```

-###
+### 2. Explicit Index (Optional)
 ```bash
 vexor index # indexes current directory
 # or explicit path:
@@ -75,6 +69,15 @@ vexor index --path ~/projects/demo --mode code
 ```
 Useful for CI warmup or when `auto_index` is disabled.

+## Desktop App (Experimental)
+
+> The desktop app is experimental and not actively maintained.
+> It may be unstable. For production use, prefer the CLI.
+
+
+
+Download the desktop app from [releases](https://github.com/scarletkc/vexor/releases).
+
 ## Python API

 Vexor can also be imported and used directly from Python:
@@ -89,8 +92,8 @@ for hit in response.results:
     print(hit.path, hit.score)
 ```

-By default it reads `~/.vexor/config.json`.
-
+By default it reads `~/.vexor/config.json`. For runtime config overrides, cache
+controls, and per-call options, see [`docs/api/python.md`](https://github.com/scarletkc/vexor/tree/main/docs/api/python.md).

 ## Configuration

@@ -120,10 +123,16 @@ FlashRank requires `pip install "vexor[flashrank]"` and caches models under `~/.

 Config stored in `~/.vexor/config.json`.

+### Configure API Key
+```bash
+vexor config --set-api-key "YOUR_KEY"
+```
+Or via environment: `VEXOR_API_KEY`, `OPENAI_API_KEY`, or `GOOGLE_GENAI_API_KEY`.
+
 ### Rerank

-Rerank reorders the semantic results with a secondary ranker.
-
+Rerank reorders the semantic results with a secondary ranker. Candidate sizing uses
+`clamp(int(--top * 2), 20, 150)`.

 Recommended defaults:
 - Keep `off` unless you want extra precision.
@@ -230,6 +239,7 @@ Re-running `vexor index` only re-embeds changed files; >50% changes trigger full
 | `--no-respect-gitignore` | Include gitignored files |
 | `--format porcelain` | Script-friendly TSV output |
 | `--format porcelain-z` | NUL-delimited output |
+| `--no-cache` | In-memory only; do not read/write index cache |

 Porcelain output fields: `rank`, `similarity`, `path`, `chunk_index`, `start_line`, `end_line`, `preview` (line fields are `-` when unavailable).

````

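Scripts that consume `--format porcelain` can map the documented field list onto a record type. The sketch below makes several assumptions:

- Each result is one line.
- The seven fields appear in the documented order.
- Fields are tab-separated, since the README describes the format as TSV.
- Splitting is capped at six so that a stray tab in the trailing `preview` would not shift the other columns.

`PorcelainHit` and `parse_porcelain_line` are hypothetical names, and the sample line is made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PorcelainHit:
    rank: int
    similarity: float
    path: str
    chunk_index: int
    start_line: Optional[int]  # None when the CLI printed "-"
    end_line: Optional[int]    # None when the CLI printed "-"
    preview: str


def _optional_int(field: str) -> Optional[int]:
    # Per the README, line fields are "-" when unavailable.
    return None if field == "-" else int(field)


def parse_porcelain_line(line: str) -> PorcelainHit:
    # Split at most six times so any tab inside the trailing preview stays intact.
    parts = line.rstrip("\n").split("\t", 6)
    if len(parts) != 7:
        raise ValueError(f"expected 7 tab-separated fields, got {len(parts)}")
    rank, similarity, path, chunk_index, start, end, preview = parts
    return PorcelainHit(
        rank=int(rank),
        similarity=float(similarity),
        path=path,
        chunk_index=int(chunk_index),
        start_line=_optional_int(start),
        end_line=_optional_int(end),
        preview=preview,
    )


# Hypothetical sample line, not captured from a real run.
hit = parse_porcelain_line("1\t0.912\t./src/config.py\t0\t-\t-\tconfig loader\n")
print(hit.path, hit.similarity, hit.start_line)
```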
```diff
--- vexor-0.19.0a1/plugins/vexor/skills/vexor-cli/SKILL.md
+++ vexor-0.20.0/plugins/vexor/skills/vexor-cli/SKILL.md
@@ -31,6 +31,7 @@ vexor "<QUERY>" [--path <ROOT>] [--mode <MODE>] [--ext .py,.md] [--exclude-patte
 - `--no-respect-gitignore`: include ignored files
 - `--no-recursive`: only the top directory
 - `--format`: `rich` (default) or `porcelain`/`porcelain-z` for scripts
+- `--no-cache`: in-memory only, do not read/write index cache

 ## Modes (pick the cheapest that works)

```

```diff
--- vexor-0.19.0a1/vexor/__init__.py
+++ vexor-0.20.0/vexor/__init__.py
@@ -2,7 +2,7 @@

 from __future__ import annotations

-from .api import VexorError, clear_index, index, search
+from .api import VexorError, clear_index, index, search, set_config_json, set_data_dir

 __all__ = [
     "__version__",
@@ -11,9 +11,11 @@ __all__ = [
     "get_version",
     "index",
     "search",
+    "set_config_json",
+    "set_data_dir",
 ]

-__version__ = "0.
+__version__ = "0.20.0"


 def get_version() -> str:
```

```diff
--- vexor-0.19.0a1/vexor/api.py
+++ vexor-0.20.0/vexor/api.py
@@ -4,6 +4,7 @@ from __future__ import annotations

 from dataclasses import dataclass
 from pathlib import Path
+from collections.abc import Mapping
 from typing import Sequence

 from .config import (
@@ -13,9 +14,12 @@ from .config import (
     Config,
     RemoteRerankConfig,
     SUPPORTED_RERANKERS,
+    config_from_json,
     load_config,
     resolve_default_model,
+    set_config_dir,
 )
+from .cache import set_cache_dir
 from .modes import available_modes, get_strategy
 from .services.index_service import IndexResult, build_index, clear_index_entries
 from .services.search_service import SearchRequest, SearchResponse, perform_search
@@ -47,6 +51,30 @@ class RuntimeSettings:
     remote_rerank: RemoteRerankConfig | None


+_RUNTIME_CONFIG: Config | None = None
+
+
+def set_data_dir(path: Path | str | None) -> None:
+    """Set the base directory for config and cache data."""
+    set_config_dir(path)
+    set_cache_dir(path)
+
+
+def set_config_json(
+    payload: Mapping[str, object] | str | None, *, replace: bool = False
+) -> None:
+    """Set in-memory config for API calls from a JSON string or mapping."""
+    global _RUNTIME_CONFIG
+    if payload is None:
+        _RUNTIME_CONFIG = None
+        return
+    base = None if replace else (_RUNTIME_CONFIG or load_config())
+    try:
+        _RUNTIME_CONFIG = config_from_json(payload, base=base)
+    except ValueError as exc:
+        raise VexorError(str(exc)) from exc
+
+
 def search(
     query: str,
     *,
@@ -67,6 +95,9 @@ def search(
     local_cuda: bool | None = None,
     auto_index: bool | None = None,
     use_config: bool = True,
+    config: Config | Mapping[str, object] | str | None = None,
+    temporary_index: bool = False,
+    no_cache: bool = False,
 ) -> SearchResponse:
     """Run a semantic search and return ranked results."""

@@ -95,6 +126,8 @@ def search(
         local_cuda=local_cuda,
         auto_index=auto_index,
         use_config=use_config,
+        runtime_config=_RUNTIME_CONFIG,
+        config_override=config,
     )

     request = SearchRequest(
@@ -115,6 +148,8 @@ def search(
         exclude_patterns=normalized_excludes,
         extensions=normalized_exts,
         auto_index=settings.auto_index,
+        temporary_index=temporary_index,
+        no_cache=no_cache,
         rerank=settings.rerank,
         flashrank_model=settings.flashrank_model,
         remote_rerank=settings.remote_rerank,
@@ -139,6 +174,7 @@ def index(
     api_key: str | None = None,
     local_cuda: bool | None = None,
     use_config: bool = True,
+    config: Config | Mapping[str, object] | str | None = None,
 ) -> IndexResult:
     """Build or refresh the index for the given directory."""

@@ -159,6 +195,8 @@ def index(
         local_cuda=local_cuda,
         auto_index=None,
         use_config=use_config,
+        runtime_config=_RUNTIME_CONFIG,
+        config_override=config,
     )

     return build_index(
@@ -220,6 +258,8 @@ def _validate_mode(mode: str) -> str:
     return mode


+
+
 def _normalize_extensions(values: Sequence[str] | str | None) -> tuple[str, ...]:
     return normalize_extensions(_coerce_iterable(values))

@@ -247,8 +287,16 @@ def _resolve_settings(
     local_cuda: bool | None,
     auto_index: bool | None,
     use_config: bool,
+    runtime_config: Config | None = None,
+    config_override: Config | Mapping[str, object] | str | None = None,
 ) -> RuntimeSettings:
-    config =
+    config = (
+        runtime_config if (use_config and runtime_config is not None) else None
+    )
+    if config is None:
+        config = load_config() if use_config else Config()
+    if config_override is not None:
+        config = _apply_config_override(config, config_override)
     provider_value = (provider or config.provider or DEFAULT_PROVIDER).lower()
     rerank_value = (config.rerank or DEFAULT_RERANK).strip().lower()
     if rerank_value not in SUPPORTED_RERANKERS:
@@ -278,3 +326,15 @@ def _resolve_settings(
         flashrank_model=config.flashrank_model,
         remote_rerank=config.remote_rerank,
     )
+
+
+def _apply_config_override(
+    base: Config,
+    override: Config | Mapping[str, object] | str,
+) -> Config:
+    if isinstance(override, Config):
+        return override
+    try:
+        return config_from_json(override, base=base)
+    except ValueError as exc:
+        raise VexorError(str(exc)) from exc
```

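In `_resolve_settings`, a base config is chosen first and the per-call `config=` argument is applied on top of it. The sketch below restates that order using three stand-ins:

- A stripped-down, hypothetical two-field `Config`; its defaults are illustrative.
- A stubbed `load_config` in place of reading `~/.vexor/config.json`.
- `dataclasses.replace` in place of `config_from_json` for mapping overrides.

It illustrates the control flow in the diff and is not Vexor's code. One consequence is easy to miss: with `use_config=False`, a runtime config set through `set_config_json` is ignored.

```python
from collections.abc import Mapping
from dataclasses import dataclass, replace
from typing import Optional, Union


@dataclass
class Config:
    # Hypothetical two-field stand-in for vexor.config.Config; defaults are illustrative.
    provider: str = "openai"
    model: str = "text-embedding-3-small"


def load_config() -> Config:
    # Stub standing in for reading ~/.vexor/config.json.
    return Config(provider="gemini", model="gemini-embedding-001")


def resolve_config(
    use_config: bool,
    runtime_config: Optional[Config] = None,
    override: Union[Config, Mapping, None] = None,
) -> Config:
    # 1. A runtime config (from set_config_json) is used only when use_config is True.
    config = runtime_config if (use_config and runtime_config is not None) else None
    # 2. Otherwise fall back to the config file, or to plain defaults.
    if config is None:
        config = load_config() if use_config else Config()
    # 3. Per-call override: a Config instance replaces the base outright,
    #    while a mapping only overwrites the fields it names.
    if override is not None:
        if isinstance(override, Config):
            return override
        config = replace(config, **dict(override))
    return config


runtime = Config(provider="local", model="my-local-model")  # placeholder model name
print(resolve_config(True))            # file config
print(resolve_config(True, runtime))   # runtime config wins
print(resolve_config(False, runtime))  # runtime config ignored: defaults
print(resolve_config(True, runtime, {"model": "other-model"}))
```

The real `_apply_config_override` also accepts a JSON string and validates every value through `config_from_json`. This sketch keeps only the ordering of the sources.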
```diff
--- vexor-0.19.0a1/vexor/cache.py
+++ vexor-0.20.0/vexor/cache.py
@@ -14,7 +14,8 @@ import numpy as np

 from .utils import collect_files

-
+DEFAULT_CACHE_DIR = Path(os.path.expanduser("~")) / ".vexor"
+CACHE_DIR = DEFAULT_CACHE_DIR
 CACHE_VERSION = 5
 DB_FILENAME = "index.db"
 EMBED_CACHE_TTL_DAYS = 30
@@ -119,6 +120,17 @@ def ensure_cache_dir() -> Path:
     return CACHE_DIR


+def set_cache_dir(path: Path | str | None) -> None:
+    global CACHE_DIR
+    if path is None:
+        CACHE_DIR = DEFAULT_CACHE_DIR
+        return
+    dir_path = Path(path).expanduser().resolve()
+    if dir_path.exists() and not dir_path.is_dir():
+        raise NotADirectoryError(f"Path is not a directory: {dir_path}")
+    CACHE_DIR = dir_path
+
+
 def cache_db_path() -> Path:
     """Return the absolute path to the shared SQLite cache database."""

```

```diff
--- vexor-0.19.0a1/vexor/cli.py
+++ vexor-0.20.0/vexor/cli.py
@@ -389,6 +389,11 @@ def search(
         "--format",
         help=Messages.HELP_SEARCH_FORMAT,
     ),
+    no_cache: bool = typer.Option(
+        False,
+        "--no-cache",
+        help=Messages.HELP_NO_CACHE,
+    ),
 ) -> None:
     """Run the semantic search."""
     config = load_config()
@@ -440,20 +445,35 @@ def search(
         exclude_patterns=normalized_excludes,
         extensions=normalized_exts,
         auto_index=auto_index,
+        no_cache=no_cache,
         rerank=rerank,
         flashrank_model=flashrank_model,
         remote_rerank=remote_rerank,
     )
     if output_format == SearchOutputFormat.rich:
-
-        if should_index_first:
+        if no_cache:
             console.print(
-                _styled(
+                _styled(
+                    Messages.INFO_SEARCH_RUNNING_NO_CACHE.format(path=directory),
+                    Styles.INFO,
+                )
             )
         else:
-
-
+            should_index_first = (
+                _should_index_before_search(request) if auto_index else False
             )
+            if should_index_first:
+                console.print(
+                    _styled(
+                        Messages.INFO_INDEX_RUNNING.format(path=directory), Styles.INFO
+                    )
+                )
+            else:
+                console.print(
+                    _styled(
+                        Messages.INFO_SEARCH_RUNNING.format(path=directory), Styles.INFO
+                    )
+                )
     try:
         response = perform_search(request)
     except FileNotFoundError:
```

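The reworked `rich` branch of `vexor search` now chooses one of three status messages. The hypothetical helper below restates that choice as a pure function; it is not part of the CLI. It returns the diff's `Messages` constant names as plain strings. It also takes a precomputed `needs_index` flag in place of calling `_should_index_before_search(request)`.

```python
def pick_status_message(no_cache: bool, auto_index: bool, needs_index: bool) -> str:
    """Return the name of the Messages constant the rich output would print.

    `needs_index` stands in for _should_index_before_search(request); in the
    diff that check only runs when --no-cache is off and auto_index is enabled.
    """
    if no_cache:
        # In-memory search: nothing is read from or written to the index cache.
        return "INFO_SEARCH_RUNNING_NO_CACHE"
    should_index_first = needs_index if auto_index else False
    if should_index_first:
        return "INFO_INDEX_RUNNING"
    return "INFO_SEARCH_RUNNING"


print(pick_status_message(no_cache=False, auto_index=True, needs_index=True))
```

In the new code, `should_index_first` is computed as the first statement of the `else` branch. The `_should_index_before_search` probe is therefore skipped entirely when `--no-cache` is passed.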
@@ -5,11 +5,15 @@ from __future__ import annotations

|

|

|

5

5

|

import json

|

|

6

6

|

import os

|

|

7

7

|

from dataclasses import dataclass

|

|

8

|

+

from collections.abc import Mapping

|

|

8

9

|

from pathlib import Path

|

|

9

10

|

from typing import Any, Dict

|

|

10

11

|

from urllib.parse import urlparse, urlunparse

|

|

11

12

|

|

|

12

|

-

|

|

13

|

+

from .text import Messages

|

|

14

|

+

|

|

15

|

+

DEFAULT_CONFIG_DIR = Path(os.path.expanduser("~")) / ".vexor"

|

|

16

|

+

CONFIG_DIR = DEFAULT_CONFIG_DIR

|

|

13

17

|

CONFIG_FILE = CONFIG_DIR / "config.json"

|

|

14

18

|

DEFAULT_MODEL = "text-embedding-3-small"

|

|

15

19

|

DEFAULT_GEMINI_MODEL = "gemini-embedding-001"

|

|

@@ -129,6 +133,38 @@ def flashrank_cache_dir(*, create: bool = True) -> Path:

|

|

|

129

133

|

return cache_dir

|

|

130

134

|

|

|

131

135

|

|

|

136

|

+

def set_config_dir(path: Path | str | None) -> None:

|

|

137

|

+

global CONFIG_DIR, CONFIG_FILE

|

|

138

|

+

if path is None:

|

|

139

|

+

CONFIG_DIR = DEFAULT_CONFIG_DIR

|

|

140

|

+

else:

|

|

141

|

+

dir_path = Path(path).expanduser().resolve()

|

|

142

|

+

if dir_path.exists() and not dir_path.is_dir():

|

|

143

|

+

raise NotADirectoryError(f"Path is not a directory: {dir_path}")

|

|

144

|

+

CONFIG_DIR = dir_path

|

|

145

|

+

CONFIG_FILE = CONFIG_DIR / "config.json"

|

|

146

|

+

|

|

147

|

+

|

|

148

|

+

def config_from_json(

|

|

149

|

+

payload: str | Mapping[str, object], *, base: Config | None = None

|

|

150

|

+

) -> Config:

|

|

151

|

+

"""Return a Config from a JSON string or mapping without saving it."""

|

|

152

|

+

data = _coerce_config_payload(payload)

|

|

153

|

+

config = Config() if base is None else _clone_config(base)

|

|

154

|

+

_apply_config_payload(config, data)

|

|

155

|

+

return config

|

|

156

|

+

|

|

157

|

+

|

|

158

|

+

def update_config_from_json(

|

|

159

|

+

payload: str | Mapping[str, object], *, replace: bool = False

|

|

160

|

+

) -> Config:

|

|

161

|

+

"""Update config from a JSON string or mapping and persist it."""

|

|

162

|

+

base = None if replace else load_config()

|

|

163

|

+

config = config_from_json(payload, base=base)

|

|

164

|

+

save_config(config)

|

|

165

|

+

return config

|

|

166

|

+

|

|

167

|

+

|

|

132

168

|

def set_api_key(value: str | None) -> None:

|

|

133

169

|

config = load_config()

|

|

134

170

|

config.api_key = value

|

|

@@ -281,3 +317,152 @@ def resolve_remote_rerank_api_key(configured: str | None) -> str | None:

|

|

|

281

317

|

if env_key:

|

|

282

318

|

return env_key

|

|

283

319

|

return None

|

|

320

|

+

|

|

321

|

+

|

|

322

|

+

def _coerce_config_payload(payload: str | Mapping[str, object]) -> Mapping[str, object]:

|

|

323

|

+

if isinstance(payload, str):

|

|

324

|

+

try:

|

|

325

|

+

data = json.loads(payload)

|

|

326

|

+

except json.JSONDecodeError as exc:

|

|

327

|

+

raise ValueError(Messages.ERROR_CONFIG_JSON_INVALID) from exc

|

|

328

|

+

elif isinstance(payload, Mapping):

|

|

329

|

+

data = dict(payload)

|

|

330

|

+

else:

|

|

331

|

+

raise ValueError(Messages.ERROR_CONFIG_JSON_INVALID)

|

|

332

|

+

if not isinstance(data, Mapping):

|

|

333

|

+

raise ValueError(Messages.ERROR_CONFIG_JSON_INVALID)

|

|

334

|

+

return data

|

|

335

|

+

|

|

336

|

+

|

|

337

|

+

def _clone_config(config: Config) -> Config:

|

|

338

|

+

remote = config.remote_rerank

|

|

339

|

+

return Config(

|

|

340

|

+

api_key=config.api_key,

|

|

341

|

+

model=config.model,

|

|

342

|

+

batch_size=config.batch_size,

|

|

343

|

+

embed_concurrency=config.embed_concurrency,

|

|

344

|

+

provider=config.provider,

|

|

345

|

+

base_url=config.base_url,

|

|

346

|

+

auto_index=config.auto_index,

|

|

347

|

+

local_cuda=config.local_cuda,

|

|

348

|

+

rerank=config.rerank,

|

|

349

|

+

flashrank_model=config.flashrank_model,

|

|

350

|

+

remote_rerank=(

|

|

351

|

+

None

|

|

352

|

+

if remote is None

|

|

353

|

+

else RemoteRerankConfig(

|

|

354

|

+

base_url=remote.base_url,

|

|

355

|

+

api_key=remote.api_key,

|

|

356

|

+

model=remote.model,

|

|

357

|

+

)

|

|

358

|

+

),

|

|

359

|

+

)

|

|

360

|

+

|

|

361

|

+

|

|

362

|

+

def _apply_config_payload(config: Config, payload: Mapping[str, object]) -> None:

|

|

363

|

+

if "api_key" in payload:

|

|

364

|

+

config.api_key = _coerce_optional_str(payload["api_key"], "api_key")

|

|

365

|

+

if "model" in payload:

|

|

366

|

+

config.model = _coerce_required_str(payload["model"], "model", DEFAULT_MODEL)

|

|

367

|

+

if "batch_size" in payload:

|

|

368

|

+

config.batch_size = _coerce_int(

|

|

369

|

+

payload["batch_size"], "batch_size", DEFAULT_BATCH_SIZE

|

|

370

|

+

)

|

|

371

|

+

if "embed_concurrency" in payload:

|

|

372

|

+

config.embed_concurrency = _coerce_int(

|

|

373

|

+

payload["embed_concurrency"],

|

|

374

|

+

"embed_concurrency",

|

|

375

|

+

DEFAULT_EMBED_CONCURRENCY,

|

|

376

|

+

)

|

|

377

|

+

if "provider" in payload:

|

|

378

|

+

config.provider = _coerce_required_str(

|

|

379

|

+

payload["provider"], "provider", DEFAULT_PROVIDER

|

|

380

|

+

)

|

|

381

|

+

if "base_url" in payload:

|

|

382

|

+

config.base_url = _coerce_optional_str(payload["base_url"], "base_url")

|

|

383

|

+

if "auto_index" in payload:

|

|

384

|

+

config.auto_index = _coerce_bool(payload["auto_index"], "auto_index")

|

|

385

|

+

if "local_cuda" in payload:

|

|

386

|

+

config.local_cuda = _coerce_bool(payload["local_cuda"], "local_cuda")

|

|

387

|

+

if "rerank" in payload:

|

|

388

|

+

config.rerank = _normalize_rerank(payload["rerank"])

|

|

389

|

+

if "flashrank_model" in payload:

|

|

390

|

+

config.flashrank_model = _coerce_optional_str(

|

|

391

|

+

payload["flashrank_model"], "flashrank_model"

|

|

392

|

+

)

|

|

393

|

+

if "remote_rerank" in payload:

|

|

394

|

+

config.remote_rerank = _coerce_remote_rerank(payload["remote_rerank"])

|

|

395

|

+

|

|

396

|

+

|

|

397

|

+

def _coerce_optional_str(value: object, field: str) -> str | None:

|

|

398

|

+

+    if value is None:
+        return None
+    if isinstance(value, str):
+        cleaned = value.strip()
+        return cleaned or None
+    raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))
+
+
+def _coerce_required_str(value: object, field: str, default: str) -> str:
+    if value is None:
+        return default
+    if isinstance(value, str):
+        cleaned = value.strip()
+        return cleaned or default
+    raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))
+
+
+def _coerce_int(value: object, field: str, default: int) -> int:
+    if value is None:
+        return default
+    if isinstance(value, bool):
+        raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))
+    if isinstance(value, int):
+        return value
+    if isinstance(value, float):
+        if value.is_integer():
+            return int(value)
+        raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))
+    if isinstance(value, str):
+        cleaned = value.strip()
+        if not cleaned:
+            return default
+        try:
+            return int(cleaned)
+        except ValueError as exc:
+            raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field)) from exc
+    raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))
+
+
+def _coerce_bool(value: object, field: str) -> bool:
+    if isinstance(value, bool):
+        return value
+    if isinstance(value, int) and value in (0, 1):
+        return bool(value)
+    if isinstance(value, str):
+        cleaned = value.strip().lower()
+        if cleaned in {"true", "1", "yes", "on"}:
+            return True
+        if cleaned in {"false", "0", "no", "off"}:
+            return False
+    raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field=field))

+
+
+def _normalize_rerank(value: object) -> str:
+    if value is None:
+        normalized = DEFAULT_RERANK
+    elif isinstance(value, str):
+        normalized = value.strip().lower() or DEFAULT_RERANK
+    else:
+        raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field="rerank"))
+    if normalized not in SUPPORTED_RERANKERS:
+        normalized = DEFAULT_RERANK
+    return normalized

+
+
+def _coerce_remote_rerank(value: object) -> RemoteRerankConfig | None:
+    if value is None:
+        return None
+    if isinstance(value, Mapping):
+        return _parse_remote_rerank(dict(value))
+    raise ValueError(Messages.ERROR_CONFIG_VALUE_INVALID.format(field="remote_rerank"))

@@ -4,6 +4,7 @@ from __future__ import annotations
 
 import os
 from dataclasses import dataclass, field
+from datetime import datetime, timezone
 from enum import Enum
 from pathlib import Path
 from typing import MutableMapping, Sequence
@@ -51,6 +52,7 @@ def build_index(
     local_cuda: bool = False,
     exclude_patterns: Sequence[str] | None = None,
     extensions: Sequence[str] | None = None,
+    no_cache: bool = False,
 ) -> IndexResult:
     """Create or refresh the cached index for *directory*."""
 
@@ -187,6 +189,7 @@ def build_index(
             exclude_patterns=exclude_patterns,
             extensions=extensions,
             stat_cache=stat_cache,
+            no_cache=no_cache,
         )
 
         line_backfill_targets = missing_line_files - changed_rel_paths - removed_rel_paths
@@ -220,6 +223,7 @@ def build_index(
         searcher=searcher,
         model_name=model_name,
         labels=file_labels,
+        no_cache=no_cache,
     )
     entries = _build_index_entries(payloads, embeddings, directory, stat_cache=stat_cache)
 
@@ -241,6 +245,150 @@ def build_index(
     )
 
 
+def build_index_in_memory(
+    directory: Path,
+    *,
+    include_hidden: bool,
+    respect_gitignore: bool = True,
+    mode: str,
+    recursive: bool,
+    model_name: str,
+    batch_size: int,
+    embed_concurrency: int = DEFAULT_EMBED_CONCURRENCY,
+    provider: str,
+    base_url: str | None,
+    api_key: str | None,
+    local_cuda: bool = False,
+    exclude_patterns: Sequence[str] | None = None,
+    extensions: Sequence[str] | None = None,
+    no_cache: bool = False,
+) -> tuple[list[Path], np.ndarray, dict]:
+    """Build an index in memory without writing to disk."""
+
+    from ..search import VexorSearcher  # local import
+    from ..utils import collect_files  # local import
+
+    files = collect_files(
+        directory,
+        include_hidden=include_hidden,
+        recursive=recursive,
+        extensions=extensions,
+        exclude_patterns=exclude_patterns,
+        respect_gitignore=respect_gitignore,
+    )
+    if not files:
+        empty = np.empty((0, 0), dtype=np.float32)
+        metadata = {
+            "index_id": None,
+            "version": CACHE_VERSION,
+            "generated_at": datetime.now(timezone.utc).isoformat(),
+            "root": str(directory),
+            "model": model_name,
+            "include_hidden": include_hidden,
+            "respect_gitignore": respect_gitignore,
+            "recursive": recursive,
+            "mode": mode,
+            "dimension": 0,
+            "exclude_patterns": tuple(exclude_patterns or ()),
+            "extensions": tuple(extensions or ()),
+            "files": [],
+            "chunks": [],
+        }
+        return [], empty, metadata
+
+    stat_cache: dict[Path, os.stat_result] = {}
+    strategy = get_strategy(mode)
+    searcher = VexorSearcher(
+        model_name=model_name,
+        batch_size=batch_size,
+        embed_concurrency=embed_concurrency,
+        provider=provider,
+        base_url=base_url,
+        api_key=api_key,
+        local_cuda=local_cuda,
+    )
+    payloads = strategy.payloads_for_files(files)
+    if not payloads:
+        empty = np.empty((0, 0), dtype=np.float32)
+        metadata = {
+            "index_id": None,
+            "version": CACHE_VERSION,
+            "generated_at": datetime.now(timezone.utc).isoformat(),
+            "root": str(directory),
+            "model": model_name,
+            "include_hidden": include_hidden,
+            "respect_gitignore": respect_gitignore,
+            "recursive": recursive,
+            "mode": mode,
+            "dimension": 0,
+            "exclude_patterns": tuple(exclude_patterns or ()),
+            "extensions": tuple(extensions or ()),
+            "files": [],
+            "chunks": [],
+        }
+        return [], empty, metadata
+
+    labels = [payload.label for payload in payloads]
+    if no_cache:
+        embeddings = searcher.embed_texts(labels)
+        vectors = np.asarray(embeddings, dtype=np.float32)
+    else:
+        vectors = _embed_labels_with_cache(
+            searcher=searcher,
+            model_name=model_name,
+            labels=labels,
+        )
+    entries = _build_index_entries(
+        payloads,
+        vectors,
+        directory,
+        stat_cache=stat_cache,
+    )
+    paths = [entry.path for entry in entries]
+    file_snapshot: dict[str, dict] = {}
+    chunk_entries: list[dict] = []
+    for entry in entries:
+        rel_path = entry.rel_path
+        chunk_entries.append(
+            {
+                "path": rel_path,
+                "absolute": str(entry.path),
+                "mtime": entry.mtime,
+                "size": entry.size_bytes,
+                "preview": entry.preview,
+                "label_hash": entry.label_hash,
+                "chunk_index": entry.chunk_index,
+                "start_line": entry.start_line,
+                "end_line": entry.end_line,
+            }
+        )
+        if rel_path not in file_snapshot:
+            file_snapshot[rel_path] = {
+                "path": rel_path,
+                "absolute": str(entry.path),
+                "mtime": entry.mtime,
+                "size": entry.size_bytes,
+            }
+
+    metadata = {
+        "index_id": None,
+        "version": CACHE_VERSION,
+        "generated_at": datetime.now(timezone.utc).isoformat(),
+        "root": str(directory),
+        "model": model_name,
+        "include_hidden": include_hidden,
+        "respect_gitignore": respect_gitignore,
+        "recursive": recursive,
+        "mode": mode,
+        "dimension": int(vectors.shape[1]) if vectors.size else 0,
+        "exclude_patterns": tuple(exclude_patterns or ()),
+        "extensions": tuple(extensions or ()),
+        "files": list(file_snapshot.values()),
+        "chunks": chunk_entries,
+    }
+    return paths, vectors, metadata
+
+
 def clear_index_entries(
     directory: Path,
     *,
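
`build_index_in_memory` returns the same pieces a cached index holds (`paths`, a `vectors` matrix with one row per entry, and a `metadata` dict) without writing anything to disk. A caller could rank those rows against a query embedding roughly as follows. This is a hypothetical, dependency-free sketch using cosine similarity over plain lists (for example `vectors.tolist()`); it is not Vexor's own scoring code, and `rank_in_memory` is an illustrative name.

```python
import math
from pathlib import Path


def rank_in_memory(
    paths: list[Path],
    rows: list[list[float]],
    query: list[float],
    top_k: int = 5,
) -> list[tuple[Path, float]]:
    """Return the top_k (path, cosine score) pairs, best first."""
    if not paths:  # mirrors the empty-result early returns in the diff
        return []
    q_norm = math.sqrt(sum(x * x for x in query)) or 1.0
    scored = []
    for path, row in zip(paths, rows):
        if len(row) != len(query):
            raise ValueError("query dimension does not match index dimension")
        r_norm = math.sqrt(sum(x * x for x in row)) or 1.0
        dot = sum(a * b for a, b in zip(row, query))
        scored.append((path, dot / (q_norm * r_norm)))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


paths = [Path("a.py"), Path("b.md"), Path("c.txt")]
rows = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]
for path, score in rank_in_memory(paths, rows, [0.0, 2.0], top_k=2):
    print(path, round(score, 2))
```

Because `paths` is built from `entries` rather than from the file snapshot, a chunked mode can yield several rows for the same file, so the same path may appear more than once in the ranking.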

@@ -367,6 +515,7 @@ def _apply_incremental_update(
     exclude_patterns: Sequence[str] | None,
     extensions: Sequence[str] | None,
     stat_cache: MutableMapping[Path, os.stat_result] | None = None,
+    no_cache: bool = False,
 ) -> Path:
     payloads_to_embed, payloads_to_touch = _split_payloads_by_label(
         changed_payloads,
@@ -387,6 +536,7 @@ def _apply_incremental_update(
         searcher=searcher,
         model_name=model_name,
         labels=labels,
+        no_cache=no_cache,
     )
     changed_entries = _build_index_entries(
         payloads_to_embed,
@@ -424,9 +574,13 @@ def _embed_labels_with_cache(
     searcher,
     model_name: str,
     labels: Sequence[str],
+    no_cache: bool = False,
 ) -> np.ndarray:
     if not labels:
         return np.empty((0, 0), dtype=np.float32)
+    if no_cache:
+        vectors = searcher.embed_texts(labels)
+        return np.asarray(vectors, dtype=np.float32)
     from ..cache import embedding_cache_key, load_embedding_cache, store_embedding_cache
 
     hashes = [embedding_cache_key(label) for label in labels]
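
The `no_cache` branch added to `_embed_labels_with_cache` returns before the cache module is even imported, so nothing is read from or written to the embedding cache. The general read-through-with-bypass pattern can be sketched in isolation as below. The in-memory dict, the SHA-256 keys and the `embed` callable are assumptions for illustration; they do not reflect how `embedding_cache_key` or `store_embedding_cache` are actually implemented.

```python
import hashlib
from typing import Callable, Sequence

Vector = list[float]


def embed_with_cache(
    labels: Sequence[str],
    embed: Callable[[Sequence[str]], list[Vector]],
    cache: dict[str, Vector],
    no_cache: bool = False,
) -> list[Vector]:
    if not labels:
        return []
    if no_cache:
        # Bypass: embed everything and leave the cache untouched.
        return embed(labels)
    keys = [hashlib.sha256(label.encode("utf-8")).hexdigest() for label in labels]
    missing = [i for i, key in enumerate(keys) if key not in cache]
    if missing:
        fresh = embed([labels[i] for i in missing])
        for i, vector in zip(missing, fresh):
            cache[keys[i]] = vector  # write-back happens only on the cached path
    return [cache[key] for key in keys]


calls: list[int] = []


def fake_embed(texts: Sequence[str]) -> list[Vector]:
    calls.append(len(texts))  # record batch sizes to show which labels were embedded
    return [[float(len(t))] for t in texts]


store: dict[str, Vector] = {}
embed_with_cache(["alpha", "beta"], fake_embed, store)
embed_with_cache(["alpha", "gamma"], fake_embed, store)  # only "gamma" is embedded
embed_with_cache(["alpha"], fake_embed, store, no_cache=True)
print(calls, len(store))  # [2, 1, 1] 3
```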

@@ -45,6 +45,8 @@ class SearchRequest:
     exclude_patterns: tuple[str, ...]
     extensions: tuple[str, ...]
     auto_index: bool = True
+    temporary_index: bool = False
+    no_cache: bool = False
     embed_concurrency: int = DEFAULT_EMBED_CONCURRENCY
     rerank: str = DEFAULT_RERANK
     flashrank_model: str | None = None
@@ -105,6 +107,11 @@ def _normalize_by_max(scores: Sequence[float]) -> list[float]:
     return [score / max_score for score in scores]
 
 
+def _resolve_rerank_candidates(top_k: int) -> int:
+    candidate = int(top_k * 2)
+    return max(20, min(candidate, 150))
+
+
 def _bm25_scores(
     query_tokens: Sequence[str],
     documents: Sequence[Sequence[str]],

@@ -336,6 +343,9 @@ def _apply_remote_rerank(
 def perform_search(request: SearchRequest) -> SearchResponse:
     """Execute the semantic search flow and return ranked results."""
 
+    if request.temporary_index or request.no_cache:
+        return _perform_search_with_temporary_index(request)
+
     from ..cache import (  # local import
         embedding_cache_key,
         list_cache_entries,
@@ -381,6 +391,7 @@ def perform_search(request: SearchRequest) -> SearchResponse:
             local_cuda=request.local_cuda,
             exclude_patterns=request.exclude_patterns,
             extensions=request.extensions,
+            no_cache=request.no_cache,
         )
         if result.status == IndexStatus.EMPTY:
             return SearchResponse(
@@ -461,6 +472,7 @@ def perform_search(request: SearchRequest) -> SearchResponse:
             local_cuda=request.local_cuda,
             exclude_patterns=index_excludes,
             extensions=index_extensions,
+            no_cache=request.no_cache,
         )
         if result.status == IndexStatus.EMPTY:
             return SearchResponse(
@@ -542,9 +554,9 @@ def perform_search(request: SearchRequest) -> SearchResponse:
     )
     query_vector = None
     query_hash = None
-    query_text_hash =
+    query_text_hash = None
     index_id = metadata.get("index_id")
-    if index_id is not None:
+    if index_id is not None and not request.no_cache:
         query_hash = query_cache_key(request.query, request.model_name)
         try:
             query_vector = load_query_vector(int(index_id), query_hash)
@@ -554,7 +566,8 @@ def perform_search(request: SearchRequest) -> SearchResponse:
         if query_vector is not None and query_vector.size != file_vectors.shape[1]:
             query_vector = None
 
-    if query_vector is None:
+    if query_vector is None and not request.no_cache:
+        query_text_hash = embedding_cache_key(request.query)
         cached = load_embedding_cache(request.model_name, [query_text_hash])
         query_vector = cached.get(query_text_hash)
         if query_vector is not None and query_vector.size != file_vectors.shape[1]:
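
After this change, `perform_search` looks for a query vector in up to three places: the per-index query cache (`load_query_vector`, only when an `index_id` exists), then the shared embedding cache (`load_embedding_cache`), and only then the embedding model. Both caches are skipped when `request.no_cache` is set, and a cached vector whose size does not match the index dimension is discarded. The sketch below models that order with plain dicts; `resolve_query_vector` and its parameters are hypothetical stand-ins for the real cache functions, and writes to the per-index query cache are left out.

```python
from typing import Callable, Optional

Vector = list[float]


def resolve_query_vector(
    query: str,
    dimension: int,
    query_cache: Optional[dict[str, Vector]],
    embedding_cache: dict[str, Vector],
    embed: Callable[[str], Vector],
    no_cache: bool = False,
) -> tuple[Vector, str]:
    """Return (vector, source), where source names where the vector came from."""
    if not no_cache:
        # 1. Per-index query cache; None models a missing index_id.
        if query_cache is not None:
            hit = query_cache.get(query)
            if hit is not None and len(hit) == dimension:
                return hit, "query_cache"
        # 2. Shared embedding cache, with the same dimension check.
        hit = embedding_cache.get(query)
        if hit is not None and len(hit) == dimension:
            return hit, "embedding_cache"
    # 3. Fall back to the model; store the result only when caching is enabled.
    vector = embed(query)
    if not no_cache:
        embedding_cache[query] = vector
    return vector, "model"


def fake_embed(text: str) -> Vector:
    return [1.0, 0.0]


emb_cache: dict[str, Vector] = {"stale": [1.0, 0.0, 0.0]}  # wrong dimension
print(resolve_query_vector("stale", 2, {}, emb_cache, fake_embed)[1])  # model
print(resolve_query_vector("stale", 2, {}, emb_cache, fake_embed)[1])  # embedding_cache
print(resolve_query_vector("new", 2, None, emb_cache, fake_embed, no_cache=True)[1])  # model
```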

@@ -562,14 +575,22 @@ def perform_search(request: SearchRequest) -> SearchResponse:
 
     if query_vector is None:
         query_vector = searcher.embed_texts([request.query])[0]
-
-
-
-
-
-
-
-
+        if not request.no_cache:
+            if query_text_hash is None:
+                query_text_hash = embedding_cache_key(request.query)
+            try:
+                store_embedding_cache(
+                    model=request.model_name,
+                    embeddings={query_text_hash: query_vector},
+                )
+            except Exception:  # pragma: no cover - best-effort cache storage
+                pass
+    if (
+        not request.no_cache
+        and query_vector is not None
+        and index_id is not None
+        and query_hash is not None
+    ):