ngpt 4.0.2__tar.gz → 4.1.0__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {ngpt-4.0.2 → ngpt-4.1.0}/PKG-INFO +9 -1

- {ngpt-4.0.2 → ngpt-4.1.0}/README.md +8 -0

- ngpt-4.1.0/docs/index.md +74 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/overview.md +29 -19

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage/cli_usage.md +26 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/interactive.py +107 -15

- {ngpt-4.0.2 → ngpt-4.1.0}/pyproject.toml +1 -1

- ngpt-4.0.2/docs/index.md +0 -64

- {ngpt-4.0.2 → ngpt-4.1.0}/.github/banner.svg +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/.github/workflows/aur-publish.yml +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/.github/workflows/python-publish.yml +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/.github/workflows/repo-mirror.yml +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/.gitignore +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/.python-version +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/COMMIT_GUIDELINES.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/CONTRIBUTING.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/LICENSE +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/PKGBUILD +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/CONTRIBUTING.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/LICENSE.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/_config.yml +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/_sass/custom/custom.scss +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/configuration.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/examples/advanced.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/examples/basic.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/examples/role_gallery.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/examples/specialized_tools.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/examples.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/installation.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage/cli_config.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage/gitcommsg.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage/roles.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage/web_search.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/docs/usage.md +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/__init__.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/__main__.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/__init__.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/args.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/config_manager.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/formatters.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/main.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/__init__.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/chat.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/code.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/gitcommsg.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/rewrite.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/shell.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/modes/text.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/renderers.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/roles.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/cli/ui.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/client.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/__init__.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/cli_config.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/config.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/log.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/pipe.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/ngpt/utils/web_search.py +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/icon.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-g.png +0 -0

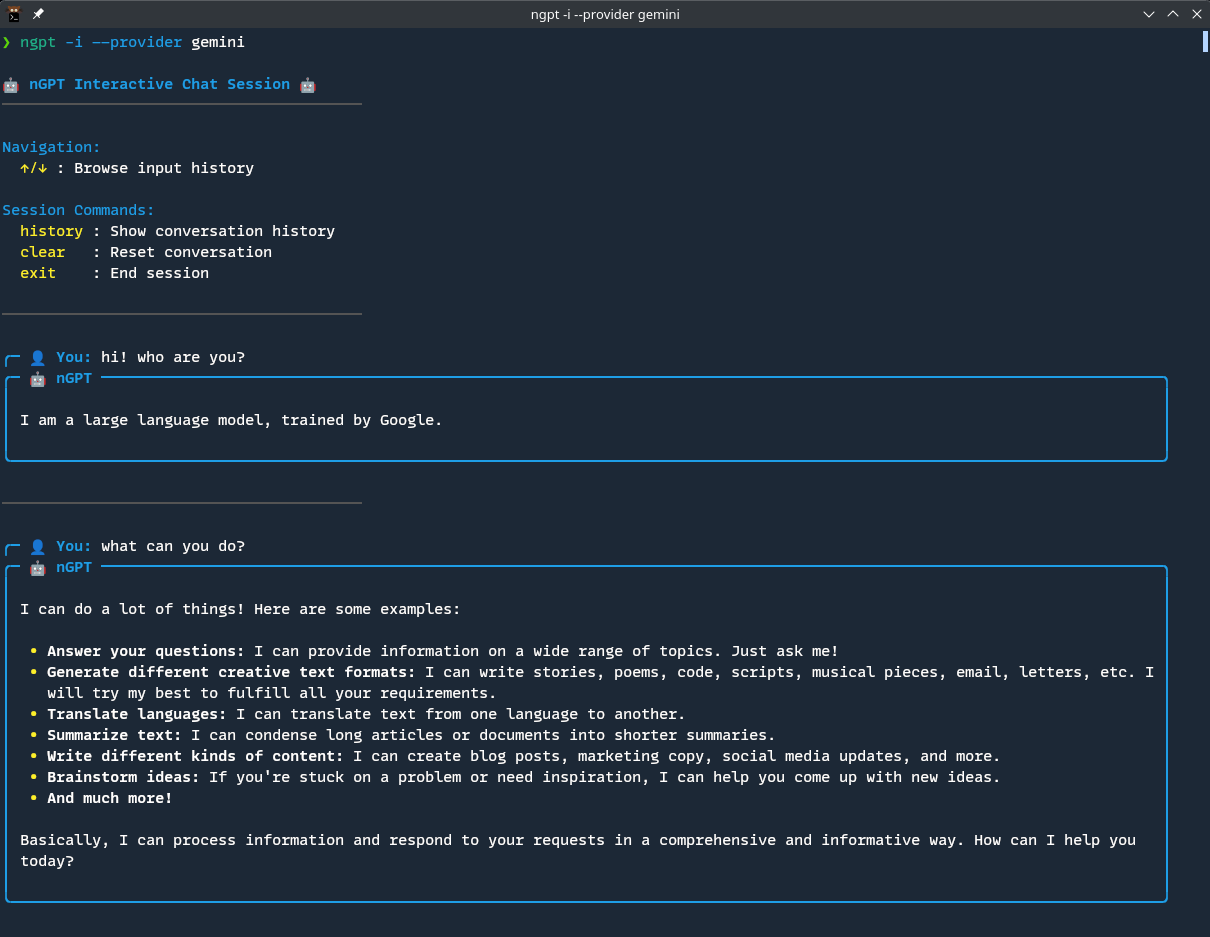

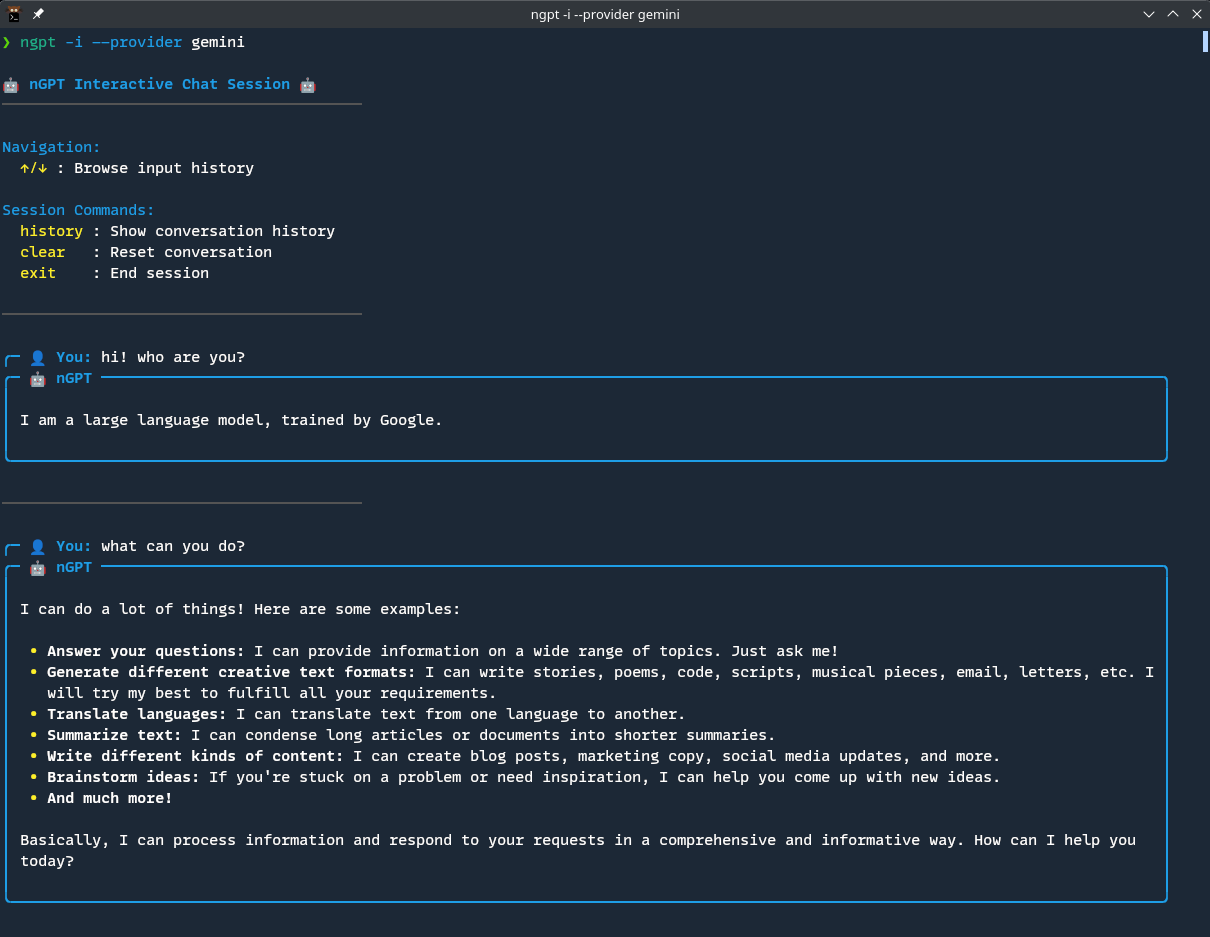

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-i.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-s-c.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-sh-c-a.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-w-self.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/ngpt-w.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/previews/social-preview.png +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/uv.lock +0 -0

- {ngpt-4.0.2 → ngpt-4.1.0}/wiki.md +0 -0

@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: ngpt
-Version: 4.0
+Version: 4.1.0
 Summary: A Swiss army knife for LLMs: A fast, lightweight CLI and interactive chat tool that brings the power of any OpenAI-compatible LLM (OpenAI, Ollama, Groq, Claude, Gemini, etc.) straight to your terminal. rewrite texts or refine code, craft git commit messages, generate and run OS-aware shell commands.
 Project-URL: Homepage, https://github.com/nazdridoy/ngpt
 Project-URL: Repository, https://github.com/nazdridoy/ngpt

@@ -81,6 +81,7 @@ Description-Content-Type: text/markdown
 - 🎭 **System Prompts**: Customize model behavior with custom system prompts
 - 🤖 **Custom Roles**: Create and use reusable AI roles for specialized tasks
 - 📃 **Conversation Logging**: Save your conversations to text files for later reference
+- 💾 **Session Management**: Save, load, and list interactive chat sessions.
 - 🔌 **Modular Architecture**: Well-structured codebase with clean separation of concerns
 - 🔄 **Provider Switching**: Easily switch between different LLM providers with a single parameter
 - 🚀 **Performance Optimized**: Fast response times and minimal resource usage

@@ -138,6 +139,13 @@ python -m ngpt "Tell me about quantum computing"

 # Start an interactive chat session with conversation memory
 ngpt -i
+# Inside interactive mode, you can use commands like:
+# help - Show help menu
+# save - Save the current session
+# load - Load a previous session
+# sessions - List saved sessions
+# clear - Clear the conversation
+# exit - Exit the session

 # Return response without streaming
 ngpt --no-stream "Tell me about quantum computing"

@@ -44,6 +44,7 @@
 - 🎭 **System Prompts**: Customize model behavior with custom system prompts
 - 🤖 **Custom Roles**: Create and use reusable AI roles for specialized tasks
 - 📃 **Conversation Logging**: Save your conversations to text files for later reference
+- 💾 **Session Management**: Save, load, and list interactive chat sessions.
 - 🔌 **Modular Architecture**: Well-structured codebase with clean separation of concerns
 - 🔄 **Provider Switching**: Easily switch between different LLM providers with a single parameter
 - 🚀 **Performance Optimized**: Fast response times and minimal resource usage

@@ -101,6 +102,13 @@ python -m ngpt "Tell me about quantum computing"

 # Start an interactive chat session with conversation memory
 ngpt -i
+# Inside interactive mode, you can use commands like:
+# help - Show help menu
+# save - Save the current session
+# load - Load a previous session
+# sessions - List saved sessions
+# clear - Clear the conversation
+# exit - Exit the session

 # Return response without streaming
 ngpt --no-stream "Tell me about quantum computing"

ngpt-4.1.0/docs/index.md

ADDED

@@ -0,0 +1,74 @@
+---
+layout: default
+title: nGPT Documentation
+nav_order: 1
+permalink: /
+---
+
+# nGPT Documentation
+
+Welcome to the nGPT documentation. This guide will help you get started with nGPT, a Swiss army knife for LLMs that combines a powerful CLI and interactive chatbot in one package.
+
+
+
+
+## What is nGPT?
+
+nGPT is a versatile command-line tool designed to interact with AI language models through various APIs. It provides a seamless interface for generating text, code, shell commands, and more, all from your terminal.
+
+## Getting Started
+
+For a quick start, refer to the [Installation](installation.md) and [CLI Usage](usage/cli_usage.md) guides.
+
+## Key Features
+
+- ✅ **Versatile**: Powerful and easy-to-use CLI tool for various AI tasks
+- 🪶 **Lightweight**: Minimal dependencies with everything you need included
+- 🔄 **API Flexibility**: Works with OpenAI, Ollama, Groq, Claude, Gemini, and any OpenAI-compatible endpoint
+- 💬 **Interactive Chat**: Continuous conversation with memory in modern UI
+- 📊 **Streaming Responses**: Real-time output for better user experience
+- 🔍 **Web Search**: Enhance any model with contextual information from the web, using advanced content extraction to identify the most relevant information from web pages
+- 📥 **Stdin Processing**: Process piped content by using `{}` placeholder in prompts
+- 🎨 **Markdown Rendering**: Beautiful formatting of markdown and code with syntax highlighting
+- ⚡ **Real-time Markdown**: Stream responses with live updating syntax highlighting and formatting
+- ⚙️ **Multiple Configurations**: Cross-platform config system supporting different profiles
+- 💻 **Shell Command Generation**: OS-aware command execution
+- 🧠 **Text Rewriting**: Improve text quality while maintaining original tone and meaning
+- 🧩 **Clean Code Generation**: Output code without markdown or explanations
+- 📝 **Rich Multiline Editor**: Interactive multiline text input with syntax highlighting and intuitive controls
+- 📑 **Git Commit Messages**: AI-powered generation of conventional, detailed commit messages from git diffs
+- 🎭 **System Prompts**: Customize model behavior with custom system prompts
+- 🤖 **Custom Roles**: Create and use reusable AI roles for specialized tasks
+- 📃 **Conversation Logging**: Save your conversations to text files for later reference
+- 💾 **Session Management**: Save, load, and list interactive chat sessions.
+- 🔌 **Modular Architecture**: Well-structured codebase with clean separation of concerns
+- 🔄 **Provider Switching**: Easily switch between different LLM providers with a single parameter
+- 🚀 **Performance Optimized**: Fast response times and minimal resource usage
+
+## Quick Examples
+
+```bash
+# Basic chat
+ngpt "Tell me about quantum computing"
+
+# Interactive chat session
+ngpt -i
+# Inside interactive mode, you can use commands like:
+# help - Show help menu
+# save - Save the current session
+# load - Load a previous session
+# sessions - List saved sessions
+# clear - Clear the conversation
+# exit - Exit the session
+
+# Generate code
+ngpt --code "function to calculate Fibonacci numbers"
+
+# Generate and execute shell commands
+ngpt --shell "find large files in current directory"
+
+# Generate git commit messages
+ngpt --gitcommsg
+```
+
+For more examples and detailed instructions, please refer to the side panel for navigation through the documentation sections.

@@ -15,25 +15,28 @@ nGPT is a Swiss army knife for LLMs: powerful CLI and interactive chatbot in one

 ## Key Features

-- **Versatile**: Powerful and easy-to-use CLI tool for various AI tasks
-- **Lightweight**: Minimal dependencies with everything you need included
-- **API Flexibility**: Works with OpenAI, Ollama, Groq, Claude, Gemini, and any OpenAI-compatible endpoint
-- **Interactive Chat**: Continuous conversation with memory in modern UI
-- **Streaming Responses**: Real-time output for better user experience
-- **Web Search**: Enhance any model with contextual information from the web, using advanced content extraction to identify the most relevant information from web pages
-- **Stdin Processing**: Process piped content by using `{}` placeholder in prompts
-- **Markdown Rendering**: Beautiful formatting of markdown and code with syntax highlighting
-- **Real-time Markdown**: Stream responses with live updating syntax highlighting and formatting
-- **Multiple Configurations**: Cross-platform config system supporting different profiles
-- **Shell Command Generation**: OS-aware command execution
-- **Text Rewriting**: Improve text quality while maintaining original tone and meaning
-- **Clean Code Generation**: Output code without markdown or explanations
-- **Rich Multiline Editor**: Interactive multiline text input with syntax highlighting and intuitive controls
-- **Git Commit Messages**: AI-powered generation of conventional, detailed commit messages from git diffs
-- **System Prompts**: Customize model behavior with custom system prompts
-- **
-- **
-- **
+- ✅ **Versatile**: Powerful and easy-to-use CLI tool for various AI tasks
+- 🪶 **Lightweight**: Minimal dependencies with everything you need included
+- 🔄 **API Flexibility**: Works with OpenAI, Ollama, Groq, Claude, Gemini, and any OpenAI-compatible endpoint
+- 💬 **Interactive Chat**: Continuous conversation with memory in modern UI
+- 📊 **Streaming Responses**: Real-time output for better user experience
+- 🔍 **Web Search**: Enhance any model with contextual information from the web, using advanced content extraction to identify the most relevant information from web pages
+- 📥 **Stdin Processing**: Process piped content by using `{}` placeholder in prompts
+- 🎨 **Markdown Rendering**: Beautiful formatting of markdown and code with syntax highlighting
+- ⚡ **Real-time Markdown**: Stream responses with live updating syntax highlighting and formatting
+- ⚙️ **Multiple Configurations**: Cross-platform config system supporting different profiles
+- 💻 **Shell Command Generation**: OS-aware command execution
+- 🧠 **Text Rewriting**: Improve text quality while maintaining original tone and meaning
+- 🧩 **Clean Code Generation**: Output code without markdown or explanations
+- 📝 **Rich Multiline Editor**: Interactive multiline text input with syntax highlighting and intuitive controls
+- 📑 **Git Commit Messages**: AI-powered generation of conventional, detailed commit messages from git diffs
+- 🎭 **System Prompts**: Customize model behavior with custom system prompts
+- 🤖 **Custom Roles**: Create and use reusable AI roles for specialized tasks
+- 📃 **Conversation Logging**: Save your conversations to text files for later reference
+- 💾 **Session Management**: Save, load, and list interactive chat sessions.
+- 🔌 **Modular Architecture**: Well-structured codebase with clean separation of concerns
+- 🔄 **Provider Switching**: Easily switch between different LLM providers with a single parameter
+- 🚀 **Performance Optimized**: Fast response times and minimal resource usage

 ## Core Modes


@@ -50,6 +53,13 @@ Start an ongoing conversation with memory:
 ```bash
 ngpt -i
 ngpt --interactive
+# Inside interactive mode, you can use commands like:
+# help - Show help menu
+# save - Save the current session
+# load - Load a previous session
+# sessions - List saved sessions
+# clear - Clear the conversation
+# exit - Exit the session
 ```

 ### Code Generation Mode

@@ -151,6 +151,32 @@ This opens a continuous chat session where the AI remembers previous exchanges.
 - Type your messages and press Enter to send
 - Use arrow keys to navigate message history
 - Press Ctrl+C to exit the session
+- Use `help` to see a list of available commands.
+
+#### Session Management
+
+In interactive mode, you can manage your chat sessions with the following commands:
+
+- **`save`**: Saves the current conversation to a timestamped JSON file in the `history` subdirectory of your configuration folder.
+- **`load`**: Lists recent sessions and prompts you to select one to load, restoring the conversation history.
+- **`sessions`**: Displays a list of all saved sessions.
+- **`clear`**: Clears the current conversation history.
+- **`history`**: Shows the full history of the current conversation.
+- **`exit`**: Exits the interactive session.
+- **`help`**: Shows the help menu with all available commands.
+
+Example:
+```
+> save
+Session saved to /home/user/.config/ngpt/history/session_2024-10-26_12-30-00.json
+
+> load
+Saved Sessions:
+[0] session_2024-10-26_12-30-00.json
+[1] session_2024-10-25_18-00-00.json
+Enter the number of the session to load: 0
+Session loaded from ...
+```

 #### Conversation Logging

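The documented `load` example lists the newest session as `[0]`. That ordering follows from the filename pattern used by `save_session()`: the `%Y-%m-%d_%H-%M-%S` timestamp is zero-padded and runs from year down to second, so plain string order is chronological, and `sorted(..., reverse=True)` puts the newest file first. (The release sorts the `Path` objects returned by `glob()`, which within one directory compares by file name.) A minimal sketch of that reasoning; `session_filename` is a hypothetical helper, not part of ngpt:

```python
from datetime import datetime


def session_filename(moment: datetime) -> str:
    # Hypothetical helper mirroring the naming pattern in save_session()
    return f"session_{moment.strftime('%Y-%m-%d_%H-%M-%S')}.json"


saved = [
    session_filename(datetime(2024, 10, 25, 18, 0, 0)),
    session_filename(datetime(2024, 1, 5, 9, 7, 3)),
    session_filename(datetime(2024, 10, 26, 12, 30, 0)),
]

# Zero-padded, most-significant-first fields: string order equals time order,
# so reverse=True yields newest first.
newest_first = sorted(saved, reverse=True)
for index, name in enumerate(newest_first):
    print(f"[{index}] {name}")
```

One consequence visible in the release code: the name has one-second resolution and the file is opened with `"w"`, so two `save` commands issued within the same second target the same path and the second overwrites the first.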

@@ -4,6 +4,9 @@ import traceback
 import threading
 import sys
 import time
+import json
+from datetime import datetime
+from ...utils.config import get_config_dir
 from ..formatters import COLORS
 from ..renderers import prettify_markdown, prettify_streaming_markdown, TERMINAL_RENDER_LOCK
 from ..ui import spinner, get_multiline_input

@@ -50,21 +53,29 @@ def interactive_chat_session(client, web_search=False, no_stream=False, temperat
     # Create a separator line - use a consistent separator length for all lines
     separator_length = min(40, term_width - 10)
     separator = f"{COLORS['gray']}{'─' * separator_length}{COLORS['reset']}"
-
-
-
-
-
-
-
-
-
-
-
-
-print(f" {COLORS['yellow']}
-
-
+
+    def show_help():
+        """Displays the help menu."""
+        print(separator)
+        # Group commands into categories with better formatting
+        print(f"\n{COLORS['cyan']}Navigation:{COLORS['reset']}")
+        print(f" {COLORS['yellow']}↑/↓{COLORS['reset']} : Browse input history")
+
+        print(f"\n{COLORS['cyan']}Session Commands:{COLORS['reset']}")
+        print(f" {COLORS['yellow']}history{COLORS['reset']} : Show conversation history")
+        print(f" {COLORS['yellow']}clear{COLORS['reset']} : Reset conversation")
+        print(f" {COLORS['yellow']}exit{COLORS['reset']} : End session")
+        print(f" {COLORS['yellow']}save{COLORS['reset']} : Save current session")
+        print(f" {COLORS['yellow']}load{COLORS['reset']} : Load a previous session")
+        print(f" {COLORS['yellow']}sessions{COLORS['reset']}: List saved sessions")
+        print(f" {COLORS['yellow']}help{COLORS['reset']} : Show this help message")
+
+        if multiline_enabled:
+            print(f" {COLORS['yellow']}ml{COLORS['reset']} : Open multiline editor")
+
+        print(f"\n{separator}\n")
+
+    show_help()

     # Show logging info if logger is available
     if logger:
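The new `show_help()` hard-codes the gap between each command name and its colon inside every f-string. A minimal sketch of deriving that padding from the longest name instead, assuming plain text without the `COLORS` escape codes (`SESSION_COMMANDS` and `format_help` are illustrative names, not ngpt APIs):

```python
from typing import List, Tuple

# Command/description pairs taken from the 4.1.0 show_help() output
SESSION_COMMANDS: List[Tuple[str, str]] = [
    ("history", "Show conversation history"),
    ("clear", "Reset conversation"),
    ("exit", "End session"),
    ("save", "Save current session"),
    ("load", "Load a previous session"),
    ("sessions", "List saved sessions"),
    ("help", "Show this help message"),
]


def format_help(commands: List[Tuple[str, str]], multiline_enabled: bool = False) -> str:
    rows = list(commands)  # copy so the shared table is never mutated
    if multiline_enabled:
        rows.append(("ml", "Open multiline editor"))
    # Pad every name to the longest one so the colons line up
    width = max(len(name) for name, _ in rows)
    lines = ["Session Commands:"]
    lines += [f"  {name.ljust(width)} : {desc}" for name, desc in rows]
    return "\n".join(lines)


print(format_help(SESSION_COMMANDS, multiline_enabled=True))
```

Appending `ml` only when `multiline_enabled` is true mirrors the conditional line in the release.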

@@ -148,6 +159,71 @@ def interactive_chat_session(client, web_search=False, no_stream=False, temperat
         print(f"\n{COLORS['yellow']}Conversation history cleared.{COLORS['reset']}")
         print(separator) # Add separator for consistency

+    # --- Session Management Functions ---
+
+    def get_history_dir():
+        """Get the history directory, creating it if it doesn't exist."""
+        history_dir = get_config_dir() / "history"
+        history_dir.mkdir(parents=True, exist_ok=True)
+        return history_dir
+
+    def save_session():
+        """Save the current conversation to a JSON file."""
+        history_dir = get_history_dir()
+        timestamp = datetime.now().strftime("%Y-%m-%d_%H-%M-%S")
+        filename = history_dir / f"session_{timestamp}.json"
+
+        with open(filename, "w") as f:
+            json.dump(conversation, f, indent=2)
+
+        print(f"\n{COLORS['green']}Session saved to {filename}{COLORS['reset']}")
+
+    def list_sessions():
+        """List all saved sessions."""
+        history_dir = get_history_dir()
+        sessions = sorted(history_dir.glob("session_*.json"), reverse=True)
+
+        if not sessions:
+            print(f"\n{COLORS['yellow']}No saved sessions found.{COLORS['reset']}")
+            return
+
+        print(f"\n{COLORS['cyan']}{COLORS['bold']}Saved Sessions:{COLORS['reset']}")
+        for i, session_file in enumerate(sessions):
+            print(f" [{i}] {session_file.name}")
+
+    def load_session():
+        """Load a conversation from a saved session file."""
+        nonlocal conversation
+        history_dir = get_history_dir()
+        sessions = sorted(history_dir.glob("session_*.json"), reverse=True)
+
+        if not sessions:
+            print(f"\n{COLORS['yellow']}No saved sessions to load.{COLORS['reset']}")
+            return
+
+        list_sessions()
+
+        try:
+            choice = input("Enter the number of the session to load: ")
+            choice_index = int(choice)
+
+            if 0 <= choice_index < len(sessions):
+                session_file = sessions[choice_index]
+                with open(session_file, "r") as f:
+                    loaded_conversation = json.load(f)
+
+                # Basic validation
+                if isinstance(loaded_conversation, list) and all(isinstance(item, dict) for item in loaded_conversation):
+                    conversation = loaded_conversation
+                    print(f"\n{COLORS['green']}Session loaded from {session_file.name}{COLORS['reset']}")
+                    display_history()
+                else:
+                    print(f"\n{COLORS['red']}Error: Invalid session file format.{COLORS['reset']}")
+            else:
+                print(f"\n{COLORS['red']}Error: Invalid selection.{COLORS['reset']}")
+        except (ValueError, IndexError):
+            print(f"\n{COLORS['red']}Error: Invalid input. Please enter a number from the list.{COLORS['reset']}")
+
     try:
         while True:
             # Get user input
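For experimenting with the session file format outside the chat loop, the sketch below mirrors `save_session()` and `load_session()` as standalone functions that take explicit arguments instead of closing over `conversation` and calling `get_config_dir()`. Two deliberate deviations, both assumptions rather than ngpt behavior: files are written as UTF-8 with `ensure_ascii=False`, and read or decode failures return `None` instead of sharing the menu's `except (ValueError, IndexError)`. In the release, a corrupt file raises `json.JSONDecodeError`, which subclasses `ValueError`, so it is reported as "Invalid input. Please enter a number from the list." The message dictionaries below are hypothetical OpenAI-style entries; the diff does not show the actual `conversation` layout.

```python
import json
import tempfile
from datetime import datetime
from pathlib import Path
from typing import List, Optional


def save_conversation(conversation: List[dict], history_dir: Path) -> Path:
    """Write the message list to history_dir/session_<timestamp>.json."""
    history_dir.mkdir(parents=True, exist_ok=True)
    timestamp = datetime.now().strftime("%Y-%m-%d_%H-%M-%S")
    path = history_dir / f"session_{timestamp}.json"
    # UTF-8 plus ensure_ascii=False keeps non-ASCII text readable in the file;
    # the release uses json.dump's defaults, which write ASCII escapes instead.
    with open(path, "w", encoding="utf-8") as f:
        json.dump(conversation, f, indent=2, ensure_ascii=False)
    return path


def load_conversation(path: Path) -> Optional[List[dict]]:
    """Return the saved message list, or None if it cannot be used."""
    try:
        with open(path, "r", encoding="utf-8") as f:
            data = json.load(f)
    except (OSError, json.JSONDecodeError):
        # File and decode errors are handled here, apart from menu-input errors
        return None
    # Same shape check as the release: a list whose items are all dicts
    if isinstance(data, list) and all(isinstance(item, dict) for item in data):
        return data
    return None


with tempfile.TemporaryDirectory() as tmp:
    history_dir = Path(tmp) / "history"
    # Hypothetical messages; the real conversation layout is not in the diff
    conversation = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Héllo, wörld"},
    ]
    saved_path = save_conversation(conversation, history_dir)
    restored = load_conversation(saved_path)

    broken_path = history_dir / "session_broken.json"
    broken_path.write_text("{not valid json", encoding="utf-8")
    broken = load_conversation(broken_path)

    wrong_shape_path = history_dir / "session_wrong.json"
    wrong_shape_path.write_text('{"role": "user"}', encoding="utf-8")
    wrong_shape = load_conversation(wrong_shape_path)

print(restored == conversation)  # True
print(broken, wrong_shape)  # None None
```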

@@ -188,6 +264,22 @@ def interactive_chat_session(client, web_search=False, no_stream=False, temperat
             if user_input.lower() == 'clear':
                 clear_history()
                 continue
+
+            if user_input.lower() == 'save':
+                save_session()
+                continue
+
+            if user_input.lower() == 'sessions':
+                list_sessions()
+                continue
+
+            if user_input.lower() == 'load':
+                load_session()
+                continue
+
+            if user_input.lower() == 'help':
+                show_help()
+                continue

             if multiline_enabled and user_input.lower() == 'ml':
                 print(f"{COLORS['cyan']}Opening multiline editor. Press Ctrl+D to submit.{COLORS['reset']}")
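Each command check in the main loop compares `user_input.lower()` against a literal and then calls `continue`. If the command set keeps growing, a lookup table is one alternative. The sketch below is a hypothetical refactor, not how ngpt 4.1.0 is written, and it also strips surrounding whitespace, which the visible hunk does not do. Lambdas that record calls stand in for `save_session()`, `list_sessions()`, `load_session()` and `show_help()`.

```python
from typing import Callable, Dict, List


def make_dispatcher(handlers: Dict[str, Callable[[], None]]) -> Callable[[str], bool]:
    """Return a function that runs the matching handler and reports whether one ran."""
    def dispatch(user_input: str) -> bool:
        # strip() is an addition; the release only calls .lower()
        handler = handlers.get(user_input.strip().lower())
        if handler is None:
            return False
        handler()
        return True
    return dispatch


calls: List[str] = []
dispatch = make_dispatcher({
    # Stand-ins for the session functions defined in interactive.py
    "save": lambda: calls.append("save"),
    "sessions": lambda: calls.append("sessions"),
    "load": lambda: calls.append("load"),
    "help": lambda: calls.append("help"),
})

for line in ["SAVE", " load ", "What is a monad?"]:
    if dispatch(line):
        continue  # plays the role of the `continue` after each command check
    calls.append(f"send:{line}")

print(calls)  # ['save', 'load', 'send:What is a monad?']
```

A `True` return replaces the per-command `continue`; unmatched input falls through to normal message handling, as in the loop above.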

@@ -1,6 +1,6 @@
 [project]
 name = "ngpt"
-version = "4.0
+version = "4.1.0"
 description = "A Swiss army knife for LLMs: A fast, lightweight CLI and interactive chat tool that brings the power of any OpenAI-compatible LLM (OpenAI, Ollama, Groq, Claude, Gemini, etc.) straight to your terminal. rewrite texts or refine code, craft git commit messages, generate and run OS-aware shell commands."
 authors = [
     {name = "nazDridoy", email = "nazdridoy399@gmail.com"},

ngpt-4.0.2/docs/index.md

DELETED

@@ -1,64 +0,0 @@
----
-layout: default
-title: nGPT Documentation
-nav_order: 1
-permalink: /
----
-
-# nGPT Documentation
-
-Welcome to the nGPT documentation. This guide will help you get started with nGPT, a Swiss army knife for LLMs that combines a powerful CLI and interactive chatbot in one package.
-
-
-
-
-## What is nGPT?
-
-nGPT is a versatile command-line tool designed to interact with AI language models through various APIs. It provides a seamless interface for generating text, code, shell commands, and more, all from your terminal.
-
-## Getting Started
-
-For a quick start, refer to the [Installation](installation.md) and [CLI Usage](usage/cli_usage.md) guides.
-
-## Key Features
-
-- **Versatile**: Powerful and easy-to-use CLI tool for various AI tasks
-- **Lightweight**: Minimal dependencies with everything you need included
-- **API Flexibility**: Works with OpenAI, Ollama, Groq, Claude, Gemini, and any OpenAI-compatible endpoint
-- **Interactive Chat**: Continuous conversation with memory in modern UI
-- **Streaming Responses**: Real-time output for better user experience
-- **Web Search**: Enhance any model with contextual information from the web
-- **Stdin Processing**: Process piped content by using `{}` placeholder in prompts
-- **Markdown Rendering**: Beautiful formatting of markdown and code with syntax highlighting
-- **Real-time Markdown**: Stream responses with live updating syntax highlighting and formatting
-- **Multiple Configurations**: Cross-platform config system supporting different profiles
-- **Shell Command Generation**: OS-aware command execution
-- **Text Rewriting**: Improve text quality while maintaining original tone and meaning
-- **Clean Code Generation**: Output code without markdown or explanations
-- **Rich Multiline Editor**: Interactive multiline text input with syntax highlighting and intuitive controls
-- **Git Commit Messages**: AI-powered generation of conventional, detailed commit messages from git diffs
-- **System Prompts**: Customize model behavior with custom system prompts
-- **Conversation Logging**: Save your conversations to text files for later reference
-- **Provider Switching**: Easily switch between different LLM providers with a single parameter
-- **Performance Optimized**: Fast response times and minimal resource usage
-
-## Quick Examples
-
-```bash
-# Basic chat
-ngpt "Tell me about quantum computing"
-
-# Interactive chat session
-ngpt -i
-
-# Generate code
-ngpt --code "function to calculate Fibonacci numbers"
-
-# Generate and execute shell commands
-ngpt --shell "find large files in current directory"
-
-# Generate git commit messages
-ngpt --gitcommsg
-```
-
-For more examples and detailed instructions, please refer to the side panel for navigation through the documentation sections.

File without changes
