llms-py 2.0.27__tar.gz → 2.0.29__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {llms_py-2.0.27/llms_py.egg-info → llms_py-2.0.29}/PKG-INFO +32 -9

- {llms_py-2.0.27 → llms_py-2.0.29}/README.md +31 -8

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/llms.json +11 -1

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/main.py +194 -6

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Analytics.mjs +12 -4

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/ChatPrompt.mjs +46 -6

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Main.mjs +18 -3

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/ModelSelector.mjs +20 -2

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/SystemPromptSelector.mjs +21 -1

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/ai.mjs +1 -1

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/app.css +16 -70

- llms_py-2.0.29/llms/ui/lib/servicestack-vue.mjs +37 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/typography.css +4 -2

- {llms_py-2.0.27 → llms_py-2.0.29/llms_py.egg-info}/PKG-INFO +32 -9

- {llms_py-2.0.27 → llms_py-2.0.29}/pyproject.toml +1 -1

- {llms_py-2.0.27 → llms_py-2.0.29}/requirements.txt +1 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/setup.py +1 -1

- llms_py-2.0.27/llms/ui/lib/servicestack-vue.mjs +0 -37

- {llms_py-2.0.27 → llms_py-2.0.29}/LICENSE +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/MANIFEST.in +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/__init__.py +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/__main__.py +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/index.html +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/App.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Avatar.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Brand.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/OAuthSignIn.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/ProviderIcon.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/ProviderStatus.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Recents.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/SettingsDialog.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Sidebar.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/SignIn.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/SystemPromptEditor.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/Welcome.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/fav.svg +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/chart.js +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/charts.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/color.js +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/highlight.min.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/idb.min.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/marked.min.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/servicestack-client.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/vue-router.min.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/vue.min.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/lib/vue.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/markdown.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/tailwind.input.css +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/threadStore.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui/utils.mjs +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms/ui.json +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/SOURCES.txt +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/dependency_links.txt +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/entry_points.txt +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/not-zip-safe +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/requires.txt +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/llms_py.egg-info/top_level.txt +0 -0

- {llms_py-2.0.27 → llms_py-2.0.29}/setup.cfg +0 -0

|

@@ -1,6 +1,6 @@

|

|

|

1

1

|

Metadata-Version: 2.4

|

|

2

2

|

Name: llms-py

|

|

3

|

-

Version: 2.0.

|

|

3

|

+

Version: 2.0.29

|

|

4

4

|

Summary: A lightweight CLI tool and OpenAI-compatible server for querying multiple Large Language Model (LLM) providers

|

|

5

5

|

Home-page: https://github.com/ServiceStack/llms

|

|

6

6

|

Author: ServiceStack

|

|

@@ -50,7 +50,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

50

50

|

|

|

51

51

|

## Features

|

|

52

52

|

|

|

53

|

-

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency

|

|

53

|

+

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency (Pillow optional)

|

|

54

54

|

- **Multi-Provider Support**: OpenRouter, Ollama, Anthropic, Google, OpenAI, Grok, Groq, Qwen, Z.ai, Mistral

|

|

55

55

|

- **OpenAI-Compatible API**: Works with any client that supports OpenAI's chat completion API

|

|

56

56

|

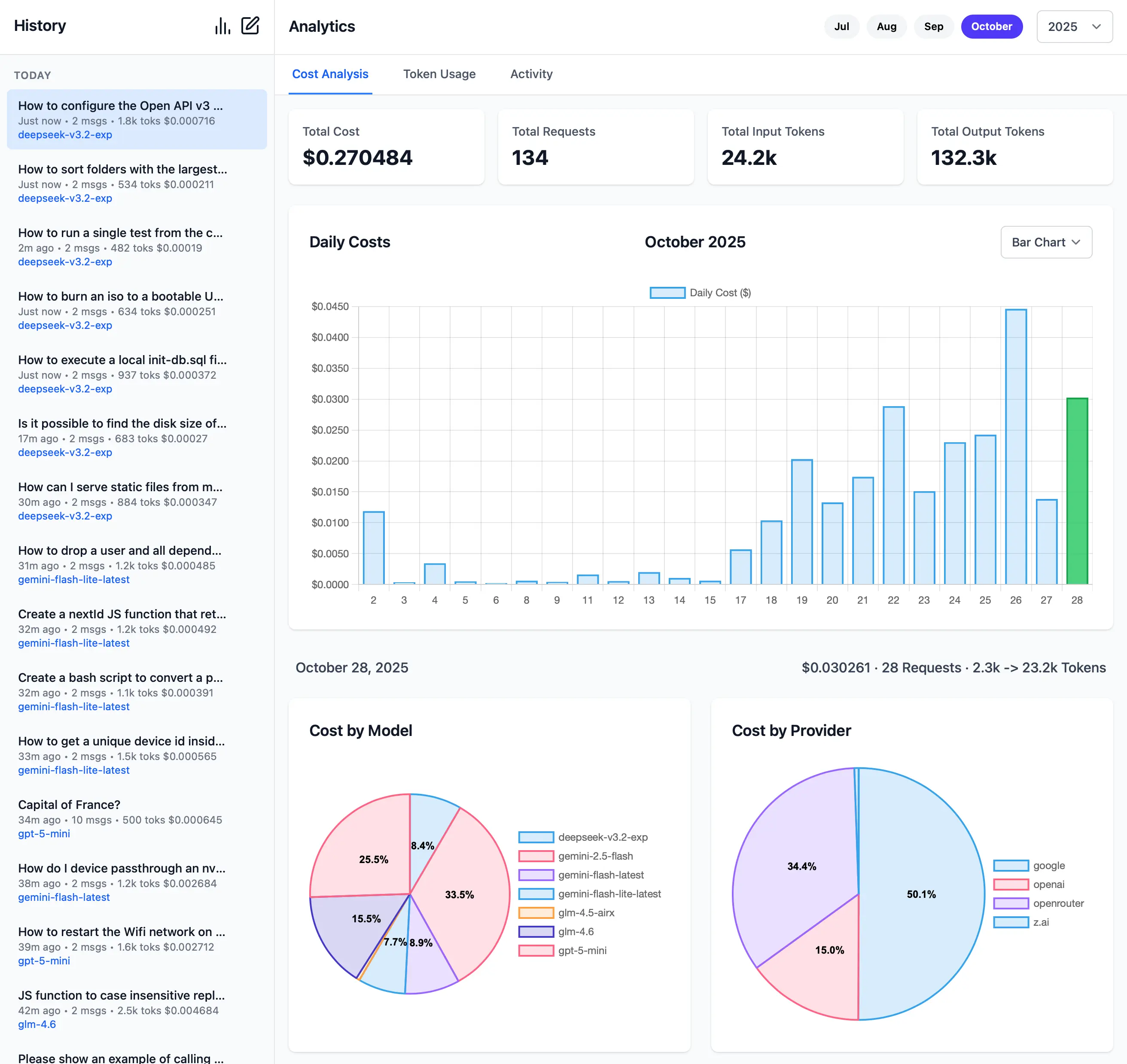

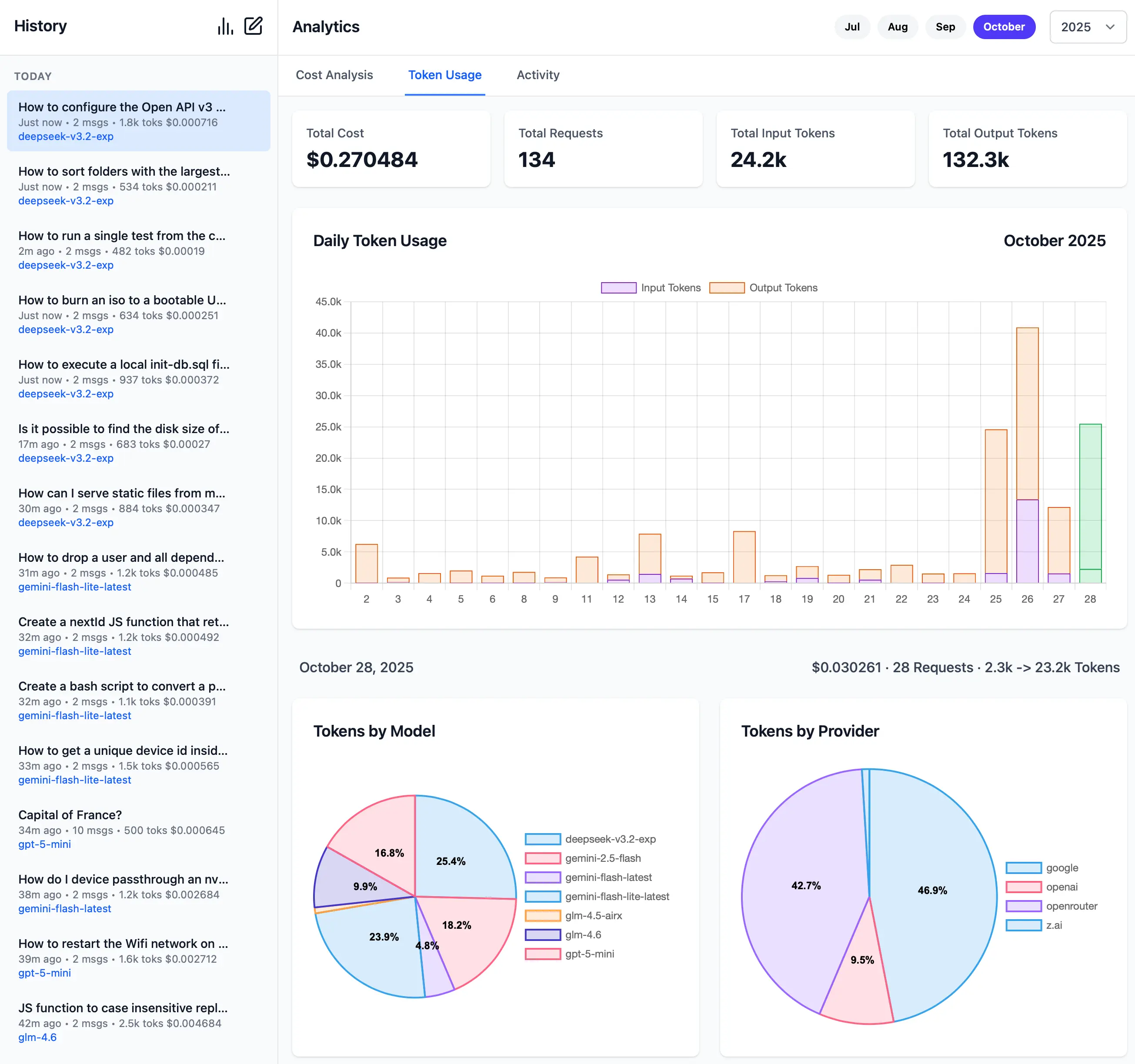

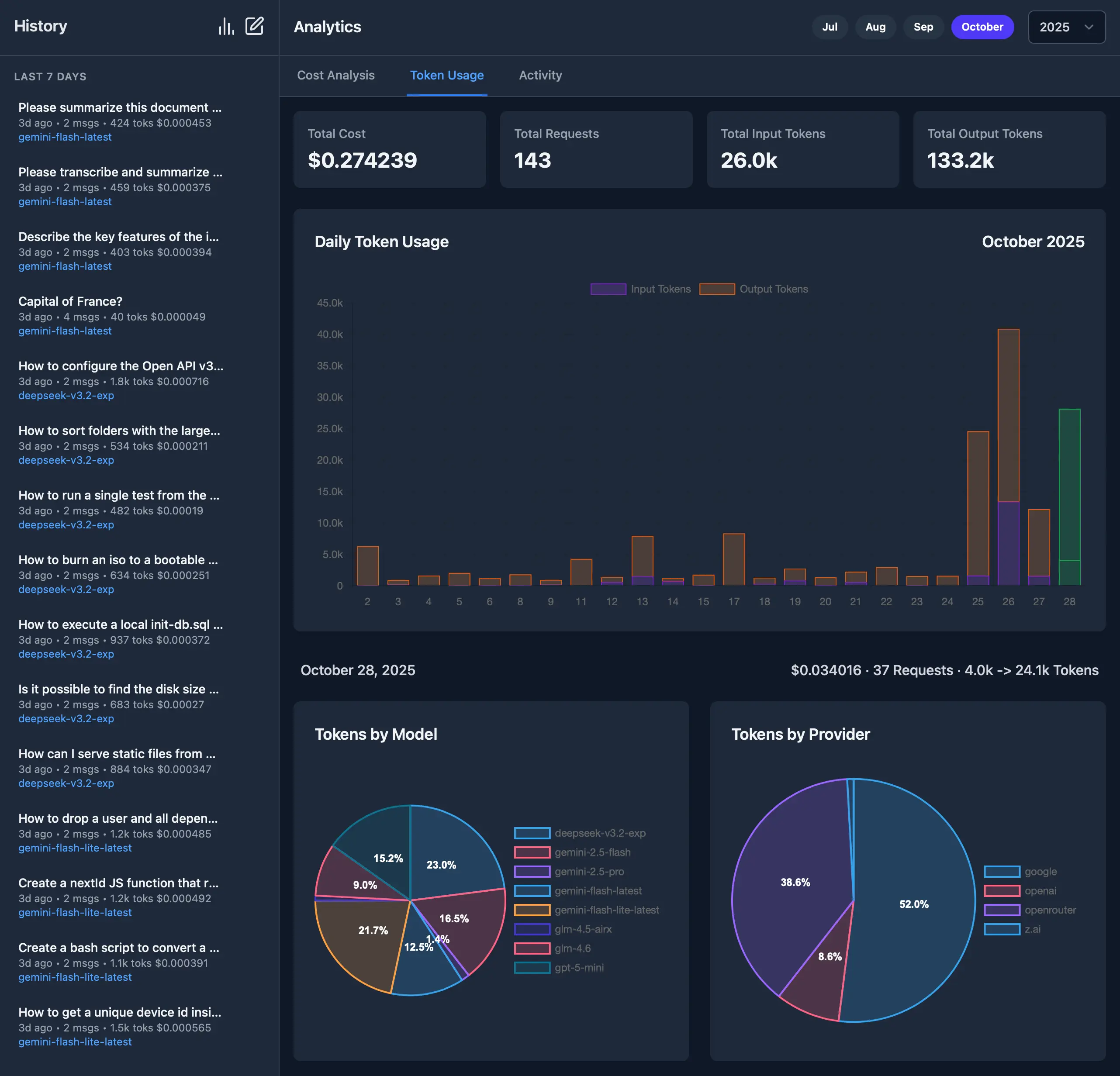

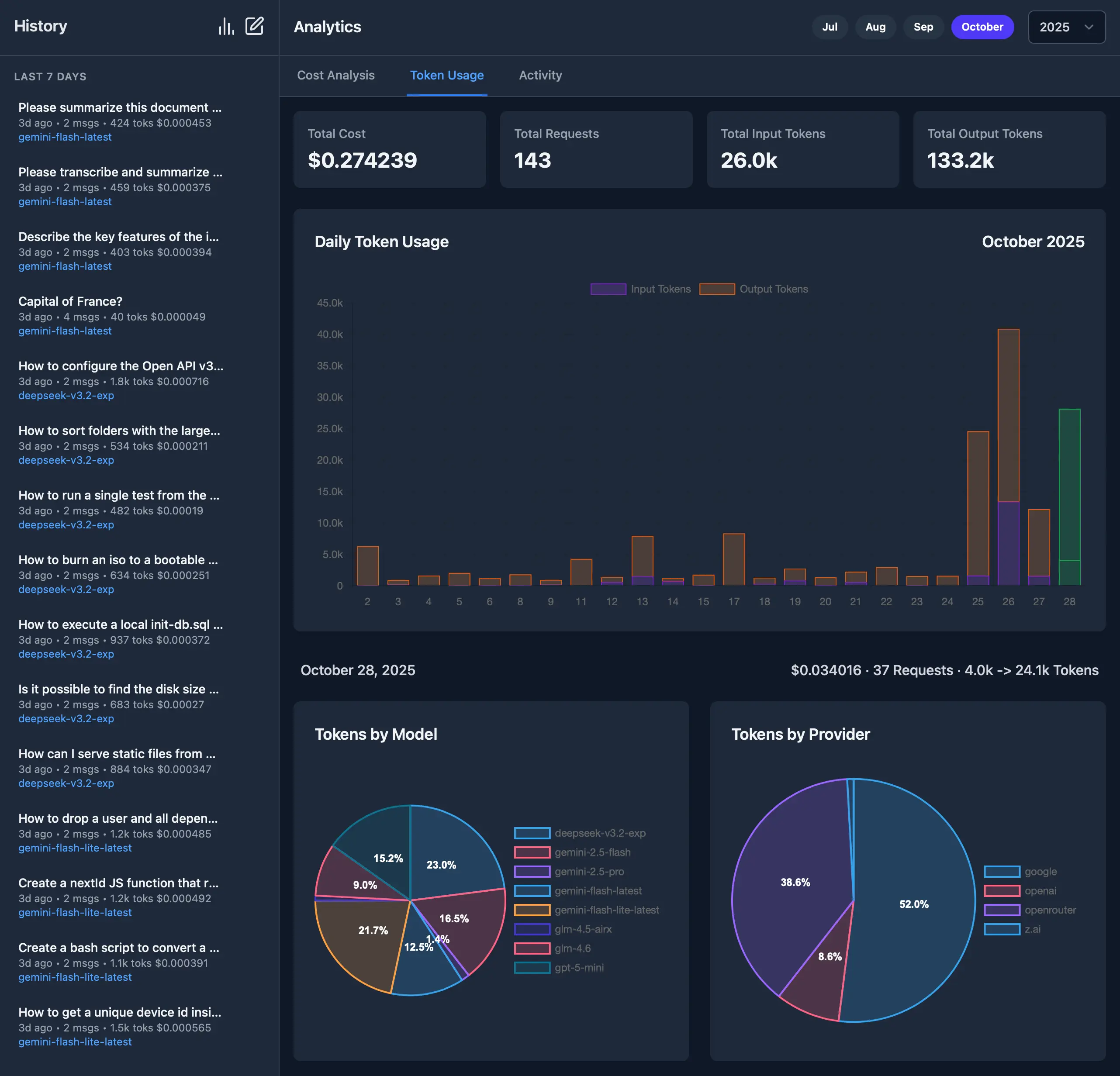

- **Built-in Analytics**: Built-in analytics UI to visualize costs, requests, and token usage

|

|

@@ -58,6 +58,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

58

58

|

- **CLI Interface**: Simple command-line interface for quick interactions

|

|

59

59

|

- **Server Mode**: Run an OpenAI-compatible HTTP server at `http://localhost:{PORT}/v1/chat/completions`

|

|

60

60

|

- **Image Support**: Process images through vision-capable models

|

|

61

|

+

- Auto resizes and converts to webp if exceeds configured limits

|

|

61

62

|

- **Audio Support**: Process audio through audio-capable models

|

|

62

63

|

- **Custom Chat Templates**: Configurable chat completion request templates for different modalities

|

|

63

64

|

- **Auto-Discovery**: Automatically discover available Ollama models

|

|

@@ -68,23 +69,27 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

68

69

|

|

|

69

70

|

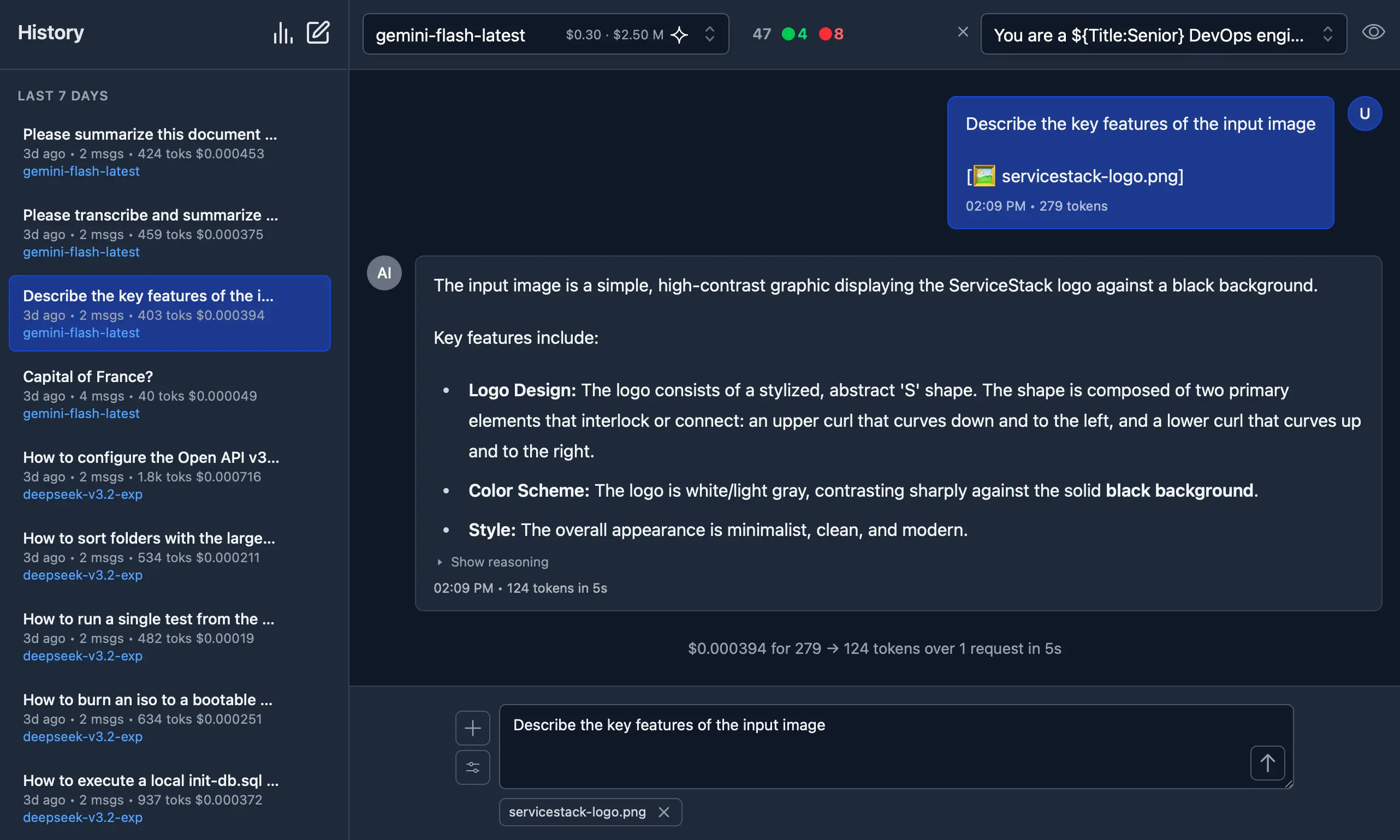

Access all your local all remote LLMs with a single ChatGPT-like UI:

|

|

70

71

|

|

|

71

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

72

73

|

|

|

73

|

-

|

|

74

|

+

#### Dark Mode Support

|

|

75

|

+

|

|

76

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

77

|

+

|

|

78

|

+

#### Monthly Costs Analysis

|

|

74

79

|

|

|

75

80

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

76

81

|

|

|

77

|

-

|

|

82

|

+

#### Monthly Token Usage (Dark Mode)

|

|

78

83

|

|

|

79

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

84

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

80

85

|

|

|

81

|

-

|

|

86

|

+

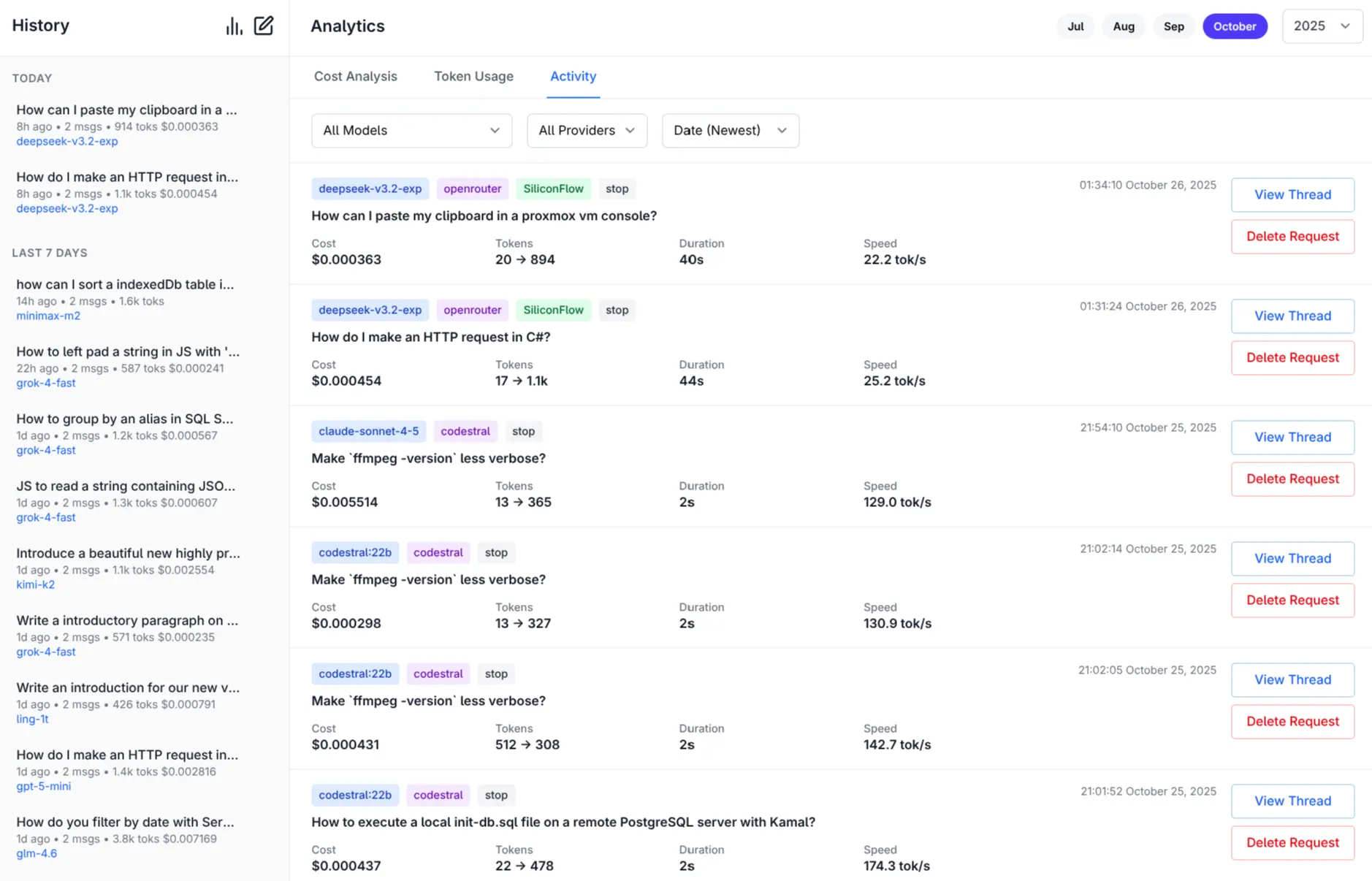

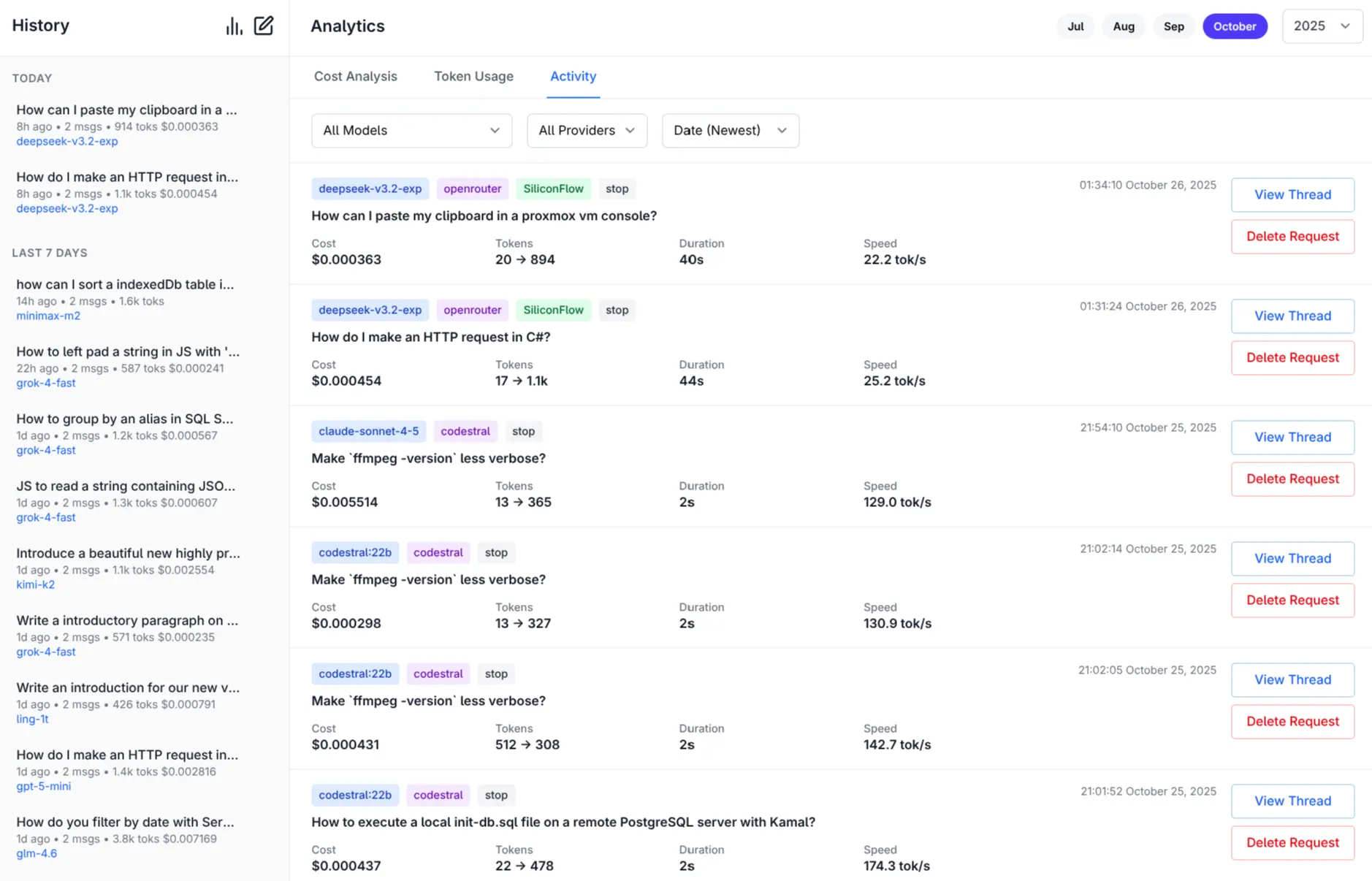

#### Monthly Activity Log

|

|

82

87

|

|

|

83

88

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

84

89

|

|

|

85

90

|

[More Features and Screenshots](https://servicestack.net/posts/llms-py-ui).

|

|

86

91

|

|

|

87

|

-

|

|

92

|

+

#### Check Provider Reliability and Response Times

|

|

88

93

|

|

|

89

94

|

Check the status of configured providers to test if they're configured correctly, reachable and what their response times is for the simplest `1+1=` request:

|

|

90

95

|

|

|

@@ -230,6 +235,22 @@ See [DOCKER.md](DOCKER.md) for detailed instructions on customizing configuratio

|

|

|

230

235

|

|

|

231

236

|

llms.py supports optional GitHub OAuth authentication to secure your web UI and API endpoints. When enabled, users must sign in with their GitHub account before accessing the application.

|

|

232

237

|

|

|

238

|

+

```json

|

|

239

|

+

{

|

|

240

|

+

"auth": {

|

|

241

|

+

"enabled": true,

|

|

242

|

+

"github": {

|

|

243

|

+

"client_id": "$GITHUB_CLIENT_ID",

|

|

244

|

+

"client_secret": "$GITHUB_CLIENT_SECRET",

|

|

245

|

+

"redirect_uri": "http://localhost:8000/auth/github/callback",

|

|

246

|

+

"restrict_to": "$GITHUB_USERS"

|

|

247

|

+

}

|

|

248

|

+

}

|

|

249

|

+

}

|

|

250

|

+

```

|

|

251

|

+

|

|

252

|

+

`GITHUB_USERS` is optional but if set will only allow access to the specified users.

|

|

253

|

+

|

|

233

254

|

See [GITHUB_OAUTH_SETUP.md](GITHUB_OAUTH_SETUP.md) for detailed setup instructions.

|

|

234

255

|

|

|

235

256

|

## Configuration

|

|

@@ -243,6 +264,8 @@ The configuration file [llms.json](llms/llms.json) is saved to `~/.llms/llms.jso

|

|

|

243

264

|

- `audio`: Default chat completion request template for audio prompts

|

|

244

265

|

- `file`: Default chat completion request template for file prompts

|

|

245

266

|

- `check`: Check request template for testing provider connectivity

|

|

267

|

+

- `limits`: Override Request size limits

|

|

268

|

+

- `convert`: Max image size and length limits and auto conversion settings

|

|

246

269

|

|

|

247

270

|

### Providers

|

|

248

271

|

|

|

@@ -1211,7 +1234,7 @@ This shows:

|

|

|

1211

1234

|

- `llms/main.py` - Main script with CLI and server functionality

|

|

1212

1235

|

- `llms/llms.json` - Default configuration file

|

|

1213

1236

|

- `llms/ui.json` - UI configuration file

|

|

1214

|

-

- `requirements.txt` - Python dependencies

|

|

1237

|

+

- `requirements.txt` - Python dependencies, required: `aiohttp`, optional: `Pillow`

|

|

1215

1238

|

|

|

1216

1239

|

### Provider Classes

|

|

1217

1240

|

|

|

@@ -10,7 +10,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

10

10

|

|

|

11

11

|

## Features

|

|

12

12

|

|

|

13

|

-

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency

|

|

13

|

+

- **Lightweight**: Single [llms.py](https://github.com/ServiceStack/llms/blob/main/llms/main.py) Python file with single `aiohttp` dependency (Pillow optional)

|

|

14

14

|

- **Multi-Provider Support**: OpenRouter, Ollama, Anthropic, Google, OpenAI, Grok, Groq, Qwen, Z.ai, Mistral

|

|

15

15

|

- **OpenAI-Compatible API**: Works with any client that supports OpenAI's chat completion API

|

|

16

16

|

- **Built-in Analytics**: Built-in analytics UI to visualize costs, requests, and token usage

|

|

@@ -18,6 +18,7 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

18

18

|

- **CLI Interface**: Simple command-line interface for quick interactions

|

|

19

19

|

- **Server Mode**: Run an OpenAI-compatible HTTP server at `http://localhost:{PORT}/v1/chat/completions`

|

|

20

20

|

- **Image Support**: Process images through vision-capable models

|

|

21

|

+

- Auto resizes and converts to webp if exceeds configured limits

|

|

21

22

|

- **Audio Support**: Process audio through audio-capable models

|

|

22

23

|

- **Custom Chat Templates**: Configurable chat completion request templates for different modalities

|

|

23

24

|

- **Auto-Discovery**: Automatically discover available Ollama models

|

|

@@ -28,23 +29,27 @@ Configure additional providers and models in [llms.json](llms/llms.json)

|

|

|

28

29

|

|

|

29

30

|

Access all your local all remote LLMs with a single ChatGPT-like UI:

|

|

30

31

|

|

|

31

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

32

33

|

|

|

33

|

-

|

|

34

|

+

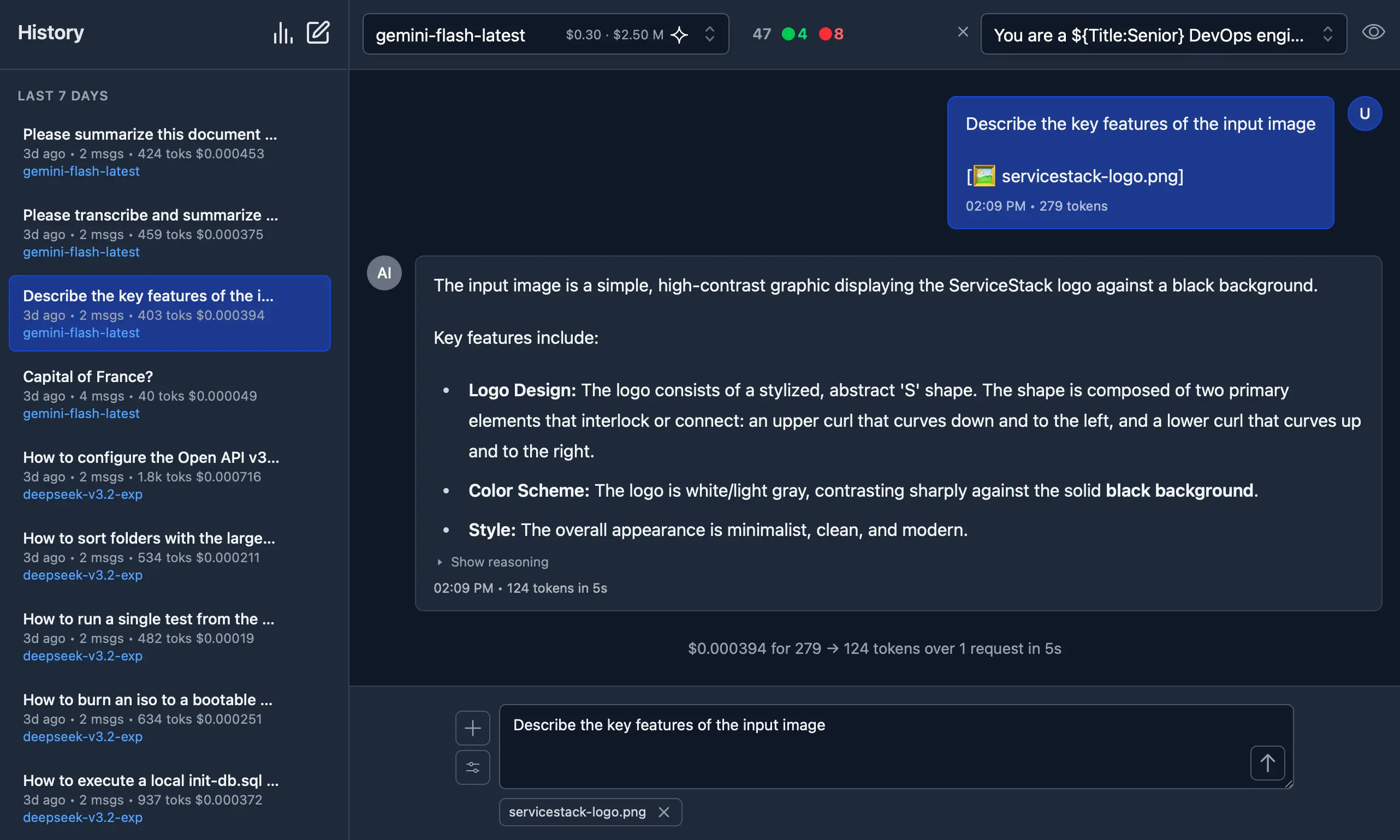

#### Dark Mode Support

|

|

35

|

+

|

|

36

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

37

|

+

|

|

38

|

+

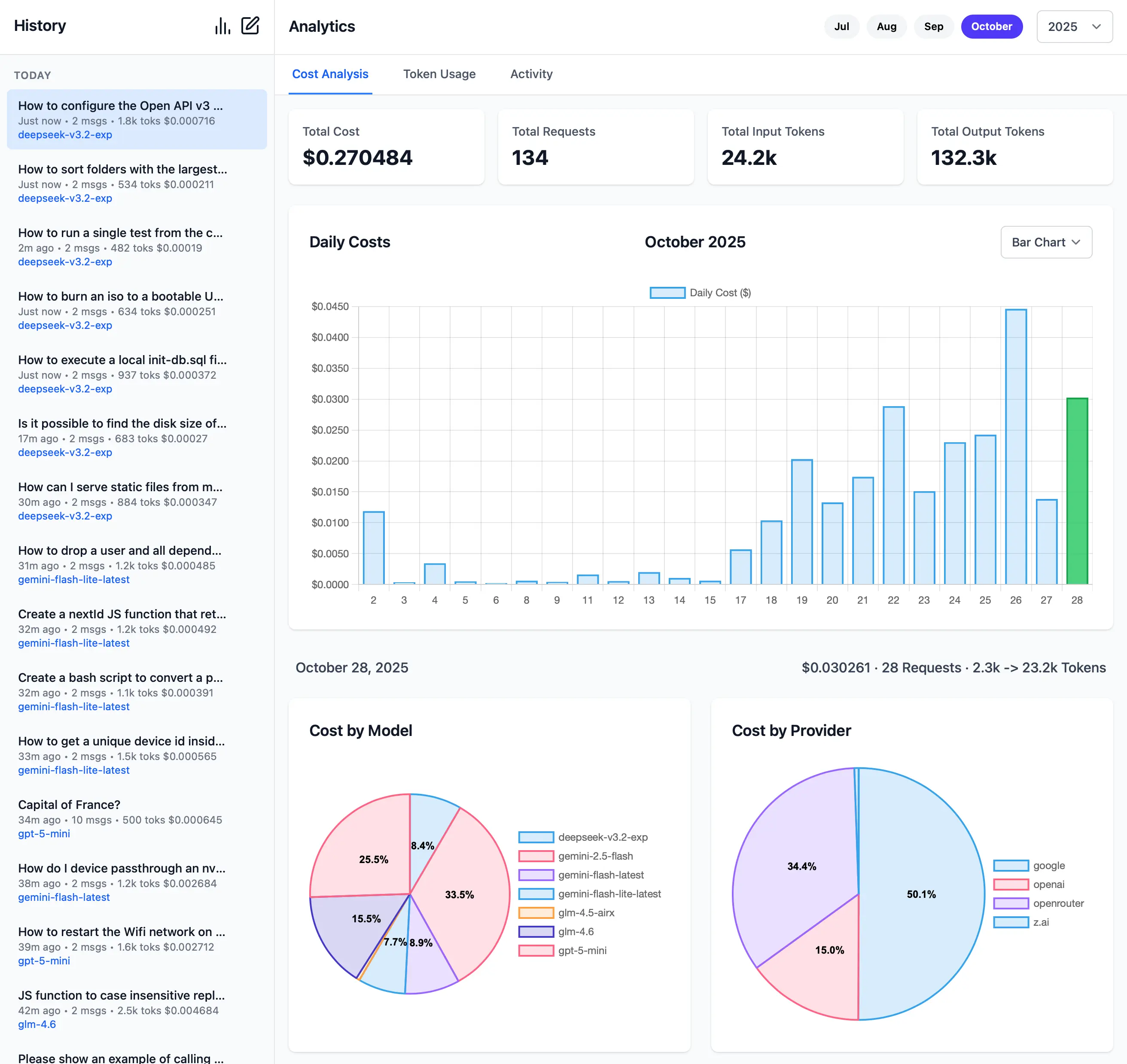

#### Monthly Costs Analysis

|

|

34

39

|

|

|

35

40

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

36

41

|

|

|

37

|

-

|

|

42

|

+

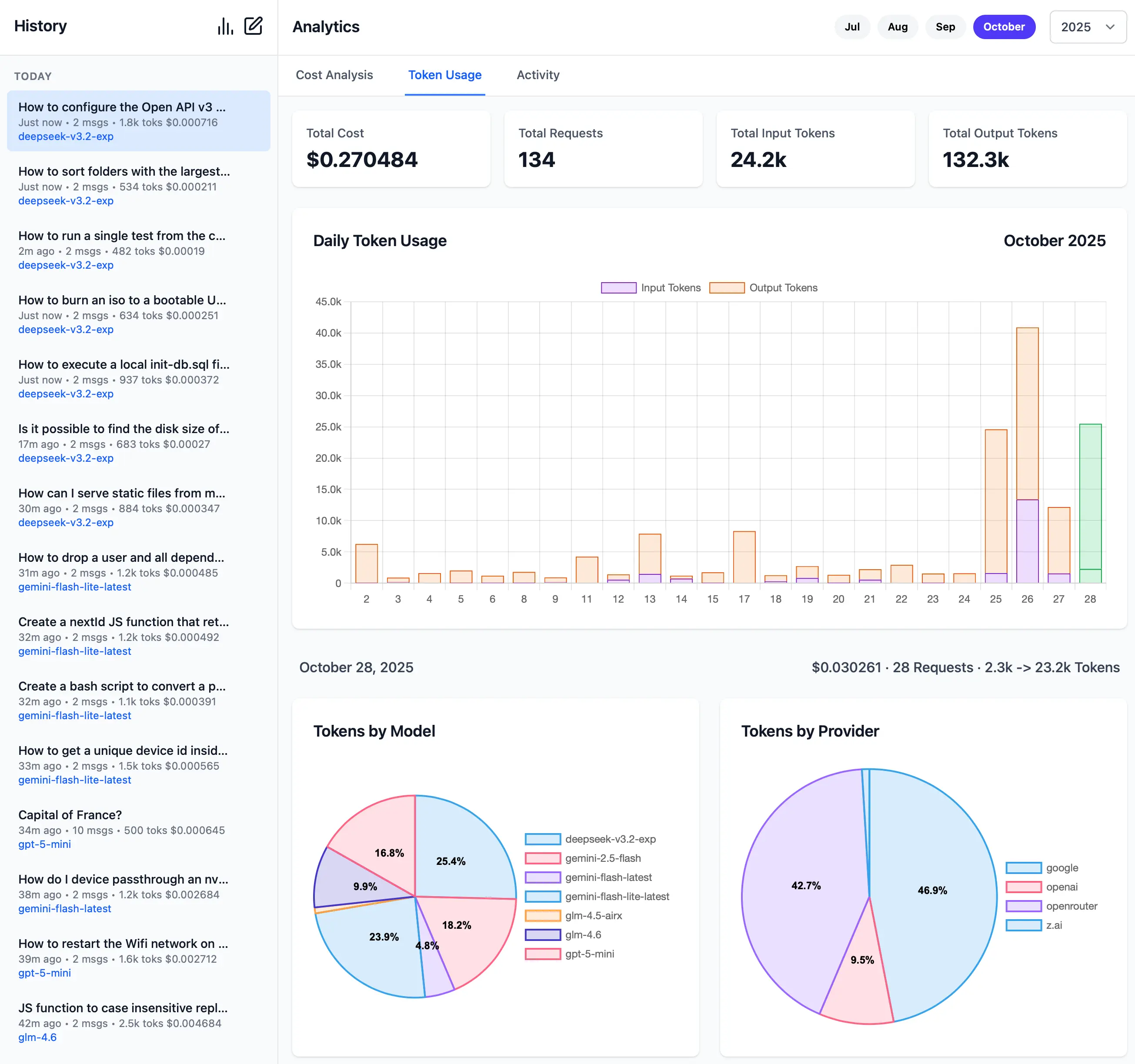

#### Monthly Token Usage (Dark Mode)

|

|

38

43

|

|

|

39

|

-

[](https://servicestack.net/posts/llms-py-ui)

|

|

44

|

+

[](https://servicestack.net/posts/llms-py-ui)

|

|

40

45

|

|

|

41

|

-

|

|

46

|

+

#### Monthly Activity Log

|

|

42

47

|

|

|

43

48

|

[](https://servicestack.net/posts/llms-py-ui)

|

|

44

49

|

|

|

45

50

|

[More Features and Screenshots](https://servicestack.net/posts/llms-py-ui).

|

|

46

51

|

|

|

47

|

-

|

|

52

|

+

#### Check Provider Reliability and Response Times

|

|

48

53

|

|

|

49

54

|

Check the status of configured providers to test if they're configured correctly, reachable and what their response times is for the simplest `1+1=` request:

|

|

50

55

|

|

|

@@ -190,6 +195,22 @@ See [DOCKER.md](DOCKER.md) for detailed instructions on customizing configuratio

|

|

|

190

195

|

|

|

191

196

|

llms.py supports optional GitHub OAuth authentication to secure your web UI and API endpoints. When enabled, users must sign in with their GitHub account before accessing the application.

|

|

192

197

|

|

|

198

|

+

```json

|

|

199

|

+

{

|

|

200

|

+

"auth": {

|

|

201

|

+

"enabled": true,

|

|

202

|

+

"github": {

|

|

203

|

+

"client_id": "$GITHUB_CLIENT_ID",

|

|

204

|

+

"client_secret": "$GITHUB_CLIENT_SECRET",

|

|

205

|

+

"redirect_uri": "http://localhost:8000/auth/github/callback",

|

|

206

|

+

"restrict_to": "$GITHUB_USERS"

|

|

207

|

+

}

|

|

208

|

+

}

|

|

209

|

+

}

|

|

210

|

+

```

|

|

211

|

+

|

|

212

|

+

`GITHUB_USERS` is optional but if set will only allow access to the specified users.

|

|

213

|

+

|

|

193

214

|

See [GITHUB_OAUTH_SETUP.md](GITHUB_OAUTH_SETUP.md) for detailed setup instructions.

|

|

194

215

|

|

|

195

216

|

## Configuration

|

|

@@ -203,6 +224,8 @@ The configuration file [llms.json](llms/llms.json) is saved to `~/.llms/llms.jso

|

|

|

203

224

|

- `audio`: Default chat completion request template for audio prompts

|

|

204

225

|

- `file`: Default chat completion request template for file prompts

|

|

205

226

|

- `check`: Check request template for testing provider connectivity

|

|

227

|

+

- `limits`: Override Request size limits

|

|

228

|

+

- `convert`: Max image size and length limits and auto conversion settings

|

|

206

229

|

|

|

207

230

|

### Providers

|

|

208

231

|

|

|

@@ -1171,7 +1194,7 @@ This shows:

|

|

|

1171

1194

|

- `llms/main.py` - Main script with CLI and server functionality

|

|

1172

1195

|

- `llms/llms.json` - Default configuration file

|

|

1173

1196

|

- `llms/ui.json` - UI configuration file

|

|

1174

|

-

- `requirements.txt` - Python dependencies

|

|

1197

|

+

- `requirements.txt` - Python dependencies, required: `aiohttp`, optional: `Pillow`

|

|

1175

1198

|

|

|

1176

1199

|

### Provider Classes

|

|

1177

1200

|

|

|

{llms_py-2.0.27 → llms_py-2.0.29}/llms/llms.json

```diff
@@ -4,7 +4,8 @@
     "github": {
       "client_id": "$GITHUB_CLIENT_ID",
       "client_secret": "$GITHUB_CLIENT_SECRET",
-      "redirect_uri": "http://localhost:8000/auth/github/callback"
+      "redirect_uri": "http://localhost:8000/auth/github/callback",
+      "restrict_to": "$GITHUB_USERS"
     }
   },
   "defaults": {
@@ -104,6 +105,15 @@
       "stream": false
     }
   },
+  "limits": {
+    "client_max_size": 20971520
+  },
+  "convert": {
+    "image": {
+      "max_size": "1536x1024",
+      "max_length": 1572864
+    }
+  },
   "providers": {
     "openrouter_free": {
       "enabled": true,
```
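The new `limits` and `convert` sections hold a byte count, a `WIDTHxHEIGHT` string and a second byte count. The sketch below reads them using the fallbacks that appear in the `main.py` changes (20 MB request cap, `1536x1024`, 1.5 MB). It also shows that the shipped values are exactly 20 × 1024 × 1024 and 1.5 × 1024 × 1024. It is not the package's own code, and `read_limits` is a hypothetical helper name.

```python
import json
from typing import Any

# Fragment mirroring the "limits" and "convert" sections added to llms.json
CONFIG_JSON = """
{
  "limits": {"client_max_size": 20971520},
  "convert": {"image": {"max_size": "1536x1024", "max_length": 1572864}}
}
"""


def read_limits(config: dict[str, Any]) -> tuple[int, tuple[int, int], int]:
    """Hypothetical helper: return (client_max_size, (max_width, max_height), max_length)."""
    # Fallbacks are the defaults used in the main.py diff
    client_max_size = config.get("limits", {}).get("client_max_size", 20 * 1024 * 1024)
    image_cfg = config.get("convert", {}).get("image", {})
    max_size = image_cfg.get("max_size", "1536x1024")
    max_length = int(image_cfg.get("max_length", 1.5 * 1024 * 1024))
    # "1536x1024" -> (1536, 1024), split on "x" the same way convert_image_if_needed does
    max_width, max_height = map(int, max_size.split("x"))
    return client_max_size, (max_width, max_height), max_length


config = json.loads(CONFIG_JSON)
print(read_limits(config))  # (20971520, (1536, 1024), 1572864)
print(read_limits({}))      # (20971520, (1536, 1024), 1572864), via the fallbacks
```

Because the fallbacks match the shipped values, omitting either section from `~/.llms/llms.json` should behave the same as keeping the defaults.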

{llms_py-2.0.27 → llms_py-2.0.29}/llms/main.py

```diff
@@ -15,6 +15,8 @@ import traceback
 import sys
 import site
 import secrets
+import re
+from io import BytesIO
 from urllib.parse import parse_qs, urlencode

 import aiohttp
@@ -23,7 +25,13 @@ from aiohttp import web
 from pathlib import Path
 from importlib import resources # Py≥3.9 (pip install importlib_resources for 3.7/3.8)

-
+try:
+    from PIL import Image
+    HAS_PIL = True
+except ImportError:
+    HAS_PIL = False
+
+VERSION = "2.0.29"
 _ROOT = None
 g_config_path = None
 g_ui_path = None
```

```diff
@@ -200,6 +208,77 @@ def price_to_string(price: float | int | str | None) -> str | None:
     except (ValueError, TypeError):
         return None

+def convert_image_if_needed(image_bytes, mimetype='image/png'):
+    """
+    Convert and resize image to WebP if it exceeds configured limits.
+
+    Args:
+        image_bytes: Raw image bytes
+        mimetype: Original image MIME type
+
+    Returns:
+        tuple: (converted_bytes, new_mimetype) or (original_bytes, original_mimetype) if no conversion needed
+    """
+    if not HAS_PIL:
+        return image_bytes, mimetype
+
+    # Get conversion config
+    convert_config = g_config.get('convert', {}).get('image', {}) if g_config else {}
+    if not convert_config:
+        return image_bytes, mimetype
+
+    max_size_str = convert_config.get('max_size', '1536x1024')
+    max_length = convert_config.get('max_length', 1.5*1024*1024) # 1.5MB
+
+    try:
+        # Parse max_size (e.g., "1536x1024")
+        max_width, max_height = map(int, max_size_str.split('x'))
+
+        # Open image
+        with Image.open(BytesIO(image_bytes)) as img:
+            original_width, original_height = img.size
+
+            # Check if image exceeds limits
+            needs_resize = original_width > max_width or original_height > max_height
+
+            # Check if base64 length would exceed max_length (in KB)
+            # Base64 encoding increases size by ~33%, so check raw bytes * 1.33 / 1024
+            estimated_kb = (len(image_bytes) * 1.33) / 1024
+            needs_conversion = estimated_kb > max_length
+
+            if not needs_resize and not needs_conversion:
+                return image_bytes, mimetype
+
+            # Convert RGBA to RGB if necessary (WebP doesn't support transparency in RGB mode)
+            if img.mode in ('RGBA', 'LA', 'P'):
+                # Create a white background
+                background = Image.new('RGB', img.size, (255, 255, 255))
+                if img.mode == 'P':
+                    img = img.convert('RGBA')
+                background.paste(img, mask=img.split()[-1] if img.mode in ('RGBA', 'LA') else None)
+                img = background
+            elif img.mode != 'RGB':
+                img = img.convert('RGB')
+
+            # Resize if needed (preserve aspect ratio)
+            if needs_resize:
+                img.thumbnail((max_width, max_height), Image.Resampling.LANCZOS)
+                _log(f"Resized image from {original_width}x{original_height} to {img.size[0]}x{img.size[1]}")
+
+            # Convert to WebP
+            output = BytesIO()
+            img.save(output, format='WEBP', quality=85, method=6)
+            converted_bytes = output.getvalue()
+
+            _log(f"Converted image to WebP: {len(image_bytes)} bytes -> {len(converted_bytes)} bytes ({len(converted_bytes)*100//len(image_bytes)}%)")
+
+            return converted_bytes, 'image/webp'
+
+    except Exception as e:
+        _log(f"Error converting image: {e}")
+        # Return original if conversion fails
+        return image_bytes, mimetype
+
 async def process_chat(chat):
     if not chat:
         raise Exception("No chat provided")
```
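`convert_image_if_needed` decides whether to touch an image using two checks: pixel dimensions against `max_size`, and an estimated base64 size against `max_length`. The sketch below isolates that decision without Pillow.

- **Units.** It assumes `max_length` is a byte count, which is how the `1572864` value in `llms.json` and the `# 1.5MB` default read. Read literally, the diff compares a kilobyte estimate (`estimated_kb`) against that number, so the length check would only fire for inputs of roughly a gigabyte or more.
- **Resizing.** `fit_within` approximates `Image.thumbnail` (shrink only, keep aspect ratio), but Pillow's exact rounding may differ by a pixel.

Both function names are hypothetical.

```python
def fit_within(width: int, height: int, max_w: int, max_h: int) -> tuple[int, int]:
    """Hypothetical helper: shrink (never enlarge) to fit max_w x max_h, keeping the aspect ratio."""
    if width <= max_w and height <= max_h:
        return width, height
    scale = min(max_w / width, max_h / height)
    # Round to whole pixels, at least 1px per side; Pillow's thumbnail() may round differently
    return max(1, round(width * scale)), max(1, round(height * scale))


def needs_processing(width: int, height: int, raw_len: int,
                     max_size: str = "1536x1024", max_length: int = 1572864) -> tuple[bool, bool]:
    """Hypothetical helper: return (needs_resize, too_large), treating max_length as bytes."""
    max_w, max_h = map(int, max_size.split("x"))
    needs_resize = width > max_w or height > max_h
    # Same ~33% base64 growth estimate as the diff, but compared in bytes rather than KB
    too_large = raw_len * 1.33 > max_length
    return needs_resize, too_large


print(fit_within(3072, 2048, 1536, 1024))     # (1536, 1024)
print(fit_within(4000, 1000, 1536, 1024))     # (1536, 384)
print(needs_processing(800, 600, 2_000_000))  # (False, True)
```

When either flag is true, the diff flattens any alpha channel onto white and re-encodes the image as WebP at quality 85.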

```diff
@@ -230,19 +309,31 @@ async def process_chat(chat):
                                 mimetype = get_file_mime_type(get_filename(url))
                                 if 'Content-Type' in response.headers:
                                     mimetype = response.headers['Content-Type']
+                                # convert/resize image if needed
+                                content, mimetype = convert_image_if_needed(content, mimetype)
                                 # convert to data uri
                                 image_url['url'] = f"data:{mimetype};base64,{base64.b64encode(content).decode('utf-8')}"
                         elif is_file_path(url):
                             _log(f"Reading image: {url}")
                             with open(url, "rb") as f:
                                 content = f.read()
-                            ext = os.path.splitext(url)[1].lower().lstrip('.') if '.' in url else 'png'
                             # get mimetype from file extension
                             mimetype = get_file_mime_type(get_filename(url))
+                            # convert/resize image if needed
+                            content, mimetype = convert_image_if_needed(content, mimetype)
                             # convert to data uri
                             image_url['url'] = f"data:{mimetype};base64,{base64.b64encode(content).decode('utf-8')}"
                         elif url.startswith('data:'):
-
+                            # Extract existing data URI and process it
+                            if ';base64,' in url:
+                                prefix = url.split(';base64,')[0]
+                                mimetype = prefix.split(':')[1] if ':' in prefix else 'image/png'
+                                base64_data = url.split(';base64,')[1]
+                                content = base64.b64decode(base64_data)
+                                # convert/resize image if needed
+                                content, mimetype = convert_image_if_needed(content, mimetype)
+                                # update data uri with potentially converted image
+                                image_url['url'] = f"data:{mimetype};base64,{base64.b64encode(content).decode('utf-8')}"
                         else:
                             raise Exception(f"Invalid image: {url}")
                 elif item['type'] == 'input_audio' and 'input_audio' in item:
```
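The new `data:` branch splits an inline image on `;base64,`, decodes it, passes it through the converter and re-encodes it in the same `data:{mimetype};base64,...` form used by the URL and file branches. Here is a stdlib-only sketch of that round trip. `split_data_uri` and `to_data_uri` are hypothetical names, and `str.partition` stands in for the two `split` calls in the diff.

```python
import base64


def split_data_uri(uri: str) -> tuple[str, bytes]:
    """Hypothetical helper: 'data:<mime>;base64,<payload>' -> (mime, raw bytes)."""
    prefix, sep, payload = uri.partition(";base64,")
    if not prefix.startswith("data:") or not sep:
        raise ValueError("not a base64 data URI")
    # Default to image/png, like the diff does when no type can be read
    mimetype = prefix[len("data:"):] or "image/png"
    return mimetype, base64.b64decode(payload)


def to_data_uri(mimetype: str, content: bytes) -> str:
    """Re-encode bytes the same way the diff builds image_url['url']."""
    return f"data:{mimetype};base64,{base64.b64encode(content).decode('utf-8')}"


uri = to_data_uri("image/png", b"\x89PNG fake bytes")
mime, raw = split_data_uri(uri)
print(mime, raw)  # image/png b'\x89PNG fake bytes'
```

If the converter returns WebP, rebuilding the URI with the new MIME type keeps the `data:` prefix consistent with the payload.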

```diff
@@ -1314,6 +1405,66 @@ async def save_home_configs():
         print("Could not create llms.json. Create one with --init or use --config <path>")
         exit(1)

+async def reload_providers():
+    global g_config, g_handlers
+    g_handlers = init_llms(g_config)
+    await load_llms()
+    _log(f"{len(g_handlers)} providers loaded")
+    return g_handlers
+
+async def watch_config_files(config_path, ui_path, interval=1):
+    """Watch config files and reload providers when they change"""
+    global g_config
+
+    config_path = Path(config_path)
+    ui_path = Path(ui_path) if ui_path else None
+
+    file_mtimes = {}
+
+    _log(f"Watching config files: {config_path}" + (f", {ui_path}" if ui_path else ""))
+
+    while True:
+        await asyncio.sleep(interval)
+
+        # Check llms.json
+        try:
+            if config_path.is_file():
+                mtime = config_path.stat().st_mtime
+
+                if str(config_path) not in file_mtimes:
+                    file_mtimes[str(config_path)] = mtime
+                elif file_mtimes[str(config_path)] != mtime:
+                    _log(f"Config file changed: {config_path.name}")
+                    file_mtimes[str(config_path)] = mtime
+
+                    try:
+                        # Reload llms.json
+                        with open(config_path, "r") as f:
+                            g_config = json.load(f)
+
+                        # Reload providers
+                        await reload_providers()
+                        _log("Providers reloaded successfully")
+                    except Exception as e:
+                        _log(f"Error reloading config: {e}")
+        except FileNotFoundError:
+            pass
+
+        # Check ui.json
+        if ui_path:
+            try:
+                if ui_path.is_file():
+                    mtime = ui_path.stat().st_mtime
+
+                    if str(ui_path) not in file_mtimes:
+                        file_mtimes[str(ui_path)] = mtime
+                    elif file_mtimes[str(ui_path)] != mtime:
+                        _log(f"Config file changed: {ui_path.name}")
+                        file_mtimes[str(ui_path)] = mtime
+                        _log("ui.json reloaded - reload page to update")
+            except FileNotFoundError:
+                pass
+
 def main():
     global _ROOT, g_verbose, g_default_model, g_logprefix, g_config, g_config_path, g_ui_path

```
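`watch_config_files` polls instead of using OS change notifications. Every `interval` seconds it compares each file's `st_mtime` with the last value it saw. The first sighting is only recorded, and it reacts only when the value changes. Below is a minimal stdlib sketch of that loop, with hypothetical names (`poll_once`, `watch`) and a callback standing in for `reload_providers()`. Like any mtime-based poller, it may miss an edit that leaves the modification time unchanged.

```python
import asyncio
import os
import tempfile
from collections.abc import Awaitable, Callable
from pathlib import Path


def poll_once(paths: list[Path], mtimes: dict[str, float]) -> list[Path]:
    """Return the paths whose mtime changed since the last call (a first sighting is not a change)."""
    changed = []
    for path in paths:
        try:
            mtime = path.stat().st_mtime
        except FileNotFoundError:
            continue  # missing files are skipped, as in the diff
        key = str(path)
        if key not in mtimes:
            mtimes[key] = mtime
        elif mtimes[key] != mtime:
            mtimes[key] = mtime
            changed.append(path)
    return changed


async def watch(paths: list[Path], on_change: Callable[[Path], Awaitable[None]],
                interval: float = 1.0) -> None:
    """Poll forever and await on_change for every modified file."""
    mtimes: dict[str, float] = {}
    while True:
        await asyncio.sleep(interval)
        for path in poll_once(paths, mtimes):
            try:
                await on_change(path)
            except Exception as exc:  # keep watching even if one reload fails
                print(f"Error reloading {path.name}: {exc}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = Path(tmp) / "llms.json"
        cfg.write_text("{}")
        seen: dict[str, float] = {}
        print([p.name for p in poll_once([cfg], seen)])  # [] (first sighting only records mtime)
        os.utime(cfg, (1_000_000, 1_000_000))            # simulate an edit
        print([p.name for p in poll_once([cfg], seen)])  # ['llms.json']
```

Keeping the comparison in a synchronous `poll_once` makes it easy to test without running the event loop.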

```diff
@@ -1401,8 +1552,7 @@ def main():
         g_ui_path = home_ui_path
     g_config = json.loads(text_from_file(g_config_path))

-
-    asyncio.run(load_llms())
+    asyncio.run(reload_providers())

     # print names
     _log(f"enabled providers: {', '.join(g_handlers.keys())}")
@@ -1480,7 +1630,9 @@ def main():

             _log("Authentication enabled - GitHub OAuth configured")

-
+        client_max_size = g_config.get('limits', {}).get('client_max_size', 20*1024*1024) # 20MB max request size (to handle base64 encoding overhead)
+        _log(f"client_max_size set to {client_max_size} bytes ({client_max_size/1024/1024:.1f}MB)")
+        app = web.Application(client_max_size=client_max_size)

         # Authentication middleware helper
         def check_auth(request):
```
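The `client_max_size` default is commented as covering base64 overhead. Base64 emits 4 characters for every started group of 3 input bytes, so an inline image grows by about a third. The arithmetic below shows the largest raw image whose encoding fits in the 20 MB default. It is a rough sketch that ignores the rest of the JSON request body, so the practical limit is slightly lower. `max_raw_bytes` is a hypothetical helper.

```python
import base64

CLIENT_MAX_SIZE = 20 * 1024 * 1024  # 20971520 bytes, the default in the diff


def base64_len(n: int) -> int:
    """Exact padded base64 length for n input bytes: 4 chars per started 3-byte group."""
    return 4 * ((n + 2) // 3)


def max_raw_bytes(limit: int) -> int:
    """Hypothetical helper: largest raw payload whose base64 form fits in `limit` bytes."""
    return (limit // 4) * 3


# Sanity-check the formula against the standard library encoder
for n in (0, 1, 2, 3, 4, 1000):
    assert len(base64.b64encode(b"x" * n)) == base64_len(n)

print(max_raw_bytes(CLIENT_MAX_SIZE))                                # 15728640 (15 MiB)
print(base64_len(max_raw_bytes(CLIENT_MAX_SIZE)) <= CLIENT_MAX_SIZE)  # True
```

Raising `limits.client_max_size` in `llms.json` scales this ceiling proportionally.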

```diff
@@ -1601,6 +1753,29 @@ def main():
             auth_url = f"https://github.com/login/oauth/authorize?{urlencode(params)}"

             return web.HTTPFound(auth_url)
+
+        def validate_user(github_username):
+            auth_config = g_config['auth']['github']
+            # Check if user is restricted
+            restrict_to = auth_config.get('restrict_to', '')
+
+            # Expand environment variables
+            if restrict_to.startswith('$'):
+                restrict_to = os.environ.get(restrict_to[1:], '')
+
+            # If restrict_to is configured, validate the user
+            if restrict_to:
+                # Parse allowed users (comma or space delimited)
+                allowed_users = [u.strip() for u in re.split(r'[,\s]+', restrict_to) if u.strip()]
+
+                # Check if user is in the allowed list
+                if not github_username or github_username not in allowed_users:
+                    _log(f"Access denied for user: {github_username}. Not in allowed list: {allowed_users}")
+                    return web.Response(
+                        text=f"Access denied. User '{github_username}' is not authorized to access this application.",
+                        status=403
+                    )
+            return None

         async def github_callback_handler(request):
             """Handle GitHub OAuth callback"""
```

```diff
@@ -1664,6 +1839,11 @@ def main():
                 async with session.get(user_url, headers=headers) as resp:
                     user_data = await resp.json()

+            # Validate user
+            error_response = validate_user(user_data.get('login', ''))
+            if error_response:
+                return error_response
+
             # Create session
             session_token = secrets.token_urlsafe(32)
             g_sessions[session_token] = {
```

```diff
@@ -1814,6 +1994,14 @@ def main():
         # Serve index.html as fallback route (SPA routing)
         app.router.add_route('*', '/{tail:.*}', index_handler)

+        # Setup file watcher for config files
+        async def start_background_tasks(app):
+            """Start background tasks when the app starts"""
+            # Start watching config files in the background
+            asyncio.create_task(watch_config_files(g_config_path, g_ui_path))
+
+        app.on_startup.append(start_background_tasks)
+
         print(f"Starting server on port {port}...")
         web.run_app(app, host='0.0.0.0', port=port, print=_log)
         exit(0)
```
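`start_background_tasks` launches the watcher with `asyncio.create_task` from an `on_startup` hook but does not keep the returned task. The `asyncio` documentation recommends holding a reference to such tasks, because the event loop keeps only weak references. A never-ending loop like `watch_config_files` also benefits from being cancelled on shutdown; in aiohttp, an `on_cleanup` handler is a natural place for that step. Below is a stdlib-only sketch of the pattern using a hypothetical `BackgroundTasks` holder, not aiohttp's own API.

```python
import asyncio
from collections.abc import Coroutine
from typing import Any


class BackgroundTasks:
    """Hypothetical holder that keeps strong references to long-running tasks."""

    def __init__(self) -> None:
        self._tasks: set[asyncio.Task] = set()

    def __len__(self) -> int:
        return len(self._tasks)

    def start(self, coro: Coroutine[Any, Any, None]) -> asyncio.Task:
        task = asyncio.create_task(coro)
        self._tasks.add(task)                        # strong reference while it runs
        task.add_done_callback(self._tasks.discard)  # forget it once it finishes
        return task

    async def stop(self) -> None:
        for task in list(self._tasks):
            task.cancel()
        # Wait for cancellation to complete; CancelledError is returned, not raised
        await asyncio.gather(*self._tasks, return_exceptions=True)


async def ticker(counter: list[int]) -> None:
    # Stand-in for watch_config_files: loops until cancelled
    while True:
        counter[0] += 1
        await asyncio.sleep(0.01)


async def demo() -> tuple[int, int]:
    counter = [0]
    tasks = BackgroundTasks()
    tasks.start(ticker(counter))
    await asyncio.sleep(0.05)
    await tasks.stop()
    return len(tasks), counter[0]


print(asyncio.run(demo()))  # first value is 0: the cancelled task was discarded
```

The done callback removes finished tasks automatically, so the holder does not grow if a task exits on its own.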

@@ -1021,7 +1021,9 @@ export default {

|

|

|

1021

1021

|

|

|

1022

1022

|

// Only display label if percentage > 1%

|

|

1023

1023

|

if (parseFloat(percentage) > 1) {

|

|

1024

|

-

|

|

1024

|

+

// Use white color in dark mode, black in light mode

|

|

1025

|

+

const isDarkMode = document.documentElement.classList.contains('dark')

|

|

1026

|

+

chartCtx.fillStyle = isDarkMode ? '#fff' : '#000'

|

|

1025

1027

|

chartCtx.font = 'bold 12px Arial'

|

|

1026

1028

|

chartCtx.textAlign = 'center'

|

|

1027

1029

|

chartCtx.textBaseline = 'middle'

|

|

@@ -1078,7 +1080,9 @@ export default {

|

|

|

1078

1080

|

|

|

1079

1081

|

// Only display label if percentage > 1%

|

|

1080

1082

|

if (parseFloat(percentage) > 1) {

|

|

1081

|

-

|

|

1083

|

+

// Use white color in dark mode, black in light mode

|

|

1084

|

+

const isDarkMode = document.documentElement.classList.contains('dark')

|

|

1085

|

+

chartCtx.fillStyle = isDarkMode ? '#fff' : '#000'

|

|

1082

1086

|

chartCtx.font = 'bold 12px Arial'

|

|

1083

1087

|

chartCtx.textAlign = 'center'

|

|

1084

1088

|

chartCtx.textBaseline = 'middle'

|

|

@@ -1135,7 +1139,9 @@ export default {

|

|

|

1135

1139

|

|

|

1136

1140

|

// Only display label if percentage > 1%

|

|

1137

1141

|

if (parseFloat(percentage) > 1) {

|

|

1138

|

-

|

|

1142

|

+

// Use white color in dark mode, black in light mode

|

|

1143

|

+

const isDarkMode = document.documentElement.classList.contains('dark')

|

|

1144

|

+

chartCtx.fillStyle = isDarkMode ? '#fff' : '#000'

|

|

1139

1145

|

chartCtx.font = 'bold 12px Arial'

|

|

1140

1146

|

chartCtx.textAlign = 'center'

|

|

1141

1147

|

chartCtx.textBaseline = 'middle'

|

|

@@ -1192,7 +1198,9 @@ export default {
 
 // Only display label if percentage > 1%
 if (parseFloat(percentage) > 1) {
-
+// Use white color in dark mode, black in light mode
+const isDarkMode = document.documentElement.classList.contains('dark')
+chartCtx.fillStyle = isDarkMode ? '#fff' : '#000'
 chartCtx.font = 'bold 12px Arial'
 chartCtx.textAlign = 'center'
 chartCtx.textBaseline = 'middle'
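
The same three added lines appear in all four Analytics.mjs label callbacks above. A minimal sketch of how that logic could live in one place, assuming hypothetical helpers `labelColor` and `drawPieLabel` (not part of llms-py) that receive the canvas context and the root element as arguments so they can run without a browser. The 1% threshold, font, alignment, and colors are copied from the diff; the `text`, `x`, and `y` parameters are assumptions.

```javascript
// Hypothetical helpers (not part of llms-py) consolidating the label code
// that the diff adds to four chart callbacks in Analytics.mjs.
function labelColor(rootEl) {
  // White text when the root element carries the `dark` class, else black.
  return rootEl.classList.contains('dark') ? '#fff' : '#000'
}

function drawPieLabel(ctx, rootEl, percentage, text, x, y) {
  // Only label slices above 1%; the negated test also skips NaN input.
  if (!(parseFloat(percentage) > 1)) return false
  ctx.fillStyle = labelColor(rootEl)
  ctx.font = 'bold 12px Arial'
  ctx.textAlign = 'center'
  ctx.textBaseline = 'middle'
  ctx.fillText(text, x, y)
  return true
}
```

In the component this would be called as `drawPieLabel(chartCtx, document.documentElement, percentage, text, x, y)`, with `text`, `x`, and `y` computed by the existing callback.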

--- llms_py-2.0.27/llms/ui/ChatPrompt.mjs
+++ llms_py-2.0.29/llms/ui/ChatPrompt.mjs
@@ -11,6 +11,7 @@ export function useChatPrompt() {
 const attachedFiles = ref([])
 const isGenerating = ref(false)
 const errorStatus = ref(null)
+const abortController = ref(null)
 const hasImage = () => attachedFiles.value.some(f => imageExts.includes(lastRightPart(f.name, '.')))
 const hasAudio = () => attachedFiles.value.some(f => audioExts.includes(lastRightPart(f.name, '.')))
 const hasFile = () => attachedFiles.value.length > 0

@@ -21,6 +22,17 @@ export function useChatPrompt() {
 isGenerating.value = false
 attachedFiles.value = []
 messageText.value = ''
+abortController.value = null
+}
+
+function cancel() {
+// Cancel the pending request
+if (abortController.value) {
+abortController.value.abort()
+}
+// Reset UI state
+isGenerating.value = false
+abortController.value = null
 }
 
 return {
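
The new `cancel()` in `useChatPrompt()` aborts whatever request is pending and then resets the UI state unconditionally. A minimal sketch of that state, assuming plain `{ value }` objects in place of Vue's `ref()` so it runs outside Vue; `createPromptState` is a hypothetical name.

```javascript
// Sketch of useChatPrompt()'s cancel state; plain { value } objects stand
// in for Vue's ref() so this runs without Vue.
function createPromptState() {
  const isGenerating = { value: false }
  const abortController = { value: null }

  function cancel() {
    // Abort the pending request, if any.
    if (abortController.value) {
      abortController.value.abort()
    }
    // Reset UI state unconditionally, so a second cancel() is a no-op.
    isGenerating.value = false
    abortController.value = null
  }

  return { isGenerating, abortController, cancel }
}
```

Because the reset runs whether or not a controller exists, calling `cancel()` twice is harmless. The component's `finally` block also clears `abortController`, so the cancel path and normal completion end in the same state.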

@@ -28,6 +40,7 @@ export function useChatPrompt() {
 attachedFiles,
 errorStatus,
 isGenerating,
+abortController,
 get generating() {
 return isGenerating.value
 },

@@ -36,6 +49,7 @@ export function useChatPrompt() {
 hasFile,
 // hasText,
 reset,
+cancel,
 }
 }
 

@@ -91,15 +105,18 @@ export default {
 ]"
 :disabled="isGenerating || !model"
 ></textarea>
-<button title="Send (Enter)" type="button"
+<button v-if="!isGenerating" title="Send (Enter)" type="button"
 @click="sendMessage"
 :disabled="!messageText.trim() || isGenerating || !model"
 class="absolute bottom-2 right-2 size-8 flex items-center justify-center rounded-md border border-gray-300 dark:border-gray-600 text-gray-600 dark:text-gray-400 hover:bg-gray-50 dark:hover:bg-gray-700 disabled:text-gray-400 disabled:cursor-not-allowed disabled:border-gray-200 dark:disabled:border-gray-700 transition-colors">
-<svg
-
-
+<svg class="size-5" xmlns="http://www.w3.org/2000/svg" width="24" height="24" viewBox="0 0 24 24"><g fill="none" stroke="currentColor" stroke-linecap="round" stroke-linejoin="round" stroke-width="2"><path stroke-dasharray="20" stroke-dashoffset="20" d="M12 21l0 -17.5"><animate fill="freeze" attributeName="stroke-dashoffset" dur="0.2s" values="20;0"/></path><path stroke-dasharray="12" stroke-dashoffset="12" d="M12 3l7 7M12 3l-7 7"><animate fill="freeze" attributeName="stroke-dashoffset" begin="0.2s" dur="0.2s" values="12;0"/></path></g></svg>
+</button>
+<button v-else title="Cancel request" type="button"
+@click="cancelRequest"
+class="absolute bottom-2 right-2 size-8 flex items-center justify-center rounded-md border border-red-300 dark:border-red-600 text-red-600 dark:text-red-400 hover:bg-red-50 dark:hover:bg-red-900/30 transition-colors">
+<svg class="size-5" xmlns="http://www.w3.org/2000/svg" viewBox="0 0 24 24" fill="none" stroke="currentColor" stroke-width="2" stroke-linecap="round" stroke-linejoin="round">
+<rect x="3" y="3" width="18" height="18" rx="2" ry="2"></rect>
 </svg>
-<svg v-else class="size-5" xmlns="http://www.w3.org/2000/svg" width="24" height="24" viewBox="0 0 24 24"><g fill="none" stroke="currentColor" stroke-linecap="round" stroke-linejoin="round" stroke-width="2"><path stroke-dasharray="20" stroke-dashoffset="20" d="M12 21l0 -17.5"><animate fill="freeze" attributeName="stroke-dashoffset" dur="0.2s" values="20;0"/></path><path stroke-dasharray="12" stroke-dashoffset="12" d="M12 3l7 7M12 3l-7 7"><animate fill="freeze" attributeName="stroke-dashoffset" begin="0.2s" dur="0.2s" values="12;0"/></path></g></svg>
 </button>
 </div>
 
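
With the new `v-if`/`v-else` pair, only one button renders at a time: Send while idle, Cancel while a request is in flight. The selection and `:disabled` logic can be summarized by a hypothetical helper `promptButton` (not in llms-py) that takes the same three inputs the template reads.

```javascript
// Hypothetical helper (not part of llms-py) mirroring the template's
// v-if/v-else pair and the Send button's :disabled binding.
function promptButton({ isGenerating, messageText, model }) {
  if (isGenerating) {
    // v-else branch: Cancel has no :disabled binding, so it is always clickable.
    return { action: 'cancelRequest', disabled: false }
  }
  // v-if="!isGenerating" branch. The template's :disabled also checks
  // isGenerating, but that term is always false when this button renders.
  return { action: 'sendMessage', disabled: !messageText.trim() || !model }
}
```

One consequence visible in the sketch: the `isGenerating` term still present in the Send button's `:disabled` binding is always false whenever that button is rendered, so it no longer affects the result.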

@@ -304,6 +321,10 @@ export default {
 }
 messageText.value = ''
 
+// Create AbortController for this request
+const controller = new AbortController()
+chatPrompt.abortController.value = controller
+
 try {
 let threadId
 
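
Each call to `sendMessage` now creates its own `AbortController` rather than reusing one. That matters because an `AbortSignal` that has been aborted stays aborted; there is no way to reset it. A minimal sketch, with `beginRequest` as a hypothetical name and a plain `{ abortController: { value } }` object standing in for `chatPrompt`.

```javascript
// Hypothetical helper: `chatPrompt` mimics the object useChatPrompt()
// returns, with a plain { value } object in place of a Vue ref.
function beginRequest(chatPrompt) {
  // Fresh controller per request: a previously aborted signal stays aborted,
  // so reusing it would make every later request fail immediately.
  const controller = new AbortController()
  chatPrompt.abortController.value = controller
  return controller
}
```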

@@ -434,11 +455,15 @@ export default {
 }))
 }
 
+chatRequest.metadata ??= {}
+chatRequest.metadata.threadId = threadId
+
 // Send to API
 console.debug('chatRequest', chatRequest)
 const startTime = Date.now()
 const response = await ai.post('/v1/chat/completions', {
-body: JSON.stringify(chatRequest)
+body: JSON.stringify(chatRequest),
+signal: controller.signal
 })
 
 let result = null
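
The `??=` operator (logical nullish assignment, ES2021) creates `metadata` only when it is `null` or `undefined`, so metadata already on the request is kept and `threadId` is added to it. A sketch of that step as a standalone function; `attachThreadId` is a hypothetical name.

```javascript
// Hypothetical helper mirroring the two added lines: ??= only assigns when
// chatRequest.metadata is null or undefined, so existing keys survive.
function attachThreadId(chatRequest, threadId) {
  chatRequest.metadata ??= {}
  chatRequest.metadata.threadId = threadId
  return chatRequest
}
```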

@@ -513,11 +538,25 @@ export default {
 attachedFiles.value = []
 // Error will be cleared when user sends next message (no auto-timeout)
 }
+} catch (error) {
+// Check if the error is due to abort
+if (error.name === 'AbortError') {
+console.log('Request was cancelled by user')
+// Don't show error for cancelled requests
+} else {
+// Re-throw other errors to be handled by outer catch
+throw error
+}
 } finally {
 isGenerating.value = false
+chatPrompt.abortController.value = null
 }
 }
 
+const cancelRequest = () => {
+chatPrompt.cancel()
+}
+
 const addNewLine = () => {
 // Enter key already adds new line
 //messageText.value += '\n'
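
The new `catch` treats an `AbortError` as a user cancellation and rethrows everything else. This assumes `ai.post()` forwards the `signal` option to `fetch()`, which rejects with a `DOMException` named `AbortError` when the controller is aborted without a reason, as `cancel()` does. A minimal sketch of that classification, with `handleSendError` as a hypothetical name; the test uses a plain `Error` whose `name` is set to stand in for the `DOMException`.

```javascript
// Hypothetical helper mirroring the catch block: cancellations are
// swallowed, every other error propagates to the caller.
function handleSendError(error) {
  if (error && error.name === 'AbortError') {
    // User pressed Cancel: log it, but show no error state.
    console.log('Request was cancelled by user')
    return 'cancelled'
  }
  throw error
}
```

In the component this corresponds to calling `handleSendError(error)` inside `catch (error)`, with the `finally` block still clearing `isGenerating` and `abortController` on both paths.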

@@ -538,6 +577,7 @@ export default {
 onDrop,
 removeAttachment,
 sendMessage,
+cancelRequest,
 addNewLine,
 }
 }