liger-kernel-nightly 0.5.2.dev20250121233718__tar.gz → 0.5.2.dev20250122004106__tar.gz


- liger_kernel_nightly-0.5.2.dev20250122004106/.github/workflows/docs.yml +28 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/Makefile +16 -3

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/PKG-INFO +1 -1

- liger_kernel_nightly-0.5.2.dev20250122004106/docs/Examples.md +268 -0

- liger_kernel_nightly-0.5.2.dev20250122004106/docs/Getting-Started.md +64 -0

- liger_kernel_nightly-0.5.2.dev20250122004106/docs/High-Level-APIs.md +30 -0

- liger_kernel_nightly-0.5.2.dev20250122004106/docs/Low-Level-APIs.md +74 -0

- liger_kernel_nightly-0.5.2.dev20250121233718/docs/Acknowledgement.md → liger_kernel_nightly-0.5.2.dev20250122004106/docs/acknowledgement.md +0 -3

- liger_kernel_nightly-0.5.2.dev20250121233718/docs/CONTRIBUTING.md → liger_kernel_nightly-0.5.2.dev20250122004106/docs/contributing.md +52 -42

- liger_kernel_nightly-0.5.2.dev20250122004106/docs/index.md +188 -0

- liger_kernel_nightly-0.5.2.dev20250122004106/mkdocs.yml +69 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/pyproject.toml +1 -1

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel_nightly.egg-info/PKG-INFO +1 -1

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel_nightly.egg-info/SOURCES.txt +10 -3

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/ISSUE_TEMPLATE/bug_report.yaml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/ISSUE_TEMPLATE/feature_request.yaml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/pull_request_template.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/workflows/amd-ci.yml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/workflows/nvi-ci.yml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/workflows/publish-nightly.yml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.github/workflows/publish-release.yml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/.gitignore +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/LICENSE +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/NOTICE +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/README.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/benchmarks_visualizer.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/data/all_benchmark_data.csv +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_cpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_dpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_embedding.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_fused_linear_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_fused_linear_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_geglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_group_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_kl_div.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_layer_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_orpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_qwen2vl_mrope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_rms_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_rope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_simpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/benchmark_swiglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/benchmark/scripts/utils.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/dev/fmt-requirements.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/dev/modal/tests.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/dev/modal/tests_bwd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/banner.GIF +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/compose.gif +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/e2e-memory.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/e2e-tps.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/logo-banner.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/patch.gif +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/docs/images/post-training.png +0 -0

- liger_kernel_nightly-0.5.2.dev20250121233718/docs/License.md → liger_kernel_nightly-0.5.2.dev20250122004106/docs/license.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/alignment/accelerate_config.yaml +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/alignment/run_orpo.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/README.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/callback.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/config/fsdp_config.json +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/gemma_7b_mem.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/gemma_7b_tp.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/llama_mem_alloc.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/llama_tps.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/qwen_mem_alloc.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/img/qwen_tps.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/launch_on_modal.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/requirements.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/run_benchmarks.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/run_gemma.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/run_llama.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/run_qwen.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/run_qwen2_vl.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/training.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/huggingface/training_multimodal.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/lightning/README.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/lightning/requirements.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/lightning/training.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/README.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/callback.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Memory_Stage1_num_head_3.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Memory_Stage1_num_head_5.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Memory_Stage2_num_head_3.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Memory_Stage2_num_head_5.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Throughput_Stage1_num_head_3.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Throughput_Stage1_num_head_5.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Throughput_Stage2_num_head_3.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/docs/images/Throughput_Stage2_num_head_5.png +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/fsdp/acc-fsdp.conf +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/medusa_util.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/requirements.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/scripts/llama3_8b_medusa.sh +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/examples/medusa/train.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/licenses/LICENSE-Apache-2.0 +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/licenses/LICENSE-MIT-AutoAWQ +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/licenses/LICENSE-MIT-Efficient-Cross-Entropy +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/licenses/LICENSE-MIT-llmc +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/licenses/LICENSE-MIT-triton +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/setup.cfg +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/setup.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/README.md +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/cpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/dpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/functional.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/fused_linear_distillation.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/fused_linear_preference.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/orpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/chunked_loss/simpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/env_report.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/experimental/embedding.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/experimental/mm_int8int2.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/fused_linear_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/fused_linear_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/geglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/group_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/kl_div.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/layer_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/qwen2vl_mrope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/rms_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/rope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/swiglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/ops/utils.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/auto_model.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/experimental/embedding.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/functional.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/fused_linear_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/fused_linear_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/geglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/group_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/kl_div.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/layer_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/gemma.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/gemma2.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/llama.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/mistral.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/mixtral.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/mllama.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/phi3.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/qwen2.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/model/qwen2_vl.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/monkey_patch.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/qwen2vl_mrope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/rms_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/rope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/swiglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/trainer/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/trainer/orpo_trainer.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/transformers/trainer_integration.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/triton/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/triton/monkey_patch.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel/utils.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel_nightly.egg-info/dependency_links.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel_nightly.egg-info/requires.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/src/liger_kernel_nightly.egg-info/top_level.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/chunked_loss/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/chunked_loss/test_cpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/chunked_loss/test_dpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/chunked_loss/test_orpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/chunked_loss/test_simpo_loss.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/conftest.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/convergence/__init__.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/convergence/test_mini_models.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/convergence/test_mini_models_multimodal.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/convergence/test_mini_models_with_logits.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/fake_configs/Qwen/Qwen2-VL-7B-Instruct/tokenizer_config.json +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/fake_configs/meta-llama/Llama-3.2-11B-Vision-Instruct/tokenizer_config.json +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/scripts/generate_tokenized_dataset.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/tiny_shakespeare.txt +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/tiny_shakespeare_tokenized/data-00000-of-00001.arrow +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/tiny_shakespeare_tokenized/dataset_info.json +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/resources/tiny_shakespeare_tokenized/state.json +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_auto_model.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_embedding.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_fused_linear_cross_entropy.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_fused_linear_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_geglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_group_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_jsd.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_kl_div.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_layer_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_mm_int8int2.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_monkey_patch.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_qwen2vl_mrope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_rms_norm.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_rope.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_swiglu.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_trainer_integration.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/transformers/test_transformers.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/triton/test_triton_monkey_patch.py +0 -0

- {liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/test/utils.py +0 -0

liger_kernel_nightly-0.5.2.dev20250122004106/.github/workflows/docs.yml +28 -0

```diff
@@ -0,0 +1,28 @@
+name: Publish documentation
+on:
+  push:
+    branches:
+      - gh-pages
+permissions:
+  contents: write
+jobs:
+  deploy:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+      - name: Configure Git Credentials
+        run: |
+          git config user.name github-actions[bot]
+          git config user.email 41898282+github-actions[bot]@users.noreply.github.com
+      - uses: actions/setup-python@v5
+        with:
+          python-version: 3.x
+      - run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV
+      - uses: actions/cache@v4
+        with:
+          key: mkdocs-material-${{ env.cache_id }}
+          path: .cache
+          restore-keys: |
+            mkdocs-material-
+      - run: pip install mkdocs-material
+      - run: mkdocs gh-deploy --force
```
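The workflow builds its MkDocs Material cache key from `date --utc '+%V'`, which is the ISO 8601 week number. The cache therefore rolls over once a week. On a miss, `restore-keys` falls back to the most recent cache whose key starts with `mkdocs-material-`. The following Python sketch reproduces that key derivation for a given date. The function name `mkdocs_cache_key` is hypothetical and is not part of the package. The sketch also shows that the key does not include the year, so a week in late December can already map to week 01.

```python
from datetime import date, datetime, timezone
from typing import Optional


def mkdocs_cache_key(today: Optional[date] = None) -> str:
    """Mirror `cache_id=$(date --utc '+%V')` combined with the `key:` template."""
    if today is None:
        # `date --utc` evaluates the current date in UTC, not local time.
        today = datetime.now(timezone.utc).date()
    # `%V` is the ISO 8601 week number, zero-padded to two digits (01-53).
    iso_week = today.isocalendar()[1]
    return f"mkdocs-material-{iso_week:02d}"


print(mkdocs_cache_key(date(2025, 1, 22)))   # mkdocs-material-04
print(mkdocs_cache_key(date(2024, 12, 30)))  # mkdocs-material-01 (ISO week 1 of 2025)
```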

{liger_kernel_nightly-0.5.2.dev20250121233718 → liger_kernel_nightly-0.5.2.dev20250122004106}/Makefile +16 -3

```diff
@@ -1,4 +1,4 @@
-.PHONY: test checkstyle test-convergence all
+.PHONY: test checkstyle test-convergence all serve build clean
 
 
 all: checkstyle test test-convergence
@@ -7,8 +7,7 @@ all: checkstyle test test-convergence
 test:
 	python -m pytest --disable-warnings test/ --ignore=test/convergence
 
-# Command to run
-# Subsequent commands still run if the previous fails, but return failure at the end
+# Command to run ruff for linting and formatting code
 checkstyle:
 	ruff check . --fix; ruff_check_status=$$?; \
 	ruff format .; ruff_format_status=$$?; \
@@ -39,3 +38,17 @@ run-benchmarks:
 			python $$script; \
 		fi; \
 	done
+
+# MkDocs Configuration
+MKDOCS = mkdocs
+CONFIG_FILE = mkdocs.yml
+
+# MkDocs targets
+serve:
+	$(MKDOCS) serve -f $(CONFIG_FILE)
+
+build:
+	$(MKDOCS) build -f $(CONFIG_FILE)
+
+clean:
+	rm -rf site/
```
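The comment removed from `checkstyle` described how the target behaves. Each command still runs if the previous one fails, and the target returns failure at the end. The hunk shows only the lines that capture `ruff_check_status` and `ruff_format_status`. Assuming the rest of the recipe exits with 1 when either status is non-zero, the pattern can be sketched in Python with `subprocess`. The helper `run_all` is hypothetical. So are the stand-in commands, which replace `ruff check . --fix` and `ruff format .` so the demo does not need ruff installed.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple


def run_all(commands: Sequence[Sequence[str]]) -> Tuple[int, List[int]]:
    """Run every command in order, even after a failure, then report overall status."""
    statuses: List[int] = []
    for cmd in commands:
        # check=False records a non-zero exit code instead of raising, so the loop
        # keeps going, like `cmd1; s1=$$?; cmd2; s2=$$?` in the Makefile recipe.
        statuses.append(subprocess.run(list(cmd), check=False).returncode)
    # Assumed behavior: exit 1 if any command failed, otherwise 0.
    overall = 1 if any(status != 0 for status in statuses) else 0
    return overall, statuses


if __name__ == "__main__":
    # Hypothetical stand-ins for the two ruff invocations.
    failing = [sys.executable, "-c", "raise SystemExit(3)"]
    passing = [sys.executable, "-c", "pass"]
    print(run_all([failing, passing]))  # (1, [3, 0]): the second command still ran
```

Collecting every status before deciding means one run reports both lint and format problems. Stopping at the first failure would hide the second.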

@@ -0,0 +1,268 @@


!!! Example "HANDS-ON USE CASE EXAMPLES"
    | **Use Case** | **Description** |
    |------------------------------------------------|---------------------------------------------------------------------------------------------------|
    | [**Hugging Face Trainer**](https://github.com/linkedin/Liger-Kernel/tree/main/examples/huggingface) | Train LLaMA 3-8B ~20% faster with over 40% memory reduction on the Alpaca dataset using 4 A100s with FSDP |
    | [**Lightning Trainer**](https://github.com/linkedin/Liger-Kernel/tree/main/examples/lightning) | Increase throughput by 15% and reduce memory usage by 40% with LLaMA3-8B on the MMLU dataset using 8 A100s with DeepSpeed ZeRO3 |
    | [**Medusa Multi-head LLM (Retraining Phase)**](https://github.com/linkedin/Liger-Kernel/tree/main/examples/medusa) | Reduce memory usage by 80% with 5 LM heads and improve throughput by 40% using 8 A100s with FSDP |
    | [**Vision-Language Model SFT**](https://github.com/linkedin/Liger-Kernel/tree/main/examples/huggingface/run_qwen2_vl.sh) | Fine-tune Qwen2-VL on image-text data using 4 A100s with FSDP |
    | [**Liger ORPO Trainer**](https://github.com/linkedin/Liger-Kernel/blob/main/examples/alignment/run_orpo.py) | Align Llama 3.2 using the Liger ORPO Trainer with FSDP, with a 50% memory reduction |


## HuggingFace Trainer

### How to Run

#### Locally on a GPU machine
You can run the example locally on a GPU machine. The default hyperparameters and configurations work on a single node with 4x A100 80GB GPUs using FSDP.

!!! Example

    ```bash
    pip install -r requirements.txt
    sh run_{MODEL}.sh
    ```

#### Remotely on Modal
If you do not have access to a GPU machine, you can run the example on Modal, a serverless platform that runs your code on a remote GPU machine. You can sign up for a free account at [Modal](https://www.modal.com/).

!!! Example

    ```bash
    pip install modal
    modal setup  # authenticate with Modal
    modal run launch_on_modal.py --script "run_qwen2_vl.sh"
    ```

!!! Notes

    1. This example uses an optional `use_liger` flag. If true, it applies the Liger kernels with a one-line monkey patch.

    2. The example uses the Llama 3 model, which requires accepting the community license agreement and logging in to the Hugging Face Hub. If you want to use Llama 3 in this example, make sure you have done the following:
        * Accept the [community license agreement](https://huggingface.co/meta-llama/Meta-Llama-3-8B).
        * Run `huggingface-cli login` and enter your Hugging Face token.

    3. The default hyperparameters and configurations work on a single node with 4x A100 80GB GPUs. To run on devices with less GPU memory, consider reducing the per-GPU batch size and/or enabling `CPUOffload` in FSDP.

|

|

45

|

+

|

|

46

|

+

|

|

47

|

+

### Benchmark Result

|

|

48

|

+

|

|

49

|

+

### Llama

|

|

50

|

+

|

|

51

|

+

!!! Info

|

|

52

|

+

>Benchmark conditions:

|

|

53

|

+

>Model= LLaMA 3-8B,Datset= Alpaca, Max seq len = 512, Data Type = bf16, Optimizer = AdamW, Gradient Checkpointing = True, Distributed Strategy = FSDP1 on 4 A100s.

|

|

54

|

+

|

|

55

|

+

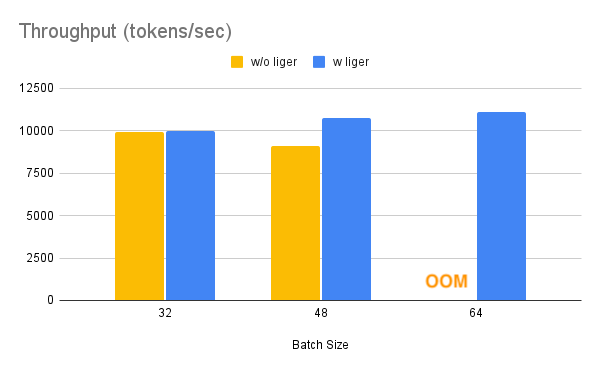

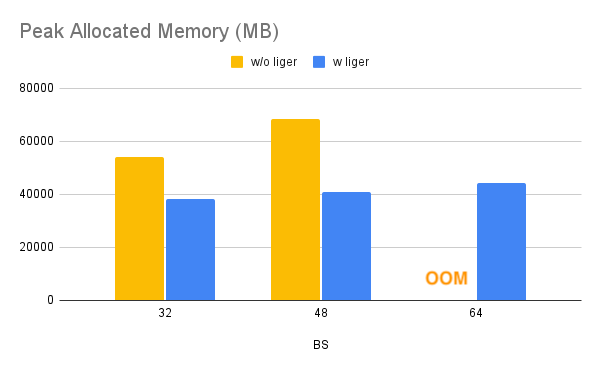

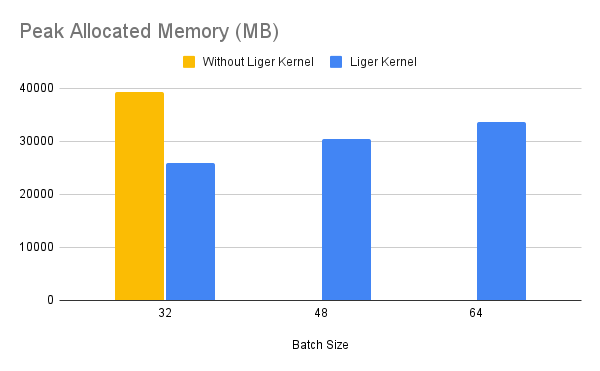

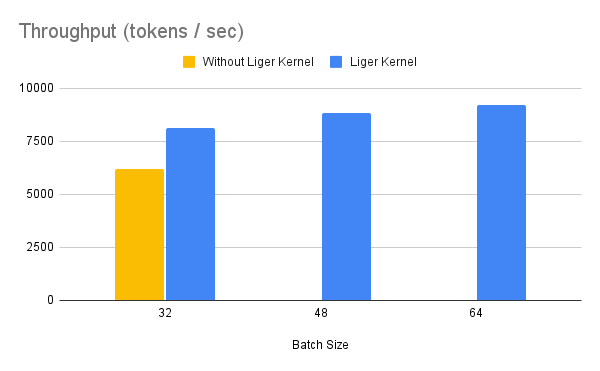

Throughput improves by around 20%, while GPU memory usage drops by 40%. This allows you to train the model on smaller GPUs, use larger batch sizes, or handle longer sequence lengths without incurring additional costs.

|

|

56

|

+

|

|

57

|

+

|

|

58

|

+

|

|

59

|

+

|

|

60

|

+

### Qwen

|

|

61

|

+

|

|

62

|

+

!!! Info

|

|

63

|

+

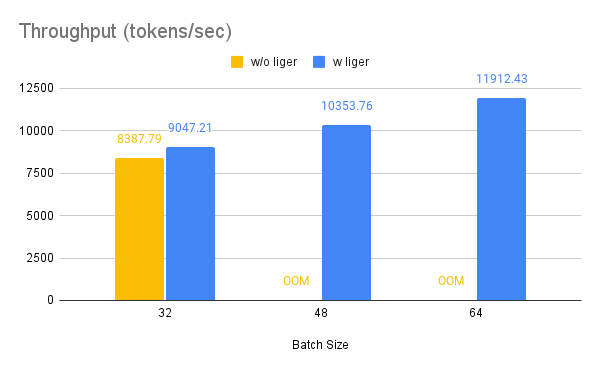

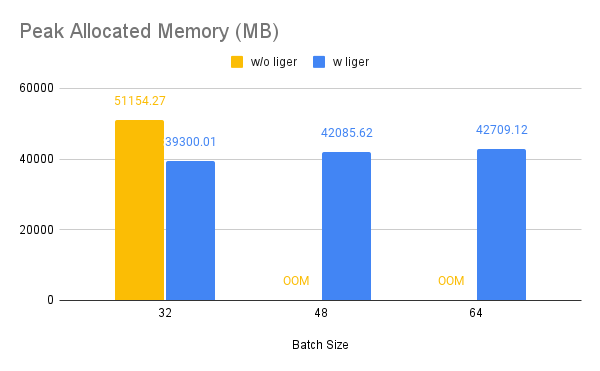

>Benchmark conditions:

|

|

64

|

+

>Model= Qwen2-7B, Dataset= Alpaca, Max seq len = 512, Data Type = bf16, Optimizer = AdamW, Gradient Checkpointing = True, Distributed Strategy = FSDP1 on 4 A100s.

|

|

65

|

+

|

|

66

|

+

Throughput improves by around 10%, while GPU memory usage drops by 50%.

|

|

67

|

+

|

|

68

|

+

|

|

69

|

+

|

|

70

|

+

|

|

71

|

+

|

|

72

|

+

### Gemma 7B

|

|

73

|

+

|

|

74

|

+

!!! Info

|

|

75

|

+

>Benchmark conditions:

|

|

76

|

+

> Model= Gemma-7B, Dataset= Alpaca, Max seq len = 512, Data Type = bf16, Optimizer = AdamW, Gradient Checkpointing = True, Distributed Strategy = FSDP1 on 4 A100s.

|

|

77

|

+

|

|

78

|

+

Throughput improves by around 24%, while GPU memory usage drops by 33%.


## Lightning Trainer

### How to Run

#### Locally on a GPU machine
You can run the example locally on a GPU machine.

!!! Example

    ```bash
    pip install -r requirements.txt

    # For a single L40 48GB GPU
    python training.py --model Qwen/Qwen2-0.5B-Instruct --num_gpu 1 --max_length 1024

    # For 8x A100 40GB
    python training.py --model meta-llama/Meta-Llama-3-8B --strategy deepspeed
    ```

!!! Notes

    1. The example uses the Llama 3 model, which requires accepting the community license agreement and logging in to the Hugging Face Hub. If you want to use Llama 3 in this example, make sure you have done the following:
        * Accept the [community license agreement](https://huggingface.co/meta-llama/Meta-Llama-3-8B).
        * Run `huggingface-cli login` and enter your Hugging Face token.

    2. The default hyperparameters and configurations for gemma work on a single L40 48GB GPU, and the configuration for Llama works on a single node with 8x A100 40GB GPUs. To run on devices with less GPU memory, consider reducing the per-GPU batch size and/or enabling `CPUOffload` in FSDP.


## Medusa

Medusa is a simple framework that democratizes acceleration techniques for LLM generation by using multiple decoding heads. To learn more, check out the [repo](https://github.com/FasterDecoding/Medusa) and the [paper](https://arxiv.org/abs/2401.10774).

The Liger fused CE kernel is highly effective in this scenario: it eliminates the need to materialize the logits for each head, which usually consume a large amount of memory because of the large vocabulary size (e.g., 128k for LLaMA 3).

The introduction of multiple heads can easily lead to OOM (out-of-memory) issues. However, because the Liger fused CE computes the gradient in place and doesn't materialize the logits, we have observed very effective results. This efficiency opens up more opportunities for multi-token prediction research and development.
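
To put a rough number on the logits cost, the sketch below computes the memory needed just to hold one full logits tensor per head. It assumes a micro-batch of 6 sequences (the batch size in the benchmark conditions below), a hypothetical sequence length of 2,048 tokens, Llama 3's vocabulary of 128,256 tokens, and bf16 logits at 2 bytes per value; none of these are read from the example scripts, and the gradient of the logits (same shape) would add a similar amount during the backward pass.

```python
def logits_bytes(batch_size: int, seq_len: int, vocab_size: int, bytes_per_value: int) -> int:
    """Bytes needed to materialize one logits tensor of shape (batch, seq, vocab)."""
    return batch_size * seq_len * vocab_size * bytes_per_value


GIB = 1024 ** 3

# Assumed settings: seq_len is hypothetical; 128_256 is Llama 3's vocabulary size.
per_head = logits_bytes(batch_size=6, seq_len=2048, vocab_size=128_256, bytes_per_value=2)

for num_heads in (1, 3, 5):
    # Each decoding head produces its own full-vocabulary logits tensor.
    total = per_head * num_heads
    print(f"{num_heads} head(s): {total / GIB:.2f} GiB of logits")
```

Under these assumptions each head's logits take roughly 3 GiB, so five heads already need on the order of 15 GiB before weights, activations, or optimizer state are counted.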


### How to Run

!!! Example

    ```bash
    git clone git@github.com:linkedin/Liger-Kernel.git
    cd {PATH_TO_Liger-Kernel}/Liger-Kernel/
    pip install -e .
    cd {PATH_TO_Liger-Kernel}/Liger-Kernel/examples/medusa
    pip install -r requirements.txt
    sh scripts/llama3_8b_medusa.sh
    ```

!!! Notes

    1. This example uses an optional `use_liger` flag. If true, it applies the Liger kernels with Medusa heads via a monkey patch.

    2. The example uses the Llama 3 model, which requires accepting the community license agreement and logging in to the Hugging Face Hub. If you want to use Llama 3 in this example, make sure you have done the following:
        * Accept the [community license agreement](https://huggingface.co/meta-llama/Meta-Llama-3-8B).
        * Run `huggingface-cli login` and enter your Hugging Face token.

    3. The default hyperparameters and configurations work on a single node with 8x A100 GPUs. To run on devices with less GPU memory, consider reducing the per-GPU batch size and/or enabling `CPUOffload` in FSDP.

    4. We use a small sample of ShareGPT data, primarily to benchmark performance. To train effectively, the example needs hyperparameter tuning and dataset selection, including making sure the dataset has the same distribution as the LLaMA pretraining data. Contributions to improve the example code are welcome.

### Benchmark Result

!!! Info
    > 1. Benchmark conditions: LLaMA 3-8B, Batch Size = 6, Data Type = bf16, Optimizer = AdamW, Gradient Checkpointing = True, Distributed Strategy = FSDP1 on 8 A100s.

#### Stage 1

Stage 1 refers to Medusa-1, where the backbone model is frozen and only the weights of the LM heads are updated.

!!! Warning
    ```bash
    # Set this flag to True in llama3_8b_medusa.sh to enable stage 1
    --medusa_only_heads True
    ```

#### num_head = 3

#### num_head = 5

#### Stage 2

!!! Warning
    ```bash
    # Set this flag to False in llama3_8b_medusa.sh to enable stage 2
    --medusa_only_heads False
    ```

Stage 2 refers to Medusa-2, where all the model weights are updated, including the backbone model and the LM heads.

#### num_head = 3

#### num_head = 5


## Vision-Language Model SFT

### How to Run

#### Locally on a GPU Machine
You can run the example locally on a GPU machine. The default hyperparameters and configurations work on a single node with 4x A100 80GB GPUs.

!!! Example
    ```bash
    #!/bin/bash

    torchrun --nnodes=1 --nproc-per-node=4 training_multimodal.py \
        --model_name "Qwen/Qwen2-VL-7B-Instruct" \
        --bf16 \
        --num_train_epochs 1 \
        --per_device_train_batch_size 8 \
        --per_device_eval_batch_size 8 \
        --eval_strategy "no" \
        --save_strategy "no" \
        --learning_rate 6e-6 \
        --weight_decay 0.05 \
        --warmup_ratio 0.1 \
        --lr_scheduler_type "cosine" \
        --logging_steps 1 \
        --include_num_input_tokens_seen \
        --report_to none \
        --fsdp "full_shard auto_wrap" \
        --fsdp_config config/fsdp_config.json \
        --seed 42 \
        --use_liger True \
        --output_dir multimodal_finetuning
    ```


## ORPO Trainer

### How to Run

#### Locally on a GPU Machine

You can run the example locally on a GPU machine with FSDP.

!!! Example
    ```py
    import torch
    from datasets import load_dataset
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from trl import ORPOConfig

    from liger_kernel.transformers.trainer import LigerORPOTrainer

    model = AutoModelForCausalLM.from_pretrained(
        "meta-llama/Llama-3.2-1B-Instruct",
        torch_dtype=torch.bfloat16,
    )

    tokenizer = AutoTokenizer.from_pretrained(
        "meta-llama/Llama-3.2-1B-Instruct",
        max_length=512,
        padding="max_length",
    )
    tokenizer.pad_token = tokenizer.eos_token

    train_dataset = load_dataset("trl-lib/tldr-preference", split="train")

    training_args = ORPOConfig(
        output_dir="Llama3.2_1B_Instruct",
        beta=0.1,
        max_length=128,
        per_device_train_batch_size=32,
        max_steps=100,
        save_strategy="no",
    )

    trainer = LigerORPOTrainer(
        model=model, args=training_args, tokenizer=tokenizer, train_dataset=train_dataset
    )

    trainer.train()
    ```


@@ -0,0 +1,64 @@

There are a couple of ways to apply Liger kernels, depending on the level of customization required.

### 1. Use AutoLigerKernelForCausalLM

Using `AutoLigerKernelForCausalLM` is the simplest approach, as you don't have to import a model-specific patching API. If the model type is supported, the modeling code will be automatically patched using the default settings.

!!! Example

    ```python
    from liger_kernel.transformers import AutoLigerKernelForCausalLM

    # This AutoModel wrapper class automatically monkey-patches the
    # model with the optimized Liger kernels if the model is supported.
    model = AutoLigerKernelForCausalLM.from_pretrained("path/to/some/model")
    ```
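
As a rough illustration of what "if the model type is supported" can mean, an Auto wrapper may look up a patching function from the checkpoint's `model_type` before loading the model. The registry, the stub patch functions, and `maybe_patch` below are hypothetical names for illustration only; this is a sketch of the dispatch idea, not Liger's actual implementation.

```python
from typing import Callable, Dict, List, Optional

applied: List[str] = []  # records which stub patch ran, for illustration


def patch_llama() -> None:
    # Stand-in for a model-specific patching API such as apply_liger_kernel_to_llama.
    applied.append("llama")


def patch_qwen2() -> None:
    applied.append("qwen2")


# Hypothetical registry keyed by the Hugging Face config's `model_type` field.
PATCH_REGISTRY: Dict[str, Callable[[], None]] = {
    "llama": patch_llama,
    "qwen2": patch_qwen2,
}


def pick_patch_function(model_type: str) -> Optional[Callable[[], None]]:
    """Return the patch function for a supported model type, or None."""
    return PATCH_REGISTRY.get(model_type)


def maybe_patch(model_type: str) -> bool:
    """Apply the patch if the model type is supported; report whether it ran."""
    patch_fn = pick_patch_function(model_type)
    if patch_fn is None:
        return False  # unsupported models are loaded without patching
    patch_fn()
    return True
```

In a real loader, the `model_type` string would typically come from the checkpoint config, for example `transformers.AutoConfig.from_pretrained(path).model_type`; in this sketch, unsupported types simply fall through to an unpatched model.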


### 2. Apply Model-Specific Patching APIs

Using the [patching APIs](https://github.com/linkedin/Liger-Kernel?tab=readme-ov-file#patching), you can swap layers of Hugging Face models for optimized Liger kernels.

!!! Example

    ```python
    import transformers
    from liger_kernel.transformers import apply_liger_kernel_to_llama

    # 1a. Adding this line automatically monkey-patches the model with the optimized Liger kernels
    apply_liger_kernel_to_llama()

    # 1b. You could alternatively specify exactly which kernels are applied
    apply_liger_kernel_to_llama(
        rope=True,
        swiglu=True,
        cross_entropy=True,
        fused_linear_cross_entropy=False,
        rms_norm=False
    )

    # 2. Instantiate the patched model
    model = transformers.AutoModelForCausalLM.from_pretrained("path/to/llama/model")
    ```
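
Monkey-patching here means replacing classes or functions on a modeling module so that models built afterwards pick up the replacements. The self-contained sketch below shows that mechanism with a stand-in module; `modeling_fake`, `SlowRMSNorm`, `FastRMSNorm`, and `apply_fast_kernels` are hypothetical names, not part of `transformers` or Liger.

```python
import types

# Hypothetical stand-in for a modeling module such as transformers' modeling_llama.
modeling_fake = types.ModuleType("modeling_fake")


class SlowRMSNorm:
    name = "reference"


class FastRMSNorm:
    name = "optimized"


modeling_fake.RMSNorm = SlowRMSNorm


def build_model():
    # Construction looks the class up on the module at call time,
    # so whatever is on the module when this runs is what gets used.
    return modeling_fake.RMSNorm()


def apply_fast_kernels(module: types.ModuleType) -> None:
    """Swap the module-level class for an optimized drop-in replacement."""
    module.RMSNorm = FastRMSNorm


before = build_model()
apply_fast_kernels(modeling_fake)
after = build_model()
```

Because the lookup happens when the model is constructed, the patching call has to run before the model is instantiated, which matches the order used in the example above.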


### 3. Compose Your Own Model

You can take individual [kernels](https://github.com/linkedin/Liger-Kernel?tab=readme-ov-file#model-kernels) to compose your models.

!!! Example

    ```python
    from liger_kernel.transformers import LigerFusedLinearCrossEntropyLoss
    import torch.nn as nn
    import torch

    model = nn.Linear(128, 256).cuda()

    # fuses linear + cross entropy layers together and performs chunk-by-chunk computation to reduce memory
    loss_fn = LigerFusedLinearCrossEntropyLoss()

    input = torch.randn(4, 128, requires_grad=True, device="cuda")
    target = torch.randint(256, (4, ), device="cuda")

    loss = loss_fn(model.weight, input, target)
    loss.backward()
    ```


@@ -0,0 +1,30 @@


### AutoModel

| **AutoModel Variant** | **API** |
|-----------|---------|
| AutoModelForCausalLM | `liger_kernel.transformers.AutoLigerKernelForCausalLM` |

This API extends the implementation of `AutoModelForCausalLM` in the Hugging Face `transformers` library.

!!! Example "Try it Out"
    You can experiment as shown in this example [here](https://github.com/linkedin/Liger-Kernel?tab=readme-ov-file#1-use-autoligerkernelforcausallm).

### Patching

You can also use the Patching APIs to apply the kernels to a specific model architecture.

!!! Example "Try it Out"
    You can experiment as shown in this example [here](https://github.com/linkedin/Liger-Kernel?tab=readme-ov-file#2-apply-model-specific-patching-apis).

| **Model** | **API** | **Supported Operations** |
|-------------|--------------------------------------------------------------|-------------------------------------------------------------------------|
| LLaMA 2 & 3 | `liger_kernel.transformers.apply_liger_kernel_to_llama` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| LLaMA 3.2-Vision | `liger_kernel.transformers.apply_liger_kernel_to_mllama` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Mistral | `liger_kernel.transformers.apply_liger_kernel_to_mistral` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Mixtral | `liger_kernel.transformers.apply_liger_kernel_to_mixtral` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Gemma1 | `liger_kernel.transformers.apply_liger_kernel_to_gemma` | RoPE, RMSNorm, GeGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Gemma2 | `liger_kernel.transformers.apply_liger_kernel_to_gemma2` | RoPE, RMSNorm, GeGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Qwen2, Qwen2.5, & QwQ | `liger_kernel.transformers.apply_liger_kernel_to_qwen2` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Qwen2-VL | `liger_kernel.transformers.apply_liger_kernel_to_qwen2_vl` | RMSNorm, LayerNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |
| Phi3 & Phi3.5 | `liger_kernel.transformers.apply_liger_kernel_to_phi3` | RoPE, RMSNorm, SwiGLU, CrossEntropyLoss, FusedLinearCrossEntropy |


@@ -0,0 +1,74 @@

## Model Kernels

| **Kernel** | **API** |
|---------------------------------|-------------------------------------------------------------|
| RMSNorm | `liger_kernel.transformers.LigerRMSNorm` |
| LayerNorm | `liger_kernel.transformers.LigerLayerNorm` |
| RoPE | `liger_kernel.transformers.liger_rotary_pos_emb` |
| SwiGLU | `liger_kernel.transformers.LigerSwiGLUMLP` |
| GeGLU | `liger_kernel.transformers.LigerGEGLUMLP` |
| CrossEntropy | `liger_kernel.transformers.LigerCrossEntropyLoss` |
| Fused Linear CrossEntropy | `liger_kernel.transformers.LigerFusedLinearCrossEntropyLoss` |

### RMS Norm

RMS Norm simplifies the LayerNorm operation by eliminating mean subtraction, which reduces computational complexity while retaining effectiveness.

This kernel performs normalization by scaling input vectors to have a unit root mean square (RMS) value. This method allows for a ~7x speed improvement and a ~3x reduction in memory footprint compared to implementations in PyTorch.

!!! Example "Try it out"
    You can experiment as shown in this example [here](https://colab.research.google.com/drive/1CQYhul7MVG5F0gmqTBbx1O1HgolPgF0M?usp=sharing).
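
For reference, the operation itself is short: each vector is divided by its root mean square and scaled by a learned weight. The plain-Python sketch below shows that formula for a single vector; it is not Liger's Triton kernel, and the `eps` default of `1e-6` is an assumption (models configure their own value).

```python
import math
from typing import List, Sequence


def rms_norm(x: Sequence[float], weight: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Reference RMSNorm for one vector: x / sqrt(mean(x^2) + eps) * weight."""
    if len(x) != len(weight):
        raise ValueError("x and weight must have the same length")
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    # Unlike LayerNorm, no mean is subtracted and there is no bias term.
    return [v * inv_rms * w for v, w in zip(x, weight)]
```

With unit weights, the output has an RMS of (almost exactly) 1, which is the "unit root mean square" property described above.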


### RoPE

RoPE (Rotary Position Embedding) encodes token positions by rotating query and key vectors, so attention scores depend on the relative distance between tokens.

The implementation handles positional information efficiently, without incurring significant computational overhead.

!!! Example "Try it out"
    You can experiment as shown in this example [here](https://colab.research.google.com/drive/1llnAdo0hc9FpxYRRnjih0l066NCp7Ylu?usp=sharing).
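
The rotation can be sketched in plain Python for a single head vector. This sketch follows the half-split channel pairing used by Hugging Face's Llama `rotate_half` helper (the original RoPE paper pairs adjacent channels instead) and assumes the common base of 10000; `apply_rope` is an illustrative name, not Liger's API or its Triton kernel.

```python
import math
from typing import List, Sequence


def apply_rope(x: Sequence[float], position: int, base: float = 10000.0) -> List[float]:
    """Rotate one head vector by its position (half-split pairing)."""
    d = len(x)
    if d % 2 != 0:
        raise ValueError("head dimension must be even")
    half = d // 2
    out = [0.0] * d
    for i in range(half):
        # Channel pair (i, i + half) is rotated by its own frequency base^(-2i/d).
        theta = position * base ** (-2.0 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[i], x[i + half]
        out[i] = a * c - b * s
        out[i + half] = b * c + a * s
    return out
```

Because each pair is rotated by an angle proportional to its position, the dot product of a rotated query at position `m` and a rotated key at position `n` depends only on `m - n`, which is what makes the encoding relative.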


### SwiGLU

### GeGLU

### CrossEntropy

This kernel is optimized for calculating the cross-entropy loss used in classification tasks.

The kernel achieves a ~3x execution speed increase and a ~5x reduction in memory usage for large vocabulary sizes compared to implementations in PyTorch.

!!! Example "Try it out"
    You can experiment as shown in this example [here](https://colab.research.google.com/drive/1WgaU_cmaxVzx8PcdKB5P9yHB6_WyGd4T?usp=sharing).
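
Per row, the loss is the log-sum-exp of the logits minus the logit of the target class. The plain-Python sketch below writes that out with the usual max subtraction for numerical stability and a mean over rows; it only shows what is computed, not how the Triton kernel is organized, and `cross_entropy`/`mean_cross_entropy` are illustrative names.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy for one row: logsumexp(logits) - logits[target]."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Average the per-row losses, as with a 'mean' reduction."""
    losses = [cross_entropy(row, t) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)
```

Each row touches every vocabulary entry, which is why memory and time grow with the vocabulary size.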


### Fused Linear CrossEntropy

This kernel combines the linear transformation (typically the model's output projection to vocabulary logits) and the cross-entropy loss calculation into a single operation.

!!! Example "Try it out"
    You can experiment as shown in this example [here](https://colab.research.google.com/drive/1Z2QtvaIiLm5MWOs7X6ZPS1MN3hcIJFbj?usp=sharing).
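
Instead of projecting all hidden states to a full `(num_tokens, vocab_size)` logits matrix and then applying cross-entropy, the fused approach works on chunks of tokens. The plain-Python sketch below shows the idea for the forward pass only; `fused_linear_cross_entropy` and `chunk_size` are illustrative names, the argument order mirrors the `loss_fn(model.weight, input, target)` call in Getting Started, and the real kernel also computes gradients chunk by chunk, which this sketch omits.

```python
import math
from typing import List, Sequence


def _row_loss(logits: Sequence[float], target: int) -> float:
    """Numerically stable cross-entropy for one row of logits."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits)) - logits[target]


def fused_linear_cross_entropy(
    weight: List[List[float]],  # (vocab_size, hidden_dim), like nn.Linear.weight
    hidden: List[List[float]],  # (num_tokens, hidden_dim)
    targets: List[int],         # (num_tokens,)
    chunk_size: int = 2,
) -> float:
    """Mean cross-entropy of hidden @ weight.T, computed chunk by chunk."""
    total = 0.0
    for start in range(0, len(hidden), chunk_size):
        chunk = hidden[start:start + chunk_size]
        # Project only this chunk to logits: shape (len(chunk), vocab_size).
        chunk_logits = [
            [sum(h_j * w_j for h_j, w_j in zip(h, w_row)) for w_row in weight]
            for h in chunk
        ]
        for row, target in zip(chunk_logits, targets[start:start + chunk_size]):
            total += _row_loss(row, target)
        # chunk_logits is rebound on the next iteration, so logits for
        # earlier chunks are not kept around.
    return total / len(hidden)
```

The result does not depend on `chunk_size`; only the peak size of the logits held at once does.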


## Alignment Kernels

| **Kernel** | **API** |
|---------------------------------|-------------------------------------------------------------|
| Fused Linear CPO Loss | `liger_kernel.chunked_loss.LigerFusedLinearCPOLoss` |
| Fused Linear DPO Loss | `liger_kernel.chunked_loss.LigerFusedLinearDPOLoss` |
| Fused Linear ORPO Loss | `liger_kernel.chunked_loss.LigerFusedLinearORPOLoss` |
| Fused Linear SimPO Loss | `liger_kernel.chunked_loss.LigerFusedLinearSimPOLoss` |

## Distillation Kernels

| **Kernel** | **API** |
|---------------------------------|-------------------------------------------------------------|
| KLDivergence | `liger_kernel.transformers.LigerKLDIVLoss` |
| JSD | `liger_kernel.transformers.LigerJSD` |
| Fused Linear JSD | `liger_kernel.transformers.LigerFusedLinearJSD` |

## Experimental Kernels

| **Kernel** | **API** |
|---------------------------------|-------------------------------------------------------------|
| Embedding | `liger_kernel.transformers.experimental.LigerEmbedding` |
| Matmul int2xint8 | `liger_kernel.transformers.experimental.matmul` |


@@ -1,10 +1,10 @@

-# Contributing to Liger-Kernel
 
-Thank you for your interest in contributing to Liger-Kernel! This guide will help you set up your development environment, add a new kernel, run tests, and submit a pull request (PR).
 
-
+Thank you for your interest in contributing to Liger-Kernel! This guide will help you set up your development environment, add a new kernel, run tests, and submit a pull request (PR).
 
-
+!!! Note
+### Maintainers
+@ByronHsu(admin) @qingquansong @yundai424 @kvignesh1420 @lancerts @JasonZhu1313 @shimizust
 
 ## Interested in the ticket?
 

@@ -12,52 +12,59 @@ Leave `#take` in the comment and tag the maintainer.

 
 ## Setting Up Your Development Environment
 
-
-
-
-
-
-
-
-
-
-
-
-
-
+!!! Note
+1. **Clone the Repository**
+```sh
+git clone https://github.com/linkedin/Liger-Kernel.git
+cd Liger-Kernel
+```
+2. **Install Dependencies and Editable Package**
+```
+pip install . -e[dev]
+```
+If encounter error `no matches found: .[dev]`, please use
+```
+pip install -e .'[dev]'
+```
 
 ## Structure
 
-
+!!! Info
+### Source Code
 
-- `ops/`: Core Triton operations.
-- `transformers/`: PyTorch `nn.Module` implementations built on Triton operations, compliant with the `transformers` API.
+- `ops/`: Core Triton operations.
+- `transformers/`: PyTorch `nn.Module` implementations built on Triton operations, compliant with the `transformers` API.
 
-### Tests
+### Tests
 
-- `transformers/`: Correctness tests for the Triton-based layers.
-- `convergence/`: Patches Hugging Face models with all kernels, runs multiple iterations, and compares weights, logits, and loss layer-by-layer.
+- `transformers/`: Correctness tests for the Triton-based layers.
+- `convergence/`: Patches Hugging Face models with all kernels, runs multiple iterations, and compares weights, logits, and loss layer-by-layer.
 
-### Benchmark
+### Benchmark
 
-- `benchmark/`: Execution time and memory benchmarks compared to Hugging Face layers.
+- `benchmark/`: Execution time and memory benchmarks compared to Hugging Face layers.
 
 ## Adding support for a new model
-To get familiar with the folder structure, please refer
+To get familiar with the folder structure, please refer [here](https://github.com/linkedin/Liger-Kernel?tab=readme-ov-file#structure.).
+
+#### 1 Figure out the kernels that can be monkey-patched
+
+a) Check the `src/liger_kernel/ops` directory to find the kernels that can be monkey-patched.
 
-
-- Check the `src/liger_kernel/ops` directory to find the kernels that can be monkey-patched.
-- Kernels like Fused Linear Cross Entropy require a custom lce_forward function to allow monkey-patching. For adding kernels requiring a similar approach, ensure that you create the corresponding forward function in the `src/liger_kernel/transformers/model` directory.
+b) Kernels like Fused Linear Cross Entropy require a custom lce_forward function to allow monkey-patching. For adding kernels requiring a similar approach, ensure that you create the corresponding forward function in the `src/liger_kernel/transformers/model` directory.
 
-2

|

|

53

|

-