fastquadtree 0.5.0__tar.gz → 0.5.1__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/.pre-commit-config.yaml +11 -9

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/Cargo.lock +1 -1

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/Cargo.toml +1 -1

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/PKG-INFO +10 -2

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/README.md +2 -1

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/benchmark_native_vs_shim.py +25 -6

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/quadtree_bench/__init__.py +2 -2

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/quadtree_bench/engines.py +21 -8

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/quadtree_bench/main.py +1 -1

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/quadtree_bench/plotting.py +24 -24

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/quadtree_bench/runner.py +82 -12

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/runner.py +0 -7

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/interactive/interactive.py +12 -6

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/interactive/interactive_v2.py +123 -95

- fastquadtree-0.5.1/pyproject.toml +130 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/pysrc/fastquadtree/__init__.py +42 -45

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/pysrc/fastquadtree/__init__.pyi +25 -21

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/pysrc/fastquadtree/_bimap.py +12 -11

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/pysrc/fastquadtree/_item.py +5 -4

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_bimap.py +7 -6

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_delete_by_object.py +1 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_delete_python.py +0 -1

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_python.py +7 -4

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_unconventional_bounds.py +4 -2

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_wrapper_edges.py +14 -15

- fastquadtree-0.5.0/pyproject.toml +0 -56

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/.github/workflows/release.yml +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/.gitignore +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/LICENSE +0 -0

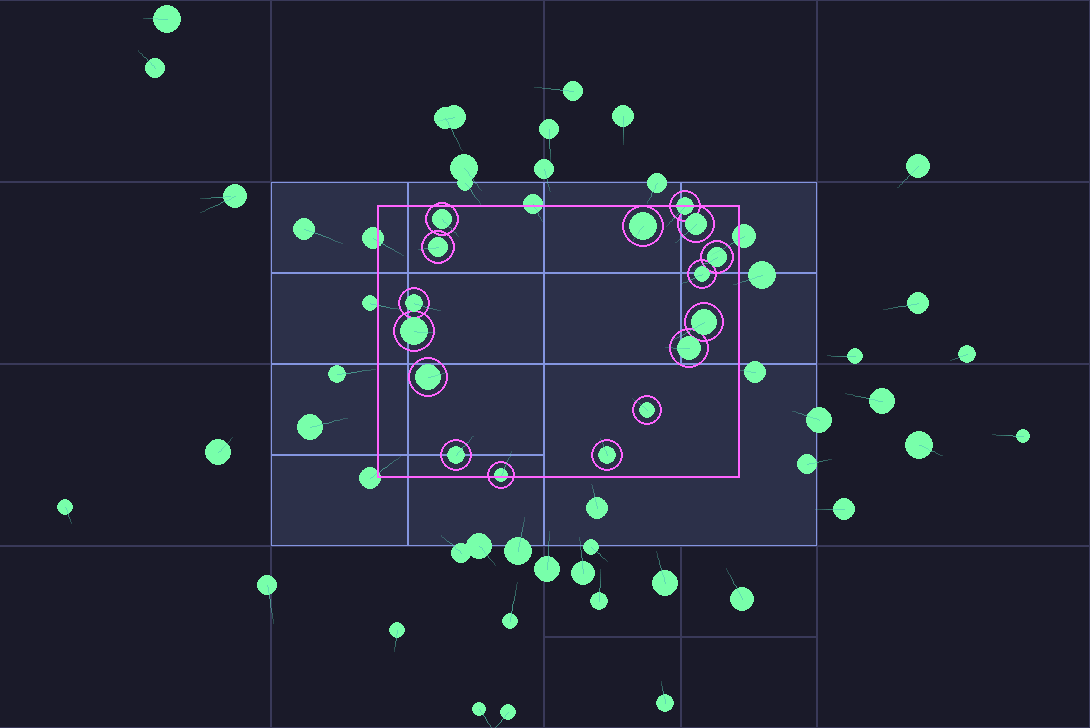

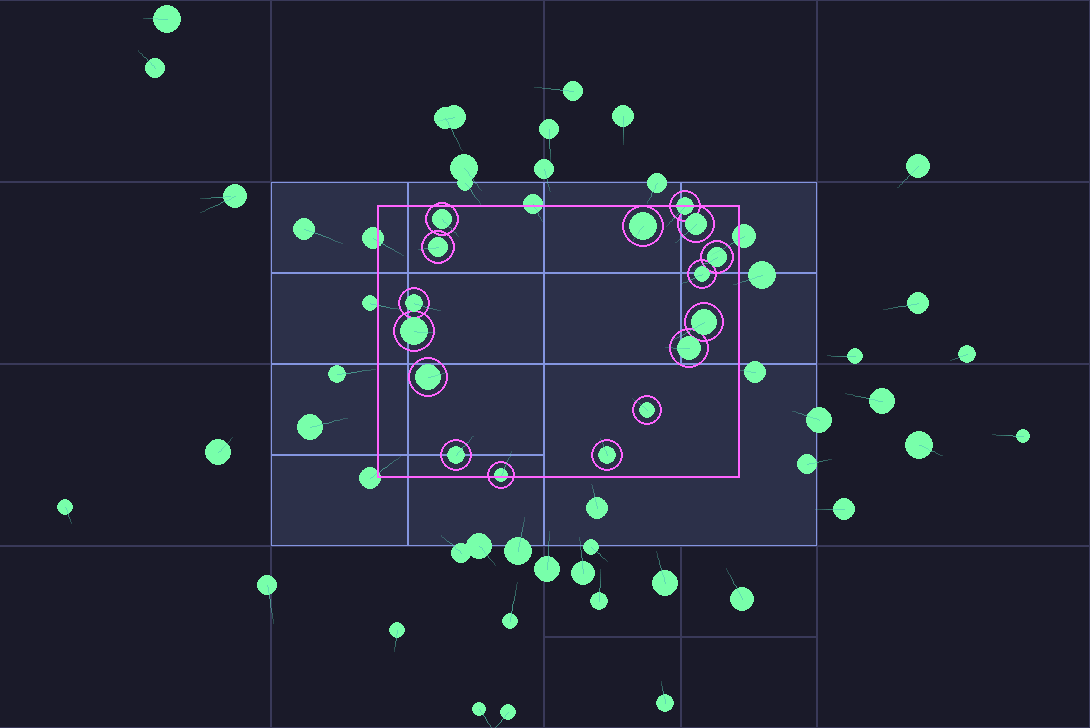

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/assets/interactive_v2_screenshot.png +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/assets/quadtree_bench_throughput.png +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/assets/quadtree_bench_time.png +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/cross_library_bench.py +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/benchmarks/requirements.txt +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/interactive/requirements.txt +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/pysrc/fastquadtree/py.typed +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/src/geom.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/src/lib.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/src/quadtree.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/insertions.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/nearest_neighbor.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/query.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/rectangle_traversal.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/test_delete.rs +0 -0

- {fastquadtree-0.5.0 → fastquadtree-0.5.1}/tests/unconventional_bounds.rs +0 -0

@@ -1,12 +1,15 @@
 repos:
-  # 0) Python formatter:
-  - repo: https://github.com/
-    rev:
+  # 0) Python linter and formatter: Ruff
+  - repo: https://github.com/astral-sh/ruff-pre-commit
+    rev: v0.6.9
     hooks:
-
-
-
-        args: ["--
+      # Lint + autofix. If Ruff fixes something, the hook exits nonzero to force a re-run.
+      - id: ruff
+        name: ruff (lint + fix)
+        args: ["--fix", "--exit-non-zero-on-fix"]
+      # Code formatter (Black replacement)
+      - id: ruff-format
+        name: ruff (format)
 
   # Local hooks that run in sequence and do not receive file args
   - repo: local
@@ -28,10 +31,9 @@ repos:
         always_run: true
 
       # 3) Python tests under coverage
-      # This both runs pytest and generates .coverage data
       - id: pytest
         name: pytest (under coverage)
         entry: pytest
         language: system
         pass_filenames: false
-        always_run: true
+        always_run: true

@@ -1,6 +1,6 @@
 Metadata-Version: 2.4
 Name: fastquadtree
-Version: 0.5.
+Version: 0.5.1
 Classifier: Programming Language :: Python :: 3
 Classifier: Programming Language :: Python :: 3 :: Only
 Classifier: Programming Language :: Rust
@@ -11,6 +11,13 @@ Classifier: Topic :: Scientific/Engineering :: Information Analysis
 Classifier: Topic :: Software Development :: Libraries
 Classifier: Typing :: Typed
 Classifier: License :: OSI Approved :: MIT License
+Requires-Dist: ruff>=0.6.0 ; extra == 'dev'
+Requires-Dist: pytest>=7.0 ; extra == 'dev'
+Requires-Dist: pytest-cov>=4.1 ; extra == 'dev'
+Requires-Dist: coverage>=7.5 ; extra == 'dev'
+Requires-Dist: mypy>=1.10 ; extra == 'dev'
+Requires-Dist: build>=1.2.1 ; extra == 'dev'
+Provides-Extra: dev
 License-File: LICENSE
 Summary: Rust-accelerated quadtree for Python with fast inserts, range queries, and k-NN search.
 Keywords: quadtree,spatial-index,geometry,rust,pyo3,nearest-neighbor,k-nn

@@ -36,7 +43,8 @@ Project-URL: Issues, https://github.com/Elan456/fastquadtree/issues

|

|

|

36

43

|

|

|

37

44

|

[](https://pyo3.rs/)

|

|

38

45

|

[](https://www.maturin.rs/)

|

|

39

|

-

[](https://docs.astral.sh/ruff/)

|

|

47

|

+

|

|

40

48

|

|

|

41

49

|

|

|

42

50

|

|

|

@@ -13,7 +13,8 @@

|

|

|

13

13

|

|

|

14

14

|

[](https://pyo3.rs/)

|

|

15

15

|

[](https://www.maturin.rs/)

|

|

16

|

-

[](https://docs.astral.sh/ruff/)

|

|

17

|

+

|

|

17

18

|

|

|

18

19

|

|

|

19

20

|

|

|

@@ -6,11 +6,11 @@ import gc
 import random
 import statistics as stats
 from time import perf_counter as now
+
 from tqdm import tqdm
 
-from fastquadtree._native import QuadTree as NativeQuadTree
 from fastquadtree import QuadTree as ShimQuadTree
-
+from fastquadtree._native import QuadTree as NativeQuadTree
 
 BOUNDS = (0.0, 0.0, 1000.0, 1000.0)
 CAPACITY = 20

@@ -68,9 +68,10 @@ def bench_shim(points, queries, *, track_objects: bool, with_objs: bool):
     return t_build, t_query
 
 
-def median_times(fn, points, queries, repeats: int):
+def median_times(fn, points, queries, repeats: int, desc: str = "Running"):
+    """Run benchmark multiple times and return median times."""
     builds, queries_t = [], []
-    for _ in tqdm(range(repeats)):
+    for _ in tqdm(range(repeats), desc=desc, unit="run"):
         gc.disable()
         b, q = fn(points, queries)
         gc.enable()
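The hunk above shows only the top of `median_times`. The pattern it points to is: call a benchmark function several times with the garbage collector paused, then take the median. Below is a minimal sketch of that pattern, assuming each run returns a `(build, query)` pair and that the function ends by returning the two medians (the tail is not visible in the diff). The `tqdm` progress bar is left out so the sketch needs only the standard library, and `toy_bench` is a hypothetical stand-in for `bench_native` / `bench_shim`.

```python
import gc
import statistics
from time import perf_counter


def median_times(fn, points, queries, repeats):
    """Call fn(points, queries) `repeats` times; return (median build, median query)."""
    builds, query_times = [], []
    for _ in range(repeats):
        gc.disable()  # keep the collector from firing inside the timed region
        try:
            b, q = fn(points, queries)
        finally:
            gc.enable()  # re-enable even if the benchmark raises
        builds.append(b)
        query_times.append(q)
    return statistics.median(builds), statistics.median(query_times)


def toy_bench(points, queries):
    # Hypothetical benchmark: times a list build and a linear rectangle scan.
    t0 = perf_counter()
    stored = list(points)
    t_build = perf_counter() - t0
    t0 = perf_counter()
    for xmin, ymin, xmax, ymax in queries:
        _ = [p for p in stored if xmin <= p[0] <= xmax and ymin <= p[1] <= ymax]
    t_query = perf_counter() - t0
    return t_build, t_query
```

The `try`/`finally` is an addition of this sketch, not something the diff shows; it keeps a failing run from leaving the collector disabled.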

@@ -86,29 +87,47 @@ def main():
     ap.add_argument("--repeats", type=int, default=5)
     args = ap.parse_args()
 
+    print("Native vs Shim Benchmark")
+    print("=" * 50)
+    print("Configuration:")
+    print(f" Points: {args.points:,}")
+    print(f" Queries: {args.queries}")
+    print(f" Repeats: {args.repeats}")
+    print()
+
     rng = random.Random(SEED)
     points = gen_points(args.points, rng)
     queries = gen_queries(args.queries, rng)
 
     # Warmup to load modules
+    print("Warming up...")
     _ = bench_native(points[:1000], queries[:50])
     _ = bench_shim(points[:1000], queries[:50], track_objects=False, with_objs=False)
+    print()
 
+    print("Running benchmarks...")
     n_build, n_query = median_times(
-        lambda pts, qs: bench_native(pts, qs),
+        lambda pts, qs: bench_native(pts, qs),
+        points,
+        queries,
+        args.repeats,
+        desc="Native",
     )
     s_build_no_map, s_query_no_map = median_times(
         lambda pts, qs: bench_shim(pts, qs, track_objects=False, with_objs=False),
         points,
         queries,
         args.repeats,
+        desc="Shim (no map)",
     )
     s_build_map, s_query_map = median_times(
         lambda pts, qs: bench_shim(pts, qs, track_objects=True, with_objs=True),
         points,
         queries,
         args.repeats,
+        desc="Shim (track+objs)",
     )
+    print()
 
     def fmt(x):
         return f"{x:.3f}"

@@ -131,7 +150,7 @@ def main():
 
 **Overhead vs Native**
 
-- No map: build {s_build_no_map / n_build:.2f}x, query {s_query_no_map / n_query:.2f}x, total {(s_build_no_map + s_query_no_map) / (n_build + n_query):.2f}x
+- No map: build {s_build_no_map / n_build:.2f}x, query {s_query_no_map / n_query:.2f}x, total {(s_build_no_map + s_query_no_map) / (n_build + n_query):.2f}x
 - Track + objs: build {s_build_map / n_build:.2f}x, query {s_query_map / n_query:.2f}x, total {(s_build_map + s_query_map) / (n_build + n_query):.2f}x
 """
     print(md.strip())
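The overhead lines in this hunk divide the shim's median times by the native ones. Note that the "total" figure is a ratio of sums, not the average of the build and query ratios. A small sketch of that arithmetic follows; the function name `overhead` and the sample timings are made up for illustration and do not come from the package.

```python
def overhead(shim_build, shim_query, native_build, native_query):
    """Return shim/native slowdown factors for build, query, and combined time."""
    return {
        "build": shim_build / native_build,
        "query": shim_query / native_query,
        # Ratio of summed times, so the slower phase dominates the total.
        "total": (shim_build + shim_query) / (native_build + native_query),
    }


# Hypothetical medians in seconds.
ratios = overhead(shim_build=2.0, shim_query=3.0, native_build=1.0, native_query=1.0)
line = (
    f"- No map: build {ratios['build']:.2f}x, "
    f"query {ratios['query']:.2f}x, total {ratios['total']:.2f}x"
)
```

With these sample numbers the build ratio is 2.0 and the query ratio is 3.0, while the total comes out at 2.5 because both phases take equal native time.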

@@ -6,8 +6,8 @@ implementations, including performance comparison, visualization, and analysis.
 """
 
 from .engines import Engine, get_engines
-from .runner import BenchmarkRunner, BenchmarkConfig
 from .plotting import PlotManager
+from .runner import BenchmarkConfig, BenchmarkRunner
 
 __version__ = "1.0.0"
-__all__ = ["
+__all__ = ["BenchmarkConfig", "BenchmarkRunner", "Engine", "PlotManager", "get_engines"]

@@ -7,9 +7,11 @@ allowing fair comparison of their performance characteristics.

|

|

|

7

7

|

|

|

8

8

|

from typing import Any, Callable, Dict, List, Optional, Tuple

|

|

9

9

|

|

|

10

|

+

from pyqtree import Index as PyQTree # Pyqtree

|

|

11

|

+

|

|

10

12

|

# Built-in engines (always available in this repo)

|

|

11

13

|

from pyquadtree.quadtree import QuadTree as EPyQuadTree # e-pyquadtree

|

|

12

|

-

|

|

14

|

+

|

|

13

15

|

from fastquadtree import QuadTree as RustQuadTree # fastquadtree

|

|

14

16

|

|

|

15

17

|

|

|

@@ -67,7 +69,10 @@ def _create_e_pyquadtree_engine(

|

|

|

67

69

|

_ = qt.query(q)

|

|

68

70

|

|

|

69

71

|

return Engine(

|

|

70

|

-

"e-pyquadtree",

|

|

72

|

+

"e-pyquadtree",

|

|

73

|

+

"#1f77b4",

|

|

74

|

+

build,

|

|

75

|

+

query, # display name # color (blue)

|

|

71

76

|

)

|

|

72

77

|

|

|

73

78

|

|

|

@@ -105,7 +110,10 @@ def _create_fastquadtree_engine(

|

|

|

105

110

|

_ = qt.query(q)

|

|

106

111

|

|

|

107

112

|

return Engine(

|

|

108

|

-

"fastquadtree",

|

|

113

|

+

"fastquadtree",

|

|

114

|

+

"#ff7f0e",

|

|

115

|

+

build,

|

|

116

|

+

query, # display name # color (orange)

|

|

109

117

|

)

|

|

110

118

|

|

|

111

119

|

|

|

@@ -165,7 +173,10 @@ def _create_nontree_engine(

|

|

|

165

173

|

_ = tm.get_rect((xmin, ymin, xmax - xmin, ymax - ymin))

|

|

166

174

|

|

|

167

175

|

return Engine(

|

|

168

|

-

"nontree-QuadTree",

|

|

176

|

+

"nontree-QuadTree",

|

|

177

|

+

"#17becf",

|

|

178

|

+

build,

|

|

179

|

+

query, # display name # color (cyan)

|

|

169

180

|

)

|

|

170

181

|

|

|

171

182

|

|

|

@@ -178,7 +189,7 @@ def _create_brute_force_engine(

|

|

|

178

189

|

# Append each item as if they were being added separately

|

|

179

190

|

out = []

|

|

180

191

|

for p in points:

|

|

181

|

-

out.append(p)

|

|

192

|

+

out.append(p) # noqa: PERF402

|

|

182

193

|

return out

|

|

183

194

|

|

|

184

195

|

def query(points, queries):

|

|

@@ -187,7 +198,10 @@ def _create_brute_force_engine(

|

|

|

187

198

|

_ = [p for p in points if q[0] <= p[0] <= q[2] and q[1] <= p[1] <= q[3]]

|

|

188

199

|

|

|

189

200

|

return Engine(

|

|

190

|

-

"Brute force",

|

|

201

|

+

"Brute force",

|

|

202

|

+

"#9467bd",

|

|

203

|

+

build,

|

|

204

|

+

query, # display name # color (purple)

|

|

191

205

|

)

|

|

192

206

|

|

|

193

207

|

|

|
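The brute-force engine in these two hunks serves as the baseline: its "build" copies the points into a list, and each query is a linear scan with an inclusive rectangle test. A self-contained sketch of that query, assuming rectangles are `(xmin, ymin, xmax, ymax)` tuples as the comparison in the hunk suggests (the function name is illustrative):

```python
def brute_force_query(points, rect):
    """Return every point inside rect, boundaries included, via a linear scan."""
    xmin, ymin, xmax, ymax = rect
    return [p for p in points if xmin <= p[0] <= xmax and ymin <= p[1] <= ymax]


# Hypothetical sample data.
pts = [(1, 1), (5, 5), (10, 10)]
hits = brute_force_query(pts, (0, 0, 5, 5))
```

Every query costs O(n) in the number of points, which is the cost the tree-based engines in the benchmark are measured against.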

@@ -213,8 +227,7 @@ def _create_rtree_engine(

|

|

|

213

227

|

# Bulk stream loading is the fastest way to build

|

|

214

228

|

# Keep the same 1x1 bbox convention used elsewhere for fairness

|

|

215

229

|

stream = ((i, (x, y, x + 1, y + 1), None) for i, (x, y) in enumerate(points))

|

|

216

|

-

|

|

217

|

-

return idx

|

|

230

|

+

return rindex.Index(stream, properties=p)

|

|

218

231

|

|

|

219

232

|

def query(idx, queries):

|

|

220

233

|

# Do not materialize results into a list, just consume the generator

|

|

@@ -5,7 +5,7 @@ This module handles creation of performance charts, graphs, and visualizations

|

|

|

5

5

|

for benchmark results analysis.

|

|

6

6

|

"""

|

|

7

7

|

|

|

8

|

-

from typing import

|

|

8

|

+

from typing import Any, Dict, Tuple

|

|

9

9

|

|

|

10

10

|

import plotly.graph_objects as go

|

|

11

11

|

from plotly.subplots import make_subplots

|

|

@@ -43,7 +43,7 @@ class PlotManager:

|

|

|

43

43

|

name=name,

|

|

44

44

|

legendgroup=name,

|

|

45

45

|

showlegend=show_legend,

|

|

46

|

-

line=

|

|

46

|

+

line={"color": color, "width": 3},

|

|

47

47

|

),

|

|

48

48

|

row=1,

|

|

49

49

|

col=col,

|

|

@@ -69,15 +69,15 @@ class PlotManager:

|

|

|

69

69

|

f"{self.config.n_queries} queries)"

|

|

70

70

|

),

|

|

71

71

|

template="plotly_dark",

|

|

72

|

-

legend=

|

|

73

|

-

orientation

|

|

74

|

-

traceorder

|

|

75

|

-

xanchor

|

|

76

|

-

x

|

|

77

|

-

yanchor

|

|

78

|

-

y

|

|

79

|

-

|

|

80

|

-

margin=

|

|

72

|

+

legend={

|

|

73

|

+

"orientation": "v",

|

|

74

|

+

"traceorder": "normal",

|

|

75

|

+

"xanchor": "left",

|

|

76

|

+

"x": 0,

|

|

77

|

+

"yanchor": "top",

|

|

78

|

+

"y": 1,

|

|

79

|

+

},

|

|

80

|

+

margin={"l": 40, "r": 20, "t": 80, "b": 40},

|

|

81

81

|

height=520,

|

|

82

82

|

)

|

|

83

83

|

|

|

@@ -108,7 +108,7 @@ class PlotManager:

|

|

|

108

108

|

name=name,

|

|

109

109

|

legendgroup=name,

|

|

110

110

|

showlegend=False,

|

|

111

|

-

line=

|

|

111

|

+

line={"color": color, "width": 3},

|

|

112

112

|

),

|

|

113

113

|

row=1,

|

|

114

114

|

col=1,

|

|

@@ -122,7 +122,7 @@ class PlotManager:

|

|

|

122

122

|

name=name,

|

|

123

123

|

legendgroup=name,

|

|

124

124

|

showlegend=True,

|

|

125

|

-

line=

|

|

125

|

+

line={"color": color, "width": 3},

|

|

126

126

|

),

|

|

127

127

|

row=1,

|

|

128

128

|

col=2,

|

|

@@ -138,14 +138,14 @@ class PlotManager:

|

|

|

138

138

|

fig.update_layout(

|

|

139

139

|

title="Throughput",

|

|

140

140

|

template="plotly_dark",

|

|

141

|

-

legend=

|

|

142

|

-

orientation

|

|

143

|

-

x

|

|

144

|

-

xanchor

|

|

145

|

-

y

|

|

146

|

-

yanchor

|

|

147

|

-

|

|

148

|

-

margin=

|

|

141

|

+

legend={

|

|

142

|

+

"orientation": "h",

|

|

143

|

+

"x": 0,

|

|

144

|

+

"xanchor": "left",

|

|

145

|

+

"y": 1.08, # above the subplots

|

|

146

|

+

"yanchor": "bottom",

|

|

147

|

+

},

|

|

148

|

+

margin={"l": 60, "r": 40, "t": 120, "b": 40},

|

|

149

149

|

height=480,

|

|

150

150

|

)

|

|

151

151

|

|

|

@@ -187,7 +187,7 @@ class PlotManager:

|

|

|

187

187

|

height=480,

|

|

188

188

|

)

|

|

189

189

|

print(f"Saved PNG images to {output_dir}/ with prefix '{output_prefix}'")

|

|

190

|

-

except Exception as e:

|

|

190

|

+

except Exception as e: # noqa: BLE001

|

|

191

191

|

print(

|

|

192

192

|

f"Failed to save PNG images. Install kaleido to enable PNG export: {e}"

|

|

193

193

|

)

|

|

@@ -227,7 +227,7 @@ class PlotManager:

|

|

|

227

227

|

x=config.experiments,

|

|

228

228

|

y=values,

|

|

229

229

|

name=f"{label} - {engine_name}",

|

|

230

|

-

line=

|

|

230

|

+

line={"color": color, "width": 3},

|

|

231

231

|

mode="lines+markers",

|

|

232

232

|

)

|

|

233

233

|

)

|

|

@@ -237,7 +237,7 @@ class PlotManager:

|

|

|

237

237

|

xaxis_title="Number of points",

|

|

238

238

|

yaxis_title="Time (s)" if "rate" not in metric else "Ops/sec",

|

|

239

239

|

template="plotly_dark",

|

|

240

|

-

legend=

|

|

240

|

+

legend={"orientation": "v"},

|

|

241

241

|

height=600,

|

|

242

242

|

)

|

|

243

243

|

|

|

@@ -11,7 +11,7 @@ import random
 import statistics as stats
 from dataclasses import dataclass
 from time import perf_counter as now
-from typing import Dict, List, Tuple
+from typing import Any, Dict, List, Tuple
 
 from tqdm import tqdm
 

@@ -35,8 +35,7 @@ class BenchmarkConfig:

|

|

|

35

35

|

self.experiments = [2, 4, 8, 16]

|

|

36

36

|

while self.experiments[-1] < self.max_experiment_points:

|

|

37

37

|

self.experiments.append(int(self.experiments[-1] * 2))

|

|

38

|

-

|

|

39

|

-

self.experiments[-1] = self.max_experiment_points

|

|

38

|

+

self.experiments[-1] = min(self.experiments[-1], self.max_experiment_points)

|

|

40

39

|

|

|

41

40

|

|

|

42

41

|

class BenchmarkRunner:

|

|
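This `BenchmarkConfig` hunk replaces an assignment of `max_experiment_points` to the last schedule entry with a `min(...)` clamp. The first removed line is blank in this rendering, so whether the old assignment was guarded by a condition cannot be told from the diff. A standalone sketch of the resulting schedule, written as a free function for illustration (the function name and the `start` parameter are assumptions, not part of the package):

```python
def build_experiments(max_points, start=(2, 4, 8, 16)):
    """Double the point count until it reaches max_points, then clamp the last entry."""
    experiments = list(start)
    while experiments[-1] < max_points:
        experiments.append(experiments[-1] * 2)
    # The doubling may overshoot; cap the final experiment at the requested maximum.
    experiments[-1] = min(experiments[-1], max_points)
    return experiments
```

For example, `build_experiments(100)` doubles up to 128 and then clamps that last entry to 100, while `build_experiments(64)` stops exactly at 64 and is left unchanged by the clamp.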

@@ -98,6 +97,44 @@ class BenchmarkRunner:
         cleaned = [x for x in vals if isinstance(x, (int, float)) and not math.isnan(x)]
         return stats.median(cleaned) if cleaned else math.nan
 
+    def _print_experiment_summary(
+        self, n: int, results: Dict[str, Any], exp_idx: int
+    ) -> None:
+        """Print a summary of results for the current experiment."""
+
+        def fmt(x):
+            return f"{x:.3f}" if not math.isnan(x) else "nan"
+
+        # Get the results for this experiment (last index)
+        total = results["total"]
+        build = results["build"]
+        query = results["query"]
+
+        # Find the fastest engine for this experiment
+        valid_engines = [
+            (name, total[name][exp_idx])
+            for name in total
+            if not math.isnan(total[name][exp_idx])
+        ]
+
+        if not valid_engines:
+            return
+
+        fastest = min(valid_engines, key=lambda x: x[1])
+
+        print(f"\n 📊 Results for {n:,} points:")
+        print(f" Fastest: {fastest[0]} ({fmt(fastest[1])}s total)")
+
+        # Show top 3 performers
+        sorted_engines = sorted(valid_engines, key=lambda x: x[1])[:3]
+        for rank, (name, time) in enumerate(sorted_engines, 1):
+            b = build[name][exp_idx]
+            q = query[name][exp_idx]
+            print(
+                f" {rank}. {name:15} build={fmt(b)}s, query={fmt(q)}s, total={fmt(time)}s"
+            )
+        print()
+
     def run_benchmark(self, engines: Dict[str, Engine]) -> Dict[str, Any]:
         """
         Run complete benchmark suite.
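The ranking step in `_print_experiment_summary` comes down to two operations: drop engines whose total for this experiment is NaN, then sort the rest by time. The sketch below isolates that logic in a free function. The name `rank_engines` and the sample timings are hypothetical; the results layout (engine name mapped to one total per experiment) follows the hunk.

```python
import math


def rank_engines(total, exp_idx, top=3):
    """Return up to `top` (name, seconds) pairs, fastest first, skipping NaN entries."""
    valid = [
        (name, times[exp_idx])
        for name, times in total.items()
        if not math.isnan(times[exp_idx])
    ]
    return sorted(valid, key=lambda pair: pair[1])[:top]


# Hypothetical timings: one entry per experiment, NaN marks a failed run.
total = {
    "fastquadtree": [0.010, 0.020],
    "Brute force": [0.500, math.nan],
    "e-pyquadtree": [0.100, 0.300],
}
ranked = rank_engines(total, exp_idx=1)
```

Filtering before sorting matters because comparisons against NaN are always false, so leaving NaN values in the list would make the sort order unreliable.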

@@ -109,13 +146,11 @@ class BenchmarkRunner:

|

|

|

109

146

|

Dictionary containing benchmark results

|

|

110

147

|

"""

|

|

111

148

|

# Warmup on a small set to JIT caches, etc.

|

|

149

|

+

print("Warming up engines...")

|

|

112

150

|

warmup_points = self.generate_points(2_000)

|

|

113

151

|

warmup_queries = self.generate_queries(self.config.n_queries)

|

|

114

152

|

for engine in engines.values():

|

|

115

|

-

|

|

116

|

-

self.benchmark_engine_once(engine, warmup_points, warmup_queries)

|

|

117

|

-

except Exception:

|

|

118

|

-

pass # Ignore warmup failures

|

|

153

|

+

self.benchmark_engine_once(engine, warmup_points, warmup_queries)

|

|

119

154

|

|

|

120

155

|

# Initialize result containers

|

|

121

156

|

results = {

|

|

@@ -127,9 +162,18 @@ class BenchmarkRunner:

|

|

|

127

162

|

}

|

|

128

163

|

|

|

129

164

|

# Run experiments

|

|

130

|

-

|

|

131

|

-

|

|

132

|

-

|

|

165

|

+

print(

|

|

166

|

+

f"\nRunning {len(self.config.experiments)} experiments with {len(engines)} engines..."

|

|

167

|

+

)

|

|

168

|

+

experiment_bar = tqdm(

|

|

169

|

+

self.config.experiments, desc="Experiments", unit="exp", position=0

|

|

170

|

+

)

|

|

171

|

+

|

|

172

|

+

for exp_idx, n in enumerate(experiment_bar):

|

|

173

|

+

experiment_bar.set_description(

|

|

174

|

+

f"Experiment {exp_idx + 1}/{len(self.config.experiments)}"

|

|

175

|

+

)

|

|

176

|

+

experiment_bar.set_postfix({"points": f"{n:,}"})

|

|

133

177

|

|

|

134

178

|

# Generate data for this experiment

|

|

135

179

|

exp_rng = random.Random(10_000 + n)

|

|

@@ -139,24 +183,45 @@ class BenchmarkRunner:

|

|

|

139

183

|

# Collect results across repeats

|

|

140

184

|

engine_times = {name: {"build": [], "query": []} for name in engines}

|

|

141

185

|

|

|

186

|

+

# Progress bar for engines x repeats

|

|

187

|

+

total_iterations = len(engines) * self.config.repeats

|

|

188

|

+

engine_bar = tqdm(

|

|

189

|

+

total=total_iterations,

|

|

190

|

+

desc=" Testing engines",

|

|

191

|

+

unit="run",

|

|

192

|

+

position=1,

|

|

193

|

+

leave=False,

|

|

194

|

+

)

|

|

195

|

+

|

|

142

196

|

for repeat in range(self.config.repeats):

|

|

143

197

|

gc.disable()

|

|

144

198

|

|

|

145

199

|

# Benchmark each engine

|

|

146

200

|

for name, engine in engines.items():

|

|

201

|

+

engine_bar.set_description(

|

|

202

|

+

f" {name} (repeat {repeat + 1}/{self.config.repeats})"

|

|

203

|

+

)

|

|

204

|

+

|

|

147

205

|

try:

|

|

148

206

|

build_time, query_time = self.benchmark_engine_once(

|

|

149

207

|

engine, points, queries

|

|

150

208

|

)

|

|

151

|

-

except Exception:

|

|

209

|

+

except Exception as e: # noqa: BLE001

|

|

152

210

|

# Mark as failed for this repeat

|

|

211

|

+

print(

|

|

212

|

+

f" {name} (repeat {repeat + 1}/{self.config.repeats}) failed: {e}"

|

|

213

|

+

)

|

|

153

214

|

build_time, query_time = math.nan, math.nan

|

|

154

215

|

|

|

155

216

|

engine_times[name]["build"].append(build_time)

|

|

156

217

|

engine_times[name]["query"].append(query_time)

|

|

157

218

|

|

|

219

|

+

engine_bar.update(1)

|

|

220

|

+

|

|

158

221

|

gc.enable()

|

|

159

222

|

|

|

223

|

+

engine_bar.close()

|

|

224

|

+

|

|

160

225

|

# Calculate medians and derived metrics

|

|

161

226

|

for name in engines:

|

|

162

227

|

build_median = self.median_or_nan(engine_times[name]["build"])

|

|
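The loop in this hunk records `math.nan` for any repeat that raises, and now prints the error, so one failing engine does not abort the whole benchmark. The medians are later computed over the surviving values. Here is a rough, self-contained sketch of that failure-tolerant pattern. `median_or_nan` mirrors the context lines shown earlier in the diff; `timed_repeats` and `flaky` are hypothetical names introduced for illustration.

```python
import math
import statistics


def median_or_nan(vals):
    """Median of the usable numeric entries, or NaN if none are usable."""
    cleaned = [x for x in vals if isinstance(x, (int, float)) and not math.isnan(x)]
    return statistics.median(cleaned) if cleaned else math.nan


def timed_repeats(run_once, repeats):
    """Call run_once(repeat) -> (build, query); record NaN for any repeat that raises."""
    builds, queries = [], []
    for repeat in range(repeats):
        try:
            build_time, query_time = run_once(repeat)
        except Exception as exc:  # broad on purpose: a failing engine should not stop the run
            print(f"repeat {repeat + 1}/{repeats} failed: {exc}")
            build_time, query_time = math.nan, math.nan
        builds.append(build_time)
        queries.append(query_time)
    return median_or_nan(builds), median_or_nan(queries)


def flaky(repeat):
    # Hypothetical engine that fails on its second repeat.
    if repeat == 1:
        raise RuntimeError("simulated failure")
    return float(repeat + 1), 0.5


medians = timed_repeats(flaky, 3)
```

Here the build times are `[1.0, nan, 3.0]`, so the median over the two surviving values is 2.0.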

@@ -184,6 +249,11 @@ class BenchmarkRunner:

|

|

|

184

249

|

results["insert_rate"][name].append(insert_rate)

|

|

185

250

|

results["query_rate"][name].append(query_rate)

|

|

186

251

|

|

|

252

|

+

# Print intermediate results for this experiment

|

|

253

|

+

self._print_experiment_summary(n, results, exp_idx)

|

|

254

|

+

|

|

255

|

+

experiment_bar.close()

|

|

256

|

+

|

|

187

257

|

# Add metadata to results

|

|

188

258

|

results["engines"] = engines

|

|

189

259

|

results["config"] = self.config

|

|

@@ -227,7 +297,7 @@ class BenchmarkRunner:

|

|

|

227

297

|

t = total[name][i]

|

|

228

298

|

if math.isnan(pyqt_total) or math.isnan(t) or t <= 0:

|

|

229

299

|

return "n/a"

|

|

230

|

-

return f"{(pyqt_total / t):.2f}×"

|

|

300

|

+

return f"{(pyqt_total / t):.2f}×" # noqa: RUF001

|

|

231

301

|

|

|

232

302

|

for name in ranked:

|

|

233

303

|

b = build.get(name, [math.nan])[i] if name in build else math.nan

|

|
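The helper touched by this hunk formats a speedup as the baseline total divided by an engine's total, and returns "n/a" when either value is NaN or the divisor is not positive. A standalone sketch of the same guard follows, with illustrative names (`format_speedup`, `baseline_total`) in place of the closure variables in the diff:

```python
import math


def format_speedup(baseline_total, engine_total):
    """Format baseline/engine as a ratio string, or 'n/a' when it is undefined."""
    if math.isnan(baseline_total) or math.isnan(engine_total) or engine_total <= 0:
        return "n/a"
    return f"{baseline_total / engine_total:.2f}×"
```

The `# noqa: RUF001` added in the diff is there because Ruff flags the multiplication sign `×` as an ambiguous character that is easily confused with the letter `x`.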

@@ -6,14 +6,7 @@ This script can be run directly or imported as a module.
 """
 
 import sys
-from pathlib import Path
 
-# Add the benchmarks directory to Python path for imports
-benchmark_dir = Path(__file__).parent
-if str(benchmark_dir) not in sys.path:
-    sys.path.insert(0, str(benchmark_dir))
-
-# Now we can import the package
 from quadtree_bench.main import main, run_quick_benchmark
 
 if __name__ == "__main__":

@@ -1,6 +1,9 @@
-from fastquadtree import QuadTree
-import pygame
 import random
+import sys
+
+import pygame
+
+from fastquadtree import QuadTree
 
 screen = pygame.display.set_mode((1000, 1000))
 

@@ -81,7 +84,7 @@ def interactive_test():

|

|

|

81

84

|

event.type == pygame.KEYDOWN and event.key == pygame.K_ESCAPE

|

|

82

85

|

):

|

|

83

86

|

pygame.quit()

|

|

84

|

-

|

|

87

|

+

sys.exit()

|

|

85

88

|

|

|

86

89

|

if event.type == pygame.MOUSEBUTTONDOWN:

|

|

87

90

|

x, y = pygame.mouse.get_pos()

|

|

@@ -90,9 +93,12 @@ def interactive_test():

|

|

|

90

93

|

qtree.insert((x, y), obj=Ball(x, y))

|

|

91

94

|

# print("New total number of nodes: ", len(qtree.get_all_bbox()))

|

|

92

95

|

|

|

93

|

-

if

|

|

94

|

-

|

|

95

|

-

|

|

96

|

+

if (

|

|

97

|

+

event.type == pygame.KEYDOWN

|

|

98

|

+

and event.key == pygame.K_SPACE

|

|

99

|

+

and closest_to_mouse

|

|

100

|

+

):

|

|

101

|

+

qtree.delete_by_object(closest_to_mouse.obj)

|

|

96

102

|

|

|

97

103

|

# If right arrow down, move the query area to the right

|

|

98

104

|

if pygame.key.get_pressed()[pygame.K_RIGHT]:

|