dpdispatcher 0.6.4__tar.gz → 0.6.5__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.


- dpdispatcher-0.6.5/.git_archival.txt +4 -0

- dpdispatcher-0.6.5/.gitattributes +1 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/ci-docker.yml +1 -1

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/machines.yml +2 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/pyright.yml +3 -2

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/test-bohrium.yml +5 -2

- dpdispatcher-0.6.5/.github/workflows/test.yml +56 -0

- dpdispatcher-0.6.5/.pre-commit-config.yaml +44 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.readthedocs.yaml +6 -7

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/PKG-INFO +4 -3

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/README.md +1 -1

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/pbs/docker-compose.yml +0 -1

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/pbs.sh +1 -1

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/slurm/docker-compose.yml +1 -1

- dpdispatcher-0.6.5/ci/slurm.sh +10 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/ssh/docker-compose.yml +1 -1

- dpdispatcher-0.6.5/ci/ssh.sh +10 -0

- dpdispatcher-0.6.5/ci/ssh_rsync.sh +14 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/batch.md +91 -85

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/context.md +54 -51

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/dpdispatcher_on_yarn.md +7 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/getting-started.md +31 -31

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/index.rst +2 -1

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/install.md +1 -2

- dpdispatcher-0.6.5/doc/pep723.rst +3 -0

- dpdispatcher-0.6.5/doc/run.md +21 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/_version.py +2 -2

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/__init__.py +1 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/hdfs_context.py +3 -5

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/local_context.py +6 -6

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/ssh_context.py +9 -10

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/dlog.py +9 -5

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/dpdisp.py +15 -0

- dpdispatcher-0.6.5/dpdispatcher/entrypoints/run.py +9 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machine.py +2 -2

- dpdispatcher-0.6.5/dpdispatcher/machines/JH_UniScheduler.py +175 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/__init__.py +1 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/distributed_shell.py +4 -6

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/fugaku.py +9 -9

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/lsf.py +2 -4

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/pbs.py +14 -14

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/shell.py +1 -6

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/slurm.py +12 -12

- dpdispatcher-0.6.5/dpdispatcher/run.py +172 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/submission.py +1 -3

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/hdfs_cli.py +4 -8

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/PKG-INFO +4 -3

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/SOURCES.txt +23 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/requires.txt +3 -0

- dpdispatcher-0.6.5/examples/dpdisp_run.py +28 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/pyproject.toml +3 -2

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/context.py +2 -0

- dpdispatcher-0.6.5/tests/devel_test_JH_UniScheduler.py +57 -0

- dpdispatcher-0.6.5/tests/hello_world.py +28 -0

- dpdispatcher-0.6.5/tests/jsons/machine_JH_UniScheduler.json +16 -0

- dpdispatcher-0.6.5/tests/jsons/machine_lazy_local_jh_unischeduler.json +18 -0

- dpdispatcher-0.6.5/tests/test_JH_UniScheduler_script_generation.py +157 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_machine_dispatch.py +12 -2

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_cli.py +1 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_examples.py +1 -0

- dpdispatcher-0.6.5/tests/test_jh_unischeduler/0_md/graph.pb +1 -0

- dpdispatcher-0.6.5/tests/test_run.py +25 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-1/conf.lmp +12 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-1/input.lammps +31 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-2/conf.lmp +12 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-2/input.lammps +31 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-3/conf.lmp +12 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-3/input.lammps +31 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-4/conf.lmp +12 -0

- dpdispatcher-0.6.5/tests/test_slurm_dir/0_md/bct-4/input.lammps +31 -0

- dpdispatcher-0.6.4/.github/workflows/test.yml +0 -44

- dpdispatcher-0.6.4/.pre-commit-config.yaml +0 -36

- dpdispatcher-0.6.4/ci/slurm.sh +0 -10

- dpdispatcher-0.6.4/ci/ssh.sh +0 -10

- dpdispatcher-0.6.4/ci/ssh_rsync.sh +0 -14

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/dependabot.yml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/mirror_gitee.yml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/publish_conda.yml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.github/workflows/release.yml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/.gitignore +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/CONTRIBUTING.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/Dockerfile +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/LICENSE +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/LICENSE +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/README.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/pbs/start-pbs.sh +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/slurm/register_cluster.sh +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/slurm/start-slurm.sh +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/ci/ssh/start-ssh.sh +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/codecov.yml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/conda/conda_build_config.yaml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/conda/meta.yaml +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/.gitignore +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/Makefile +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/cli.rst +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/conf.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/credits.rst +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/examples/expanse.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/examples/g16.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/examples/shell.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/examples/template.md +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/machine.rst +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/make.bat +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/requirements.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/resources.rst +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/doc/task.rst +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/__main__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/arginfo.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/base_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/dp_cloud_server_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/lazy_local_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/contexts/openapi_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/dpcloudserver/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/dpcloudserver/client.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/entrypoints/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/entrypoints/gui.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/entrypoints/submission.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/dp_cloud_server.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/machines/openapi.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/dpcloudserver/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/dpcloudserver/client.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/dpcloudserver/config.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/dpcloudserver/retcode.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/dpcloudserver/zip_file.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/job_status.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/record.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher/utils/utils.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/dependency_links.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/entry_points.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/dpdispatcher.egg-info/top_level.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/machine/expanse.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/machine/lazy_local.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/machine/mandu.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/resources/expanse_cpu.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/resources/mandu.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/resources/template.slurm +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/resources/tiger.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/task/deepmd-kit.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/examples/task/g16.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/scripts/script_gen_dargs_docs.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/scripts/script_gen_dargs_json.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/setup.cfg +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/.gitignore +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/__init__.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/batch.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/debug_test_class_submission_init.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_ali_ehpc.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_dp_cloud_server.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_lazy_ali_ehpc.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_lsf.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_shell.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_slurm.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/devel_test_ssh_ali_ehpc.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/job.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_ali_ehpc.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_center.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_diffenert.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_dp_cloud_server.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_fugaku.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_if_cuda_multi_devices.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_lazy_local_lsf.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_lazy_local_slurm.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_lazylocal_shell.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_local_fugaku.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_local_shell.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_lsf.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_openapi.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_slurm.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/machine_yarn.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/resources.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/submission.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/jsons/task.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/sample_class.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/script_gen_json.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/slurm_test.env +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_argcheck.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_job.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_machine.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_resources.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_submission.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_submission_init.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_class_task.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-1/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-1/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-1/some_dir/some_file +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-2/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-2/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-3/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-3/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-4/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/bct-4/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/dir with space/file with space +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_context_dir/0_md/some_dir/some_file +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_group_size.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_gui.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-1/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-1/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-2/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-2/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-3/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-3/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-4/conf.lmp +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/bct-4/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_hdfs_dir/0_md/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_if_cuda_multi_devices/test_dir/test.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_import_classes.py +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-1/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-1/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-2/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-2/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-3/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-3/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-4/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_lsf_dir → dpdispatcher-0.6.5/tests/test_jh_unischeduler}/0_md/bct-4/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_lazy_local_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_local_context.py +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-1/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-1/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-2/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-2/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-3/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-3/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-4/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_pbs_dir → dpdispatcher-0.6.5/tests/test_lsf_dir}/0_md/bct-4/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_lsf_dir/0_md/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_lsf_dir/0_md/submission.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_lsf_script_generation.py +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-1/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-1/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-2/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-2/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-3/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-3/input.lammps +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-4/conf.lmp +0 -0

- {dpdispatcher-0.6.4/tests/test_slurm_dir → dpdispatcher-0.6.5/tests/test_pbs_dir}/0_md/bct-4/input.lammps +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_pbs_dir/0_md/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_retry.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_run_submission.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_run_submission_bohrium.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_run_submission_ratio_unfinished.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_cuda_multi_devices.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/fail_dir/mock_fail_task.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/dir with space/example.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/dir1/example.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/dir2/example.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/dir3/example.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/dir4/example.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/parent_dir/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_shell_trival_dir/recover_dir/mock_recover_task.txt +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_slurm_dir/0_md/d3c842c5b9476e48f7145b370cd330372b9293e1.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_slurm_dir/0_md/graph.pb +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_slurm_dir/0_md/submission.json +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_slurm_script_generation.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_ssh_context.py +0 -0

- {dpdispatcher-0.6.4 → dpdispatcher-0.6.5}/tests/test_work_path/.gitkeep +0 -0

--- /dev/null
+++ dpdispatcher-0.6.5/.gitattributes
@@ -0,0 +1 @@
+.git_archival.txt export-subst

--- dpdispatcher-0.6.4/.github/workflows/ci-docker.yml
+++ dpdispatcher-0.6.5/.github/workflows/ci-docker.yml
@@ -15,7 +15,7 @@ jobs:
         uses: actions/checkout@v4
 
       - name: Log in to Docker Hub
-        uses: docker/login-action@
+        uses: docker/login-action@v3
         with:
           username: ${{ secrets.DOCKER_USERNAME }}
           password: ${{ secrets.DOCKER_PASSWORD }}

--- dpdispatcher-0.6.4/.github/workflows/pyright.yml
+++ dpdispatcher-0.6.5/.github/workflows/pyright.yml
@@ -11,7 +11,8 @@ jobs:
     - uses: actions/setup-python@v5
       with:
         python-version: '3.11'
-    - run: pip install
-    -
+    - run: pip install uv
+    - run: uv pip install --system -e .[cloudserver,gui]
+    - uses: jakebailey/pyright-action@v2
       with:
         version: 1.1.308

--- dpdispatcher-0.6.4/.github/workflows/test-bohrium.yml
+++ dpdispatcher-0.6.5/.github/workflows/test-bohrium.yml
@@ -20,7 +20,8 @@ jobs:
         with:
           python-version: '3.12'
           cache: 'pip'
-      - run: pip install
+      - run: pip install uv
+      - run: uv pip install --system .[bohrium] coverage
       - name: Test
         run: coverage run --source=./dpdispatcher -m unittest -v tests/test_run_submission_bohrium.py && coverage report
         env:
@@ -29,7 +30,9 @@ jobs:
           BOHRIUM_PASSWORD: ${{ secrets.BOHRIUM_PASSWORD }}
           BOHRIUM_PROJECT_ID: ${{ secrets.BOHRIUM_PROJECT_ID }}
           BOHRIUM_ACCESS_KEY: ${{ secrets.BOHRIUM_ACCESS_KEY }}
-      - uses: codecov/codecov-action@
+      - uses: codecov/codecov-action@v4
+        env:
+          CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}
   remove_label:
     permissions:
       contents: read

--- /dev/null
+++ dpdispatcher-0.6.5/.github/workflows/test.yml
@@ -0,0 +1,56 @@
+name: Python package
+
+on:
+  - push
+  - pull_request
+
+jobs:
+  test:
+    runs-on: ${{ matrix.platform }}
+    strategy:
+      matrix:
+        python-version:
+          - 3.7
+          - 3.9
+          - '3.10'
+          - '3.11'
+          - '3.12'
+        platform:
+          - ubuntu-latest
+          - macos-latest
+          - windows-latest
+        exclude: # Apple Silicon ARM64 does not support Python < v3.8
+          - python-version: "3.7"
+            platform: macos-latest
+        include: # So run those legacy versions on Intel CPUs
+          - python-version: "3.7"
+            platform: macos-13
+    steps:
+      - uses: actions/checkout@v4
+      - name: Set up Python ${{ matrix.python-version }}
+        uses: actions/setup-python@v5
+        with:
+          python-version: ${{ matrix.python-version }}
+      - run: curl -LsSf https://astral.sh/uv/install.sh | sh
+        if: matrix.platform != 'windows-latest'
+      - run: powershell -c "irm https://astral.sh/uv/install.ps1 | iex"
+        if: matrix.platform == 'windows-latest'
+      - run: uv pip install --system .[test] coverage
+      - name: Test
+        run: |
+          python -m coverage run -p --source=./dpdispatcher -m unittest -v
+          python -m coverage run -p --source=./dpdispatcher -m dpdispatcher -h
+          python -m coverage combine
+          python -m coverage report
+      - uses: codecov/codecov-action@v4
+        env:
+          CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}
+  pass:
+    needs: [test]
+    runs-on: ubuntu-latest
+    if: always()
+    steps:
+      - name: Decide whether the needed jobs succeeded or failed
+        uses: re-actors/alls-green@release/v1
+        with:
+          jobs: ${{ toJSON(needs) }}
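The `exclude`/`include` pair in the test workflow's matrix swaps one job for another: Python 3.7 is dropped on `macos-latest` and re-added on `macos-13`. The sketch below is a simplified model of that expansion, not GitHub's actual implementation. It assumes every version is compared as a string and that each `include` entry simply becomes a new combination (the real `include` rules can also merge keys into existing combinations). The function name `expand_matrix` is hypothetical.

```python
from itertools import product


def expand_matrix(axes, exclude=(), include=()):
    """Simplified job-matrix model: cartesian product, minus excludes, plus includes."""
    keys = list(axes)
    combos = [dict(zip(keys, values)) for values in product(*axes.values())]
    # Drop every combination that matches all key/value pairs of an exclude entry.
    combos = [
        combo
        for combo in combos
        if not any(all(combo.get(k) == v for k, v in entry.items()) for entry in exclude)
    ]
    # Simplifying assumption: each include entry is appended as a new combination.
    combos.extend(dict(entry) for entry in include)
    return combos


jobs = expand_matrix(
    {
        "python-version": ["3.7", "3.9", "3.10", "3.11", "3.12"],
        "platform": ["ubuntu-latest", "macos-latest", "windows-latest"],
    },
    exclude=[{"python-version": "3.7", "platform": "macos-latest"}],
    include=[{"python-version": "3.7", "platform": "macos-13"}],
)
print(len(jobs))  # 5 * 3 combinations, minus one excluded, plus one included
```

Under these assumptions the job count stays at 15; only the runner for the Python 3.7 macOS job changes.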

--- /dev/null
+++ dpdispatcher-0.6.5/.pre-commit-config.yaml
@@ -0,0 +1,44 @@
+# See https://pre-commit.com for more information
+# See https://pre-commit.com/hooks.html for more hooks
+repos:
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v4.6.0
+    hooks:
+      - id: trailing-whitespace
+        exclude: "^tests/"
+      - id: end-of-file-fixer
+        exclude: "^tests/"
+      - id: check-yaml
+        exclude: "^conda/"
+      - id: check-json
+      - id: check-added-large-files
+      - id: check-merge-conflict
+      - id: check-symlinks
+      - id: check-toml
+  # Python
+  - repo: https://github.com/astral-sh/ruff-pre-commit
+    # Ruff version.
+    rev: v0.4.4
+    hooks:
+      - id: ruff
+        args: ["--fix"]
+      - id: ruff-format
+  # numpydoc
+  - repo: https://github.com/Carreau/velin
+    rev: 0.0.12
+    hooks:
+      - id: velin
+        args: ["--write"]
+  # Python inside docs
+  - repo: https://github.com/asottile/blacken-docs
+    rev: 1.16.0
+    hooks:
+      - id: blacken-docs
+  # markdown, yaml
+  - repo: https://github.com/pre-commit/mirrors-prettier
+    rev: v4.0.0-alpha.8
+    hooks:
+      - id: prettier
+        types_or: [markdown, yaml]
+        # workflow files cannot be modified by pre-commit.ci
+        exclude: ^(\.github/workflows|conda)
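The prettier hook's `exclude` value is a regular expression over repository-relative paths. The sketch below checks a few paths against the same pattern, assuming the pattern is applied with Python's `re.search`. The helper name `is_excluded` is hypothetical, and the paths are taken from the file list above.

```python
import re

# Pattern copied from the prettier hook's `exclude` key.
EXCLUDE = re.compile(r"^(\.github/workflows|conda)")


def is_excluded(path):
    """Return True when the path would be skipped, assuming re.search semantics."""
    return EXCLUDE.search(path) is not None


for path in [
    ".github/workflows/test.yml",
    "conda/meta.yaml",
    ".github/dependabot.yml",
    "doc/batch.md",
]:
    print(path, is_excluded(path))
```

Because the `conda` alternative has no trailing slash, any path that merely starts with `conda` would also match under this assumption.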

--- dpdispatcher-0.6.4/.readthedocs.yaml
+++ dpdispatcher-0.6.5/.readthedocs.yaml
@@ -10,15 +10,14 @@ build:
   os: ubuntu-22.04
   tools:
     python: "3.10"
-
+  jobs:
+    post_create_environment:
+      - pip install uv
+    post_install:
+      - VIRTUAL_ENV=$READTHEDOCS_VIRTUALENV_PATH uv pip install .[docs]
 # Build documentation in the docs/ directory with Sphinx
 sphinx:
-
+  configuration: doc/conf.py
 
 # If using Sphinx, optionally build your docs in additional formats such as PDF
 formats: all
-
-# Optionally declare the Python requirements required to build your docs
-python:
-  install:
-    - requirements: doc/requirements.txt

--- dpdispatcher-0.6.4/PKG-INFO
+++ dpdispatcher-0.6.5/PKG-INFO
@@ -1,6 +1,6 @@
 Metadata-Version: 2.1
 Name: dpdispatcher
-Version: 0.6.
+Version: 0.6.5
 Summary: Generate HPC scheduler systems jobs input scripts, submit these scripts to HPC systems, and poke until they finish
 Author: DeepModeling
 License: GNU LESSER GENERAL PUBLIC LICENSE
@@ -172,7 +172,7 @@ License: GNU LESSER GENERAL PUBLIC LICENSE
 Project-URL: Homepage, https://github.com/deepmodeling/dpdispatcher
 Project-URL: documentation, https://docs.deepmodeling.com/projects/dpdispatcher
 Project-URL: repository, https://github.com/deepmodeling/dpdispatcher
-Keywords: dispatcher,hpc,slurm,lsf,pbs,ssh
+Keywords: dispatcher,hpc,slurm,lsf,pbs,ssh,jh_unischeduler
 Classifier: Programming Language :: Python :: 3.7
 Classifier: Programming Language :: Python :: 3.8
 Classifier: Programming Language :: Python :: 3.9
@@ -191,6 +191,7 @@ Requires-Dist: requests
 Requires-Dist: tqdm>=4.9.0
 Requires-Dist: typing_extensions; python_version < "3.7"
 Requires-Dist: pyyaml
+Requires-Dist: tomli>=1.1.0; python_version < "3.11"
 Provides-Extra: docs
 Requires-Dist: sphinx; extra == "docs"
 Requires-Dist: myst-parser; extra == "docs"
@@ -250,4 +251,4 @@ See [Contributing Guide](CONTRIBUTING.md) to become a contributor! 🤓
 
 ## References
 
-DPDispatcher is
+DPDispatcher is derived from the [DP-GEN](https://github.com/deepmodeling/dpgen) package. To mention DPDispatcher in a scholarly publication, please read Section 3.3 in the [DP-GEN paper](https://doi.org/10.1016/j.cpc.2020.107206).
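The new `Requires-Dist: tomli>=1.1.0; python_version < "3.11"` entry installs the `tomli` backport only on interpreters older than 3.11, where the standard-library `tomllib` module does not exist. The sketch below shows the import fallback that such a marker usually pairs with. How dpdispatcher itself imports the parser is not visible in this diff, so treat this as an assumption; `parse_toml` is a hypothetical helper.

```python
try:
    import tomllib  # standard library on Python 3.11 and later
except ModuleNotFoundError:
    import tomli as tomllib  # backport exposing the same loads()/load() functions


def parse_toml(text):
    """Parse a TOML document given as a string into a plain dict."""
    return tomllib.loads(text)


meta = parse_toml('requires-python = ">=3.7"\ndependencies = ["dpdispatcher"]')
print(meta["dependencies"])
```

On Python 3.11 or newer the `except` branch never runs, which is why the dependency carries an environment marker and is not required unconditionally.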

--- dpdispatcher-0.6.4/README.md
+++ dpdispatcher-0.6.5/README.md
@@ -36,4 +36,4 @@ See [Contributing Guide](CONTRIBUTING.md) to become a contributor! 🤓
 
 ## References
 
-DPDispatcher is
+DPDispatcher is derived from the [DP-GEN](https://github.com/deepmodeling/dpgen) package. To mention DPDispatcher in a scholarly publication, please read Section 3.3 in the [DP-GEN paper](https://doi.org/10.1016/j.cpc.2020.107206).

--- dpdispatcher-0.6.4/ci/pbs.sh
+++ dpdispatcher-0.6.5/ci/pbs.sh
@@ -9,6 +9,6 @@ cd -
 docker exec pbs_master /bin/bash -c "chmod -R 777 /shared_space"
 docker exec pbs_master /bin/bash -c "chown -R pbsuser:pbsuser /home/pbsuser"
 
-docker exec pbs_master /bin/bash -c "cd /dpdispatcher && pip install .[test] coverage && chown -R pbsuser ."
+docker exec pbs_master /bin/bash -c "cd /dpdispatcher && pip install uv && uv pip install --system .[test] coverage && chown -R pbsuser ."
 docker exec -u pbsuser pbs_master /bin/bash -c "cd /dpdispatcher && coverage run --source=./dpdispatcher -m unittest -v && coverage report"
 docker exec -u pbsuser --env-file <(env | grep GITHUB) pbs_master /bin/bash -c "cd /dpdispatcher && curl -Os https://uploader.codecov.io/latest/linux/codecov && chmod +x codecov && ./codecov"

@@ -0,0 +1,10 @@

|

|

|

1

|

+

#!/usr/bin/env bash

|

|

2

|

+

set -e

|

|

3

|

+

|

|

4

|

+

cd ./ci/slurm

|

|

5

|

+

docker-compose pull

|

|

6

|

+

./start-slurm.sh

|

|

7

|

+

cd -

|

|

8

|

+

|

|

9

|

+

docker exec slurmctld /bin/bash -c "cd dpdispatcher && pip install uv && uv pip install --system .[test] coverage && coverage run --source=./dpdispatcher -m unittest -v && coverage report"

|

|

10

|

+

docker exec --env-file <(env | grep -e GITHUB -e CODECOV) slurmctld /bin/bash -c "cd dpdispatcher && curl -Os https://uploader.codecov.io/latest/linux/codecov && chmod +x codecov && ./codecov"

|

|

@@ -0,0 +1,10 @@

|

|

|

1

|

+

#!/usr/bin/env bash

|

|

2

|

+

set -e

|

|

3

|

+

|

|

4

|

+

cd ./ci/ssh

|

|

5

|

+

docker-compose pull

|

|

6

|

+

./start-ssh.sh

|

|

7

|

+

cd -

|

|

8

|

+

|

|

9

|

+

docker exec test /bin/bash -c "cd /dpdispatcher && pip install uv && uv pip install --system .[test] coverage && coverage run --source=./dpdispatcher -m unittest -v && coverage report"

|

|

10

|

+

docker exec --env-file <(env | grep -e GITHUB -e CODECOV) test /bin/bash -c "cd /dpdispatcher && curl -Os https://uploader.codecov.io/latest/linux/codecov && chmod +x codecov && ./codecov"

|

|

@@ -0,0 +1,14 @@

|

|

|

1

|

+

#!/usr/bin/env bash

|

|

2

|

+

set -e

|

|

3

|

+

|

|

4

|

+

cd ./ci/ssh

|

|

5

|

+

docker-compose pull

|

|

6

|

+

./start-ssh.sh

|

|

7

|

+

cd -

|

|

8

|

+

|

|

9

|

+

# install rsync

|

|

10

|

+

docker exec server /bin/bash -c "apt-get -y update && apt-get -y install rsync"

|

|

11

|

+

docker exec test /bin/bash -c "apt-get -y update && apt-get -y install rsync"

|

|

12

|

+

|

|

13

|

+

docker exec test /bin/bash -c "cd /dpdispatcher && pip install uv && uv pip install --system .[test] coverage && coverage run --source=./dpdispatcher -m unittest -v && coverage report"

|

|

14

|

+

docker exec --env-file <(env | grep -e GITHUB -e CODECOV) test /bin/bash -c "cd /dpdispatcher && curl -Os https://uploader.codecov.io/latest/linux/codecov && chmod +x codecov && ./codecov"

|

|

@@ -1,85 +1,91 @@

|

|

|

1

|

-

# Supported batch job systems

|

|

2

|

-

|

|

3

|

-

Batch job system is a system to process batch jobs.

|

|

4

|

-

One needs to set {dargs:argument}`batch_type <machine/batch_type>` to one of the following values:

|

|

5

|

-

|

|

6

|

-

## Bash

|

|

7

|

-

|

|

8

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `Shell`

|

|

9

|

-

|

|

10

|

-

When {dargs:argument}`batch_type <resources/batch_type>` is set to `Shell`, dpdispatcher will generate a bash script to process jobs.

|

|

11

|

-

No extra packages are required for `Shell`.

|

|

12

|

-

|

|

13

|

-

Due to lack of scheduling system, `Shell` runs all jobs at the same time.

|

|

14

|

-

To avoid running multiple jobs at the same time, one could set {dargs:argument}`group_size <resources/group_size>` to `0` (means infinity) to generate only one job with multiple tasks.

|

|

15

|

-

|

|

16

|

-

## Slurm

|

|

17

|

-

|

|

18

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `Slurm`, `SlurmJobArray`

|

|

19

|

-

|

|

20

|

-

[Slurm](https://slurm.schedmd.com/) is a job scheduling system used by lots of HPCs.

|

|

21

|

-

One needs to make sure slurm has been

|

|

22

|

-

|

|

23

|

-

When `SlurmJobArray` is used, dpdispatcher submits Slurm jobs with [job arrays](https://slurm.schedmd.com/job_array.html).

|

|

24

|

-

In this way, several dpdispatcher {class}`task <dpdispatcher.submission.Task>`s map to a Slurm job and a dpdispatcher {class}`job <dpdispatcher.submission.Job>` maps to a Slurm job array.

|

|

25

|

-

Millions of Slurm jobs can be submitted quickly and Slurm can execute all Slurm jobs at the same time.

|

|

26

|

-

One can use {dargs:argument}`group_size <resources/group_size>` and {dargs:argument}`slurm_job_size <resources[SlurmJobArray]/kwargs/slurm_job_size>` to control how many Slurm jobs are contained in a Slurm job array.

|

|

27

|

-

|

|

28

|

-

## OpenPBS or PBSPro

|

|

29

|

-

|

|

30

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `PBS`

|

|

31

|

-

|

|

32

|

-

[OpenPBS](https://www.openpbs.org/) is an open-source job scheduling of the Linux Foundation and [PBS Profession](https://www.altair.com/pbs-professional/) is its commercial solution.

|

|

33

|

-

One needs to make sure OpenPBS has been

|

|

34

|

-

|

|

35

|

-

Note that do not use `PBS` for Torque.

|

|

36

|

-

|

|

37

|

-

## TORQUE

|

|

38

|

-

|

|

39

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `Torque`

|

|

40

|

-

|

|

41

|

-

The [Terascale Open-source Resource and QUEue Manager (TORQUE)](https://adaptivecomputing.com/cherry-services/torque-resource-manager/) is a distributed resource manager based on standard OpenPBS.

|

|

42

|

-

However, not all OpenPBS flags are still supported in TORQUE.

|

|

43

|

-

One needs to make sure TORQUE has been

|

|

44

|

-

|

|

45

|

-

## LSF

|

|

46

|

-

|

|

47

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `LSF`

|

|

48

|

-

|

|

49

|

-

[IBM Spectrum LSF Suites](https://www.ibm.com/products/hpc-workload-management) is a comprehensive workload management solution used by HPCs.

|

|

50

|

-

One needs to make sure LSF has been

|

|

51

|

-

|

|

52

|

-

##

|

|

53

|

-

|

|

54

|

-

{dargs:argument}`batch_type <resources/batch_type>`: `

|

|

55

|

-

|

|

56

|

-

|

|

57

|

-

|

|

58

|

-

|

|

59

|

-

|

|

60

|

-

|

|

61

|

-

|

|

62

|

-

|

|

63

|

-

|

|

64

|

-

|

|

65

|

-

|

|

66

|

-

|

|

67

|

-

|

|

68

|

-

|

|

69

|

-

|

|

70

|

-

|

|

71

|

-

|

|

72

|

-

Read

|

|

73

|

-

|

|

74

|

-

|

|

75

|

-

|

|

76

|

-

|

|

77

|

-

|

|

78

|

-

|

|

79

|

-

|

|

80

|

-

|

|

81

|

-

|

|

82

|

-

|

|

83

|

-

|

|

84

|

-

|

|

85

|

-

|

|

1

|

+

# Supported batch job systems

|

|

2

|

+

|

|

3

|

+

Batch job system is a system to process batch jobs.

|

|

4

|

+

One needs to set {dargs:argument}`batch_type <machine/batch_type>` to one of the following values:

|

|

5

|

+

|

|

6

|

+

## Bash

|

|

7

|

+

|

|

8

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `Shell`

|

|

9

|

+

|

|

10

|

+

When {dargs:argument}`batch_type <resources/batch_type>` is set to `Shell`, dpdispatcher will generate a bash script to process jobs.

|

|

11

|

+

No extra packages are required for `Shell`.

|

|

12

|

+

|

|

13

|

+

Due to lack of scheduling system, `Shell` runs all jobs at the same time.

|

|

14

|

+

To avoid running multiple jobs at the same time, one could set {dargs:argument}`group_size <resources/group_size>` to `0` (means infinity) to generate only one job with multiple tasks.

|

|

15

|

+

|

|

16

|

+

## Slurm

|

|

17

|

+

|

|

18

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `Slurm`, `SlurmJobArray`

|

|

19

|

+

|

|

20

|

+

[Slurm](https://slurm.schedmd.com/) is a job scheduling system used by lots of HPCs.

|

|

21

|

+

One needs to make sure slurm has been set up in the remote server and the related environment is activated.

|

|

22

|

+

|

|

23

|

+

When `SlurmJobArray` is used, dpdispatcher submits Slurm jobs with [job arrays](https://slurm.schedmd.com/job_array.html).

|

|

24

|

+

In this way, several dpdispatcher {class}`task <dpdispatcher.submission.Task>`s map to a Slurm job and a dpdispatcher {class}`job <dpdispatcher.submission.Job>` maps to a Slurm job array.

|

|

25

|

+

Millions of Slurm jobs can be submitted quickly and Slurm can execute all Slurm jobs at the same time.

|

|

26

|

+

One can use {dargs:argument}`group_size <resources/group_size>` and {dargs:argument}`slurm_job_size <resources[SlurmJobArray]/kwargs/slurm_job_size>` to control how many Slurm jobs are contained in a Slurm job array.

|

|

27

|

+

|

|

28

|

+

## OpenPBS or PBSPro

|

|

29

|

+

|

|

30

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `PBS`

|

|

31

|

+

|

|

32

|

+

[OpenPBS](https://www.openpbs.org/) is an open-source job scheduling of the Linux Foundation and [PBS Profession](https://www.altair.com/pbs-professional/) is its commercial solution.

|

|

33

|

+

One needs to make sure OpenPBS has been set up in the remote server and the related environment is activated.

|

|

34

|

+

|

|

35

|

+

Note that do not use `PBS` for Torque.

|

|

36

|

+

|

|

37

|

+

## TORQUE

|

|

38

|

+

|

|

39

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `Torque`

|

|

40

|

+

|

|

41

|

+

The [Terascale Open-source Resource and QUEue Manager (TORQUE)](https://adaptivecomputing.com/cherry-services/torque-resource-manager/) is a distributed resource manager based on standard OpenPBS.

|

|

42

|

+

However, not all OpenPBS flags are still supported in TORQUE.

|

|

43

|

+

One needs to make sure TORQUE has been set up in the remote server and the related environment is activated.

|

|

44

|

+

|

|

45

|

+

## LSF

|

|

46

|

+

|

|

47

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `LSF`

|

|

48

|

+

|

|

49

|

+

[IBM Spectrum LSF Suites](https://www.ibm.com/products/hpc-workload-management) is a comprehensive workload management solution used by HPCs.

|

|

50

|

+

One needs to make sure LSF has been set up in the remote server and the related environment is activated.

|

|

51

|

+

|

|

52

|

+

## JH UniScheduler

|

|

53

|

+

|

|

54

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `JH_UniScheduler`

|

|

55

|

+

|

|

56

|

+

[JH UniScheduler](http://www.jhinno.com/m/custom_case_05.html) was developed by JHINNO company and uses "jsub" to submit tasks.

|

|

57

|

+

Its overall architecture is similar to that of IBM's LSF. However, there are still some differences between them. One needs to

|

|

58

|

+

make sure JH UniScheduler has been set up in the remote server and the related environment is activated.

|

|

59

|

+

|

|

60

|

+

## Bohrium

|

|

61

|

+

|

|

62

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `Bohrium`

|

|

63

|

+

|

|

64

|

+

Bohrium is the cloud platform for scientific computing.

|

|

65

|

+

Read Bohrium documentation for details.

|

|

66

|

+

|

|

67

|

+

## DistributedShell

|

|

68

|

+

|

|

69

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `DistributedShell`

|

|

70

|

+

|

|

71

|

+

`DistributedShell` is used to submit yarn jobs.

|

|

72

|

+

Read [Support DPDispatcher on Yarn](dpdispatcher_on_yarn.md) for details.

|

|

73

|

+

|

|

74

|

+

## Fugaku

|

|

75

|

+

|

|

76

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `Fugaku`

|

|

77

|

+

|

|

78

|

+

[Fujitsu cloud service](https://doc.cloud.global.fujitsu.com/lib/common/jp/hpc-user-manual/) is a job scheduling system used by Fujitsu's HPCs such as Fugaku, ITO and K computer. It should be noted that although the same job scheduling system is used, there are some differences in the details, so the Fugaku class cannot be directly used for other HPCs.

|

|

79

|

+

|

|

80

|

+

Read Fujitsu cloud service documentation for details.

|

|

81

|

+

|

|

82

|

+

## OpenAPI

|

|

83

|

+

|

|

84

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `OpenAPI`

|

|

85

|

+

OpenAPI is a new way to submit jobs to Bohrium. It is using [AccessKey](https://bohrium.dp.tech/personal/setting) instead of username and password. Read Bohrium documentation for details.

|

|

86

|

+

|

|

87

|

+

## SGE

|

|

88

|

+

|

|

89

|

+

{dargs:argument}`batch_type <resources/batch_type>`: `SGE`

|

|

90

|

+

|

|

91

|

+

The [Sun Grid Engine (SGE) scheduler](https://gridscheduler.sourceforge.net) is a batch-queueing system for distributed resource management. The commands and flags of SGE share a lot of similarity with PBS except when checking job status. Use this argument if one is submitting jobs to an SGE-based batch system.

|

|
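The `group_size` behaviour described in the batch documentation above can be sketched in a few lines of Python. This is an illustration only, not dpdispatcher's own implementation: `group_tasks` is a hypothetical helper, and the key names in the two dictionaries (`local_root`, `number_node`, `cpu_per_node`, `gpu_per_node`, `queue_name`) and their values are assumptions that should be checked against the dpdispatcher argument reference. `batch_type` is placed under `machine` here, following the `machine/batch_type` reference in the text.

```python
import json


def group_tasks(tasks, group_size):
    """Split tasks into jobs holding at most group_size tasks each.

    Following the documentation text, group_size == 0 is treated as
    "infinity": all tasks end up in one job.
    """
    if group_size < 0:
        raise ValueError("group_size must be non-negative")
    if group_size == 0:
        return [list(tasks)] if tasks else []
    return [list(tasks[i:i + group_size]) for i in range(0, len(tasks), group_size)]


# Illustrative configuration; the queue name and paths are placeholders.
machine = {
    "batch_type": "Slurm",
    "context_type": "LazyLocal",
    "local_root": "./",
}
resources = {
    "number_node": 1,
    "cpu_per_node": 4,
    "gpu_per_node": 0,
    "queue_name": "debug",
    "group_size": 0,  # 0 means "infinity": one job containing every task
}

tasks = ["task_%d" % i for i in range(5)]
jobs = group_tasks(tasks, resources["group_size"])
print(json.dumps(machine, indent=2))
print(len(jobs))
```

With `group_size` set to `2` instead, the same five tasks would be split into three jobs, which a scheduler-less `Shell` batch type would then run at the same time.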

@@ -1,52 +1,55 @@

|

|

|

1

|

-

# Supported contexts

|

|

2

|

-

|

|

3

|

-

Context is the way to connect to the remote server.

|

|

4

|

-

One needs to set {dargs:argument}`context_type <machine/context_type>` to one of the following values:

|

|

5

|

-

|

|

6

|

-

## LazyLocal

|

|

7

|

-

|

|

8

|

-

{dargs:argument}`context_type <machine/context_type>`: `LazyLocal`

|

|

9

|

-

|

|

10

|

-

`LazyLocal` directly runs jobs in the local server and local directory.

|

|

11

|

-

|

|

12

|

-

|

|

13

|

-

|

|

14

|

-

|

|

15

|

-

|

|

16

|

-

`Local`

|

|

17

|

-

|

|

18

|

-

|

|

19

|

-

|

|

20

|

-

|

|

21

|

-

{dargs:argument}`

|

|

22

|

-

|

|

23

|

-

|

|

24

|

-

|

|

25

|

-

|

|

26

|

-

|

|

27

|

-

|

|

28

|

-

|

|

29

|

-

|

|

30

|

-

|

|

31

|

-

|

|

32

|

-

|

|

33

|

-

|

|

34

|

-

|

|

35

|

-

Bohrium

|

|

36

|

-

|

|

37

|

-

|

|

38

|

-

|

|

39

|

-

|

|

40

|

-

|

|

41

|

-

{dargs:argument}`

|

|

42

|

-

|

|

43

|

-

|

|

44

|

-

|

|

45

|

-

|

|

46

|

-

|

|

47

|

-

|

|

48

|

-

|

|

49

|

-

|

|

50

|

-

|

|

51

|

-

|

|

1

|

+

# Supported contexts

|

|

2

|

+

|

|

3

|

+

Context is the way to connect to the remote server.

|

|

4

|

+

One needs to set {dargs:argument}`context_type <machine/context_type>` to one of the following values:

|

|

5

|

+

|

|

6

|

+

## LazyLocal

|

|

7

|

+

|

|

8

|

+

{dargs:argument}`context_type <machine/context_type>`: `LazyLocal`

|

|

9

|

+

|

|

10

|

+

`LazyLocal` directly runs jobs in the local server and local directory.

|

|

11

|

+

|

|

12

|

+

Since [`bash -l`](https://www.gnu.org/software/bash/manual/bash.html#Invoking-Bash) is used in the shebang line of the submission scripts, the [login shell startup files](https://www.gnu.org/software/bash/manual/bash.html#Invoking-Bash) will be executed, potentially overriding the current environmental variables. Therefore, it's advisable to explicitly set the environmental variables using {dargs:argument}`envs <resources/envs>` or {dargs:argument}`source_list <resources/source_list>`.

|

|

13

|

+

|

|

14

|

+

## Local

|

|

15

|

+

|

|

16

|

+

{dargs:argument}`context_type <machine/context_type>`: `Local`

|

|

17

|

+

|

|

18

|

+

`Local` runs jobs in the local server, but in a different directory.

|

|

19

|

+

Files will be copied to the remote directory before jobs start and copied back after jobs finish.

|

|

20

|

+

|

|

21

|

+

Since [`bash -l`](https://www.gnu.org/software/bash/manual/bash.html#Invoking-Bash) is used in the shebang line of the submission scripts, the [login shell startup files](https://www.gnu.org/software/bash/manual/bash.html#Invoking-Bash) will be executed, potentially overriding the current environmental variables. Therefore, it's advisable to explicitly set the environmental variables using {dargs:argument}`envs <resources/envs>` or {dargs:argument}`source_list <resources/source_list>`.

|

|

22

|

+

|

|

23

|

+

## SSH

|

|

24

|

+

|

|

25

|

+

{dargs:argument}`context_type <machine/context_type>`: `SSH`

|

|

26

|

+

|

|

27

|

+

`SSH` runs jobs in a remote server.

|

|

28

|

+

Files will be copied to the remote directory via SSH channels before jobs start and copied back after jobs finish.

|

|

29

|

+

To use SSH, one needs to provide necessary parameters in {dargs:argument}`remote_profile <machine[SSHContext]/remote_profile>`, such as {dargs:argument}`username <machine[SSHContext]/remote_profile/username>` and {dargs:argument}`hostname <machine[SSHContext]/remote_profile/hostname>`.

|

|

30

|

+

|

|

31

|

+

It's suggested to generate [SSH keys](https://help.ubuntu.com/community/SSH/OpenSSH/Keys) and transfer the public key to the remote server in advance, which is more secure than password authentication.

|

|

32

|

+

|

|

33

|

+

Note that `SSH` context is [non-login](https://www.gnu.org/software/bash/manual/html_node/Bash-Startup-Files.html), so `bash_profile` files will not be executed outside the submission script.

|

|

34

|

+

|

|

35

|

+

## Bohrium

|

|

36

|

+

|

|

37

|

+

{dargs:argument}`context_type <machine/context_type>`: `Bohrium`

|

|

38

|

+

|

|

39

|

+

Bohrium is the cloud platform for scientific computing.

|

|

40

|

+

Read Bohrium documentation for details.

|

|

41

|

+

To use Bohrium, one needs to provide necessary parameters in {dargs:argument}`remote_profile <machine[BohriumContext]/remote_profile>`.

|

|

42

|

+

|

|

43

|

+

## HDFS

|

|

44

|

+

|

|

45

|

+

{dargs:argument}`context_type <machine/context_type>`: `HDFS`

|

|

46

|

+

|

|

47

|

+

The Hadoop Distributed File System (HDFS) is a distributed file system.

|

|

48

|

+

Read [Support DPDispatcher on Yarn](dpdispatcher_on_yarn.md) for details.

|

|

49

|

+

|

|

50

|

+

## OpenAPI

|

|

51

|

+

|

|

52

|

+

{dargs:argument}`context_type <machine/context_type>`: `OpenAPI`

|

|

53

|

+

|

|

54

|

+

OpenAPI is a new way to submit jobs to Bohrium. It uses [AccessKey](https://bohrium.dp.tech/personal/setting) instead of username and password. Read Bohrium documentation for details.

|

|

52

55

|

To use OpenAPI, one needs to provide necessary parameters in {dargs:argument}`remote_profile <machine[OpenAPIContext]/remote_profile>`.

|

|
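As a rough illustration of the context documentation above, the following Python sketch assembles a machine description for the `SSH` context and a resources description that sets environment variables explicitly through `envs` and `source_list`, as the text advises for scripts run under `bash -l`. The host name, user name, paths and variable values are placeholders, `missing_profile_keys` is a hypothetical helper, and the `port`, `local_root` and `remote_root` keys are assumptions to be verified against the dpdispatcher argument reference.

```python
import json

# Placeholder values throughout; replace them with those of a real cluster.
machine = {
    "batch_type": "Slurm",
    "context_type": "SSH",
    "local_root": "./",
    "remote_root": "/home/user/work",
    "remote_profile": {
        "hostname": "hpc.example.org",
        "username": "user",
        "port": 22,
    },
}

resources = {
    "group_size": 1,
    # Set the environment explicitly instead of relying on login shell
    # startup files, which may override inherited variables.
    "envs": {"OMP_NUM_THREADS": "4"},
    "source_list": ["/home/user/env.sh"],
}


def missing_profile_keys(machine_dict, required=("hostname", "username")):
    """Return the required remote_profile keys that are absent."""
    profile = machine_dict.get("remote_profile", {})
    return [key for key in required if key not in profile]


missing = missing_profile_keys(machine)
print(json.dumps({"machine": machine, "resources": resources}, indent=2))
print(missing)
```

Running the check before handing the dictionaries to dpdispatcher catches an incomplete `remote_profile` early, rather than at connection time.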

@@ -1,14 +1,19 @@

|

|

|

1

1

|

# Support DPDispatcher on Yarn

|

|

2

|

+

|

|

2

3

|

## Background

|

|

4

|

+

|

|

3

5

|

Currently, DPGen(or other DP softwares) supports for HPC systems like Slurm, PBS, LSF and cloud machines. In order to run DPGen jobs on ByteDance internal platform, we need to extend it to support yarn resources. Hadoop Ecosystem is a very commonly used platform to process the big data, and in the process of developing the new interface, we found it can be implemented by only using hadoop opensource components. So for the convenience of the masses, we decided to contribute the codes to opensource community.

|

|

4

6

|

|

|

5

7

|

## Design

|

|

8

|

+

|

|

6

9

|

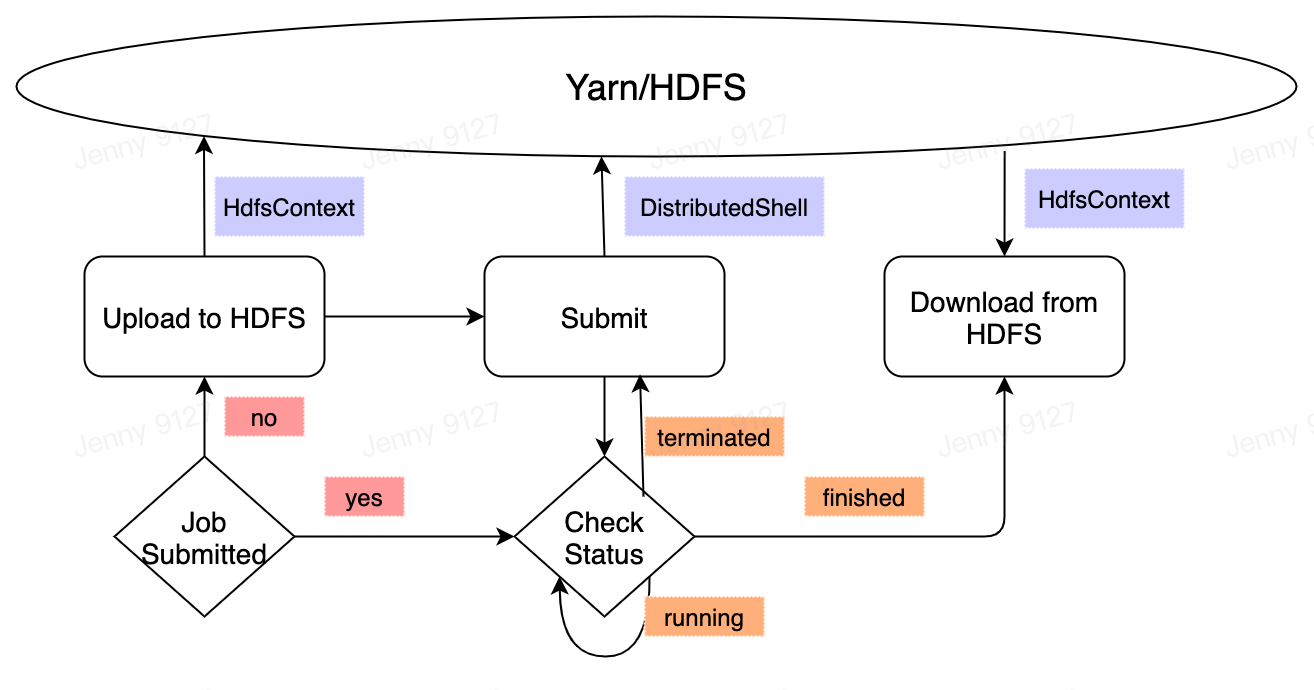

We use DistributedShell and HDFS to implement it. The control flow shows as follows:

|

|

7

10

|

|

|

11

|

+

|

|

8

12

|

- Use DistributedShell to submit yarn jobs. It contains generating shell script and submitting it to yarn queues.

|

|

9

13

|

- Use HDFS to save input files and output results. For performance reasons, we choose to pack forward files to a tar.gz file and upload it to HDFS directory. Accordingly, the task will download the tar file before running and upload result tar file to HDFS after it has done.

|

|

10

14

|

|

|
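The second design point above, packing forward files into a single `tar.gz` before uploading, can be sketched with Python's standard `tarfile` module. This is a minimal sketch under stated assumptions rather than the project's `HDFSContext` code: `pack_forward_files` and the file names are hypothetical, and the HDFS upload step is omitted.

```python
import os
import tarfile
import tempfile


def pack_forward_files(work_dir, file_names, archive_path):
    """Pack the named files from work_dir into one gzip-compressed tar archive."""
    with tarfile.open(archive_path, "w:gz") as tar:
        for name in file_names:
            # arcname keeps the paths inside the archive relative to work_dir.
            tar.add(os.path.join(work_dir, name), arcname=name)
    return archive_path


with tempfile.TemporaryDirectory() as tmp:
    # Create two small demo input files.
    for name in ("input.txt", "params.json"):
        with open(os.path.join(tmp, name), "w") as fh:
            fh.write("demo\n")

    archive = pack_forward_files(
        tmp, ["input.txt", "params.json"], os.path.join(tmp, "forward.tar.gz")
    )

    # Read the archive back to confirm what was packed.
    with tarfile.open(archive, "r:gz") as tar:
        packed_names = sorted(tar.getnames())

print(packed_names)
```

Uploading one archive instead of many small files reduces the number of round trips to the file system, which is the performance motivation given in the text.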

11

15

|

## Implement

|

|

16

|

+

|

|

12

17

|

We only need to add two Class which are HDFSContext and DistributedShell:

|

|

13

18

|

|

|

14

19

|

```

|

|

@@ -106,6 +111,7 @@ class DistributedShell(Machine):

|

|

|

106

111

|

```

|

|

107

112

|

|

|

108

113

|

The following is an example of generated shell script. It will be executed in a yarn container:

|

|

114

|

+

|

|

109

115

|

```

|

|

110

116

|

#!/bin/bash

|

|

111

117

|

|

|

@@ -146,6 +152,7 @@ hadoop fs -put -f uuid_download.tar.gz /root/uuid/sys-0001-0015

|

|

|

146

152

|

## mark the job has finished

|

|

147

153

|

hadoop fs -touchz /root/uuid/uuid_tag_finished

|

|

148

154

|

```

|

|

155

|

+

|

|

149

156

|

An example of machine.json is as follows, whose batch_type is `DistributedShell`,and context_type is `HDFSContext`:

|

|

150

157

|

|

|

151

158

|

```

|