cobweb-launcher 1.2.0__tar.gz → 1.2.2__tar.gz

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

Potentially problematic release.

This version of cobweb-launcher might be problematic.

- cobweb-launcher-1.2.2/PKG-INFO +200 -0

- cobweb-launcher-1.2.2/README.md +183 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/launchers/launcher.py +4 -3

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/launchers/launcher_pro.py +17 -37

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/pipelines/pipeline_console.py +0 -2

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/setting.py +5 -0

- cobweb-launcher-1.2.2/cobweb_launcher.egg-info/PKG-INFO +200 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/setup.py +1 -1

- cobweb-launcher-1.2.0/PKG-INFO +0 -44

- cobweb-launcher-1.2.0/README.md +0 -27

- cobweb-launcher-1.2.0/cobweb_launcher.egg-info/PKG-INFO +0 -44

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/LICENSE +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/common_queue.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/decorators.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/item.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/log.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/request.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/response.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/base/seed.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/constant.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/crawlers/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/crawlers/crawler.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/db/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/db/redis_db.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/exceptions/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/exceptions/oss_db_exception.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/launchers/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/launchers/launcher_air.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/pipelines/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/pipelines/pipeline.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/pipelines/pipeline_loghub.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/utils/__init__.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/utils/oss.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb/utils/tools.py +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb_launcher.egg-info/SOURCES.txt +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb_launcher.egg-info/dependency_links.txt +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb_launcher.egg-info/requires.txt +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb_launcher.egg-info/top_level.txt +0 -0

- {cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/setup.cfg +0 -0

|

@@ -0,0 +1,200 @@

|

|

|

1

|

+

Metadata-Version: 2.1

|

|

2

|

+

Name: cobweb-launcher

|

|

3

|

+

Version: 1.2.2

|

|

4

|

+

Summary: spider_hole

|

|

5

|

+

Home-page: https://github.com/Juannie-PP/cobweb

|

|

6

|

+

Author: Juannie-PP

|

|

7

|

+

Author-email: 2604868278@qq.com

|

|

8

|

+

License: MIT

|

|

9

|

+

Keywords: cobweb-launcher, cobweb

|

|

10

|

+

Platform: UNKNOWN

|

|

11

|

+

Classifier: Programming Language :: Python :: 3

|

|

12

|

+

Requires-Python: >=3.7

|

|

13

|

+

Description-Content-Type: text/markdown

|

|

14

|

+

License-File: LICENSE

|

|

15

|

+

|

|

16

|

+

# cobweb

|

|

17

|

+

cobweb是一个基于python的分布式爬虫调度框架,目前支持分布式爬虫,单机爬虫,支持自定义数据库,支持自定义数据存储,支持自定义数据处理等操作。

|

|

18

|

+

|

|

19

|

+

cobweb主要由3个模块和一个配置文件组成:Launcher启动器、Crawler采集器、Pipeline存储和setting配置文件。

|

|

20

|

+

1. Launcher启动器:用于启动爬虫任务,控制爬虫任务的执行流程,以及数据存储和数据处理。

|

|

21

|

+

框架提供两种启动器模式:LauncherAir、LauncherPro,分别对应单机爬虫模式和分布式调度模式。

|

|

22

|

+

2. Crawler采集器:用于控制采集流程、数据下载和数据处理。

|

|

23

|

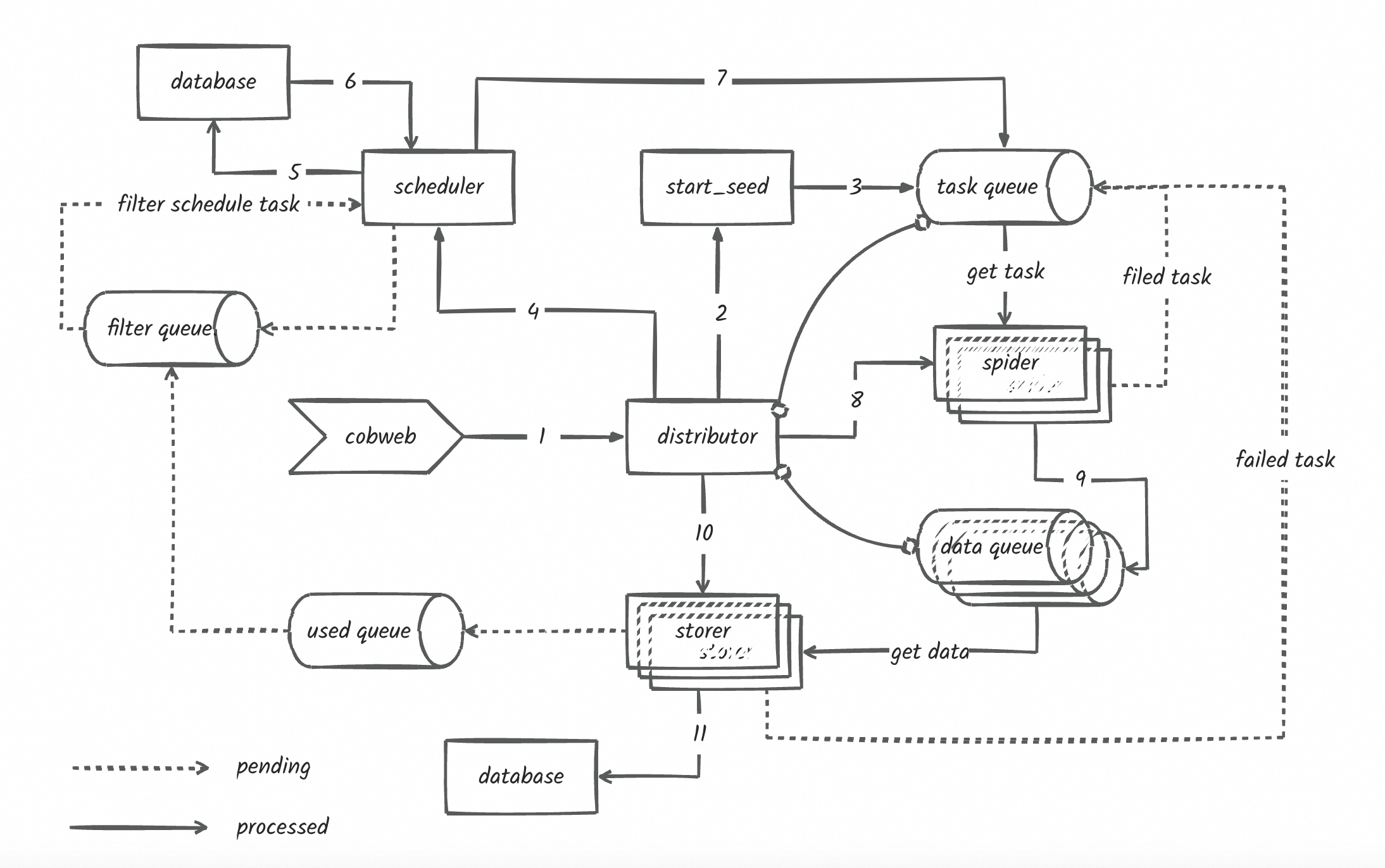

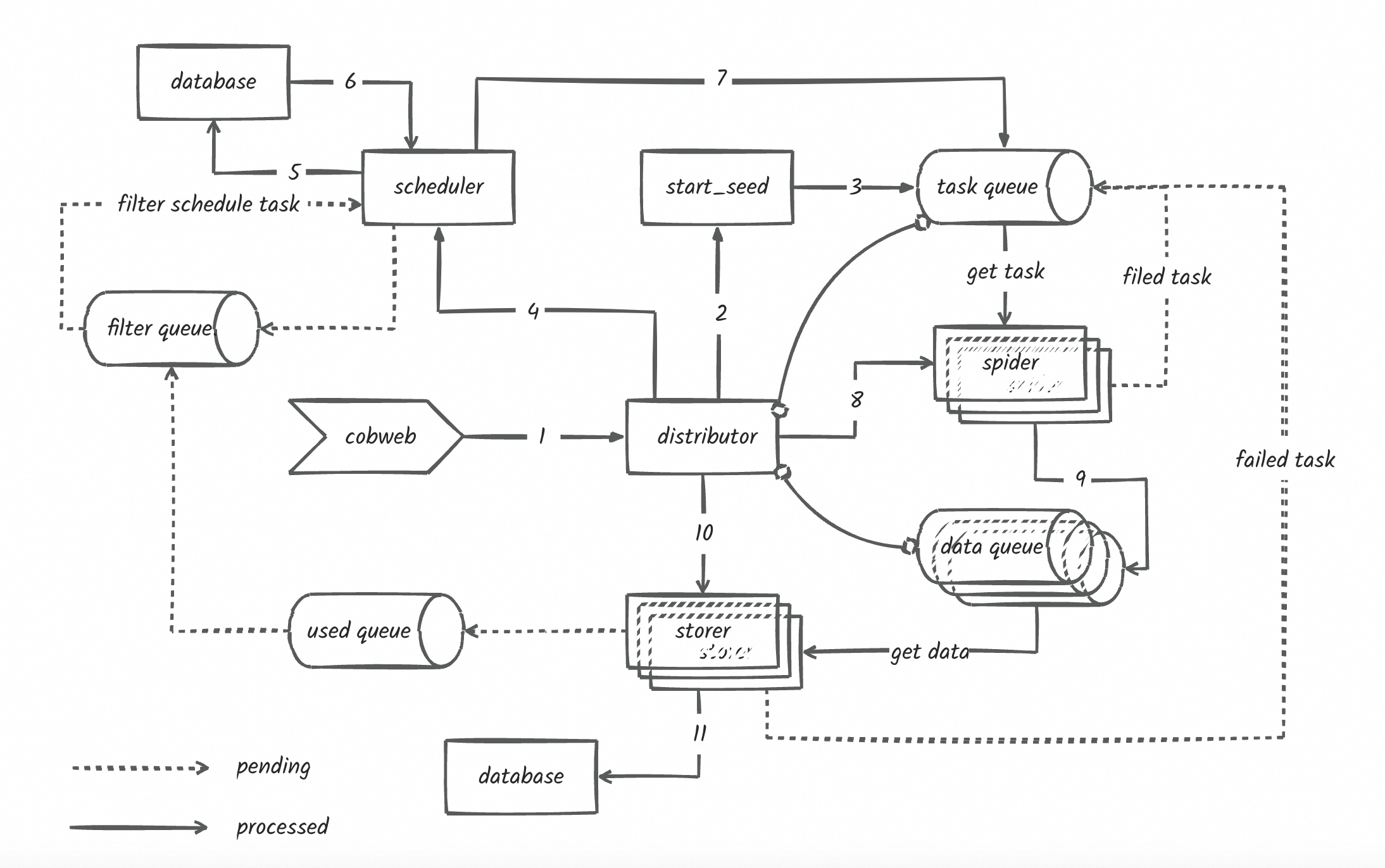

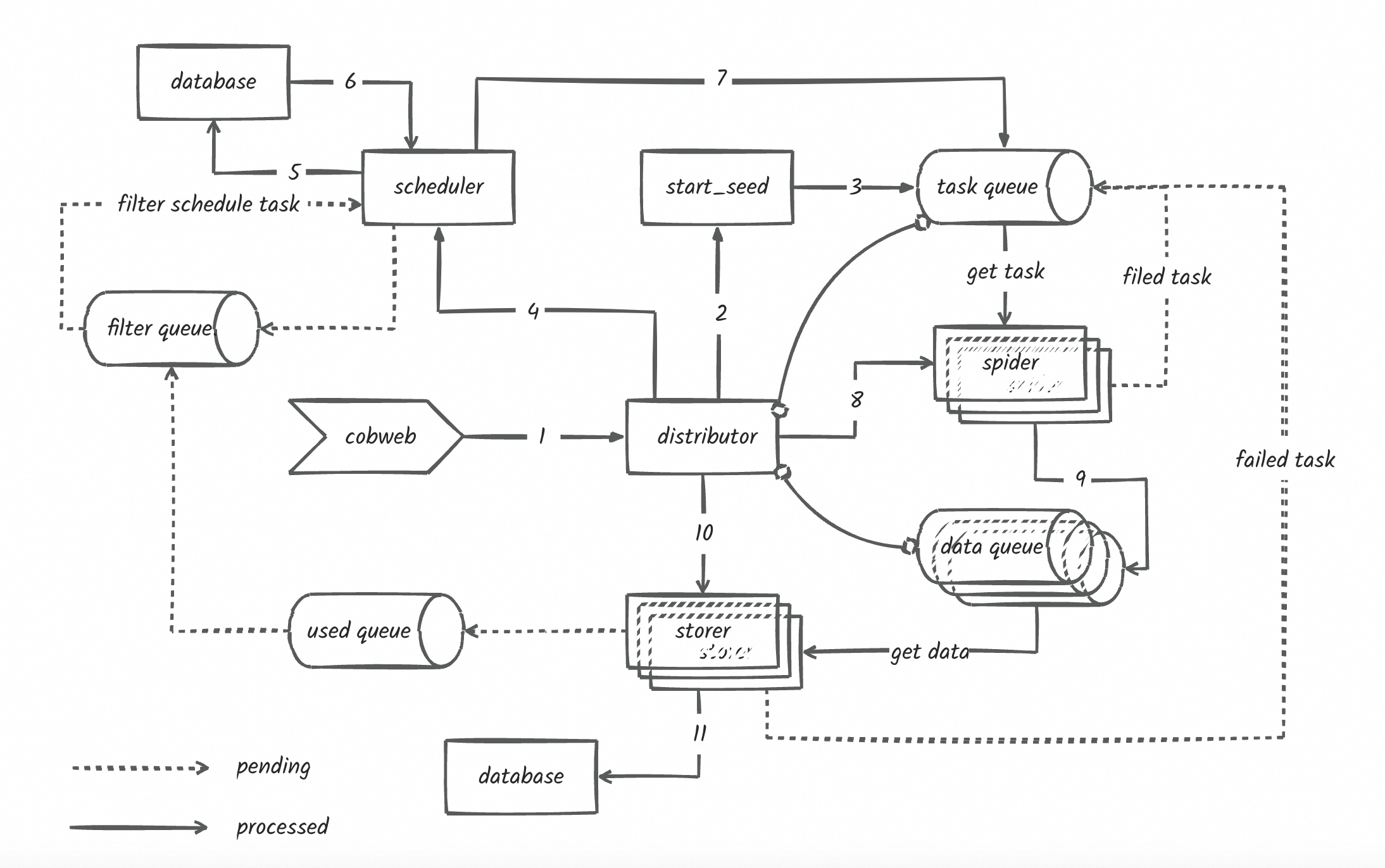

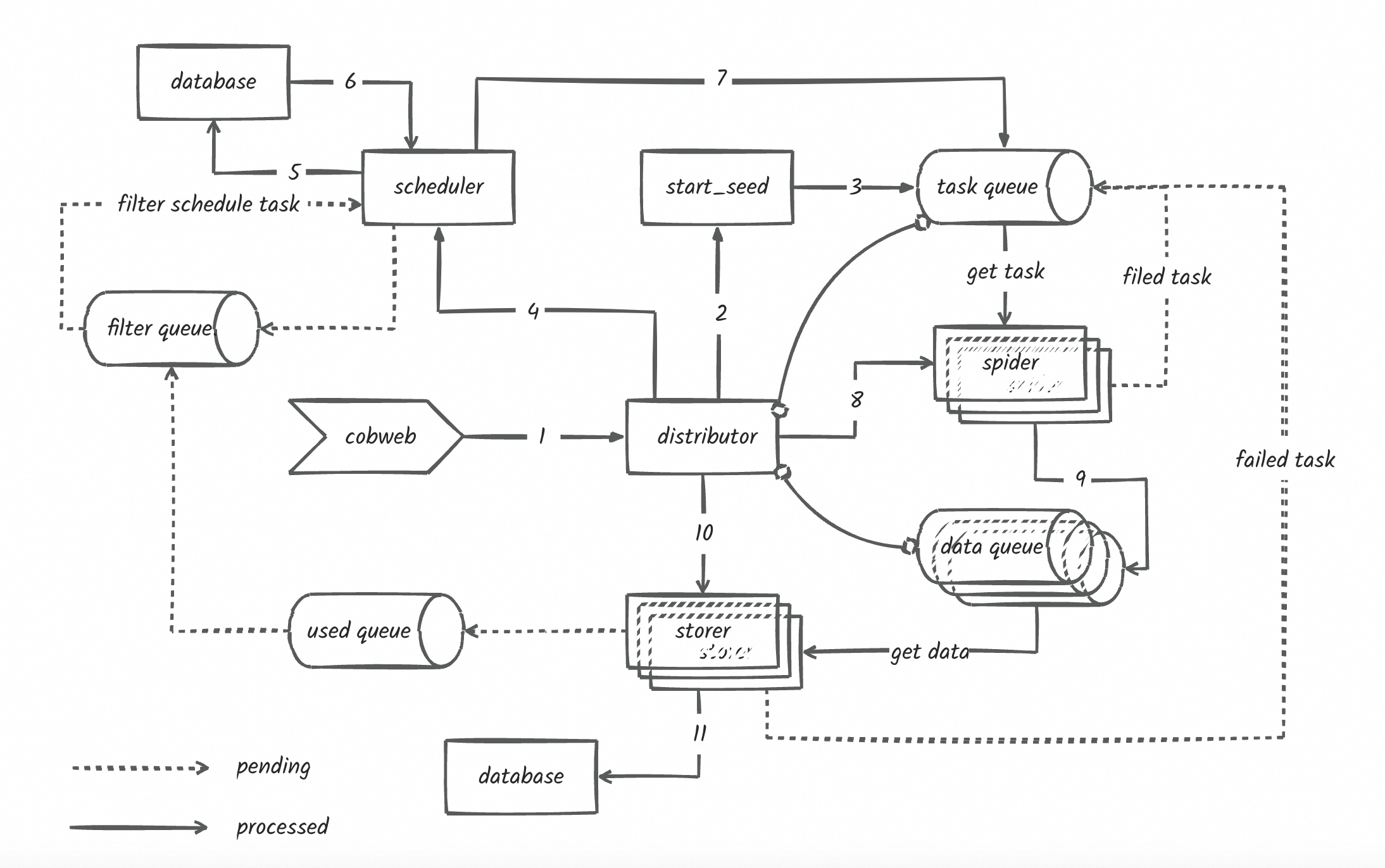

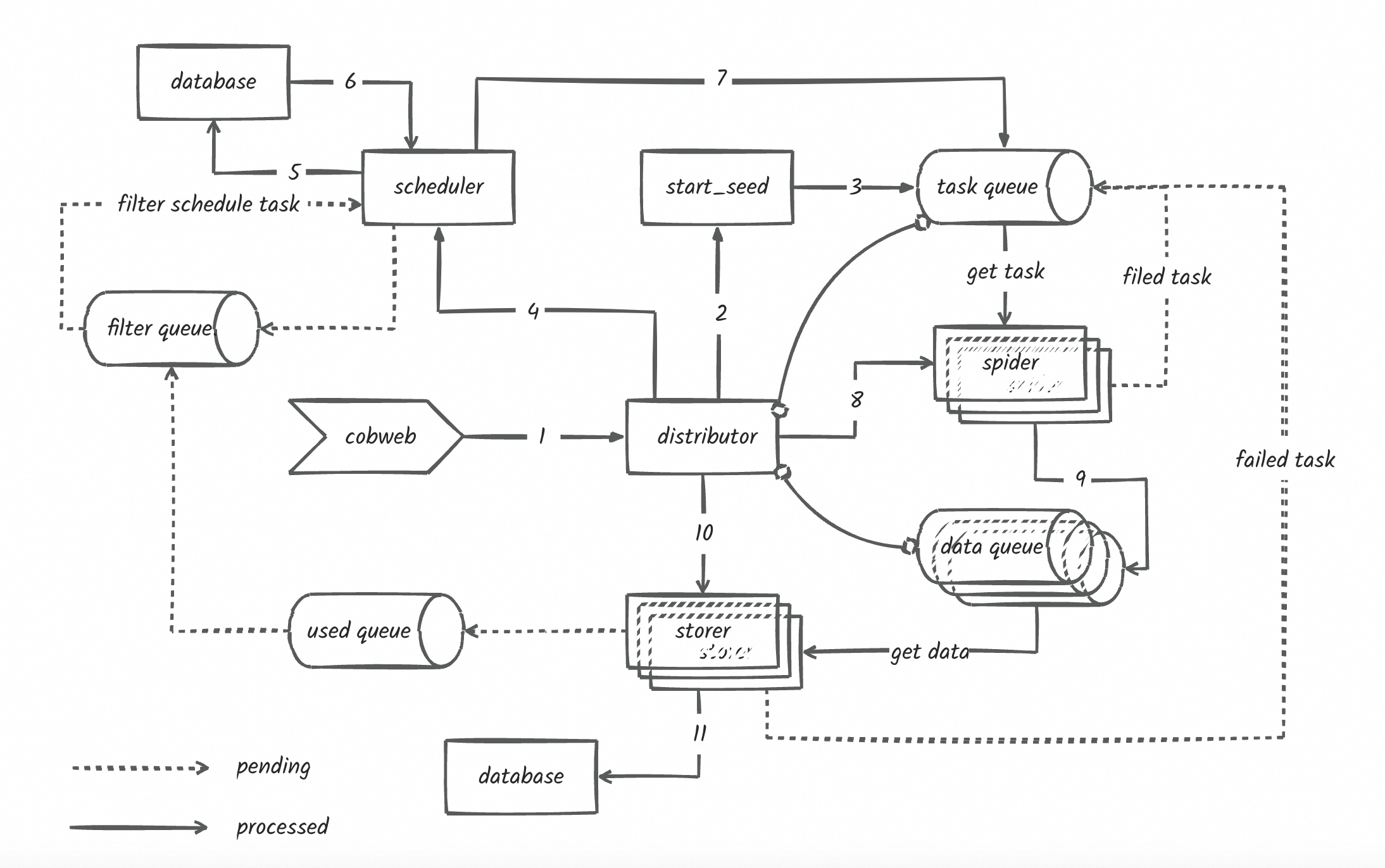

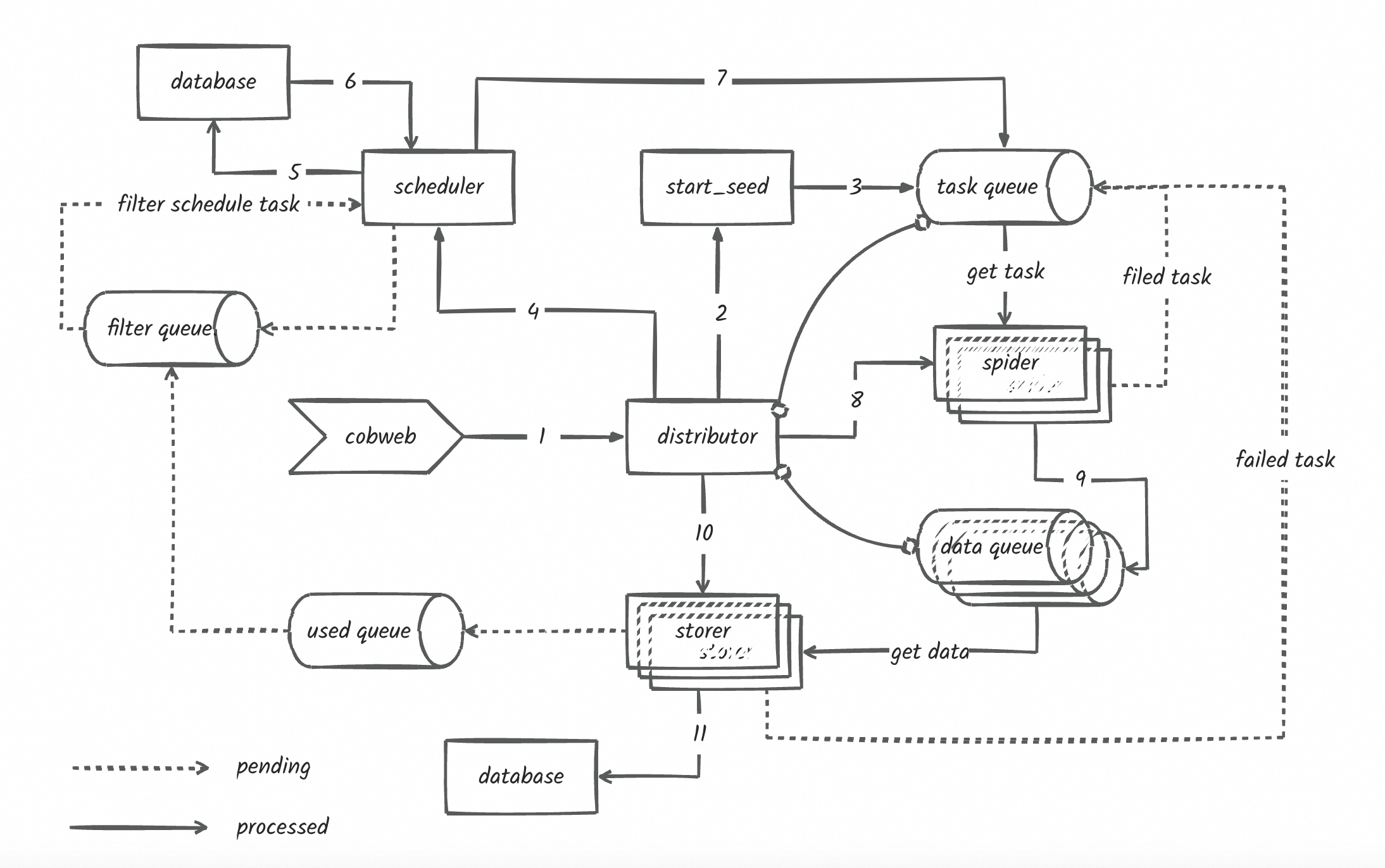

+

框架提供了基础的采集器,用于控制采集流程、数据下载和数据处理,用户也可在创建任务时自定义请求、下载和解析方法,具体看使用方法介绍。

|

|

24

|

+

3. Pipeline存储:用于存储采集到的数据,支持自定义数据存储和数据处理。框架提供了Console和Loghub两种存储方式,用户也可继承Pipeline抽象类自定义存储方式。

|

|

25

|

+

4. setting配置文件:用于配置采集器、存储器、队列长度、采集线程数等参数,框架提供了默认配置,用户也可自定义配置。
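
The sketch below makes the division of labour above concrete. It is a minimal, self-contained model of how objects might flow between the modules, written under stated assumptions. It is not cobweb's code: `Seed`, `Request`, `Response`, `Item`, the three `*_hook` functions, and `run_once` are hypothetical stand-ins. `fetch` is injected so the example runs offline. The routing rules follow the usage guide below:

- a `Request` goes to download;
- a `Response` goes to parse;
- a new `Seed` goes back onto the queue;
- any item goes to storage.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Iterator, List, Union


@dataclass
class Seed:  # hypothetical stand-in for cobweb.base.Seed
    url: str


@dataclass
class Request:  # hypothetical stand-in for cobweb.base.Request
    url: str
    seed: Seed
    timeout: int = 5


@dataclass
class Response:  # hypothetical stand-in for cobweb.base.Response
    seed: Seed
    text: str


@dataclass
class Item:  # hypothetical stand-in for a BaseItem subclass
    seed: Seed
    data: dict = field(default_factory=dict)


def request_hook(seed: Seed) -> Iterator[Union[Request, Item]]:
    # Like the README's default request method: one Request per seed.
    yield Request(seed.url, seed, timeout=5)


def download_hook(req: Request, fetch: Callable[[str], str]) -> Iterator[Union[Response, Item]]:
    yield Response(req.seed, fetch(req.url))


def parse_hook(resp: Response) -> Iterator[Union[Seed, Item]]:
    yield Item(resp.seed, {"url": resp.seed.url, "length": len(resp.text)})


def run_once(seeds: List[Seed], fetch: Callable[[str], str]) -> List[Item]:
    """Route every yielded object by type until all seeds are processed."""
    todo = deque(seeds)  # plays the role of the todo queue
    stored: List[Item] = []  # plays the role of the Pipeline
    while todo:
        pending = deque(request_hook(todo.popleft()))
        while pending:
            obj = pending.popleft()
            if isinstance(obj, Request):
                pending.extend(download_hook(obj, fetch))  # Request -> download
            elif isinstance(obj, Response):
                pending.extend(parse_hook(obj))  # Response -> parse
            elif isinstance(obj, Seed):
                todo.append(obj)  # new Seed -> back onto the queue
            else:
                stored.append(obj)  # any item -> storage
    return stored
```

For example, `run_once([Seed("https://example.com")], fetch=lambda url: "<html></html>")` returns one `Item` whose `data` is `{"url": "https://example.com", "length": 13}`. Keeping each stage a generator is what lets a hook yield zero, one, or several objects of different types.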

## Installation

```
pip3 install --upgrade cobweb-launcher
```

## Usage

### 1. Creating a task

- Creating a LauncherAir task

```python
from cobweb import LauncherAir

# Create the launcher
app = LauncherAir(task="test", project="test")

# Set the seeds to crawl
app.SEEDS = [{
    "url": "https://www.baidu.com"
}]
...
# Start the task
app.start()
```

- Creating a LauncherPro task

LauncherPro relies on Redis for distributed scheduling. To use the LauncherPro launcher, either set the Redis environment variables or configure Redis in a custom setting file. See `2. Custom configuration parameters` for how to do this.

```python
from cobweb import LauncherPro

# Create the launcher
app = LauncherPro(
    task="test",
    project="test"
)
...
# Start the task
app.start()
```

### 2. Custom configuration parameters

- Using a custom setting file, passed as an import string

> Default configuration file: `import cobweb.setting`

> Not recommended! This currently has bugs; use at your own risk.

For example, suppose you have created a custom setting.py file in the same directory:

```python
from cobweb import LauncherAir

app = LauncherAir(
    task="test",
    project="test",
    setting="import setting"
)

...

app.start()
```
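
The README does not document how the `setting="import setting"` string is resolved, and it warns that this path is currently buggy. The following is therefore only one plausible way such a string could be handled, not cobweb's implementation. The hypothetical helpers `settings_from_module` and `load_settings` work in four steps:

1. strip an optional `import ` prefix;
2. import the module with `importlib.import_module`;
3. keep its upper-case names;
4. apply keyword overrides, in the same style as the `REDIS_CONFIG` keyword argument shown in the next example.

```python
import importlib
import types
from typing import Any, Dict


def settings_from_module(module: types.ModuleType) -> Dict[str, Any]:
    """Collect UPPER_CASE attributes, the usual convention for setting names."""
    return {name: getattr(module, name) for name in dir(module) if name.isupper()}


def load_settings(import_string: str, **overrides: Any) -> Dict[str, Any]:
    """Resolve a string such as "import setting" and apply keyword overrides on top.

    Hypothetical helper for illustration; cobweb's own loader may behave differently.
    """
    module_name = import_string.strip()
    if module_name.startswith("import "):
        module_name = module_name[len("import "):].strip()
    settings = settings_from_module(importlib.import_module(module_name))
    for key, value in overrides.items():
        if key.isupper():  # only accept setting-style names as overrides
            settings[key] = value
    return settings
```

Suppose a `setting.py` sits next to your script. Then `load_settings("import setting", REDIS_CONFIG={...})` would return that file's upper-case values with `REDIS_CONFIG` replaced. Lower-case helpers and lower-case overrides are ignored.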

- Overriding individual setting values

```python
from cobweb import LauncherPro

# Create the launcher
app = LauncherPro(
    task="test",
    project="test",
    REDIS_CONFIG={
        "host": ...,
        "password": ...,
        "port": ...,
        "db": ...
    }
)
...
# Start the task
app.start()
```
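
The LauncherPro section says Redis can be configured through environment variables, but the README does not name them. The sketch below therefore uses hypothetical names (`REDIS_HOST`, `REDIS_PORT`, `REDIS_PASSWORD`, `REDIS_DB`). It builds a dict with the same keys as the `REDIS_CONFIG` example above, following the `os.getenv` pattern that `cobweb/setting.py` uses for `OSS_SECRET_KEY`. Check your installed version's `setting.py` for the real variable names.

```python
import os
from typing import Any, Dict, Mapping, Optional


def redis_config_from_env(env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Build a REDIS_CONFIG-style dict from environment variables.

    The variable names are hypothetical; cobweb may read different ones.
    """
    env = os.environ if env is None else env
    return {
        "host": env.get("REDIS_HOST", "localhost"),
        "password": env.get("REDIS_PASSWORD") or None,  # unset or empty -> no password
        "port": int(env.get("REDIS_PORT", "6379")),
        "db": int(env.get("REDIS_DB", "0")),
    }
```

You could then pass the result in as `LauncherPro(task="test", project="test", REDIS_CONFIG=redis_config_from_env())`. Converting the port and database index to `int` early means a malformed variable fails at startup rather than at the first Redis call.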

### 3. Custom requests

Use the `@app.request` decorator to wrap a custom request method. It runs before the request is sent and yields a Request object or an object that inherits from BaseItem, which lets you control the request parameters.

```python
from typing import Union
from cobweb import LauncherAir
from cobweb.base import Seed, Request, BaseItem

app = LauncherAir(
    task="test",
    project="test"
)

...

@app.request
def request(seed: Seed) -> Union[Request, BaseItem]:
    # Custom headers, proxies, request parameters, etc. can be set here
    proxies = {"http": ..., "https": ...}
    yield Request(seed.url, seed, ..., proxies=proxies, timeout=15)
    # yield xxxItem(seed, ...)  # skip the request and parsing; go straight to data storage

...

app.start()
```

> Default request method:
>
>     def request(seed: Seed) -> Union[Request, BaseItem]:
>         yield Request(seed.url, seed, timeout=5)
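
Because the request hook is a generator, it can decide per seed whether to yield a `Request` for download or an item that skips straight to storage. A self-contained sketch of that branching follows. `Seed`, `Request`, and `PageItem` are hypothetical stand-ins rather than `cobweb.base` classes. `cached_html` is an invented seed attribute used as the skip condition, and the header and proxy values are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, Optional, Union


@dataclass
class Seed:  # hypothetical stand-in for cobweb.base.Seed
    url: str
    cached_html: Optional[str] = None  # hypothetical: content already obtained elsewhere


@dataclass
class Request:  # hypothetical stand-in for cobweb.base.Request
    url: str
    seed: Seed
    headers: Dict[str, str] = field(default_factory=dict)
    proxies: Dict[str, str] = field(default_factory=dict)
    timeout: int = 5  # the README's default request method uses timeout=5


@dataclass
class PageItem:  # hypothetical BaseItem subclass
    seed: Seed
    html: str


def request(seed: Seed) -> Iterator[Union[Request, PageItem]]:
    if seed.cached_html is not None:
        # Nothing to download: hand the data straight to the storage flow.
        yield PageItem(seed, seed.cached_html)
        return
    headers = {"User-Agent": "cobweb-example/0.1"}  # placeholder value
    proxy = "http://127.0.0.1:8080"  # placeholder proxy address
    yield Request(seed.url, seed, headers=headers,
                  proxies={"http": proxy, "https": proxy}, timeout=15)
```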

### 4. Custom downloads

Use the `@app.download` decorator to wrap a custom download method. It runs when the request is made and yields a Response object or an object that inherits from BaseItem, which lets you control how the request is executed.

```python
from typing import Union
from cobweb import LauncherAir
from cobweb.base import Request, Response, BaseItem

app = LauncherAir(
    task="test",
    project="test"
)

...

@app.download
def download(item: Request) -> Union[BaseItem, Response]:
    ...
    response = ...
    ...
    yield Response(item.seed, response, ...)  # yield a Response object for parsing
    # yield xxxItem(item.seed, ...)  # skip parsing; go straight to data storage

...

app.start()
```

> Default download method:
>
>     def download(item: Request) -> Union[Seed, BaseItem, Response, str]:
>         response = item.download()
>         yield Response(item.seed, response, **item.to_dict)
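
The download hook is where the request is actually executed, which makes it a natural place for retries. This is an illustrative sketch, not part of cobweb:

- `fetch` is an injected callable (in practice it might wrap `requests.get` or `urllib.request.urlopen`);
- `Response`, `FailedItem`, `download_with_retry`, and the linear backoff policy are assumptions;
- on success it yields a response for parsing;
- if every attempt fails, it yields a failure item, so the error reaches storage instead of being dropped.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterator, Union


@dataclass
class Response:  # hypothetical stand-in for cobweb.base.Response
    url: str
    text: str


@dataclass
class FailedItem:  # hypothetical BaseItem subclass recording a failed download
    url: str
    error: str


def download_with_retry(
    url: str,
    fetch: Callable[[str], str],
    attempts: int = 3,
    backoff_seconds: float = 0.5,
) -> Iterator[Union[Response, FailedItem]]:
    last_error = ""
    for attempt in range(1, attempts + 1):
        try:
            text = fetch(url)
        except Exception as exc:  # any fetch error counts as a failed attempt
            last_error = f"{type(exc).__name__}: {exc}"
            if attempt < attempts:
                time.sleep(backoff_seconds * attempt)  # linear backoff between attempts
            continue
        yield Response(url, text)  # success: pass on for parsing
        return
    yield FailedItem(url, last_error)  # all attempts failed: send to storage
```

Passing `backoff_seconds=0` keeps tests fast. In a real crawl, the delay spaces out retries against a struggling server.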

### 5. Custom parsing

Custom parsing consists of a storage data class and a parse method. The storage data class inherits from BaseItem and defines the storage table name and fields. The parse method yields objects that inherit from BaseItem, and each yield hands data to the storage flow.

```python
from typing import Union
from cobweb import LauncherAir
from cobweb.base import Seed, Response, BaseItem

class TestItem(BaseItem):
    __TABLE__ = "test_data"  # table name
    __FIELDS__ = "field1, field2, field3"  # field names

app = LauncherAir(
    task="test",
    project="test"
)

...

@app.parse
def parse(item: Response) -> Union[Seed, BaseItem]:
    ...
    yield TestItem(item.seed, field1=..., field2=..., field3=...)
    # yield Seed(...)  # build a new seed and push it onto the consumption queue

...

app.start()
```

> Default parse method:
>
>     def parse(item: Response) -> Union[Seed, BaseItem]:
>         upload_item = item.to_dict
>         upload_item["text"] = item.response.text
>         yield ConsoleItem(item.seed, data=json.dumps(upload_item, ensure_ascii=False))
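
The default parse method copies the response's fields with `item.to_dict` and adds the body text. It then serializes the result with `json.dumps(..., ensure_ascii=False)`, which keeps non-ASCII text such as Chinese readable instead of escaping it to `\uXXXX`.

The runnable sketch below mirrors that flow with hypothetical stand-ins. `BaseItemSketch` imitates the `__TABLE__`/`__FIELDS__` convention from the `TestItem` example by rejecting undeclared fields; this is an assumption about how field lists might be enforced. `DemoItem`, `ConsoleItemSketch`, `FakeResponse`, and `default_parse` are illustrations, not cobweb classes. `FakeResponse` exposes `text` directly instead of through a wrapped `response` object.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Iterator


class BaseItemSketch:
    """Hypothetical stand-in for cobweb.base.BaseItem."""

    __TABLE__ = ""
    __FIELDS__ = ""

    def __init__(self, seed: Any, **fields: Any) -> None:
        declared = {name.strip() for name in self.__FIELDS__.split(",") if name.strip()}
        undeclared = set(fields) - declared
        if undeclared:
            raise ValueError(f"undeclared fields for {self.__TABLE__}: {sorted(undeclared)}")
        self.seed = seed
        self.fields = fields


class DemoItem(BaseItemSketch):  # mirrors the README's TestItem
    __TABLE__ = "test_data"
    __FIELDS__ = "field1, field2, field3"


class ConsoleItemSketch(BaseItemSketch):  # hypothetical stand-in for ConsoleItem
    __TABLE__ = "console"
    __FIELDS__ = "data"


@dataclass
class FakeResponse:  # hypothetical stand-in for cobweb.base.Response
    seed: str
    url: str
    status_code: int
    text: str

    @property
    def to_dict(self) -> Dict[str, Any]:
        # A property, because the README reads `item.to_dict` without calling it.
        return {"url": self.url, "status_code": self.status_code}


def default_parse(item: FakeResponse) -> Iterator[ConsoleItemSketch]:
    upload_item = item.to_dict
    upload_item["text"] = item.text
    yield ConsoleItemSketch(item.seed, data=json.dumps(upload_item, ensure_ascii=False))
```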

## To do

- Improve the queues: use the queue wait() mechanism to synchronize module execution?
- Improve logging: in standalone mode, write scheduling and saved data to files, and output structured per-task logs
- Deduplication filtering (Bloom filters, etc.)
- Loss prevention in standalone mode
- Improve Excel, MySQL, and Redis data support

> The flowchart has not been updated yet!


cobweb-launcher-1.2.2/cobweb/launchers/launcher.py

    @@ -40,6 +40,7 @@ class Launcher(threading.Thread):
             self.task = task
             self.project = project
     
    +        self._app_time = int(time.time())
             self._stop = threading.Event() # stop event
             self._pause = threading.Event() # pause event
     
    @@ -65,6 +66,7 @@ class Launcher(threading.Thread):
             self._Crawler = dynamic_load_class(setting.CRAWLER)
             self._Pipeline = dynamic_load_class(setting.PIPELINE)
     
    +        self._before_scheduler_wait_seconds = setting.BEFORE_SCHEDULER_WAIT_SECONDS
             self._scheduler_wait_seconds = setting.SCHEDULER_WAIT_SECONDS
             self._todo_queue_full_wait_seconds = setting.TODO_QUEUE_FULL_WAIT_SECONDS
             self._new_queue_wait_seconds = setting.NEW_QUEUE_WAIT_SECONDS
    @@ -83,7 +85,6 @@ class Launcher(threading.Thread):
             self._done_model = setting.DONE_MODEL
             self._task_model = setting.TASK_MODEL
     
    -        # self._upload_queue = Queue()
     
         @property
         def start_seeds(self):
    @@ -125,7 +126,7 @@ class Launcher(threading.Thread):
             Custom parse function; xxxItem is a user-defined storage data type
             use case:
                 from cobweb.base import Request, Response
    -            @launcher.
    +            @launcher.parse
                 def parse(item: Response) -> BaseItem:
                     ...
                     yield xxxItem(seed, **kwargs)
    @@ -141,7 +142,7 @@ class Launcher(threading.Thread):
         def _execute(self):
             for func_name in self.__LAUNCHER_FUNC__:
                 threading.Thread(name=func_name, target=getattr(self, func_name)).start()
    -            time.sleep(
    +            time.sleep(1)
     
         def run(self):
             threading.Thread(target=self._execute_heartbeat).start()
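
In 1.2.2, `_execute` calls `time.sleep(1)` after starting each launcher thread. This assumes the sleep sits inside the `for` loop; the page lost the original indentation. If so, the worker threads start one second apart. Below is a standalone sketch of that staggered start, with the delay as a parameter so it can be tested quickly. `start_staggered` is a hypothetical name, not cobweb's API.

```python
import threading
import time
from typing import Callable, Dict, List


def start_staggered(
    targets: Dict[str, Callable[[], None]], delay_seconds: float = 1.0
) -> List[threading.Thread]:
    """Start one named thread per target, pausing after each start."""
    threads = []
    for name, target in targets.items():
        thread = threading.Thread(name=name, target=target, daemon=True)
        thread.start()
        threads.append(thread)
        time.sleep(delay_seconds)  # give this worker a head start before the next one
    return threads
```

The trade-off is startup latency: with N launcher functions, the loop takes roughly N * `delay_seconds` to return.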

cobweb-launcher-1.2.2/cobweb/launchers/launcher_pro.py

    @@ -137,6 +137,7 @@ class LauncherPro(Launcher):
                 time.sleep(self._done_queue_wait_seconds)
     
         def _polling(self):
    +        wait_scheduler_execute = True
             check_emtpy_times = 0
             while not self._stop.is_set():
                 queue_not_empty_count = 0
    @@ -145,26 +146,35 @@ class LauncherPro(Launcher):
                 for q in self.__LAUNCHER_QUEUE__.values():
                     if q.length != 0:
                         queue_not_empty_count += 1
    +                    wait_scheduler_execute = False
     
                 if queue_not_empty_count == 0:
                     pooling_wait_seconds = 3
                     if self._pause.is_set():
                         check_emtpy_times = 0
    -                    if not self._task_model
    -
    +                    if not self._task_model and (
    +                        not wait_scheduler_execute or
    +                        int(time.time()) - self._app_time > self._before_scheduler_wait_seconds
    +                    ):
    +                        logger.info("Done! ready to close thread...")
                             self._stop.set()
    -
    +
    +                    elif self._db.zcount(self._todo_key, _min=0, _max="(1000"):
    +                        logger.info(f"Recovery {self.task} task run!")
    +                        self._pause.clear()
    +                        self._execute()
    +                    else:
    +                        logger.info("pause! waiting for resume...")
    +                elif check_emtpy_times > 2:
                         self.__DOING__ = {}
    -                    self.
    +                    if not self._db.zcount(self._todo_key, _min="-inf", _max="(1000"):
    +                        self._pause.set()
                     else:
                         logger.info(
                             "check whether the task is complete, "
                             f"reset times {3 - check_emtpy_times}"
                         )
                         check_emtpy_times += 1
    -            elif self._pause.is_set():
    -                self._pause.clear()
    -                self._execute()
                 else:
                     logger.info(LogTemplate.launcher_pro_polling.format(
                         task=self.task,
    @@ -178,36 +188,6 @@ class LauncherPro(Launcher):
                     ))
     
                 time.sleep(pooling_wait_seconds)
    -            # if self._pause.is_set():
    -            # self._pause.clear()
    -            # self._execute()
    -            #
    -            # elif queue_not_empty_count == 0:
    -            # pooling_wait_seconds = 5
    -            # check_emtpy_times += 1
    -            # else:
    -            # check_emtpy_times = 0
    -            #
    -            # if not self._db.zcount(self._todo, _min=0, _max="(1000") and check_emtpy_times > 2:
    -            # check_emtpy_times = 0
    -            # self.__DOING__ = {}
    -            # self._pause.set()
    -            #
    -            # time.sleep(pooling_wait_seconds)
    -            #
    -            # if not self._pause.is_set():
    -            # logger.info(LogTemplate.launcher_pro_polling.format(
    -            # task=self.task,
    -            # doing_len=len(self.__DOING__.keys()),
    -            # todo_len=self.__LAUNCHER_QUEUE__['todo'].length,
    -            # done_len=self.__LAUNCHER_QUEUE__['done'].length,
    -            # redis_seed_count=self._db.zcount(self._todo, "-inf", "+inf"),
    -            # redis_todo_len=self._db.zcount(self._todo, 0, "(1000"),
    -            # redis_doing_len=self._db.zcount(self._todo, "-inf", "(0"),
    -            # upload_len=self.__LAUNCHER_QUEUE__['upload'].length,
    -            # ))
    -            # elif not self._task_model:
    -            # self._stop.set()
     
             logger.info("Done! Ready to close thread...")
     
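
The rewritten `_polling` branch is easier to check as a pure function. The sketch below restates it under two assumptions:

- `zcount` models Redis `ZCOUNT` over a plain dict of member to score. A leading `(` makes a bound exclusive, and `-inf`/`+inf` are accepted.
- The score ranges come from the removed debug code: scores from `0` up to `(1000` count as todo, and `-inf` to `(0` as doing.

`zcount`, `polling_action`, and the returned action strings are hypothetical, not cobweb's API.

```python
from typing import Dict, Tuple, Union

Bound = Union[int, float, str]


def _parse_bound(bound: Bound) -> Tuple[float, bool]:
    """Return (value, exclusive) for a ZCOUNT-style bound such as 0, "(1000" or "-inf"."""
    if isinstance(bound, str) and bound.startswith("("):
        return float(bound[1:]), True
    return float(bound), False


def zcount(scores: Dict[str, float], low: Bound, high: Bound) -> int:
    """Count members whose score lies between low and high, like Redis ZCOUNT."""
    lo, lo_exclusive = _parse_bound(low)
    hi, hi_exclusive = _parse_bound(high)
    count = 0
    for score in scores.values():
        above = score > lo if lo_exclusive else score >= lo
        below = score < hi if hi_exclusive else score <= hi
        if above and below:
            count += 1
    return count


def polling_action(
    queues_empty: bool,
    paused: bool,
    task_model: bool,
    wait_scheduler_execute: bool,
    elapsed_seconds: int,
    before_scheduler_wait_seconds: int,
    check_empty_times: int,
    scores: Dict[str, float],
) -> str:
    """Return which branch of the 1.2.2 _polling loop would run (simplified)."""
    if not queues_empty:
        return "log-progress"
    if paused:
        if not task_model and (
            not wait_scheduler_execute
            or elapsed_seconds > before_scheduler_wait_seconds
        ):
            return "stop"
        if zcount(scores, 0, "(1000"):
            return "resume"  # todo seeds in Redis: clear pause, call _execute() again
        return "stay-paused"
    if check_empty_times > 2:
        # __DOING__ is reset; pause only if no seeds scored below 1000 remain in Redis
        return "reset-doing" if zcount(scores, "-inf", "(1000") else "reset-doing-and-pause"
    return "count-empty-check"
```

Read this way, the key change affects one-off runs (`TASK_MODEL` falsy). A paused launcher that has never seen work in its local queues now waits before stopping. It stops only once more than `BEFORE_SCHEDULER_WAIT_SECONDS` (60 by default in this release) have passed since start-up, which presumably gives the scheduler time to push the first seeds.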

cobweb-launcher-1.2.2/cobweb/setting.py

    @@ -26,6 +26,9 @@ OSS_SECRET_KEY = os.getenv("OSS_SECRET_KEY")
     OSS_CHUNK_SIZE = 10 * 1024 ** 2
     OSS_MIN_UPLOAD_SIZE = 1024
     
    +# message
    +MESSAGE = ""
    +
     
     # Crawler selection
     CRAWLER = "cobweb.crawlers.Crawler"
    @@ -35,6 +38,8 @@ PIPELINE = "cobweb.pipelines.pipeline_console.Console"
     
     
     # Launcher wait times
    +
    +BEFORE_SCHEDULER_WAIT_SECONDS = 60 # wait time before scheduling; only applies to one-off tasks
     SCHEDULER_WAIT_SECONDS = 15 # scheduling wait time
     TODO_QUEUE_FULL_WAIT_SECONDS = 5 # wait time when the todo queue is full
     NEW_QUEUE_WAIT_SECONDS = 30 # wait time for the new queue

@@ -0,0 +1,200 @@

|

|

|

1

|

+

Metadata-Version: 2.1

|

|

2

|

+

Name: cobweb-launcher

|

|

3

|

+

Version: 1.2.2

|

|

4

|

+

Summary: spider_hole

|

|

5

|

+

Home-page: https://github.com/Juannie-PP/cobweb

|

|

6

|

+

Author: Juannie-PP

|

|

7

|

+

Author-email: 2604868278@qq.com

|

|

8

|

+

License: MIT

|

|

9

|

+

Keywords: cobweb-launcher, cobweb

|

|

10

|

+

Platform: UNKNOWN

|

|

11

|

+

Classifier: Programming Language :: Python :: 3

|

|

12

|

+

Requires-Python: >=3.7

|

|

13

|

+

Description-Content-Type: text/markdown

|

|

14

|

+

License-File: LICENSE

|

|

15

|

+

|

|

16

|

+

# cobweb

|

|

17

|

+

cobweb是一个基于python的分布式爬虫调度框架,目前支持分布式爬虫,单机爬虫,支持自定义数据库,支持自定义数据存储,支持自定义数据处理等操作。

|

|

18

|

+

|

|

19

|

+

cobweb主要由3个模块和一个配置文件组成:Launcher启动器、Crawler采集器、Pipeline存储和setting配置文件。

|

|

20

|

+

1. Launcher启动器:用于启动爬虫任务,控制爬虫任务的执行流程,以及数据存储和数据处理。

|

|

21

|

+

框架提供两种启动器模式:LauncherAir、LauncherPro,分别对应单机爬虫模式和分布式调度模式。

|

|

22

|

+

2. Crawler采集器:用于控制采集流程、数据下载和数据处理。

|

|

23

|

+

框架提供了基础的采集器,用于控制采集流程、数据下载和数据处理,用户也可在创建任务时自定义请求、下载和解析方法,具体看使用方法介绍。

|

|

24

|

+

3. Pipeline存储:用于存储采集到的数据,支持自定义数据存储和数据处理。框架提供了Console和Loghub两种存储方式,用户也可继承Pipeline抽象类自定义存储方式。

|

|

25

|

+

4. setting配置文件:用于配置采集器、存储器、队列长度、采集线程数等参数,框架提供了默认配置,用户也可自定义配置。

|

|

26

|

+

## 安装

|

|

27

|

+

```

|

|

28

|

+

pip3 install --upgrade cobweb-launcher

|

|

29

|

+

```

|

|

30

|

+

## 使用方法介绍

|

|

31

|

+

### 1. 任务创建

|

|

32

|

+

- LauncherAir任务创建

|

|

33

|

+

```python

|

|

34

|

+

from cobweb import LauncherAir

|

|

35

|

+

|

|

36

|

+

# 创建启动器

|

|

37

|

+

app = LauncherAir(task="test", project="test")

|

|

38

|

+

|

|

39

|

+

# 设置采集种子

|

|

40

|

+

app.SEEDS = [{

|

|

41

|

+

"url": "https://www.baidu.com"

|

|

42

|

+

}]

|

|

43

|

+

...

|

|

44

|

+

# 启动任务

|

|

45

|

+

app.start()

|

|

46

|

+

```

|

|

47

|

+

- LauncherPro任务创建

|

|

48

|

+

LauncherPro依赖redis实现分布式调度,使用LauncherPro启动器需要完成环境变量的配置或自定义setting文件中的redis配置,如何配置查看`2. 自定义配置文件参数`

|

|

49

|

+

```python

|

|

50

|

+

from cobweb import LauncherPro

|

|

51

|

+

|

|

52

|

+

# 创建启动器

|

|

53

|

+

app = LauncherPro(

|

|

54

|

+

task="test",

|

|

55

|

+

project="test"

|

|

56

|

+

)

|

|

57

|

+

...

|

|

58

|

+

# 启动任务

|

|

59

|

+

app.start()

|

|

60

|

+

```

|

|

61

|

+

### 2. 自定义配置文件参数

|

|

62

|

+

- 通过自定义setting文件,配置文件导入字符串方式

|

|

63

|

+

> 默认配置文件:import cobweb.setting

|

|

64

|

+

> 不推荐!!!目前有bug,随缘使用...

|

|

65

|

+

例如:同级目录下自定义创建了setting.py文件。

|

|

66

|

+

```python

|

|

67

|

+

from cobweb import LauncherAir

|

|

68

|

+

|

|

69

|

+

app = LauncherAir(

|

|

70

|

+

task="test",

|

|

71

|

+

project="test",

|

|

72

|

+

setting="import setting"

|

|

73

|

+

)

|

|

74

|

+

|

|

75

|

+

...

|

|

76

|

+

|

|

77

|

+

app.start()

|

|

78

|

+

```

|

|

79

|

+

- 自定义修改setting中对象值

|

|

80

|

+

```python

|

|

81

|

+

from cobweb import LauncherPro

|

|

82

|

+

|

|

83

|

+

# 创建启动器

|

|

84

|

+

app = LauncherPro(

|

|

85

|

+

task="test",

|

|

86

|

+

project="test",

|

|

87

|

+

REDIS_CONFIG = {

|

|

88

|

+

"host": ...,

|

|

89

|

+

"password":...,

|

|

90

|

+

"port": ...,

|

|

91

|

+

"db": ...

|

|

92

|

+

}

|

|

93

|

+

)

|

|

94

|

+

...

|

|

95

|

+

# 启动任务

|

|

96

|

+

app.start()

|

|

97

|

+

```

|

|

98

|

+

### 3. 自定义请求

|

|

99

|

+

`@app.request`使用装饰器封装自定义请求方法,作用于发生请求前的操作,返回Request对象或继承于BaseItem对象,用于控制请求参数。

|

|

100

|

+

```python

|

|

101

|

+

from typing import Union

|

|

102

|

+

from cobweb import LauncherAir

|

|

103

|

+

from cobweb.base import Seed, Request, BaseItem

|

|

104

|

+

|

|

105

|

+

app = LauncherAir(

|

|

106

|

+

task="test",

|

|

107

|

+

project="test"

|

|

108

|

+

)

|

|

109

|

+

|

|

110

|

+

...

|

|

111

|

+

|

|

112

|

+

@app.request

|

|

113

|

+

def request(seed: Seed) -> Union[Request, BaseItem]:

|

|

114

|

+

# 可自定义headers,代理,构造请求参数等操作

|

|

115

|

+

proxies = {"http": ..., "https": ...}

|

|

116

|

+

yield Request(seed.url, seed, ..., proxies=proxies, timeout=15)

|

|

117

|

+

# yield xxxItem(seed, ...) # 跳过请求和解析直接进入数据存储流程

|

|

118

|

+

|

|

119

|

+

...

|

|

120

|

+

|

|

121

|

+

app.start()

|

|

122

|

+

```

|

|

123

|

+

> 默认请求方法

|

|

124

|

+

> def request(seed: Seed) -> Union[Request, BaseItem]:

|

|

125

|

+

> yield Request(seed.url, seed, timeout=5)

|

|

126

|

+

### 4. 自定义下载

|

|

127

|

+

`@app.download`使用装饰器封装自定义下载方法,作用于发生请求时的操作,返回Response对象或继承于BaseItem对象,用于控制请求参数。

|

|

128

|

+

```python

|

|

129

|

+

from typing import Union

|

|

130

|

+

from cobweb import LauncherAir

|

|

131

|

+

from cobweb.base import Request, Response, BaseItem

|

|

132

|

+

|

|

133

|

+

app = LauncherAir(

|

|

134

|

+

task="test",

|

|

135

|

+

project="test"

|

|

136

|

+

)

|

|

137

|

+

|

|

138

|

+

...

|

|

139

|

+

|

|

140

|

+

@app.download

|

|

141

|

+

def download(item: Request) -> Union[BaseItem, Response]:

|

|

142

|

+

...

|

|

143

|

+

response = ...

|

|

144

|

+

...

|

|

145

|

+

yield Response(item.seed, response, ...) # 返回Response对象,进行解析

|

|

146

|

+

# yield xxxItem(seed, ...) # 跳过请求和解析直接进入数据存储流程

|

|

147

|

+

|

|

148

|

+

...

|

|

149

|

+

|

|

150

|

+

app.start()

|

|

151

|

+

```

|

|

152

|

+

> 默认下载方法

|

|

153

|

+

> def download(item: Request) -> Union[Seed, BaseItem, Response, str]:

|

|

154

|

+

> response = item.download()

|

|

155

|

+

> yield Response(item.seed, response, **item.to_dict)

|

|

156

|

+

### 5. 自定义解析

|

|

157

|

+

自定义解析需要由一个存储数据类和解析方法组成。存储数据类继承于BaseItem的对象,规定存储表名及字段,

|

|

158

|

+

解析方法返回继承于BaseItem的对象,yield返回进行控制数据存储流程。

|

|

159

|

+

```python
from typing import Union
from cobweb import LauncherAir
from cobweb.base import Seed, Response, BaseItem

class TestItem(BaseItem):
    __TABLE__ = "test_data"  # table name
    __FIELDS__ = "field1, field2, field3"  # field names

app = LauncherAir(
    task="test",
    project="test"
)

...

@app.parse
def parse(item: Response) -> Union[Seed, BaseItem]:
    ...
    yield TestItem(item.seed, field1=..., field2=..., field3=...)
    # yield Seed(...)  # build a new seed and push it onto the consumer queue

...

app.start()
```

> Default parse method
> def parse(item: Response) -> Union[Seed, BaseItem]:
> upload_item = item.to_dict
> upload_item["text"] = item.response.text
> yield ConsoleItem(item.seed, data=json.dumps(upload_item, ensure_ascii=False))

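How a storage class's `__TABLE__` and `__FIELDS__` declarations might be consumed can be sketched as follows. `SketchItem` is a hypothetical stand-in for `BaseItem`, not its actual implementation: it assumes `__FIELDS__` is a comma-separated string (as in `TestItem` above), rejects undeclared keyword fields, fills missing ones with `None`, and builds a parameterized `INSERT` using the `%s` placeholder style of MySQL drivers such as PyMySQL. cobweb's own storage pipelines may handle these attributes differently.

```python
from typing import Any, Dict, List, Tuple


class SketchItem:
    """Hypothetical stand-in for BaseItem: validates fields declared in __FIELDS__."""
    __TABLE__ = ""
    __FIELDS__ = ""

    def __init__(self, seed: Any, **data: Any) -> None:
        self.seed = seed
        declared = self.fields()
        unknown = set(data) - set(declared)
        if unknown:
            raise ValueError(f"undeclared fields: {sorted(unknown)}")
        # Declared fields that were not passed are stored as None.
        self.data: Dict[str, Any] = {name: data.get(name) for name in declared}

    @classmethod
    def fields(cls) -> List[str]:
        # "field1, field2, field3" -> ["field1", "field2", "field3"]
        return [name.strip() for name in cls.__FIELDS__.split(",") if name.strip()]

    def to_insert(self) -> Tuple[str, Tuple[Any, ...]]:
        """Build a parameterized INSERT statement for this item's table."""
        cols = self.fields()
        placeholders = ", ".join(["%s"] * len(cols))
        sql = f"INSERT INTO {self.__TABLE__} ({', '.join(cols)}) VALUES ({placeholders})"
        return sql, tuple(self.data[c] for c in cols)


class TestItem(SketchItem):
    __TABLE__ = "test_data"
    __FIELDS__ = "field1, field2, field3"


item = TestItem("seed-1", field1="a", field2=2)
sql, params = item.to_insert()
print(sql)     # INSERT INTO test_data (field1, field2, field3) VALUES (%s, %s, %s)
print(params)  # ('a', 2, None)
```

Keeping values out of the SQL string and passing them as parameters lets the database driver handle quoting.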

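## To do
- Improve the queues: use the queue's wait() mechanism to synchronize module execution?
- Improve logging: in standalone mode, write scheduling information and saved data to files, and output structured logs for each task
- Deduplication filtering (Bloom filters, etc.)
- Loss prevention in standalone mode
- Complete the Excel, MySQL, and Redis data handling
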
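The deduplication item could take roughly the following shape. This is a minimal in-memory Bloom filter over seed strings, not anything cobweb ships: the class name, the `capacity`/`error_rate` parameters, and the use of SHA-256 with double hashing are assumptions for illustration. It sizes the bit array with the standard formulas m = -n·ln(p)/(ln 2)² and k = (m/n)·ln 2, so once it holds `capacity` seeds it can report false positives (a new seed treated as already seen) at roughly rate p, but never false negatives. For the distributed mode, the bit array would need to live in shared storage (for example a Redis bitmap) rather than in process memory.

```python
import hashlib
import math
from typing import Iterator


class BloomFilter:
    """Minimal in-memory Bloom filter for seed deduplication (illustrative sketch)."""

    def __init__(self, capacity: int, error_rate: float = 0.001) -> None:
        # Standard sizing: m = -n*ln(p) / (ln 2)^2 bits, k = (m/n) * ln 2 hashes.
        self.size = math.ceil(-capacity * math.log(error_rate) / (math.log(2) ** 2))
        self.hash_count = max(1, round(self.size / capacity * math.log(2)))
        self.bits = bytearray((self.size + 7) // 8)

    def _positions(self, key: str) -> Iterator[int]:
        # Double hashing: derive k bit positions from two 64-bit slices of one digest.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd, non-zero step
        for i in range(self.hash_count):
            yield (h1 + i * h2) % self.size

    def add(self, key: str) -> bool:
        """Set the key's bits; return True if any bit was previously unset (new key)."""
        is_new = False
        for pos in self._positions(key):
            byte, bit = divmod(pos, 8)
            if not self.bits[byte] & (1 << bit):
                is_new = True
                self.bits[byte] |= 1 << bit
        return is_new

    def __contains__(self, key: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


seen = BloomFilter(capacity=10_000)
for url in ["https://a.example/1", "https://a.example/2", "https://a.example/1"]:
    if seen.add(url):
        print("crawl", url)
    else:
        print("skip duplicate", url)
```

A Bloom filter trades exactness for memory: roughly 144,000 bits (about 18 KB) cover 10,000 seeds at a 0.1% false-positive rate, far less than storing every URL.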

> The flowchart has not been updated!!!


cobweb-launcher-1.2.0/PKG-INFO
DELETED
@@ -1,44 +0,0 @@
Metadata-Version: 2.1
Name: cobweb-launcher
Version: 1.2.0
Summary: spider_hole
Home-page: https://github.com/Juannie-PP/cobweb
Author: Juannie-PP
Author-email: 2604868278@qq.com
License: MIT
Keywords: cobweb-launcher, cobweb
Platform: UNKNOWN
Classifier: Programming Language :: Python :: 3
Requires-Python: >=3.7
Description-Content-Type: text/markdown
License-File: LICENSE

# cobweb

> General-purpose crawler framework: 1. standalone collection framework; 2. distributed collection framework
>
> Five components
>
> 1. starter -- launcher
>
> 2. scheduler -- scheduler
>
> 3. distributor -- distributor
>
> 4. storer -- storage
>
> 5. utils -- utility functions
>

To do
- Improve the queues: use the queue's wait() mechanism to synchronize module execution?
- Improve logging: in standalone mode, write scheduling information and saved data to files, and output structured logs for each task
- Deduplication filtering (Bloom filters, etc.)
- Loss prevention (in standalone mode, seeds can be checked via the log files)
- Custom database support
- Complete the Excel, MySQL, and Redis data handling


cobweb-launcher-1.2.0/README.md
DELETED
@@ -1,27 +0,0 @@
# cobweb

> General-purpose crawler framework: 1. standalone collection framework; 2. distributed collection framework
>
> Five components
>
> 1. starter -- launcher
>
> 2. scheduler -- scheduler
>
> 3. distributor -- distributor
>
> 4. storer -- storage
>
> 5. utils -- utility functions
>

To do
- Improve the queues: use the queue's wait() mechanism to synchronize module execution?
- Improve logging: in standalone mode, write scheduling information and saved data to files, and output structured logs for each task
- Deduplication filtering (Bloom filters, etc.)
- Loss prevention (in standalone mode, seeds can be checked via the log files)
- Custom database support
- Complete the Excel, MySQL, and Redis data handling


cobweb-launcher-1.2.0/cobweb_launcher.egg-info/PKG-INFO
DELETED
@@ -1,44 +0,0 @@
Metadata-Version: 2.1
Name: cobweb-launcher
Version: 1.2.0
Summary: spider_hole
Home-page: https://github.com/Juannie-PP/cobweb
Author: Juannie-PP
Author-email: 2604868278@qq.com
License: MIT
Keywords: cobweb-launcher, cobweb
Platform: UNKNOWN
Classifier: Programming Language :: Python :: 3
Requires-Python: >=3.7
Description-Content-Type: text/markdown
License-File: LICENSE

# cobweb

> General-purpose crawler framework: 1. standalone collection framework; 2. distributed collection framework
>
> Five components
>
> 1. starter -- launcher
>
> 2. scheduler -- scheduler
>
> 3. distributor -- distributor
>
> 4. storer -- storage
>
> 5. utils -- utility functions
>

To do
- Improve the queues: use the queue's wait() mechanism to synchronize module execution?
- Improve logging: in standalone mode, write scheduling information and saved data to files, and output structured logs for each task
- Deduplication filtering (Bloom filters, etc.)
- Loss prevention (in standalone mode, seeds can be checked via the log files)
- Custom database support
- Complete the Excel, MySQL, and Redis data handling



{cobweb-launcher-1.2.0 → cobweb-launcher-1.2.2}/cobweb_launcher.egg-info/dependency_links.txt

RENAMED


File without changes
