cobweb-launcher 0.0.1__tar.gz


- cobweb-launcher-0.0.1/LICENSE +21 -0

- cobweb-launcher-0.0.1/PKG-INFO +41 -0

- cobweb-launcher-0.0.1/README.md +27 -0

- cobweb-launcher-0.0.1/cobweb/__init__.py +2 -0

- cobweb-launcher-0.0.1/cobweb/base/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/base/bbb.py +187 -0

- cobweb-launcher-0.0.1/cobweb/base/config.py +164 -0

- cobweb-launcher-0.0.1/cobweb/base/decorators.py +95 -0

- cobweb-launcher-0.0.1/cobweb/base/hash_table.py +60 -0

- cobweb-launcher-0.0.1/cobweb/base/interface.py +44 -0

- cobweb-launcher-0.0.1/cobweb/base/log.py +96 -0

- cobweb-launcher-0.0.1/cobweb/base/queue_tmp.py +60 -0

- cobweb-launcher-0.0.1/cobweb/base/request.py +62 -0

- cobweb-launcher-0.0.1/cobweb/base/task.py +38 -0

- cobweb-launcher-0.0.1/cobweb/base/utils.py +15 -0

- cobweb-launcher-0.0.1/cobweb/db/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/db/base/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/db/base/client_db.py +1 -0

- cobweb-launcher-0.0.1/cobweb/db/base/oss_db.py +116 -0

- cobweb-launcher-0.0.1/cobweb/db/base/redis_db.py +214 -0

- cobweb-launcher-0.0.1/cobweb/db/base/redis_dbv3.py +231 -0

- cobweb-launcher-0.0.1/cobweb/db/scheduler/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/db/scheduler/default.py +8 -0

- cobweb-launcher-0.0.1/cobweb/db/scheduler/textfile.py +29 -0

- cobweb-launcher-0.0.1/cobweb/db/storer/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/db/storer/console.py +10 -0

- cobweb-launcher-0.0.1/cobweb/db/storer/loghub.py +55 -0

- cobweb-launcher-0.0.1/cobweb/db/storer/redis.py +16 -0

- cobweb-launcher-0.0.1/cobweb/db/storer/textfile.py +16 -0

- cobweb-launcher-0.0.1/cobweb/distributed/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/distributed/launcher.py +194 -0

- cobweb-launcher-0.0.1/cobweb/distributed/models.py +140 -0

- cobweb-launcher-0.0.1/cobweb/single/__init__.py +0 -0

- cobweb-launcher-0.0.1/cobweb/single/models.py +104 -0

- cobweb-launcher-0.0.1/cobweb/single/nest.py +153 -0

- cobweb-launcher-0.0.1/cobweb_launcher.egg-info/PKG-INFO +41 -0

- cobweb-launcher-0.0.1/cobweb_launcher.egg-info/SOURCES.txt +40 -0

- cobweb-launcher-0.0.1/cobweb_launcher.egg-info/dependency_links.txt +1 -0

- cobweb-launcher-0.0.1/cobweb_launcher.egg-info/requires.txt +4 -0

- cobweb-launcher-0.0.1/cobweb_launcher.egg-info/top_level.txt +1 -0

- cobweb-launcher-0.0.1/setup.cfg +4 -0

- cobweb-launcher-0.0.1/setup.py +26 -0

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2024 Juannie
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

@@ -0,0 +1,41 @@

|

|

|

1

|

+

Metadata-Version: 2.1

|

|

2

|

+

Name: cobweb-launcher

|

|

3

|

+

Version: 0.0.1

|

|

4

|

+

Summary: spider_hole

|

|

5

|

+

Home-page: https://github.com/Juannie-PP/cobweb

|

|

6

|

+

Author: Juannie-PP

|

|

7

|

+

Author-email: 2604868278@qq.com

|

|

8

|

+

License: MIT

|

|

9

|

+

Keywords: cobweb

|

|

10

|

+

Classifier: Programming Language :: Python :: 3

|

|

11

|

+

Requires-Python: >=3.7

|

|

12

|

+

Description-Content-Type: text/markdown

|

|

13

|

+

License-File: LICENSE

|

|

14

|

+

|

|

15

|

+

# cobweb

|

|

16

|

+

|

|

17

|

+

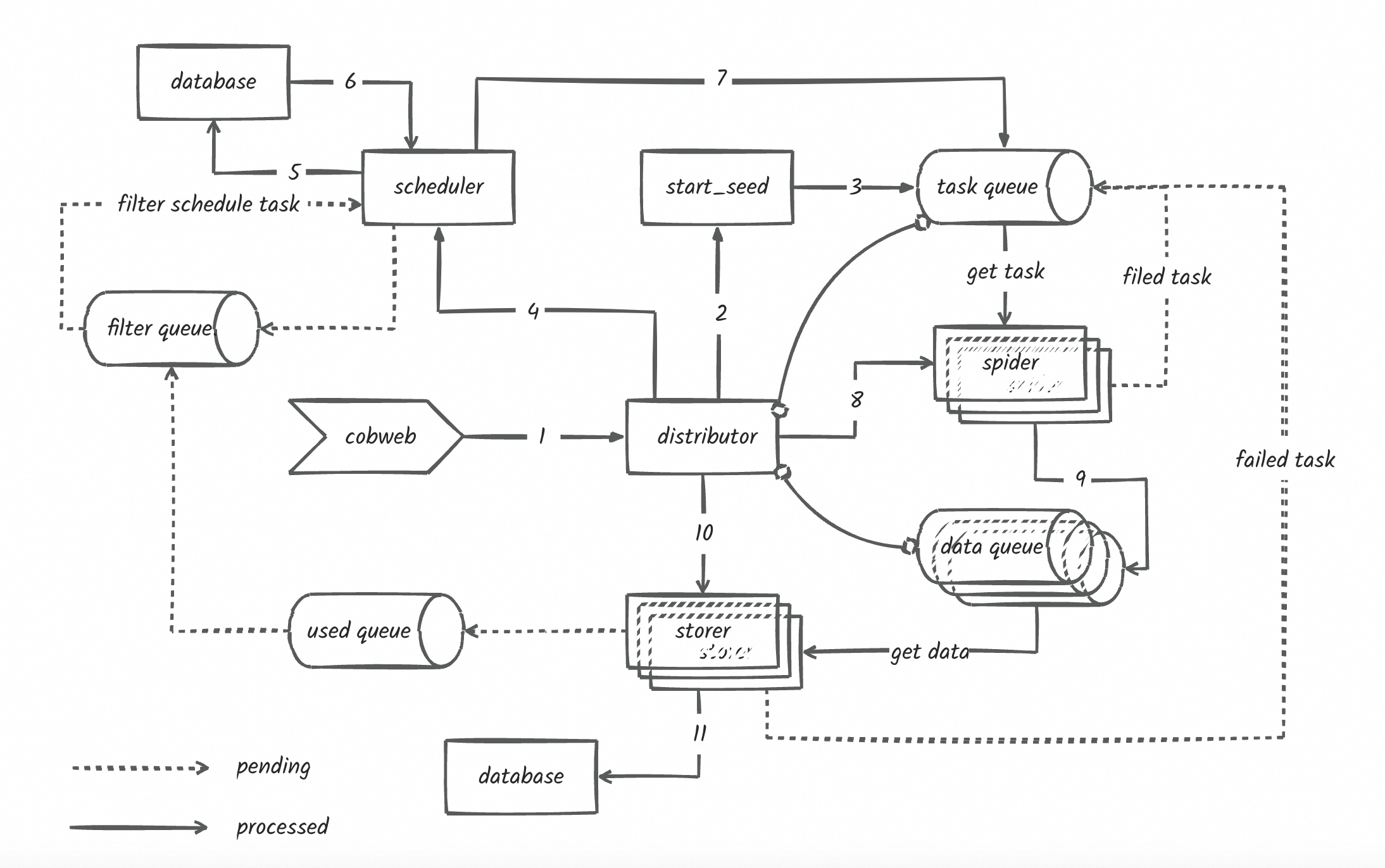

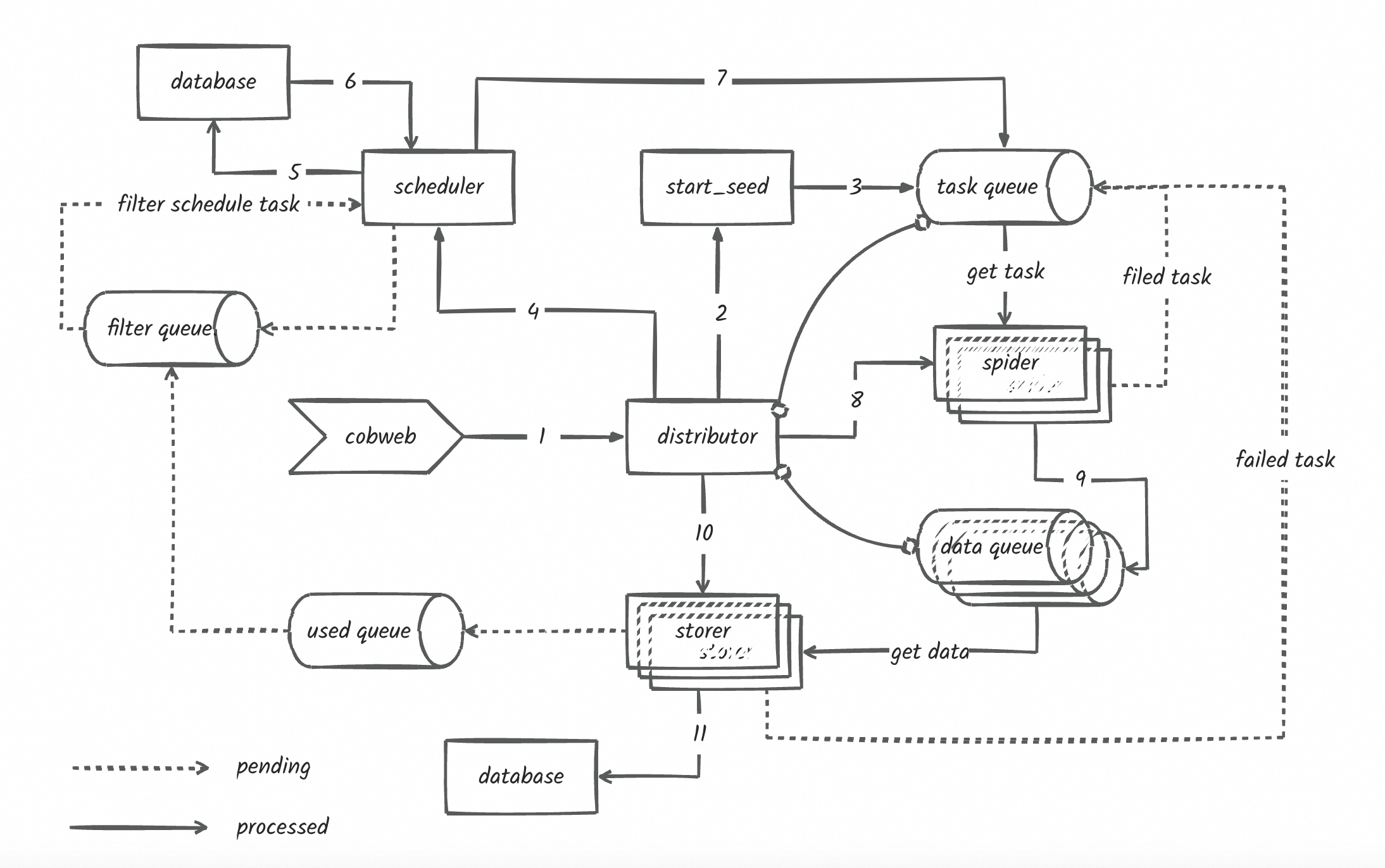

> 通用爬虫框架: 1.单机模式采集框架;2.分布式采集框架

|

|

18

|

+

>

|

|

19

|

+

> 5部分

|

|

20

|

+

>

|

|

21

|

+

> 1. starter -- 启动器

|

|

22

|

+

>

|

|

23

|

+

> 2. scheduler -- 调度器

|

|

24

|

+

>

|

|

25

|

+

> 3. distributor -- 分发器

|

|

26

|

+

>

|

|

27

|

+

> 4. storer -- 存储器

|

|

28

|

+

>

|

|

29

|

+

> 5. utils -- 工具函数

|

|

30

|

+

>

|

|

31

|

+

|

|

32

|

+

need deal

|

|

33

|

+

- 队列优化完善,使用queue的机制wait()同步各模块执行?

|

|

34

|

+

- 日志功能完善,单机模式调度和保存数据写入文件,结构化输出各任务日志

|

|

35

|

+

- 去重过滤(布隆过滤器等)

|

|

36

|

+

- 防丢失(单机模式可以通过日志文件进行检查种子)

|

|

37

|

+

- 自定义数据库的功能

|

|

38

|

+

- excel、mysql、redis数据完善

|

|

39

|

+

|

|

40

|

+

|

|

41

|

+

|

|

@@ -0,0 +1,27 @@
+# cobweb
+
+> General-purpose crawler framework: 1. single-machine collection framework; 2. distributed collection framework
+>
+> Five parts
+>
+> 1. starter -- launcher
+>
+> 2. scheduler -- task scheduler
+>
+> 3. distributor -- dispatcher
+>
+> 4. storer -- storage backend
+>
+> 5. utils -- utility functions
+>
+
+To do
+- Improve the queue: use the queue's wait() mechanism to synchronize execution across modules?
+- Improve logging: in single-machine mode, write scheduling and stored data to files, and emit structured logs for each task
+- Deduplication filtering (Bloom filter, etc.)
+- Loss prevention (in single-machine mode, seeds can be checked against the log files)
+- Support for custom databases
+- Complete Excel, MySQL, and Redis data support
+
+
+
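The to-do list above mentions deduplication filtering with a Bloom filter. Nothing of the kind appears in the 0.0.1 sources shown on this page, so the following is only a rough sketch of what such a filter for seed identifiers might look like. The class name `SeedBloomFilter`, its parameters, and the double-hashing scheme are all assumptions made for the example.

```python
import hashlib


class SeedBloomFilter:
    """Hypothetical Bloom filter for seed ids; not part of cobweb-launcher."""

    def __init__(self, num_bits: int = 1 << 20, num_hashes: int = 5):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive all bit positions from one SHA-256 digest (double hashing).
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # odd step, never zero
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )
```

A Bloom filter can report false positives but never false negatives, so a crawler that skips seeds already "seen" by the filter may occasionally drop a seed it has not actually visited.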

File without changes

@@ -0,0 +1,187 @@
+# from typing import Iterable
+import json
+import time
+import hashlib
+from log import log
+from utils import struct_queue_name
+from collections import deque, namedtuple
+
+
+class Queue:
+
+    def __init__(self):
+        self._queue = deque()
+
+    @property
+    def length(self) -> int:
+        return len(self._queue)
+    #
+    # @property
+    # def queue_names(self):
+    #     return tuple(self.__dict__.keys())
+    #
+    # @property
+    # def used_memory(self):
+    #     return asizeof.asizeof(self)
+
+    # def create_queue(self, queue_name: str):
+    #     self.__setattr__(queue_name, deque())
+
+    # def push_seed(self, seed):
+    #     self.push("_seed_queue", seed)
+
+    # def pop_seed(self):
+    #     return self.pop("_seed_queue")
+
+    def push(self, data, left: bool = False, direct_insertion: bool = False):
+        try:
+            if not data:
+                return None
+            if direct_insertion or isinstance(data, Seed):
+                self._queue.appendleft(data) if left else self._queue.append(data)
+            elif any(isinstance(data, t) for t in (list, tuple)):
+                self._queue.extendleft(data) if left else self._queue.extend(data)
+        except AttributeError as e:
+            log.exception(e)
+
+    def pop(self, left: bool = True):
+        try:
+            return self._queue.popleft() if left else self._queue.pop()
+        except IndexError:
+            return None
+        except AttributeError as e:
+            log.exception(e)
+            return None
+
+
+class Seed:
+
+    def __init__(
+            self,
+            seed_info=None,
+            priority=300,
+            version=None,
+            retry=0,
+            **kwargs
+    ):
+        if seed_info:
+            if any(isinstance(seed_info, t) for t in (str, bytes)):
+                try:
+                    item = json.loads(seed_info)
+                    for k, v in item.items():
+                        self.__setattr__(k, v)
+                except json.JSONDecodeError:
+                    self.__setattr__("url", seed_info)
+            elif isinstance(seed_info, dict):
+                for k, v in seed_info.items():
+                    self.__setattr__(k, v)
+            else:
+                raise TypeError(
+                    f"seed type error, "
+                    f"must be str or dict! "
+                    f"seed_info: {seed_info}"
+                )
+        for k, v in kwargs.items():
+            self.__setattr__(k, v)
+        if not getattr(self, "_priority"):
+            self._priority = min(max(1, int(priority)), 999)
+        if not getattr(self, "_version"):
+            self._version = int(version or time.time())
+        if not getattr(self, "_retry"):
+            self._retry = retry
+        if not getattr(self, "sid"):
+            self.init_id()
+
+    def init_id(self):
+        item_string = self.format_seed
+        seed_id = hashlib.md5(item_string.encode()).hexdigest()
+        self.__setattr__("sid", seed_id)
+
+    def __setitem__(self, key, value):
+        setattr(self, key, value)
+
+    def __getitem__(self, item):
+        return getattr(self, item)
+
+    def __getattr__(self, name):
+        return None
+
+    def __str__(self):
+        return json.dumps(self.__dict__, ensure_ascii=False)
+
+    def __repr__(self):
+        chars = [f"{k}={v}" for k, v in self.__dict__.items()]
+        return f'{self.__class__.__name__}({", ".join(chars)})'
+
+    @property
+    def format_seed(self):
+        seed = self.__dict__.copy()
+        del seed["_priority"]
+        del seed["_version"]
+        del seed["_retry"]
+        return json.dumps(seed, ensure_ascii=False)
+
+
+class DBItem:
+
+    def __init__(self, **kwargs):
+        self.__setattr__("_index", 0, True)
+        for table in self.__class__.__table__:
+            if set(kwargs.keys()) == set(table._fields):
+                break
+            self.__setattr__("_index", self._index + 1, True)
+
+        if self._index >= len(self.__class__.__table__):
+            raise Exception()
+
+        table = self.__class__.__table__[self._index]
+        self.__setattr__("struct_data", table(**kwargs), True)
+        self.__setattr__("db_name", self.__class__.__name__, True)
+        self.__setattr__("table_name", self.struct_data.__class__.__name__, True)
+
+    @classmethod
+    def init_item(cls, table_name, fields):
+        queue_name = struct_queue_name(cls.__name__, table_name)
+        if getattr(cls, queue_name, None) is None:
+            setattr(cls, queue_name, Queue())
+
+        if getattr(cls, "__table__", None) is None:
+            cls.__table__ = []
+
+        table = namedtuple(table_name, fields)
+
+        if table in getattr(cls, "__table__"):
+            raise Exception()
+        getattr(cls, "__table__").append(table)
+
+    def queue(self):
+        queue_name = struct_queue_name(self.db_name, self.table_name)
+        return getattr(self.__class__, queue_name)
+
+    def __setitem__(self, key, value):
+        self.__setattr__(key, value)
+
+    def __getitem__(self, item):
+        return self.struct_data[item]
+
+    def __getattr__(self, name):
+        return None
+
+    def __setattr__(self, key, value, init=None):
+        if init:
+            super().__setattr__(key, value)
+        elif not getattr(self, "struct_data"):
+            raise Exception("no struct_data")
+        else:
+            self.__setattr__(
+                "struct_data",
+                self.struct_data._replace(**{key: value}),
+                init=True
+            )
+
+    def __str__(self):
+        return json.dumps(self.struct_data._asdict(), ensure_ascii=False)
+
+    def __repr__(self):
+        return f'{self.__class__.__name__}:{self.struct_data}'
+
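In the `Seed` class above, `init_id` hashes the JSON form of the seed's fields after `format_seed` drops `_priority`, `_version`, and `_retry`, so two seeds that differ only in scheduling metadata end up with the same `sid`. The standalone function below is a sketch of that derivation. `seed_fingerprint` is a hypothetical name, and it takes a plain dict rather than a `Seed` instance.

```python
import hashlib
import json

# Scheduling metadata that the Seed class excludes before hashing.
_METADATA_KEYS = ("_priority", "_version", "_retry")


def seed_fingerprint(fields: dict) -> str:
    """Hypothetical helper: MD5 hex digest of the JSON-encoded payload fields."""
    payload = {k: v for k, v in fields.items() if k not in _METADATA_KEYS}
    text = json.dumps(payload, ensure_ascii=False)
    return hashlib.md5(text.encode()).hexdigest()


a = seed_fingerprint({"url": "https://example.com", "_priority": 1})
b = seed_fingerprint({"url": "https://example.com", "_priority": 999, "_retry": 2})
print(a == b)  # True: only the metadata differs
```

Because `json.dumps` is called without `sort_keys=True`, the digest depends on key insertion order, so the same fields supplied in a different order produce a different id.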

@@ -0,0 +1,164 @@
+import json
+from collections import namedtuple
+from utils import struct_table_name
+
+StorerInfo = namedtuple(
+    "StorerInfo",
+    "DB, table, fields, length, config"
+)
+SchedulerInfo = namedtuple(
+    "SchedulerInfo",
+    "DB, table, sql, length, size, config",
+)
+RedisInfo = namedtuple(
+    "RedisInfo",
+    "host, port, username, password, db",
+    defaults=("localhost", 6379, None, None, 0)
+)
+
+# redis_info = dict(
+#     host="localhost",
+#     port=6379,
+#     username=None,
+#     password=None,
+#     db=0
+# )
+
+
+class SchedulerDB:
+
+    @staticmethod
+    def default():
+        from db.scheduler.default import Default
+        return SchedulerInfo(DB=Default, table="", sql="", length=100, size=500000, config=None)
+
+    @staticmethod
+    def textfile(table, sql=None, length=100, size=500000):
+        from db.scheduler.textfile import Textfile
+        return SchedulerInfo(DB=Textfile, table=table, sql=sql, length=length, size=size, config=None)
+
+    @staticmethod
+    def diy(DB, table, sql=None, length=100, size=500000, config=None):
+        from base.interface import SchedulerInterface
+        if not isinstance(DB, SchedulerInterface):
+            raise Exception("DB must inherit from SchedulerInterface")
+        return SchedulerInfo(DB=DB, table=table, sql=sql, length=length, size=size, config=config)
+
+    # @staticmethod
+    # def info(scheduler_info):
+    #     if not scheduler_info:
+    #         return SchedulerDB.default()
+    #
+    #     if isinstance(scheduler_info, SchedulerInfo):
+    #         return scheduler_info
+    #
+    #     if isinstance(scheduler_info, str):
+    #         scheduler = json.loads(scheduler_info)
+    #         if isinstance(scheduler, dict):
+    #             db_name = scheduler["DB"]
+    #             if db_name in dir(SchedulerDB):
+    #                 del scheduler["DB"]
+    #             else:
+    #                 db_name = "diy"
+    #             func = getattr(SchedulerDB, db_name)
+    #             return func(**scheduler)
+
+
+class StorerDB:
+
+    @staticmethod
+    def console(table, fields, length=200):
+        from db.storer.console import Console
+        table = struct_table_name(table)
+        return StorerInfo(DB=Console, table=table, fields=fields, length=length, config=None)
+
+    @staticmethod
+    def textfile(table, fields, length=200):
+        from db.storer.textfile import Textfile
+        table = struct_table_name(table)
+        return StorerInfo(DB=Textfile, table=table, fields=fields, length=length, config=None)
+
+    @staticmethod
+    def loghub(table, fields, length=200, config=None):
+        from db.storer.loghub import Loghub
+        table = struct_table_name(table)
+        return StorerInfo(DB=Loghub, table=table, fields=fields, length=length, config=config)
+
+    @staticmethod
+    def diy(DB, table, fields, length=200, config=None):
+        from base.interface import StorerInterface
+        if not isinstance(DB, StorerInterface):
+            raise Exception("DB must inherit from StorerInterface")
+        table = struct_table_name(table)
+        return StorerInfo(DB=DB, table=table, fields=fields, length=length, config=config)
+
+    # @staticmethod
+    # def info(storer_info):
+    #     if not storer_info:
+    #         return None
+    #
+    #     if isinstance(storer_info, str):
+    #         storer_info = json.loads(storer_info)
+    #
+    #     if any(isinstance(storer_info, t) for t in (dict, StorerInfo)):
+    #         storer_info = [storer_info]
+    #
+    #     if not isinstance(storer_info, list):
+    #         raise Exception("StorerDB.info storer_info")
+    #
+    #     storer_info_list = []
+    #     for storer in storer_info:
+    #         if isinstance(storer, StorerInfo):
+    #             storer_info_list.append(storer)
+    #         else:
+    #             db_name = storer["DB"]
+    #             if db_name in dir(StorerDB):
+    #                 del storer["DB"]
+    #             else:
+    #                 db_name = "diy"
+    #             func = getattr(StorerDB, db_name)
+    #             storer_info_list.append(func(**storer))
+    #     return storer_info_list
+
+
+
+def deal(config, tag):
+    if isinstance(config, dict):
+        if tag == 0:
+            return RedisInfo(**config)
+        elif tag == 1:
+            db_name = config["DB"]
+            if db_name in dir(SchedulerDB):
+                del config["DB"]
+            else:
+                db_name = "diy"
+            func = getattr(SchedulerDB, db_name)
+            return func(**config)
+        elif tag == 2:
+            db_name = config["DB"]
+            if db_name in dir(StorerDB):
+                del config["DB"]
+            else:
+                db_name = "diy"
+            func = getattr(StorerDB, db_name)
+            return func(**config)
+        raise ValueError("tag must be in [0, 1, 2]")
+    elif any(isinstance(config, t) for t in (StorerInfo, SchedulerInfo, RedisInfo)):
+        return config
+    raise TypeError("config must be in [StorerInfo, SchedulerInfo, RedisInfo]")
+
+
+def info(configs, tag=0):
+    if configs is None:
+        return SchedulerDB.default() if tag == 1 else None
+
+    if isinstance(configs, str):
+        configs = json.loads(configs)
+
+    if tag == 0:
+        return deal(configs, tag)
+
+    if not isinstance(configs, list):
+        configs = [configs]
+
+    return [deal(config, tag) for config in configs]
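`info` and `deal` above accept a config as `None`, a JSON string, a dict, or an already-built namedtuple. The sketch below reduces that normalization to the Redis case (`tag == 0`), using a namedtuple with defaults as `RedisInfo` does. `to_redis_info` is a hypothetical name, and the scheduler and storer branches are left out.

```python
import json
from collections import namedtuple

RedisInfo = namedtuple(
    "RedisInfo",
    "host, port, username, password, db",
    defaults=("localhost", 6379, None, None, 0),
)


def to_redis_info(config):
    """Hypothetical helper: normalize None / JSON string / dict / RedisInfo."""
    if config is None:
        return None
    if isinstance(config, str):
        config = json.loads(config)
    if isinstance(config, dict):
        # Fields missing from the dict fall back to the namedtuple defaults.
        return RedisInfo(**config)
    if isinstance(config, RedisInfo):
        return config
    raise TypeError("config must be None, a JSON string, a dict, or RedisInfo")


print(to_redis_info('{"host": "10.0.0.5", "db": 2}'))
```

The `defaults` argument of `namedtuple` needs Python 3.7 or later, which matches the `Requires-Python: >=3.7` line in the package metadata.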

@@ -0,0 +1,95 @@
+import time
+from functools import wraps
+
+# from config import DBType
+from log import log
+
+
+# def find_func_name(func_name, name_list):
+#     for name in name_list:
+#         if func_name.find(name) == 0:
+#             return True
+#     return False
+
+
+# def starter_decorator(execute):
+#     @wraps(execute)
+#     def wrapper(starter, *args, **kwargs):
+#         spider_dynamic_funcs = []
+#         scheduler_dynamic_funcs = []
+#         starter_functions = inspect.getmembers(starter, lambda a: inspect.isfunction(a))
+#         for starter_function in starter_functions:
+#             if find_func_name(starter_function[0], starter.scheduler_funcs):
+#                 scheduler_dynamic_funcs.append(starter_function)
+#             elif find_func_name(starter_function[0], starter.spider_funcs):
+#                 spider_dynamic_funcs.append(starter_function)
+#         return execute(starter, scheduler_dynamic_funcs, spider_dynamic_funcs, *args, **kwargs)
+#
+#     return wrapper
+
+
+# def scheduler_decorator(execute):
+#     @wraps(execute)
+#     def wrapper(scheduler, distribute_task):
+#         if not issubclass(scheduler, SchedulerInterface):
+#             scheduler.stop = True
+#         elif getattr(scheduler, "scheduler", None):
+#             execute(scheduler, distribute_task)
+#         else:
+#             log.error(f"scheduler type: {scheduler.db_type} not have add function!")
+#             scheduler.stop = True
+#     return wrapper
+
+
+def storer_decorator(execute):
+    @wraps(execute)
+    def wrapper(storer, stop_event, last_event, table_name, callback):
+        if getattr(storer, "save", None):
+            execute(storer, stop_event, last_event, table_name, callback)
+        else:
+            log.error(f"storer base_type: {storer.data_type} not have add function!")
+            storer.stop = True
+    return wrapper
+
+
+def distribute_scheduler_decorators(func):
+    @wraps(func)
+    def wrapper(distributor, callback):
+        try:
+            func(distributor, callback)
+        except TypeError:
+            pass
+        distributor.event.set()
+    return wrapper
+
+
+def distribute_spider_decorators(func):
+    @wraps(func)
+    def wrapper(distributor, stop_event, db, callback):
+        while not stop_event.is_set():
+            try:
+                seed = distributor.queue_client.pop("_seed_queue")
+                if not seed:
+                    time.sleep(3)
+                    continue
+                distributor.spider_in_progress.append(1)
+                func(distributor, db, seed, callback)
+            except Exception as e:
+                print(e)
+            finally:
+                distributor.spider_in_progress.pop()
+
+    return wrapper
+
+
+def distribute_storer_decorators(func):
+    @wraps(func)
+    def wrapper(distributor, callback, data_type, table_name, last):
+        data_list = []
+        try:
+            func(distributor, callback, data_list, data_type, table_name, last)
+        except Exception as e:
+            log.info("storage exception! " + str(e))
+            # distributor._task_queue.extendleft(data_list)
+
+    return wrapper
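`distribute_spider_decorators` above wraps a seed handler in a polling loop that runs until a stop event is set. In Python, a `continue` inside a `try` block still runs the `finally` clause, so `spider_in_progress.pop()` is also reached on iterations where no seed was fetched and nothing was appended. The sketch below is a simplified variant of the same pattern that records a seed as in progress only while it is being handled. It is an illustration, not the package's code: `spider_loop`, its parameters, and the use of a plain `deque` as the seed queue are assumptions.

```python
import threading
from collections import deque
from functools import wraps


def spider_loop(func):
    """Hypothetical decorator: feed seeds from a deque to `func` until stopped."""

    @wraps(func)
    def wrapper(seeds: deque, stop_event: threading.Event, in_progress: list,
                idle_wait: float = 0.01):
        while not stop_event.is_set():
            try:
                seed = seeds.popleft()
            except IndexError:
                # Queue is empty: wait briefly, waking early if stop is requested.
                stop_event.wait(idle_wait)
                continue
            in_progress.append(seed)  # used only as an in-flight counter
            try:
                func(seed)
            except Exception as exc:
                print(f"seed {seed!r} failed: {exc}")
            finally:
                in_progress.pop()  # runs only after a matching append

    return wrapper
```

Waiting on the event instead of calling `time.sleep` lets a worker exit promptly once `stop_event.set()` is called from another thread.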

@@ -0,0 +1,60 @@
+# -*- coding: utf-8 -*-
+
+class DynamicHashTable:
+    def __init__(self):
+        self.capacity = 1000  # initial capacity
+        self.size = 0  # number of stored elements
+        self.table = [None] * self.capacity
+
+    def hash_function(self, key):
+        return hash(key) % self.capacity
+
+    def probe(self, index):
+        # linear probing
+        return (index + 1) % self.capacity
+
+    def insert(self, key, value):
+        index = self.hash_function(key)
+        while self.table[index] is not None:
+            if self.table[index][0] == key:
+                self.table[index][1] = value
+                return
+            index = self.probe(index)
+        self.table[index] = [key, value]
+        self.size += 1
+
+        # grow dynamically
+        if self.size / self.capacity >= 0.7:
+            self.resize()
+
+    def get(self, key):
+        index = self.hash_function(key)
+        while self.table[index] is not None:
+            if self.table[index][0] == key:
+                return self.table[index][1]
+            index = self.probe(index)
+        raise KeyError("Key not found")
+
+    def remove(self, key):
+        index = self.hash_function(key)
+        while self.table[index] is not None:
+            if self.table[index][0] == key:
+                self.table[index] = None
+                self.size -= 1
+                return
+            index = self.probe(index)
+        raise KeyError("Key not found")
+
+    def resize(self):
+        # double the capacity
+        self.capacity *= 2
+        new_table = [None] * self.capacity
+        for item in self.table:
+            if item is not None:
+                key, value = item
+                new_index = self.hash_function(key)
+                while new_table[new_index] is not None:
+                    new_index = self.probe(new_index)
+                new_table[new_index] = [key, value]
+        self.table = new_table
+
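With linear probing, clearing a slot to `None` on removal can cut a probe chain: a later lookup for a key stored past that slot may stop at the gap and raise `KeyError`. A common remedy is to mark removed slots with a tombstone instead. The sketch below is a variant written for illustration, not the package's implementation. `ProbingTable` and `_DELETED` are hypothetical names, and the small default capacity is chosen only to make collisions easy to reproduce.

```python
_DELETED = object()  # tombstone marking a removed entry


class ProbingTable:
    """Hypothetical open-addressing table with tombstone deletion."""

    def __init__(self, capacity: int = 8):
        self.capacity = capacity
        self.size = 0  # live entries
        self.used = 0  # live entries plus tombstones
        self.slots = [None] * capacity

    def _probe(self, key):
        index = hash(key) % self.capacity
        for _ in range(self.capacity):
            yield index
            index = (index + 1) % self.capacity

    def insert(self, key, value):
        first_free = None
        for index in self._probe(key):
            slot = self.slots[index]
            if slot is None:
                if first_free is None:
                    first_free = index
                break  # end of the probe chain: the key is not present
            if slot is _DELETED:
                if first_free is None:
                    first_free = index  # reusable, but keep scanning for the key
            elif slot[0] == key:
                slot[1] = value
                return
        if self.slots[first_free] is None:
            self.used += 1
        self.slots[first_free] = [key, value]
        self.size += 1
        if self.used / self.capacity >= 0.7:
            self._resize()

    def get(self, key):
        for index in self._probe(key):
            slot = self.slots[index]
            if slot is None:
                break
            if slot is not _DELETED and slot[0] == key:
                return slot[1]
        raise KeyError(key)

    def remove(self, key):
        for index in self._probe(key):
            slot = self.slots[index]
            if slot is None:
                break
            if slot is not _DELETED and slot[0] == key:
                self.slots[index] = _DELETED
                self.size -= 1
                return
        raise KeyError(key)

    def _resize(self):
        # Rebuild at double capacity, dropping tombstones along the way.
        live = [s for s in self.slots if s is not None and s is not _DELETED]
        self.capacity *= 2
        self.slots = [None] * self.capacity
        self.size = self.used = 0
        for key, value in live:
            self.insert(key, value)
```

Counting tombstones in the load factor keeps at least one empty slot in the table, which is what guarantees that every probe loop terminates.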

@@ -0,0 +1,44 @@
+import json
+from abc import ABC, abstractmethod
+
+
+def parse_config(config):
+    if not config:
+        return None
+    if isinstance(config, str):
+        return json.loads(config)
+    if isinstance(config, dict):
+        return config
+    raise TypeError("config type is not in [string, dict]!")
+
+
+class SchedulerInterface(ABC):
+
+    def __init__(self, table, sql, length, size, queue, config=None):
+        self.sql = sql
+        self.table = table
+        self.length = length
+        self.size = size
+        self.queue = queue
+        self.config = parse_config(config)
+        self.stop = False
+
+    @abstractmethod
+    def schedule(self, *args, **kwargs):
+        pass
+
+
+class StorerInterface(ABC):
+
+    def __init__(self, table, fields, length, queue, config=None):
+        self.table = table
+        self.fields = fields
+        self.length = length
+        self.queue = queue
+        self.config = parse_config(config)
+        # self.redis_db = redis_db
+
+    @abstractmethod
+    def store(self, *args, **kwargs):
+        pass
+
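`StorerInterface` above is an abstract base class: a backend subclasses it and must implement `store`. The sketch below shows the shape of such a subclass against a simplified stand-in for the interface, so that it runs on its own. `StorerBase`, `MemoryStorer`, the omission of the `queue` argument, and the treatment of `fields` as a list of names are all assumptions made for the example.

```python
import json
from abc import ABC, abstractmethod


class StorerBase(ABC):
    """Simplified stand-in for StorerInterface (queue argument omitted)."""

    def __init__(self, table, fields, length, config=None):
        self.table = table
        self.fields = fields
        self.length = length
        # Accept a JSON string or a dict, as parse_config does.
        self.config = json.loads(config) if isinstance(config, str) else config

    @abstractmethod
    def store(self, items):
        ...


class MemoryStorer(StorerBase):
    """Hypothetical backend that keeps rows in a list."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.rows = []

    def store(self, items):
        # Keep only the declared fields, at most `length` rows per call.
        for item in items[: self.length]:
            self.rows.append({f: item.get(f) for f in self.fields})
        return len(self.rows)
```

Because `store` is declared with `@abstractmethod`, instantiating the base class directly, or a subclass that does not override `store`, raises `TypeError`.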