recommendify 0.0.1 → 0.1.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- data/README.md +28 -23

- data/Rakefile +9 -0

- data/doc/example.rb +2 -1

- data/lib/recommendify/jaccard_input_matrix.rb +28 -9

- data/recommendify.gemspec +24 -0

- data/spec/similarity_matrix_spec.rb +2 -0

- data/src/cc_item.h +8 -0

- data/src/cosine.c +3 -0

- data/src/iikey.c +18 -0

- data/src/jaccard.c +19 -0

- data/src/output.c +22 -0

- data/src/recommendify.c +184 -0

- data/src/sort.c +23 -0

- data/src/version.h +17 -0

- metadata +14 -24

data/README.md

CHANGED

@@ -1,10 +1,11 @@
 recommendify
 ============
 
-Incremental and distributed item-based "Collaborative Filtering" for binary ratings with ruby and redis. In a nutshell: You feed in `user -> item` interactions and it spits out similarity vectors between items ("related items").
+Incremental and distributed item-based "Collaborative Filtering" for binary ratings with ruby and redis. In a nutshell: You feed in `user -> item` interactions and it spits out similarity vectors between items ("related items"). __scroll down for a demo...__
 
 [  ](http://travis-ci.org/paulasmuth/recommendify)
 
+
 ### use cases
 
 + "Users that bought this product also bought...".

@@ -12,23 +13,20 @@ Incremental and distributed item-based "Collaborative Filtering" for binary rati
 + "Users that follow this person also follow...".
 
 
+usage
+-----
 
-
-
-Recommendify keeps an incrementally updated `item x item` matrix, the "co-concurrency matrix". This matrix stores the number of times that a combination of two items has appeared in an interaction/preferrence set. The co-concurrence counts are processed with a similarity measure to retrieve another `item x item` similarity matrix, which is used to find the N most similar items for each item. This approach was described by Miranda, Alipio et al. [1]
-
-1. Group the input user->item pairs by user-id and store them into interaction sets
-2. For each item<->item combination in the interaction set increment the respective element in the co-concurrence matrix
-3. For each item<->item combination in the co-concurrence matrix calculate the item<->item similarity
-3. For each item store the N most similar items in the respective output set.
-
-
-Fnord is not a draft!
-
+Your data should look something like this:
 
+```
+# which items are frequently bought togehter?
+[order23] product5 produt42 product17
+[order42] product8 produt16 product32
 
-
-
+# which users are frequently watched/followed together?
+[user4] user9 user11 user12
+[user9] user6 user8 user11
+```
 
 You can add new interaction-sets to the processor incrementally, but the similarity matrix has to be manually re-processed after new interactions were added to any of the applied processors. However, the processing happens on-line and you can keep track of the changed items so you only have to re-calculate the changed rows of the matrix.
 
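
The README shows only the shape of the input. Below is a minimal Ruby sketch that reads that shape into interaction sets. It assumes one `[set_id] item item ...` line per set, with `#` comment lines. It also collects the items that appear in the new sets, which are the items whose co-concurrence counts change when those sets are added. `parse_interaction_sets` and `touched_items` are hypothetical helpers, not part of recommendify.

```ruby
require "set"

# Hypothetical parser for the "[set_id] item item ..." layout shown in the
# README; blank lines and "#" comment lines are skipped.
def parse_interaction_sets(text)
  text.each_line.each_with_object({}) do |line, sets|
    line = line.strip
    next if line.empty? || line.start_with?("#")
    match = line.match(/\A\[([^\]]+)\]\s*(.*)\z/)
    raise ArgumentError, "unparseable line: #{line}" unless match
    sets[match[1]] = match[2].split
  end
end

# Hypothetical helper: every item that appears in at least one of the new
# sets, i.e. the items whose co-concurrence counts change when they are added.
def touched_items(sets)
  sets.values.flatten.to_set
end

data = <<~DATA
  # which users are frequently watched/followed together?
  [user4] user9 user11 user12
  [user9] user6 user8 user11
DATA

sets = parse_interaction_sets(data)
p sets
p touched_items(sets).sort
```

Each parsed set could then be passed to `add_set`, as `doc/example.rb` does with `recommender.visits.add_set(user_id, items)`.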

|

|

@@ -91,24 +89,32 @@ recommender.for("item23")

|

|

|

91

89

|

recommender.remove_item!("item23")

|

|

92

90

|

```

|

|

93

91

|

|

|

92

|

+

### how it works

|

|

94

93

|

|

|

94

|

+

Recommendify keeps an incrementally updated `item x item` matrix, the "co-concurrency matrix". This matrix stores the number of times that a combination of two items has appeared in an interaction/preferrence set. The co-concurrence counts are processed with a similarity measure to retrieve another `item x item` similarity matrix, which is used to find the N most similar items for each item. This approach was described by Miranda, Alipio et al. [1]

|

|

95

95

|

|

|

96

|

-

|

|

97

|

-

|

|

96

|

+

1. Group the input user->item pairs by user-id and store them into interaction sets

|

|

97

|

+

2. For each item<->item combination in the interaction set increment the respective element in the co-concurrence matrix

|

|

98

|

+

3. For each item<->item combination in the co-concurrence matrix calculate the item<->item similarity

|

|

99

|

+

3. For each item store the N most similar items in the respective output set.

|

|

98

100

|

|

|

99

|

-

[  ](http://falbala.23loc.com/~paul/recommendify_out_1.html)

|

|

100

101

|

|

|

101

|

-

|

|

102

|

+

### does it scale?

|

|

102

103

|

|

|

103

|

-

|

|

104

|

+

The maximum number of entries in the co-concurrence and similarity matrix is k(n) = (n^2)-(n/2), it grows O(n^2). However, in a real scenario it is very unlikely that all item<->item combinations appear in a interaction set and we use a sparse matrix which will only use memory for elemtens with a value > 0. The size of the similarity grows O(n).

|

|

104

105

|

|

|

105

106

|

|

|

106

107

|

|

|

108

|

+

example

|

|

109

|

+

-------

|

|

107

110

|

|

|

108

|

-

|

|

111

|

+

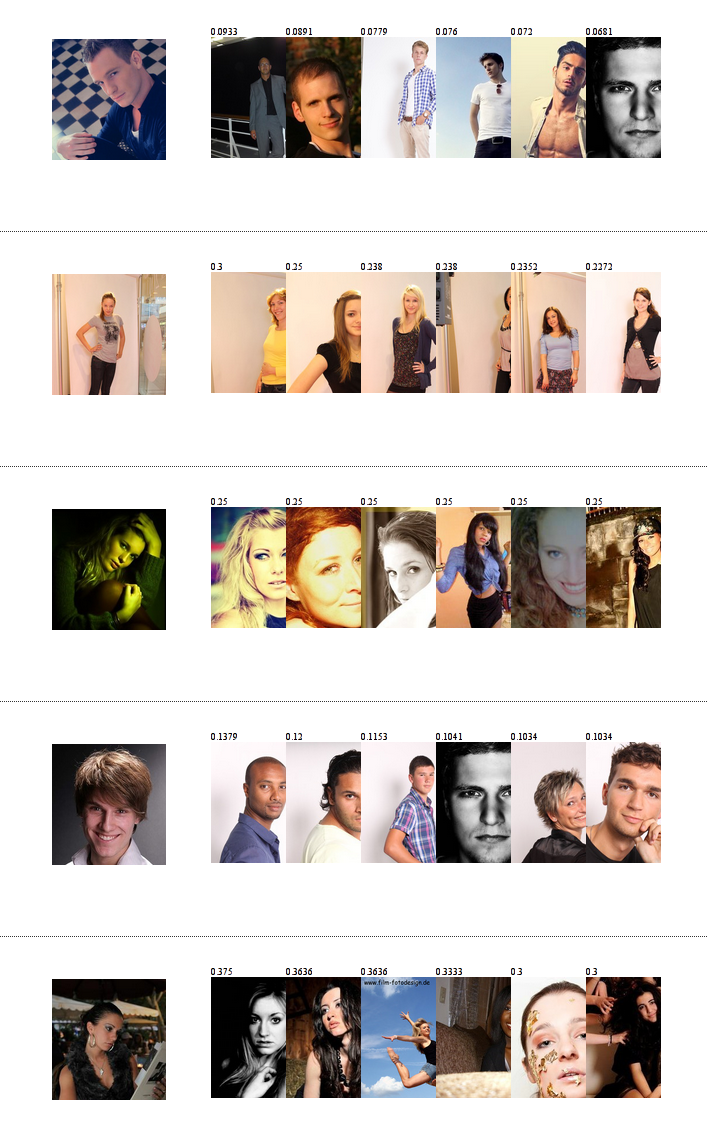

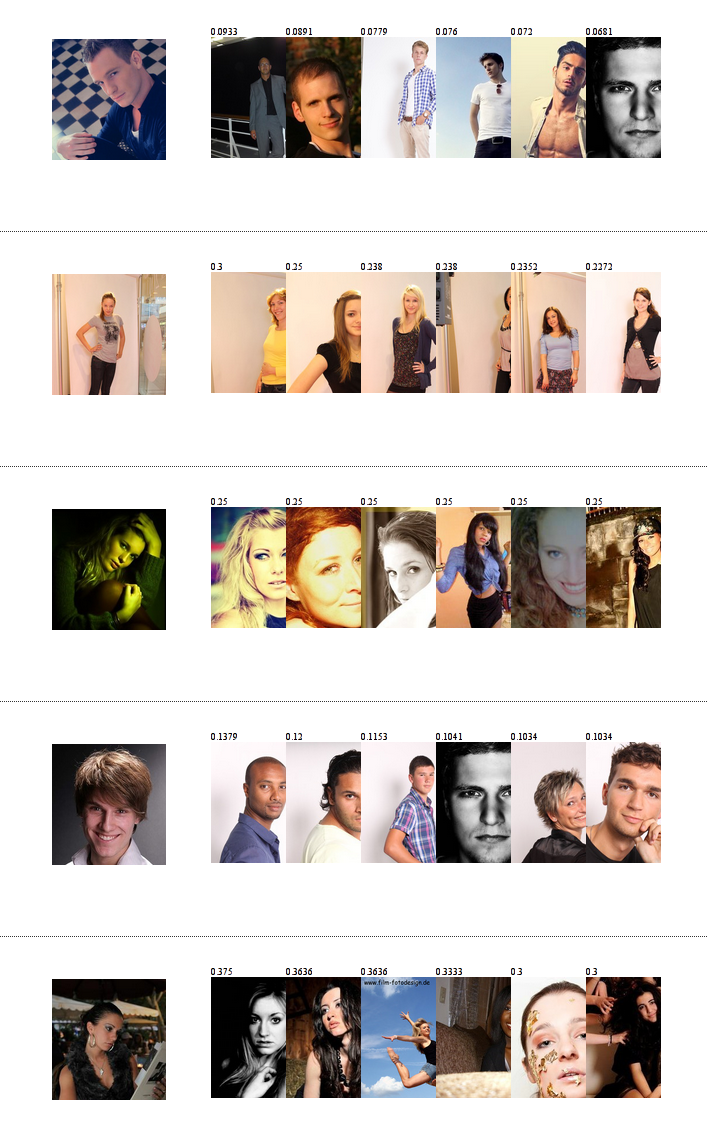

These recommendations were calculated from 2,3mb "profile visit"-data (taken from www.talentsuche.de) - keep in mind that the recommender uses only visitor->visited data, it __doesn't know the gender__ of a user.

|

|

109

112

|

|

|

110

|

-

|

|

113

|

+

[  ](http://falbala.23loc.com/~paul/recommendify_out_1.html)

|

|

114

|

+

|

|

115

|

+

full snippet: http://falbala.23loc.com/~paul/recommendify_out_1.html

|

|

111

116

|

|

|

117

|

+

Initially processing the 120.047 `visitor_id->profile_id` pairs currently takes around half an hour on a single core and creates a 126.64mb hashtable in redis. The high memory usage of >100mb for only 5000 items is due to the very long user rows. If you limit the user rows to 100 items (mahout's default) it shrinks to 31mb for the 5k items from example_data.csv. In another real data set with very short user rows (purchase/payment data) it used only 3.4mb for 90k items with very good results. You can try this for yourself; the complete data and code is in `doc/example.rb` and `doc/example_data.csv`.

|

|

112

118

|

|

|

113

119

|

|

|

114

120

|
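
The four steps under "how it works" can be sketched in memory in Ruby. The sketch rests on three assumptions: plain Hashes stand in for the redis-backed matrices, Jaccard is the similarity measure (as in `JaccardInputMatrix`), and `related_items` and `max_pairs` are hypothetical names. The `cc` Hash stores only pairs that actually co-occur, which is the same idea as the sparse matrix in the scaling note. The dense upper bound is one entry per unordered pair, n(n-1)/2 for n items, which still grows O(n^2).

```ruby
require "set"

# In-memory sketch of the README's four steps; recommendify keeps these
# structures in redis. Names are hypothetical, not the gem's API.
def related_items(pairs, top_n: 2)
  # 1. group user->item pairs into one interaction set per user
  sets = Hash.new { |h, user| h[user] = Set.new }
  pairs.each { |user, item| sets[user] << item }

  # 2. count sets per item and per unordered item pair (only non-zero
  #    pairs are stored, like a sparse matrix)
  item_count = Hash.new(0)
  cc = Hash.new(0)
  sets.each_value do |items|
    items.each { |item| item_count[item] += 1 }
    items.to_a.sort.combination(2) { |a, b| cc[[a, b]] += 1 }
  end

  # 3. Jaccard similarity from the counts: cc / (count_a + count_b - cc)
  sims = Hash.new { |h, item| h[item] = [] }
  cc.each do |(a, b), n|
    s = n.to_f / (item_count[a] + item_count[b] - n)
    sims[a] << [b, s]
    sims[b] << [a, s]
  end

  # 4. keep the N most similar items for each item
  sims.transform_values { |list| list.max_by(top_n) { |_, s| s } }
end

# Dense upper bound on co-concurrence entries: one per unordered pair.
def max_pairs(n)
  n * (n - 1) / 2
end

pairs = [%w[u1 a], %w[u1 b], %w[u2 a], %w[u2 b], %w[u2 c], %w[u3 c]]
p related_items(pairs, top_n: 1)["a"] # prints [["b", 1.0]]
p max_pairs(5_000)                    # prints 12497500
```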

@@ -151,4 +157,3 @@ THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLI
 + optimize sparsematrix memory usage (somehow)
 + make max_row length configurable
 + option: only add items where co-concurreny/appearnce-count > n
-

data/Rakefile

CHANGED

@@ -10,3 +10,12 @@ task :default => "spec"
 
 desc "Generate documentation"
 task YARD::Rake::YardocTask.new
+
+
+desc "Compile the native client"
+task :build_native do
+  out_dir = ::File.expand_path("../bin", __FILE__)
+  src_dir = ::File.expand_path("../src", __FILE__)
+  %x{mkdir -p #{out_dir}}
+  %x{gcc -Wall #{src_dir}/recommendify.c -lhiredis -o #{out_dir}/recommendify}
+end

data/doc/example.rb

CHANGED

@@ -26,7 +26,8 @@ end
 
 # add the test data to the recommender
 buckets.each do |user_id, items|
-  puts "#{user_id} -> #{items.join(",")}"
+  puts "#{user_id} -> #{items.join(",")}"
+  items = items[0..99] # do not add more than 100 items per user
   recommender.visits.add_set(user_id, items)
 end
 
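
The new `items = items[0..99]` line caps each user row at 100 items. An interaction set of m items increments one co-concurrence cell per unordered pair, m(m-1)/2 cells in total. Long rows therefore dominate processing time and memory, which matches the README's note that limiting rows to 100 items shrinks the example matrix. A small sketch with hypothetical helpers:

```ruby
# Co-concurrence cells one interaction set of m items increments:
# one per unordered pair, m * (m - 1) / 2 (hypothetical helper).
def pair_updates(set_size)
  set_size * (set_size - 1) / 2
end

# Same cap as `items = items[0..99]` in doc/example.rb (hypothetical helper).
def cap_items(items, max_items = 100)
  items.first(max_items)
end

long_row = (1..1_000).map { |i| "profile#{i}" }
puts pair_updates(long_row.size)            # prints 499500
puts pair_updates(cap_items(long_row).size) # prints 4950
```

`Array#first(100)` and `items[0..99]` return the same elements, including for rows shorter than 100 items.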

data/lib/recommendify/jaccard_input_matrix.rb

CHANGED

@@ -2,27 +2,28 @@ class Recommendify::JaccardInputMatrix < Recommendify::InputMatrix
 
   include Recommendify::CCMatrix
 
-  def initialize(opts={})
-
+  def initialize(opts={})
+    check_native if opts[:native]
+    super(opts)
   end
 
   def similarity(item1, item2)
     calculate_jaccard_cached(item1, item2)
   end
 
-  # optimize: get all item-counts and the cc-row with 2 redis hmgets.
-  # optimize: don't return more than sm.max_neighbors items (truncate set while collecting)
   def similarities_for(item1)
-
-
-
+    return run_native(item1) if @opts[:native]
+    calculate_similarities(item1)
+  end
+
+  private
+
+  def calculate_similarities(item1)
     (all_items - [item1]).map do |item2|
       [item2, similarity(item1, item2)]
     end
   end
 
-  private
-
   def calculate_jaccard_cached(item1, item2)
     val = ccmatrix[item1, item2]
     val.to_f / (item_count(item1)+item_count(item2)-val).to_f
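
`calculate_jaccard_cached` computes Jaccard from cached counts instead of sets. With `val` the number of sets that contain both items, `val / (count1 + count2 - val)` is the size of the intersection divided by the size of the union, by inclusion-exclusion. The set-based expression `(set1&set2).length.to_f / (set1 + set2).uniq.length.to_f` in the next hunk computes the same ratio. The Ruby sketch below checks both forms on a hypothetical pair of items. The zero guard is an addition of this sketch, comparable to the `n>0` branch in `jaccard.c`.

```ruby
require "set"

# Jaccard from cached counts, as in calculate_jaccard_cached:
# cc / (count1 + count2 - cc), where cc is how many sets contain both items.
def jaccard_from_counts(cc, count1, count2)
  denom = count1 + count2 - cc
  denom.zero? ? 0.0 : cc.to_f / denom
end

# Jaccard from explicit sets: |A & B| / |A | B|.
def jaccard_from_sets(a, b)
  union = (a | b).size
  union.zero? ? 0.0 : (a & b).size.to_f / union
end

# a and b are the users that interacted with each item (hypothetical data).
a = Set["u1", "u2", "u3"]
b = Set["u2", "u3", "u4", "u5"]
cc = (a & b).size
puts jaccard_from_counts(cc, a.size, b.size) # prints 0.4
puts jaccard_from_sets(a, b)                 # prints 0.4
```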

@@ -32,4 +33,22 @@ private
     (set1&set2).length.to_f / (set1 + set2).uniq.length.to_f
   end
 
+  def run_native(item_id)
+    res = %x{#{native_path} --jaccard "#{redis_key}" "#{item_id}"}
+    res.split("\n").map do |line|
+      sim = line.match(/OUT: \(([^\)]*)\) \(([^\)]*)\)/)
+      raise "error: #{res}" unless sim
+      [sim[1], sim[2].to_f]
+    end
+  end
+
+  def check_native
+    return true if ::File.exists?(native_path)
+    raise "recommendify_native not found - you need to run rake build_native first"
+  end
+
+  def native_path
+    ::File.expand_path('../../../bin/recommendify', __FILE__)
+  end
+
 end
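
`run_native` shells out with `%x{}` and raises on any line that does not match the `OUT: (%s) (%.4f)` format printed by `print_item` in `src/output.c`. The hedged Ruby sketch below makes the same call with `Open3.capture2` and separate arguments, which bypasses the shell and exposes the exit status. `run_native_safely` and `parse_native_output` are hypothetical names. The sketch skips non-matching lines, such as the client's `item count is zero` message (printed with exit status 0); that is a choice of this sketch, not the gem's behavior.

```ruby
require "open3"

# Matches lines printed by print_item in src/output.c: "OUT: (id) (0.1234)".
OUT_LINE = /\AOUT: \(([^)]*)\) \(([^)]*)\)\z/

# Hypothetical parser: returns [[item_id, similarity], ...] and skips lines
# that are not OUT lines instead of raising like run_native does.
def parse_native_output(text)
  text.each_line.map { |line|
    m = OUT_LINE.match(line.chomp)
    m && [m[1], m[2].to_f]
  }.compact
end

# Hypothetical wrapper: argv elements are passed without a shell, so ids with
# quotes or spaces reach the binary unchanged, and a non-zero exit raises.
def run_native_safely(binary, redis_key, item_id)
  out, status = Open3.capture2(binary, "--jaccard", redis_key, item_id)
  raise "native client failed (#{status.exitstatus}): #{out}" unless status.success?
  parse_native_output(out)
end

sample = "OUT: (item42) (0.5000)\nOUT: (item7) (0.1250)\n"
p parse_native_output(sample) # prints [["item42", 0.5], ["item7", 0.125]]
```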

data/recommendify.gemspec

ADDED

@@ -0,0 +1,24 @@
+# -*- encoding: utf-8 -*-
+$:.push File.expand_path("../lib", __FILE__)
+
+Gem::Specification.new do |s|
+  s.name = "recommendify"
+  s.version = "0.1.0"
+  s.date = Date.today.to_s
+  s.platform = Gem::Platform::RUBY
+  s.authors = ["Paul Asmuth"]
+  s.email = ["paul@paulasmuth.com"]
+  s.homepage = "http://github.com/paulasmuth/recommendify"
+  s.summary = %q{Distributed item-based "Collaborative Filtering" with ruby and redis}
+  s.description = %q{Distributed item-based "Collaborative Filtering" with ruby and redis}
+  s.licenses = ["MIT"]
+
+  s.add_dependency "redis", ">= 2.2.2"
+
+  s.add_development_dependency "rspec", "~> 2.8.0"
+
+  s.files = `git ls-files`.split("\n") - [".gitignore", ".rspec", ".travis.yml"]
+  s.test_files = `git ls-files -- spec/*`.split("\n")
+  s.executables = `git ls-files -- bin/*`.split("\n").map{ |f| File.basename(f) }
+  s.require_paths = ["lib"]
+end

data/src/cc_item.h

ADDED

data/src/cosine.c

ADDED

data/src/iikey.c

ADDED

@@ -0,0 +1,18 @@
+char* item_item_key(char *item1, char *item2){
+  int keylen = strlen(item1) + strlen(item2) + 2;
+  char *key = (char *)malloc(keylen * sizeof(char));
+
+  if(!key){
+    printf("cannot allocate\n");
+    return 0;
+  }
+
+  // FIXPAUL: make shure this does exactly the same as ruby sort
+  if(rb_strcmp(item1, item2) <= 0){
+    snprintf(key, keylen, "%s:%s", item1, item2);
+  } else {
+    snprintf(key, keylen, "%s:%s", item2, item1);
+  }
+
+  return key;
+}
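
`item_item_key` stores each unordered pair under a single ccmatrix field. It puts the smaller id first and uses `rb_strcmp`, so that the C client orders ids the way Ruby does (per the FIXPAUL comment). Assuming the Ruby side orders the pair with `String#<=>`, the same key can be sketched as follows. `item_item_key` here is a hypothetical Ruby counterpart, not a method of the gem.

```ruby
# Sketch of the ccmatrix field name built by item_item_key in iikey.c,
# assuming the Ruby side orders the two ids with String#<=> (hypothetical).
def item_item_key(item1, item2)
  first, second = (item1 <=> item2) <= 0 ? [item1, item2] : [item2, item1]
  "#{first}:#{second}"
end

puts item_item_key("item23", "item10") # prints item10:item23
puts item_item_key("item1", "item10")  # prints item1:item10
```

The comparison is byte-wise and a prefix sorts first, so `item10` comes before `item23` and `item1` before `item10`.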

data/src/jaccard.c

ADDED

@@ -0,0 +1,19 @@
+void calculate_jaccard(char *item_id, int itemCount, struct cc_item *cc_items, int cc_items_size){
+  int j, n;
+
+  for(j = 0; j < cc_items_size; j++){
+    n = cc_items[j].coconcurrency_count;
+    if(n>0){
+      cc_items[j].similarity = (
+        (float)n / (
+          (float)itemCount +
+          (float)cc_items[j].total_count -
+          (float)n
+        )
+      );
+    } else {
+      cc_items[j].similarity = 0.0;
+    }
+  }
+
+}

data/src/output.c

ADDED

@@ -0,0 +1,22 @@
+int print_version(){
+  printf(
+    VERSION_STRING,
+    VERSION_MAJOR,
+    VERSION_MINOR,
+    VERSION_MICRO
+  );
+  return 0;
+}
+
+int print_usage(char *bin){
+  printf(USAGE_STRING, bin);
+  return 1;
+}
+
+void print_item(struct cc_item item){
+  printf(
+    "OUT: (%s) (%.4f)\n",
+    item.item_id,
+    item.similarity
+  );
+}

data/src/recommendify.c

ADDED

@@ -0,0 +1,184 @@
+#include <stdio.h>
+#include <string.h>
+#include <stdlib.h>
+#include <hiredis/hiredis.h>
+
+#include "version.h"
+#include "cc_item.h"
+#include "jaccard.c"
+#include "cosine.c"
+#include "output.c"
+#include "sort.c"
+#include "iikey.c"
+
+
+int main(int argc, char **argv){
+  int i, j, n, similarityFunc = 0;
+  int itemCount = 0;
+  char *itemID;
+  char *redisPrefix;
+  redisContext *c;
+  redisReply *all_items;
+  redisReply *reply;
+  int cur_batch_size;
+  char* cur_batch;
+  char *iikey;
+
+  int batch_size = 200; /* FIXPAUL: make option */
+  int maxItems = 50; /* FIXPAUL: make option */
+
+
+  /* option parsing */
+  if(argc < 2)
+    return print_usage(argv[0]);
+
+  if(!strcmp(argv[1], "--version"))
+    return print_version();
+
+  if(!strcmp(argv[1], "--jaccard"))
+    similarityFunc = 1;
+
+  if(!strcmp(argv[1], "--cosine"))
+    similarityFunc = 2;
+
+  if(!similarityFunc){
+    printf("invalid option: %s\n", argv[1]);
+    return 1;
+  } else if(argc != 4){
+    printf("wrong number of arguments\n");
+    print_usage(argv[0]);
+    return 1;
+  }
+
+  redisPrefix = argv[2];
+  itemID = argv[3];
+
+
+  /* connect to redis */
+  struct timeval timeout = { 1, 500000 };
+  c = redisConnectWithTimeout("127.0.0.2", 6379, timeout);
+
+  if(c->err){
+    printf("connection to redis failed: %s\n", c->errstr);
+    return 1;
+  }
+
+
+  /* get item count */
+  reply = redisCommand(c,"HGET %s:items %s", redisPrefix, itemID);
+  itemCount = atoi(reply->str);
+  freeReplyObject(reply);
+
+  if(itemCount == 0){
+    printf("item count is zero\n");
+    return 0;
+  }
+
+
+  /* get all items_ids and the total counts */
+  all_items = redisCommand(c,"HGETALL %s:items", redisPrefix);
+
+  if(all_items->type != REDIS_REPLY_ARRAY)
+    return 1;
+
+
+  /* populate the cc_items array */
+  int cc_items_size = all_items->elements / 2;
+  int cc_items_mem = cc_items_size * sizeof(struct cc_item);
+  struct cc_item *cc_items = malloc(cc_items_mem);
+  cc_items_size--;
+
+  if(!cc_items){
+    printf("cannot allocate memory: %i", cc_items_mem);
+    return 1;
+  }
+
+  i = 0;
+  for (j = 0; j < all_items->elements/2; j++){
+    if(strcmp(itemID, all_items->element[j*2]->str) != 0){
+      strncpy(cc_items[i].item_id, all_items->element[j*2]->str, ITEM_ID_SIZE);
+      cc_items[i].total_count = atoi(all_items->element[j*2+1]->str);
+      i++;
+    }
+  }
+
+  freeReplyObject(all_items);
+
+
+  // batched redis hmgets on the ccmatrix
+  cur_batch = (char *)malloc(((batch_size * (ITEM_ID_SIZE + 4) * 2) + 100) * sizeof(char));
+
+  if(!cur_batch){
+    printf("cannot allocate memory");
+    return 1;
+  }
+
+  n = cc_items_size;
+  while(n >= 0){
+    cur_batch_size = ((n-1 < batch_size) ? n-1 : batch_size);
+    sprintf(cur_batch, "HMGET %s:ccmatrix ", redisPrefix);
+
+    for(i = 0; i < cur_batch_size; i++){
+      iikey = item_item_key(itemID, cc_items[n-i].item_id);
+
+      strcat(cur_batch, iikey);
+      strcat(cur_batch, " ");
+
+      if(iikey)
+        free(iikey);
+    }
+
+    redisAppendCommand(c, cur_batch);
+    redisGetReply(c, (void**)&reply);
+
+    for(j = 0; j < reply->elements; j++){
+      if(reply->element[j]->str){
+        cc_items[n-j].coconcurrency_count = atoi(reply->element[j]->str);
+      } else {
+        cc_items[n-j].coconcurrency_count = 0;
+      }
+    }
+
+    freeReplyObject(reply);
+    n -= batch_size;
+  }
+
+  free(cur_batch);
+
+
+
+  /* calculate similarities */
+  if(similarityFunc == 1)
+    calculate_jaccard(itemID, itemCount, cc_items, cc_items_size);
+
+  if(similarityFunc == 2)
+    calculate_cosine(itemID, itemCount, cc_items, cc_items_size);
+
+
+  /* find the top x items with simple bubble sort */
+  for(i = 0; i < maxItems - 1; ++i){
+    for (j = 0; j < cc_items_size - i - 1; ++j){
+      if (cc_items[j].similarity > cc_items[j + 1].similarity){
+        struct cc_item tmp = cc_items[j];
+        cc_items[j] = cc_items[j + 1];
+        cc_items[j + 1] = tmp;
+      }
+    }
+  }
+
+
+  /* print top k items */
+  n = ((cc_items_size < maxItems) ? cc_items_size : maxItems);
+  for(j = 0; j < n; j++){
+    i = cc_items_size-j-1;
+    if(cc_items[i].similarity > 0){
+      print_item(cc_items[i]);
+    }
+  }
+
+
+  free(cc_items);
+  return 0;
+}
+
+
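
`main` fetches co-concurrence counts with batched `HMGET`s of up to `batch_size` keys, then selects the top `maxItems` with a partial bubble sort. The Ruby sketch below covers the same two steps. It assumes a hypothetical `fetch_batch` that stands in for one `HMGET` round trip and reuses the Jaccard formula from `jaccard.c`. `each_slice` visits every candidate exactly once, which is easy to get wrong with manual offsets. Read literally, the C loop starts at `cc_items[cc_items_size]`, one slot past the last filled entry, and its final batch stops before indices 0 and 1. Likewise, `maxItems - 1` bubble passes only guarantee the top `maxItems - 1` positions, whereas `max_by(max_items)` returns the `max_items` largest directly.

```ruby
# Hypothetical stand-in for the pair key from iikey.c (smaller id first).
def item_item_key(a, b)
  a <= b ? "#{a}:#{b}" : "#{b}:#{a}"
end

# Sketch of main() in recommendify.c: batched count lookups, Jaccard as in
# jaccard.c, then the top max_items with a positive similarity.
# fetch_batch is hypothetical: it takes ccmatrix keys and returns their values
# (nil for missing keys), like one HMGET round trip.
def top_k_similar(item_id, item_count, candidates, fetch_batch, batch_size: 200, max_items: 50)
  # candidates: { other_item_id => total_count }, without item_id itself
  cc = {}
  candidates.keys.each_slice(batch_size) do |slice|
    counts = fetch_batch.call(slice.map { |other| item_item_key(item_id, other) })
    slice.zip(counts) { |other, n| cc[other] = n.to_i }
  end

  scored = candidates.map do |other, total|
    n = cc[other]
    [other, n > 0 ? n.to_f / (item_count + total - n) : 0.0]
  end
  scored.select { |_, sim| sim > 0 }.max_by(max_items) { |_, sim| sim }
end

ccmatrix = { "a:b" => "3", "a:c" => "1" }
fetch = ->(keys) { keys.map { |k| ccmatrix[k] } } # stands in for HMGET
p top_k_similar("a", 4, { "b" => 3, "c" => 5, "d" => 2 }, fetch, batch_size: 2, max_items: 2)
# prints [["b", 0.75], ["c", 0.125]]
```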

data/src/sort.c

ADDED

@@ -0,0 +1,23 @@
+int lesser(int i1, int i2){
+  if(i1 > i2){
+    return i2;
+  } else {
+    return i1;
+  }
+}
+
+int rb_strcmp(char *str1, char *str2){
+  long len;
+  int retval;
+  len = lesser(strlen(str1), strlen(str2));
+  retval = memcmp(str1, str2, len);
+  if (retval == 0){
+    if (strlen(str1) == strlen(str2)) {
+      return 0;
+    }
+    if (strlen(str1) > strlen(str2)) return 1;
+    return -1;
+  }
+  if (retval > 0) return 1;
+  return -1;
+}
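
`rb_strcmp` mirrors Ruby's string ordering: a `memcmp` over the shorter length, and on a tie the longer string is greater. One way to test the FIXPAUL concern in `iikey.c` is a direct Ruby port that works on raw bytes, checked against `String#<=>` on a few sample ids. The samples are made up and include an empty and a non-ASCII id.

```ruby
# Ruby port of rb_strcmp from src/sort.c, working on raw bytes: compare the
# common prefix like memcmp, and on a tie the longer string is greater.
def rb_strcmp(str1, str2)
  b1 = str1.bytes
  b2 = str2.bytes
  len = [b1.size, b2.size].min
  retval = b1.first(len) <=> b2.first(len)
  return retval unless retval.zero?
  return 0 if b1.size == b2.size
  b1.size > b2.size ? 1 : -1
end

# Made-up sample ids, including an empty and a non-ASCII one.
samples = ["item1", "item10", "item2", "Item2", "", "zz", "äpfel", "apfel"]
samples.product(samples).each do |x, y|
  raise "mismatch for #{x.inspect} vs #{y.inspect}" unless rb_strcmp(x, y) == (x <=> y)
end
puts "rb_strcmp matches String#<=> on #{samples.size**2} pairs"
```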

data/src/version.h

ADDED

@@ -0,0 +1,17 @@
+#ifndef VERSION_H
+#define VERSION_H
+
+#define VERSION_MAJOR 0
+#define VERSION_MINOR 0
+#define VERSION_MICRO 1
+
+#define VERSION_STRING "recommendify_native %i.%i.%i\n" \
+  "\n" \
+  "Copyright © 2012\n" \
+  " Paul Asmuth <paul@paulasmuth.com>\n"
+
+#define USAGE_STRING "usage: %s " \
+  "{--version|--jaccard|--cosine} " \
+  "[redis_key] [item_id]\n"
+
+#endif

metadata

CHANGED

@@ -1,13 +1,8 @@
 --- !ruby/object:Gem::Specification
 name: recommendify
 version: !ruby/object:Gem::Version
-  hash: 29
   prerelease:
-
-  - 0
-  - 0
-  - 1
-  version: 0.0.1
+  version: 0.1.0
 platform: ruby
 authors:
 - Paul Asmuth

@@ -15,7 +10,8 @@ autorequire:
 bindir: bin
 cert_chain: []
 
-date: 2012-02-
+date: 2012-02-12 00:00:00 +01:00
+default_executable:
 dependencies:
 - !ruby/object:Gem::Dependency
   name: redis

@@ -25,11 +21,6 @@ dependencies:
     requirements:
     - - ">="
       - !ruby/object:Gem::Version
-        hash: 3
-        segments:
-        - 2
-        - 2
-        - 2
         version: 2.2.2
   type: :runtime
   version_requirements: *id001

@@ -41,11 +32,6 @@ dependencies:
     requirements:
     - - ~>
       - !ruby/object:Gem::Version
-        hash: 47
-        segments:
-        - 2
-        - 8
-        - 0
         version: 2.8.0
   type: :development
   version_requirements: *id002

@@ -76,6 +62,7 @@ files:
 - lib/recommendify/recommendify.rb
 - lib/recommendify/similarity_matrix.rb
 - lib/recommendify/sparse_matrix.rb
+- recommendify.gemspec
 - spec/base_spec.rb
 - spec/cc_matrix_shared.rb
 - spec/cosine_input_matrix_spec.rb

@@ -86,6 +73,15 @@ files:
 - spec/similarity_matrix_spec.rb
 - spec/sparse_matrix_spec.rb
 - spec/spec_helper.rb
+- src/cc_item.h
+- src/cosine.c
+- src/iikey.c
+- src/jaccard.c
+- src/output.c
+- src/recommendify.c
+- src/sort.c
+- src/version.h
+has_rdoc: true
 homepage: http://github.com/paulasmuth/recommendify
 licenses:
 - MIT

@@ -99,23 +95,17 @@ required_ruby_version: !ruby/object:Gem::Requirement
   requirements:
   - - ">="
     - !ruby/object:Gem::Version
-      hash: 3
-      segments:
-      - 0
       version: "0"
 required_rubygems_version: !ruby/object:Gem::Requirement
   none: false
   requirements:
   - - ">="
     - !ruby/object:Gem::Version
-      hash: 3
-      segments:
-      - 0
       version: "0"
 requirements: []
 
 rubyforge_project:
-rubygems_version: 1.
+rubygems_version: 1.6.2
 signing_key:
 specification_version: 3
 summary: Distributed item-based "Collaborative Filtering" with ruby and redis