minimum-term 0.2.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- checksums.yaml +7 -0

- data/.gitignore +9 -0

- data/.rspec +1 -0

- data/.ruby-gemset +1 -0

- data/.ruby-version +1 -0

- data/Gemfile +2 -0

- data/Guardfile +41 -0

- data/README.markdown +58 -0

- data/Rakefile +9 -0

- data/circle.yml +5 -0

- data/lib/minimum-term/compare/json_schema.rb +123 -0

- data/lib/minimum-term/consume_contract.rb +9 -0

- data/lib/minimum-term/consumed_object.rb +16 -0

- data/lib/minimum-term/contract.rb +34 -0

- data/lib/minimum-term/conversion/apiary_to_json_schema.rb +9 -0

- data/lib/minimum-term/conversion/data_structure.rb +92 -0

- data/lib/minimum-term/conversion/error.rb +7 -0

- data/lib/minimum-term/conversion.rb +125 -0

- data/lib/minimum-term/infrastructure.rb +75 -0

- data/lib/minimum-term/object_description.rb +34 -0

- data/lib/minimum-term/publish_contract.rb +22 -0

- data/lib/minimum-term/published_object.rb +14 -0

- data/lib/minimum-term/service.rb +63 -0

- data/lib/minimum-term/tasks.rb +66 -0

- data/lib/minimum-term/version.rb +3 -0

- data/lib/minimum-term.rb +10 -0

- data/minimum-term.gemspec +33 -0

- data/stuff/contracts/author/consume.mson +0 -0

- data/stuff/contracts/author/publish.mson +20 -0

- data/stuff/contracts/edward/consume.mson +7 -0

- data/stuff/contracts/edward/publish.mson +0 -0

- data/stuff/explore/blueprint.apib +239 -0

- data/stuff/explore/swagger.yaml +139 -0

- data/stuff/explore/test.apib +14 -0

- data/stuff/explore/test.mson +239 -0

- data/stuff/explore/tmp.txt +27 -0

- metadata +220 -0

checksums.yaml

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

1

|

+

---

|

|

2

|

+

SHA1:

|

|

3

|

+

metadata.gz: c8b2676a106025dc8b1940e68c3c711b82c77fda

|

|

4

|

+

data.tar.gz: 562cea8babc12813ac818aaa140ec5df1d24e865

|

|

5

|

+

SHA512:

|

|

6

|

+

metadata.gz: 8b3072fecfb406b52f68f26d1085496cb33fc09a07dc64c42ca73d904c6576bf70efb8d92bb50af1807f5d7696fe1400874dac9a830aedc7bf9d137bc1c95167

|

|

7

|

+

data.tar.gz: d6621d9603a127b7b50169f258f22512f794232e40cd8c6daa751c00d8ad2ed30e2676ccd0164387c5e3aa78587a7228db132bbd122f25089847e9935a1f847f

|

data/.gitignore

ADDED

data/.rspec

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

--color

|

data/.ruby-gemset

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

minimum-term

|

data/.ruby-version

ADDED

|

@@ -0,0 +1 @@

|

|

|

1

|

+

2

|

data/Gemfile

ADDED

data/Guardfile

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

1

|

+

# A sample Guardfile

|

|

2

|

+

# More info at https://github.com/guard/guard#readme

|

|

3

|

+

|

|

4

|

+

## Uncomment and set this to only include directories you want to watch

|

|

5

|

+

# directories %w(app lib config test spec features) \

|

|

6

|

+

# .select{|d| Dir.exists?(d) ? d : UI.warning("Directory #{d} does not exist")}

|

|

7

|

+

|

|

8

|

+

## Note: if you are using the `directories` clause above and you are not

|

|

9

|

+

## watching the project directory ('.'), then you will want to move

|

|

10

|

+

## the Guardfile to a watched dir and symlink it back, e.g.

|

|

11

|

+

#

|

|

12

|

+

# $ mkdir config

|

|

13

|

+

# $ mv Guardfile config/

|

|

14

|

+

# $ ln -s config/Guardfile .

|

|

15

|

+

#

|

|

16

|

+

# and, you'll have to watch "config/Guardfile" instead of "Guardfile"

|

|

17

|

+

|

|

18

|

+

guard :bundler do

|

|

19

|

+

watch('Gemfile')

|

|

20

|

+

# Uncomment next line if your Gemfile contains the `gemspec' command.

|

|

21

|

+

watch(/^.+\.gemspec/)

|

|

22

|

+

end

|

|

23

|

+

|

|

24

|

+

guard 'ctags-bundler', :src_path => ["lib"] do

|

|

25

|

+

watch(/^(app|lib|spec\/support)\/.*\.rb$/)

|

|

26

|

+

watch('Gemfile.lock')

|

|

27

|

+

watch(/^.+\.gemspec/)

|

|

28

|

+

end

|

|

29

|

+

|

|

30

|

+

guard :rspec, cmd: 'IGNORE_LOW_COVERAGE=1 rspec', all_on_start: true do

|

|

31

|

+

watch(%r{^spec/support/.*\.mson$}) { "spec" }

|

|

32

|

+

watch(%r{^spec/support/.*\.rb$}) { "spec" }

|

|

33

|

+

watch('spec/spec_helper.rb') { "spec" }

|

|

34

|

+

|

|

35

|

+

# We could run individual specs, sure, but for now I dictate the tests

|

|

36

|

+

# are only green when we have 100% coverage, so partial runs will never

|

|

37

|

+

# succeed. Therefore, always run all the things.

|

|

38

|

+

watch(%r{^(spec/.+_spec\.rb)$}) { "spec" }

|

|

39

|

+

watch(%r{^lib/(.+)\.rb$}) { "spec" }

|

|

40

|

+

end

|

|

41

|

+

|

data/README.markdown

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

1

|

+

# Minimum term

|

|

2

|

+

|

|

3

|

+

This shall be a framework so that in each of our services one can define what messages it publishes and what messages it consumes. That way when changing what one service publishes or consumes, one can immediately see the effects on our other services.

|

|

4

|

+

|

|

5

|

+

|

|

6

|

+

## Prerequesites

|

|

7

|

+

|

|

8

|

+

- install drafter via `brew install --HEAD

|

|

9

|

+

https://raw.github.com/apiaryio/drafter/master/tools/homebrew/drafter.rb`

|

|

10

|

+

- run `bundle`

|

|

11

|

+

|

|

12

|

+

## Tests and development

|

|

13

|

+

- run `guard` in a spare terminal which will run the tests,

|

|

14

|

+

install gems, and so forth

|

|

15

|

+

- run `rspec spec` to run all the tests

|

|

16

|

+

- check out `open coverage/index.html` or `open coverage/rcov/index.html`

|

|

17

|

+

- run `bundle console` to play around with a console

|

|

18

|

+

|

|

19

|

+

## Structure

|

|

20

|

+

- Infrastructure

|

|

21

|

+

- Service

|

|

22

|

+

- Contracts

|

|

23

|

+

- Publish contract

|

|

24

|

+

- PublishedObjects

|

|

25

|

+

- Consume contract

|

|

26

|

+

- ConsumedObjects

|

|

27

|

+

|

|

28

|

+

|

|

29

|

+

## Convert MSON to JSON Schema files

|

|

30

|

+

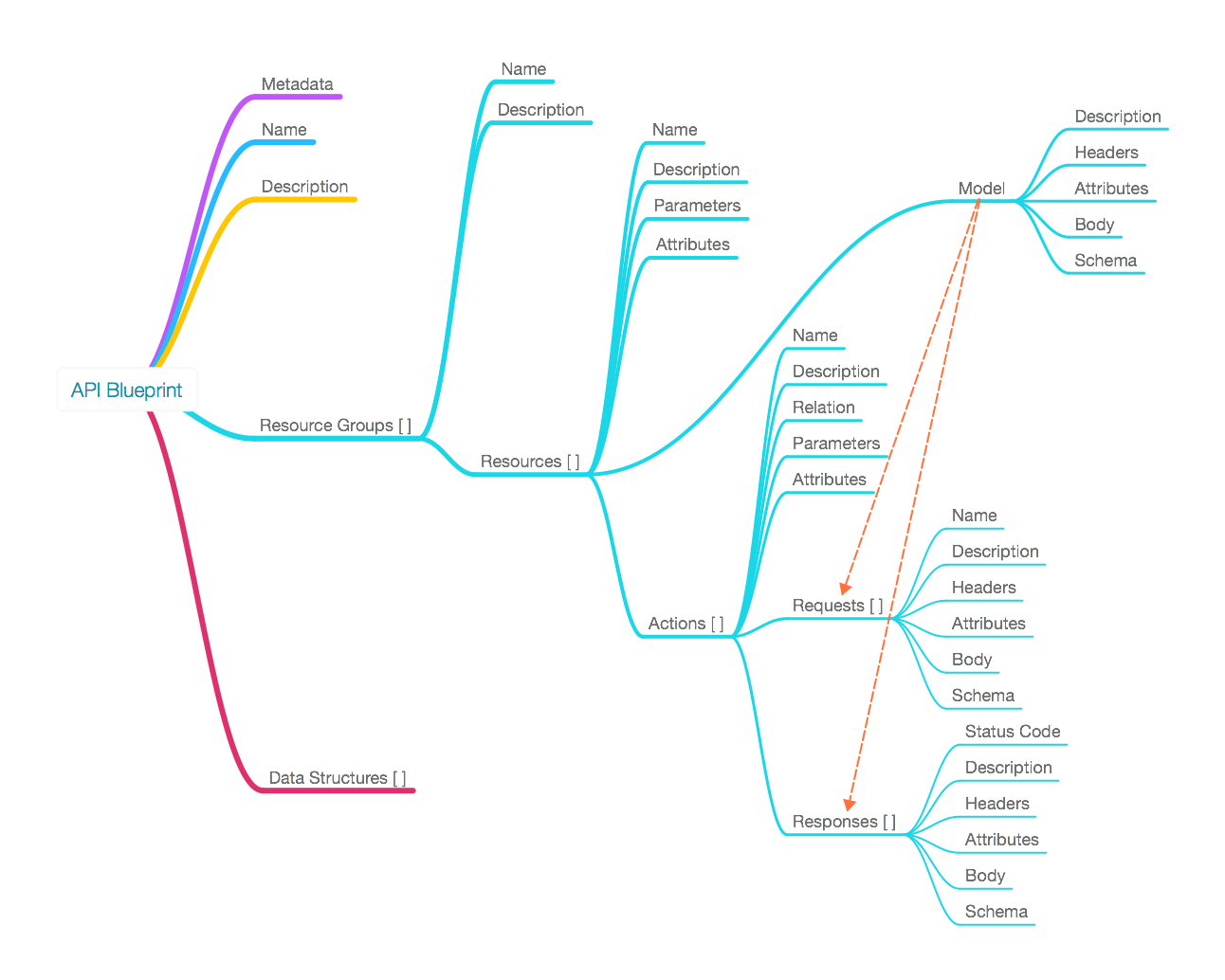

First, check out [this API Blueprint map](https://github.com/apiaryio/api-blueprint/wiki/API-Blueprint-Map) to understand how _API Blueprint_ documents are laid out:

|

|

31

|

+

|

|

32

|

+

|

|

33

|

+

|

|

34

|

+

You can see that their structure covers a full API use case with resource groups, single resources, actions on those resources including requests and responses. All we want, though, is the little red top level branch called `Data structures`.

|

|

35

|

+

|

|

36

|

+

In theory, we'd use a ruby gem called [RedSnow](https://github.com/apiaryio/redsnow), which has bindings to [SnowCrash](https://github.com/apiaryio/snowcrash) which parses _API Blueprints_ into an AST. Unfortunately, RedSnow ignores that red `Data structures` branch we want (SnowCrash parses it just fine).

|

|

37

|

+

|

|

38

|

+

So for now, we use a command line tool called [drafter](https://github.com/apiaryio/drafter) to convert MSON into an _API Blueprint_ AST. From that AST we pic the `Data structures` entry and convert it into [JSON Schema]()s

|

|

39

|

+

|

|

40

|

+

Luckily, a rake task does all that for you. To convert all `*.mson` files in `contracts/` into `*.schema.json` files,

|

|

41

|

+

|

|

42

|

+

put this in your `Rakefile`:

|

|

43

|

+

|

|

44

|

+

```ruby

|

|

45

|

+

require "minimum-term/tasks"

|

|

46

|

+

```

|

|

47

|

+

|

|

48

|

+

and smoke it:

|

|

49

|

+

|

|

50

|

+

```shell

|

|

51

|

+

/home/dev/minimum-term$ DATA_DIR=contracts/ rake minimum_term:mson_to_json_schema

|

|

52

|

+

Converting 4 files:

|

|

53

|

+

OK /home/dev/minimum-term/contracts/consumer/consume.mson

|

|

54

|

+

OK /home/dev/minimum-term/contracts/invalid_property/consume.mson

|

|

55

|

+

OK /home/dev/minimum-term/contracts/missing_required/consume.mson

|

|

56

|

+

OK /home/dev/minimum-term/contracts/publisher/publish.mson

|

|

57

|

+

/home/dev/minimum-term$

|

|

58

|

+

```

|

data/Rakefile

ADDED

data/circle.yml

ADDED

|

@@ -0,0 +1,123 @@

|

|

|

1

|

+

module MinimumTerm

|

|

2

|

+

module Compare

|

|

3

|

+

class JsonSchema

|

|

4

|

+

ERRORS = {

|

|

5

|

+

:ERR_ARRAY_ITEM_MISMATCH => nil,

|

|

6

|

+

:ERR_MISSING_DEFINITION => nil,

|

|

7

|

+

:ERR_MISSING_POINTER => nil,

|

|

8

|

+

:ERR_MISSING_PROPERTY => nil,

|

|

9

|

+

:ERR_MISSING_REQUIRED => nil,

|

|

10

|

+

:ERR_MISSING_TYPE_AND_REF => nil,

|

|

11

|

+

:ERR_TYPE_MISMATCH => nil,

|

|

12

|

+

:ERR_NOT_SUPPORTED => nil

|

|

13

|

+

}

|

|

14

|

+

|

|

15

|

+

attr_reader :errors

|

|

16

|

+

|

|

17

|

+

def initialize(containing_schema)

|

|

18

|

+

@containing_schema = containing_schema

|

|

19

|

+

end

|

|

20

|

+

|

|

21

|

+

def contains?(contained_schema, pry = false)

|

|

22

|

+

@errors = []

|

|

23

|

+

@contained_schema = contained_schema

|

|

24

|

+

definitions_contained?

|

|

25

|

+

end

|

|

26

|

+

|

|

27

|

+

private

|

|

28

|

+

|

|

29

|

+

def definitions_contained?

|

|

30

|

+

@contained_schema['definitions'].each do |property, contained_property|

|

|

31

|

+

containing_property = @containing_schema['definitions'][property]

|

|

32

|

+

return _e(:ERR_MISSING_DEFINITION, [property]) unless containing_property

|

|

33

|

+

return false unless schema_contains?(containing_property, contained_property, [property])

|

|

34

|

+

end

|

|

35

|

+

true

|

|

36

|

+

end

|

|

37

|

+

|

|

38

|

+

def _e(error, location, extra = nil)

|

|

39

|

+

message = [ERRORS[error], extra].compact.join(": ")

|

|

40

|

+

@errors.push(error: error, message: message, location: location.join("/"))

|

|

41

|

+

false

|

|

42

|

+

end

|

|

43

|

+

|

|

44

|

+

def schema_contains?(publish, consume, location = [])

|

|

45

|

+

|

|

46

|

+

# We can only compare types and $refs, so let's make

|

|

47

|

+

# sure they're there

|

|

48

|

+

return _e!(:ERR_MISSING_TYPE_AND_REF) unless

|

|

49

|

+

(consume['type'] or consume['$ref']) and

|

|

50

|

+

(publish['type'] or publish['$ref'])

|

|

51

|

+

|

|

52

|

+

# There's four possibilities here:

|

|

53

|

+

#

|

|

54

|

+

# 1) publish and consume have a type defined

|

|

55

|

+

# 2) publish and consume have a $ref defined

|

|

56

|

+

# 3) publish has a $ref defined, and consume an inline object

|

|

57

|

+

# 4) consume has a $ref defined, and publish an inline object

|

|

58

|

+

# (we don't support this yet, as otherwise couldn't check for

|

|

59

|

+

# missing definitions, because we could never know if something

|

|

60

|

+

# specified in the definitions of the consuming schema exists in

|

|

61

|

+

# the publishing schema as an inline property somewhere).

|

|

62

|

+

# TODO: check if what I just said makes sense. I'm not sure anymore.

|

|

63

|

+

# Let's go:

|

|

64

|

+

|

|

65

|

+

# 1)

|

|

66

|

+

if (consume['type'] and publish['type'])

|

|

67

|

+

if consume['type'] != publish['type']

|

|

68

|

+

return _e(:ERR_TYPE_MISMATCH, location, "#{consume['type']} != #{publish['type']}")

|

|

69

|

+

end

|

|

70

|

+

|

|

71

|

+

# 2)

|

|

72

|

+

elsif(consume['$ref'] and publish['$ref'])

|

|

73

|

+

resolved_consume = resolve_pointer(consume['$ref'], @contained_schema)

|

|

74

|

+

resolved_publish = resolve_pointer(publish['$ref'], @containing_schema)

|

|

75

|

+

return schema_contains?(resolved_publish, resolved_consume, location)

|

|

76

|

+

|

|

77

|

+

# 3)

|

|

78

|

+

elsif(consume['type'] and publish['$ref'])

|

|

79

|

+

if resolved_ref = resolve_pointer(publish['$ref'], @containing_schema)

|

|

80

|

+

return schema_contains?(resolved_ref, consume, location)

|

|

81

|

+

else

|

|

82

|

+

return _e(:ERR_MISSING_POINTER, location, publish['$ref'])

|

|

83

|

+

end

|

|

84

|

+

|

|

85

|

+

# 4)

|

|

86

|

+

elsif(consume['$ref'] and publish['type'])

|

|

87

|

+

return _e(:ERR_NOT_SUPPORTED, location)

|

|

88

|

+

end

|

|

89

|

+

|

|

90

|

+

# Make sure required properties in consume are required in publish

|

|

91

|

+

consume_required = consume['required'] || []

|

|

92

|

+

publish_required = publish['required'] || []

|

|

93

|

+

missing = (consume_required - publish_required)

|

|

94

|

+

return _e(:ERR_MISSING_REQUIRED, location, missing.to_json) unless missing.empty?

|

|

95

|

+

|

|

96

|

+

# We already know that publish and consume's type are equal

|

|

97

|

+

# but if they're objects, we need to do some recursion

|

|

98

|

+

if consume['type'] == 'object'

|

|

99

|

+

consume['properties'].each do |property, schema|

|

|

100

|

+

return _e(:ERR_MISSING_PROPERTY, location, property) unless publish['properties'][property]

|

|

101

|

+

return false unless schema_contains?(publish['properties'][property], schema, location + [property])

|

|

102

|

+

end

|

|

103

|

+

end

|

|

104

|

+

|

|

105

|

+

if consume['type'] == 'array'

|

|

106

|

+

sorted_publish = publish['items'].sort

|

|

107

|

+

consume['items'].sort.each_with_index do |item, i|

|

|

108

|

+

next if schema_contains?(sorted_publish[i], item)

|

|

109

|

+

return _e(:ERR_ARRAY_ITEM_MISMATCH, location)

|

|

110

|

+

end

|

|

111

|

+

end

|

|

112

|

+

|

|

113

|

+

true

|

|

114

|

+

end

|

|

115

|

+

|

|

116

|

+

def resolve_pointer(pointer, schema)

|

|

117

|

+

type = pointer[/\#\/definitions\/([^\/]+)$/, 1]

|

|

118

|

+

return false unless type

|

|

119

|

+

schema['definitions'][type]

|

|

120

|

+

end

|

|

121

|

+

end

|

|

122

|

+

end

|

|

123

|

+

end

|

|

@@ -0,0 +1,16 @@

|

|

|

1

|

+

require 'minimum-term/object_description'

|

|

2

|

+

|

|

3

|

+

module MinimumTerm

|

|

4

|

+

class ConsumedObject < MinimumTerm::ObjectDescription

|

|

5

|

+

|

|

6

|

+

def publisher

|

|

7

|

+

i = @scoped_name.index(MinimumTerm::SCOPE_SEPARATOR)

|

|

8

|

+

return @defined_in_service unless i

|

|

9

|

+

@defined_in_service.infrastructure.services[@scoped_name[0...i].underscore.to_sym]

|

|

10

|

+

end

|

|

11

|

+

|

|

12

|

+

def consumer

|

|

13

|

+

@defined_in_service

|

|

14

|

+

end

|

|

15

|

+

end

|

|

16

|

+

end

|

|

@@ -0,0 +1,34 @@

|

|

|

1

|

+

require 'active_support/core_ext/hash/indifferent_access'

|

|

2

|

+

require 'minimum-term/published_object'

|

|

3

|

+

require 'minimum-term/consumed_object'

|

|

4

|

+

|

|

5

|

+

module MinimumTerm

|

|

6

|

+

class Contract

|

|

7

|

+

attr_reader :service, :schema

|

|

8

|

+

|

|

9

|

+

def initialize(service, schema_or_file)

|

|

10

|

+

@service = service

|

|

11

|

+

load_schema(schema_or_file)

|

|

12

|

+

end

|

|

13

|

+

|

|

14

|

+

def objects

|

|

15

|

+

return [] unless @schema[:definitions]

|

|

16

|

+

@schema[:definitions].map do |scoped_name, schema|

|

|

17

|

+

object_description_class.new(service, scoped_name, schema)

|

|

18

|

+

end

|

|

19

|

+

end

|

|

20

|

+

|

|

21

|

+

private

|

|

22

|

+

|

|

23

|

+

def load_schema(schema_or_file)

|

|

24

|

+

if schema_or_file.is_a?(Hash)

|

|

25

|

+

@schema = schema_or_file

|

|

26

|

+

elsif File.readable?(schema_or_file)

|

|

27

|

+

@schema = JSON.parse(open(schema_or_file).read)

|

|

28

|

+

else

|

|

29

|

+

@schema = {}

|

|

30

|

+

end

|

|

31

|

+

@schema = @schema.with_indifferent_access

|

|

32

|

+

end

|

|

33

|

+

end

|

|

34

|

+

end

|

|

@@ -0,0 +1,92 @@

|

|

|

1

|

+

require 'active_support/core_ext/string'

|

|

2

|

+

|

|

3

|

+

module MinimumTerm

|

|

4

|

+

module Conversion

|

|

5

|

+

class DataStructure

|

|

6

|

+

PRIMITIVES = %w{boolean string number array enum object}

|

|

7

|

+

|

|

8

|

+

def self.scope(scope, string)

|

|

9

|

+

[scope, string.to_s].compact.join(MinimumTerm::SCOPE_SEPARATOR).underscore

|

|

10

|

+

end

|

|

11

|

+

|

|

12

|

+

def initialize(id, data, scope = nil)

|

|

13

|

+

@scope = scope

|

|

14

|

+

@data = data

|

|

15

|

+

@id = self.class.scope(@scope, id)

|

|

16

|

+

@schema = json_schema_blueprint

|

|

17

|

+

@schema['title'] = @id

|

|

18

|

+

add_description_to_json_schema

|

|

19

|

+

add_properties_to_json_schema

|

|

20

|

+

end

|

|

21

|

+

|

|

22

|

+

def to_json

|

|

23

|

+

@schema

|

|

24

|

+

end

|

|

25

|

+

|

|

26

|

+

private

|

|

27

|

+

|

|

28

|

+

def add_description_to_json_schema

|

|

29

|

+

return unless @data['meta']

|

|

30

|

+

description = @data['meta']['description']

|

|

31

|

+

return unless description

|

|

32

|

+

@schema['description'] = description.strip

|

|

33

|

+

end

|

|

34

|

+

|

|

35

|

+

def add_properties_to_json_schema

|

|

36

|

+

return unless @data['content']

|

|

37

|

+

members = @data['content'].select{|d| d['element'] == 'member' }

|

|

38

|

+

members.each do |s|

|

|

39

|

+

content = s['content']

|

|

40

|

+

type = content['value']['element']

|

|

41

|

+

|

|

42

|

+

spec = {}

|

|

43

|

+

name = s['content']['key']['content'].underscore

|

|

44

|

+

|

|

45

|

+

# This is either type: primimtive or $ref: reference_name

|

|

46

|

+

spec.merge!(primitive_or_reference(type))

|

|

47

|

+

|

|

48

|

+

value_content = content['value']['content']

|

|

49

|

+

|

|

50

|

+

# We might have a description

|

|

51

|

+

spec['description'] = s['meta']['description'] if s['meta']

|

|

52

|

+

|

|

53

|

+

# If it's an array, we need to pluck out the item types

|

|

54

|

+

if type == 'array'

|

|

55

|

+

nestedTypes = value_content.map{|d| d['element'] }.compact

|

|

56

|

+

spec['items'] = nestedTypes.map{|t| primitive_or_reference(t) }

|

|

57

|

+

|

|

58

|

+

# If it's an object, we need recursion

|

|

59

|

+

elsif type == 'object'

|

|

60

|

+

spec['properties'] = {}

|

|

61

|

+

value_content.select{|d| d['element'] == 'member'}.each do |data|

|

|

62

|

+

data_structure = DataStructure.new('tmp', content['value'], @scope).to_json

|

|

63

|

+

spec['properties'].merge!(data_structure['properties'])

|

|

64

|

+

end

|

|

65

|

+

end

|

|

66

|

+

|

|

67

|

+

@schema['properties'][name] = spec

|

|

68

|

+

if attributes = s['attributes']

|

|

69

|

+

@schema['required'] << name if attributes['typeAttributes'].include?('required')

|

|

70

|

+

end

|

|

71

|

+

end

|

|

72

|

+

end

|

|

73

|

+

|

|

74

|

+

def primitive_or_reference(type)

|

|

75

|

+

if PRIMITIVES.include?(type)

|

|

76

|

+

{ 'type' => type }

|

|

77

|

+

else

|

|

78

|

+

{ '$ref' => "#/definitions/#{self.class.scope(@scope, type)}" }

|

|

79

|

+

end

|

|

80

|

+

end

|

|

81

|

+

|

|

82

|

+

def json_schema_blueprint

|

|

83

|

+

{

|

|

84

|

+

"type" => "object",

|

|

85

|

+

"properties" => {},

|

|

86

|

+

"required" => []

|

|

87

|

+

}

|

|

88

|

+

end

|

|

89

|

+

|

|

90

|

+

end

|

|

91

|

+

end

|

|

92

|

+

end

|

|

@@ -0,0 +1,125 @@

|

|

|

1

|

+

require 'fileutils'

|

|

2

|

+

require 'open3'

|

|

3

|

+

require 'minimum-term/conversion/apiary_to_json_schema'

|

|

4

|

+

require 'minimum-term/conversion/error'

|

|

5

|

+

|

|

6

|

+

module MinimumTerm

|

|

7

|

+

module Conversion

|

|

8

|

+

def self.mson_to_json_schema(filename:, keep_intermediary_files: false, verbose: false)

|

|

9

|

+

begin

|

|

10

|

+

mson_to_json_schema!(filename: filename, keep_intermediary_files: keep_intermediary_files, verbose: verbose)

|

|

11

|

+

puts "OK ".green + filename if verbose

|

|

12

|

+

true

|

|

13

|

+

rescue

|

|

14

|

+

puts "ERROR ".red + filename if verbose

|

|

15

|

+

false

|

|

16

|

+

end

|

|

17

|

+

end

|

|

18

|

+

|

|

19

|

+

def self.mson_to_json_schema!(filename:, keep_intermediary_files: false, verbose: true)

|

|

20

|

+

|

|

21

|

+

# For now, we'll use the containing directory's name as a scope

|

|

22

|

+

service_scope = File.dirname(filename).split(File::SEPARATOR).last.underscore

|

|

23

|

+

|

|

24

|

+

# Parse MSON to an apiary blueprint AST

|

|

25

|

+

# (see https://github.com/apiaryio/api-blueprint/wiki/API-Blueprint-Map)

|

|

26

|

+

to_ast = mson_to_ast_json(filename)

|

|

27

|

+

raise Error, "Error: #{to_ast}" unless to_ast[:status] == 0

|

|

28

|

+

|

|

29

|

+

# Pluck out Data structures from it

|

|

30

|

+

data_structures = data_structures_from_blueprint_ast(to_ast[:outfile])

|

|

31

|

+

|

|

32

|

+

# Generate json schema from each contained data structure

|

|

33

|

+

schema = {

|

|

34

|

+

"$schema" => "http://json-schema.org/draft-04/schema#",

|

|

35

|

+

"title" => service_scope,

|

|

36

|

+

"definitions" => {},

|

|

37

|

+

"type" => "object",

|

|

38

|

+

"properties" => {},

|

|

39

|

+

}

|

|

40

|

+

|

|

41

|

+

# The scope for the data structure is the name of the service

|

|

42

|

+

# publishing the object. So if we're parsing a 'consume' schema,

|

|

43

|

+

# the containing objects are alredy scoped (because a consume

|

|

44

|

+

# schema says 'i consume object X from service Y'.

|

|

45

|

+

basename = File.basename(filename)

|

|

46

|

+

if basename.end_with?("publish.mson")

|

|

47

|

+

data_structure_autoscope = service_scope

|

|

48

|

+

elsif basename.end_with?("consume.mson")

|

|

49

|

+

data_structure_autoscope = nil

|

|

50

|

+

else

|

|

51

|

+

raise Error, "Invalid filename #{basename}, can't tell if it's a publish or consume schema"

|

|

52

|

+

end

|

|

53

|

+

|

|

54

|

+

# The json schema we're constructing contains every known

|

|

55

|

+

# object type in the 'definitions'. So if we have definitions for

|

|

56

|

+

# the objects User, Post and Tag, the schema will look like this:

|

|

57

|

+

#

|

|

58

|

+

# {

|

|

59

|

+

# "$schema": "..."

|

|

60

|

+

#

|

|

61

|

+

# "definitions": {

|

|

62

|

+

# "user": { "type": "object", "properties": { ... }}

|

|

63

|

+

# "post": { "type": "object", "properties": { ... }}

|

|

64

|

+

# "tag": { "type": "object", "properties": { ... }}

|

|

65

|

+

# }

|

|

66

|

+

#

|

|

67

|

+

# "properties": {

|

|

68

|

+

# "user": "#/definitions/user"

|

|

69

|

+

# "post": "#/definitions/post"

|

|

70

|

+

# "tag": "#/definitions/tag"

|

|

71

|

+

# }

|

|

72

|

+

#

|

|

73

|

+

# }

|

|

74

|

+

#

|

|

75

|

+

# So when testing an object of type `user` against this schema,

|

|

76

|

+

# we need to wrap it as:

|

|

77

|

+

#

|

|

78

|

+

# {

|

|

79

|

+

# user: {

|

|

80

|

+

# "your": "actual",

|

|

81

|

+

# "data": "goes here"

|

|

82

|

+

# }

|

|

83

|

+

# }

|

|

84

|

+

#

|

|

85

|

+

data_structures.each do |data|

|

|

86

|

+

schema_data = data['content'].select{|d| d['element'] == 'object' }.first

|

|

87

|

+

id = schema_data['meta']['id']

|

|

88

|

+

json= DataStructure.new(id, schema_data, data_structure_autoscope).to_json

|

|

89

|

+

member = json.delete('title')

|

|

90

|

+

schema['definitions'][member] = json

|

|

91

|

+

schema['properties'][member] = {"$ref" => "#/definitions/#{member}"}

|

|

92

|

+

end

|

|

93

|

+

|

|

94

|

+

# Write it in a file

|

|

95

|

+

outfile = filename.gsub(/\.\w+$/, '.schema.json')

|

|

96

|

+

File.open(outfile, 'w'){ |f| f.puts JSON.pretty_generate(schema) }

|

|

97

|

+

|

|

98

|

+

# Clean up

|

|

99

|

+

FileUtils.rm_f(to_ast[:outfile]) unless keep_intermediary_files

|

|

100

|

+

true

|

|

101

|

+

end

|

|

102

|

+

|

|

103

|

+

def self.data_structures_from_blueprint_ast(filename)

|

|

104

|

+

c = JSON.parse(open(filename).read)['content'].first

|

|

105

|

+

return [] unless c

|

|

106

|

+

c['content']

|

|

107

|

+

end

|

|

108

|

+

|

|

109

|

+

def self.mson_to_ast_json(filename)

|

|

110

|

+

input = filename

|

|

111

|

+

output = filename.gsub(/\.\w+$/, '.blueprint-ast.json')

|

|

112

|

+

|

|

113

|

+

cmd = "drafter -u -f json -o #{Shellwords.escape(output)} #{Shellwords.escape(input)}"

|

|

114

|

+

stdin, stdout, status = Open3.capture3(cmd)

|

|

115

|

+

|

|

116

|

+

{

|

|

117

|

+

cmd: cmd,

|

|

118

|

+

outfile: output,

|

|

119

|

+

stdin: stdin,

|

|

120

|

+

stdout: stdout,

|

|

121

|

+

status: status

|

|

122

|

+

}

|

|

123

|

+

end

|

|

124

|

+

end

|

|

125

|

+

end

|

|

@@ -0,0 +1,75 @@

|

|

|

1

|

+

require 'active_support/core_ext/hash/indifferent_access'

|

|

2

|

+

|

|

3

|

+

module MinimumTerm

|

|

4

|

+

class Infrastructure

|

|

5

|

+

attr_reader :services, :errors, :data_dir

|

|

6

|

+

|

|

7

|

+

def initialize(data_dir:, verbose: false)

|

|

8

|

+

@verbose = !!verbose

|

|

9

|

+

@data_dir = data_dir

|

|

10

|

+

@mutex = Mutex.new

|

|

11

|

+

load_services

|

|

12

|

+

end

|

|

13

|

+

|

|

14

|

+

def reload

|

|

15

|

+

load_services

|

|

16

|

+

end

|

|

17

|

+

|

|

18

|

+

def contracts_fulfilled?

|

|

19

|

+

load_services

|

|

20

|

+

@mutex.synchronize do

|

|

21

|

+

@errors = {}

|

|

22

|

+

publishers.each do |publisher|

|

|

23

|

+

publisher.satisfies_consumers?(verbose: @verbose)

|

|

24

|

+

next if publisher.errors.empty?

|

|

25

|

+

@errors.merge! publisher.errors

|

|

26

|

+

end

|

|

27

|

+

@errors.empty?

|

|

28

|

+

end

|

|

29

|

+

end

|

|

30

|

+

|

|

31

|

+

def publishers

|

|

32

|

+

services.values.select do |service|

|

|

33

|

+

service.published_objects.length > 0

|

|

34

|

+

end

|

|

35

|

+

end

|

|

36

|

+

|

|

37

|

+

def consumers

|

|

38

|

+

services.values.select do |service|

|

|

39

|

+

service.consumed_objects.length > 0

|

|

40

|

+

end

|

|

41

|

+

end

|

|

42

|

+

|

|

43

|

+

def convert_all!(keep_intermediary_files = false)

|

|

44

|

+

json_files.each{ |file| FileUtils.rm_f(file) }

|

|

45

|

+

mson_files.each do |file|

|

|

46

|

+

MinimumTerm::Conversion.mson_to_json_schema!(

|

|

47

|

+

filename: file,

|

|

48

|

+

keep_intermediary_files: keep_intermediary_files,

|

|

49

|

+

verbose: @verbose)

|

|

50

|

+

end

|

|

51

|

+

reload

|

|

52

|

+

end

|

|

53

|

+

|

|

54

|

+

def mson_files

|

|

55

|

+

Dir.glob(File.join(@data_dir, "/**/*.mson"))

|

|

56

|

+

end

|

|

57

|

+

|

|

58

|

+

def json_files

|

|

59

|

+

Dir.glob(File.join(@data_dir, "/**/*.schema.json"))

|

|

60

|

+

end

|

|

61

|

+

|

|

62

|

+

private

|

|

63

|

+

|

|

64

|

+

def load_services

|

|

65

|

+

@mutex.synchronize do

|

|

66

|

+

@services = {}.with_indifferent_access

|

|

67

|

+

dirs = Dir.glob(File.join(@data_dir, "*/"))

|

|

68

|

+

dirs.each do |dir|

|

|

69

|

+

service = MinimumTerm::Service.new(self, dir)

|

|

70

|

+

@services[service.name] = service

|

|

71

|

+

end

|

|

72

|

+

end

|

|

73

|

+

end

|

|

74

|

+

end

|

|

75

|

+

end

|

|

@@ -0,0 +1,34 @@

|

|

|

1

|

+

# This represents a description of an Object (as it was in MSON and later

|

|

2

|

+

# JSON Schema). It can come in two flavors:

|

|

3

|

+

#

|

|

4

|

+

# 1) A published object

|

|

5

|

+

# 2) A consumed object

|

|

6

|

+

#

|

|

7

|

+

# A published object only refers to one servce:

|

|

8

|

+

#

|

|

9

|

+

# - its publisher

|

|

10

|

+

#

|

|

11

|

+

# However, a consumed object is referring to two services:

|

|

12

|

+

#

|

|

13

|

+

# - its publisher

|

|

14

|

+

# - its consumer

|

|

15

|

+

#

|

|

16

|

+

#

|

|

17

|

+

module MinimumTerm

|

|

18

|

+

class ObjectDescription

|

|

19

|

+

attr_reader :service, :name, :schema

|

|

20

|

+

def initialize(defined_in_service, scoped_name, schema)

|

|

21

|

+

@defined_in_service = defined_in_service

|

|

22

|

+

@scoped_name = scoped_name

|

|

23

|

+

@name = remove_service_from_scoped_name(scoped_name)

|

|

24

|

+

@schema = schema

|

|

25

|

+

end

|

|

26

|

+

|

|

27

|

+

private

|

|

28

|

+

|

|

29

|

+

def remove_service_from_scoped_name(n)

|

|

30

|

+

n[n.index(MinimumTerm::SCOPE_SEPARATOR)+1..-1]

|

|

31

|

+

end

|

|

32

|

+

|

|

33

|

+

end

|

|

34

|

+

end

|