faraday_dynamic_timeout 1.0.0

This diff represents the content of publicly available package versions that have been released to one of the supported registries. The information contained in this diff is provided for informational purposes only and reflects changes between package versions as they appear in their respective public registries.

- checksums.yaml +7 -0

- data/Appraisals +7 -0

- data/CHANGELOG.md +11 -0

- data/MIT-LICENSE.txt +20 -0

- data/README.md +135 -0

- data/VERSION +1 -0

- data/faraday_dynamic_timeout.gemspec +33 -0

- data/gemfiles/faraday_1.gemfile +15 -0

- data/gemfiles/faraday_2.gemfile +15 -0

- data/lib/faraday_dynamic_timeout/bucket.rb +70 -0

- data/lib/faraday_dynamic_timeout/capacity_strategy.rb +79 -0

- data/lib/faraday_dynamic_timeout/counter.rb +43 -0

- data/lib/faraday_dynamic_timeout/middleware.rb +161 -0

- data/lib/faraday_dynamic_timeout/request_info.rb +43 -0

- data/lib/faraday_dynamic_timeout/strategy.rb +10 -0

- data/lib/faraday_dynamic_timeout.rb +27 -0

- metadata +101 -0

checksums.yaml

ADDED

```yaml
---
SHA256:
  metadata.gz: 8e0d5c3fe787914d19d470b70182719f5c4863106a5d9fc4854df38e219f1a5a
  data.tar.gz: 485e82cfdd0b9098801cffd08fad0426a763ec4a51c746b97a3a553c12281d2d
SHA512:
  metadata.gz: a5025c609b11ae25188e685a15dc44ae55499fea41f16bad4c1cf925b3465942cf42ea77d734eeff8af417f6df7610c8c4dbe5032169dac22100004d1348bda0
  data.tar.gz: 3443e7adc22b8bcc9c8b3adc2a25d24187b2f6b284e03429fcaabee8ff80299e976f8fe019cce1ffb3ff55a2020eb6b2be7b31cfe39c8773922327bb86fdc4de
```

data/Appraisals

ADDED

data/CHANGELOG.md

ADDED

```markdown
# Changelog
All notable changes to this project will be documented in this file.

The format is based on [Keep a Changelog](https://keepachangelog.com/en/1.0.0/),
and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0.html).

## 1.0.0

### Added

- Initial release
```

data/MIT-LICENSE.txt

ADDED

```
Copyright 2023 Brian Durand

Permission is hereby granted, free of charge, to any person obtaining
a copy of this software and associated documentation files (the
"Software"), to deal in the Software without restriction, including
without limitation the rights to use, copy, modify, merge, publish,
distribute, sublicense, and/or sell copies of the Software, and to
permit persons to whom the Software is furnished to do so, subject to
the following conditions:

The above copyright notice and this permission notice shall be
included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,
EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND
NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE
LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION
OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION
WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.
```

data/README.md

ADDED

|

@@ -0,0 +1,135 @@

|

|

|

1

|

+

# Faraday Dynamic Timeout Middleware

|

|

2

|

+

|

|

3

|

+

[](https://github.com/bdurand/faraday_dynamic_timeout/actions/workflows/continuous_integration.yml)

|

|

4

|

+

[](https://github.com/bdurand/faraday_dynamic_timeout/actions/workflows/regression_test.yml)

|

|

5

|

+

[](https://github.com/testdouble/standard)

|

|

6

|

+

[](https://badge.fury.io/rb/faraday_dynamic_timeout)

|

|

7

|

+

|

|

8

|

+

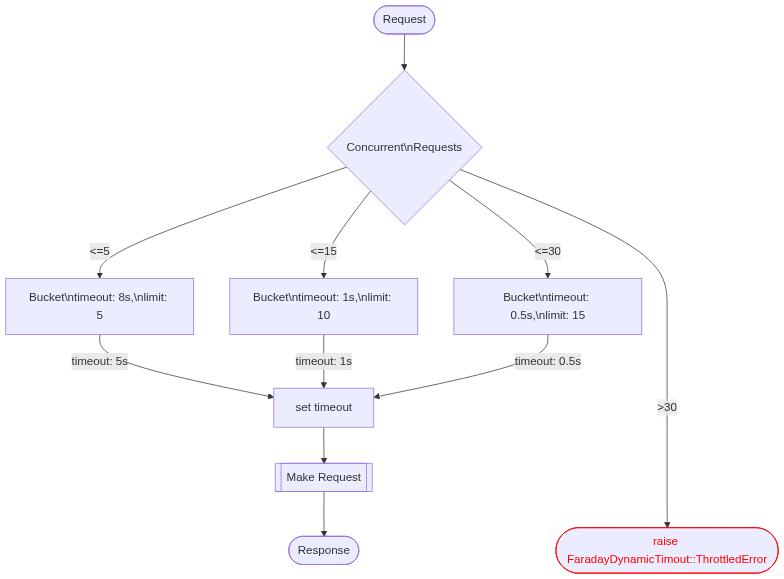

This gem provides Faraday middleware that allows you to set dynamic timeouts on HTTP requests based on the current number of requests being made to an endpoint. This allows you to set a long enough timeout to handle your slowest requests when the system is healthy, but then progressively set shorter timeouts as load increases so requests can fail fast in an unhealthy system.

It's always hard to choose the proper timeout for an HTTP request. If you set it too short, you will get errors on the occasional request that takes just a little longer than normal. If you set it too long, your system can become unresponsive when something goes wrong, because most of your application resources end up waiting on an external system that just isn't working.

This middleware works by letting you define "buckets" of timeouts. In a simple use case, you would set up a small bucket with a long timeout and a large bucket with a short timeout. Each concurrent request to a service occupies one slot in a single bucket: the bucket with the highest timeout that still has a free slot.

Under normal load, the long timeout will be used for all requests (if you've set the bucket limit high enough). As the number of concurrent requests increases (for example, when the external service starts responding more slowly), request timeouts are automatically reduced, because requests start falling over to the next bucket with a lower timeout. If the number of concurrent requests exceeds the combined limits of all the buckets, an exception is raised and the request is never sent.

This chart shows the basic logic:

[Flowchart of the bucket selection logic (Mermaid Live Editor)](https://mermaid-js.github.io/mermaid-live-editor/edit#pako:eNptk8tuwyAQRX8F0U0qOZWjKlJltZUSJ91102ZV2wuExwkKjxSwqijJvxcMVHnUq5m5Z4C5mAOmqgVc4I6rH7oh2qLVopbIfR_w3YOxoyoGzX2oz6p5T7dg61paJkD1tkBPJnMpZ4K5ZNoEcP4PODkHJ3kky3_I_GF6waZVl1orPao0YQbQG9GkJfvFXhLB6IoJ31qsNlpZy6Ed2HRuY_ccQruLtdpCcdfleUYVV3oIA7cKJ6gMWBRPE7d-J1uIZlSVT5JHTZMsMzslDXjPQpQ2jyAaj19RuQG6PZRK0l5rkH7qKJtT9MMTnj0-v0yPaHZbnbjy_Lb8mB9ReVkee-XVC8Po8Q4H_s_rqTmmsePVXeqTa7281P1dXRExGQY-8-3GyAFIbuEMC9CCsNb9kQfP1thuQECNCxe20JGe2xpnQRLMTzSjVmnjiY5wA1GTysKMs7UMrRw611fLk9uC9FZ97iXFhdU9ZLjftcTCgpG1JgIXYRUMLXPrvofXMTySRC4H5Q_cEfmlVGo8_QJywxkA)

This setup makes the system degrade gracefully and predictably. When the external system starts having issues, you will still send requests to it, but they will fail fast. Beyond a certain limit, they will fail immediately without a request even being attempted, which would most likely only have added to the load on the external system. This lets your system remain functional, albeit in a degraded state, and recover automatically when the external system recovers.

The middleware requires a Redis server, which it uses to count the number of concurrent requests being made to an endpoint across all processes.

## Usage

The middleware can be installed in your Faraday connection like this:

```ruby
require "faraday_dynamic_timeout"

connection = Faraday.new do |faraday|
  faraday.use(
    :dynamic_timeout,
    buckets: [
      {timeout: 8, limit: 5},
      {timeout: 1, limit: 10},
      {timeout: 0.5, limit: 20}
    ]
  )
end
```

In this example, each bucket's limit is counted separately, and requests fill the buckets starting from the longest timeout:

- The first 5 concurrent requests to an endpoint get an 8 second timeout.
- The next 10 (up to 15 in total) get a 1 second timeout.
- The next 20 (up to 35 in total) get a 0.5 second timeout.

While 35 requests are in flight, any additional request raises a `FaradayDynamicTimeout::ThrottledError` without being sent.
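You can check how requests spread across the buckets in this example with a small plain-Ruby model. This is a sketch, not the gem's implementation:

- `assign_timeout` and `BucketsFullError` are hypothetical names.
- An in-memory `Hash` stands in for the Redis-backed `Restrainer` limits the gem keeps per bucket.

Like the loop in `Middleware#execute_with_timeout`, the model tries buckets from the longest timeout down and only gives up when every bucket is full.

```ruby
# Hypothetical in-memory model of the bucket fall-through in Middleware#execute_with_timeout.
# A Hash of timeout => requests in flight stands in for the per-bucket Redis counters.
class BucketsFullError < StandardError; end

# Claim a slot in the first bucket (longest timeout first) that still has room and
# return its timeout. A negative limit means the bucket is unlimited.
def assign_timeout(buckets, in_flight)
  buckets.sort_by { |bucket| -bucket[:timeout] }.each do |bucket|
    timeout = bucket[:timeout]
    used = in_flight.fetch(timeout, 0)
    next if bucket[:limit] >= 0 && used >= bucket[:limit]

    in_flight[timeout] = used + 1
    return timeout
  end

  raise BucketsFullError, "all buckets are full"
end

buckets = [
  {timeout: 8, limit: 5},
  {timeout: 1, limit: 10},
  {timeout: 0.5, limit: 20}
]

# Simulate 35 requests that are all still in flight at the same time.
in_flight = {}
timeouts = Array.new(35) { assign_timeout(buckets, in_flight) }
puts "8s: #{timeouts.count(8)}, 1s: #{timeouts.count(1)}, 0.5s: #{timeouts.count(0.5)}"

begin
  assign_timeout(buckets, in_flight) # the 36th concurrent request
rescue BucketsFullError => e
  puts "request 36 rejected: #{e.message}"
end
```

The model never releases slots, so it only describes a moment when all of these requests are in flight at once. In the gem, a slot is freed as soon as its request finishes.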

### Configuration Options

- `:buckets` - An array of bucket configurations. Each bucket is a hash with two keys: `:timeout` and `:limit`. The `:timeout` value is the timeout, in seconds, used for a request that falls into that bucket. The `:limit` value is the maximum number of concurrent requests that can use that bucket. Requests always try the bucket with the highest timeout first, so the order of the array does not matter. If a bucket has a limit less than zero, it is considered unlimited, and requests will never fall over to the next bucket. This value can also be a `Proc` (or any object that responds to `call`), which is evaluated at runtime when each request is made.

- `:redis` - The Redis connection to use. This should be a `Redis` object or a `Proc` that returns a `Redis` object. If it is not provided, a default `Redis` connection is used (configured using environment variables). If the value is explicitly set to `nil`, the middleware passes all requests through without doing anything.

- `:name` - An optional name for the resource. By default, the scheme, host, and port of the request URL identify the resource. Each resource reports a separate count of concurrent requests and processes. You can use the `:name` option to group multiple resources from different hosts together.

- `:callback` - An optional callback that is called after each request. The callback can be a `Proc` or any object that responds to `call`. It is called with a `FaradayDynamicTimeout::RequestInfo` argument. You can use it to log the number of concurrent requests or to report metrics to a monitoring system, which is very useful for tuning the bucket settings. The number of concurrent requests is only tracked in Redis when a callback is configured.

- `:filter` - An optional `Proc` (or any object that responds to `call`) that is called with the Faraday request `env`. The middleware is only applied to requests for which it returns a truthy value. All other requests pass through unchanged.
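The `:buckets` option is normalized before use. The sketch below mimics, in plain Ruby, the rules the gem applies in its internal `Bucket.from_hashes`:

- Keys may be symbols or strings.
- Buckets with a non-positive timeout or a zero limit are dropped.
- Buckets that share a timeout are merged by adding their limits. A negative (unlimited) limit wins.
- The result is sorted by timeout.

`normalize_buckets` is a hypothetical helper that works on plain hashes. It is not part of the gem's API.

```ruby
# Hypothetical re-implementation of the normalization rules in Bucket.from_hashes,
# using plain hashes so it runs without the gem.
def normalize_buckets(configs)
  buckets = configs.map do |config|
    timeout = (config[:timeout] || config["timeout"]).to_f.round(3)
    limit = (config[:limit] || config["limit"]).to_i
    {timeout: timeout, limit: limit}
  end

  # A bucket is only valid with a positive timeout and a non-zero limit.
  valid = buckets.select { |bucket| bucket[:timeout].positive? && bucket[:limit] != 0 }

  merged = valid.group_by { |bucket| bucket[:timeout] }.map do |timeout, group|
    limits = group.map { |bucket| bucket[:limit] }
    # The first negative (unlimited) limit wins; otherwise the limits are added together.
    {timeout: timeout, limit: limits.find(&:negative?) || limits.sum}
  end

  merged.sort_by { |bucket| bucket[:timeout] }
end

config = [
  {"timeout" => 1, "limit" => 4},
  {timeout: 8, limit: 5},
  {timeout: 1, limit: 6},
  {timeout: 0.5, limit: 0}
]

# The two 1 second buckets merge into one with limit 10; the zero-limit bucket is dropped.
normalize_buckets(config).each { |bucket| puts "#{bucket[:timeout]}s => #{bucket[:limit]}" }
```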

### Capacity Strategy

You can use the `FaradayDynamicTimeout::CapacityStrategy` class to build a bucket configuration from the current capacity of your application rather than hard coding bucket limits. This is useful if your system scales up and down based on load. It estimates the total number of threads available in your application and uses that value to calculate each bucket's limit from the fraction given in its `:capacity` option. The `redis:` argument is required, because the strategy uses Redis to track active processes. Because the strategy responds to `call`, it can be passed directly as the middleware's `:buckets` option, and the limits are then recalculated on each request.

```ruby
capacity = FaradayDynamicTimeout::CapacityStrategy.new(
  buckets: [
    {timeout: 8, capacity: 0.05, limit: 3},
    {timeout: 1, capacity: 0.05},
    {timeout: 0.5, capacity: 0.10},
    {timeout: 0.2, capacity: 1.0}
  ],
  redis: Redis.new,
  threads_per_process: 4
)
```

In this example, each process is assumed to have 4 threads, and every capacity-based limit is rounded up to a whole number:

- The first bucket has a limit of 5% of the application threads, but never less than 3 (because of the hardcoded `:limit` option).
- The second bucket has a limit of 5% of the application threads.
- The third bucket has a limit of 10% of the application threads.
- The fourth bucket has unlimited capacity (100%) and serves all requests that exceed the limits of the previous buckets.
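The sketch below makes the arithmetic concrete by mirroring the private `capacity_limit` and `total_threads` rules in `CapacityStrategy` for the example configuration. It assumes, purely for illustration, that 10 processes have been seen recently, giving 10 × 4 = 40 threads. The method names copy the gem's private helpers, but these standalone versions are hypothetical.

```ruby
# Hypothetical mirror of CapacityStrategy's limit calculation.
# A capacity < 0 or >= 1.0 means "unlimited" (-1); otherwise the limit is
# ceil(capacity * total_threads), but never below an explicit :limit.
def capacity_limit(capacity, limit, threads_count)
  return limit unless capacity && (limit.nil? || limit >= 0)
  return -1 if capacity < 0 || capacity >= 1.0

  [limit, (capacity * threads_count).ceil].compact.max
end

# At least one process is always assumed, and a non-positive thread count becomes 1.
def total_threads(process_count, threads_per_process)
  threads_per_process = 1 if threads_per_process <= 0
  [process_count, 1].max * threads_per_process
end

buckets = [
  {timeout: 8, capacity: 0.05, limit: 3},
  {timeout: 1, capacity: 0.05},
  {timeout: 0.5, capacity: 0.10},
  {timeout: 0.2, capacity: 1.0}
]

threads = total_threads(10, 4) # assumed: 10 active processes x 4 threads = 40
limits = buckets.map { |bucket| capacity_limit(bucket[:capacity], bucket[:limit], threads) }
p limits # => [3, 2, 4, -1]
```

With only 40 threads, 5% works out to 2, so the explicit `limit: 3` is what keeps the first bucket at 3.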

The number of processes is estimated. Every time a process makes a request through the middleware, it is remembered for 60 seconds. Processes that have not made any requests through the middleware within that window are not counted. Processes that have been terminated are still included in the calculation for up to 60 seconds. You should still get a reasonably good estimate of the number of processes currently running. The estimate will be less accurate if your application is scaling up and down very quickly (e.g. during a deployment) or when it is not very active. In the deployment case the estimate will be high, so it should not cause any problems. In the low-activity case you aren't really worried about load, and the bucket limits will never be lower than 1. In any case, it's a good idea to set a minimum limit on the first bucket.
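The process estimate relies on the gem's `Counter` class:

- Each process id is stored in a Redis sorted set, scored by the time it was last seen (`ZADD`).
- When counting, entries older than the TTL are removed (`ZREMRANGEBYSCORE`) and are not counted.

The sketch below models that sliding window in memory so it runs without Redis. The `SlidingWindowCounter` class and its explicit `now:` arguments are assumptions for illustration. The ids follow the gem's `hostname:pid` format.

```ruby
# Hypothetical in-memory model of FaradayDynamicTimeout::Counter.
# A Hash of id => last-seen time plays the role of the Redis sorted set.
class SlidingWindowCounter
  def initialize(ttl: 60.0)
    @ttl = ttl.to_f
    @ttl = 60.0 if @ttl <= 0.0
    @entries = {}
  end

  # Like ZADD: record (or refresh) an id with the current time as its score.
  def track!(id, now:)
    @entries[id] = now
    id
  end

  # Like ZREMRANGEBYSCORE(-inf, now - ttl) followed by a count of what is left.
  def value(now:)
    @entries.delete_if { |_id, seen_at| seen_at <= now - @ttl }
    @entries.size
  end
end

counter = SlidingWindowCounter.new(ttl: 60)
counter.track!("web-1:101", now: 0)
counter.track!("web-1:102", now: 30)
counter.track!("web-2:201", now: 50)

puts counter.value(now: 55) # all three processes were seen within the last 60 seconds
puts counter.value(now: 70) # "web-1:101" (last seen at 0) has expired
```

This prints 3 and then 2. It shows why a terminated process lingers in the estimate for up to one TTL, and why a process only counts while it keeps making requests.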

### Full Example

This example configures the `opensearch` gem with this middleware, along with metrics tracking to a statsd server. The `:filter` option restricts the middleware to search requests.

```ruby
# Set up a redis connection to coordinate counting concurrent requests.
redis = Redis.new(url: ENV.fetch("REDIS_URL"))

# Set up a statsd client to report metrics with the DataDog extensions.
statsd = Statsd.new(ENV.fetch("STATSD_HOST"), ENV.fetch("STATSD_PORT"))

metrics_callback = ->(request_info) do
  batch = Statsd::Batch.new(statsd)
  batch.gauge("opensearch.concurrent_requests", request_info.request_count)
  batch.timing("opensearch.duration", (request_info.duration * 1000).round)
  batch.increment("opensearch.throttled") if request_info.throttled?
  batch.increment("opensearch.timed_out") if request_info.timed_out?
  batch.flush
end

client = OpenSearch::Client.new(host: "localhost", port: "9200") do |faraday|
  faraday.request :dynamic_timeout,
    buckets: [
      {timeout: 8, limit: 5},
      {timeout: 1, limit: 10},
      {timeout: 0.5, limit: 20}
    ],
    name: "opensearch",
    redis: redis,
    filter: ->(env) { env.url.path.end_with?("/_search") },
    callback: metrics_callback
end
```

## Installation

Add this line to your application's Gemfile:

```ruby
gem "faraday_dynamic_timeout"
```

And then execute:

```bash
$ bundle
```

Or install it yourself as:

```bash
$ gem install faraday_dynamic_timeout
```

## Contributing

Open a pull request on GitHub.

Please use the [standardrb](https://github.com/testdouble/standard) syntax and lint your code with `standardrb --fix` before submitting.

## License

The gem is available as open source under the terms of the [MIT License](https://opensource.org/licenses/MIT).

data/VERSION

ADDED

```
1.0.0
```

data/faraday_dynamic_timeout.gemspec

ADDED

```ruby
Gem::Specification.new do |spec|
  spec.name = "faraday_dynamic_timeout"
  spec.version = File.read(File.expand_path("VERSION", __dir__)).strip
  spec.authors = ["Brian Durand"]
  spec.email = ["bbdurand@gmail.com"]

  spec.summary = "Faraday middleware to dynamically set a request timeout based on the number of concurrent requests and throttle the number of requests that can be made."
  spec.homepage = "https://github.com/bdurand/faraday_dynamic_timeout"
  spec.license = "MIT"

  # Specify which files should be added to the gem when it is released.
  # The `git ls-files -z` loads the files in the RubyGem that have been added into git.
  ignore_files = %w[
    .
    Gemfile
    Gemfile.lock
    Rakefile
    bin/
    spec/
  ]
  spec.files = Dir.chdir(File.expand_path("..", __FILE__)) do
    `git ls-files -z`.split("\x0").reject { |f| ignore_files.any? { |path| f.start_with?(path) } }
  end

  spec.require_paths = ["lib"]

  spec.add_dependency "faraday"
  spec.add_dependency "restrainer", ">= 1.1.3"

  spec.add_development_dependency "bundler"

  spec.required_ruby_version = ">= 2.5"
end
```

data/gemfiles/faraday_1.gemfile

ADDED

```ruby
# This file was generated by Appraisal

source "https://rubygems.org"

gem "webmock"
gem "appraisal"
gem "dotenv"
gem "rake"
gem "rspec", "~> 3.10"
gem "standard", "~> 1.0"
gem "simplecov", "~> 0.21", require: false
gem "yard"
gem "faraday", "~> 1.0"

gemspec path: "../"
```

data/gemfiles/faraday_2.gemfile

ADDED

```ruby
# This file was generated by Appraisal

source "https://rubygems.org"

gem "webmock"
gem "appraisal"
gem "dotenv"
gem "rake"
gem "rspec", "~> 3.10"
gem "standard", "~> 1.0"
gem "simplecov", "~> 0.21", require: false
gem "yard"
gem "faraday", "~> 2.0"

gemspec path: "../"
```

data/lib/faraday_dynamic_timeout/bucket.rb

ADDED

```ruby
# frozen_string_literal: true

module FaradayDynamicTimeout
  # Internal class for storing bucket configuration.
  # @api private
  class Bucket
    attr_reader :timeout, :limit

    class << self
      def from_hashes(hashes)
        all_buckets = hashes.collect { |hash| new(timeout: fetch_indifferent_key(hash, :timeout), limit: fetch_indifferent_key(hash, :limit)) }
        grouped_buckets = all_buckets.select(&:valid?).group_by(&:timeout).values
        return [] if grouped_buckets.empty?

        unique_buckets = grouped_buckets.collect do |buckets|
          buckets.reduce do |merged, bucket|
            merged.nil? ? bucket : merged.merge(bucket)
          end
        end

        unique_buckets.sort_by(&:timeout)
      end

      private

      def fetch_indifferent_key(hash, key)
        hash[key] || hash[key.to_s]
      end
    end

    # @param timeout [Float] The timeout in seconds.
    # @param limit [Integer] The limit.
    def initialize(timeout:, limit: 0)
      @timeout = timeout.to_f.round(3)
      @limit = limit.to_i
    end

    # Return true if the bucket has no limit. A bucket has no limit if the limit is negative.
    # @return [Boolean] True if the bucket has no limit.
    def no_limit?
      limit < 0
    end

    # Return true if the bucket is valid. A bucket is valid if the timeout is positive and
    # the limit is non-zero.
    def valid?
      timeout.positive? && limit != 0
    end

    def ==(other)
      return false unless other.is_a?(self.class)

      timeout == other.timeout && limit == other.limit
    end

    def merge(bucket)
      combined_limit = if no_limit?
        limit
      elsif bucket.no_limit?
        bucket.limit
      else
        limit + bucket.limit
      end

      combined_timeout = [timeout, bucket.timeout].max

      self.class.new(timeout: combined_timeout, limit: combined_limit)
    end
  end
end
```

data/lib/faraday_dynamic_timeout/capacity_strategy.rb

ADDED

```ruby
# frozen_string_literal: true

module FaradayDynamicTimeout
  class CapacityStrategy
    def initialize(buckets:, redis:, name: nil, threads_per_process: 1)
      @config = buckets
      @redis = redis
      @name = (name || "default").to_s
      @threads_per_process = threads_per_process
    end

    def call
      process_count = count_processes
      threads_count = total_threads(process_count)

      buckets_config.collect do |bucket|
        timeout = fetch_indifferent_key(bucket, :timeout)&.to_f
        limit = fetch_indifferent_key(bucket, :limit)&.to_i
        capacity = fetch_indifferent_key(bucket, :capacity)&.to_f
        {timeout: timeout, limit: capacity_limit(capacity, limit, threads_count)}
      end
    end

    private

    def capacity_limit(capacity, limit, threads_count)
      if capacity && (limit.nil? || limit >= 0)
        if capacity < 0 || capacity >= 1.0
          limit = -1
        else
          capacity_limit = (capacity * threads_count).ceil
          limit = [limit, capacity_limit].compact.max
        end
      end

      limit
    end

    def count_processes
      process_counter = Counter.new(name: process_counter_name, redis: redis_client, ttl: 60)
      process_counter.track!(process_id)
      process_counter.value
    end

    def buckets_config
      if @config.respond_to?(:call)
        @config.call
      else
        @config
      end
    end

    def redis_client
      if @redis.is_a?(Proc)
        @redis.call
      else
        @redis
      end
    end

    def total_threads(process_count)
      threads_per_process = (@threads_per_process.respond_to?(:call) ? @threads_per_process.call : @threads_per_process).to_i
      threads_per_process = 1 if threads_per_process <= 0
      [process_count, 1].max * threads_per_process
    end

    def process_id
      "#{Socket.gethostname}:#{Process.pid}"
    end

    def process_counter_name
      "#{self.class.name}:#{@name}.processes"
    end

    def fetch_indifferent_key(hash, key)
      hash[key] || hash[key.to_s]
    end
  end
end
```

data/lib/faraday_dynamic_timeout/counter.rb

ADDED

```ruby
# frozen_string_literal: true

module FaradayDynamicTimeout
  class Counter
    def initialize(name:, redis:, ttl: 60.0)
      @ttl = ttl.to_f
      @ttl = 60.0 if @ttl <= 0.0
      @redis = redis
      @key = "FaradayDynamicTimeout:#{name}"
    end

    def execute
      id = track!
      begin
        yield
      ensure
        release!(id)
      end
    end

    def value
      total_count, expired_count = @redis.multi do |transaction|
        transaction.zcard(@key)
        transaction.zremrangebyscore(@key, "-inf", Time.now.to_f - @ttl)
      end

      total_count - expired_count
    end

    def track!(id = nil)
      id ||= SecureRandom.hex
      @redis.multi do |transaction|
        transaction.zadd(@key, Time.now.to_f, id)
        transaction.pexpire(@key, (@ttl * 1000).round)
      end
      id
    end

    def release!(id)
      @redis.zrem(@key, id)
    end
  end
end
```

data/lib/faraday_dynamic_timeout/middleware.rb

ADDED

```ruby
# frozen_string_literal: true

module FaradayDynamicTimeout
  class Middleware < Faraday::Middleware
    def initialize(*)
      super

      @redis_client = option(:redis)
      if @redis_client.nil? && !(options.include?(:redis) || options.include?("redis"))
        @redis_client = Redis.new
      end

      @memoized_buckets = []
      @mutex = Mutex.new
    end

    def call(env)
      buckets = sorted_buckets
      redis = redis_client
      return app.call(env) if !enabled?(env) || buckets.empty? || redis.nil?

      error = nil
      bucket_timeout = nil
      callback = option(:callback)
      start_time = monotonic_time if callback

      count_request(env.url, redis, buckets, callback) do |request_count|
        execute_with_timeout(env.url, buckets, request_count, redis) do |timeout|
          bucket_timeout = timeout
          set_timeout(env.request, timeout) if timeout

          # Resetting the start time to more accurately reflect the time spent in the request.
          start_time = monotonic_time if callback
          app.call(env)
        end
      rescue => e
        error = e
        raise
      ensure
        if callback
          duration = monotonic_time - start_time
          request_info = RequestInfo.new(env: env, duration: duration, timeout: bucket_timeout, request_count: request_count, error: error)
          callback.call(request_info)
        end
      end
    end

    private

    # Return the valid buckets sorted by timeout.
    # @return [Array<Bucket>] The sorted buckets.
    # @api private
    def sorted_buckets
      config = option(:buckets)
      config = config.call if config.respond_to?(:call)
      config = Array(config)
      memoized_config, memoized_buckets = @memoized_buckets

      if config == memoized_config
        memoized_buckets
      else
        duplicated_config = @mutex.synchronize { config.collect(&:dup) }
        buckets = Bucket.from_hashes(duplicated_config)
        @memoized_buckets = [duplicated_config, buckets]
        buckets
      end
    end

    def enabled?(env)
      filter = option(:filter)
      if filter
        filter.call(env)
      else
        true
      end
    end

    def execute_with_timeout(uri, buckets, request_count, redis)
      buckets = buckets.dup
      total_requests = 0

      while (bucket = buckets.pop)
        if bucket.no_limit?
          retval = yield(bucket.timeout)
          break
        else
          restrainer = Restrainer.new(restrainer_name(uri, bucket.timeout), limit: bucket.limit, timeout: bucket.timeout, redis: redis)
          begin
            retval = restrainer.throttle { yield(bucket.timeout) }
            break
          rescue Restrainer::ThrottledError
            total_requests += bucket.limit
            if buckets.empty?
              # Since request_count is a snapshot before the request was started it is subject to
              # race conditions, so we'll make sure to report a higher number if we calculated one.
              request_count = [request_count, total_requests + 1].max
              raise ThrottledError.new("Request to #{base_url(uri)} aborted due to #{request_count} concurrent requests", request_count: request_count)
            end
          end
        end
      end

      retval
    end

    def set_timeout(request, timeout)
      request.timeout = timeout
      request.open_timeout = nil
      request.write_timeout = nil
      request.read_timeout = nil
    end

    # Track how many requests are currently being executed only if a callback has been configured.
    def count_request(uri, redis, buckets, callback)
      if callback
        ttl = buckets.last.timeout
        ttl = 60 if ttl <= 0
        request_counter = Counter.new(name: request_counter_name(uri), redis: redis, ttl: ttl)
        request_counter.execute do
          yield request_counter.value
        end
      else
        yield 1
      end
    end

    def request_counter_name(uri)
      "#{redis_key_namespace(uri)}.requests"
    end

    def restrainer_name(uri, timeout)
      "#{redis_key_namespace(uri)}.#{timeout}"
    end

    def redis_key_namespace(uri)
      name = option(:name).to_s
      name = base_url(uri) if name.empty?
      "FaradayDynamicTimeout:#{name}"
    end

    def base_url(uri)
      url = "#{uri.scheme}://#{uri.host.downcase}"
      url = "#{url}:#{uri.port}" unless uri.port == uri.default_port
      url
    end

    def redis_client
      redis = option(:redis) || @redis_client
      redis = redis.call if redis.is_a?(Proc)
      redis
    end

    def monotonic_time
      Process.clock_gettime(Process::CLOCK_MONOTONIC)
    end

    def option(key)
      options[key] || options[key.to_s]
    end
  end
end
```

|

|

data/lib/faraday_dynamic_timeout/request_info.rb

ADDED

@@ -0,0 +1,43 @@
+# frozen_string_literal: true
+
+module FaradayDynamicTimeout
+  class RequestInfo
+    attr_reader :env, :duration, :timeout, :error
+
+    def initialize(env:, duration:, timeout:, request_count:, error: nil)
+      @env = env
+      @duration = duration
+      @timeout = timeout
+      @request_count = request_count
+      @error = error
+    end
+
+    def http_method
+      env.method
+    end
+
+    def uri
+      env.url
+    end
+
+    def status
+      env.status
+    end
+
+    def request_count
+      throttled? ? error.request_count : @request_count
+    end
+
+    def throttled?
+      @error.is_a?(ThrottledError)
+    end
+
+    def timed_out?
+      @error.is_a?(Faraday::TimeoutError)
+    end
+
+    def error?
+      !!@error
+    end
+  end
+end
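`RequestInfo` wraps the Faraday env together with the measured duration and the timeout that was applied; when the request was throttled, `request_count` is read from the `ThrottledError` rather than from the value the middleware captured. Assuming the middleware hands one of these objects to its callback (`count_request` only tracks concurrency when a callback is configured), a callback might summarize each request roughly as sketched here. `StubInfo` is a hypothetical stand-in exposing the same public methods so the sketch runs without Faraday, and `describe_request` is likewise illustrative.

```ruby
require "uri"

# Hypothetical stand-in exposing the same public methods as
# FaradayDynamicTimeout::RequestInfo, so the sketch runs without Faraday.
# The error object is replaced by an :outcome symbol for brevity.
StubInfo = Struct.new(:http_method, :uri, :status, :duration, :timeout,
                      :request_count, :outcome, keyword_init: true) do
  def throttled?
    outcome == :throttled
  end

  def timed_out?
    outcome == :timeout
  end

  def error?
    !outcome.nil?
  end
end

# Hypothetical summary a request callback might log; it calls only
# methods that RequestInfo also defines.
def describe_request(info)
  result = if info.throttled?
             "throttled"
           elsif info.timed_out?
             "timed out"
           elsif info.error?
             "error"
           else
             "status #{info.status}"
           end
  format("%s %s %s in %.3fs (timeout %.1fs, %d in flight)",
         info.http_method.to_s.upcase, info.uri, result,
         info.duration, info.timeout, info.request_count)
end

ok = StubInfo.new(http_method: :get, uri: URI("https://api.example.com/items"),
                  status: 200, duration: 0.125, timeout: 2.0, request_count: 3)
puts describe_request(ok)
# => GET https://api.example.com/items status 200 in 0.125s (timeout 2.0s, 3 in flight)
```

Checking `throttled?` and `timed_out?` before the generic `error?` matters here, since both of those cases also count as errors.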

data/lib/faraday_dynamic_timeout.rb

ADDED

@@ -0,0 +1,27 @@
+# frozen_string_literal: true
+
+require "faraday"
+require "restrainer"
+require "socket"
+
+require_relative "faraday_dynamic_timeout/bucket"
+require_relative "faraday_dynamic_timeout/capacity_strategy"
+require_relative "faraday_dynamic_timeout/counter"
+require_relative "faraday_dynamic_timeout/middleware"
+require_relative "faraday_dynamic_timeout/request_info"
+
+module FaradayDynamicTimeout
+  VERSION = File.read(File.expand_path("../VERSION", __dir__)).strip
+
+  # Error raised when a request is not executed due to too many concurrent requests.
+  class ThrottledError < Restrainer::ThrottledError
+    attr_reader :request_count
+
+    def initialize(message, request_count:)
+      super(message)
+      @request_count = request_count
+    end
+  end
+end
+
+Faraday::Middleware.register_middleware(dynamic_timeout: FaradayDynamicTimeout::Middleware)
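Because `FaradayDynamicTimeout::ThrottledError` subclasses `Restrainer::ThrottledError`, a `rescue` clause written against the Restrainer error should also catch throttled Faraday requests, while the subclass additionally carries the `request_count` passed to its constructor. The sketch illustrates that pattern with a hypothetical stand-in for `Restrainer::ThrottledError` (so it runs without the restrainer gem) and a hypothetical `with_throttle_fallback` helper that returns a fallback value instead of propagating the error.

```ruby
# Hypothetical stand-in for Restrainer::ThrottledError so the sketch runs
# without the restrainer gem installed.
module Restrainer
  class ThrottledError < StandardError; end
end

module ThrottleSketch
  # Same shape as FaradayDynamicTimeout::ThrottledError.
  class ThrottledError < Restrainer::ThrottledError
    attr_reader :request_count

    def initialize(message, request_count:)
      super(message)
      @request_count = request_count
    end
  end

  # Hypothetical helper: run the block and return `fallback` instead of
  # raising when the request was throttled. Rescuing the Restrainer base
  # class also covers the subclass.
  def self.with_throttle_fallback(fallback)
    yield
  rescue Restrainer::ThrottledError => e
    count = e.respond_to?(:request_count) ? e.request_count : nil
    warn "throttled with #{count.inspect} requests in flight: #{e.message}"
    fallback
  end
end

result = ThrottleSketch.with_throttle_fallback(:cached) do
  raise ThrottleSketch::ThrottledError.new("too many requests", request_count: 12)
end
p result # => :cached
```

Rescuing the narrower `FaradayDynamicTimeout::ThrottledError` instead would limit the fallback to throttled HTTP requests and leave other Restrainer-guarded code paths untouched.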

metadata

ADDED

@@ -0,0 +1,101 @@
+--- !ruby/object:Gem::Specification
+name: faraday_dynamic_timeout
+version: !ruby/object:Gem::Version
+  version: 1.0.0
+platform: ruby
+authors:
+- Brian Durand
+autorequire:
+bindir: bin
+cert_chain: []
+date: 2023-12-29 00:00:00.000000000 Z
+dependencies:
+- !ruby/object:Gem::Dependency
+  name: faraday
+  requirement: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: '0'
+  type: :runtime
+  prerelease: false
+  version_requirements: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: '0'
+- !ruby/object:Gem::Dependency
+  name: restrainer
+  requirement: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: 1.1.3
+  type: :runtime
+  prerelease: false
+  version_requirements: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: 1.1.3
+- !ruby/object:Gem::Dependency
+  name: bundler
+  requirement: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: '0'
+  type: :development
+  prerelease: false
+  version_requirements: !ruby/object:Gem::Requirement
+    requirements:
+    - - ">="
+      - !ruby/object:Gem::Version
+        version: '0'
+description:
+email:
+- bbdurand@gmail.com
+executables: []
+extensions: []
+extra_rdoc_files: []
+files:
+- Appraisals
+- CHANGELOG.md
+- MIT-LICENSE.txt
+- README.md
+- VERSION
+- faraday_dynamic_timeout.gemspec
+- gemfiles/faraday_1.gemfile
+- gemfiles/faraday_2.gemfile
+- lib/faraday_dynamic_timeout.rb
+- lib/faraday_dynamic_timeout/bucket.rb
+- lib/faraday_dynamic_timeout/capacity_strategy.rb
+- lib/faraday_dynamic_timeout/counter.rb
+- lib/faraday_dynamic_timeout/middleware.rb
+- lib/faraday_dynamic_timeout/request_info.rb
+- lib/faraday_dynamic_timeout/strategy.rb
+homepage: https://github.com/bdurand/faraday_dynamic_timeout
+licenses:
+- MIT
+metadata: {}
+post_install_message:
+rdoc_options: []
+require_paths:
+- lib
+required_ruby_version: !ruby/object:Gem::Requirement
+  requirements:
+  - - ">="
+    - !ruby/object:Gem::Version
+      version: '2.5'
+required_rubygems_version: !ruby/object:Gem::Requirement
+  requirements:
+  - - ">="
+    - !ruby/object:Gem::Version
+      version: '0'
+requirements: []
+rubygems_version: 3.4.12
+signing_key:
+specification_version: 4
+summary: Faraday middleware to dynamically set a request timeout based on the number
+  of concurrent requests and throttle the number of requests that can be made.
+test_files: []
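The metadata declares two runtime dependencies, `faraday` (any version; the `gemfiles/faraday_1.gemfile` and `gemfiles/faraday_2.gemfile` entries suggest both major versions are exercised) and `restrainer >= 1.1.3`, and requires Ruby 2.5 or newer. Bundler enforces these constraints at install time. The sketch simply evaluates them with RubyGems' `Gem::Requirement#satisfied_by?` against a hypothetical hash of installed versions, which can help when auditing an existing environment by hand; `CONSTRAINTS` and `unmet_constraints` are illustrative names, not part of the gem.

```ruby
require "rubygems"

# Constraints copied from the gem metadata: Ruby >= 2.5,
# faraday >= 0 (any version), restrainer >= 1.1.3.
CONSTRAINTS = {
  "ruby" => Gem::Requirement.new(">= 2.5"),
  "faraday" => Gem::Requirement.new(">= 0"),
  "restrainer" => Gem::Requirement.new(">= 1.1.3")
}.freeze

# Returns the names whose version in `installed` is missing or does not
# satisfy its constraint. `installed` is hypothetical input such as
# {"ruby" => RUBY_VERSION, "faraday" => "2.7.10"}.
def unmet_constraints(installed)
  CONSTRAINTS.reject do |name, requirement|
    version = installed[name]
    version && requirement.satisfied_by?(Gem::Version.new(version))
  end.keys
end

p unmet_constraints("ruby" => "3.2.2", "faraday" => "2.7.10", "restrainer" => "1.1.2")
# => ["restrainer"]
```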