dorothy2 0.0.1 → 0.0.2


- data/README.md +16 -20

- data/bin/dorothy_start +4 -2

- data/bin/dparser_start +12 -8

- data/bin/dparser_stop +8 -3

- data/dorothy2.gemspec +5 -2

- data/lib/doroParser.rb +254 -282

- data/lib/dorothy2.rb +7 -10

- data/lib/dorothy2/MAM.rb +2 -2

- data/lib/dorothy2/do-init.rb +11 -0

- data/lib/dorothy2/do-parsers.rb +3 -3

- data/lib/dorothy2/do-utils.rb +1 -1

- data/lib/dorothy2/environment.rb +0 -4

- data/lib/dorothy2/version.rb +1 -1

- data/share/img/Dorothy-Basic.pdf +0 -0

- metadata +53 -4

data/README.md

CHANGED

|

@@ -8,8 +8,8 @@ A malware/botnet analysis framework written in Ruby.

|

|

|

8

8

|

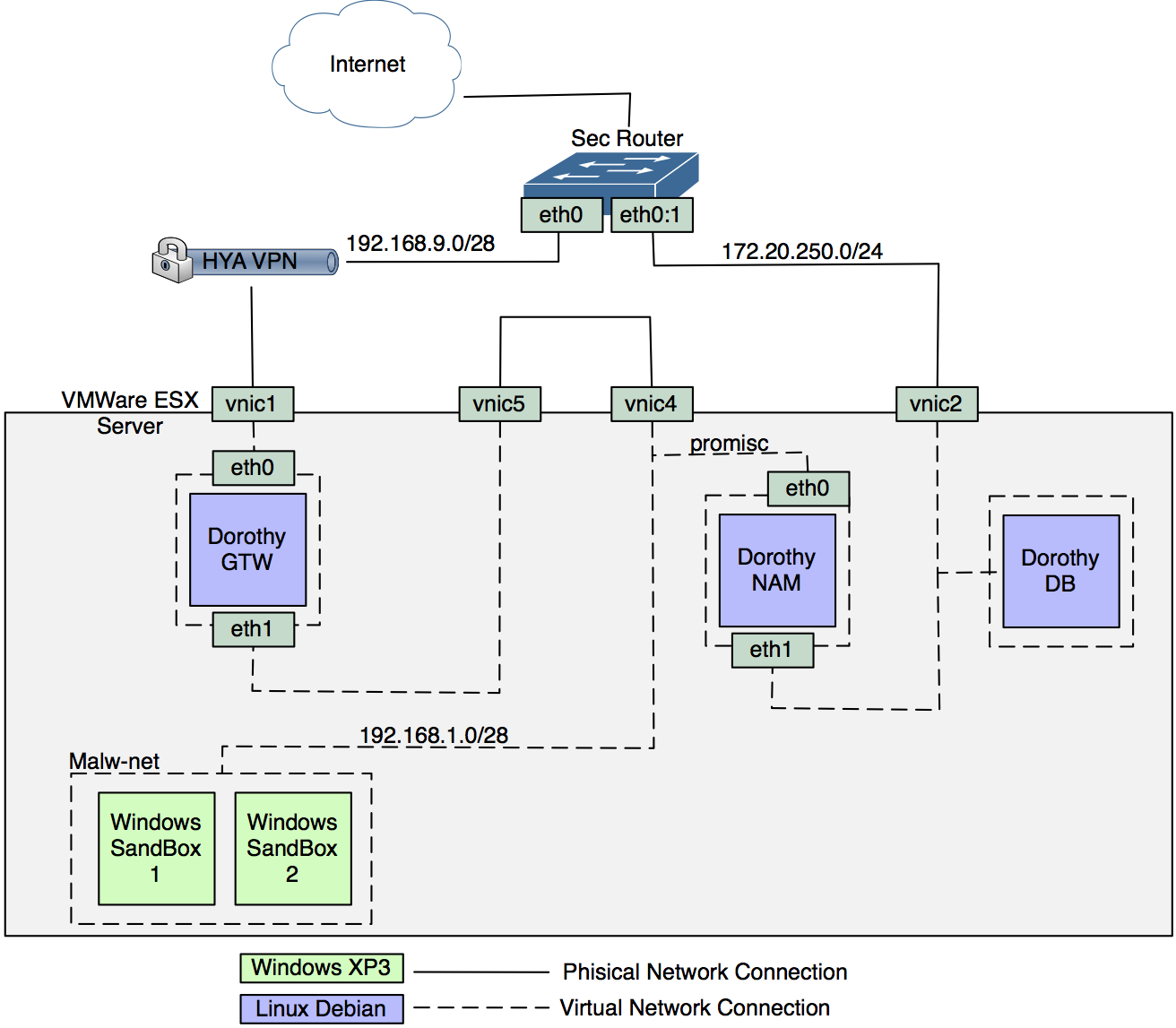

Dorothy2 is a framework created for mass malware analysis. Currently, it is mainly based on analyzing the network behavior of a virtual machine where a suspicious executable was executed.

|

|

9

9

|

However, static binary analysis and system behavior analysis will be shortly introduced in the next version.

|

|

10

10

|

|

|

11

|

-

Dorothy2 is a continuation of my degree's final project (Dorothy: inside the Storm

|

|

12

|

-

The main framework's structure remained almost the same, and it has been fully detailed in my degree's final project or in this short paper

|

|

11

|

+

Dorothy2 is a continuation of my degree's final project ([Dorothy: inside the Storm](https://www.honeynet.it/wp-content/uploads/Dorothy/The_Dorothy_Project.pdf) ) that I presented on Feb 2009.

|

|

12

|

+

The main framework's structure remained almost the same, and it has been fully detailed in my degree's final project or in this short [paper](http://www.honeynet.it/wp-content/uploads/Dorothy/EC2ND-Dorothy.pdf). More information about the whole project can be found on the Italian Honeyproject [website](http://www.honeynet.it).

|

|

13

13

|

|

|

14

14

|

|

|

15

15

|

The framework is manly composed by four big elements that can be even executed separately:

|

|

@@ -19,25 +19,19 @@ The framework is manly composed by four big elements that can be even executed s

|

|

|

19

19

|

* The Webgui (Coded in Rails by Andrea Valerio, and not yet included in this gem)

|

|

20

20

|

* The Java Dorothy Drone (Mainly coded by Patrizia Martemucci and Domenico Chiarito, but not part of this gem and not publicly available.)

|

|

21

21

|

|

|

22

|

-

The first three modules are (or will be soon) publicly released under GPL 2/3 license as tribute to the the Honeynet Project Alliance

|

|

22

|

+

The first three modules are (or will be soon) publicly released under GPL 2/3 license as tribute to the the [Honeynet Project Alliance](http://www.honeynet.org).

|

|

23

23

|

All the information generated by the framework - i.e. binary info, timestamps, dissected network analysis - are stored into a postgres DB (Dorothive) in order to be used for further analysis.

|

|

24

|

-

A no-SQL database (CouchDB) is also used to mass strore all the traffic dumps thanks to the pcapr/xtractr

|

|

24

|

+

A no-SQL database (CouchDB) is also used to mass strore all the traffic dumps thanks to the [pcapr/xtractr](https://code.google.com/p/pcapr/wiki/Xtractr) technology.

|

|

25

25

|

|

|

26

26

|

I started to code this project in late 2009 while learning Ruby at the same time. Since then, I´ve been changing/improving it as long as my Ruby coding skills were improving. Because of that, you may find some parts of code not-really-tidy :)

|

|

27

27

|

|

|

28

|

-

[1] https://www.honeynet.it/wp-content/uploads/Dorothy/The_Dorothy_Project.pdf

|

|

29

|

-

[2] http://www.honeynet.it/wp-content/uploads/Dorothy/EC2ND-Dorothy.pdf

|

|

30

|

-

[3] http://www.honeynet.it

|

|

31

|

-

[4] http://www.honeynet.org

|

|

32

|

-

[5] https://code.google.com/p/pcapr/wiki/Xtractr

|

|

33

|

-

|

|

34

28

|

##Requirements

|

|

35

29

|

|

|

36

30

|

>WARNING:

|

|

37

31

|

The current version of Dorothy only utilizes VMWare ESX5 as its Virtual Sandbox Module (VSM). Thus, the free version of ESXi is not supported due to its limitations in using the

|

|

38

32

|

vSphere 5 API.

|

|

39

33

|

However, the overall framework could be easily customized in order to use another virtualization engine. Dorothy2 is

|

|

40

|

-

very modular,and any customization or modification is very welcome.

|

|

34

|

+

very [modular](http://www.honeynet.it/wp-content/uploads/The_big_picture.pdf),and any customization or modification is very welcome.

|

|

41

35

|

|

|

42

36

|

Dorothy needs the following software (not expressly in the same host) in order to be executed:

|

|

43

37

|

|

|

@@ -77,21 +71,23 @@ It is recommended to follow this step2step process:

|

|

|

77

71

|

* After configuring everything on the Guest OS, create a snapshot of the sandbox VM from vSphere console. Dorothy will use it when reverting the VM after a binary execution.

|

|

78

72

|

|

|

79

73

|

3. Configure the unix VM dedicated to the NAM

|

|

80

|

-

|

|

74

|

+

* Configure the NIC on the virtual machine that will be used for the network sniffing purpose (NAM).

|

|

81

75

|

>The vSwitch where the vNIC resides must allow the promisc mode, to enable it from vSphere:

|

|

82

76

|

|

|

83

77

|

>Configuration->Networking->Proprieties on the vistualSwitch used for the analysis->Double click on the virtual network used for the analysis->Securiry->Tick "Promiscuous Mode", then select "Accept" from the list menu.

|

|

84

78

|

|

|

85

|

-

* Install tcpdump and sudo

|

|

86

79

|

|

|

87

|

-

|

|

80

|

+

* Install tcpdump and sudo

|

|

81

|

+

|

|

82

|

+

#apt-get install tcpdump sudo

|

|

83

|

+

|

|

84

|

+

* Create a dedicated user for dorothy (e.g. "dorothy")

|

|

88

85

|

|

|

89

|

-

|

|

86

|

+

#useradd dorothy

|

|

90

87

|

|

|

91

|

-

|

|

92

|

-

* Add dorothy's user permission to execute/kill tcpdump to the sudoers file:

|

|

88

|

+

* Add dorothy's user permission to execute/kill tcpdump to the sudoers file:

|

|

93

89

|

|

|

94

|

-

|

|

90

|

+

#visudo

|

|

95

91

|

add the following line:

|

|

96

92

|

dorothy ALL = NOPASSWD: /usr/sbin/tcpdump, /bin/kill

|

|

97

93

|

|

|

@@ -100,13 +96,13 @@ It is recommended to follow this step2step process:

|

|

|

100

96

|

> In the following example, the Dorothy gem is installed in the same host where Dorothive (the DB) resides.

|

|

101

97

|

> This setup is strongly recommended

|

|

102

98

|

|

|

103

|

-

>

|

|

104

100

|

|

|

105

101

|

2. Advanced setup

|

|

106

102

|

> This setup is recommended if Dorothy is going to be installed in a Corporate environment.

|

|

107

103

|

> By leveraging a private VPN, all the sandbox traffics exits from the Corporate network with an external IP addresses.

|

|

108

104

|

|

|

109

|

-

>

|

|

110

106

|

|

|

111

107

|

### 2. Install the required software

|

|

112

108

|

|

data/bin/dorothy_start

CHANGED

|

@@ -142,7 +142,7 @@ if Util.exists?(sfile)

|

|

|

142

142

|

end

|

|

143

143

|

end

|

|

144

144

|

else

|

|

145

|

-

puts "[WARNING]".red + " A source file doesn't exist, please crate one into #{home}/etc. See the example file in #{HOME}/etc/

|

|

145

|

+

puts "[WARNING]".red + " A source file doesn't exist, please crate one into #{home}/etc. See the example file in #{HOME}/etc/sources.yml.example"

|

|

146

146

|

exit(0)

|

|

147

147

|

end

|

|

148

148

|

|

|

@@ -169,8 +169,10 @@ db.close

|

|

|

169

169

|

begin

|

|

170

170

|

Dorothy.start sources[opts[:source]], daemon

|

|

171

171

|

rescue => e

|

|

172

|

+

puts "[Dorothy]".yellow + " An error occurred: ".red + $!

|

|

173

|

+

puts "[Dorothy]".yellow + " For more information check the logfile" + $! if daemon

|

|

172

174

|

LOGGER.error "Dorothy", "An error occurred: " + $!

|

|

173

175

|

LOGGER.debug "Dorothy", "#{e.inspect} --BACKTRACE: #{e.backtrace}"

|

|

174

|

-

LOGGER.

|

|

176

|

+

LOGGER.info "Dorothy", "Dorothy has been stopped"

|

|

175

177

|

end

|

|

176

178

|

|
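The rescue block added in the hunk above appends `$!` (the exception object itself) to a String. On Ruby 1.9 and later, `String#+` raises a `TypeError` when handed an exception, so the pattern is only safe where an implicit conversion exists. A minimal sketch of the same reporting flow with explicit conversion follows; `run_with_report` is a hypothetical helper and the stdlib `Logger` stands in for the gem's `DoroLogger`, neither is part of dorothy2.

```ruby
require 'logger'
require 'stringio'

# Hypothetical helper (not part of dorothy2): run a block and report any
# StandardError using explicit string conversion.
def run_with_report(logger, out = $stdout)
  yield
  true
rescue => e
  # e.message is always a String, so concatenation cannot raise TypeError.
  out.puts "[Dorothy] An error occurred: " + e.message
  logger.error("An error occurred: #{e.message}")
  # Array(...) guards against a nil backtrace.
  logger.debug("#{e.inspect} --BACKTRACE: #{Array(e.backtrace).join("\n")}")
  false
end

log_io  = StringIO.new   # stands in for the log file
console = StringIO.new   # stands in for STDOUT
logger  = Logger.new(log_io)

ok = run_with_report(logger, console) { raise "sources file missing" }
```

Here `ok` is `false`, the console receives the short message, and the log receives both the error line and the backtrace line.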

data/bin/dparser_start

CHANGED

|

@@ -9,8 +9,9 @@ require 'trollop'

|

|

|

9

9

|

require 'dorothy2'

|

|

10

10

|

require 'doroParser'

|

|

11

11

|

|

|

12

|

-

load '../lib/doroParser'

|

|

12

|

+

#load '../lib/doroParser.rb'

|

|

13

13

|

|

|

14

|

+

include Dorothy

|

|

14

15

|

include DoroParser

|

|

15

16

|

|

|

16

17

|

|

|

@@ -45,22 +46,25 @@ VERBOSE = opts[:verbose] ? true : false

|

|

|

45

46

|

daemon = opts[:daemon] ? true : false

|

|

46

47

|

|

|

47

48

|

|

|

49

|

+

|

|

48

50

|

conf = "#{File.expand_path("~")}/.dorothy.yml"

|

|

49

51

|

DoroSettings.load!(conf)

|

|

50

52

|

|

|

51

53

|

#Logging

|

|

52

54

|

logout = (daemon ? DoroSettings.env[:logfile_parser] : STDOUT)

|

|

55

|

+

LOGGER_PARSER = DoroLogger.new(logout, DoroSettings.env[:logage])

|

|

56

|

+

LOGGER_PARSER.sev_threshold = DoroSettings.env[:loglevel]

|

|

57

|

+

|

|

53

58

|

LOGGER = DoroLogger.new(logout, DoroSettings.env[:logage])

|

|

54

59

|

LOGGER.sev_threshold = DoroSettings.env[:loglevel]

|

|

55

60

|

|

|

56

61

|

begin

|

|

57

|

-

DoroParser.start

|

|

62

|

+

DoroParser.start(daemon)

|

|

58

63

|

rescue => e

|

|

59

|

-

|

|

60

|

-

|

|

61

|

-

|

|

64

|

+

puts "[PARSER]".yellow + " An error occurred: ".red + $!

|

|

65

|

+

puts "[PARSER]".yellow + " For more information check the logfile" + $! if daemon

|

|

66

|

+

LOGGER_PARSER.error "Parser", "An error occurred: " + $!

|

|

67

|

+

LOGGER_PARSER.debug "Parser", "#{e.inspect} --BACKTRACE: #{e.backtrace}"

|

|

68

|

+

LOGGER_PARSER.info "Parser", "Dorothy-Parser has been stopped"

|

|

62

69

|

end

|

|

63

70

|

|

|

64

|

-

|

|

65

|

-

|

|

66

|

-

|
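The logging setup in this file picks the log destination from the `daemon` flag and then applies a severity threshold. The sketch below reproduces that selection with the stdlib `Logger`, assuming `DoroLogger` behaves like it; the `settings` hash and `build_logger` are hypothetical stand-ins for `DoroSettings.env` and the inline code, and the rotation argument (`logage`) is left out because the example writes to an in-memory buffer.

```ruby
require 'logger'
require 'stringio'

# Hypothetical stand-in for the values the gem reads from ~/.dorothy.yml.
settings = { :logfile_parser => StringIO.new, :loglevel => Logger::INFO }

# Daemon mode logs to the configured destination, interactive mode to STDOUT.
def build_logger(daemon, settings)
  logout = daemon ? settings[:logfile_parser] : $stdout
  logger = Logger.new(logout)
  logger.level = settings[:loglevel]   # sev_threshold= is an alias for level=
  logger
end

parser_logger = build_logger(true, settings)
parser_logger.debug("hidden at INFO threshold")
parser_logger.info("Dorothy-Parser has been stopped")
```

With the threshold at `INFO`, only the second message reaches the buffer.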

data/bin/dparser_stop

CHANGED

|

@@ -10,14 +10,19 @@ require 'trollop'

|

|

|

10

10

|

require 'dorothy2'

|

|

11

11

|

require 'doroParser'

|

|

12

12

|

|

|

13

|

-

#load '../lib/doroParser'

|

|

13

|

+

#load '../lib/doroParser.rb'

|

|

14

14

|

|

|

15

|

+

include Dorothy

|

|

15

16

|

include DoroParser

|

|

16

17

|

|

|

18

|

+

conf = "#{File.expand_path("~")}/.dorothy.yml"

|

|

19

|

+

DoroSettings.load!(conf)

|

|

20

|

+

|

|

17

21

|

|

|

18

22

|

#Logging

|

|

19

|

-

LOGGER_PARSER = DoroLogger.new(

|

|

20

|

-

|

|

23

|

+

LOGGER_PARSER = DoroLogger.new(STDOUT, 'weekly')

|

|

24

|

+

|

|

25

|

+

LOGGER = DoroLogger.new(STDOUT, 'weekly')

|

|

21

26

|

|

|

22

27

|

DoroParser.stop

|

|

23

28

|

|

data/dorothy2.gemspec

CHANGED

|

@@ -11,7 +11,6 @@ Gem::Specification.new do |gem|

|

|

|

11

11

|

gem.description = %q{A malware/botnet analysis framework written in Ruby.}

|

|

12

12

|

gem.summary = %q{More info at http://www.honeynet.it}

|

|

13

13

|

gem.homepage = "https://github.com/m4rco-/dorothy2"

|

|

14

|

-

|

|

15

14

|

gem.files = `git ls-files`.split($/)

|

|

16

15

|

gem.executables = gem.files.grep(%r{^bin/}).map{ |f| File.basename(f) }

|

|

17

16

|

gem.test_files = gem.files.grep(%r{^(test|spec|features)/})

|

|

@@ -26,5 +25,9 @@ Gem::Specification.new do |gem|

|

|

|

26

25

|

gem.add_dependency(%q<virustotal>, [">= 2.0.0"])

|

|

27

26

|

gem.add_dependency(%q<rbvmomi>, [">= 1.3.0"])

|

|

28

27

|

gem.add_dependency(%q<ruby-filemagic>, [">= 0.4.2"])

|

|

29

|

-

|

|

28

|

+

#for dparser

|

|

29

|

+

gem.add_dependency(%q<net-dns>, [">= 0.8.0"])

|

|

30

|

+

gem.add_dependency(%q<geoip>, [">= 1.2.1"])

|

|

31

|

+

gem.add_dependency(%q<tmail>, [">= 1.2.7.1"])

|

|

30

32

|

end

|

|

33

|

+

|
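The three dependencies added for dparser all use open-ended `>=` constraints. As an illustration of what such a constraint accepts, the sketch below evaluates two of them with RubyGems' own `Gem::Requirement` and `Gem::Version` classes; the candidate version numbers are made up for the example.

```ruby
require 'rubygems'

# Constraints copied from the gemspec hunk above.
net_dns = Gem::Requirement.new(">= 0.8.0")
tmail   = Gem::Requirement.new(">= 1.2.7.1")

# Candidate versions are illustrative only.
net_dns_newer = net_dns.satisfied_by?(Gem::Version.new("0.8.1"))
net_dns_older = net_dns.satisfied_by?(Gem::Version.new("0.7.9"))
tmail_exact   = tmail.satisfied_by?(Gem::Version.new("1.2.7.1"))
tmail_major   = tmail.satisfied_by?(Gem::Version.new("2.0.0"))
```

Because there is no upper bound, any later release, including a new major version, satisfies the constraint.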

data/lib/doroParser.rb

CHANGED

|

@@ -3,29 +3,35 @@

|

|

|

3

3

|

# See the file 'LICENSE' for copying permission.

|

|

4

4

|

|

|

5

5

|

#!/usr/local/bin/ruby

|

|

6

|

-

|

|

7

|

-

|

|

6

|

+

|

|

7

|

+

#load 'lib/doroParser.rb'; include Dorothy; include DoroParser; LOGGER = DoroLogger.new(STDOUT, "weekly")

|

|

8

|

+

|

|

9

|

+

#Install mu/xtractr from svn checkout http://pcapr.googlecode.com/svn/trunk/ pcapr-read-only

|

|

10

|

+

|

|

8

11

|

require 'rubygems'

|

|

9

12

|

require 'mu/xtractr'

|

|

10

13

|

require 'md5'

|

|

11

14

|

require 'rbvmomi'

|

|

12

15

|

require 'rest_client'

|

|

16

|

+

require 'net/dns'

|

|

13

17

|

require 'net/dns/packet'

|

|

14

18

|

require 'ipaddr'

|

|

15

19

|

require 'colored'

|

|

16

20

|

require 'trollop'

|

|

17

21

|

require 'ftools'

|

|

18

22

|

require 'filemagic' #require 'pcaplet'

|

|

19

|

-

require 'geoip'

|

|

23

|

+

require 'geoip'

|

|

20

24

|

require 'pg'

|

|

21

25

|

require 'iconv'

|

|

22

26

|

require 'tmail'

|

|

23

27

|

require 'ipaddr'

|

|

24

28

|

|

|

25

|

-

require File.dirname(__FILE__) + '/

|

|

26

|

-

require File.dirname(__FILE__) + '/

|

|

27

|

-

require File.dirname(__FILE__) + '/

|

|

28

|

-

require File.dirname(__FILE__) + '/

|

|

29

|

+

require File.dirname(__FILE__) + '/dorothy2/environment'

|

|

30

|

+

require File.dirname(__FILE__) + '/dorothy2/do-parsers'

|

|

31

|

+

require File.dirname(__FILE__) + '/dorothy2/do-utils'

|

|

32

|

+

require File.dirname(__FILE__) + '/dorothy2/do-logger'

|

|

33

|

+

require File.dirname(__FILE__) + '/dorothy2/deep_symbolize'

|

|

34

|

+

|

|

29

35

|

|

|

30

36

|

|

|

31

37

|

module DoroParser

|

|

@@ -39,17 +45,14 @@ module DoroParser

|

|

|

39

45

|

|

|

40

46

|

util = Util.new

|

|

41

47

|

|

|

42

|

-

|

|

43

48

|

ircvalues = []

|

|

44

49

|

streamdata.each do |m|

|

|

45

50

|

# if m[1] == 0 #we fetch only outgoing traffic

|

|

46

51

|

direction_bool = (m[1] == 0 ? false : true)

|

|

47

52

|

LOGGER_PARSER.info "PARSER", "FOUND IRC DATA".white

|

|

48

53

|

LOGGER_PARSER.info "IRC", "#{m[0]}".yellow

|

|

49

|

-

#puts "..::: #{parsed.command}".white + " #{parsed.content}".yellow

|

|

50

54

|

|

|

51

55

|

ircvalues.push "default, currval('dorothy.connections_id_seq'), E'#{Insertdb.escape_bytea(m[0])}', #{direction_bool}"

|

|

52

|

-

# end

|

|

53

56

|

end

|

|

54

57

|

return ircvalues

|

|

55

58

|

end

|

|

@@ -71,202 +74,238 @@ module DoroParser

|

|

|

71

74

|

|

|

72

75

|

LOGGER_PARSER.debug "PARSER", "Analyzing dump: ".yellow + dump['hash'].gsub(/\s+/, "") if VERBOSE

|

|

73

76

|

|

|

77

|

+

downloadir = "#{DoroSettings.env[:analysis_dir]}/#{dump['anal_id']}/downloads"

|

|

74

78

|

|

|

75

|

-

#gets

|

|

76

|

-

|

|

77

|

-

downloadir = "#{ANALYSIS_DIR}/#{dump['sample'].gsub(/\s+/, "")}/downloads"

|

|

78

|

-

|

|

79

|

-

#puts "Sighting of #{malw.sha} imported"

|

|

80

|

-

|

|

81

|

-

##NETWORK DUMP PARSING#

|

|

82

|

-

#######################

|

|

83

|

-

#LOAD XTRACTR INSTANCE#

|

|

84

|

-

|

|

85

|

-

# begin

|

|

86

|

-

# t = RestClient.get "http://172.20.250.13:8080/pcaps/1/about/#{dump['pcapr_id'].gsub(/\s+/, "")"

|

|

87

|

-

# jt = JSON.parse(t)

|

|

88

|

-

# rescue RestClient::InternalServerError

|

|

89

|

-

# puts ".:: File not found: http://172.20.250.13:8080/pcaps/1/about/#{dump['pcapr_id'].gsub(/\s+/, "")".red

|

|

90

|

-

# puts ".:: #{$!}".red

|

|

91

|

-

## puts ".:: Skipping malware #{dump['hash']} and doing DB ROLLBACK".red

|

|

92

|

-

# next

|

|

93

|

-

# end

|

|

94

|

-

|

|

95

|

-

# puts ".:: File PCAP found on PCAPR DB - #{jt['filename']} - ID #{dump['pcapr_id'].gsub(/\s+/, "")"

|

|

96

|

-

#xtractr = Mu::Xtractr.create "http://172.20.250.13:8080/home/index.html#/browse/pcap/dd737a00ff0495083cf6edd772fe2a18"

|

|

97

|

-

# 843272e9a0b6a5f4aa5985d151cb6721

|

|

98

79

|

|

|

99

80

|

begin

|

|

100

|

-

|

|

101

|

-

|

|

102

|

-

|

|

103

|

-

|

|

104

|

-

|

|

105

|

-

|

|

106

|

-

rescue

|

|

107

|

-

LOGGER_PARSER.fatal "PARSER", "Can't create a XTRACTR instance, try with nextone".red

|

|

108

|

-

# LOGGER_PARSER.debug "PARSER", "#{$!}"

|

|

81

|

+

xtractr = Doroxtractr.create "http://#{DoroSettings.pcapr[:host]}:#{DoroSettings.pcapr[:port]}/pcaps/1/pcap/#{dump['pcapr_id'].gsub(/\s+/, "")}"

|

|

82

|

+

|

|

83

|

+

rescue => e

|

|

84

|

+

LOGGER_PARSER.fatal "PARSER", "Can't create a XTRACTR instance, try with nextone"

|

|

85

|

+

LOGGER_PARSER.debug "PARSER", "#{$!}"

|

|

86

|

+

LOGGER_PARSER.debug "PARSER", e

|

|

109

87

|

next

|

|

110

88

|

end

|

|

111

89

|

|

|

112

90

|

|

|

113

91

|

LOGGER_PARSER.info "PARSER", "Scanning network flows and searching for unknown host IPs".yellow

|

|

114

92

|

|

|

115

|

-

#xtractr.flows('flow.service:HTTP').each { |flow|

|

|

116

|

-

|

|

117

93

|

xtractr.flows.each { |flow|

|

|

118

|

-

#TODO: begin , make exception hangling for every flow

|

|

119

94

|

|

|

120

|

-

|

|

121

|

-

#puts flow.id

|

|

95

|

+

begin

|

|

122

96

|

|

|

123

|

-

|

|

97

|

+

flowdeep = xtractr.flows("flow.id:#{flow.id}")

|

|

124

98

|

|

|

125

99

|

|

|

126

100

|

|

|

127

|

-

|

|

128

|

-

|

|

129

|

-

|

|

130

|

-

|

|

131

|

-

|

|

101

|

+

#Skipping if NETBIOS spreading activity:

|

|

102

|

+

if flow.dport == 135 or flow.dport == 445

|

|

103

|

+

LOGGER_PARSER.info "PARSER", "Netbios connections, skipping flow" unless NONETBIOS

|

|

104

|

+

next

|

|

105

|

+

end

|

|

132

106

|

|

|

133

107

|

|

|

134

|

-

|

|

108

|

+

title = flow.title[0..200].gsub(/'/,"") #xtool bug ->')

|

|

135

109

|

|

|

136

110

|

|

|

137

|

-

|

|

138

|

-

|

|

139

|

-

|

|

140

|

-

|

|

141

|

-

|

|

111

|

+

#insert hosts (geo) info into db

|

|

112

|

+

#TODO: check if is a localaddress

|

|

113

|

+

localip = xtractr.flows.first.src.address

|

|

114

|

+

localnet = IPAddr.new(localip)

|

|

115

|

+

multicast = IPAddr.new("224.0.0.0/4")

|

|

142

116

|

|

|

143

|

-

|

|

144

|

-

|

|

145

|

-

|

|

146

|

-

|

|

147

|

-

|

|

117

|

+

#check if already present in DB

|

|

118

|

+

unless(@insertdb.select("host_ips", "ip", flow.dst.address).one? || hosts.include?(flow.dst.address))

|

|

119

|

+

LOGGER_PARSER.info "PARSER", "Analyzing #{flow.dst.address}".yellow

|

|

120

|

+

hosts << flow.dst.address

|

|

121

|

+

dest = flow.dst.address

|

|

148

122

|

|

|

149

123

|

|

|

150

|

-

|

|

151

|

-

|

|

124

|

+

#insert Geoinfo

|

|

125

|

+

unless(localnet.include?(flow.dst.address) || multicast.include?(flow.dst.address))

|

|

152

126

|

|

|

153

|

-

|

|

154

|

-

|

|

155

|

-

|

|

127

|

+

geo = Geoinfo.new(flow.dst.address.to_s)

|

|

128

|

+

geoval = ["default", geo.coord, geo.country, geo.city, geo.updated, geo.asn]

|

|

129

|

+

LOGGER_PARSER.debug "GEO", "Geo-values for #{flow.dst.address.to_s}: " + geo.country + " " + geo.city + " " + geo.coord if VERBOSE

|

|

130

|

+

|

|

131

|

+

if geo.coord != "null"

|

|

132

|

+

LOGGER_PARSER.debug "DB", " Inserting geo values for #{flow.dst.address.to_s} : #{geo.country}".blue if VERBOSE

|

|

133

|

+

@insertdb.insert("geoinfo",geoval)

|

|

134

|

+

geoval = "currval('dorothy.geoinfo_id_seq')"

|

|

135

|

+

else

|

|

136

|

+

LOGGER_PARSER.warn "DB", " No Geovalues found for #{flow.dst.address.to_s}".red if VERBOSE

|

|

137

|

+

geoval = "null"

|

|

138

|

+

end

|

|

156

139

|

|

|

157

|

-

if geo.coord != "null"

|

|

158

|

-

LOGGER_PARSER.debug "DB", " Inserting geo values for #{flow.dst.address.to_s} : #{geo.country}".blue if VERBOSE

|

|

159

|

-

@insertdb.insert("geoinfo",geoval)

|

|

160

|

-

geoval = "currval('dorothy.geoinfo_id_seq')"

|

|

161

140

|

else

|

|

162

|

-

LOGGER_PARSER.warn "

|

|

163

|

-

geoval =

|

|

141

|

+

LOGGER_PARSER.warn "PARSER", "#{flow.dst.address} skipped while searching for GeoInfo (it's a local network))".yellow

|

|

142

|

+

geoval = 'null'

|

|

143

|

+

dest = localip

|

|

144

|

+

end

|

|

145

|

+

|

|

146

|

+

#Insert host info

|

|

147

|

+

#ip - geoinfo - sbl - uptime - is_online - whois - zone - last-update - id - dns_name

|

|

148

|

+

hostname = (dns_list[dest].nil? ? "null" : dns_list[dest])

|

|

149

|

+

hostval = [dest, geoval, "null", "null", true, "null", "null", get_time, "default", hostname]

|

|

150

|

+

|

|

151

|

+

if !@insertdb.insert("host_ips",hostval)

|

|

152

|

+

LOGGER_PARSER.debug "DB", " Skipping flow #{flow.id}: #{flow.src.address} > #{flow.dst.address}" if VERBOSE

|

|

153

|

+

next

|

|

164

154

|

end

|

|

165

155

|

|

|

166

156

|

else

|

|

167

|

-

LOGGER_PARSER.

|

|

168

|

-

|

|

169

|

-

dest = localip

|

|

157

|

+

LOGGER_PARSER.debug "PARSER", "Host already #{flow.dst.address} known, skipping..." if VERBOSE

|

|

158

|

+

#puts ".:: Geo info host #{flow.dst.address} already present in geodatabase, skipping.." if @insertdb.select("host_ips", "ip", flow.dst.address)

|

|

170

159

|

end

|

|

171

160

|

|

|

172

|

-

#

|

|

173

|

-

|

|

174

|

-

|

|

175

|

-

hostval = [dest, geoval, "null", "null", true, "null", "null", get_time, "default", hostname]

|

|

161

|

+

#case TCP xtractr.flows('flow.service:SMTP').first.proto = 6

|

|

162

|

+

|

|

163

|

+

flowvals = [flow.src.address, flow.dst.address, flow.sport, flow.dport, flow.bytes, dump['hash'], flow.packets, "default", flow.proto, flow.service.name, title, "null", flow.duration, flow.time, flow.id ]

|

|

176

164

|

|

|

177

|

-

if !@insertdb.insert("

|

|

178

|

-

LOGGER_PARSER.

|

|

165

|

+

if !@insertdb.insert("flows",flowvals)

|

|

166

|

+

LOGGER_PARSER.info "PARSER", "Skipping flow #{flow.id}: #{flow.src.address} > #{flow.dst.address}"

|

|

179

167

|

next

|

|

180

168

|

end

|

|

181

169

|

|

|

182

|

-

|

|

183

|

-

LOGGER_PARSER.debug "PARSER", "Host already #{flow.dst.address} known, skipping..." if VERBOSE

|

|

184

|

-

#puts ".:: Geo info host #{flow.dst.address} already present in geodatabase, skipping.." if @insertdb.select("host_ips", "ip", flow.dst.address)

|

|

185

|

-

end

|

|

170

|

+

LOGGER_PARSER.debug("DB", "Inserting flow #{flow.id} - #{flow.title}".blue) if VERBOSE

|

|

186

171

|

|

|

187

|

-

|

|

172

|

+

flowid = "currval('dorothy.connections_id_seq')"

|

|

188

173

|

|

|

189

|

-

|

|

174

|

+

#Layer 3 analysis

|

|

175

|

+

service = flow.service.name

|

|

190

176

|

|

|

191

|

-

|

|

192

|

-

|

|

193

|

-

|

|

194

|

-

|

|

177

|

+

case flow.proto

|

|

178

|

+

when 6 then

|

|

179

|

+

#check if HTTP,IRC, MAIL

|

|

180

|

+

#xtractr.flows('flow.service:SMTP').first.service.name == "TCP" when unknow

|

|

195

181

|

|

|

196

|

-

|

|

182

|

+

#Layer 4 analysis

|

|

183

|

+

streamdata = xtractr.streamdata(flow.id)

|

|

197

184

|

|

|

198

|

-

|

|

185

|

+

case service #TODO: don't trust service field: it's based on default-port definition, do a packet inspection instead.

|

|

199

186

|

|

|

200

|

-

|

|

201

|

-

|

|

187

|

+

#case HTTP

|

|

188

|

+

when "HTTP" then

|

|

189

|

+

http = DoroHttp.new(flowdeep)

|

|

202

190

|

|

|

203

|

-

|

|

204

|

-

|

|

191

|

+

if http.method =~ /GET|POST/

|

|

192

|

+

LOGGER_PARSER.info "HTTP", "FOUND an HTTP request".white

|

|

193

|

+

LOGGER_PARSER.info "HTTP", "HTTP #{http.method}".white + " #{http.uri}".yellow

|

|

205

194

|

|

|

206

|

-

|

|

207

|

-

|

|

208

|

-

#check if HTTP,IRC, MAIL

|

|

209

|

-

#xtractr.flows('flow.service:SMTP').first.service.name == "TCP" when unknow

|

|

195

|

+

t = http.uri.split('/')

|

|

196

|

+

filename = (t[t.length - 1].nil? ? "noname-#{flow.id}" : t[t.length - 1])

|

|

210

197

|

|

|

211

|

-

|

|

212

|

-

|

|

198

|

+

if http.method =~ /POST/

|

|

199

|

+

role_values = [CCDROP, flow.dst.address]

|

|

200

|

+

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", role_values[0], "host_ip", role_values[1]).one?

|

|

201

|

+

http.data = xtractr.flowcontent(flow.id)

|

|

202

|

+

LOGGER_PARSER.debug "DB", "POST DATA SAVED IN THE DB"

|

|

203

|

+

end

|

|

213

204

|

|

|

214

|

-

|

|

215

|

-

|

|

205

|

+

if http.contype =~ /application/ # STORING ONLY application* type GET DATA (avoid html pages, etc)

|

|

206

|

+

LOGGER_PARSER.info "HTTP", "FOUND an Application Type".white

|

|

207

|

+

LOGGER_PARSER.debug "DB", " Inserting #{filename} downloaded file info" if VERBOSE

|

|

216

208

|

|

|

217

|

-

|

|

209

|

+

#download

|

|

210

|

+

flowdeep.each do |flow|

|

|

211

|

+

flow.contents.each do |c|

|

|

218

212

|

|

|

219

|

-

|

|

220

|

-

when "HTTP" then

|

|

221

|

-

http = DoroHttp.new(flowdeep)

|

|

213

|

+

LOGGER_PARSER.debug("DB", "Inserting downloaded http file info from #{flow.dst.address.to_s}".blue) if VERBOSE

|

|

222

214

|

|

|

223

|

-

|

|

224

|

-

|

|

225

|

-

LOGGER_PARSER.info "HTTP", "HTTP #{http.method}".white + " #{http.uri}".yellow

|

|

215

|

+

downvalues = [ DoroFile.sha2(c.body), flowid, downloadir, filename ]

|

|

216

|

+

@insertdb.insert("downloads", downvalues )

|

|

226

217

|

|

|

227

|

-

|

|

228

|

-

|

|

218

|

+

role_values = [CCSUPPORT, flow.dst.address]

|

|

219

|

+

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", role_values[0], "host_ip", role_values[1]).one?

|

|

229

220

|

|

|

230

|

-

|

|

231

|

-

|

|

232

|

-

|

|

233

|

-

http.data = xtractr.flowcontent(flow.id)

|

|

234

|

-

LOGGER_PARSER.debug "DB", "POST DATA SAVED IN THE DB"

|

|

235

|

-

end

|

|

221

|

+

LOGGER_PARSER.debug "HTTP", "Saving downloaded file into #{downloadir}".white if VERBOSE

|

|

222

|

+

c.save("#{downloadir}/#{filename}")

|

|

223

|

+

end

|

|

236

224

|

|

|

237

|

-

|

|

238

|

-

LOGGER_PARSER.info "HTTP", "FOUND an Application Type".white

|

|

239

|

-

LOGGER_PARSER.debug "DB", " Inserting #{filename} downloaded file info" if VERBOSE

|

|

225

|

+

end

|

|

240

226

|

|

|

241

|

-

#download

|

|

242

|

-

flowdeep.each do |flow|

|

|

243

|

-

flow.contents.each do |c|

|

|

244

227

|

|

|

245

|

-

|

|

228

|

+

end

|

|

246

229

|

|

|

247

|

-

|

|

248

|

-

@insertdb.insert("downloads", downvalues )

|

|

230

|

+

httpvalues = "default, '#{http.method.downcase}', '#{http.uri}', #{http.size}, #{http.ssl}, #{flowid}, E'#{Insertdb.escape_bytea(http.data)}' "

|

|

249

231

|

|

|

250

|

-

|

|

251

|

-

|

|

232

|

+

LOGGER_PARSER.debug "DB", " Inserting http data info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

233

|

+

@insertdb.raw_insert("http_data", httpvalues)

|

|

252

234

|

|

|

253

|

-

|

|

254

|

-

|

|

235

|

+

else

|

|

236

|

+

LOGGER_PARSER.warn "HTTP", "Not a regular HTTP traffic on flow #{flow.id}".yellow

|

|

237

|

+

LOGGER_PARSER.info "PARSER", "Trying to guess if it is IRC".white

|

|

238

|

+

|

|

239

|

+

if Parser.guess(streamdata.inspect).class.inspect =~ /IRC/

|

|

240

|

+

ircvalues = search_irc(streamdata)

|

|

241

|

+

ircvalues.each do |ircvalue|

|

|

242

|

+

LOGGER_PARSER.debug "DB", " Inserting IRC DATA info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

243

|

+

@insertdb.raw_insert("irc_data", ircvalue )

|

|

244

|

+

role_values = [CCIRC, flow.dst.address]

|

|

245

|

+

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", role_values[0], "host_ip", role_values[1]).one?

|

|

255

246

|

end

|

|

256

247

|

|

|

248

|

+

else

|

|

249

|

+

LOGGER_PARSER.info "PARSER", "NO-IRC".red

|

|

250

|

+

#TODO, store UNKNOWN communication data

|

|

251

|

+

|

|

257

252

|

end

|

|

258

253

|

|

|

259

254

|

|

|

255

|

+

|

|

256

|

+

|

|

257

|

+

|

|

260

258

|

end

|

|

261

259

|

|

|

262

|

-

|

|

260

|

+

#case MAIL

|

|

261

|

+

when "SMTP" then

|

|

262

|

+

LOGGER_PARSER.info "SMTP", "FOUND an SMTP request..".white

|

|

263

|

+

#insert mail

|

|

264

|

+

#by from to subject data id time connection

|

|

265

|

+

|

|

266

|

+

|

|

267

|

+

streamdata.each do |m|

|

|

268

|

+

mailfrom = 'null'

|

|

269

|

+

mailto = 'null'

|

|

270

|

+

mailcontent = 'null'

|

|

271

|

+

mailsubject = 'null'

|

|

272

|

+

mailhcmd = 'null'

|

|

273

|

+

mailhcont = 'null'

|

|

274

|

+

rdata = ['null', 'null']

|

|

275

|

+

|

|

276

|

+

case m[1]

|

|

277

|

+

when 0

|

|

278

|

+

if Parser::SMTP.header?(m[0])

|

|

279

|

+

@email = Parser::SMTP.new(m[0])

|

|

280

|

+

LOGGER_PARSER.info "SMTP", "[A]".white + @email.hcmd + " " + @email.hcont

|

|

281

|

+

if Parser::SMTP.hasbody?(m[0])

|

|

282

|

+

@email.body = Parser::SMTP.body(m[0])

|

|

283

|

+

mailto = @email.body.to

|

|

284

|

+

mailfrom = @email.body.from

|

|

285

|

+

mailsubject = @email.body.subject.gsub(/'/,"") #xtool bug ->')

|

|

286

|

+

end

|

|

287

|

+

end

|

|

288

|

+

when 1

|

|

289

|

+

rdata = Parser::SMTP.response(m[0]) if Parser::SMTP.response(m[0])

|

|

290

|

+

LOGGER_PARSER.info "SMTP", "[R]".white + rdata[0] + " " + rdata[1]

|

|

291

|

+

rdata[0] = 'null' if rdata[0].empty?

|

|

292

|

+

rdata[1] = 'null' if rdata[1].empty?

|

|

293

|

+

|

|

294

|

+

end

|

|

295

|

+

mailvalues = [mailfrom, mailto, mailsubject, mailcontent, "default", flowid, mailhcmd, mailhcont, rdata[0], rdata[1].gsub(/'/,"")] #xtool bug ->')

|

|

296

|

+

@insertdb.insert("emails", mailvalues )

|

|

297

|

+

end

|

|

263

298

|

|

|

264

|

-

LOGGER_PARSER.debug "DB", " Inserting http data info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

265

|

-

@insertdb.raw_insert("http_data", httpvalues)

|

|

266

299

|

|

|

267

|

-

|

|

268

|

-

|

|

269

|

-

|

|

300

|

+

|

|

301

|

+

#case FTP

|

|

302

|

+

when "FTP" then

|

|

303

|

+

LOGGER_PARSER.info "FTP", "FOUND an FTP request".white

|

|

304

|

+

#TODO

|

|

305

|

+

when "TCP" then

|

|

306

|

+

|

|

307

|

+

LOGGER_PARSER.info "TCP", "FOUND GENERIC TCP TRAFFIC - may be a netbios scan".white

|

|

308

|

+

LOGGER_PARSER.info "PARSER", "Trying see if it is IRC traffic".white

|

|

270

309

|

|

|

271

310

|

if Parser.guess(streamdata.inspect).class.inspect =~ /IRC/

|

|

272

311

|

ircvalues = search_irc(streamdata)

|

|
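The geo-lookup guard in the hunk above builds `localnet` with `IPAddr.new(localip)` and then calls `localnet.include?`. An `IPAddr` created from a bare address carries a full-length mask, so that check only matches the sandbox's own IP, while the multicast check against `224.0.0.0/4` covers a real range. The sketch below shows the difference; the addresses and the `/24` prefix are assumptions chosen for illustration, not values taken from the gem.

```ruby
require 'ipaddr'

localip   = "10.0.0.5"                    # assumed sandbox address
localhost = IPAddr.new(localip)           # single host (/32)
localnet  = IPAddr.new(localip).mask(24)  # assumed /24 network: 10.0.0.0/24
multicast = IPAddr.new("224.0.0.0/4")

neighbour = "10.0.0.7"

host_only_match = localhost.include?(neighbour)       # exact-address check only
network_match   = localnet.include?(neighbour)        # same /24 network
mcast_match     = multicast.include?("224.0.0.251")   # inside 224.0.0.0/4
```

If the intent is to skip every address on the analysis network, the prefix length would have to come from configuration, since it cannot be inferred from a single flow's source address.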

@@ -274,161 +313,96 @@ module DoroParser

|

|

|

274

313

|

LOGGER_PARSER.debug "DB", " Inserting IRC DATA info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

275

314

|

@insertdb.raw_insert("irc_data", ircvalue )

|

|

276

315

|

role_values = [CCIRC, flow.dst.address]

|

|

277

|

-

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role",

|

|

316

|

+

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", CCIRC, "host_ip", flow.dst.address).one?

|

|

278

317

|

end

|

|

279

|

-

|

|

280

|

-

else

|

|

281

|

-

LOGGER_PARSER.info "PARSER", "NO-IRC".red

|

|

282

|

-

#TODO, store UNKNOWN communication data

|

|

283

|

-

|

|

284

318

|

end

|

|

285

319

|

|

|

286

320

|

|

|

321

|

+

else

|

|

287

322

|

|

|

323

|

+

LOGGER_PARSER.info "PARSER", "Unknown traffic, try see if it is IRC traffic"

|

|

288

324

|

|

|

289

|

-

|

|

290

|

-

|

|

291

|

-

|

|

292

|

-

|

|

293

|

-

|

|

294

|

-

|

|

295

|

-

|

|

296

|

-

|

|

297

|

-

|

|

298

|

-

|

|

299

|

-

streamdata.each do |m|

|

|

300

|

-

mailfrom = 'null'

|

|

301

|

-

mailto = 'null'

|

|

302

|

-

mailcontent = 'null'

|

|

303

|

-

mailsubject = 'null'

|

|

304

|

-

mailhcmd = 'null'

|

|

305

|

-

mailhcont = 'null'

|

|

306

|

-

rdata = ['null', 'null']

|

|

307

|

-

|

|

308

|

-

case m[1]

|

|

309

|

-

when 0

|

|

310

|

-

if Parser::SMTP.header?(m[0])

|

|

311

|

-

@email = Parser::SMTP.new(m[0])

|

|

312

|

-

LOGGER_PARSER.info "SMTP", "[A]".white + @email.hcmd + " " + @email.hcont

|

|

313

|

-

if Parser::SMTP.hasbody?(m[0])

|

|

314

|

-

@email.body = Parser::SMTP.body(m[0])

|

|

315

|

-

mailto = @email.body.to

|

|

316

|

-

mailfrom = @email.body.from

|

|

317

|

-

mailsubject = @email.body.subject.gsub(/'/,"") #xtool bug ->')

|

|

318

|

-

end

|

|

319

|

-

end

|

|

320

|

-

when 1

|

|

321

|

-

rdata = Parser::SMTP.response(m[0]) if Parser::SMTP.response(m[0])

|

|

322

|

-

LOGGER_PARSER.info "SMTP", "[R]".white + rdata[0] + " " + rdata[1]

|

|

323

|

-

rdata[0] = 'null' if rdata[0].empty?

|

|

324

|

-

rdata[1] = 'null' if rdata[1].empty?

|

|

325

|

-

|

|

325

|

+

if Parser.guess(streamdata.inspect).class.inspect =~ /IRC/

|

|

326

|

+

ircvalues = search_irc(streamdata)

|

|

327

|

+

ircvalues.each do |ircvalue|

|

|

328

|

+

LOGGER_PARSER.debug "DB", " Inserting IRC DATA info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

329

|

+

@insertdb.raw_insert("irc_data", ircvalue )

|

|

330

|

+

role_values = [CCIRC, flow.dst.address]

|

|

331

|

+

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", CCIRC, "host_ip", flow.dst.address).one?

|

|

332

|

+

end

|

|

326

333

|

end

|

|

327

|

-

mailvalues = [mailfrom, mailto, mailsubject, mailcontent, "default", flowid, mailhcmd, mailhcont, rdata[0], rdata[1].gsub(/'/,"")] #xtool bug ->')

|

|

328

|

-

@insertdb.insert("emails", mailvalues )

|

|

329

|

-

end

|

|

330

334

|

|

|

335

|

+

end

|

|

331

336

|

|

|

337

|

+

when 17 then

|

|

338

|

+

#check if DNS

|

|

332

339

|

|

|

333

|

-

#

|

|

334

|

-

|

|

335

|

-

|

|

336

|

-

#TODO

|

|

337

|

-

when "TCP" then

|

|

340

|

+

#Layer 4 analysis

|

|

341

|

+

case service

|

|

342

|

+

when "DNS" then

|

|

338

343

|

|

|

339

|

-

|

|

340

|

-

|

|

344

|

+

@i = 0

|

|

345

|

+

@p = []

|

|

341

346

|

|

|

342

|

-

|

|

343

|

-

|

|

344

|

-

|

|

345

|

-

|

|

346

|

-

|

|

347

|

-

role_values = [CCIRC, flow.dst.address]

|

|

348

|

-

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", CCIRC, "host_ip", flow.dst.address).one?

|

|

349

|

-

end

|

|

350

|

-

end

|

|

351

|

-

|

|

352

|

-

|

|

353

|

-

else

|

|

354

|

-

|

|

355

|

-

LOGGER_PARSER.info "PARSER", "Unknown traffic, try see if it is IRC traffic"

|

|

356

|

-

|

|

357

|

-

if Parser.guess(streamdata.inspect).class.inspect =~ /IRC/

|

|

358

|

-

ircvalues = search_irc(streamdata)

|

|

359

|

-

ircvalues.each do |ircvalue|

|

|

360

|

-

LOGGER_PARSER.debug "DB", " Inserting IRC DATA info from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

361

|

-

@insertdb.raw_insert("irc_data", ircvalue )

|

|

362

|

-

role_values = [CCIRC, flow.dst.address]

|

|

363

|

-

@insertdb.insert("host_roles", role_values ) unless @insertdb.select("host_roles", "role", CCIRC, "host_ip", flow.dst.address).one?

|

|

347

|

+

flowdeep.each do |flow|

|

|

348

|

+

flow.each do |pkt|

|

|

349

|

+

@p[@i] = pkt.payload

|

|

350

|

+

@i = @i + 1

|

|

351

|

+

end

|

|

364

352

|

end

|

|

365

|

-

end

|

|

366

353

|

|

|

367

|

-

end

|

|

368

|

-

|

|

369

|

-

when 17 then

|

|

370

|

-

#check if DNS

|

|

371

|

-

|

|

372

|

-

#Layer 4 analysis

|

|

373

|

-

case service

|

|

374

|

-

when "DNS" then

|

|

375

|

-

#DEBUG

|

|

376

|

-

#puts "DNS"

|

|

377

354

|

|

|

378

|

-

|

|

379

|

-

@p = []

|

|

380

|

-

|

|

381

|

-

flowdeep.each do |flow|

|

|

382

|

-

flow.each do |pkt|

|

|

383

|

-

@p[@i] = pkt.payload

|

|

384

|

-

@i = @i + 1

|

|

385

|

-

end

|

|

386

|

-

end

|

|

355

|

+

@p.each do |d|

|

|

387

356

|

|

|

357

|

+

begin

|

|

388

358

|

|

|

389

|

-

|

|

359

|

+

dns = DoroDNS.new(d)

|

|

390

360

|

|

|

391

|

-

begin

|

|

392

361

|

|

|

393

|

-

|

|

362

|

+

dnsvalues = ["default", dns.name, dns.cls_i.inspect, dns.qry?, dns.ttl, flowid, dns.address.to_s, dns.data, dns.type_i.inspect]

|

|

394

363

|

|

|

364

|

+

LOGGER_PARSER.debug "DB", " Inserting DNS data from #{flow.dst.address.to_s}".blue if VERBOSE

|

|

365

|

+

unless @insertdb.insert("dns_data", dnsvalues )

|

|

366

|

+

LOGGER_PARSER.error "DB", " Error while Inserting DNS data".blue

|

|

367

|

+

nex

|

|

368

|

+

end

|

|

369

|

+

dnsid = @insertdb.find_seq("dns_id_seq").first['currval']

|

|

395

370

|

|

|

396

|

-

|

|

371

|

+

if dns.qry?

|

|

372

|

+

LOGGER_PARSER.info "DNS", "DNS Query:".white + " #{dns.name}".yellow + " class #{dns.cls_i} type #{dns.type_i}"

|

|

373

|

+

else

|

|

374

|

+

dns_list.merge!( dns.address.to_s => dnsid)

|

|

375

|

+

LOGGER_PARSER.info "DNS", "DNS Answer:".white + " #{dns.name}".yellow + " class #{dns.cls} type #{dns.type} ttl #{dns.ttl} " + "#{dns.address}".yellow

|

|

376

|

+

end

|

|

397

377

|

|

|

398

|

-

|

|

399

|

-

unless @insertdb.insert("dns_data", dnsvalues )

|

|

400

|

-

LOGGER_PARSER.error "DB", " Error while Inserting DNS data".blue

|

|

401

|

-

nex

|

|

402

|

-

end

|

|

403

|

-

dnsid = @insertdb.find_seq("dns_id_seq").first['currval']

|

|

378

|

+

rescue => e

|

|

404

379

|

|

|

405

|

-

|

|

406

|

-

LOGGER_PARSER.

|

|

407

|

-

|

|

408

|

-

dns_list.merge!( dns.address.to_s => dnsid)

|

|

409

|

-

LOGGER_PARSER.info "DNS", "DNS Answer:".white + " #{dns.name}".yellow + " class #{dns.cls} type #{dns.type} ttl #{dns.ttl} " + "#{dns.address}".yellow

|

|

380

|

+

LOGGER_PARSER.error "DB", "Something went wrong while adding a DNS entry into the DB (packet malformed?) - The packet will be skipped"

|

|

381

|

+

LOGGER_PARSER.debug "DB", "#{$!}" if VERBOSE

|

|

382

|

+

LOGGER_PARSER.debug "DB", e if VERBOSE

|

|

410

383

|

end

|

|

411

384

|

|

|

412

|

-

rescue

|

|

413

385

|

|

|

414

|

-

LOGGER_PARSER.error "DB", "Something went wrong while adding a DNS entry into the DB (packet malformed?) - The packet will be skipped ::."

|

|

415

|

-

LOGGER_PARSER.debug "DB", "#{$!}"

|

|

416

386

|

end

|

|

387

|

+

end

|

|

417

388

|

|

|

389

|

+

when 1 then

|

|

390

|

+

#TODO: ICMP data

|

|

391

|

+

#case ICMP xtractr.flows('flow.service:SMTP').first.proto = 1

|

|

392

|

+

else

|

|

418

393

|

|

|

419

|

-

|

|

420

|

-

end

|

|

394

|

+

LOGGER_PARSER.warn "PARSER", "Unknown protocol: #{flow.id} -- Proto #{flow.proto}".yellow

|

|

421

395

|

|

|

422

|

-

|

|

423

|

-

#TODO: ICMP data

|

|

424

|

-

#case ICMP xtractr.flows('flow.service:SMTP').first.proto = 1

|

|

425

|

-

else

|

|

396

|

+

end

|

|

426

397

|

|

|

427

|

-

|

|

398

|

+

rescue => e

|

|

428

399

|

|

|

400

|

+

LOGGER_PARSER.error "PARSER", "Error while analyzing flow #{flow.id}"

|

|

401

|

+

LOGGER_PARSER.debug "PARSER", "#{e.inspect} BACKTRACE: #{e.backtrace}"

|

|

402

|

+

LOGGER_PARSER.info "PARSER", "Flow #{flow.id} will be skipped"

|

|

403

|

+

next

|

|

429

404

|

end

|

|

430

405

|

|

|

431

|

-

|

|

432

406

|

}

|

|

433

407

|

|

|

434

408

|

#DEBUG

|

|
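Reviewer note: the DNS hunk above wraps each payload in its own `begin` / `rescue => e`, so a malformed packet is logged and skipped instead of aborting the whole flow. The following is a minimal sketch of that pattern, not the gem's code. `parse_record` and `parse_all` are hypothetical names, with `parse_record` standing in for `DoroDNS.new`, and plain arrays replace the database and logger.

```ruby
# Hypothetical stand-in for DoroDNS.new: raises on input it cannot parse.
def parse_record(payload)
  unless payload.is_a?(String) && payload.include?("=")
    raise ArgumentError, "malformed payload: #{payload.inspect}"
  end
  name, address = payload.split("=", 2)
  { name: name, address: address }
end

# Parse every payload; keep the good records and log the bad ones.
def parse_all(payloads, errors = [])
  records = []
  payloads.each do |payload|
    begin
      records << parse_record(payload)
    rescue => e
      # Same idea as the hunk: note the failure, then move to the next item.
      errors << e.message
      next
    end
  end
  records
end

errors = []
records = parse_all(["example.org=192.0.2.1", nil, "example.net=192.0.2.2"], errors)
puts "parsed #{records.size}, skipped #{errors.size}"
```

Scoping the `rescue` to a single item keeps one bad input from discarding the results already collected for its neighbours.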

@@ -444,28 +418,28 @@ module DoroParser

|

|

|

444

418

|

def self.start(daemon)

|

|

445

419

|

daemon ||= false

|

|

446

420

|

|

|

447

|

-

|

|

448

|

-

LOGGER_PARSER.info "Dorothy", "Started".yellow

|

|

421

|

+

LOGGER_PARSER.info "PARSER", "Started, looking for network dumps into Dorothive.."

|

|

449

422

|

|

|

450

423

|

if daemon

|

|

451

|

-

check_pid_file DoroSettings.env[:

|

|

452

|

-

puts "[

|

|

424

|

+

check_pid_file DoroSettings.env[:pidfile_parser]

|

|

425

|

+

puts "[PARSER]".yellow + " Going in backround with pid #{Process.pid}"

|

|

426

|

+

puts "[PARSER]".yellow + " Logging on #{DoroSettings.env[:logfile_parser]}"

|

|

453

427

|

Process.daemon

|

|

454

|

-

create_pid_file DoroSettings.env[:

|

|

455

|

-

|

|

428

|

+

create_pid_file DoroSettings.env[:pidfile_parser]

|

|

429

|

+

puts "[PARSER]".yellow + " Going in backround with pid #{Process.pid}"

|

|

456

430

|

end

|

|

457

431

|

|

|

458

|

-

|

|

459

432

|

@insertdb = Insertdb.new

|

|

460

433

|

infinite = true

|

|

461

434

|

|

|

462

435

|

while infinite

|

|

463

436

|

pcaps = @insertdb.find_pcap

|

|

464

|

-

analyze_bintraffic

|

|

437

|

+

analyze_bintraffic(pcaps)

|

|

465

438

|

infinite = daemon

|

|

466

|

-

|

|

439

|

+

LOGGER.info "PARSER", "SLEEPING" if daemon

|

|

440

|

+

sleep DoroSettings.env[:dtimeout].to_i if daemon # Sleeping a while if -d wasn't set, then quit.

|

|

467

441

|

end

|

|

468

|

-

LOGGER_PARSER.info "

|

|

442

|

+

LOGGER_PARSER.info "PARSER" , "There are no more pcaps to analyze.".yellow

|

|

469

443

|

exit(0)

|

|

470

444

|

end

|

|

471

445

|

|

|
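Reviewer note: the `self.start` hunk above runs the analysis once, or repeatedly with a pause when `daemon` is set, and it adds `.to_i` to the timeout before calling `sleep`. The sketch below illustrates that loop under stated assumptions. `poll`, `max_rounds`, and `sleeper` are hypothetical names added so the example terminates and can be observed. The timeout is assumed to arrive as a string, which `Kernel#sleep` would reject with a `TypeError`.

```ruby
# Run the block once, or repeatedly with a pause when `daemon` is true.
# `timeout` may be a String (for example, read from a config file), hence to_i.
# `max_rounds` and `sleeper` are hypothetical hooks for demonstration only.
def poll(daemon:, timeout:, max_rounds: nil, sleeper: method(:sleep))
  rounds = 0
  infinite = true
  while infinite
    yield
    rounds += 1
    infinite = daemon && (max_rounds.nil? || rounds < max_rounds)
    sleeper.call(timeout.to_i) if infinite
  end
  rounds
end

naps = []
count = poll(daemon: true, timeout: "3", max_rounds: 2, sleeper: ->(s) { naps << s }) { }
puts "rounds=#{count} naps=#{naps.inspect}"
```

Unlike the hunk, which sleeps whenever `daemon` is set, this sketch sleeps only when another round will follow.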

@@ -478,12 +452,11 @@ module DoroParser

|

|

|

478

452

|

Process.kill(0, pid)

|

|

479

453

|

rescue Errno::ESRCH

|

|

480

454

|

stale_pid = true

|

|

481

|

-

rescue

|

|

482

455

|

end

|

|

483

456

|

|

|

484

457

|

unless stale_pid

|

|

485

|

-

puts "[

|

|

486

|

-

exit

|

|

458

|

+

puts "[PARSER]".yellow + " Dorothy is already running (pid=#{pid})"

|

|

459

|

+

exit(1)

|

|

487

460

|

end

|

|

488

461

|

end

|

|

489

462

|

end

|

|
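Reviewer note: the hunk above probes the recorded pid with `Process.kill(0, pid)`, treats `Errno::ESRCH` as a stale pid file, and drops the bare `rescue` that followed it. This is a minimal sketch of that liveness check. `process_alive?` is a hypothetical helper, the `Errno::EPERM` branch is an addition not present in the diff, and the demonstration assumes a platform where `fork` is available.

```ruby
# Return true when a process with this pid currently exists.
def process_alive?(pid)
  Process.kill(0, pid) # signal 0 only checks existence and permission
  true
rescue Errno::ESRCH
  false                # no such process: a pid file naming it is stale
rescue Errno::EPERM
  true                 # process exists but is owned by another user
end

# Create a short-lived child and reap it, so its pid is known to be gone.
child = fork { exit!(0) }
Process.wait(child)

puts "self alive:  #{process_alive?(Process.pid)}"
puts "child alive: #{process_alive?(child)}"
```

Rescuing only the specific `Errno` classes lets unexpected failures surface instead of being read as "process is running".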

@@ -493,7 +466,7 @@ module DoroParser

|

|

|

493

466

|

|

|

494

467

|

# Remove pid file during shutdown

|

|

495

468

|

at_exit do

|

|

496

|

-

LOGGER_PARSER.info "

|

|

469

|

+

LOGGER_PARSER.info "PARSER", "Shutting down." rescue nil

|

|

497

470

|

if File.exist? file

|

|

498

471

|

File.unlink file

|

|

499

472

|

end

|

|

@@ -503,15 +476,14 @@ module DoroParser

|

|

|

503

476

|

# Sends SIGTERM to process in pidfile. Server should trap this

|

|

504

477

|

# and shutdown cleanly.

|

|

505

478

|

def self.stop

|

|

506

|

-

LOGGER_PARSER.info "

|

|

507

|

-

pid_file = DoroSettings.env[:

|

|

479

|

+

LOGGER_PARSER.info "PARSER", "Shutting down.."

|

|

480

|

+

pid_file = DoroSettings.env[:pidfile_parser]

|

|

508

481

|

if pid_file and File.exist? pid_file

|

|

509

482

|

pid = Integer(File.read(pid_file))

|

|

510

483

|

Process.kill -15, -pid

|

|

511

|

-

|

|

512

|

-

LOGGER_PARSER.info "DoroParser", "Process #{pid} terminated"

|

|

484

|

+

LOGGER_PARSER.info "PARSER", "Process #{DoroSettings.env[:pidfile_parser]} terminated"

|

|

513

485

|

else

|

|

514

|

-

|

|

486

|

+

LOGGER_PARSER.info "PARSER", "Can't find PID file, is DoroParser really running?"

|

|

515

487

|

end

|

|

516

488

|

end

|

|

517

489

|

|

data/lib/dorothy2.rb

CHANGED

|

@@ -27,9 +27,7 @@ require 'md5'

|

|

|

27

27

|

require File.dirname(__FILE__) + '/dorothy2/do-init'

|

|

28

28

|

require File.dirname(__FILE__) + '/dorothy2/Settings'

|

|

29

29

|

require File.dirname(__FILE__) + '/dorothy2/deep_symbolize'

|

|

30

|

-

|

|

31

30

|

require File.dirname(__FILE__) + '/dorothy2/environment'

|

|

32

|

-

|

|

33

31

|

require File.dirname(__FILE__) + '/dorothy2/vtotal'

|

|

34

32

|

require File.dirname(__FILE__) + '/dorothy2/MAM'

|

|

35

33

|

require File.dirname(__FILE__) + '/dorothy2/BFM'

|

|

@@ -65,7 +63,7 @@ module Dorothy

|

|

|

65

63

|

else

|

|

66

64

|

LOGGER.warn("SANDBOX", "File #{bin.filename} actually not supported, skipping\n" + " Filtype: #{bin.type}") # if VERBOSE

|

|

67

65

|

dir_not_supported = File.dirname(bin.binpath) + "/not_supported"

|

|

68

|

-

Dir.mkdir(dir_not_supported) unless

|

|

66

|

+

Dir.mkdir(dir_not_supported) unless File.exists?(dir_not_supported)

|

|

69

67

|

FileUtils.cp(bin.binpath,dir_not_supported) #mv?

|

|

70

68

|

FileUtils.rm(bin.binpath) ## mv?

|

|

71

69

|

return false

|

|

@@ -235,8 +233,8 @@ module Dorothy

|

|

|

235

233

|

pcapfile = bin.dir_pcap + dumpname + ".pcap"

|

|

236

234

|

dump = Loadmalw.new(pcapfile)

|

|

237

235

|

|

|

238

|

-

pcaprpath = bin.md5 + "/pcap/" + dump.filename

|

|

239

|

-

pcaprid = Loadmalw.calc_pcaprid(

|

|

236

|

+

#pcaprpath = bin.md5 + "/pcap/" + dump.filename

|

|

237

|

+

pcaprid = Loadmalw.calc_pcaprid(dump.filename, dump.size)

|

|

240

238

|

|

|

241

239

|

LOGGER.debug "NAM", "VM#{guestvm[0]} ".yellow + "Pcaprid: " + pcaprid if VERBOSE

|

|

242

240

|

|

|

@@ -248,7 +246,7 @@ module Dorothy

|

|

|

248

246

|

empty_pcap = true

|

|

249

247

|

end

|

|

250

248

|

|

|

251

|

-

dumpvalues = [dump.sha, dump.size, pcaprid,

|

|

249

|

+

dumpvalues = [dump.sha, dump.size, pcaprid, dump.binpath, 'false']

|

|

252

250

|

dump.sha = "EMPTYPCAP" if empty_pcap

|

|

253

251

|

analysis_values = [anal_id, bin.sha, guestvm[0], dump.sha, get_time]

|

|

254

252

|

|

|

@@ -368,7 +366,7 @@ module Dorothy

|

|

|

368

366

|

puts "[Dorothy]".yellow + " Logging on #{DoroSettings.env[:logfile]}"

|

|

369

367

|

Process.daemon

|

|

370

368

|

create_pid_file DoroSettings.env[:pidfile]

|

|

371

|

-

|

|

369

|

+

puts "[Dorothy]".yellow + " Going in backround with pid #{Process.pid}"

|

|

372

370

|

end

|

|

373

371

|

|

|

374

372

|

#Creating a new NAM object for managing the sniffer

|

|

@@ -402,7 +400,7 @@ module Dorothy

|

|

|

402

400

|

infinite = daemon #exit if wasn't set

|

|

403

401

|

wait_end

|

|

404

402

|

LOGGER.info "Dorothy", "SLEEPING" if daemon

|

|

405

|

-

sleep DoroSettings.env[:dtimeout] if daemon # Sleeping a while if -d wasn't set, then quit.

|

|

403

|

+

sleep DoroSettings.env[:dtimeout].to_i if daemon # Sleeping a while if -d wasn't set, then quit.

|

|

406

404

|

end

|

|

407

405

|

end

|

|

408

406

|

|

|

@@ -460,10 +458,9 @@ module Dorothy

|

|

|

460

458

|

if pid_file and File.exist? pid_file

|

|

461

459

|

pid = Integer(File.read(pid_file))

|

|

462

460

|

Process.kill(-15, -pid)

|